Distributed ELK Cluster: The ELK Log Analysis System

1. Introduction to the ELK Log Analysis System

1.1 Log Servers

1.2 The ELK Log Analysis System

- Elasticsearch

- Logstash

- Kibana

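1.3 Log Processing Steps

1. Centralize log management

2. Format the logs (Logstash) and send them to Elasticsearch

3. Index and store the formatted data (Elasticsearch)

4. Display the data in a front end (Kibana)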

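2. Introduction to Elasticsearch

2.1 Elasticsearch Overview

- Provides a distributed, multitenant-capable full-text search engine

2.2 Core Elasticsearch Concepts

- Near real time (NRT)

- Cluster

- Node

- Index

- Index (database) → Type (table) → Document (record)

- Shards and replicas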
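Two of these concepts are easy to make concrete. The index → type → document hierarchy maps onto the REST path of a document in Elasticsearch 5.x (`/<index>/<type>/<id>`; mapping types were deprecated in 6.x and removed in later versions). The shard count is simple arithmetic: every primary shard gets the configured number of replicas, so an index holds primaries × (1 + replicas) shard copies. In 5.x an index defaults to 5 primaries and 1 replica, i.e. 10 copies, and a replica is never allocated on the same node as its primary. The sketch below uses two hypothetical helper names and assumes the cluster answers on http://localhost:9200 unless `ES_URL` is set:

```shell
# Hypothetical helper: URL of one document, following
# index (database) -> type (table) -> document (record).
# ES_URL defaults to http://localhost:9200 (an assumption for this sketch).
doc_url() {
    local index="$1" type="$2" id="$3"
    printf '%s/%s/%s/%s\n' "${ES_URL:-http://localhost:9200}" "$index" "$type" "$id"
}

# Hypothetical helper: shard copies in one index = primaries * (1 + replicas).
total_shard_copies() {
    local primaries="$1" replicas="$2"
    echo $(( primaries * (1 + replicas) ))
}

# Usage:
#   curl -XGET "$(doc_url index-demo test 1)?pretty"   # needs a running cluster
#   total_shard_copies 5 1                               # 5.x defaults -> 10
```

The same arithmetic explains `"total" : 2` in the write response later in the lab: a single document is written to its primary shard plus one replica.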

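3. Introduction to Logstash

3.1 Logstash Overview

- A powerful data processing tool

- Handles data transport, format processing, and formatted output

- Covers data input, data processing (e.g., filtering and rewriting), and data output

3.2 Main Logstash Components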

- Shipper

- Indexer

- Broker

- Search and Storage

- Web Interface

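4. Introduction to Kibana

4.1 Kibana Overview

- An open-source analytics and visualization platform for Elasticsearch

- Searches and views data stored in Elasticsearch indices

- Performs advanced data analysis and presents it through a variety of charts

4.2 Main Kibana Features

- Seamless integration with Elasticsearch

- Data integration and complex data analysis

- Benefits more team members

- Flexible interface and easier sharing

- Simple configuration and visualization of multiple data sources

- Simple data export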

5. 配置Elasticsearch

安装步骤

1.安装Elasticsearch软件

2.加载系统服务

3.更改Elasticsearch主配置文件

4.创建数据存放路径并授权

5.启动Elasticsearch并查看 是否成功开启

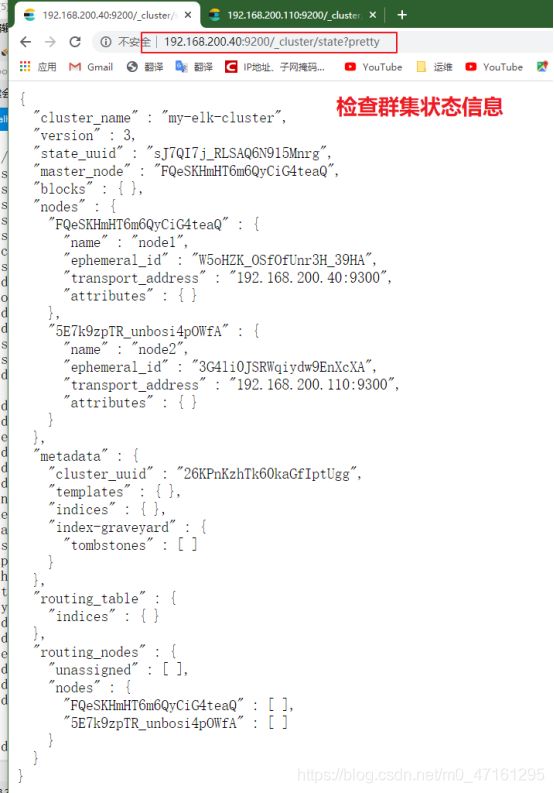

6.查看节点信息

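6. Installing the Elasticsearch-head Plugin

Installation steps

1. Compile and install Node.js

2. Install PhantomJS

3. Install Elasticsearch-head

4. Edit the Elasticsearch main configuration file

5. Start the service

6. View Elasticsearch information through Elasticsearch-head

7. Insert a test index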

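7. Installing and Deploying Logstash

Installation steps

1. Install Logstash on the third host (the Apache server, 192.168.200.120)

2. Test Logstash

3. Edit the Logstash configuration file

8. Installing and Deploying Kibana

Installation steps

1. Install Kibana on the node1 server and enable it at boot

2. Configure the Kibana main configuration file /etc/kibana/kibana.yml

3. Start the Kibana service

4. Verify Kibana

5. Send the Apache server's logs to Elasticsearch and display them in Kibana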

9. Lab: Installing and Deploying ELK

9.1 Lab Environment

node1 192.168.200.40

node2 192.168.200.110

Apache server 192.168.200.120

9.2 Lab Topology

9.3 Lab Steps

1 Configure the Elasticsearch node on node1

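[root@localhost ~]# hostnamectl set-hostname node1    # change the hostname

[root@localhost ~]# su    # start a new shell so the prompt shows the new hostname

[root@node1 ~]# vim /etc/hosts    # edit the hosts file and add the node names and addresses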

192.168.200.40 node1

192.168.200.110 node2
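Both nodes need these two entries. When repeating the edit on several machines, an idempotent helper avoids duplicate lines. This is a sketch with a hypothetical function name `add_host_entry`; the file path is a parameter so it can be tried on a copy before touching `/etc/hosts`:

```shell
# Hypothetical helper: append "IP NAME" to a hosts file unless NAME is already mapped.
add_host_entry() {
    local file="$1" ip="$2" name="$3"
    # NAME counts as present if it follows whitespace and ends at whitespace or end of line.
    if ! grep -Eq "[[:space:]]${name}([[:space:]]|\$)" "$file" 2>/dev/null; then
        printf '%s %s\n' "$ip" "$name" >> "$file"
    fi
}

# Usage, on node1 and node2:
#   add_host_entry /etc/hosts 192.168.200.40 node1
#   add_host_entry /etc/hosts 192.168.200.110 node2
```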

[root@node1 ~]# systemctl stop firewalld.service    # stop the firewall

[root@node1 ~]# setenforce 0

[root@node1 ~]# ping node2    # check network connectivity to node2

PING node2 (192.168.200.110) 56(84) bytes of data.

64 bytes from node2 (192.168.200.110): icmp_seq=1 ttl=64 time=0.525 ms

[root@node1 ~]# java -version    # check the Java version

openjdk version "1.8.0_181"

OpenJDK Runtime Environment (build 1.8.0_181-b13)

OpenJDK 64-Bit Server VM (build 25.181-b13, mixed mode)

[root@node1 opt]# ls

elasticsearch-5.5.0.rpm rh

[root@node1 opt]# rpm -ivh elasticsearch-5.5.0.rpm    # install Elasticsearch from the RPM package

[root@node1 opt]# systemctl daemon-reload    # reload systemd unit files so elasticsearch.service is recognized

[root@node1 opt]# systemctl enable elasticsearch.service

Created symlink from /etc/systemd/system/multi-user.target.wants/elasticsearch.service to /usr/lib/systemd/system/elasticsearch.service.

[root@node1 opt]# cp -p /etc/elasticsearch/elasticsearch.yml /etc/elasticsearch/elasticsearch.yml.bak    # back up the original file

[root@node1 opt]# cd /etc/elasticsearch/

[root@node1 elasticsearch]# ls

elasticsearch.yml elasticsearch.yml.bak jvm.options log4j2.properties scripts

[root@node1 elasticsearch]# vim elasticsearch.yml    # uncomment and edit the following settings

cluster.name: my-elk-cluster    # cluster name

node.name: node1    # node name

path.data: /data/elk_data    # data directory

path.logs: /var/log/elasticsearch    # log directory

bootstrap.memory_lock: false    # do not lock memory at startup

network.host: 0.0.0.0    # address the service binds to; 0.0.0.0 means all addresses

http.port: 9200    # listen on port 9200

discovery.zen.ping.unicast.hosts: ["node1", "node2"]    # cluster discovery via unicast

[root@node1 elasticsearch]# grep -v "^#" elasticsearch.yml    # filter out comment lines to check the eight modified settings

cluster.name: my-elk-cluster

node.name: node1

path.data: /data/elk_data

path.logs: /var/log/elasticsearch

bootstrap.memory_lock: false

network.host: 0.0.0.0

http.port: 9200

discovery.zen.ping.unicast.hosts: ["node1", "node2"]
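`grep -v "^#"` hides comment lines but still prints blank lines. A slightly stricter filter, sketched here as a hypothetical function, also drops empty and indented comment lines so only the active settings remain:

```shell
# Hypothetical helper: print only active (non-comment, non-blank) lines of a config file.
show_active_settings() {
    grep -Ev '^[[:space:]]*(#|$)' "$1"
}

# Usage:
#   show_active_settings /etc/elasticsearch/elasticsearch.yml
```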

[root@node1 elasticsearch]# mkdir -p /data/elk_data    # create the data directory, including parent directories

[root@node1 elasticsearch]# id elasticsearch    # check the elasticsearch system user

uid=987(elasticsearch) gid=981(elasticsearch) groups=981(elasticsearch)

[root@node1 elasticsearch]# chown elasticsearch:elasticsearch /data/elk_data/    # set the owner and group

[root@node1 elasticsearch]# systemctl start elasticsearch.service    # start the service

[root@node1 elasticsearch]# netstat -antp | grep 9200

tcp6 0 0 :::9200 :::* LISTEN 69796/java
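`netstat -antp | grep 9200` matches any line containing those digits. A sketch of a stricter check (hypothetical function name) reads `netstat -antp` output on stdin and prints the PID that is LISTENing on the given port, looking only at the Local Address column:

```shell
# Hypothetical helper: read `netstat -antp` output on stdin and print the PID
# listening on the given TCP port (matches the Local Address column only).
listening_pid() {
    local port="$1"
    awk -v port="$port" '
        $6 == "LISTEN" && $4 ~ (":" port "$") {
            split($7, a, "/")   # column 7 is "PID/Program name"
            print a[1]
        }'
}

# Usage (as root, so -p can show the PID):
#   netstat -antp | listening_pid 9200
```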

2 Configure the Elasticsearch node on node2 (same steps as above)

[root@localhost ~]# hostnamectl set-hostname node2

[root@localhost ~]# su

[root@node2 ~]# vim /etc/hosts

192.168.200.40 node1

192.168.200.110 node2

[root@node2 ~]# systemctl stop firewalld.service

[root@node2 ~]# setenforce 0

[root@node2 ~]# ping node1

PING node1 (192.168.200.40) 56(84) bytes of data.

64 bytes from node1 (192.168.200.40): icmp_seq=1 ttl=64 time=0.208 ms

[root@node2 ~]# java -version

openjdk version "1.8.0_181"

OpenJDK Runtime Environment (build 1.8.0_181-b13)

OpenJDK 64-Bit Server VM (build 25.181-b13, mixed mode)

[root@node2 ~]# cd /opt/

[root@node2 opt]# ls

elasticsearch-5.5.0.rpm rh

[root@node2 opt]# rpm -ivh elasticsearch-5.5.0.rpm

[root@node2 opt]# systemctl daemon-reload

[root@node2 opt]# systemctl enable elasticsearch.service

Created symlink from /etc/systemd/system/multi-user.target.wants/elasticsearch.service to /usr/lib/systemd/system/elasticsearch.service.

[root@node2 opt]# cp -p /etc/elasticsearch/elasticsearch.yml /etc/elasticsearch/elasticsearch.yml.bak

[root@node2 opt]# cd /etc/elasticsearch/

[root@node2 elasticsearch]# ls

elasticsearch.yml elasticsearch.yml.bak jvm.options log4j2.properties scripts

[root@node2 elasticsearch]# vim elasticsearch.yml

cluster.name: my-elk-cluster

node.name: node2

path.data: /data/elk_data

path.logs: /var/log/elasticsearch

bootstrap.memory_lock: false

network.host: 0.0.0.0

http.port: 9200

discovery.zen.ping.unicast.hosts: ["node1", "node2"]

[root@node2 elasticsearch]# grep -v "^#" elasticsearch.yml

cluster.name: my-elk-cluster

node.name: node2

path.data: /data/elk_data

path.logs: /var/log/elasticsearch

bootstrap.memory_lock: false

network.host: 0.0.0.0

http.port: 9200

discovery.zen.ping.unicast.hosts: ["node1", "node2"]

[root@node2 elasticsearch]# mkdir -p /data/elk_data

[root@node2 elasticsearch]# id elasticsearch

uid=987(elasticsearch) gid=981(elasticsearch) groups=981(elasticsearch)

[root@node2 elasticsearch]# chown elasticsearch:elasticsearch /data/elk_data/

[root@node2 elasticsearch]# systemctl start elasticsearch.service

[root@node2 elasticsearch]# netstat -antp | grep 9200

tcp6 0 0 :::9200 :::* LISTEN 70239/java

3 From a Windows 10 client, browse to port 9200 to check the Elasticsearch service

4 Install the elasticsearch-head plugin (a data visualization tool)

- Node 1

[root@node1 elasticsearch]# cd /opt/

[root@node1 opt]# ls

elasticsearch-5.5.0.rpm node-v8.2.1.tar.gz rh

elasticsearch-head.tar.gz phantomjs-2.1.1-linux-x86_64.tar.bz2

[root@node1 opt]# yum -y install gcc gcc-c++ make

[root@node1 opt]# tar zxvf node-v8.2.1.tar.gz

[root@node1 opt]# cd node-v8.2.1/

[root@node1 node-v8.2.1]# ./configure

[root@node1 node-v8.2.1]# make -j8    # compile with 8 parallel jobs (match this to the number of CPU cores)

[root@node1 node-v8.2.1]# make install

[root@node1 node-v8.2.1]# cd /opt/

[root@node1 opt]# tar jxvf phantomjs-2.1.1-linux-x86_64.tar.bz2 -C /usr/local/src/    # PhantomJS is a headless browser

[root@node1 opt]# cd /usr/local/src/

[root@node1 src]# ls

phantomjs-2.1.1-linux-x86_64

[root@node1 src]# cd phantomjs-2.1.1-linux-x86_64/

[root@node1 phantomjs-2.1.1-linux-x86_64]# ls

bin ChangeLog examples LICENSE.BSD README.md third-party.txt

[root@node1 phantomjs-2.1.1-linux-x86_64]# cd bin/

[root@node1 bin]# ls

phantomjs

[root@node1 bin]# cp phantomjs /usr/local/bin/

[root@node1 bin]# cd /opt/

[root@node1 opt]# tar zxvf elasticsearch-head.tar.gz -C /usr/local/src/

[root@node1 opt]# cd /usr/local/src/elasticsearch-head/

[root@node1 elasticsearch-head]# npm install

npm WARN deprecated fsevents@<version>: fsevents 1 will break on node v14+ and could be using insecure binaries. Upgrade to fsevents 2.

npm WARN optional SKIPPING OPTIONAL DEPENDENCY: fsevents@^1.0.0 (node_modules/karma/node_modules/chokidar/node_modules/fsevents):

npm WARN notsup SKIPPING OPTIONAL DEPENDENCY: Unsupported platform for fsevents@<version>: wanted {"os":"darwin","arch":"any"} (current: {"os":"linux","arch":"x64"})

npm WARN elasticsearch-head@<version> license should be a valid SPDX license expression

up to date in 3.523s

[root@node1 elasticsearch-head]# vim /etc/elasticsearch/elasticsearch.yml

http.cors.enabled: true    # enable cross-origin (CORS) access; the default is false

http.cors.allow-origin: "*"    # origins allowed for cross-origin access ("*" allows all)
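These two lines go at the end of elasticsearch.yml on both nodes. Running the edit twice would leave duplicate keys, so an idempotent version (hypothetical function name) checks first. `"*"` is convenient in a lab; restricting it to the head plugin's origin, e.g. `http://192.168.200.40:9100`, is tighter:

```shell
# Hypothetical helper: append the CORS settings needed by elasticsearch-head,
# unless http.cors.enabled is already set in the given elasticsearch.yml.
enable_cors() {
    local file="$1"
    if ! grep -Eq '^[[:space:]]*http\.cors\.enabled:' "$file"; then
        cat >> "$file" <<'EOF'
http.cors.enabled: true
http.cors.allow-origin: "*"
EOF
    fi
}

# Usage, on each node:
#   enable_cors /etc/elasticsearch/elasticsearch.yml
#   systemctl restart elasticsearch.service
```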

[root@node1 elasticsearch-head]# systemctl restart elasticsearch.service

[root@node1 elasticsearch-head]# npm run start &    # run in the background

[1] 115927

[root@node1 elasticsearch-head]#

> elasticsearch-head@<version> start /usr/local/src/elasticsearch-head

> grunt server

Running "connect:server" (connect) task

Waiting forever...

Started connect web server on http://localhost:9100

[root@node1 elasticsearch-head]# netstat -antp | grep 9100

tcp 0 0 0.0.0.0:9100 0.0.0.0:* LISTEN 115937/grunt

[root@node1 elasticsearch-head]# netstat -antp | grep 9200

tcp6 0 0 :::9200 :::* LISTEN 115812/java

- Node 2

[root@node2 elasticsearch]# cd /opt/

[root@node2 opt]# ls

elasticsearch-5.5.0.rpm node-v8.2.1.tar.gz rh

elasticsearch-head.tar.gz phantomjs-2.1.1-linux-x86_64.tar.bz2

[root@node2 opt]# yum -y install gcc gcc-c++ make

[root@node2 opt]# tar zxvf node-v8.2.1.tar.gz

[root@node2 opt]# cd node-v8.2.1/

[root@node2 node-v8.2.1]# ./configure

[root@node2 node-v8.2.1]# make install

[root@node2 node-v8.2.1]# cd /opt/

[root@node2 opt]# tar jxvf phantomjs-2.1.1-linux-x86_64.tar.bz2 -C /usr/local/src/

[root@node2 opt]# cd /usr/local/src/

[root@node2 src]# ls

phantomjs-2.1.1-linux-x86_64

[root@node2 src]# cd phantomjs-2.1.1-linux-x86_64/

[root@node2 phantomjs-2.1.1-linux-x86_64]# ls

bin ChangeLog examples LICENSE.BSD README.md third-party.txt

[root@node2 phantomjs-2.1.1-linux-x86_64]# cd bin/

[root@node2 bin]# ls

phantomjs

[root@node2 bin]# cp phantomjs /usr/local/bin/

[root@node2 bin]# cd /opt/

[root@node2 opt]# tar zxvf elasticsearch-head.tar.gz -C /usr/local/src/

[root@node2 opt]# cd /usr/local/src/elasticsearch-head/

[root@node2 elasticsearch-head]# npm install

npm WARN deprecated fsevents@<version>: fsevents 1 will break on node v14+ and could be using insecure binaries. Upgrade to fsevents 2.

npm WARN optional SKIPPING OPTIONAL DEPENDENCY: fsevents@^1.0.0 (node_modules/karma/node_modules/chokidar/node_modules/fsevents):

npm WARN notsup SKIPPING OPTIONAL DEPENDENCY: Unsupported platform for fsevents@<version>: wanted {"os":"darwin","arch":"any"} (current: {"os":"linux","arch":"x64"})

npm WARN elasticsearch-head@<version> license should be a valid SPDX license expression

up to date in 19.439s

[root@node2 elasticsearch-head]# vim /etc/elasticsearch/elasticsearch.yml

http.cors.enabled: true

http.cors.allow-origin: "*"

[root@node2 elasticsearch-head]# systemctl restart elasticsearch.service

[root@node2 elasticsearch-head]# npm run start &

[1] 116323

[root@node2 elasticsearch-head]#

> elasticsearch-head@<version> start /usr/local/src/elasticsearch-head

> grunt server

Running "connect:server" (connect) task

Waiting forever...

Started connect web server on http://localhost:9100

[root@node2 elasticsearch-head]# netstat -antp | grep 9100

tcp 0 0 0.0.0.0:9100 0.0.0.0:* LISTEN 116333/grunt

[root@node2 elasticsearch-head]# netstat -antp | grep 9200

tcp6 0 0 :::9200 :::* LISTEN 116210/java

pretty&pretty' -H 'content-Type: application/json' -d '{"user":"zhangsan","mesg":"hello world"}'

{

"_index" : "index-demo",

"_type" : "test",

"_id" : "1",

"_version" : 1,

"result" : "created",

"_shards" : {

"total" : 2,

"successful" : 2,

"failed" : 0

},

"created" : true

}

5 Install Logstash on 192.168.200.120

1. Change the hostname, stop the firewall, and turn off SELinux enforcement

[root@localhost ~]# hostnamectl set-hostname apache

[root@localhost ~]# su

[root@apache ~]# systemctl stop firewalld.service

[root@apache ~]# setenforce 0

2. Install and start Apache

[root@apache ~]# yum -y install httpd

[root@apache ~]# systemctl start httpd.service

3. Check the Java environment (install Java first if it is missing)

[root@apache ~]# java -version

openjdk version "1.8.0_181"

OpenJDK Runtime Environment (build 1.8.0_181-b13)

OpenJDK 64-Bit Server VM (build 25.181-b13, mixed mode)

4. Install and start Logstash

[root@apache ~]# cd /opt/

[root@apache opt]# rz -E

rz waiting to receive.

[root@apache opt]# ls

logstash-5.5.1.rpm rh

[root@apache opt]# rpm -ivh logstash-5.5.1.rpm

[root@apache opt]# systemctl start logstash.service

[root@apache opt]# ln -s /usr/share/logstash/bin/logstash /usr/local/bin/    # symlink the logstash binary into the PATH

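5. Integration test: check that Logstash (Apache) and Elasticsearch (node) work together

Testing with the logstash command

Option descriptions:

-f  Specifies a Logstash configuration file; Logstash is configured from that file

-e  Takes a string that is used as the Logstash configuration (if the string is "", stdin is used as the input and stdout as the output by default)

-t  Tests whether the configuration file is valid, then exits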
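The `-e` strings in the following steps are ordinary pipelines with an `input` and an `output` section. A hypothetical helper that assembles such a string for plugins without options makes that structure explicit; `-t` combined with `-f` then checks a file before Logstash is started:

```shell
# Hypothetical helper: build the pipeline string passed to `logstash -e`
# from one input plugin and one output plugin, both without options.
inline_pipeline() {
    local input="$1" output="$2"
    printf 'input { %s{} } output { %s{} }\n' "$input" "$output"
}

# Usage:
#   logstash -e "$(inline_pipeline stdin stdout)"
#   logstash -t -f /etc/logstash/conf.d/system.conf   # check a config file, then exit
```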

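6. On the Apache server, use standard input as the input and standard output as the output

[root@apache opt]# logstash -e 'input { stdin{} } output { stdout{} }'

www.kgc.com    # typed input

2020-09-16T07:19:26.160Z apache www.kgc.com    # printed output

www.benet.com

2020-09-16T07:19:32.708Z apache www.benet.com

7. Use the rubydebug codec to display detailed output (a codec is an encoder/decoder)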

[root@apache opt]# logstash -e 'input { stdin{} } output { stdout{ codec=>rubydebug } }'

{

"@timestamp" => 2020-09-16T07:21:08.619Z,

"@version" => "1",

"host" => "apache",

"message" => "www.kgc.com"

}
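Each line typed on stdin becomes an event carrying the four fields shown above. Purely as an illustration (this is not how Logstash serializes events), a hypothetical shell function can emit the same fields as one JSON line; it does no JSON escaping, so it assumes the message contains no double quotes or backslashes:

```shell
# Hypothetical helper: print one JSON line carrying the same four fields that
# rubydebug shows. Illustration only, not Logstash's own serializer: no JSON
# escaping is done, so the message must not contain double quotes or backslashes.
make_event() {
    local host="$1" message="$2" ts
    ts=$(date -u +%Y-%m-%dT%H:%M:%SZ)   # UTC, second precision (Logstash keeps milliseconds)
    printf '{"@timestamp":"%s","@version":"1","host":"%s","message":"%s"}\n' \
        "$ts" "$host" "$message"
}

# Usage:
#   make_event apache www.kgc.com
```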

8. Use Logstash to write data into Elasticsearch

[root@apache opt]# logstash -e 'input { stdin{} } output { elasticsearch{ hosts=>["192.168.200.40"] } }'

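...... (output omitted) ......

9. Configure the Logstash configuration file

[root@apache opt]# chmod o+r /var/log/messages    # let other users, including logstash, read the system log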

[root@apache opt]# ll /var/log/messages

-rw----r--. 1 root root 967722 Sep 16 15:44 /var/log/messages

[root@apache opt]# vim /etc/logstash/conf.d/system.conf

input {

file{

path => "/var/log/messages"

type => "system"

start_position => "beginning"

}

}

output {

elasticsearch {

hosts => ["192.168.200.40:9200"]

index => "system-%{+YYYY.MM.dd}"

}

}
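`%{+YYYY.MM.dd}` in the `index` option creates one index per day, formatted from the event's `@timestamp`, which Logstash keeps in UTC; on a host in UTC+8 the new day's index therefore appears at 08:00 local time. In step 8, with no `index` option, the elasticsearch output fell back to its default `logstash-%{+YYYY.MM.dd}`. A sketch (hypothetical function name, GNU `date` assumed) that computes the index name for a given time, handy for querying the right index:

```shell
# Hypothetical helper: daily index name for an index setting of the form
# "<prefix>-%{+YYYY.MM.dd}". Uses UTC, like Logstash's @timestamp.
# Optional second argument: epoch seconds (default: now). Requires GNU date.
daily_index() {
    local prefix="$1" epoch="${2:-$(date +%s)}"
    printf '%s-%s\n' "$prefix" "$(date -u -d "@$epoch" +%Y.%m.%d)"
}

# Usage:
#   curl "http://192.168.200.40:9200/$(daily_index system)/_count?pretty"
```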

[root@apache opt]# systemctl restart logstash.service

6 Install Kibana on 192.168.200.40

1. Install Kibana

[root@node1 elasticsearch-head]# cd /usr/local/src/

[root@node1 src]# rz -E

rz waiting to receive.

[root@node1 src]# ls

elasticsearch-head kibana-5.5.1-x86_64.rpm phantomjs-2.1.1-linux-x86_64

[root@node1 src]# rpm -ivh kibana-5.5.1-x86_64.rpm

[root@node1 src]# cd /etc/kibana/

[root@node1 kibana]# cp kibana.yml kibana.yml.bak

[root@node1 kibana]# ls

kibana.yml kibana.yml.bak

[root@node1 kibana]# vim kibana.yml

server.port: 5601    # Kibana port

server.host: "0.0.0.0"    # address Kibana listens on

elasticsearch.url: "http://192.168.200.40:9200"    # Elasticsearch instance Kibana connects to

kibana.index: ".kibana"    # index Kibana creates in Elasticsearch for its own data
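Before starting Kibana it is worth confirming that the URL configured in kibana.yml actually reaches Elasticsearch. A sketch with a hypothetical function name extracts the active (uncommented, double-quoted) `elasticsearch.url` value so it can be passed to `curl`:

```shell
# Hypothetical helper: print the active elasticsearch.url from a kibana.yml,
# skipping commented-out lines; expects the value in double quotes.
kibana_es_url() {
    sed -n 's/^elasticsearch\.url:[[:space:]]*"\([^"]*\)".*/\1/p' "$1"
}

# Usage, on node1:
#   curl "$(kibana_es_url /etc/kibana/kibana.yml)"   # should return the cluster info JSON
```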

[root@node1 kibana]# systemctl start kibana.service    # start Kibana

[root@node1 kibana]# systemctl enable kibana.service    # enable at boot

Created symlink from /etc/systemd/system/multi-user.target.wants/kibana.service to /etc/systemd/system/kibana.service.

7 Access the Kibana web UI

Open 192.168.200.40:5601 in a browser to access Kibana

Set the time range you want to query

8 Ingest the Apache host's logs

[root@apache conf.d]# cd /etc/logstash/conf.d/

[root@apache conf.d]# vim apache_log.conf

input {

file{

path => "/etc/httpd/logs/access_log"

type => "access"

start_position => "beginning"

}

file{

path => "/etc/httpd/logs/error_log"

type => "error"

start_position => "beginning"

}

}

output {

if [type] == "access" {

elasticsearch {

hosts => ["192.168.200.40:9200"]

index => "apache_access-%{+YYYY.MM.dd}"

}

}

if [type] == "error" {

elasticsearch {

hosts => ["192.168.200.40:9200"]

index => "apache_error-%{+YYYY.MM.dd}"

}

}

}

[root@apache conf.d]# logstash -f apache_log.conf
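The two `if [type] == ...` branches route each event to its own daily index according to the `type` set in the matching `file` input; an event with any other type matches neither branch and is not sent anywhere. The routing can be mirrored in a small hypothetical shell function, for example to work out which index to look up in Kibana for a given time (UTC dates, GNU `date` assumed):

```shell
# Hypothetical helper mirroring the output conditionals in apache_log.conf:
# event type -> daily index name. Dates are UTC; requires GNU date (-d @EPOCH).
apache_index_for() {
    local type="$1" epoch="${2:-$(date +%s)}" day
    day=$(date -u -d "@$epoch" +%Y.%m.%d)
    case "$type" in
        access) echo "apache_access-$day" ;;
        error)  echo "apache_error-$day" ;;
        *)      return 1 ;;   # no branch matches, so such an event is not indexed
    esac
}

# Usage:
#   apache_index_for access          # today's access-log index
#   apache_index_for error 0         # -> apache_error-1970.01.01
```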