Apache Kudu: Building from Source and Deploying a Kudu Cluster

Official site | Documentation | Source code

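Contents

- 1 Overview
- 1.1 Architecture, Concepts, and Terminology
- 1.2 Supported Column Types
- 1.3 Encoding Types
- 1.4 Column Compression
- 1.5 About Primary Keys
- 2 Building
- 2.1 Installing the Required Dependencies
- 2.2 Dependencies for Building the Documentation (Optional)
- 2.3 Compiling Kudu
- 2.4 Installing
- 2.5 Building the Documentation (Optional)
- 2.6 Building the Java Client Separately (Optional)
- 3 Deployment
- 3.1 Starting the Deployment
- 3.2 Adding the Kudu Master Configuration
- 3.3 Adding the Kudu tserver Configuration
- 3.4 Adding the Kudu Master Environment Variables
- 3.5 Adding the Kudu tserver Environment Variables
- 3.6 Adding a Kudu Master Service Script
- 3.7 Adding a Kudu tserver Service Script
- 3.8 Copying to the Other Nodes
- 3.9 Starting the Cluster
- 4 Testing
- 5 Common Commands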

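1 Overview

Kudu is an open-source distributed data storage engine that does not depend on the Hadoop ecosystem, although it can integrate with Hadoop ecosystem components such as Impala, Spark, and Hive. Kudu is designed to take advantage of next-generation hardware and in-memory processing, and it is built for fast analytics on fast-changing data; it lowers query latency for engines such as Apache Flink, Apache Spark, and Apache Impala. Kudu enables families of applications that are difficult or impossible to implement on HDFS-based storage.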

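1.1 Architecture, Concepts, and Terminology

A complete Kudu cluster architecture is shown in the figure below, with three Kudu Masters on the left and multiple Tablet Servers on the right.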

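- Master: the master node of a Kudu cluster. There can be several Masters, but at any given point in time only one of them is the Leader. The Master keeps track of all Tablets, Tablet Servers, the Catalog Table, and other cluster metadata. If the current Leader disappears, a new Leader is elected using the Raft consensus algorithm. The Master also coordinates metadata operations for clients: when a client creates a new table, for example, it sends the request to the Master internally; the Master writes the new table's metadata into the Catalog Table and coordinates the creation of the Tablets on the Tablet Servers. All of the Master's data is stored in a tablet, which can be replicated to all other candidate Masters. Tablet Servers send heartbeats to the Master at a set interval (once per second by default).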

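- Tablet Server: a Tablet Server stores Tablets and serves them to clients. For a given Tablet, exactly one Tablet Server acts as the Leader, and the other Tablet Servers hold Follower replicas of that Tablet. Only the Leader services write requests, while the Leader or any Follower can service read requests. Leaders are elected using the Raft consensus algorithm. One Tablet Server can serve multiple Tablets (on the right of the figure above, one horizontal Tablet Server holds several vertical Tablets), and one Tablet can be served by multiple Tablet Servers (on the right of the figure above, one vertical column is the same Tablet, replicated across several Tablet Servers).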

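- Table: a table is where user data is stored in Kudu. A table has a schema and a totally ordered primary key (a primary key must be specified). A table is split into segments called tablets.

- Tablet: a tablet is a contiguous segment of a table, similar to a partition in other data storage engines or relational databases. A given tablet is replicated on multiple tablet servers, and at any given point in time one of these replicas is considered the leader tablet. Any replica can service reads, but writes require consensus among the tablet servers that are serving the tablet.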

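- Catalog Table: the central location for Kudu metadata. It stores information about Tables and Tablets. The Catalog Table cannot be read or written directly; it is accessible only through the metadata operations exposed in the client API. It holds two categories of metadata:

- Tables: table schemas, locations, and states.

- Tablets: the list of existing tablets, which tablet servers hold replicas of each tablet, each tablet's current state, and its start and end keys (one vertical Tablet on the right of the figure above).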

1.2 Supported Column Types

- boolean

- 8-bit signed integer

- 16-bit signed integer

- 32-bit signed integer

- 64-bit signed integer

- date (32-bit days since the Unix epoch)

- unixtime_micros

- single-precision (32-bit) IEEE-754 floating-point number

- double-precision (64-bit) IEEE-754 floating-point number

- decimal (a decimal type with a declared precision)

- varchar

- UTF-8 encoded string (up to 64KB uncompressed)

- binary (up to 64KB uncompressed)

1.3 Encoding Types

Each column in a Kudu table can be created with an encoding, chosen from those available for the column's type.

| Column type | Encodings | Default |
|---|---|---|
| int8, int16, int32, int64 | plain, bitshuffle, run length | bitshuffle |
| date, unixtime_micros | plain, bitshuffle, run length | bitshuffle |
| float, double, decimal | plain, bitshuffle | bitshuffle |
| bool | plain, run length | run length |
| string, varchar, binary | plain, prefix, dictionary | dictionary |

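- plain encoding: data is stored in its natural format. For example, int32 values are stored as fixed-size 32-bit little-endian integers.

- bitshuffle encoding: a block of values is rearranged to store the most significant bit of every value, followed by the second most significant bit of every value, and so on; the result is then LZ4-compressed. Bitshuffle encoding is a good choice for columns that have many repeated values, or values that change by small amounts when sorted by primary key. See the bitshuffle project for details.

- run length encoding: runs (consecutive repeated values) are compressed in a column by storing only the value and the count. Run length encoding is effective for columns with many consecutive repeated values when sorted by primary key.

- prefix encoding: common prefixes are compressed in consecutive column values. Prefix encoding can be effective for values that share common prefixes, or for the first column of the primary key, since rows are sorted by primary key within tablets.

- dictionary encoding: a dictionary of unique values is built, and each column value is encoded as its corresponding index in the dictionary. Dictionary encoding is effective for columns with low cardinality. If the column values of a given row set cannot be compressed because the number of unique values is too high, Kudu transparently falls back to plain encoding for that row set; this is evaluated during flush.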
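To make the run length idea concrete, the following is a toy sketch in shell. It only illustrates "store the value and the count"; it is not Kudu's on-disk format, and the function name rle_encode is made up for this example.

```shell
#!/usr/bin/env bash
# Toy run-length encoder: collapses consecutive identical input lines
# into "value count" pairs. Illustration only, not Kudu's storage format.
rle_encode() {
  awk 'NR == 1    { prev = $0; count = 1; next }
       $0 == prev { count++; next }
                  { print prev, count; prev = $0; count = 1 }
       END        { if (NR > 0) print prev, count }'
}

# A column that is sorted so that equal values sit next to each other
# compresses into three pairs: "A 3", "B 2", "A 1".
printf '%s\n' A A A B B A | rle_encode
```

The same six values in an interleaved order (A B A B A A) would produce five pairs, which is why the encoding pays off mainly when the primary-key sort order groups repeated values together.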

1.4 Column Compression

Kudu columns support the following compression codecs:

- LZ4

- Snappy

- zlib

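By default, Bitshuffle-encoded columns are inherently compressed with LZ4, and all other columns are stored uncompressed. Consider enabling compression if reducing storage space matters more than raw scan performance. Every data set compresses differently, but in general LZ4 is the fastest codec and zlib achieves the highest compression ratio. Because Bitshuffle-encoded columns are already LZ4-compressed, applying additional compression on top of that encoding is not recommended.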

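1.5 About Primary Keys

A primary key must be declared when a Kudu table is created, and it can consist of one or more columns. As in an RDBMS, the primary key enforces uniqueness: inserting a row whose primary key already exists fails with a duplicate key error. Primary key columns cannot be null and cannot be of type boolean, float, or double. The primary key cannot be changed once the table has been created.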

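2 Building

This guide uses CentOS 7 and Kudu 1.15 as the example and builds as the root user; if you build as another user, that user needs sudo privileges.

At least 96GB of free disk space is required.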
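A quick way to confirm the disk-space requirement before starting is sketched below. The helper name has_free_gib and the BUILD_DIR variable are hypothetical; point the check at whichever filesystem will hold the source tree and build output.

```shell
#!/usr/bin/env bash
# Succeeds when the filesystem holding path $1 has at least $2 GiB available.
has_free_gib() {
  local avail_kb
  # df -P guarantees one line per filesystem; column 4 is the available space in KiB.
  avail_kb="$(df -Pk "$1" | awk 'NR == 2 { print $4 }')"
  [ "$avail_kb" -ge $(( $2 * 1024 * 1024 )) ]
}

# Hypothetical: BUILD_DIR is where the Kudu source will be unpacked and built.
build_dir="${BUILD_DIR:-$PWD}"
if has_free_gib "$build_dir" 96; then
  echo "enough free space under $build_dir"
else
  echo "less than 96 GiB free under $build_dir"
fi
```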

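2.1 Installing the Required Dependencies

Install the dependencies with yum. Packages that are already present in your environment can be dropped from the commands below.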

# 1 Install the required dependencies
yum install -y autoconf automake cyrus-sasl-devel cyrus-sasl-gssapi \
  cyrus-sasl-plain flex gcc gcc-c++ gdb git java-1.8.0-openjdk-devel \
  krb5-server krb5-workstation libtool make openssl-devel patch \
  pkgconfig redhat-lsb-core rsync unzip vim-common which

Note: building Kudu 1.15 requires GCC 7.0 or later (C++17 support is needed).

# 2 Can be skipped on CentOS 8.
# On CentOS or RHEL releases earlier than 8.0, install the following
yum install -y centos-release-scl-rh
yum install -y devtoolset-8

# 3 Optional. To support Kudu's NVM (non-volatile memory) block cache, install memkind
yum install -y memkind

# memkind 1.8.0 or newer is required; if the packaged version is older,
# build and install it from source as follows
yum install -y numactl-libs numactl-devel
git clone https://github.com/memkind/memkind.git
cd memkind
./build.sh --prefix=/usr
yum remove memkind
make install
ldconfig
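The GCC requirement noted above can be checked with a small version comparison. This is a sketch that assumes GNU sort (for -V); the function name version_ge is made up for this example. Keep in mind that devtoolset-8 only changes the compiler inside an enabled environment, so a plain shell may still report the system GCC.

```shell
#!/usr/bin/env bash
# Succeeds when version $1 is greater than or equal to version $2.
# Relies on GNU sort's -V (version sort) option.
version_ge() {
  [ "$(printf '%s\n%s\n' "$1" "$2" | sort -V | head -n 1)" = "$2" ]
}

required="7.0"
# -dumpfullversion exists in GCC 7 and later; older compilers only know -dumpversion.
current="$(gcc -dumpfullversion 2>/dev/null || gcc -dumpversion 2>/dev/null || echo 0)"

if version_ge "$current" "$required"; then
  echo "gcc $current satisfies the >= $required requirement"
else
  echo "gcc $current is older than $required; use devtoolset-8 or upgrade"
fi
```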

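2.2 Dependencies for Building the Documentation (Optional)

Building the documentation requires Ruby. On CentOS 7 it can be installed with the command below. This part is optional; skip it if you do not need to build the documentation.

yum install gem graphviz ruby-devel zlib-devel

Building the documentation for Kudu 1.15 requires Ruby 2.3.0 or later; otherwise the build fails with the error "bundler requires Ruby version >= 2.3.0."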

# Check the Ruby version
ruby -v

# If the version is lower than 2.3.0, upgrade Ruby
wget https://repo.huaweicloud.com/ruby/ruby/ruby-2.6.9.tar.gz
tar -zxf ruby-2.6.9.tar.gz
cd ruby-2.6.9/
./configure --prefix=/usr/local/ruby
make && make install

# Back up the old ruby
mv /usr/bin/ruby /usr/bin/ruby.back
mv /usr/bin/gem /usr/bin/gem.back

# Create symlinks to the new ruby
ln -s /usr/local/ruby/bin/ruby /usr/bin/ruby
ln -s /usr/local/ruby/bin/gem /usr/bin/gem

# Check the version again
ruby -v

2.3 Compiling Kudu

wget https://repo.huaweicloud.com/apache/kudu/1.15.0/apache-kudu-1.15.0.tar.gz

tar -zxf apache-kudu-1.15.0.tar.gz

cd apache-kudu-1.15.0

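# Use the build-if-necessary.sh script to build any missing third-party libraries.
# Without devtoolset this fails with
# `Host compiler appears to require libatomic, but cannot find it`.
# This step downloads third-party dependencies over the network and can take a long time.
# If a download fails, run the command again; it resumes from where it stopped.
build-support/enable_devtoolset.sh thirdparty/build-if-necessary.sh

# Build Kudu
# Choose a build directory for intermediate output. It can be anywhere on the
# filesystem except the kudu directory itself.
# Note that devtoolset must still be specified, otherwise you get the error
# `cc1plus: error: unrecognized command line option "-std=c++17"`.
mkdir -p build/release
cd build/release
../../build-support/enable_devtoolset.sh \
  ../../thirdparty/installed/common/bin/cmake \
  -DCMAKE_BUILD_TYPE=release ../..
# Optional cmake flags, left disabled here:
#-DKUDU_CLIENT_INSTALL=OFF \
#-DKUDU_MASTER_INSTALL=OFF \
#-DKUDU_TSERVER_INSTALL=OFF

# Compile.
# -j sets the number of parallel jobs (do not set it too high; about twice the number of CPU cores is typical).
make -j4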
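Instead of hard-coding -j4, the job count can be derived from the machine. The sketch below follows the "about twice the cores" rule of thumb from the comment above and only prints the resulting command; the variable name make_jobs is arbitrary. If the build runs out of memory, a lower value is the safer choice.

```shell
#!/usr/bin/env bash
# Count online CPU cores; nproc is part of GNU coreutils, getconf is the fallback.
cores="$(nproc 2>/dev/null || getconf _NPROCESSORS_ONLN)"

# Rule of thumb from the text: about twice the number of cores.
make_jobs=$(( cores * 2 ))

# Print the command rather than running it, so the value can be reviewed first.
echo "make -j${make_jobs}"
```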

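2.4 Installing

This step exports the binaries built in the previous step into a chosen directory.

# Install Kudu
# The default install prefix is /usr/local. DESTDIR sets the staging root,
# so with DESTDIR=/opt/kudu the files land under /opt/kudu/usr/local.
# Relative to that root:
#   the kudu-tserver and kudu-master executables are in /usr/local/sbin
#   the Kudu command-line tool is in /usr/local/bin
#   the Kudu client library is in /usr/local/lib64/
#   the Kudu client headers are in /usr/local/include/kudu
make DESTDIR=/opt/kudu install

# Copy the web resources into the export directory (-r because www is a directory)
cp -r ../../www /opt/kudu/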

2.5 Building the Documentation (Optional)

make docs

The documentation build may fail with the following error: Network error while fetching https://rubygems.org/quick/Marshal.4.8/jekyll-4.1.1.gemspec.rz (Net::OpenTimeout)

The error means the HTTPS source could not be reached; see http://stackoverflow.com/a/19179835/1227911 for details. Here we switch the RubyGems source to the Aliyun mirror. After the change, gem sources -l shows the new source.

#sudo gem sources -r https://rubygems.org
#sudo gem sources -a http://rubygems.org
#gem sources -a https://ruby.taobao.org/

# Switch to the Huawei Cloud RubyGems mirror
# gem sources --add https://repo.huaweicloud.com/repository/rubygems/ --remove https://rubygems.org/

# Or the Aliyun mirror
gem sources --add https://mirrors.aliyun.com/rubygems/ --remove https://rubygems.org/
gem sources -l

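2.6 Building the Java Client Separately (Optional)

Step 2.3 builds the whole project. The Java project can also be built on its own, module by module, as shown below; see java/README.adoc for more detail.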

cd ../../java

./gradlew assemble

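3 Deployment

Assume a deployment on three servers, planned as follows. In production, at least three Kudu Master nodes are recommended. The data directories are placed on a data disk so that data growth does not eat into the system disk and capacity can be expanded later.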

| Item | Description |
|---|---|
| Host | cdh01, cdh02, cdh03 |
| master | cdh01:7051, cdh02:7051, cdh03:7051 |
| tserver | cdh01:7050, cdh02:7050, cdh03:7050 |
| Master UI | cdh01:8051, cdh02:8051, cdh03:8051 |
| tserver UI | cdh01:8050, cdh02:8050, cdh03:8050 |
| home | /data/kudu-1.15.0 (symlinked as /usr/apache/kudu) |
| Data Path | /usr/apache/kudu/kudu-master, /usr/apache/kudu/kudu-tserver |
| version | 1.15.0 |

3.1 Starting the Deployment

mkdir -p /usr/apache
cd /usr/apache

# 1 Create the kudu user and group
# In a CDH environment they may already exist; skip this step if so
groupadd kudu
useradd -d /var/lib/kudu -s /sbin/nologin -g kudu kudu

# 2 Create the required directories (on cdh01, cdh02, and cdh03)
mkdir /var/log/kudu
mkdir -p /data/kudu-1.15.0/conf.dist
# These are Kudu's data directories; in production, put them on a dedicated data disk.
mkdir /data/kudu-1.15.0/{kudu-master,kudu-tserver}
ln -s /data/kudu-1.15.0 /usr/apache/kudu

# 3 Move the built package into the deployment directory
mv /opt/kudu/usr/local/* /usr/apache/kudu
mv /opt/kudu/www /usr/apache/kudu

# 4 If an earlier installation exists, back it up first
mv /usr/bin/kudu /usr/bin/kudu.back
mv /usr/bin/kudu-master /usr/bin/kudu-master.back
mv /usr/bin/kudu-tserver /usr/bin/kudu-tserver.back

ln -s /usr/apache/kudu/bin/kudu /usr/bin/kudu
ln -s /usr/apache/kudu/sbin/kudu-master /usr/sbin/kudu-master
ln -s /usr/apache/kudu/sbin/kudu-tserver /usr/sbin/kudu-tserver

3.2 Adding the Kudu Master Configuration

Edit the file with vim /usr/apache/kudu/conf.dist/master.gflagfile. The contents below are for reference only; tune the resource sizes to your own servers.

# Do not modify these two lines. If you wish to change these variables,

# modify them in /etc/default/kudu-master.

--fromenv=rpc_bind_addresses

--fromenv=log_dir

#--log_dir=/var/log/kudu

#--rpc_bind_addresses=cdh03:7051

--fs_wal_dir=/usr/apache/kudu/kudu-master

--fs_data_dirs=/usr/apache/kudu/kudu-master

# Path to the web UI resources

--webserver_doc_root=/usr/apache/kudu/www

--default_num_replicas=1

--master_addresses=cdh01:7051,cdh02:7051,cdh03:7051

--webserver_port=8051

--unlock_unsafe_flags=true

--max_num_columns=2000

--trusted_subnets=0.0.0.0/0

--scanner_ttl_ms=6000000

#--maintenance_manager_num_threads=9

#--block_cache_capacity_mb=3072

# Limit to 80GB

#--memory_limit_hard_bytes=85899345920

#--max_clock_sync_error_usec=20000000

# 50 minutes (3000000 ms)

#--rpc_negotiation_timeout_ms=3000000

#--tablet_history_max_age_sec=86400

#--rpc_num_acceptors_per_address=5

#--consensus_rpc_timeout_ms=30000

#--follower_unavailable_considered_failed_sec=300

#--raft_heartbeat_interval_ms=60000

#--leader_failure_max_missed_heartbeat_periods=3

#--tserver_unresponsive_timeout_ms=60000

#--rpc_num_service_threads=10

#--min_negotiation_threads=10

#--max_negotiation_threads=50

#--rpc_num_acceptors_per_address=5

#--master_ts_rpc_timeout_ms=60000

#--rpc_service_queue_length=1000

#--raft_heartbeat_interval_ms=60000

#--heartbeat_interval_ms=60000

#--rpc_authentication=disabled

# Not needed on XFS

#--block_manager=file
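Several of the commented-out flags above take raw byte or millisecond values, which are easy to get wrong by hand. The helpers below are a small sketch for producing them; the function names gib_to_bytes and minutes_to_ms are invented for this example and are not part of Kudu.

```shell
#!/usr/bin/env bash
# Convert GiB to bytes, for flags such as --memory_limit_hard_bytes.
gib_to_bytes() { echo $(( $1 * 1024 * 1024 * 1024 )); }

# Convert minutes to milliseconds, for *_timeout_ms style flags.
minutes_to_ms() { echo $(( $1 * 60 * 1000 )); }

# 80 GiB gives the value used in the flag file above.
echo "--memory_limit_hard_bytes=$(gib_to_bytes 80)"      # --memory_limit_hard_bytes=85899345920

# 3000000 ms corresponds to 50 minutes; a full hour would be 3600000.
echo "--rpc_negotiation_timeout_ms=$(minutes_to_ms 50)"  # --rpc_negotiation_timeout_ms=3000000
```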

3.3 Adding the Kudu tserver Configuration

Edit the file with vim /usr/apache/kudu/conf.dist/tserver.gflagfile. The contents below are for reference only; tune the resource sizes to your own servers.

# Do not modify these two lines. If you wish to change these variables,

# modify them in /etc/default/kudu-tserver.

--fromenv=rpc_bind_addresses

--fromenv=log_dir

--fs_wal_dir=/usr/apache/kudu/kudu-tserver

--fs_data_dirs=/usr/apache/kudu/kudu-tserver

# Path to the web UI resources

--webserver_doc_root=/usr/apache/kudu/www

--tserver_master_addrs=cdh01:7051,cdh02:7051,cdh03:7051

--webserver_port=8050

--trusted_subnets=0.0.0.0/0

--rpc_authentication=disabled

3.4 Adding the Kudu Master Environment Variables

Edit the file with vim /etc/default/kudu-master. The example below is for cdh03; on the other machines, change FLAGS_rpc_bind_addresses to the matching host.

export FLAGS_log_dir=/var/log/kudu

export FLAGS_rpc_bind_addresses=cdh03:7051

3.5 Adding the Kudu tserver Environment Variables

Edit the file with vim /etc/default/kudu-tserver. The example below is for cdh03; on the other machines, change FLAGS_rpc_bind_addresses to the matching host.

export FLAGS_log_dir=/var/log/kudu

export FLAGS_rpc_bind_addresses=cdh03:7050
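Sections 3.4 and 3.5 need the same two files on every host, with only the bind address changed. One way to avoid hand-editing is to generate them, as in the sketch below. It writes into a temporary staging directory (STAGING_DIR is a hypothetical name) rather than /etc/default, so the result can be reviewed before it is copied to each host.

```shell
#!/usr/bin/env bash
# Hosts taken from the deployment plan in section 3.
hosts="cdh01 cdh02 cdh03"

# Hypothetical staging area; nothing under /etc is touched by this sketch.
STAGING_DIR="$(mktemp -d)"

for host in $hosts; do
  mkdir -p "$STAGING_DIR/$host"

  # Master environment file: RPC port 7051.
  cat > "$STAGING_DIR/$host/kudu-master" <<EOF
export FLAGS_log_dir=/var/log/kudu
export FLAGS_rpc_bind_addresses=${host}:7051
EOF

  # Tablet server environment file: RPC port 7050.
  cat > "$STAGING_DIR/$host/kudu-tserver" <<EOF
export FLAGS_log_dir=/var/log/kudu
export FLAGS_rpc_bind_addresses=${host}:7050
EOF
done

echo "Generated environment files under $STAGING_DIR"
```

Each generated pair would then be placed at /etc/default/kudu-master and /etc/default/kudu-tserver on its own host.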

3.6 Adding a Kudu Master Service Script

Create the script with vim /usr/apache/kudu/bin/kudu-master. It can be used to start or stop the Kudu Master service.

#!/usr/bin/env bash

#

# Licensed to the Apache Software Foundation (ASF) under one or more

# contributor license agreements. See the NOTICE file distributed with

# this work for additional information regarding copyright ownership.

# The ASF licenses this file to You under the Apache License, Version 2.0

# (the "License"); you may not use this file except in compliance with

# the License. You may obtain a copy of the License at

#

# http://www.apache.org/licenses/LICENSE-2.0

#

# Unless required by applicable law or agreed to in writing, software

# distributed under the License is distributed on an "AS IS" BASIS,

# WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.

# See the License for the specific language governing permissions and

# limitations under the License.

#

# Starts a Kudu Master Server

#

# chkconfig: 345 92 8

# description: Kudu Master Server

#

# Notes on service/facility dependencies:

# -- Kudu server components require local file systems

# to be mounted during start and stop to have access to config, data

# and log files (which are usually placed at local filesystems).

# -- If remote filesystems (NFS, etc.) are present, some related data

# might reside there, so $remote_fs is also in the dependencies.

# -- Kudu is a distributed system and usually deployed at multiple machines,

# so $network is in the dependencies for start and stop.

# -- Kudu server components use precision timing provided by hardware clock,

# so $time is in the dependencies for start.

# -- Kudu assumes time is synchronized between participating machines,

# so ntpd service required for the start phase.

#

### BEGIN INIT INFO

# Provides: kudu-master

# Short-Description: Kudu Master Server

# Default-Start: 3 4 5

# Default-Stop: 0 1 2 6

# Required-Start: $time $local_fs $network ntpd

# Required-Stop: $local_fs $network

# Should-Start: $remote_fs

# Should-Stop: $remote_fs

### END INIT INFO

. /lib/lsb/init-functions

. /etc/default/kudu-master

RETVAL_SUCCESS=0

STATUS_RUNNING=0

STATUS_DEAD=1

STATUS_DEAD_AND_LOCK=2

STATUS_NOT_RUNNING=3

STATUS_OTHER_ERROR=102

ERROR_PROGRAM_NOT_INSTALLED=5

ERROR_PROGRAM_NOT_CONFIGURED=6

RETVAL=0

SLEEP_TIME=5

DAEMON="kudu-master"

DESC="Kudu Master Server"

EXEC_PATH="/usr/sbin/$DAEMON"

SVC_USER="kudu"

#CONF_PATH="/etc/kudu/conf/master.gflagfile"

CONF_PATH=/usr/apache/kudu/conf.dist/master.gflagfile

RUNDIR="/var/run/kudu"

PIDFILE="$RUNDIR/$DAEMON-kudu.pid"

INFOFILE="$RUNDIR/$DAEMON-kudu.json"

LOCKDIR="/var/lock/subsys"

LOCKFILE="$LOCKDIR/$DAEMON"

# Print something and exit.

kudu_die() {

echo $2

exit $1

}

# Check if the server is alive, looping for a little while.

kudu_check() {

for i in $(seq 1 $SLEEP_TIME); do

if [ -f $INFOFILE ]; then

return $STATUS_RUNNING

fi

sleep 1

done

return $STATUS_DEAD

}

[ -n "$FLAGS_rpc_bind_addresses" ] || kudu_die $ERROR_PROGRAM_NOT_CONFIGURED \
  "Missing FLAGS_rpc_bind_addresses environment variable"

[ -n "$FLAGS_log_dir" ] || kudu_die $ERROR_PROGRAM_NOT_CONFIGURED \
  "Missing FLAGS_log_dir environment variable"

install -d -m 0755 -o kudu -g kudu /var/run/kudu 1>/dev/null 2>&1 || :

[ -d "$LOCKDIR" ] || install -d -m 0755 $LOCKDIR 1>/dev/null 2>&1 || :

start() {

[ -x $EXEC_PATH ] || kudu_die $ERROR_PROGRAM_NOT_INSTALLED \
  "Could not find binary $EXEC_PATH"

[ -r $CONF_PATH ] || kudu_die $ERROR_PROGRAM_NOT_CONFIGURED \
  "Could not find config path $CONF_PATH"

checkstatus >/dev/null 2>/dev/null

status=$?

if [ "$status" -eq "$STATUS_RUNNING" ]; then

log_success_msg "${DESC} is running"

exit 0

fi

rm -f $INFOFILE

/bin/su -s /bin/bash -c "/bin/bash -c 'cd ~ && echo \$\$ > ${PIDFILE} && exec ${EXEC_PATH} --server_dump_info_path=$INFOFILE --flagfile=${CONF_PATH} > ${FLAGS_log_dir}/${DAEMON}.out 2>&1' &" $SVC_USER

RETVAL=$?

if [ $RETVAL -eq $STATUS_RUNNING ]; then

kudu_check

RETVAL=$?

if [ $RETVAL -eq $STATUS_RUNNING ]; then

touch $LOCKFILE

log_success_msg "Started ${DESC} (${DAEMON}): "

return $RETVAL

fi

fi

log_failure_msg "Failed to start ${DESC}. Return value: $RETVAL"

return $RETVAL

}

stop() {

killproc -p $PIDFILE $EXEC_PATH

RETVAL=$?

if [ $RETVAL -eq $RETVAL_SUCCESS ]; then

log_success_msg "Stopped ${DESC}: "

rm -f $LOCKFILE $PIDFILE $INFOFILE

else

log_failure_msg "Failure to stop ${DESC}. Return value: $RETVAL"

fi

return $RETVAL

}

restart() {

stop

start

}

checkstatusofproc(){

pidofproc -p $PIDFILE $DAEMON > /dev/null

}

checkstatus(){

checkstatusofproc

status=$?

case "$status" in

$STATUS_RUNNING)

log_success_msg "${DESC} is running"

;;

$STATUS_DEAD)

log_failure_msg "${DESC} is dead and pid file exists"

;;

$STATUS_DEAD_AND_LOCK)

log_failure_msg "${DESC} is dead and lock file exists"

;;

$STATUS_NOT_RUNNING)

log_failure_msg "${DESC} is not running"

;;

*)

log_failure_msg "${DESC} status is unknown"

;;

esac

return $status

}

condrestart(){

[ -e $LOCKFILE ] && restart || :

}

check_for_root() {

if [ $(id -ur) -ne 0 ]; then

echo 'Error: root user required'

echo

exit 1

fi

}

service() {

case "$1" in

start)

check_for_root

start

;;

stop)

check_for_root

stop

;;

status)

checkstatus

RETVAL=$?

;;

restart)

check_for_root

restart

;;

condrestart|try-restart)

check_for_root

condrestart

;;

*)

echo $"Usage: $0 {start|stop|status|restart|try-restart|condrestart}"

exit 1

esac

}

service "$@"

exit $RETVAL

3.7 Adding a Kudu tserver Service Script

Create the script with vim /usr/apache/kudu/bin/kudu-tserver. It can be used to start or stop the Kudu tserver service.

#!/usr/bin/env bash

#

# Licensed to the Apache Software Foundation (ASF) under one or more

# contributor license agreements. See the NOTICE file distributed with

# this work for additional information regarding copyright ownership.

# The ASF licenses this file to You under the Apache License, Version 2.0

# (the "License"); you may not use this file except in compliance with

# the License. You may obtain a copy of the License at

#

# http://www.apache.org/licenses/LICENSE-2.0

#

# Unless required by applicable law or agreed to in writing, software

# distributed under the License is distributed on an "AS IS" BASIS,

# WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.

# See the License for the specific language governing permissions and

# limitations under the License.

#

# Starts a Kudu Tablet Server

#

# chkconfig: 345 92 8

# description: Kudu Tablet Server

#

# Notes on service/facility dependencies:

# -- Kudu server components require local file systems

# to be mounted during start and stop to have access to config, data

# and log files (which are usually placed at local filesystems).

# -- If remote filesystems (NFS, etc.) are present, some related data

# might reside there, so $remote_fs is also in the dependencies.

# -- Kudu is a distributed system and usually deployed at multiple machines,

# so $network is in the dependencies for start and stop.

# -- Kudu server components use precision timing provided by hardware clock,

# so $time is in the dependencies for start.

# -- Kudu assumes time is synchronized between participating machines,

# so ntpd service required for the start phase.

#

### BEGIN INIT INFO

# Provides: kudu-tserver

# Short-Description: Kudu Tablet Server

# Default-Start: 3 4 5

# Default-Stop: 0 1 2 6

# Required-Start: $time $local_fs $network ntpd

# Required-Stop: $local_fs $network

# Should-Start: $remote_fs

# Should-Stop: $remote_fs

### END INIT INFO

. /lib/lsb/init-functions

. /etc/default/kudu-tserver

RETVAL_SUCCESS=0

STATUS_RUNNING=0

STATUS_DEAD=1

STATUS_DEAD_AND_LOCK=2

STATUS_NOT_RUNNING=3

STATUS_OTHER_ERROR=102

ERROR_PROGRAM_NOT_INSTALLED=5

ERROR_PROGRAM_NOT_CONFIGURED=6

RETVAL=0

SLEEP_TIME=5

DAEMON="kudu-tserver"

DESC="Kudu Tablet Server"

EXEC_PATH="/usr/sbin/$DAEMON"

SVC_USER="kudu"

#CONF_PATH="/etc/kudu/conf/tserver.gflagfile"

CONF_PATH="/usr/apache/kudu/conf.dist/tserver.gflagfile"

RUNDIR="/var/run/kudu"

PIDFILE="$RUNDIR/$DAEMON-kudu.pid"

INFOFILE="$RUNDIR/$DAEMON-kudu.json"

LOCKDIR="/var/lock/subsys"

LOCKFILE="$LOCKDIR/$DAEMON"

# Print something and exit.

kudu_die() {

echo $2

exit $1

}

# Check if the server is alive, looping for a little while.

kudu_check() {

for i in $(seq 1 $SLEEP_TIME); do

if [ -f $INFOFILE ]; then

return $STATUS_RUNNING

fi

sleep 1

done

return $STATUS_DEAD

}

[ -n "$FLAGS_rpc_bind_addresses" ] || kudu_die $ERROR_PROGRAM_NOT_CONFIGURED \
  "Missing FLAGS_rpc_bind_addresses environment variable"

[ -n "$FLAGS_log_dir" ] || kudu_die $ERROR_PROGRAM_NOT_CONFIGURED \
  "Missing FLAGS_log_dir environment variable"

install -d -m 0755 -o kudu -g kudu /var/run/kudu 1>/dev/null 2>&1 || :

[ -d "$LOCKDIR" ] || install -d -m 0755 $LOCKDIR 1>/dev/null 2>&1 || :

start() {

[ -x $EXEC_PATH ] || kudu_die $ERROR_PROGRAM_NOT_INSTALLED \
  "Could not find binary $EXEC_PATH"

[ -r $CONF_PATH ] || kudu_die $ERROR_PROGRAM_NOT_CONFIGURED \
  "Could not find config path $CONF_PATH"

checkstatus >/dev/null 2>/dev/null

status=$?

if [ "$status" -eq "$STATUS_RUNNING" ]; then

log_success_msg "${DESC} is running"

exit 0

fi

rm -f $INFOFILE

/bin/su -s /bin/bash -c "/bin/bash -c 'cd ~ && echo \$\$ > ${PIDFILE} && exec ${EXEC_PATH} --server_dump_info_path=$INFOFILE --flagfile=${CONF_PATH} > ${FLAGS_log_dir}/${DAEMON}.out 2>&1' &" $SVC_USER

RETVAL=$?

if [ $RETVAL -eq $STATUS_RUNNING ]; then

kudu_check

RETVAL=$?

if [ $RETVAL -eq $STATUS_RUNNING ]; then

touch $LOCKFILE

log_success_msg "Started ${DESC} (${DAEMON}): "

return $RETVAL

fi

fi

log_failure_msg "Failed to start ${DESC}. Return value: $RETVAL"

return $RETVAL

}

stop() {

killproc -p $PIDFILE $EXEC_PATH

RETVAL=$?

if [ $RETVAL -eq $RETVAL_SUCCESS ]; then

log_success_msg "Stopped ${DESC}: "

rm -f $LOCKFILE $PIDFILE $INFOFILE

else

log_failure_msg "Failure to stop ${DESC}. Return value: $RETVAL"

fi

return $RETVAL

}

restart() {

stop

start

}

checkstatusofproc(){

pidofproc -p $PIDFILE $DAEMON > /dev/null

}

checkstatus(){

checkstatusofproc

status=$?

case "$status" in

$STATUS_RUNNING)

log_success_msg "${DESC} is running"

;;

$STATUS_DEAD)

log_failure_msg "${DESC} is dead and pid file exists"

;;

$STATUS_DEAD_AND_LOCK)

log_failure_msg "${DESC} is dead and lock file exists"

;;

$STATUS_NOT_RUNNING)

log_failure_msg "${DESC} is not running"

;;

*)

log_failure_msg "${DESC} status is unknown"

;;

esac

return $status

}

condrestart(){

[ -e $LOCKFILE ] && restart || :

}

check_for_root() {

if [ $(id -ur) -ne 0 ]; then

echo 'Error: root user required'

echo

exit 1

fi

}

service() {

case "$1" in

start)

check_for_root

start

;;

stop)

check_for_root

stop

;;

status)

checkstatus

RETVAL=$?

;;

restart)

check_for_root

restart

;;

condrestart|try-restart)

check_for_root

condrestart

;;

*)

echo $"Usage: $0 {start|stop|status|restart|try-restart|condrestart}"

exit 1

esac

}

service "$@"

exit $RETVAL

3.8 Copying to the Other Nodes

The main files to copy are:

/usr/apache/kudu/conf.dist/master.gflagfile

/usr/apache/kudu/conf.dist/tserver.gflagfile

/etc/default/kudu-tserver

/etc/default/kudu-master
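A loop such as the following can push these files to the other nodes. It is a sketch under several assumptions: the files were prepared on cdh01, root SSH access to the other hosts is available, and scp is the transfer tool. By default it only prints the commands; set DRY_RUN=0 to run them. After copying, FLAGS_rpc_bind_addresses in the two /etc/default files still has to be adjusted on each host.

```shell
#!/usr/bin/env bash
# Files listed in this section.
files="/usr/apache/kudu/conf.dist/master.gflagfile
/usr/apache/kudu/conf.dist/tserver.gflagfile
/etc/default/kudu-tserver
/etc/default/kudu-master"

# Assumption: the files were created on cdh01, so only the other two hosts are targets.
targets="cdh02 cdh03"

# DRY_RUN=1 (the default) prints the commands instead of executing them.
DRY_RUN="${DRY_RUN:-1}"

distribute() {
  local host file
  for host in $targets; do
    for file in $files; do
      if [ "$DRY_RUN" = "1" ]; then
        echo "scp -p $file root@$host:$file"
      else
        scp -p "$file" "root@$host:$file"
      fi
    done
  done
}

distribute
```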

3.9 Starting the Cluster

# 1 Set ownership and permissions
chown -R kudu:kudu /data/kudu-1.15.0
chown -R kudu:kudu /usr/apache/kudu
chown -R kudu:kudu /var/log/kudu
chown kudu:kudu /etc/default/kudu-master
chown kudu:kudu /etc/default/kudu-tserver
chmod +x /usr/apache/kudu/bin/kudu-master
chmod +x /usr/apache/kudu/bin/kudu-tserver

# 2 Start the services with the scripts
/usr/apache/kudu/bin/kudu-master start
/usr/apache/kudu/bin/kudu-tserver start

If startup fails with /lib64/libstdc++.so.6: version 'GLIBCXX_3.4.21' not found, run strings /usr/lib64/libstdc++.so.6 | grep GLIBCXX to list the versions the installed library provides. If the required version is missing, locate a newer library on the build server with find / -name "libstdc++.so*" and copy it to /lib64, or upgrade gcc.
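The check described above can be scripted so it is easy to repeat on every node. This is a sketch; has_glibcxx is a made-up helper name, and the library path is the CentOS location from the text and may differ on other distributions.

```shell
#!/usr/bin/env bash
# Succeeds when the list of strings on stdin contains exactly the given version tag.
has_glibcxx() {
  grep -Fxq "$1"
}

lib="/usr/lib64/libstdc++.so.6"   # path from the text above
want="GLIBCXX_3.4.21"

if [ -r "$lib" ] && command -v strings >/dev/null 2>&1; then
  if strings "$lib" | has_glibcxx "$want"; then
    echo "$lib provides $want"
  else
    echo "$lib lacks $want; copy a newer libstdc++ or upgrade gcc"
  fi
else
  echo "skipped: $lib or strings(1) is not available on this machine"
fi
```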

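4 Testing

# 1 Check the service status
# 2 Use the status action of the service scripts
/usr/apache/kudu/bin/kudu-master status
/usr/apache/kudu/bin/kudu-tserver status

# 3 Load a test table
# The command prints a line such as
#   Using auto-created table 'default.loadgen_auto_0fb2ae2cfecd4b13b222477ef02b15f9'
# which names the test table; use the name printed by your own run in the commands that follow.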

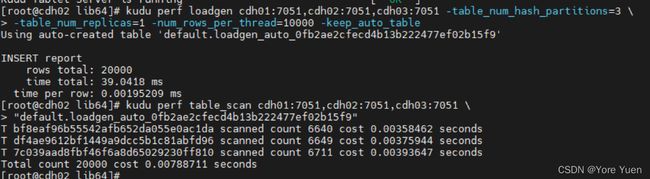

kudu perf loadgen cdh01:7051,cdh02:7051,cdh03:7051 -table_num_hash_partitions=3 \
  -table_num_replicas=1 -num_rows_per_thread=10000 -keep_auto_table

#Using auto-created table 'default.loadgen_auto_69feea5b392c4992a7b672940206ad72'

#INSERT report

# rows total: 20000

# time total: 130.092 ms

# time per row: 0.00650458 ms

# 4 Show table data statistics
# The table name comes from the output above
kudu perf table_scan cdh01:7051,cdh02:7051,cdh03:7051 \
  "default.loadgen_auto_0fb2ae2cfecd4b13b222477ef02b15f9"

# 5 Scan the data
kudu table scan cdh01:7051,cdh02:7051,cdh03:7051 \
  "default.loadgen_auto_0fb2ae2cfecd4b13b222477ef02b15f9"

# 6 Show the table's tablets
kudu table list -tables "default.loadgen_auto_0fb2ae2cfecd4b13b222477ef02b15f9" \
  -list-tablets cdh01:7051,cdh02:7051,cdh03:7051

# 7 Describe the table
kudu table describe cdh01:7051,cdh02:7051,cdh03:7051 \
  "default.loadgen_auto_0fb2ae2cfecd4b13b222477ef02b15f9"

Open the Kudu Master UI in a browser:

- http://cdh01:8051

- http://cdh02:8051

- http://cdh03:8051

Open the Kudu tserver UI in a browser:

- http://cdh01:8050

- http://cdh02:8050

- http://cdh03:8050


5 Common Commands

# Option 1: start the services directly
# Not recommended: checking status and shutting down are both inconvenient this way
kudu-master --flagfile=/usr/apache/kudu/conf.dist/master.gflagfile &
kudu-tserver --flagfile=/usr/apache/kudu/conf.dist/tserver.gflagfile &

# Option 2: start and stop the services with the scripts
# Recommended
/usr/apache/kudu/bin/kudu-master start
/usr/apache/kudu/bin/kudu-tserver start

# 2 Inspect the Kudu cluster
# Run a health check across the cluster and all tables (must be run as the kudu user)
sudo -u kudu kudu cluster ksck cdh01:7051,cdh02:7051,cdh03:7051

# 3 List the Masters
kudu master list cdh01:7051,cdh02:7051,cdh03:7051

# List the tservers
kudu tserver list cdh01:7051,cdh02:7051,cdh03:7051

# 4 List all tables
kudu table list cdh01:7051,cdh02:7051,cdh03:7051

# 5 Show a table's tablets
kudu table list -tables impala::impala_kudu_model.t_m_tag_cus_res \
  -list-tablets cdh01:7051,cdh02:7051,cdh03:7051

# 6 Scan data
kudu table scan cdh01:7051,cdh02:7051,cdh03:7051 \
  "default.loadgen_auto_0fb2ae2cfecd4b13b222477ef02b15f9"

# The host names in the remaining examples (bdd11, bdd12, bdd13, app1, es2, shuke1-3)
# differ from the cdh01-cdh03 cluster deployed above; substitute your own master addresses.

# 7 Rebalance
sudo -u kudu kudu cluster rebalance bdd11,bdd12,bdd13,app1,es2

# 8 Change a flag at runtime
kudu master set_flag bdd11:7051 scanner_ttl_ms 6000000 --force
kudu tserver set_flag bdd11:7050 scanner_ttl_ms 6000000 --force

# 9 Describe a table
kudu table describe bdd11:7051,bdd12:7051,bdd13:7051,app1:7051,es2:7051 \
  impala::impala_kudu_model.t_m_bus_tmp_ply

# 10 Delete a table
kudu table delete bdd11:7051,bdd12:7051,bdd13:7051,app1:7051,es2:7051 <table_name>

# 11 Rename a table
kudu table rename_table bdd11:7051,bdd12:7051,bdd13:7051,app1:7051,es2:7051 \
  impala::impala_kudu_model.t_m_tag_cus_res_20210707_r t_m_tag_cus_res_20210707_r

# 12 Create a table from a JSON description (not supported in version 1.10)
# The JSON is wrapped in single quotes so the shell passes it as one argument.
kudu table create shuke1:7051,shuke2:7051,shuke3:7051 '{"schema":{"columns":[{"desired_block_size":0,"compression_algorithm":"DEFAULT_COMPRESSION","column_name":"c_ply_no","comment":"","column_type":"STRING","encoding":"AUTO_ENCODING"},{"desired_block_size":0,"compression_algorithm":"DEFAULT_COMPRESSION","column_name":"insu_obj","comment":"","column_type":"STRING","encoding":"AUTO_ENCODING"},{"desired_block_size":0,"compression_algorithm":"DEFAULT_COMPRESSION","column_name":"ply_type","comment":"","column_type":"STRING","encoding":"AUTO_ENCODING"}],"key_column_names":["c_ply_no","insu_obj","ply_type"]},"partition":{"hash_partitions":[{"seed":0,"columns":[0],"num_buckets":5}]},"num_replicas":3,"extra_configs":{"configs":{}},"table_name":"t_m_bus_tmp_ply___"}'