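ZooKeeper

- ZooKeeper

- 1.2 Features of ZooKeeper

- 1.3 ZooKeeper data structure

- 1.4 ZooKeeper use cases

- 1.5 Downloading ZooKeeper

- 1.6 Setting up ZooKeeper

- 1.7 The ZooKeeper configuration file explained

- 1.8 Setting up a ZooKeeper cluster

- 1.9 Leader election on first startup

- 1.10 Leader election after first startup

- 1.11 ZNode information

- 1.12 Node types

- 1.13 Watching nodes

- 1.14 Operating ZooKeeper from Java

- 1.15 Listening for node changes

- 1.16 Checking whether a node exists

- 1.17 Client writes

- 1.15 Dynamic server online/offline

- 1.18 Implementing dynamic server online/offline

- 1.19 ZooKeeper distributed lock

- 1.20 Implementing a distributed lock with the Curator framework

ZooKeeper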

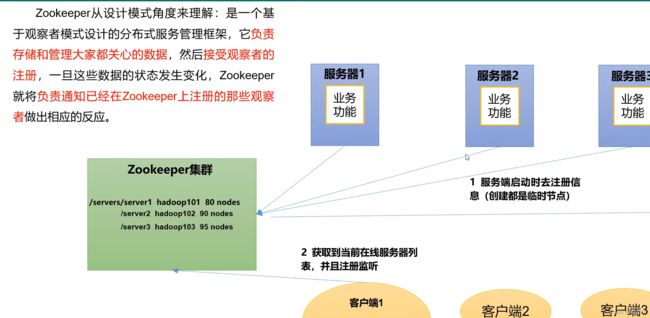

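- From a design-pattern point of view, ZooKeeper is a distributed service-management framework built on the observer pattern.

It stores and manages the data that everyone cares about and accepts registrations from observers. As soon as that data changes, ZooKeeper notifies the observers registered with it so that they can react accordingly.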

1.2 Features of ZooKeeper

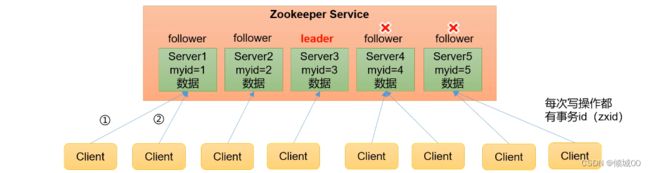

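- 1) A ZooKeeper cluster consists of one leader (Leader) and multiple followers (Follower).

- 2) The cluster keeps serving as long as more than half of its nodes are alive, so ZooKeeper is best deployed on an odd number of servers.

- 3) Global data consistency: every server keeps an identical copy of the data, so a client sees the same data whichever server it connects to.

- 4) Ordered updates: requests from the same client are executed in the order in which they were sent.

- 5) Atomic updates: a data update either succeeds completely or fails completely.

- 6) Timeliness: within a bounded period of time, a client can read the latest data.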
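The "more than half" rule in point 2 can be checked with a little arithmetic. The following is only an illustrative sketch (the class and method names are made up for this note, not part of ZooKeeper); it shows why an even-sized cluster tolerates no more failures than the odd-sized cluster one server smaller.

```java
// Quorum arithmetic for a ZooKeeper-style cluster (illustrative sketch only).
class QuorumMath {
    // Smallest number of live servers that is strictly more than half.
    static int majority(int ensembleSize) {
        return ensembleSize / 2 + 1;
    }

    // How many servers may fail while a majority is still alive.
    static int toleratedFailures(int ensembleSize) {
        return ensembleSize - majority(ensembleSize);
    }

    public static void main(String[] args) {
        for (int n = 3; n <= 6; n++) {
            System.out.println(n + " servers: majority=" + majority(n)
                    + ", tolerated failures=" + toleratedFailures(n));
        }
    }
}
```

Three and four servers both tolerate one failure, and five and six both tolerate two, so the extra even-numbered server adds cost without adding fault tolerance.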

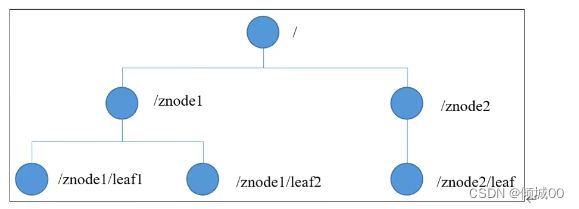

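1.3 ZooKeeper data structure

1.4 ZooKeeper use cases

The services it provides include unified naming, unified configuration management, unified cluster management, dynamic server online/offline detection, and soft load balancing.

- Unified naming

In a distributed environment, applications and services often need a unified name so that they are easy to identify.

For example, an IP address is hard to remember, but a domain name is easy.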

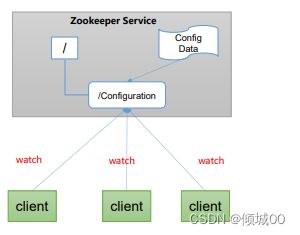

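- Unified configuration management

1) In a distributed environment, keeping configuration in sync is a very common need.

All nodes in a cluster are generally expected to share the same configuration, as in a Kafka cluster.

After a configuration file is changed, the change should reach every node as quickly as possible.

2) Configuration management can be implemented with ZooKeeper.

Write the configuration into a znode in ZooKeeper.

Have every client watch that znode.

As soon as the data in the znode changes, ZooKeeper notifies each client.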

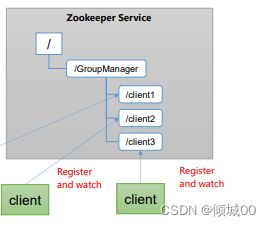

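- Unified cluster management

- 1) In a distributed environment, it is essential to know the state of every node.

- Adjustments can then be made based on each node's real-time state.

- 2) ZooKeeper can monitor node changes.

- Write the node information into a znode in ZooKeeper.

- Watch that znode to follow its state changes in real time.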

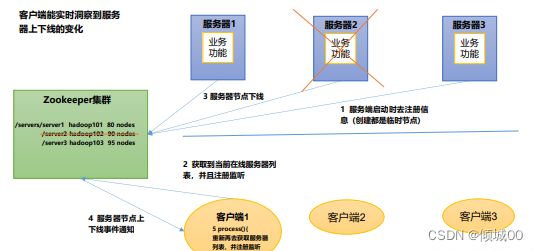

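- Servers going online and offline

- Clients can see servers come online and go offline in real time.

- Load balancing

- Record the number of visits to each server in ZooKeeper, and let the server with the fewest visits handle the newest client request.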
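The load-balancing idea above, picking the server with the fewest recorded visits, can be sketched in plain Java. This is a stand-alone illustration: the visit counts live in an in-memory map rather than in ZooKeeper, and the server names and counts are invented.

```java
import java.util.LinkedHashMap;
import java.util.Map;

// Least-visited selection over an in-memory table (illustrative sketch only).
class LeastVisited {
    // Returns the server with the smallest visit count; the first one wins ties.
    static String pick(Map<String, Integer> visits) {
        String best = null;
        for (Map.Entry<String, Integer> e : visits.entrySet()) {
            if (best == null || e.getValue() < visits.get(best)) {
                best = e.getKey();
            }
        }
        return best;
    }

    public static void main(String[] args) {
        Map<String, Integer> visits = new LinkedHashMap<>();
        visits.put("server-a", 12); // hypothetical counts
        visits.put("server-b", 7);
        visits.put("server-c", 9);
        String chosen = pick(visits);
        visits.merge(chosen, 1, Integer::sum); // record the new request
        System.out.println(chosen + " now has " + visits.get(chosen) + " visits");
    }
}
```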

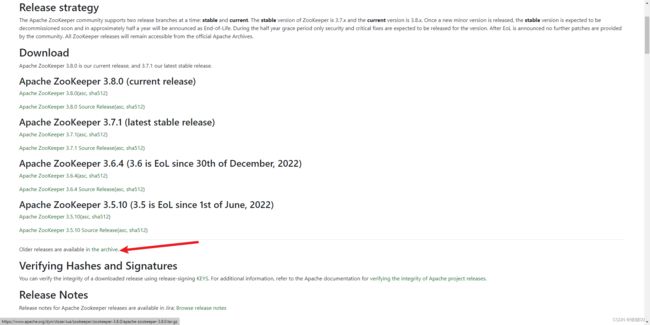

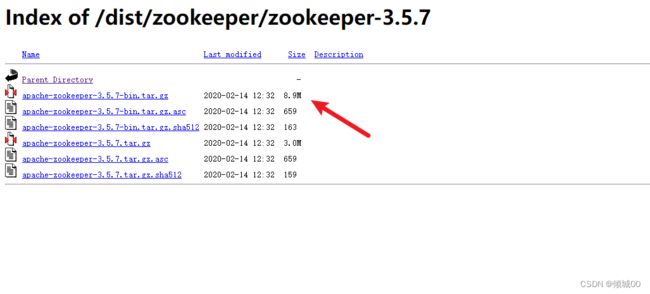

1.5 Downloading ZooKeeper

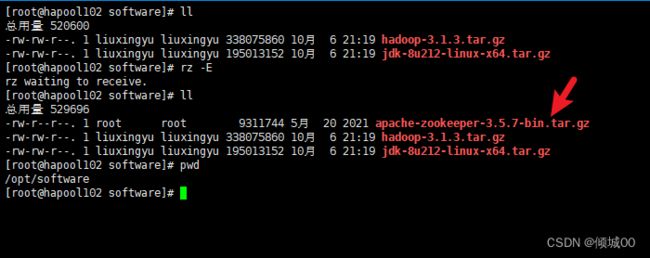

1.6 Setting up ZooKeeper

- First install a Java environment

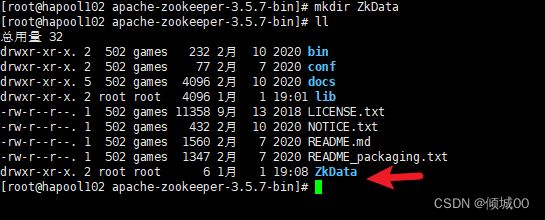

tar -zxvf apache-zookeeper-3.5.7-bin.tar.gz -C /opt/module/

- This extracts the archive into the module directory

- Go into the extracted directory and create a ZkData directory to store the data

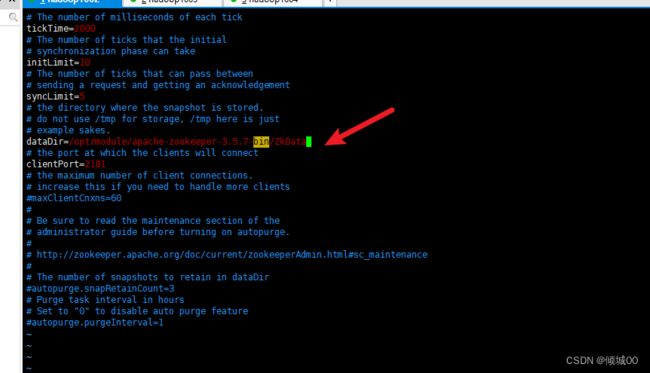

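- In the conf directory, edit the zoo_sample.cfg file (vim zoo_sample.cfg)

- Change the storage location: by default the data is kept in a temporary directory, so point it at the directory just created

- Rename the configuration file: mv zoo_sample.cfg zoo.cfg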

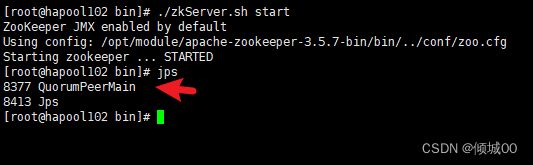

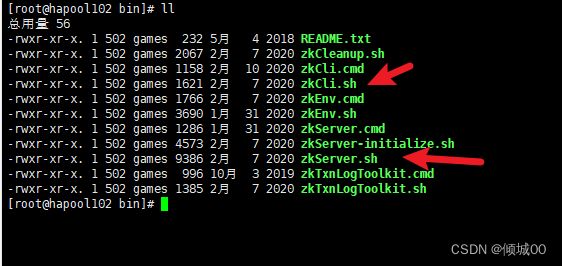

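- In the bin directory, the upper script in the screenshot starts the client and the lower one starts the server

- Start the server

- In the bin directory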

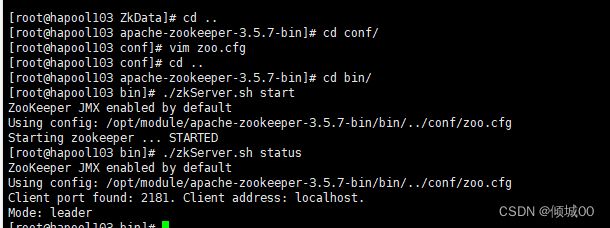

- ./zkServer.sh start

- Check the status

./zkServer.sh status

- Start the client

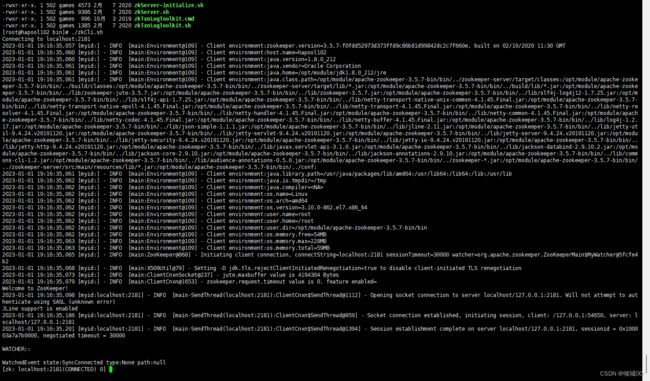

./zkCli.sh

Type quit to exit

- Stop ZooKeeper

./zkServer.sh stop

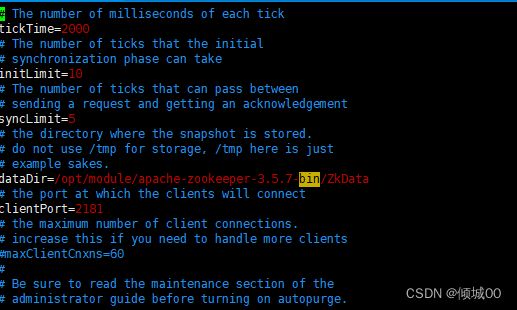

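1.7 The ZooKeeper configuration file explained

The parameters in ZooKeeper's configuration file zoo.cfg have the following meanings:

- 1) tickTime: the basic time unit in milliseconds, used as the heartbeat interval

- 2) initLimit: initial connection limit

The maximum number of heartbeats (multiples of tickTime) tolerated while a Follower makes its initial connection to the Leader

- 3) syncLimit: 5, the synchronization limit

If communication between the Leader and a Follower takes longer than syncLimit * tickTime, the Leader considers the Follower dead and removes it from the server list.

- 4) dataDir: where ZooKeeper stores its data

Note: the default is a directory under tmp, which Linux tends to clean up periodically, so the default is generally not used.

- 5) clientPort = 2181: the port clients connect to; usually left unchanged.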
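To make the syncLimit * tickTime rule concrete, here is a small sketch that parses zoo.cfg-style text with `java.util.Properties` and converts the tick-based limits into milliseconds. The values tickTime=2000 and initLimit=10 are assumptions for the example (they are not stated in these notes), and the class name is invented.

```java
import java.io.IOException;
import java.io.StringReader;
import java.io.UncheckedIOException;
import java.util.Properties;

// Turns tick-based limits from zoo.cfg-style text into milliseconds (sketch only).
class ZooCfgTimeouts {
    // Parses key=value lines such as those found in zoo.cfg.
    static Properties parse(String cfg) {
        Properties p = new Properties();
        try {
            p.load(new StringReader(cfg));
        } catch (IOException e) {
            throw new UncheckedIOException(e);
        }
        return p;
    }

    // initLimit and syncLimit are counts of ticks; multiply by tickTime to get ms.
    static long limitMillis(Properties p, String limitKey) {
        long tick = Long.parseLong(p.getProperty("tickTime").trim());
        long limit = Long.parseLong(p.getProperty(limitKey).trim());
        return tick * limit;
    }

    public static void main(String[] args) {
        // tickTime and initLimit below are assumed example values.
        String cfg = "tickTime=2000\ninitLimit=10\nsyncLimit=5\n"
                + "dataDir=/opt/module/apache-zookeeper-3.5.7-bin/ZkData\n"
                + "clientPort=2181\n";
        Properties p = parse(cfg);
        System.out.println("initial connection window (ms): " + limitMillis(p, "initLimit"));
        System.out.println("sync window (ms): " + limitMillis(p, "syncLimit"));
    }
}
```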

1.8 Setting up a ZooKeeper cluster

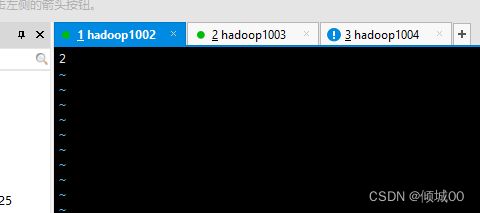

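Configure the server ID

First go to the /opt/module/apache-zookeeper-3.5.7-bin/ZkData directory and create a file named myid. This walkthrough builds the cluster on three machines: 102, 103, and 104.

- The file holds a unique identifier, much like an ID card, that says which server this machine is.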

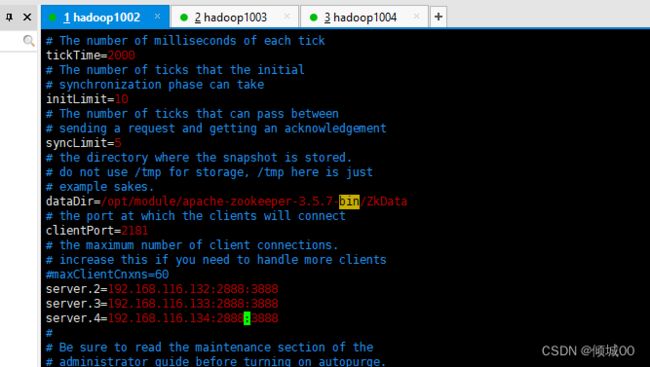

Configure the zoo.cfg file

server.2=192.168.116.132:2888:3888

server.3=192.168.116.133:2888:3888

server.4=192.168.116.134:2888:3888

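- Parameters:

- server.A=B:C:D

- A is a number that identifies the server (the value just written to myid). In cluster mode each server has a myid file in its dataDir directory containing the value of A. At startup ZooKeeper reads this file and compares the value with the entries in zoo.cfg to work out which server it is.

- B is the address of the server.

- C is the port Followers use to exchange information with the Leader.

- D is the port used for elections: if the Leader goes down, the servers communicate with each other over this port to elect a new Leader.

- Distribute the configuration file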

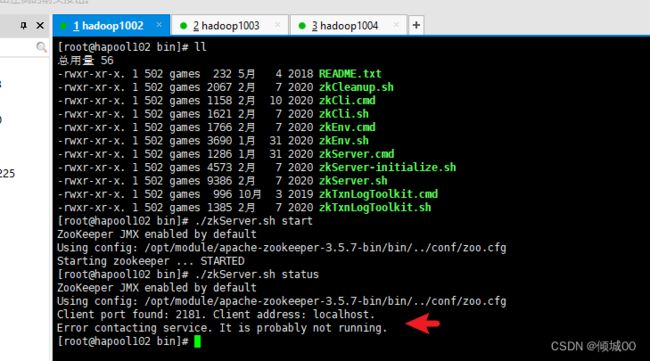

- Start the cluster, beginning with 102

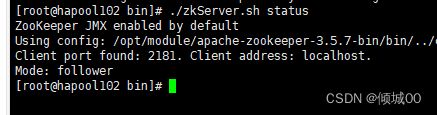

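In the bin directory, run

./zkServer.sh start

Then check the status

./zkServer.sh status

- The status check reports an error. Three servers are listed in the configuration file, and a ZooKeeper cluster only starts serving once more than half of its servers are up, so at least 2 of the 3 machines (more than 1.5) must be running.

- Start the server on 103

- 103 turns out to be the leader

- Check 102 again: its role is now follower

- Start 104: its role is follower

- PS: whether the cluster can start depends on how many servers are listed in the configuration file; it starts only once more than half of them are up

- The server IDs in the configuration file are the unique IDs written to each myid file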
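The server.A=B:C:D format described above is easy to take apart in code. The sketch below is not ZooKeeper's own parser; it is a hypothetical helper that handles only the plain three-part form used in these notes.

```java
// Splits a "server.A=B:C:D" line into its four parts (illustrative sketch only).
class ServerEntry {
    final int id;           // A: must match the value in the myid file
    final String host;      // B: address of the server
    final int quorumPort;   // C: Follower <-> Leader information exchange
    final int electionPort; // D: leader election

    ServerEntry(int id, String host, int quorumPort, int electionPort) {
        this.id = id;
        this.host = host;
        this.quorumPort = quorumPort;
        this.electionPort = electionPort;
    }

    static ServerEntry parse(String line) {
        String[] kv = line.trim().split("=", 2);
        if (kv.length != 2 || !kv[0].startsWith("server.")) {
            throw new IllegalArgumentException("not a server entry: " + line);
        }
        int id = Integer.parseInt(kv[0].substring("server.".length()));
        String[] parts = kv[1].split(":");
        if (parts.length != 3) {
            throw new IllegalArgumentException("expected host:port:port in: " + line);
        }
        return new ServerEntry(id, parts[0],
                Integer.parseInt(parts[1]), Integer.parseInt(parts[2]));
    }

    public static void main(String[] args) {
        ServerEntry e = parse("server.2=192.168.116.132:2888:3888");
        System.out.println("myid=" + e.id + " host=" + e.host
                + " quorumPort=" + e.quorumPort + " electionPort=" + e.electionPort);
    }
}
```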

1.9 Leader election on first startup

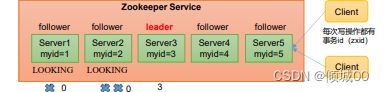

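- Server 1 starts and initiates an election. It votes for itself; with only one vote, which is not more than half, the election cannot complete and server 1 stays in the LOOKING state.

- Server 2 starts and initiates another election. Servers 1 and 2 each vote for themselves and then exchange votes. Server 1 sees that server 2's myid is larger than that of its current choice (server 1) and switches its vote to server 2. Server 1 now has 0 votes and server 2 has 2, which is still not more than half, so no leader is elected and both servers remain LOOKING.

- Server 3 starts and initiates an election. Servers 1 and 2 see that server 3's myid is larger than that of their current choice (server 2) and switch their votes to server 3. Server 3 now has 3 votes, more than half, so it becomes the leader, and servers 1 and 2 become followers.

- Server 4 starts. Servers 1, 2, and 3 are no longer LOOKING and do not change their votes, so server 4 goes along with the majority and becomes a follower.

- Server 5 starts and behaves the same way as server 4.

- Supplement: every client write is assigned a transaction ID (zxid). The identifiers involved in an election are:

- 1) SID: the server ID, identical to the server's myid.

- 2) ZXID: the transaction ID, which identifies one change of server state. At a given moment the ZXIDs of the machines in the cluster are not necessarily identical; this depends on how each server has processed client update requests.

- 3) EPOCH: the number of each leader term. While there is no leader, the value is the same within one round of voting, and it increases after each round.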
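The first-startup walkthrough above can be replayed in a few lines of Java. This is a deliberately simplified model, not ZooKeeper's election code: it assumes servers with myid 1..n start one at a time in id order, and that every LOOKING server ends up voting for the largest myid started so far. The class name is invented.

```java
// Replays the first-startup election for servers started in myid order (sketch only).
class FirstStartElection {
    // Returns the final role of each server; index = myid, index 0 is unused.
    static String[] roles(int ensembleSize) {
        String[] role = new String[ensembleSize + 1];
        int majority = ensembleSize / 2 + 1;
        int leader = 0; // 0 means no leader elected yet
        for (int id = 1; id <= ensembleSize; id++) {
            if (leader != 0) {
                role[id] = "FOLLOWER"; // a leader exists: follow the majority
                continue;
            }
            int votes = id; // all started servers are LOOKING and vote for the largest myid
            if (votes >= majority) {
                leader = id;
                for (int j = 1; j < id; j++) {
                    role[j] = "FOLLOWER";
                }
                role[id] = "LEADER";
            } else {
                for (int j = 1; j <= id; j++) {
                    role[j] = "LOOKING";
                }
            }
        }
        return role;
    }

    public static void main(String[] args) {
        String[] role = roles(5);
        for (int id = 1; id <= 5; id++) {
            System.out.println("server " + id + ": " + role[id]);
        }
    }
}
```

With five servers the model elects server 3, matching the walkthrough; with three servers it elects the second one to start.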

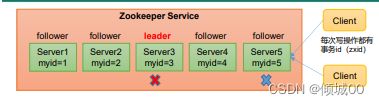

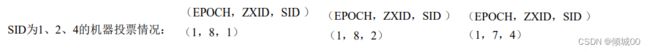

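1.10 Leader election after first startup

- 1) A ZooKeeper server enters an election in either of two situations:

- The server is initializing (it has just started)

- The server loses contact with the leader while running

- 2) When a machine enters the leader-election process, the cluster itself may be in one of two states:

- The cluster still has a leader

- In this case the machine tries to elect a leader but is told that the leader still exists. It only needs to reconnect to the current leader.

- The cluster really has no leader

- Suppose server 5 has gone down and the leader has gone down as well, leaving only servers 1, 2, and 4. Three machines are still more than half, so they hold a new election.

- Votes are compared on (EPOCH (term ID), ZXID (transaction ID), SID (unique server ID))

- EPOCH is compared first; the larger value wins outright

- If EPOCH is equal, ZXID is compared; the larger value wins

- If ZXID is also equal, SID is compared; the larger value wins. SID is the unique ID (myid)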
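The three-level comparison above maps directly onto a chained `Comparator`. The following is a sketch with an invented `Vote` class and made-up numbers; it mirrors the rule as stated in these notes rather than ZooKeeper's internal classes.

```java
import java.util.Arrays;
import java.util.Collections;
import java.util.Comparator;
import java.util.List;

// Picks the election winner by (EPOCH, ZXID, SID), largest first (sketch only).
class Vote {
    final long epoch;
    final long zxid;
    final long sid;

    Vote(long epoch, long zxid, long sid) {
        this.epoch = epoch;
        this.zxid = zxid;
        this.sid = sid;
    }

    // Compare EPOCH first, then ZXID, then SID.
    static final Comparator<Vote> ORDER = Comparator
            .comparingLong((Vote v) -> v.epoch)
            .thenComparingLong(v -> v.zxid)
            .thenComparingLong(v -> v.sid);

    static Vote winner(List<Vote> candidates) {
        return Collections.max(candidates, ORDER);
    }

    public static void main(String[] args) {
        // Hypothetical state of the surviving servers 1, 2 and 4.
        List<Vote> candidates = Arrays.asList(
                new Vote(1, 8, 1),
                new Vote(1, 8, 2),
                new Vote(1, 7, 4));
        System.out.println("new leader: server " + winner(candidates).sid);
    }
}
```

In this made-up state server 4 has the largest SID but a smaller ZXID, so server 2 wins: the SID only breaks ties between candidates whose EPOCH and ZXID are equal.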

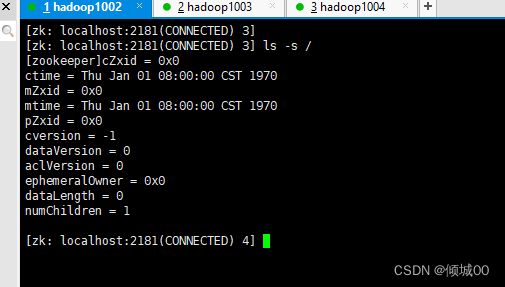

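1.11 ZNode information

- Start the client: ./zkCli.sh

- Run ls -s / to view the basic information

- cZxid: the ZXID of the transaction that created the node. Every change to ZooKeeper's state is assigned a unique ZXID, and ZXIDs are ordered across all changes: if zxid1 is less than zxid2, then zxid1 happened before zxid2.

- ctime: the time the znode was created, in milliseconds since 1970

- mZxid: the ZXID of the transaction that last updated the znode

- mtime: the time the znode was last modified, in milliseconds since 1970

- pZxid: the ZXID of the transaction that last changed the znode's children

- cversion: the child version, that is, the number of changes to the znode's children

- dataVersion: the version number of the znode's data

- aclVersion: the version number of the znode's access control list

- ephemeralOwner: for an ephemeral node, the session ID of the znode's owner; 0 if the node is not ephemeral

- dataLength: the length of the znode's data

- numChildren: the number of children of the znode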
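The zxid fields above are printed as hexadecimal numbers. As background that goes beyond these notes: a zxid is a 64-bit value whose high 32 bits hold the leader epoch and whose low 32 bits hold a counter within that epoch. The sketch below (invented class name, made-up sample value) splits a printed zxid into those two parts.

```java
// Splits a zxid into its epoch (high 32 bits) and counter (low 32 bits). Sketch only.
class ZxidParts {
    static long epoch(long zxid) {
        return zxid >>> 32;
    }

    static long counter(long zxid) {
        return zxid & 0xFFFFFFFFL;
    }

    // Accepts the hexadecimal form shown by the CLI, with or without the 0x prefix.
    static long parseHex(String text) {
        String digits = text.startsWith("0x") ? text.substring(2) : text;
        return Long.parseLong(digits, 16);
    }

    public static void main(String[] args) {
        long zxid = parseHex("0x200000005"); // made-up sample value
        System.out.println("epoch=" + epoch(zxid) + " counter=" + counter(zxid));
    }
}
```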

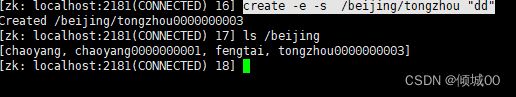

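1.12 Node types

Nodes are either persistent or ephemeral; an ephemeral node is deleted automatically once the client that created it disconnects.

Each kind can be created with or without a sequence number, which gives four types: persistent, persistent sequential, ephemeral, and ephemeral sequential.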

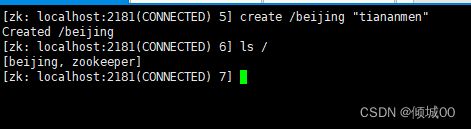

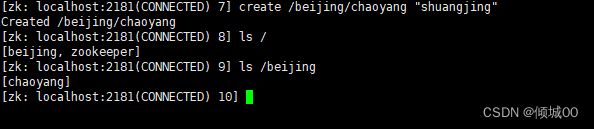

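- 1. Create a persistent node without a sequence number

This creates a beijing node whose value is tiananmen.

create /beijing "tiananmen"

- 2. Create a persistent child node

This creates a chaoyang node under beijing whose value is shuangjing.

create /beijing/chaoyang "shuangjing"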

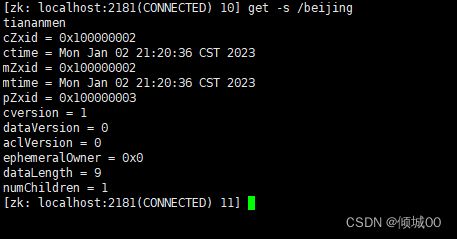

- 3. Get a value

- This returns the value of beijing (tiananmen) together with the node's basic information

get -s /beijing

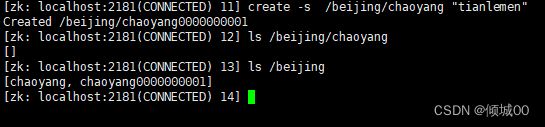

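- 4. Create a persistent node with a sequence number

- A chaoyang node with the value shuangjing already exists, and creating a node with a duplicate name is an error

- Creating the node with a sequence number appends an increasing number to the name, so names never collide

create -s /beijing/chaoyang "tianlemen"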

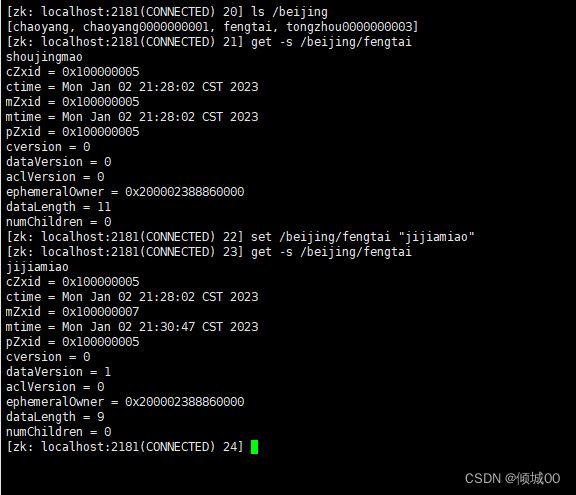
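For reference, the name of a sequential node is the requested path followed by a 10-digit, zero-padded counter. The sketch below only reproduces that naming format; the counter value 2 is made up, and the class name is invented.

```java
import java.util.Locale;

// Reproduces the naming format of sequential nodes (illustrative sketch only).
class SequentialName {
    // Appends a 10-digit, zero-padded counter to the requested path.
    static String name(String requestedPath, int counter) {
        return requestedPath + String.format(Locale.ROOT, "%010d", counter);
    }

    public static void main(String[] args) {
        // The counter value is hypothetical; ZooKeeper assigns the real one.
        System.out.println(name("/beijing/chaoyang", 2));
    }
}
```

Because of the zero padding, sorting the names as plain strings also sorts them by counter, which is what the distributed lock later in these notes relies on.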

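- 5. Create an ephemeral node: fengtai under beijing, with the value shoujingmao

create -e /beijing/fengtai "shoujingmao"

- 6. Create an ephemeral node with a sequence number

create -e -s /beijing/tongzhou "dd"

- 7. Change a value

- The value of fengtai was shoujingmao; change it to jijiamiao

set /beijing/fengtai "jijiamiao"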

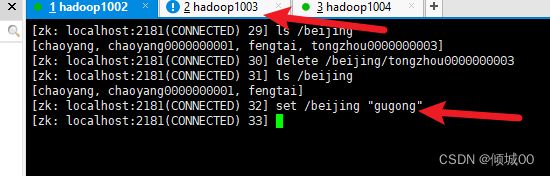

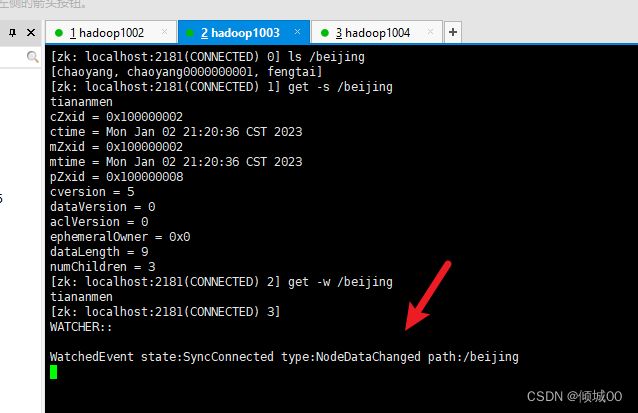

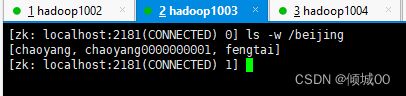

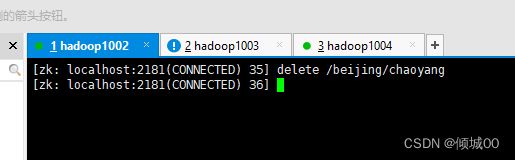

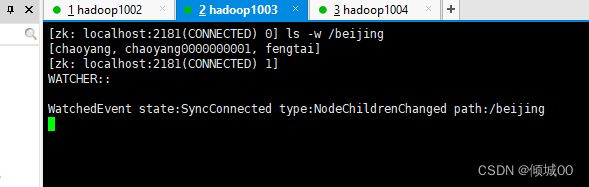

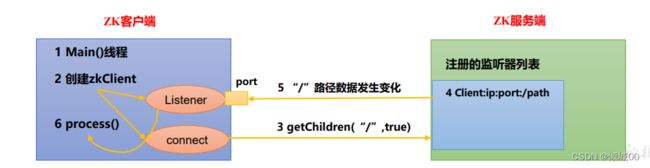

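1.13 Watching nodes

- First there is a main thread

- The main thread creates the ZooKeeper client, which starts two threads: one for network communication (connect) and one for listening (listener)

- The connect thread sends the registered watch events to ZooKeeper

- ZooKeeper adds the registered watch events to its list of registered watchers

- When ZooKeeper detects that the data has changed, it sends a message to the listener thread

- The listener thread then calls the process callback method

- Common watches

- get -w path: watch for changes to a node's data

- ls -w path: watch for child nodes being added or removed

- Watching a node's content

- On server 103, run get -w /beijing to watch whether the content of beijing changes

- When 102 modifies the data, 103 is notified. A watch fires only once, though; to keep watching, it must be registered again

- Watching for child nodes being added or removed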

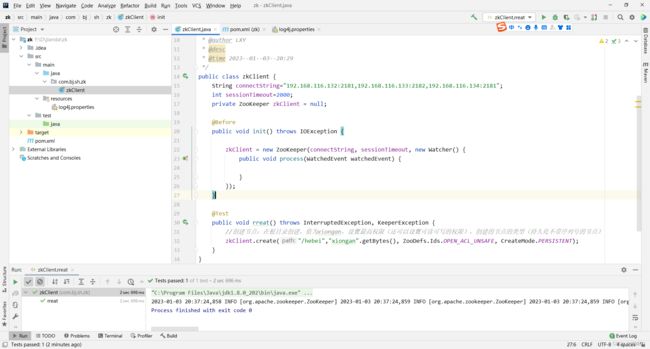
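The one-shot behaviour of watches is easy to miss, so here is a tiny in-memory model of it. This is not the ZooKeeper client API; `OneShotWatches` and its methods are invented to show that a watch is consumed when it fires and must be registered again to see the next change.

```java
import java.util.ArrayList;
import java.util.HashMap;
import java.util.List;
import java.util.Map;

// In-memory model of one-shot watches (illustrative sketch, not the ZooKeeper API).
class OneShotWatches {
    private final Map<String, String> data = new HashMap<>();
    private final Map<String, List<Runnable>> watches = new HashMap<>();

    // Reads a value and, if watch is non-null, leaves a one-time watch on the path.
    String get(String path, Runnable watch) {
        if (watch != null) {
            watches.computeIfAbsent(path, k -> new ArrayList<>()).add(watch);
        }
        return data.get(path);
    }

    // Writes a value, then fires and discards every watch registered on the path.
    void set(String path, String value) {
        data.put(path, value);
        List<Runnable> fired = watches.remove(path);
        if (fired != null) {
            fired.forEach(Runnable::run);
        }
    }

    public static void main(String[] args) {
        OneShotWatches zk = new OneShotWatches();
        int[] notifications = {0};
        zk.set("/beijing", "tiananmen");
        zk.get("/beijing", () -> notifications[0]++); // like: get -w /beijing
        zk.set("/beijing", "first change");           // fires the watch
        zk.set("/beijing", "second change");          // no watch left, nothing fires
        System.out.println("notifications: " + notifications[0]);
    }
}
```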

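1.14 Operating ZooKeeper from Java

- pom

Project coordinates: modelVersion 4.0.0, groupId com.bj.sh.zk, artifactId zk, version 1.0-SNAPSHOT

Dependencies (groupId : artifactId : version):

- junit : junit : RELEASE

- org.apache.logging.log4j : log4j-core : 2.8.2

- org.apache.zookeeper : zookeeper : 3.5.7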

- Create log4j.properties under resources

log4j.rootLogger=INFO, stdout

log4j.appender.stdout=org.apache.log4j.ConsoleAppender

log4j.appender.stdout.layout=org.apache.log4j.PatternLayout

log4j.appender.stdout.layout.ConversionPattern=%d %p [%c] - %m%n

log4j.appender.logfile=org.apache.log4j.FileAppender

log4j.appender.logfile.File=target/spring.log

log4j.appender.logfile.layout=org.apache.log4j.PatternLayout

log4j.appender.logfile.layout.ConversionPattern=%d %p [%c]- %m%n

- zkClient

package com.bj.sh.zk;

import org.apache.zookeeper.*;

import org.apache.zookeeper.data.Stat;

import org.junit.Before;

import org.junit.Test;

import java.io.IOException;

import java.util.List;

/**

* @author LXY

* @desc

* @time 2023--01--03--20:29

*/

public class zkClient {

String connectString="192.168.116.132:2181,192.168.116.133:2182,192.168.116.134:2181";

int sessionTimeout=2000;

private ZooKeeper zkClient = null;

@Before

public void init() throws IOException {

zkClient = new ZooKeeper(connectString, sessionTimeout, new Watcher() {

public void process(WatchedEvent watchedEvent) {

}

});

}

@Test

public void create() throws InterruptedException, KeeperException {

// Create a node under the root with the value "xiongan", a fully open ACL (more restrictive permissions are also available), and the persistent, non-sequential node type

zkClient.create("/hebei","xiongan".getBytes(), ZooDefs.Ids.OPEN_ACL_UNSAFE, CreateMode.PERSISTENT);

}

}


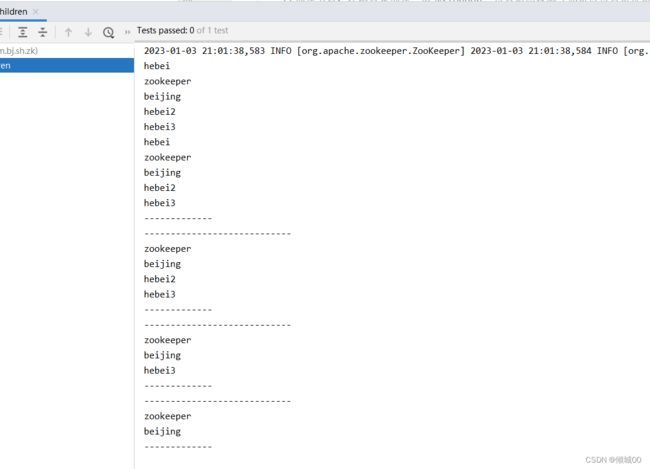

1.15 Listening for node changes

@Test

public void getChildren() throws Exception {

List<String> children = zkClient.getChildren("/", true);

for (String child : children) {

System.out.println(child);

}

// Block so that the process stays alive and keeps listening

Thread.sleep(Long.MAX_VALUE);

}

@Before

public void init() throws IOException {

zkClient = new ZooKeeper(connectString, sessionTimeout, new Watcher() {

public void process(WatchedEvent watchedEvent) {

try {

System.out.println("----------------------------");

final List<String> children = zkClient.getChildren("/", true);

for (String child : children) {

System.out.println(child);

}

// Thread.sleep(Long.MAX_VALUE);

System.out.println("-------------");

} catch (KeeperException e) {

e.printStackTrace();

} catch (InterruptedException e) {

e.printStackTrace();

}

}

});

}

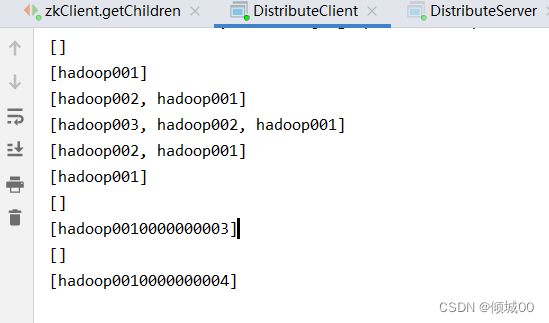

- 在getChildren中执行监听,监听到的数据只会执行一次,设置的是true,就会执行上面我们实例化的那一个监听方法,

- Thread.sleep(Long.MAX_VALUE); 最大睡眠是保证线程不会死亡可以一直监听

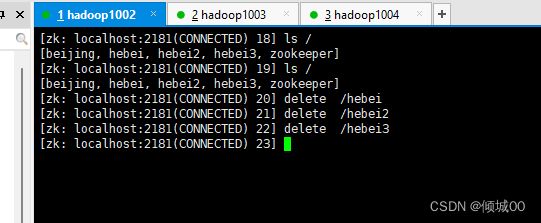

- 执行删除,成功的监听到了数据

1.16 Checking whether a node exists

@Test

public void exist() throws InterruptedException, KeeperException {

final Stat exists = zkClient.exists("/hebei", false);

System.out.println(exists);

}

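1.17 Client writes

- If the client sends the request to the leader

- 1) The leader first writes the data itself

- 2) The leader then forwards the write to a follower

- 3) When the follower has finished writing, it acknowledges to the leader

- 4) Once more than half of the machines have written the data, the leader replies to the client directly

- 5) The leader then writes to the remaining followers, and each acknowledges to the leader when done

- If the client sends the request to a follower

- 1) The follower forwards the write request to the leader (a follower cannot perform writes itself; it only serves reads)

- 2) The leader writes the data itself first, then sends the write request to the followers

- 3) Each follower sends an ack to the leader when it has finished writing

- 4) Once more than half have written, the leader tells the follower that the data is stored on a majority and that it should send the ack to the client (the client contacted the follower, so the leader cannot reply directly)

- 5) The leader then writes the data to the remaining followers, which return acks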
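The ordering of the steps above, reply to the client as soon as a majority has written and finish the remaining followers afterwards, can be traced with a short sketch. It is a simplified model with an invented class name; it writes to followers one at a time purely to keep the trace readable.

```java
import java.util.ArrayList;
import java.util.List;

// Traces when the client is answered during a write (simplified sketch only).
class WriteFlow {
    static List<String> trace(int ensembleSize) {
        int majority = ensembleSize / 2 + 1;
        List<String> steps = new ArrayList<>();
        for (int i = 0; i < ensembleSize; i++) {
            String who = (i == 0) ? "leader writes locally"
                                  : "follower " + i + " writes and acks";
            steps.add(who + " (" + (i + 1) + "/" + ensembleSize + ")");
            if (i + 1 == majority) {
                // More than half have the data: the client gets its reply now.
                steps.add("majority reached: reply to client");
            }
        }
        return steps;
    }

    public static void main(String[] args) {
        for (String step : trace(3)) {
            System.out.println(step);
        }
    }
}
```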

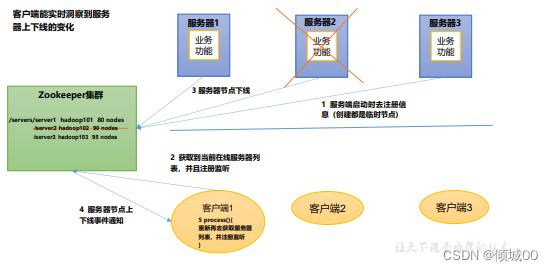

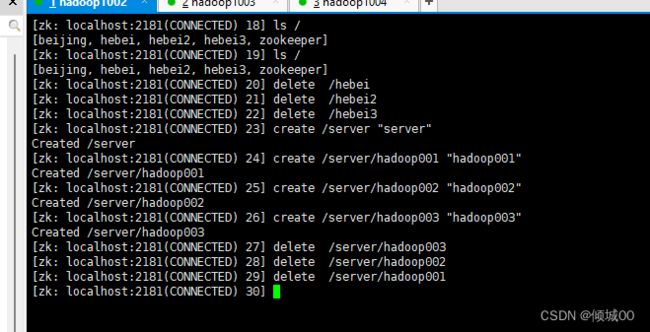

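1.15 Dynamic server online/offline

- When a server starts, it first creates a node for itself under the server path

- Clients watch the list of nodes in the cluster

- A server goes offline

- The client receives the offline notification and handles it in its callback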

1.18 服务器的动态上下线实现

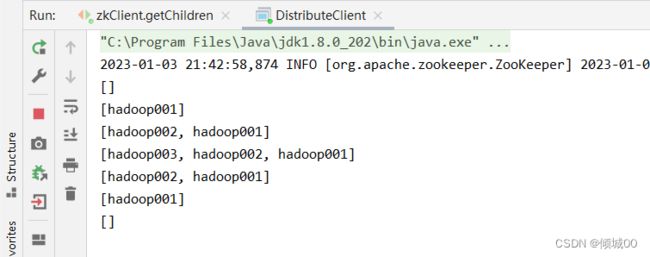

- DistributeClient

- 作用是监听server下的数据,如果客户端上线就会创建一个临时的节点,如果下线就会自动删除一个临时的接地那,DistributeClient的作用是客户端监控,动态的获取服务器的上下线信息

package com.bj.sh.zk;

import org.apache.zookeeper.KeeperException;

import org.apache.zookeeper.WatchedEvent;

import org.apache.zookeeper.Watcher;

import org.apache.zookeeper.ZooKeeper;

import java.io.IOException;

import java.util.ArrayList;

import java.util.List;

/**

* @author LXY

* @desc client-side listener

* @time 2023--01--03--21:35

*/

public class DistributeClient {

String connectString="192.168.116.132:2181,192.168.116.133:2182,192.168.116.134:2181";

int sessionTimeout=2000000;

ZooKeeper zkClient=null;

public static void main(String[] args) throws IOException, InterruptedException, KeeperException {

DistributeClient client=new DistributeClient();

// Connect to ZooKeeper

client.getconntion();

client.getChildren();

client.sleep();

}

private void sleep() throws InterruptedException {

Thread.sleep(Long.MAX_VALUE);

}

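// Client-side listener: reads the children of /server and registers the watch again

private void getChildren() throws InterruptedException, KeeperException {

List<String> str = new ArrayList<String>();

List<String> children = zkClient.getChildren("/server", true);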

for (String child : children) {

str.add(child);

}

System.out.println(str.toString());

}

// Connect to ZooKeeper

private void getconntion() throws IOException {

zkClient=new ZooKeeper(connectString, sessionTimeout, new Watcher() {

public void process(WatchedEvent watchedEvent) {

try {

getChildren();

} catch (InterruptedException e) {

e.printStackTrace();

} catch (KeeperException e) {

e.printStackTrace();

}

}

});

}

}

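- Simulated with the shell client; it works

- DistributeServer

- When it runs, it automatically creates an ephemeral node under /server; the node is deleted automatically when the server goes offline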

package com.bj.sh.zk;

import org.apache.zookeeper.*;

import java.io.IOException;

/**

* @author LXY

* @desc

* @time 2023--01--03--21:45

*/

public class DistributeServer {

String connectString="192.168.116.132:2181,192.168.116.133:2182,192.168.116.134:2181";

int sessionTimeout=20000000;

ZooKeeper zkserver=null;

public static void main(String[] args) throws IOException, InterruptedException, KeeperException {

DistributeServer distributeServer=new DistributeServer();

distributeServer.getconntion();

distributeServer.creatserver("hadoop001");

distributeServer.sleep();

}

private void sleep() throws InterruptedException {

Thread.sleep(Long.MAX_VALUE);

}

private void creatserver(String hostname) throws InterruptedException, KeeperException {

zkserver.create("/server/"+hostname,hostname.getBytes(), ZooDefs.Ids.OPEN_ACL_UNSAFE, CreateMode.EPHEMERAL_SEQUENTIAL);

System.out.println("hostname:"+hostname);

}

// Connect to ZooKeeper

private void getconntion() throws IOException {

zkserver=new ZooKeeper(connectString, sessionTimeout, new Watcher() {

public void process(WatchedEvent watchedEvent) {

}

});

}

}

- It works well: the client is notified when a server comes online and again when it goes offline

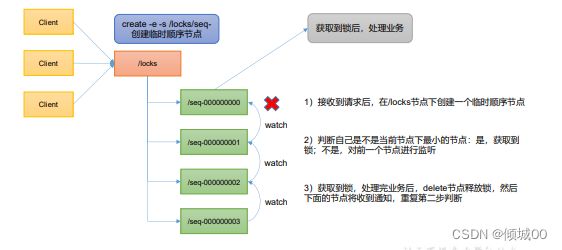

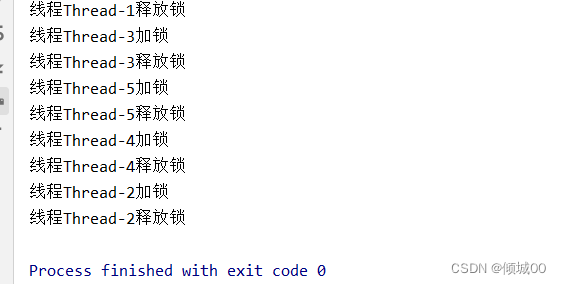

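1.19 ZooKeeper distributed lock

- When a thread comes in, it creates an ephemeral sequential node and checks whether its node is the smallest one under the lock's parent node. If it is, the thread holds the lock; if not, it watches the node immediately before its own. Once its work is done, it releases the lock

- Implementation (the code has a bug, to be fixed later)

- Lock utility class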

package com.bj.sh.zk.lock;

import com.bj.sh.zk.zkClient;

import org.apache.zookeeper.*;

import org.apache.zookeeper.data.Stat;

import java.io.IOException;

import java.util.Collections;

import java.util.List;

import java.util.concurrent.CountDownLatch;

/**

* @author LXY

* @desc distributed pessimistic lock

* @time 2023--01--04--20:31

*/

public class DistributedLock {

String connectString="192.168.116.132:2181,192.168.116.133:2182,192.168.116.134:2181";

int sessionTimeout=2000;

ZooKeeper zk=null;

private String rootNode = "locks";

private String subNode = "seq-";

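// Latch released once the connection to ZooKeeper is established

private CountDownLatch connectLatch = new CountDownLatch(1);

// Latch released once the awaited node is deleted

private CountDownLatch waitLatch = new CountDownLatch(1);

// The child node this client is waiting on

private String waitPath;

// The child node created by this client

private String currentNode;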

public DistributedLock() throws IOException, InterruptedException, KeeperException {

// Connect to ZooKeeper

zk=new ZooKeeper(connectString, sessionTimeout, new Watcher() {

public void process(WatchedEvent event) {

if (event.getState() == Event.KeeperState.SyncConnected) {

connectLatch.countDown();

}

if (event.getType() == Event.EventType.NodeDeleted && event.getPath().equals(waitPath))

{

waitLatch.countDown();

}

}

});

// Wait until the connection has been established

connectLatch.await();

Stat exists = zk.exists("/locks", false);

if (exists==null){

System.out.println("Root node does not exist, creating a persistent node");

zk.create("/locks", "locks".getBytes(), ZooDefs.Ids.OPEN_ACL_UNSAFE, CreateMode.PERSISTENT);

}

}

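// Acquire the lock

void zkLock() throws InterruptedException, KeeperException {

currentNode = zk.create("/" + rootNode + "/" + subNode,null, ZooDefs.Ids.OPEN_ACL_UNSAFE, CreateMode.EPHEMERAL_SEQUENTIAL);

// Wait briefly so that the output is easier to follow

Thread.sleep(10);

// Note: there is no need to watch the children of "/locks"

List<String> childrenNodes = zk.getChildren("/" +rootNode, false);

// Only one child in the list: it must be currentNode, so this client holds the lock

if (childrenNodes.size() == 1) {

return;

} else {

// Sort all ephemeral sequential nodes under the root in ascending order

Collections.sort(childrenNodes);

// Name of this client's node

String thisNode = currentNode.substring(("/" +rootNode + "/").length());

// Position of this node in the sorted list

int index = childrenNodes.indexOf(thisNode);

if (index == -1) {

System.out.println("Unexpected state: own node not found");

} else if (index == 0) {

// index == 0 means thisNode is the smallest in the list, so this client holds the lock

return;

} else {

// The node ranked immediately before currentNode

this.waitPath = "/" + rootNode + "/" +

childrenNodes.get(index - 1);

// Register a watch on waitPath; when it is deleted, ZooKeeper calls the watcher's process method.

// If waitPath is already gone, treat that like the deletion event instead of failing with NoNodeException

if (zk.exists(waitPath, true) == null) {

return;

}

// Block until the watcher reports that waitPath was deleted

waitLatch.await();

return;

}

}

}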

public void zkUnlock() throws InterruptedException, KeeperException {

zk.delete(this.currentNode, -1);

}

}

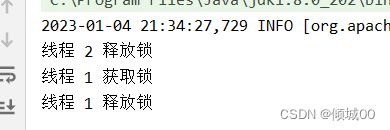
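The core decision in zkLock, "am I the smallest node, and if not, which node do I watch?", can be isolated from the ZooKeeper calls. The sketch below is a stand-alone illustration with an invented class name and made-up node names; it takes the children list as plain strings.

```java
import java.util.ArrayList;
import java.util.Arrays;
import java.util.Collections;
import java.util.List;

// Decides which sibling a lock waiter should watch (illustrative sketch only).
class LockOrder {
    // Returns null if mine is the smallest child (the lock is held),
    // otherwise the child immediately before it in sorted order.
    static String nodeToWatch(List<String> children, String mine) {
        List<String> sorted = new ArrayList<>(children);
        Collections.sort(sorted);
        int index = sorted.indexOf(mine);
        if (index < 0) {
            throw new IllegalStateException("own node missing: " + mine);
        }
        return index == 0 ? null : sorted.get(index - 1);
    }

    public static void main(String[] args) {
        // Hypothetical children of /locks, in the arbitrary order a listing may return.
        List<String> children = Arrays.asList(
                "seq-0000000003", "seq-0000000001", "seq-0000000002");
        System.out.println("seq-0000000001 watches: " + nodeToWatch(children, "seq-0000000001"));
        System.out.println("seq-0000000003 watches: " + nodeToWatch(children, "seq-0000000003"));
    }
}
```

Watching only the immediate predecessor, rather than the whole parent node, means that releasing the lock wakes up exactly one waiter.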

- Test

package com.bj.sh.zk.lock;

import org.apache.zookeeper.KeeperException;

import java.io.IOException;

/**

* @author LXY

* @desc

* @time 2023--01--04--20:36

*/

public class test {

public static void main(String[] args) throws IOException, InterruptedException, KeeperException {

// Create distributed lock 1

final DistributedLock lock1 = new DistributedLock();

// Create distributed lock 2

final DistributedLock lock2 = new DistributedLock();

new Thread(new Runnable() {

public void run() {

// Acquire the lock

try {

lock1.zkLock();

System.out.println("Thread 1 acquired the lock");

Thread.sleep(5 * 1000);

lock1.zkUnlock();

System.out.println("Thread 1 released the lock");

} catch (Exception e) {

e.printStackTrace();

}

}

}).start();

new Thread(new Runnable() {

public void run() {

// Acquire the lock

try {

lock2.zkLock();

System.out.println("Thread 2 acquired the lock");

Thread.sleep(5 * 1000);

lock2.zkUnlock();

System.out.println("Thread 2 released the lock");

} catch (Exception e) {

e.printStackTrace();

}

}

}).start();

}

}

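1.20 Implementing a distributed lock with the Curator framework

1) Problems with developing against the native Java API

(1) The session connection is asynchronous and has to be handled by hand, for example with a CountDownLatch

(2) A watch has to be registered again every time it fires, otherwise it stops working

(3) Development is still fairly complex

(4) Creating or deleting multi-level nodes is not supported; you have to recurse yourself

- Curator is a ZooKeeper client framework that addresses these problems and ships ready-made recipes such as distributed locks.

For details, see the official documentation: https://curator.apache.org/index.html

- Implementation

- pom

Dependencies (groupId : artifactId : version):

- org.apache.curator : curator-framework : 4.3.0

- org.apache.curator : curator-recipes : 4.3.0

- org.apache.curator : curator-client : 4.3.0

- Code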

package com.bj.sh.zk.lock2;

import org.apache.curator.RetryPolicy;

import org.apache.curator.framework.CuratorFramework;

import org.apache.curator.framework.CuratorFrameworkFactory;

import org.apache.curator.framework.recipes.locks.InterProcessMutex;

import org.apache.curator.retry.ExponentialBackoffRetry;

/**

* @author LXY

* @desc

* @time 2023--01--04--21:45

*/

public class test {

public static void main(String[] args) {

final InterProcessMutex lock1 = new InterProcessMutex(getCuratorFramework(), "/lock");

for (int i = 0; i < 5; i++) {

new Thread(new Runnable() {

public void run() {

try {

// Acquire the lock

lock1.acquire();

System.out.println("Thread " + Thread.currentThread().getName() + " acquired the lock");

Thread.sleep(5000);

lock1.release();

System.out.println("Thread " + Thread.currentThread().getName() + " released the lock");

} catch (Exception e) {

e.printStackTrace();

}

}

}).start();

}

}

private static CuratorFramework getCuratorFramework() {

// Retry policy: 3-second base sleep time, at most 3 retries

ExponentialBackoffRetry policy = new ExponentialBackoffRetry(3000, 3);

// Build the client through the factory

CuratorFramework build = CuratorFrameworkFactory.builder().connectString("192.168.116.132:2181,192.168.116.133:2182,192.168.116.134:2181")

.connectionTimeoutMs(2000)

.sessionTimeoutMs(2000)

.retryPolicy(policy)

.build();

build.start();

return build;

}

}