Installing Kubernetes v1.23.3 on CentOS 7

1. System preparation

Check the system version

[root@localhost docker]# cat /etc/centos-release

CentOS Linux release 7.9.2009 (Core)

Configure the network

TYPE=Ethernet

PROXY_METHOD=none

BROWSER_ONLY=no

DEFROUTE=yes

IPV4_FAILURE_FATAL=no

IPV6INIT=yes

IPV6_AUTOCONF=yes

IPV6_DEFROUTE=yes

IPV6_FAILURE_FATAL=no

IPV6_ADDR_GEN_MODE=stable-privacy

NAME=ens33

UUID=704978ef-5eae-4d56-bd9d-39ca10ee3bd0

DEVICE=ens33

ONBOOT=yes

BOOTPROTO=static

DNS1=1x.5x.2xx.13

DNS2=x0.xx.xxx.14

IPADDR=192.168.163.131

NETMASK=255.255.255.0

GATEWAY=192.168.163.2

Add the Aliyun repository

[root@localhost ~]# rm -rfv /etc/yum.repos.d/*

[root@localhost ~]# curl -o /etc/yum.repos.d/CentOS-Base.repo http://mirrors.aliyun.com/repo/Centos-7.repo

Configure the hostname

[root@localhost docker]# cat /etc/hosts

127.0.0.1 localhost localhost.localdomain localhost4 localhost4.localdomain4

::1 localhost localhost.localdomain localhost6 localhost6.localdomain6

192.168.163.131 master01.paas.com master01

Disable swap and comment out the swap partition

[root@master01 ~]# swapoff -a

[root@localhost docker]# vi /etc/fstab

#

# /etc/fstab

# Created by anaconda on Fri Dec 31 10:13:36 2021

#

# Accessible filesystems, by reference, are maintained under '/dev/disk'

# See man pages fstab(5), findfs(8), mount(8) and/or blkid(8) for more info

#

/dev/mapper/centos-root / xfs defaults 0 0

UUID=47072dd8-78d8-47bf-a319-f96dfbc900aa /boot xfs defaults 0 0

#/dev/mapper/centos-swap swap swap defaults 0 0
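Instead of editing the file by hand in vi, the swap entry can be commented out with sed. The following is a minimal sketch that works on a throwaway sample file (`FSTAB_SAMPLE` is a hypothetical name used only for illustration); on a real host the same sed expression would be pointed at /etc/fstab, as root, after taking a backup.

```shell
# Build a small sample file so the sketch can be tried without touching /etc/fstab.
FSTAB_SAMPLE="$(mktemp)"
cat > "$FSTAB_SAMPLE" <<'EOF'
/dev/mapper/centos-root / xfs defaults 0 0
/dev/mapper/centos-swap swap swap defaults 0 0
EOF

# Prefix every uncommented line that has a whitespace-delimited "swap" field with '#'.
# -i.bak edits in place and keeps the original as <file>.bak.
sed -i.bak '/^[^#].*[[:space:]]swap[[:space:]]/ s/^/#/' "$FSTAB_SAMPLE"

cat "$FSTAB_SAMPLE"
```

Lines that are already commented are left alone, so running the command twice does not add a second `#`.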

# Step 3: confirm that swap is off

[root@localhost docker]# free -m

total used free shared buff/cache available

Mem: 1819 1055 95 12 667 600

Swap: 0 0 0

Configure kernel parameters so that bridged IPv4 traffic is passed to the iptables chains
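The body of the heredoc written to /etc/sysctl.d/k8s.conf is cut off in this write-up. As a minimal sketch, assuming the two bridge settings commonly used for kubeadm installs (an assumption, not the author's confirmed file), the file could be generated like this. `K8S_SYSCTL_FILE` is a hypothetical variable that defaults to a local path so the sketch can run without root.

```shell
# Hypothetical target path; on the node this would be /etc/sysctl.d/k8s.conf (root required).
K8S_SYSCTL_FILE="${K8S_SYSCTL_FILE:-./k8s.conf}"

# Assumed content: let iptables see bridged IPv4 and IPv6 traffic.
cat > "$K8S_SYSCTL_FILE" <<'EOF'
net.bridge.bridge-nf-call-ip6tables = 1
net.bridge.bridge-nf-call-iptables = 1
EOF

cat "$K8S_SYSCTL_FILE"

# On the node, as root, the settings would then be loaded with:
#   modprobe br_netfilter
#   sysctl --system
```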

[root@master01 ~]# cat > /etc/sysctl.d/k8s.conf <

2. Install common packages

[root@master01 ~]# yum install vim bash-completion net-tools gcc -y

3. Install docker-ce from the Aliyun repository

[root@master01 ~]# yum install -y yum-utils device-mapper-persistent-data lvm2

[root@master01 ~]# yum-config-manager --add-repo https://mirrors.aliyun.com/docker-ce/linux/centos/docker-ce.repo

[root@master01 ~]# yum -y install docker-ce

Configure Docker in preparation for the Kubernetes deployment

[root@master01 ~]# mkdir -p /etc/docker

[root@master01 ~]# tee /etc/docker/daemon.json <<-'EOF'
{
  "exec-opts": [
    "native.cgroupdriver=systemd"
  ]
}
EOF

[root@master01 ~]# systemctl daemon-reload

[root@master01 ~]# systemctl restart docker

4. Install kubectl, kubelet, and kubeadm

Add the Aliyun Kubernetes repository

[root@master01 ~]# cat <<EOF > /etc/yum.repos.d/kubernetes.repo
[kubernetes]
name=Kubernetes
baseurl=https://mirrors.aliyun.com/kubernetes/yum/repos/kubernetes-el7-x86_64/
enabled=1
gpgcheck=1
repo_gpgcheck=1
gpgkey=https://mirrors.aliyun.com/kubernetes/yum/doc/yum-key.gpg https://mirrors.aliyun.com/kubernetes/yum/doc/rpm-package-key.gpg
EOF

Install the packages

[root@master01 ~]# yum install kubectl kubelet kubeadm
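An unpinned `yum install` pulls whatever version the repository currently offers, which may be newer than the v1.23.3 passed to `kubeadm init` later. A minimal sketch of pinning the packages to the target version follows; it only prints the command, and `K8S_VERSION` is a hypothetical variable name introduced for illustration.

```shell
# Version taken from the --kubernetes-version flag used at init time.
K8S_VERSION="1.23.3"

# Build "name-version" package specs for the three components.
pkgs=""
for p in kubelet kubeadm kubectl; do
  pkgs="$pkgs $p-$K8S_VERSION"
done

# Print the command instead of running it; run it as root on the node.
echo "yum install -y$pkgs"
```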

[root@master01 ~]# systemctl enable kubelet

5. Initialize the Kubernetes cluster

# The pod network is 10.244.0.0/16; the API server address is the master's own IP.

[root@master01 ~]# kubeadm init --kubernetes-version=v1.23.3 \
--apiserver-advertise-address=192.168.163.131 \
--image-repository registry.aliyuncs.com/google_containers \
--service-cidr=10.1.0.0/16 --pod-network-cidr=10.244.0.0/16

If initialization runs into problems, see step 1 (disable the swap partition) and step 3 (configure Docker).

If an error occurs, reset with kubeadm reset and then run the initialization command above again.
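Because the init command may need to be repeated after a `kubeadm reset`, it can help to keep the same settings in a file. The sketch below writes an equivalent configuration using the kubeadm `v1beta3` config API, with the values copied from the flags above. `KUBEADM_CONFIG` is a hypothetical variable and path chosen for illustration.

```shell
# Hypothetical output path; any location kubeadm can read works.
KUBEADM_CONFIG="${KUBEADM_CONFIG:-./kubeadm-config.yaml}"

cat > "$KUBEADM_CONFIG" <<'EOF'
apiVersion: kubeadm.k8s.io/v1beta3
kind: InitConfiguration
localAPIEndpoint:
  advertiseAddress: 192.168.163.131   # --apiserver-advertise-address
---
apiVersion: kubeadm.k8s.io/v1beta3
kind: ClusterConfiguration
kubernetesVersion: v1.23.3            # --kubernetes-version
imageRepository: registry.aliyuncs.com/google_containers   # --image-repository
networking:
  serviceSubnet: 10.1.0.0/16          # --service-cidr
  podSubnet: 10.244.0.0/16            # --pod-network-cidr
EOF

cat "$KUBEADM_CONFIG"

# On the master, as root:
#   kubeadm init --config "$KUBEADM_CONFIG"
```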

Initialization message 1:

[preflight] Running pre-flight checks

[WARNING Firewalld]: firewalld is active, please ensure ports [6443 10250] are open or your cluster may not function correctly

[WARNING Service-Docker]: docker service is not enabled, please run 'systemctl enable docker.service'

[WARNING Swap]: swap is enabled; production deployments should disable swap unless testing the NodeSwap feature gate of the kubelet
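Each of the three warnings above names its own remedy. The following sketch collects them in one place; it is a dry run by default (the `run` helper and the `DRY_RUN` variable are hypothetical names introduced here), so it only prints the commands unless `DRY_RUN=0` is set and it is executed as root.

```shell
# DRY_RUN defaults to 1, so this sketch only prints the commands.
DRY_RUN="${DRY_RUN:-1}"
run() {
  if [ "$DRY_RUN" = "1" ]; then
    echo "+ $*"
  else
    "$@"
  fi
}

# [WARNING Firewalld]: open the API server and kubelet ports.
run firewall-cmd --permanent --add-port=6443/tcp
run firewall-cmd --permanent --add-port=10250/tcp
run firewall-cmd --reload

# [WARNING Service-Docker]: start Docker on boot.
run systemctl enable docker.service

# [WARNING Swap]: turn swap off for the running system.
run swapoff -a
```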

Initialization message 2:

[kubelet-check] The HTTP call equal to 'curl -sSL http://localhost:10248/healthz' failed with error: Get "http://localhost:10248/healthz": dial tcp [::1]:10248: connect: connection refused.

[kubelet-check] It seems like the kubelet isn't running or healthy.

[kubelet-check] The HTTP call equal to 'curl -sSL http://localhost:10248/healthz' failed with error: Get "http://localhost:10248/healthz": dial tcp [::1]:10248: connect: connection refused.

[kubelet-check] It seems like the kubelet isn't running or healthy.

[kubelet-check] The HTTP call equal to 'curl -sSL http://localhost:10248/healthz' failed with error: Get "http://localhost:10248/healthz": dial tcp [::1]:10248: connect: connection refused.

[kubelet-check] It seems like the kubelet isn't running or healthy.

[kubelet-check] The HTTP call equal to 'curl -sSL http://localhost:10248/healthz' failed with error: Get "http://localhost:10248/healthz": dial tcp [::1]:10248: connect: connection refused.

[kubelet-check] It seems like the kubelet isn't running or healthy.

[kubelet-check] The HTTP call equal to 'curl -sSL http://localhost:10248/healthz' failed with error: Get "http://localhost:10248/healthz": dial tcp [::1]:10248: connect: connection refused.
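A common cause of the kubelet failing its health check at this point is a cgroup driver mismatch between Docker and the kubelet, which is what the daemon.json change in step 3 addresses. The helper below is a minimal sketch for comparing the two (`check_cgroup_driver` is a hypothetical function name; the expected value `systemd` is an assumption based on the daemon.json above).

```shell
# Compare the cgroup driver Docker reports with the one the kubelet is expected to use.
check_cgroup_driver() {
  docker_driver="$1"
  expected="${2:-systemd}"
  if [ "$docker_driver" = "$expected" ]; then
    echo "ok: docker uses $docker_driver"
  else
    echo "mismatch: docker uses $docker_driver, expected $expected"
    return 1
  fi
}

# On the node the first argument would come from Docker itself:
#   check_cgroup_driver "$(docker info --format '{{.CgroupDriver}}')"
check_cgroup_driver systemd
```

If the check reports a mismatch, redo the Docker configuration from step 3, restart Docker, and run `kubeadm reset` before initializing again.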

Success message

Your Kubernetes control-plane has initialized successfully!

To start using your cluster, you need to run the following as a regular user:

mkdir -p $HOME/.kube

sudo cp -i /etc/kubernetes/admin.conf $HOME/.kube/config

sudo chown $(id -u):$(id -g) $HOME/.kube/config

Alternatively, if you are the root user, you can run:

export KUBECONFIG=/etc/kubernetes/admin.conf

You should now deploy a pod network to the cluster.

Run "kubectl apply -f [podnetwork].yaml" with one of the options listed at:

https://kubernetes.io/docs/concepts/cluster-administration/addons/

Then you can join any number of worker nodes by running the following on each as root:

kubeadm join 192.168.163.131:6443 --token ucuthy.65s2dj114t4y92gw \

--discovery-token-ca-cert-hash sha256:fe0ce0734b3eb14fb52e1cbe9bea688db4d5e53c84f2436765a4c633f5d6fbe6

Record the final part of the output; it must be run on the other nodes when they join the Kubernetes cluster.
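If the `kubeadm init` output was saved to a file, the join command can be pulled out of it rather than copied by hand. This is a minimal sketch: `extract_join_command` is a hypothetical helper, and the log file name in the usage comment is an assumption.

```shell
# Print the "kubeadm join ..." command from saved init output as a single line.
extract_join_command() {
  # Take the join line plus its continuation line, drop the trailing
  # backslash, and collapse all whitespace into single spaces.
  grep -A1 '^[[:space:]]*kubeadm join' "$1" \
    | sed 's/\\$//' \
    | tr -s '[:space:]' ' ' \
    | sed 's/^ //; s/ $//'
}

# Usage, assuming the output was saved with: kubeadm init ... | tee kubeadm-init.log
#   extract_join_command kubeadm-init.log
```

If the output was not saved, `kubeadm token create --print-join-command` on the master prints a fresh join command.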

Following the prompt, create the kubectl configuration

[root@master01 ~]# mkdir -p $HOME/.kube

[root@master01 ~]# sudo cp -i /etc/kubernetes/admin.conf $HOME/.kube/config

[root@master01 ~]# sudo chown $(id -u):$(id -g) $HOME/.kube/config

Run the following command to enable kubectl auto-completion

[root@master01 ~]# source <(kubectl completion bash)

Check the nodes and pods

[root@localhost docker]# kubectl get pod --all-namespaces

NAMESPACE NAME READY STATUS RESTARTS AGE

kube-system coredns-6d8c4cb4d-fhzpd 0/1 Pending 0 82s

kube-system coredns-6d8c4cb4d-lr2r9 0/1 Pending 0 82s

kube-system etcd-localhost.localdomain 1/1 Running 1 96s

kube-system kube-apiserver-localhost.localdomain 1/1 Running 0 96s

kube-system kube-controller-manager-localhost.localdomain 1/1 Running 0 96s

kube-system kube-proxy-5tf4j 1/1 Running 0 82s

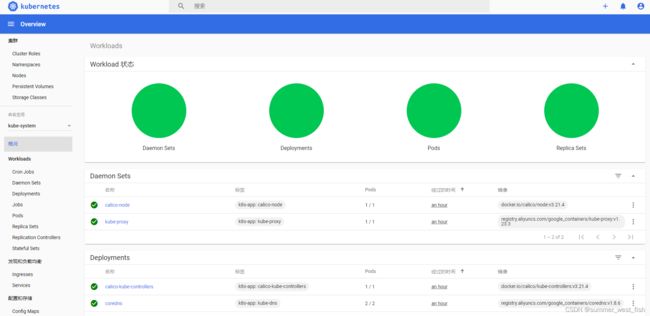

kube-system kube-scheduler-localhost.localdomain 1/1 Running 1 96s六、安装calico网络

[root@master01 ~]# kubectl apply -f https://docs.projectcalico.org/manifests/calico.yaml

configmap/calico-config created

customresourcedefinition.apiextensions.k8s.io/bgpconfigurations.crd.projectcalico.org created

customresourcedefinition.apiextensions.k8s.io/bgppeers.crd.projectcalico.org created

customresourcedefinition.apiextensions.k8s.io/blockaffinities.crd.projectcalico.org created

customresourcedefinition.apiextensions.k8s.io/clusterinformations.crd.projectcalico.org created

customresourcedefinition.apiextensions.k8s.io/felixconfigurations.crd.projectcalico.org created

customresourcedefinition.apiextensions.k8s.io/globalnetworkpolicies.crd.projectcalico.org created

customresourcedefinition.apiextensions.k8s.io/globalnetworksets.crd.projectcalico.org created

customresourcedefinition.apiextensions.k8s.io/hostendpoints.crd.projectcalico.org created

customresourcedefinition.apiextensions.k8s.io/ipamblocks.crd.projectcalico.org created

customresourcedefinition.apiextensions.k8s.io/ipamconfigs.crd.projectcalico.org created

customresourcedefinition.apiextensions.k8s.io/ipamhandles.crd.projectcalico.org created

customresourcedefinition.apiextensions.k8s.io/ippools.crd.projectcalico.org created

customresourcedefinition.apiextensions.k8s.io/networkpolicies.crd.projectcalico.org created

customresourcedefinition.apiextensions.k8s.io/networksets.crd.projectcalico.org created

clusterrole.rbac.authorization.k8s.io/calico-kube-controllers created

clusterrolebinding.rbac.authorization.k8s.io/calico-kube-controllers created

clusterrole.rbac.authorization.k8s.io/calico-node created

clusterrolebinding.rbac.authorization.k8s.io/calico-node created

daemonset.apps/calico-node created

serviceaccount/calico-node created

deployment.apps/calico-kube-controllers created

serviceaccount/calico-kube-controllers created

Check the pods and nodes (this takes a few minutes)

[root@localhost docker]# kubectl get pod --all-namespaces

NAMESPACE NAME READY STATUS RESTARTS AGE

kube-system calico-kube-controllers-85b5b5888d-h2dqx 1/1 Running 0 114s

kube-system calico-node-dsp2s 1/1 Running 0 114s

kube-system coredns-6d8c4cb4d-fhzpd 1/1 Running 0 3m42s

kube-system coredns-6d8c4cb4d-lr2r9 1/1 Running 0 3m42s

kube-system etcd-localhost.localdomain 1/1 Running 1 3m56s

kube-system kube-apiserver-localhost.localdomain 1/1 Running 0 3m56s

kube-system kube-controller-manager-localhost.localdomain 1/1 Running 0 3m56s

kube-system kube-proxy-5tf4j 1/1 Running 0 3m42s

kube-system kube-scheduler-localhost.localdomain 1/1 Running 1 3m56s

At this point the cluster is healthy.
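While waiting for Calico to come up, it can be easier to list only the pods that are not yet Running than to scan the full table. The filter below is a minimal sketch (`not_running` is a hypothetical helper name); it reads `kubectl get pod --all-namespaces` output on stdin, and the sample input is taken from the earlier listing.

```shell
# Print "namespace/name status" for every pod whose STATUS column is not "Running".
not_running() {
  # NR > 1 skips the header row; $4 is the STATUS column.
  awk 'NR > 1 && $4 != "Running" { print $1 "/" $2 " " $4 }'
}

# Sample input for illustration.
sample='NAMESPACE NAME READY STATUS RESTARTS AGE
kube-system coredns-6d8c4cb4d-fhzpd 0/1 Pending 0 82s
kube-system kube-proxy-5tf4j 1/1 Running 0 82s'

printf '%s\n' "$sample" | not_running

# On the master:
#   kubectl get pod --all-namespaces | not_running
```

An empty result means every pod has reached the Running state.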

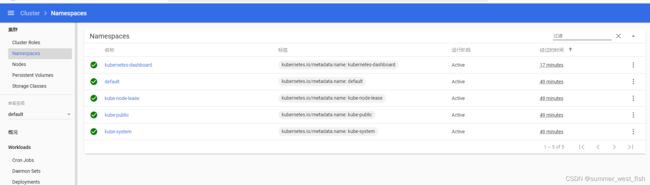

7. Install kubernetes-dashboard

The official dashboard deployment does not expose its Service through a NodePort. Download the YAML file locally and add a NodePort to the Service.

[root@master01 ~]# wget https://raw.githubusercontent.com/kubernetes/dashboard/v2.0.0-rc7/aio/deploy/recommended.yaml

# In the Service, add type: NodePort and nodePort: 30000

[root@master01 ~]# vim recommended.yaml

kind: Service
apiVersion: v1
metadata:
  labels:
    k8s-app: kubernetes-dashboard
  name: kubernetes-dashboard
  namespace: kubernetes-dashboard
spec:
  type: NodePort
  ports:
    - port: 443
      targetPort: 8443
      nodePort: 30000
  selector:
    k8s-app: kubernetes-dashboard

[root@master01 ~]# kubectl create -f recommended.yaml

namespace/kubernetes-dashboard created

serviceaccount/kubernetes-dashboard created

service/kubernetes-dashboard created

secret/kubernetes-dashboard-certs created

secret/kubernetes-dashboard-csrf created

secret/kubernetes-dashboard-key-holder created

configmap/kubernetes-dashboard-settings created

role.rbac.authorization.k8s.io/kubernetes-dashboard created

clusterrole.rbac.authorization.k8s.io/kubernetes-dashboard created

rolebinding.rbac.authorization.k8s.io/kubernetes-dashboard created

clusterrolebinding.rbac.authorization.k8s.io/kubernetes-dashboard created

deployment.apps/kubernetes-dashboard created

service/dashboard-metrics-scraper created

deployment.apps/dashboard-metrics-scraper created

Check the pods and services

[root@master01 ~]# kubectl get pod -n kubernetes-dashboard

NAME READY STATUS RESTARTS AGE

dashboard-metrics-scraper-dc6947fbf-869kf 1/1 Running 0 37s

kubernetes-dashboard-5d4dc8b976-sdxxt 1/1 Running 0 37s

[root@master01 ~]# kubectl get svc -n kubernetes-dashboard

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

dashboard-metrics-scraper ClusterIP 10.10.58.93 <none> 8000/TCP 44s

kubernetes-dashboard NodePort 10.10.132.66 <none> 443:30000/TCP 44s

Log in with a token. Run the following command to obtain it:

[root@localhost docker]# kubectl describe secrets -n kubernetes-dashboard kubernetes-dashboard-token-rvnvq | grep token | awk 'NR==3{print $2}'

eyJhbGciOiJSUzI1NiIsImtpZCI6InVRNm02dHNNNmJKR01SMWl2bl9Ubk0weHY1T1lFSi1PaVMxblpjRFQtNnMifQ.eyJpc3MiOiJrdWJlcm5ldGVzL3NlcnZpY2VhY2NvdW50Iiwia3ViZXJuZXRlcy5pby9zZXJ2aWNlYWNjb3VudC9uYW1lc3BhY2UiOiJrdWJlcm5ldGVzLWRhc2hib2FyZCIsImt1YmVybmV0ZXMuaW8vc2VydmljZWFjY291bnQvc2VjcmV0Lm5hbWUiOiJrdWJlcm5ldGVzLWRhc2hib2FyZC10b2tlbi1ydm52cSIsImt1YmVybmV0ZXMuaW8vc2VydmljZWFjY291bnQvc2VydmljZS1hY2NvdW50Lm5hbWUiOiJrdWJlcm5ldGVzLWRhc2hib2FyZCIsImt1YmVybmV0ZXMuaW8vc2VydmljZWFjY291bnQvc2VydmljZS1hY2NvdW50LnVpZCI6IjU3MWU0YzQyLWUxYWUtNDliMC04MTNhLTNlNWUzZDY3ZTkxZiIsInN1YiI6InN5c3RlbTpzZXJ2aWNlYWNjb3VudDprdWJlcm5ldGVzLWRhc2hib2FyZDprdWJlcm5ldGVzLWRhc2hib2FyZCJ9.SR7tkzke_fV4ToNuHU5iciSfkqT7oc3AAPrPHl54VdN2hEpRUp2JF5TgpRDB4_-YoELWU3O5ZGsy2yR_HXTq7tdjIrTR6K2ZeX0344BPVKbGFf_CpFfAYiVTkM9EaVZSV6EO20JIxY5Qc_E_5TbwzDTI0ZYZE3l2VLwYv_F5LgMddeaQWX4iaQdzEiPsD6QM4QudSAzFcMKbH22xBG1WLtzQKI0N9KOccUWKpBwdRXTC0no3V_fgbCanv-NJ7skvGX17pCYdLvlFddMWxzE7tHabZTzmZr8K4UsMoo3k0KWv9BGAbXV8MHcM0OJU4bJEWNMB9PgFIdlk0rWoY9arZw

Access URL: https://192.168.163.131:30000/#/namespace?namespace=default

If the dashboard cannot be accessed and the dashboard pod logs contain errors such as the following:

2022/01/29 08:11:04 Non-critical error occurred during resource retrieval: events is forbidden: User "system:serviceaccount:kubernetes-dashboard:kubernetes-dashboard" cannot list resource "events" in API group "" in the namespace "default"

2022/01/29 08:11:04 Getting pod metrics

2022/01/29 08:11:04 Non-critical error occurred during resource retrieval: replicationcontrollers is forbidden: User "system:serviceaccount:kubernetes-dashboard:kubernetes-dashboard" cannot list resource "replicationcontrollers" in API group "" in the namespace "default"

2022/01/29 08:11:04 Non-critical error occurred during resource retrieval: pods is forbidden: User "system:serviceaccount:kubernetes-dashboard:kubernetes-dashboard" cannot list resource "pods" in API group "" in the namespace "default"

2022/01/29 08:11:04 Non-critical error occurred during resource retrieval: events is forbidden: User "system:serviceaccount:kubernetes-dashboard:kubernetes-dashboard" cannot list resource "events" in API group "" in the namespace "default"

The following steps resolve the problem. If the first step does not help, use the second step.

[root@localhost docker]# kubectl delete -f recommended.yaml

[root@master01 ~]# kubectl create clusterrolebinding serviceaccount-cluster-admin --clusterrole=cluster-admin --group=system:serviceaccount

clusterrolebinding.rbac.authorization.k8s.io/serviceaccount-cluster-admin created

[root@localhost docker]# vi recommended.yaml

kind: ClusterRole
apiVersion: rbac.authorization.k8s.io/v1
metadata:
  labels:
    k8s-app: kubernetes-dashboard
  name: kubernetes-dashboard
rules:
  # Very important: allow Metrics Scraper to get metrics from the Metrics server
  - apiGroups: ["", "apps", "batch", "extensions", "metrics.k8s.io"]
    resources: ["*"]
    verbs: ["get", "list", "watch"]

[root@localhost docker]# kubectl apply -f recommended.yaml

Now check the dashboard again; the resources should be displayed.

8. Calling the API from a client

xiaxinyu/go-middleware: https://gitee.com/xiaxinyu3_admin/go-middleware.git