Binary Installation of a Kubernetes Cluster, v1.23.0

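Contents

I. Cluster planning

II. Basic environment configuration

1. Configure /etc/hosts

2. Set the hostname

3. Configure yum repositories (CentOS 7)

4. Install required tools

5. Disable firewalld, dnsmasq, and SELinux on all nodes

6. Disable swap on all nodes and comment it out in fstab

7. Synchronize time on all nodes

8. Configure limits on all nodes

9. Set up passwordless SSH from Master01 to the other nodes

10. Download the installation files on Master01

III. Kernel upgrade

1. Create the directory (all nodes)

2. Download the kernel on master01

3. Copy the packages from master01 to the other nodes

4. Install the kernel on all nodes

5. Change the default boot kernel on all nodes

6. Check that the default kernel is 4.19

7. Reboot all nodes, then check that the kernel is 4.19

8. Install ipvsadm on all nodes

9. Configure the IPVS modules on all nodes

10. Check that the modules are loaded

11. Set the kernel parameters Kubernetes requires (all nodes)

12. After configuring the kernel, reboot all nodes and confirm the modules are still loaded

IV. Runtime installation

1. containerd as the runtime

1.1 Install docker-ce 20.10 on all nodes

1.2 Configure the kernel modules containerd needs (all nodes)

1.3 Load the modules on all nodes

1.4 Configure the kernel parameters containerd needs (all nodes)

1.5 Apply the kernel parameters on all nodes

1.6 Generate the containerd configuration file on all nodes

1.7 Switch containerd's cgroup driver to systemd on all nodes

1.8 Point sandbox_image at a pause image that matches your version (all nodes)

1.9 Start containerd and enable it at boot (all nodes)

1.10 Configure the runtime endpoint for the crictl client (all nodes)

2. Docker as the runtime

2.1 Install docker-ce 20.10 on all nodes

2.2 Newer kubelet versions recommend the systemd cgroup driver, so switch Docker's cgroup driver to systemd as well

2.3 Enable Docker at boot on all nodes

V. Kubernetes and etcd installation

1. Download the Kubernetes package on Master01

2. Download the etcd package

3. Extract the Kubernetes binaries

4. Extract the etcd binaries

5. Check the versions

6. Copy the binaries to the other nodes

7. Create /opt/cni/bin on all nodes

8. Switch branches

VI. Generate certificates

1. Download the certificate tooling on Master01

2. Generate the etcd certificates

2.1 Create the etcd certificate directory on all master nodes

2.2 Create the Kubernetes directories on all nodes

2.3 Generate the etcd certificates on Master01

2.4 Generate the etcd CA certificate and its key

2.5 Copy the certificates to the other etcd nodes (etcd runs on master01, master02, and master03)

3. Kubernetes component certificates

3.1 Generate the Kubernetes certificates on Master01

3.2 Generate the apiserver aggregation-layer certificates (requestheader-client-xxx, requestheader-allowed-xxx: aggregator)

3.3 Generate the controller-manager certificate

3.4 set-cluster: define a cluster entry

3.5 set-context: define a context entry

3.6 set-credentials: define a user entry

3.7 use-context: make a context the default

3.8 Create the ServiceAccount key → secret

3.9 Copy the certificates to the other nodes

3.10 List the certificate files

VII. etcd configuration

1. Master01 configuration

2. Master02 configuration

3. Master03 configuration

4. Create the etcd service

5. Create the etcd certificate directory on all master nodes

6. Start etcd

7. Check etcd status

VIII. High availability for the master nodes

1. Install keepalived and HAProxy on all master nodes

2. Configure HAProxy on all masters (identical configuration)

3. Configure keepalived on all master nodes

3.1 Master01 configuration

3.2 Master02 configuration

3.3 Master03 configuration

4. Health-check script on all master nodes

5. Make the script executable

6. Start HAProxy and keepalived on all master nodes

7. Test the VIP

IX. Kubernetes component configuration

Create the required directories on all nodes:

1. Install the apiserver

1.1 Master01 configuration

1.2 Master02 configuration

1.3 Master03 configuration

1.4 Start kube-apiserver on all master nodes

1.5 Check kube-apiserver status

2. Install the controller manager

2.1 Add kube-controller-manager.service on all master nodes

2.2 Start kube-controller-manager on all master nodes

2.3 Check the service status

3. Install the scheduler

3.1 Configure kube-scheduler.service on all master nodes

3.2 Start the scheduler on all master nodes

X. TLS bootstrapping configuration

1. Create the bootstrap configuration on Master01

2. Continue only if the cluster status can be queried; otherwise check the Kubernetes components for faults

3. Create the bootstrap secret

XI. Node configuration

1. Copy the certificates from Master01 to the nodes

2. kubelet configuration

2.1 Create the required directories on all nodes

2.2 Configure the kubelet service on all nodes

2.3 Configure the kubelet service's configuration file on all nodes (this can also be written into kubelet.service)

2.4 Create the kubelet configuration file

2.5 Start kubelet on all nodes

2.6 Check the cluster status

3. Install kube-proxy

3.1 The following steps run on Master01 only

3.2 Copy the kubeconfig to the other nodes

3.3 Add the kube-proxy configuration and service files on all nodes

3.4 Create the kube-proxy resources

3.5 Start kube-proxy on all nodes

XII. Install Calico

1. About Calico

2. Install the officially recommended version (recommended)

2.1 Set the Pod CIDR in calico.yaml

2.2 Install Calico

2.3 Check the pod status

XIII. Install CoreDNS

1. About CoreDNS

1.1 Concepts

1.2 How it works

2. Install the officially recommended version (recommended)

2.1 Set the CoreDNS service IP to the tenth IP of the Kubernetes Service CIDR

2.2 Install CoreDNS

3. Install the latest CoreDNS

XIV. Install Metrics Server

1. Install metrics server

2. Wait for metrics server to start, then check its status

XV. Install the dashboard

1. Install a specific dashboard version

2. Install the latest version

3. Log in to the dashboard

3.1 Add startup flags to the Chrome launcher

3.2 Change the dashboard service to NodePort

3.3 Look up the port

3.4 Open the dashboard

3.5 Retrieve the token

3.6 Paste the token into the token field and click Sign in to open the dashboard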

I. Cluster planning

| Hostname | IP address | Role |
|---|---|---|
| k8s-master01 | 192.168.126.129 | Master node 1 |
| k8s-master02 | 192.168.126.130 | Master node 2 |
| k8s-master03 | 192.168.126.131 | Master node 3 |
| k8s-master-lb | 192.168.126.200 | keepalived virtual IP |
| k8s-node01 | 192.168.126.132 | Worker node 1 |
| k8s-node02 | 192.168.126.133 | Worker node 2 |

| Setting | Value |
|---|---|
| OS version | CentOS 7.9.2009 |
| Docker version | 20.10.17 |
| Host network | 192.168.126.0/24 |
| Pod CIDR | 172.16.0.0/12 |
| Service CIDR | 10.96.0.0/16 |
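The address plan maps one-to-one onto /etc/hosts entries, and a clash between two hosts is easy to overlook. A minimal sketch that prints the entries only when every IP is unique, using the addresses from the /etc/hosts listing that follows; the function names are hypothetical:

```shell
# Cluster plan: one "IP hostname" pair per line.
cluster_plan() {
  cat <<'EOF'
192.168.126.129 k8s-master01
192.168.126.130 k8s-master02
192.168.126.131 k8s-master03
192.168.126.200 k8s-master-lb
192.168.126.132 k8s-node01
192.168.126.133 k8s-node02
EOF
}

# Print any IP that is assigned to more than one hostname.
duplicate_ips() {
  cluster_plan | awk '{ count[$1]++ } END { for (ip in count) if (count[ip] > 1) print ip }'
}

# Emit the plan only when every IP is unique; otherwise report the clash.
render_hosts() {
  dups=$(duplicate_ips)
  if [ -n "$dups" ]; then
    echo "duplicate IPs in plan: $dups" >&2
    return 1
  fi
  cluster_plan
}

render_hosts
```

The output can be appended to /etc/hosts on each node after review.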

II. Basic environment configuration

1. Configure /etc/hosts

[root@localhost ~]# cat /etc/hosts

127.0.0.1 localhost localhost.localdomain localhost4 localhost4.localdomain4

::1 localhost localhost.localdomain localhost6 localhost6.localdomain6

192.168.126.129 k8s-master01

192.168.126.130 k8s-master02

192.168.126.131 k8s-master03

192.168.126.200 k8s-master-lb # For a non-HA cluster, use Master01's IP here

192.168.126.132 k8s-node01

192.168.126.133 k8s-node02

2. Set the hostname

Run the matching command on each node:

hostnamectl set-hostname k8s-master01

hostnamectl set-hostname k8s-master02

hostnamectl set-hostname k8s-master03

hostnamectl set-hostname k8s-node01

hostnamectl set-hostname k8s-node02

3. Configure yum repositories (CentOS 7)

Run on all hosts:

[root@localhost ~]# curl -o /etc/yum.repos.d/CentOS-Base.repo https://mirrors.aliyun.com/repo/Centos-7.repo

[root@localhost ~]# yum install -y yum-utils device-mapper-persistent-data lvm2

[root@localhost ~]# yum-config-manager --add-repo https://mirrors.aliyun.com/docker-ce/linux/centos/docker-ce.repo

[root@localhost ~]# sed -i -e '/mirrors.cloud.aliyuncs.com/d' -e '/mirrors.aliyuncs.com/d' /etc/yum.repos.d/CentOS-Base.repo

4. Install required tools

[root@localhost ~]# yum install wget jq psmisc vim net-tools telnet yum-utils device-mapper-persistent-data lvm2 git -y

5. Disable firewalld, dnsmasq, and SELinux on all nodes

On CentOS 7, NetworkManager must be disabled as well; on CentOS 8 this is not needed.

[root@localhost ~]# systemctl disable --now firewalld

[root@localhost ~]# systemctl disable --now dnsmasq

[root@localhost ~]# systemctl disable --now NetworkManager

[root@localhost ~]# setenforce 0

[root@localhost ~]# sed -i 's#SELINUX=enforcing#SELINUX=disabled#g' /etc/sysconfig/selinux

[root@localhost ~]# sed -i 's#SELINUX=enforcing#SELINUX=disabled#g' /etc/selinux/config

6. Disable swap on all nodes and comment it out in fstab

[root@localhost ~]# swapoff -a && sysctl -w vm.swappiness=0
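The sed command that follows comments out every swap entry in /etc/fstab that is not already commented. A minimal sketch of what that expression does, run against a scratch file instead of the real fstab; the sample entries and the function name comment_swap are hypothetical, and -E is used as the portable spelling of -r:

```shell
# Same expression as the fstab edit: prefix '#' to lines that mention swap
# and do not already start with a comment. Reads stdin, writes stdout.
comment_swap() {
  sed -E '/^[^#]*swap/s@^@#@'
}

# Hypothetical sample fstab with one active and one commented swap entry.
sample_fstab=$(mktemp)
cat > "$sample_fstab" <<'EOF'
/dev/mapper/centos-root /     xfs  defaults 0 0
/dev/mapper/centos-swap swap  swap defaults 0 0
#/dev/sdb1              swap  swap defaults 0 0
EOF

comment_swap < "$sample_fstab"
```

Only the active swap line gains a leading `#`; the root filesystem line and the already commented line pass through unchanged.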

[root@localhost ~]# sed -ri '/^[^#]*swap/s@^@#@' /etc/fstab

7. Synchronize time on all nodes

Install ntpdate:

[root@localhost ~]# rpm -ivh http://mirrors.wlnmp.com/centos/wlnmp-release-centos.noarch.rpm

[root@localhost ~]# yum install ntpdate -y

Then synchronize the time on all nodes as follows:

[root@localhost ~]# ln -sf /usr/share/zoneinfo/Asia/Shanghai /etc/localtime

[root@localhost ~]# echo 'Asia/Shanghai' >/etc/timezone

[root@localhost ~]# ntpdate time2.aliyun.com

30 Jun 12:38:19 ntpdate[12176]: adjust time server 203.107.6.88 offset -0.002743 sec

# Add the job to crontab

[root@localhost ~]# crontab -e

*/5 * * * * /usr/sbin/ntpdate time2.aliyun.com

8. Configure limits on all nodes

[root@localhost ~]# ulimit -SHn 65535

[root@localhost ~]# vim /etc/security/limits.conf

# Append the following lines at the end of the file

* soft nofile 65536

* hard nofile 131072

* soft nproc 65535

* hard nproc 655350

* soft memlock unlimited

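* hard memlock unlimited

9. Set up passwordless SSH from Master01 to the other nodes

[root@k8s-master01 ~]# ssh-keygen -t rsa

Copy Master01's public key to every node so that it can log in without a password:

[root@k8s-master01 ~]# for i in k8s-master01 k8s-master02 k8s-master03 k8s-node01 k8s-node02;do ssh-copy-id -i .ssh/id_rsa.pub $i;done

10. Download the installation files on Master01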

[root@k8s-master01 ~]# cd /opt/ ; git clone https://github.com/dotbalo/k8s-ha-install.git

Cloning into 'k8s-ha-install'...

remote: Enumerating objects: 12, done.

remote: Counting objects: 100% (12/12), done.

remote: Compressing objects: 100% (11/11), done.

remote: Total 461 (delta 2), reused 5 (delta 1), pack-reused 449

Receiving objects: 100% (461/461), 19.52 MiB | 4.04 MiB/s, done.

Resolving deltas: 100% (163/163), done.

III. Kernel upgrade

1. Create the directory (all nodes)

[root@k8s-master01 ~]# mkdir -p /opt/kernel

2. Download the kernel on master01

[root@k8s-master01 ~]# cd /opt/kernel

[root@k8s-master01 ~]# wget http://193.49.22.109/elrepo/kernel/el7/x86_64/RPMS/kernel-ml-devel-4.19.12-1.el7.elrepo.x86_64.rpm

[root@k8s-master01 ~]# wget http://193.49.22.109/elrepo/kernel/el7/x86_64/RPMS/kernel-ml-4.19.12-1.el7.elrepo.x86_64.rpm

3. Copy the packages from master01 to the other nodes

[root@k8s-master01 ~]# for i in k8s-master02 k8s-master03 k8s-node01 k8s-node02;do scp kernel-ml-4.19.12-1.el7.elrepo.x86_64.rpm kernel-ml-devel-4.19.12-1.el7.elrepo.x86_64.rpm $i:/opt/kernel; done

4. Install the kernel on all nodes

cd /opt/kernel && yum localinstall -y kernel-ml*

5. Change the default boot kernel on all nodes

[root@k8s-master01 ~]# grub2-set-default 0 && grub2-mkconfig -o /etc/grub2.cfg

Generating grub configuration file ...

Found linux image: /boot/vmlinuz-4.19.12-1.el7.elrepo.x86_64

Found initrd image: /boot/initramfs-4.19.12-1.el7.elrepo.x86_64.img

Found linux image: /boot/vmlinuz-3.10.0-693.el7.x86_64

Found initrd image: /boot/initramfs-3.10.0-693.el7.x86_64.img

Found linux image: /boot/vmlinuz-0-rescue-1c01f6af1f1d40ccb9988c844650f9a3

Found initrd image: /boot/initramfs-0-rescue-1c01f6af1f1d40ccb9988c844650f9a3.img

[root@k8s-master01 kernel]# grubby --args="user_namespace.enable=1" --update-kernel="$(grubby --default-kernel)"

6. Check that the default kernel is 4.19

[root@k8s-master01 kernel]# grubby --default-kernel

/boot/vmlinuz-4.19.12-1.el7.elrepo.x86_64

7. Reboot all nodes, then check that the kernel is 4.19

[root@k8s-master01 kernel]# reboot

[root@k8s-master01 ~]# uname -a

Linux k8s-master01 4.19.12-1.el7.elrepo.x86_64 #1 SMP Fri Dec 21 11:06:36 EST 2018 x86_64 x86_64 x86_64 GNU/Linux

8. Install ipvsadm on all nodes

[root@k8s-master01 ~]# yum install ipvsadm ipset sysstat conntrack libseccomp -y

9. Configure the IPVS modules on all nodes

Note: from kernel 4.19 onward, nf_conntrack_ipv4 has been renamed to nf_conntrack; on 4.18 and earlier, use nf_conntrack_ipv4 instead:

[root@k8s-master01 ~]# modprobe -- ip_vs

[root@k8s-master01 ~]# modprobe -- ip_vs_rr

[root@k8s-master01 ~]# modprobe -- ip_vs_wrr

[root@k8s-master01 ~]# modprobe -- ip_vs_sh

[root@k8s-master01 ~]# modprobe -- nf_conntrack

[root@k8s-master01 ~]# vim /etc/modules-load.d/ipvs.conf

# Add the following content

ip_vs

ip_vs_lc

ip_vs_wlc

ip_vs_rr

ip_vs_wrr

ip_vs_lblc

ip_vs_lblcr

ip_vs_dh

ip_vs_sh

ip_vs_fo

ip_vs_nq

ip_vs_sed

ip_vs_ftp

ip_vs_sh

nf_conntrack

ip_tables

ip_set

xt_set

ipt_set

ipt_rpfilter

ipt_REJECT

ipip

# Load the modules now and at every boot:

[root@k8s-master01 ~]# systemctl enable --now systemd-modules-load.service

10. Check that the modules are loaded

[root@k8s-master01 ~]# lsmod | grep -e ip_vs -e nf_conntrack

ip_vs_sh 16384 0

ip_vs_wrr 16384 0

ip_vs_rr 16384 0

ip_vs 151552 6 ip_vs_rr,ip_vs_sh,ip_vs_wrr

nf_conntrack 143360 1 ip_vs

nf_defrag_ipv6 20480 1 nf_conntrack

nf_defrag_ipv4 16384 1 nf_conntrack

libcrc32c 16384 3 nf_conntrack,xfs,ip_vs

11. Set the kernel parameters Kubernetes requires (all nodes)

[root@k8s-master01 ~]# cat <<EOF > /etc/sysctl.d/k8s.conf

net.ipv4.ip_forward = 1

net.bridge.bridge-nf-call-iptables = 1

net.bridge.bridge-nf-call-ip6tables = 1

fs.may_detach_mounts = 1

vm.overcommit_memory=1

net.ipv4.conf.all.route_localnet = 1

vm.panic_on_oom=0

fs.inotify.max_user_watches=89100

fs.file-max=52706963

fs.nr_open=52706963

net.netfilter.nf_conntrack_max=2310720

net.ipv4.tcp_keepalive_time = 600

net.ipv4.tcp_keepalive_probes = 3

net.ipv4.tcp_keepalive_intvl =15

net.ipv4.tcp_max_tw_buckets = 36000

net.ipv4.tcp_tw_reuse = 1

net.ipv4.tcp_max_orphans = 327680

net.ipv4.tcp_orphan_retries = 3

net.ipv4.tcp_syncookies = 1

net.ipv4.tcp_max_syn_backlog = 16384

net.ipv4.ip_conntrack_max = 65536

net.ipv4.tcp_max_syn_backlog = 16384

net.ipv4.tcp_timestamps = 0

net.core.somaxconn = 16384

EOF
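The parameter list above is long, and a key that appears twice is easy to miss (net.ipv4.tcp_max_syn_backlog is listed twice above, harmlessly, with the same value). Before reloading, a quick duplicate check can help. A minimal sketch; the function name duplicate_sysctl_keys is hypothetical, and the demo runs on a scratch file rather than /etc/sysctl.d/k8s.conf:

```shell
# Print each key that is assigned more than once in a sysctl-style file.
# Comment lines and blank lines are skipped.
duplicate_sysctl_keys() {
  awk -F= '
    /^[ \t]*(#|$)/ { next }
    {
      key = $1
      gsub(/[ \t]/, "", key)
      if (seen[key]++ == 1) print key   # report on the second occurrence only
    }
  ' "$1"
}

# Demo on a scratch file holding three lines taken from the list above.
demo_conf=$(mktemp)
cat > "$demo_conf" <<'EOF'
net.ipv4.tcp_max_syn_backlog = 16384
net.ipv4.ip_conntrack_max = 65536
net.ipv4.tcp_max_syn_backlog = 16384
EOF

duplicate_sysctl_keys "$demo_conf"
```

On a node, the same function can be pointed at the real file: `duplicate_sysctl_keys /etc/sysctl.d/k8s.conf`.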

# Reload the settings

[root@k8s-master01 ~]# sysctl --system

12. After configuring the kernel, reboot all nodes and confirm the modules are still loaded

[root@k8s-master01 ~]# reboot

[root@k8s-master01 ~]# lsmod | grep --color=auto -e ip_vs -e nf_conntrack

ip_vs_ftp 16384 0

nf_nat 32768 1 ip_vs_ftp

ip_vs_sed 16384 0

ip_vs_nq 16384 0

ip_vs_fo 16384 0

ip_vs_sh 16384 0

ip_vs_dh 16384 0

ip_vs_lblcr 16384 0

ip_vs_lblc 16384 0

ip_vs_wrr 16384 0

ip_vs_rr 16384 0

ip_vs_wlc 16384 0

ip_vs_lc 16384 0

ip_vs 151552 24 ip_vs_wlc,ip_vs_rr,ip_vs_dh,ip_vs_lblcr,ip_vs_sh,ip_vs_fo,ip_vs_nq,ip_vs_lblc,ip_vs_wrr,ip_vs_lc,ip_vs_sed,ip_vs_ftp

nf_conntrack 143360 2 nf_nat,ip_vs

nf_defrag_ipv6 20480 1 nf_conntrack

nf_defrag_ipv4 16384 1 nf_conntrack

libcrc32c 16384 4 nf_conntrack,nf_nat,xfs,ip_vs

IV. Runtime installation

1. containerd as the runtime

1.1 Install docker-ce 20.10 on all nodes

[root@k8s-master01 ~]# yum install docker-ce-20.10.* docker-ce-cli-20.10.* containerd -y

[root@k8s-master01 ~]# docker version

Client: Docker Engine - Community

Version: 20.10.17

API version: 1.41

Go version: go1.17.11

Git commit: 100c701

Built: Mon Jun 6 23:05:12 2022

OS/Arch: linux/amd64

Context: default

Experimental: true

1.2 Configure the kernel modules containerd needs (all nodes)
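Step 1.2 persists the kernel modules containerd depends on so that they are loaded at every boot. A minimal sketch, assuming the modules are overlay and br_netfilter (the two loaded by hand in step 1.3) and assuming the file name containerd.conf, which is illustrative. It writes to a scratch directory by default; on a real node MODULES_DIR would be /etc/modules-load.d:

```shell
# Assumption: the target directory is overridable. On a node, run as root
# with MODULES_DIR=/etc/modules-load.d; the default is a scratch directory.
MODULES_DIR="${MODULES_DIR:-$(mktemp -d)}"

# Assumption: the file name containerd.conf is illustrative.
cat > "$MODULES_DIR/containerd.conf" <<EOF
overlay
br_netfilter
EOF

cat "$MODULES_DIR/containerd.conf"
```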

[root@k8s-master01 ~]# cat <

1.3 Load the modules on all nodes

[root@k8s-master01 ~]# modprobe -- overlay

[root@k8s-master01 ~]# modprobe -- br_netfilter

1.4 Configure the kernel parameters containerd needs (all nodes)
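Step 1.4 writes the three kernel parameters whose echoed values appear below. A minimal sketch of a complete form of that command, assuming the file name 99-kubernetes-cri.conf (illustrative, not taken from this guide). It writes to a scratch directory by default; on a real node SYSCTL_DIR would be /etc/sysctl.d:

```shell
# Assumption: the target directory is overridable. On a node, run as root
# with SYSCTL_DIR=/etc/sysctl.d; the default is a scratch directory.
SYSCTL_DIR="${SYSCTL_DIR:-$(mktemp -d)}"

# tee writes the file and echoes the same lines to the terminal.
# Assumption: the file name 99-kubernetes-cri.conf is illustrative.
cat <<EOF | tee "$SYSCTL_DIR/99-kubernetes-cri.conf"
net.bridge.bridge-nf-call-iptables = 1
net.ipv4.ip_forward = 1
net.bridge.bridge-nf-call-ip6tables = 1
EOF
```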

[root@k8s-master01 ~]# cat < net.bridge.bridge-nf-call-iptables = 1

> net.ipv4.ip_forward = 1

> net.bridge.bridge-nf-call-ip6tables = 1

> EOF

net.bridge.bridge-nf-call-iptables = 1

net.ipv4.ip_forward = 1

net.bridge.bridge-nf-call-ip6tables = 1

1.5 Apply the kernel parameters on all nodes

[root@k8s-master01 ~]# sysctl --system

1.6 Generate the containerd configuration file on all nodes

[root@k8s-master01 ~]# mkdir -p /etc/containerd

[root@k8s-master01 ~]# containerd config default | tee /etc/containerd/config.toml

1.7 Switch containerd's cgroup driver to systemd on all nodes

[root@k8s-master01 ~]# vim /etc/containerd/config.toml

[plugins."io.containerd.grpc.v1.cri".containerd.runtimes.runc.options]

BinaryName = ""

CriuImagePath = ""

CriuPath = ""

CriuWorkPath = ""

IoGid = 0

IoUid = 0

NoNewKeyring = false

NoPivotRoot = false

Root = ""

ShimCgroup = ""

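SystemdCgroup = true

Find the containerd.runtimes.runc.options section and set SystemdCgroup = true, as in the excerpt above. If the key already exists, change its value in place rather than adding a second line; a duplicated key causes an error.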
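The same change can be made without an editor. A minimal sketch, assuming the generated config already contains a `SystemdCgroup = false` line (check with grep first); the function name enable_systemd_cgroup is hypothetical, and the demo runs on a scratch file rather than /etc/containerd/config.toml:

```shell
# Flip SystemdCgroup from false to true in a containerd config file.
# Only a line that already holds the key is changed; nothing is appended,
# so the key can never end up duplicated.
enable_systemd_cgroup() {
  sed 's/^\([[:space:]]*SystemdCgroup[[:space:]]*=[[:space:]]*\)false/\1true/' "$1" > "$1.tmp" &&
    mv "$1.tmp" "$1"
}

# Demo on a scratch file shaped like the relevant section.
demo_toml=$(mktemp)
cat > "$demo_toml" <<'EOF'
[plugins."io.containerd.grpc.v1.cri".containerd.runtimes.runc.options]
  ShimCgroup = ""
  SystemdCgroup = false
EOF

enable_systemd_cgroup "$demo_toml"
grep -n 'SystemdCgroup' "$demo_toml"
```

On a node the call would be `enable_systemd_cgroup /etc/containerd/config.toml`, followed by a containerd restart.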

1.8 Point sandbox_image at a pause image that matches your version (all nodes)

This guide uses registry.cn-hangzhou.aliyuncs.com/google_containers/pause:3.6:

[root@k8s-master01 ~]# vim /etc/containerd/config.toml

sandbox_image = "registry.cn-hangzhou.aliyuncs.com/google_containers/pause:3.6"

1.9 Start containerd and enable it at boot (all nodes)

[root@k8s-master01 ~]# systemctl daemon-reload

[root@k8s-master01 ~]# systemctl enable --now containerd

[root@k8s-master01 ~]# systemctl status containerd.service

● containerd.service - containerd container runtime

Loaded: loaded (/usr/lib/systemd/system/containerd.service; enabled; vendor preset: disabled)

Active: active (running) since 四 2022-06-30 14:23:16 CST; 2min 36s ago

Docs: https://containerd.io

Process: 1720 ExecStartPre=/sbin/modprobe overlay (code=exited, status=0/SUCCESS)

Main PID: 1722 (containerd)

Tasks: 9

Memory: 25.6M

CGroup: /system.slice/containerd.service

└─1722 /usr/bin/containerd

6月 30 14:23:16 k8s-master01 containerd[1722]: time="2022-06-30T14:23:16.759584551+08:00" level=info msg="Start subscribing containerd event"

6月 30 14:23:16 k8s-master01 containerd[1722]: time="2022-06-30T14:23:16.759661538+08:00" level=info msg="Start recovering state"

6月 30 14:23:16 k8s-master01 containerd[1722]: time="2022-06-30T14:23:16.759744630+08:00" level=info msg="Start event monitor"

6月 30 14:23:16 k8s-master01 containerd[1722]: time="2022-06-30T14:23:16.759769518+08:00" level=info msg="Start snapshots syncer"

6月 30 14:23:16 k8s-master01 containerd[1722]: time="2022-06-30T14:23:16.759785914+08:00" level=info msg="Start cni network conf syncer for default"

6月 30 14:23:16 k8s-master01 containerd[1722]: time="2022-06-30T14:23:16.759797273+08:00" level=info msg="Start streaming server"

6月 30 14:23:16 k8s-master01 containerd[1722]: time="2022-06-30T14:23:16.760121521+08:00" level=info msg=serving... address=/run/containerd/containerd.sock.ttrpc

6月 30 14:23:16 k8s-master01 containerd[1722]: time="2022-06-30T14:23:16.760180705+08:00" level=info msg=serving... address=/run/containerd/containerd.sock

6月 30 14:23:16 k8s-master01 containerd[1722]: time="2022-06-30T14:23:16.760285440+08:00" level=info msg="containerd successfully booted in 0.053874s"

6月 30 14:23:16 k8s-master01 systemd[1]: Started containerd container runtime.

1.10 Configure the runtime endpoint for the crictl client (all nodes)
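The crictl configuration written in this step points the client at containerd's socket. A minimal sketch, assuming the socket path /run/containerd/containerd.sock reported in the containerd log above; the timeout and debug values are illustrative assumptions. It writes to a scratch directory by default; on a real node CRICTL_DIR would be /etc:

```shell
# Assumption: the target directory is overridable. On a node, run as root
# with CRICTL_DIR=/etc; the default is a scratch directory.
CRICTL_DIR="${CRICTL_DIR:-$(mktemp -d)}"

# runtime-endpoint and image-endpoint both use the containerd socket.
# Assumption: timeout and debug values are illustrative.
cat > "$CRICTL_DIR/crictl.yaml" <<EOF
runtime-endpoint: unix:///run/containerd/containerd.sock
image-endpoint: unix:///run/containerd/containerd.sock
timeout: 10
debug: false
EOF

cat "$CRICTL_DIR/crictl.yaml"
```

crictl reads /etc/crictl.yaml by default, so no extra flag is needed once the file is in place.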

[root@k8s-master01 ~]# cat > /etc/crictl.yaml <

2. Docker as the runtime

2.1 Install docker-ce 20.10 on all nodes:

[root@k8s-master01 ~]# yum install docker-ce-20.10.* docker-ce-cli-20.10.* -y

2.2 Newer kubelet versions recommend the systemd cgroup driver, so switch Docker's cgroup driver to systemd as well

[root@k8s-master01 ~]# mkdir /etc/docker
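The daemon.json written in this step switches Docker's cgroup driver to systemd. A minimal sketch, assuming exec-opts with native.cgroupdriver=systemd is the only setting needed (the file in the original guide may have held more). It writes to a scratch directory by default; on a real node DOCKER_DIR would be /etc/docker:

```shell
# Assumption: the target directory is overridable. On a node, run as root
# with DOCKER_DIR=/etc/docker; the default is a scratch directory.
DOCKER_DIR="${DOCKER_DIR:-$(mktemp -d)}"

# native.cgroupdriver=systemd makes Docker use the systemd cgroup driver.
cat > "$DOCKER_DIR/daemon.json" <<EOF
{
  "exec-opts": ["native.cgroupdriver=systemd"]
}
EOF

cat "$DOCKER_DIR/daemon.json"
```

After Docker is restarted, `docker info` should report systemd as the cgroup driver.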

[root@k8s-master01 ~]# cat > /etc/docker/daemon.json <

2.3 Enable Docker at boot on all nodes

[root@k8s-master01 ~]# systemctl daemon-reload && systemctl enable --now docker

V. Kubernetes and etcd installation

1. Download the Kubernetes package on Master01

Replace 1.23.0 with the latest version available when you install.

[root@k8s-master01 opt]# wget https://dl.k8s.io/v1.23.0/kubernetes-server-linux-amd64.tar.gz

2. Download the etcd package

[root@k8s-master01 ~]# wget https://github.com/etcd-io/etcd/releases/download/v3.5.1/etcd-v3.5.1-linux-amd64.tar.gz

3. Extract the Kubernetes binaries

[root@k8s-master01 opt]# tar -xf kubernetes-server-linux-amd64.tar.gz --strip-components=3 -C /usr/local/bin kubernetes/server/bin/kube{let,ctl,-apiserver,-controller-manager,-scheduler,-proxy}

4. Extract the etcd binaries

[root@k8s-master01 opt]# tar xf etcd-v3.5.1-linux-amd64.tar.gz --strip-components=1 -C /usr/local/bin etcd-v3.5.1-linux-amd64/etcd{,ctl}

5. Check the versions

[root@k8s-master01 opt]# kubelet --version

Kubernetes v1.23.0

[root@k8s-master01 opt]# etcdctl version

etcdctl version: 3.5.1

API version: 3.5

6. Copy the binaries to the other nodes

[root@k8s-master01 opt]# MasterNodes='k8s-master02 k8s-master03'

[root@k8s-master01 opt]# WorkNodes='k8s-node01 k8s-node02'

[root@k8s-master01 opt]# for NODE in $MasterNodes; do echo $NODE; scp /usr/local/bin/kube{let,ctl,-apiserver,-controller-manager,-scheduler,-proxy} $NODE:/usr/local/bin/; scp /usr/local/bin/etcd* $NODE:/usr/local/bin/; done

[root@k8s-master01 opt]# for NODE in $WorkNodes; do scp /usr/local/bin/kube{let,-proxy} $NODE:/usr/local/bin/ ; done

7. Create /opt/cni/bin on all nodes

[root@k8s-master01 opt]# mkdir -p /opt/cni/bin

8. Switch branches

On Master01, switch to the 1.23.x branch. For other Kubernetes versions, switch to the matching branch; the .x suffix is literal, so there is no need to name a specific patch version.

[root@k8s-master01 opt]# cd k8s-ha-install/

[root@k8s-master01 k8s-ha-install]# ls

k8s-deployment-strategies.md LICENSE metrics-server-0.3.7 metrics-server-3.6.1 README.md

[root@k8s-master01 k8s-ha-install]# git checkout manual-installation-v1.23.x

Branch manual-installation-v1.23.x set up to track remote branch manual-installation-v1.23.x from origin.

Switched to a new branch 'manual-installation-v1.23.x'

[root@k8s-master01 k8s-ha-install]# ls

bootstrap calico CoreDNS csi-hostpath dashboard kubeadm-metrics-server metrics-server pki snapshotter

VI. Generate certificates

1. Download the certificate tooling on Master01

[root@k8s-master01 ~]# wget "https://pkg.cfssl.org/R1.2/cfssl_linux-amd64" -O /usr/local/bin/cfssl

[root@k8s-master01 ~]# wget "https://pkg.cfssl.org/R1.2/cfssljson_linux-amd64" -O /usr/local/bin/cfssljson

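[root@k8s-master01 ~]# chmod +x /usr/local/bin/cfssl /usr/local/bin/cfssljson

2. Generate the etcd certificates

2.1 Create the etcd certificate directory on all master nodes

[root@k8s-master01 ~]# mkdir /etc/etcd/ssl -p

2.2 Create the Kubernetes directories on all nodes

[root@k8s-master01 ~]# mkdir -p /etc/kubernetes/pki

2.3 Generate the etcd certificates on Master01

The certificates are generated from CSR (certificate signing request) files, which define fields such as domain names, organization, and organizational unit.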

[root@k8s-master01 ~]# cd /opt/k8s-ha-install/pki/

[root@k8s-master01 pki]# ls

admin-csr.json ca-config.json etcd-ca-csr.json front-proxy-ca-csr.json kubelet-csr.json manager-csr.json

apiserver-csr.json ca-csr.json etcd-csr.json front-proxy-client-csr.json kube-proxy-csr.json scheduler-csr.json

2.4 Generate the etcd CA certificate and its key

[root@k8s-master01 pki]# cfssl gencert -initca etcd-ca-csr.json | cfssljson -bare /etc/etcd/ssl/etcd-ca

2022/06/30 16:07:55 [INFO] generating a new CA key and certificate from CSR

2022/06/30 16:07:55 [INFO] generate received request

2022/06/30 16:07:55 [INFO] received CSR

2022/06/30 16:07:55 [INFO] generating key: rsa-2048

2022/06/30 16:07:56 [INFO] encoded CSR

2022/06/30 16:07:56 [INFO] signed certificate with serial number 487000903415403993808075066317587714337578058114

[root@k8s-master01 pki]# cfssl gencert \

-ca=/etc/etcd/ssl/etcd-ca.pem \

-ca-key=/etc/etcd/ssl/etcd-ca-key.pem \

-config=ca-config.json \

-hostname=127.0.0.1,k8s-master01,k8s-master02,k8s-master03,192.168.126.129,192.168.126.130,192.168.126.131 \

-profile=kubernetes \

etcd-csr.json | cfssljson -bare /etc/etcd/ssl/etcd

2022/06/30 16:09:11 [INFO] generate received request

2022/06/30 16:09:11 [INFO] received CSR

2022/06/30 16:09:11 [INFO] generating key: rsa-2048

2022/06/30 16:09:11 [INFO] encoded CSR

2022/06/30 16:09:11 [INFO] signed certificate with serial number 160735128081279910026902217608117093006231424320

2.5 Copy the certificates to the other etcd nodes (etcd runs on master01, master02, and master03)

[root@k8s-master01 pki]# MasterNodes='k8s-master02 k8s-master03'

[root@k8s-master01 pki]# for NODE in $MasterNodes; do

ssh $NODE "mkdir -p /etc/etcd/ssl"

for FILE in etcd-ca-key.pem etcd-ca.pem etcd-key.pem etcd.pem; do

scp /etc/etcd/ssl/${FILE} $NODE:/etc/etcd/ssl/${FILE}

done

done

3. Kubernetes component certificates

3.1 Generate the Kubernetes certificates on Master01

[root@k8s-master01 ~]# cd /opt/k8s-ha-install/pki/

[root@k8s-master01 pki]# ls

admin-csr.json ca-config.json etcd-ca-csr.json front-proxy-ca-csr.json kubelet-csr.json manager-csr.json

apiserver-csr.json ca-csr.json etcd-csr.json front-proxy-client-csr.json kube-proxy-csr.json scheduler-csr.json

[root@k8s-master01 pki]# cfssl gencert -initca ca-csr.json | cfssljson -bare /etc/kubernetes/pki/ca

2022/06/30 16:11:48 [INFO] generating a new CA key and certificate from CSR

2022/06/30 16:11:48 [INFO] generate received request

2022/06/30 16:11:48 [INFO] received CSR

2022/06/30 16:11:48 [INFO] generating key: rsa-2048

2022/06/30 16:11:48 [INFO] encoded CSR

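2022/06/30 16:11:48 [INFO] signed certificate with serial number 157560449643835006943538059381618105157085683230

Note: 10.96.0.0/16 is the Kubernetes Service CIDR, and 10.96.0.1 is its first address. If you change the Service CIDR, change 10.96.0.1 in the command below to match. For a non-HA cluster, replace 192.168.126.200 with Master01's IP.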

[root@k8s-master01 pki]# cfssl gencert -ca=/etc/kubernetes/pki/ca.pem -ca-key=/etc/kubernetes/pki/ca-key.pem -config=ca-config.json -hostname=10.96.0.1,192.168.126.200,127.0.0.1,kubernetes,kubernetes.default,kubernetes.default.svc,kubernetes.default.svc.cluster,kubernetes.default.svc.cluster.local,192.168.126.129,192.168.126.130,192.168.126.131 -profile=kubernetes apiserver-csr.json | cfssljson -bare /etc/kubernetes/pki/apiserver

2022/07/15 17:00:27 [INFO] generate received request

2022/07/15 17:00:27 [INFO] received CSR

2022/07/15 17:00:27 [INFO] generating key: rsa-2048

2022/07/15 17:00:27 [INFO] encoded CSR

2022/07/15 17:00:27 [INFO] signed certificate with serial number 417978641136373617431940766011138979429642942096

3.2 Generate the apiserver aggregation-layer certificates (requestheader-client-xxx, requestheader-allowed-xxx: aggregator)

[root@k8s-master01 pki]# cfssl gencert -initca front-proxy-ca-csr.json | cfssljson -bare /etc/kubernetes/pki/front-proxy-ca

2022/06/30 16:18:04 [INFO] generating a new CA key and certificate from CSR

2022/06/30 16:18:04 [INFO] generate received request

2022/06/30 16:18:04 [INFO] received CSR

2022/06/30 16:18:04 [INFO] generating key: rsa-2048

2022/06/30 16:18:05 [INFO] encoded CSR

2022/06/30 16:18:05 [INFO] signed certificate with serial number 591372355293828701333040192461663363975639312296

[root@k8s-master01 pki]# cfssl gencert -ca=/etc/kubernetes/pki/front-proxy-ca.pem -ca-key=/etc/kubernetes/pki/front-proxy-ca-key.pem -config=ca-config.json -profile=kubernetes front-proxy-client-csr.json | cfssljson -bare /etc/kubernetes/pki/front-proxy-client

2022/07/15 17:01:56 [INFO] generate received request

2022/07/15 17:01:56 [INFO] received CSR

2022/07/15 17:01:56 [INFO] generating key: rsa-2048

2022/07/15 17:01:56 [INFO] encoded CSR

2022/07/15 17:01:56 [INFO] signed certificate with serial number 327356221554221234940140333032459089069219790527

2022/07/15 17:01:56 [WARNING] This certificate lacks a "hosts" field. This makes it unsuitable for

websites. For more information see the Baseline Requirements for the Issuance and Management

of Publicly-Trusted Certificates, v.1.1.6, from the CA/Browser Forum (https://cabforum.org);

specifically, section 10.2.3 ("Information Requirements").

3.3 Generate the controller-manager certificate

[root@k8s-master01 pki]# cfssl gencert \

-ca=/etc/kubernetes/pki/ca.pem \

-ca-key=/etc/kubernetes/pki/ca-key.pem \

-config=ca-config.json \

-profile=kubernetes \

manager-csr.json | cfssljson -bare /etc/kubernetes/pki/controller-manager

2022/06/30 16:19:04 [INFO] generate received request

2022/06/30 16:19:04 [INFO] received CSR

2022/06/30 16:19:04 [INFO] generating key: rsa-2048

2022/06/30 16:19:04 [INFO] encoded CSR

2022/06/30 16:19:04 [INFO] signed certificate with serial number 438428207360344357682042985881400341297359876701

2022/06/30 16:19:04 [WARNING] This certificate lacks a "hosts" field. This makes it unsuitable for

websites. For more information see the Baseline Requirements for the Issuance and Management

of Publicly-Trusted Certificates, v.1.1.6, from the CA/Browser Forum (https://cabforum.org);

specifically, section 10.2.3 ("Information Requirements").

3.4 set-cluster: define a cluster entry

Note: for a non-HA cluster, replace 192.168.126.200 with master01's address and 8443 with the apiserver port (6443 by default).

[root@k8s-master01 pki]# kubectl config set-cluster kubernetes \

--certificate-authority=/etc/kubernetes/pki/ca.pem \

--embed-certs=true \

--server=https://192.168.126.200:8443 \

--kubeconfig=/etc/kubernetes/controller-manager.kubeconfig

Cluster "kubernetes" set.

3.5 set-context: define a context entry

[root@k8s-master01 pki]# kubectl config set-context system:kube-controller-manager@kubernetes \

--cluster=kubernetes \

--user=system:kube-controller-manager \

--kubeconfig=/etc/kubernetes/controller-manager.kubeconfig

Context "system:kube-controller-manager@kubernetes" created.

3.6 set-credentials: define a user entry

[root@k8s-master01 pki]# kubectl config set-credentials system:kube-controller-manager \

--client-certificate=/etc/kubernetes/pki/controller-manager.pem \

--client-key=/etc/kubernetes/pki/controller-manager-key.pem \

--embed-certs=true \

--kubeconfig=/etc/kubernetes/controller-manager.kubeconfig

User "system:kube-controller-manager" set.

3.7 use-context: make a context the default

[root@k8s-master01 pki]# kubectl config use-context system:kube-controller-manager@kubernetes --kubeconfig=/etc/kubernetes/controller-manager.kubeconfig

Switched to context "system:kube-controller-manager@kubernetes".
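The scheduler and admin kubeconfig files created next repeat the same four kubectl config steps with different names. A minimal sketch that prints (does not run) those commands for a given component; the helper name print_kubeconfig_cmds and the APISERVER variable are hypothetical, and the flags are the ones used in steps 3.4 to 3.7:

```shell
# Print the four kubectl config commands for one component.
#   $1 = user name, $2 = certificate base name under /etc/kubernetes/pki,
#   $3 = kubeconfig file name under /etc/kubernetes.
# APISERVER defaults to the VIP and port used in this guide.
print_kubeconfig_cmds() {
  user=$1
  cert=/etc/kubernetes/pki/$2
  file=/etc/kubernetes/$3
  server=${APISERVER:-https://192.168.126.200:8443}

  echo "kubectl config set-cluster kubernetes --certificate-authority=/etc/kubernetes/pki/ca.pem --embed-certs=true --server=${server} --kubeconfig=${file}"
  echo "kubectl config set-credentials ${user} --client-certificate=${cert}.pem --client-key=${cert}-key.pem --embed-certs=true --kubeconfig=${file}"
  echo "kubectl config set-context ${user}@kubernetes --cluster=kubernetes --user=${user} --kubeconfig=${file}"
  echo "kubectl config use-context ${user}@kubernetes --kubeconfig=${file}"
}

print_kubeconfig_cmds system:kube-scheduler scheduler scheduler.kubeconfig
print_kubeconfig_cmds kubernetes-admin admin admin.kubeconfig
```

Printing first makes it easy to review the names and paths; piping the output to `sh` would execute the commands on a node where kubectl and the certificates are present.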

[root@k8s-master01 pki]# cfssl gencert \

-ca=/etc/kubernetes/pki/ca.pem \

-ca-key=/etc/kubernetes/pki/ca-key.pem \

-config=ca-config.json \

-profile=kubernetes \

scheduler-csr.json | cfssljson -bare /etc/kubernetes/pki/scheduler

2022/06/30 16:20:58 [INFO] generate received request

2022/06/30 16:20:58 [INFO] received CSR

2022/06/30 16:20:58 [INFO] generating key: rsa-2048

2022/06/30 16:20:58 [INFO] encoded CSR

2022/06/30 16:20:58 [INFO] signed certificate with serial number 355451230719945708429951668347116585597094494154

2022/06/30 16:20:58 [WARNING] This certificate lacks a "hosts" field. This makes it unsuitable for

websites. For more information see the Baseline Requirements for the Issuance and Management

of Publicly-Trusted Certificates, v.1.1.6, from the CA/Browser Forum (https://cabforum.org);

specifically, section 10.2.3 ("Information Requirements").

Note: for a non-HA cluster, replace 192.168.126.200 with master01's address and 8443 with the apiserver port (6443 by default).

[root@k8s-master01 pki]# kubectl config set-cluster kubernetes \

--certificate-authority=/etc/kubernetes/pki/ca.pem \

--embed-certs=true \

--server=https://192.168.126.200:8443 \

--kubeconfig=/etc/kubernetes/scheduler.kubeconfig

Cluster "kubernetes" set.

[root@k8s-master01 pki]# kubectl config set-credentials system:kube-scheduler \

--client-certificate=/etc/kubernetes/pki/scheduler.pem \

--client-key=/etc/kubernetes/pki/scheduler-key.pem \

--embed-certs=true \

--kubeconfig=/etc/kubernetes/scheduler.kubeconfig

User "system:kube-scheduler" set.

[root@k8s-master01 pki]# kubectl config set-context system:kube-scheduler@kubernetes \

--cluster=kubernetes \

--user=system:kube-scheduler \

--kubeconfig=/etc/kubernetes/scheduler.kubeconfig

Context "system:kube-scheduler@kubernetes" created.

[root@k8s-master01 pki]# kubectl config use-context system:kube-scheduler@kubernetes \

--kubeconfig=/etc/kubernetes/scheduler.kubeconfig

Switched to context "system:kube-scheduler@kubernetes".

[root@k8s-master01 pki]# cfssl gencert \

-ca=/etc/kubernetes/pki/ca.pem \

-ca-key=/etc/kubernetes/pki/ca-key.pem \

-config=ca-config.json \

-profile=kubernetes \

admin-csr.json | cfssljson -bare /etc/kubernetes/pki/admin

2022/06/30 16:23:27 [INFO] generate received request

2022/06/30 16:23:27 [INFO] received CSR

2022/06/30 16:23:27 [INFO] generating key: rsa-2048

2022/06/30 16:23:27 [INFO] encoded CSR

2022/06/30 16:23:27 [INFO] signed certificate with serial number 273739376786174903578235331044637308572288752056

2022/06/30 16:23:27 [WARNING] This certificate lacks a "hosts" field. This makes it unsuitable for

websites. For more information see the Baseline Requirements for the Issuance and Management

of Publicly-Trusted Certificates, v.1.1.6, from the CA/Browser Forum (https://cabforum.org);

specifically, section 10.2.3 ("Information Requirements").

Note: for a non-HA cluster, replace 192.168.126.200 with master01's address and 8443 with the apiserver port (6443 by default).

[root@k8s-master01 pki]# kubectl config set-cluster kubernetes --certificate-authority=/etc/kubernetes/pki/ca.pem --embed-certs=true --server=https://192.168.126.200:8443 --kubeconfig=/etc/kubernetes/admin.kubeconfig

Cluster "kubernetes" set.

[root@k8s-master01 pki]# kubectl config set-credentials kubernetes-admin --client-certificate=/etc/kubernetes/pki/admin.pem --client-key=/etc/kubernetes/pki/admin-key.pem --embed-certs=true --kubeconfig=/etc/kubernetes/admin.kubeconfig

User "kubernetes-admin" set.

[root@k8s-master01 pki]# kubectl config set-context kubernetes-admin@kubernetes --cluster=kubernetes --user=kubernetes-admin --kubeconfig=/etc/kubernetes/admin.kubeconfig

Context "kubernetes-admin@kubernetes" created.

[root@k8s-master01 pki]# kubectl config use-context kubernetes-admin@kubernetes --kubeconfig=/etc/kubernetes/admin.kubeconfig

Switched to context "kubernetes-admin@kubernetes".

3.8 Create the ServiceAccount key → secret

[root@k8s-master01 pki]# openssl genrsa -out /etc/kubernetes/pki/sa.key 2048

Generating RSA private key, 2048 bit long modulus

...........................................................+++

.+++

e is 65537 (0x10001)

[root@k8s-master01 pki]# openssl rsa -in /etc/kubernetes/pki/sa.key -pubout -out /etc/kubernetes/pki/sa.pub

writing RSA key

3.9 Copy the certificates to the other master nodes

[root@k8s-master01 pki]# for NODE in k8s-master02 k8s-master03; do

for FILE in $(ls /etc/kubernetes/pki | grep -v etcd); do

scp /etc/kubernetes/pki/${FILE} $NODE:/etc/kubernetes/pki/${FILE};

done;

for FILE in admin.kubeconfig controller-manager.kubeconfig scheduler.kubeconfig; do

scp /etc/kubernetes/${FILE} $NODE:/etc/kubernetes/${FILE};

done;

done

3.10 List the certificate files

[root@k8s-master01 pki]# ls /etc/kubernetes/pki/

admin.csr apiserver.csr ca.csr controller-manager.csr front-proxy-ca.csr front-proxy-client.csr sa.key scheduler-key.pem

admin-key.pem apiserver-key.pem ca-key.pem controller-manager-key.pem front-proxy-ca-key.pem front-proxy-client-key.pem sa.pub scheduler.pem

admin.pem apiserver.pem ca.pem controller-manager.pem front-proxy-ca.pem front-proxy-client.pem scheduler.csr

[root@k8s-master01 pki]# ls /etc/kubernetes/pki/ |wc -l

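23

VII. etcd configuration

etcd is the key-value store behind Kubernetes: it holds the cluster's state, and every change made to the cluster is persisted in it. In production, for very large clusters, run etcd on machines separate from the masters. The etcd configuration is nearly identical on every master; change only the member name and the IP addresses in each node's file.

| IP address | Host | etcd member |
|---|---|---|
| 192.168.126.129 | Master01 | Etcd1 |
| 192.168.126.130 | Master02 | Etcd2 |
| 192.168.126.131 | Master03 | Etcd3 |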
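Every member's configuration carries the same initial-cluster string, built from the member names and peer URLs. A minimal sketch of deriving it, assuming the etcd member name equals the hostname (as in the config files below) and the peer port is 2380; the function names are hypothetical:

```shell
# etcd members: one "name IP" pair per line.
etcd_members() {
  cat <<'EOF'
k8s-master01 192.168.126.129
k8s-master02 192.168.126.130
k8s-master03 192.168.126.131
EOF
}

# Join the members as name=https://IP:2380, separated by commas.
initial_cluster() {
  etcd_members | awk '{ printf "%s%s=https://%s:2380", sep, $1, $2; sep = "," } END { print "" }'
}

initial_cluster
```

The printed value is what goes into the initial-cluster field of each node's etcd.config.yml.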

1. Master01 configuration

[root@k8s-master01 ~]# vim /etc/etcd/etcd.config.yml

name: 'k8s-master01'

data-dir: /var/lib/etcd

wal-dir: /var/lib/etcd/wal

snapshot-count: 5000

heartbeat-interval: 100

election-timeout: 1000

quota-backend-bytes: 0

listen-peer-urls: 'https://192.168.126.129:2380'

listen-client-urls: 'https://192.168.126.129:2379,http://127.0.0.1:2379'

max-snapshots: 3

max-wals: 5

cors:

initial-advertise-peer-urls: 'https://192.168.126.129:2380'

advertise-client-urls: 'https://192.168.126.129:2379'

discovery:

discovery-fallback: 'proxy'

discovery-proxy:

discovery-srv:

initial-cluster: 'k8s-master01=https://192.168.126.129:2380,k8s-master02=https://192.168.126.130:2380,k8s-master03=https://192.168.126.131:2380'

initial-cluster-token: 'etcd-k8s-cluster'

initial-cluster-state: 'new'

strict-reconfig-check: false

enable-v2: true

enable-pprof: true

proxy: 'off'

proxy-failure-wait: 5000

proxy-refresh-interval: 30000

proxy-dial-timeout: 1000

proxy-write-timeout: 5000

proxy-read-timeout: 0

client-transport-security:

  cert-file: '/etc/kubernetes/pki/etcd/etcd.pem'

  key-file: '/etc/kubernetes/pki/etcd/etcd-key.pem'

  client-cert-auth: true

  trusted-ca-file: '/etc/kubernetes/pki/etcd/etcd-ca.pem'

  auto-tls: true

peer-transport-security:

  cert-file: '/etc/kubernetes/pki/etcd/etcd.pem'

  key-file: '/etc/kubernetes/pki/etcd/etcd-key.pem'

  peer-client-cert-auth: true

  trusted-ca-file: '/etc/kubernetes/pki/etcd/etcd-ca.pem'

  auto-tls: true

debug: false

log-package-levels:

log-outputs: [default]

force-new-cluster: false

2. Master02 configuration

[root@k8s-master02 ~]# vim /etc/etcd/etcd.config.yml

name: 'k8s-master02'

data-dir: /var/lib/etcd

wal-dir: /var/lib/etcd/wal

snapshot-count: 5000

heartbeat-interval: 100

election-timeout: 1000

quota-backend-bytes: 0

listen-peer-urls: 'https://192.168.126.130:2380'

listen-client-urls: 'https://192.168.126.130:2379,http://127.0.0.1:2379'

max-snapshots: 3

max-wals: 5

cors:

initial-advertise-peer-urls: 'https://192.168.126.130:2380'

advertise-client-urls: 'https://192.168.126.130:2379'

discovery:

discovery-fallback: 'proxy'

discovery-proxy:

discovery-srv:

initial-cluster: 'k8s-master01=https://192.168.126.129:2380,k8s-master02=https://192.168.126.130:2380,k8s-master03=https://192.168.126.131:2380'

initial-cluster-token: 'etcd-k8s-cluster'

initial-cluster-state: 'new'

strict-reconfig-check: false

enable-v2: true

enable-pprof: true

proxy: 'off'

proxy-failure-wait: 5000

proxy-refresh-interval: 30000

proxy-dial-timeout: 1000

proxy-write-timeout: 5000

proxy-read-timeout: 0

client-transport-security:

  cert-file: '/etc/kubernetes/pki/etcd/etcd.pem'

  key-file: '/etc/kubernetes/pki/etcd/etcd-key.pem'

  client-cert-auth: true

  trusted-ca-file: '/etc/kubernetes/pki/etcd/etcd-ca.pem'

  auto-tls: true

peer-transport-security:

  cert-file: '/etc/kubernetes/pki/etcd/etcd.pem'

  key-file: '/etc/kubernetes/pki/etcd/etcd-key.pem'

  peer-client-cert-auth: true

  trusted-ca-file: '/etc/kubernetes/pki/etcd/etcd-ca.pem'

  auto-tls: true

debug: false

log-package-levels:

log-outputs: [default]

force-new-cluster: false

3. Master03 configuration

[root@k8s-master03 ~]# vim /etc/etcd/etcd.config.yml

name: 'k8s-master03'

data-dir: /var/lib/etcd

wal-dir: /var/lib/etcd/wal

snapshot-count: 5000

heartbeat-interval: 100

election-timeout: 1000

quota-backend-bytes: 0

listen-peer-urls: 'https://192.168.126.131:2380'

listen-client-urls: 'https://192.168.126.131:2379,http://127.0.0.1:2379'

max-snapshots: 3

max-wals: 5

cors:

initial-advertise-peer-urls: 'https://192.168.126.131:2380'

advertise-client-urls: 'https://192.168.126.131:2379'

discovery:

discovery-fallback: 'proxy'

discovery-proxy:

discovery-srv:

initial-cluster: 'k8s-master01=https://192.168.126.129:2380,k8s-master02=https://192.168.126.130:2380,k8s-master03=https://192.168.126.131:2380'

initial-cluster-token: 'etcd-k8s-cluster'

initial-cluster-state: 'new'

strict-reconfig-check: false

enable-v2: true

enable-pprof: true

proxy: 'off'

proxy-failure-wait: 5000

proxy-refresh-interval: 30000

proxy-dial-timeout: 1000

proxy-write-timeout: 5000

proxy-read-timeout: 0

client-transport-security:

  cert-file: '/etc/kubernetes/pki/etcd/etcd.pem'

  key-file: '/etc/kubernetes/pki/etcd/etcd-key.pem'

  client-cert-auth: true

  trusted-ca-file: '/etc/kubernetes/pki/etcd/etcd-ca.pem'

  auto-tls: true

peer-transport-security:

  cert-file: '/etc/kubernetes/pki/etcd/etcd.pem'

  key-file: '/etc/kubernetes/pki/etcd/etcd-key.pem'

  peer-client-cert-auth: true

  trusted-ca-file: '/etc/kubernetes/pki/etcd/etcd-ca.pem'

  auto-tls: true

debug: false

log-package-levels:

log-outputs: [default]

force-new-cluster: false
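The three etcd.config.yml files differ only in the member name and the node's own IP address. As a rough illustration, the node-specific lines and the shared initial-cluster value could be generated as sketched below. The function names etcd_node_fields and etcd_initial_cluster are hypothetical helpers introduced for this example; they are not part of etcd.

```shell
#!/usr/bin/env bash
# Hypothetical helper: print the etcd.config.yml lines that change per node.
etcd_node_fields() {
  local name=$1 ip=$2
  cat <<EOF
name: '${name}'
listen-peer-urls: 'https://${ip}:2380'
listen-client-urls: 'https://${ip}:2379,http://127.0.0.1:2379'
initial-advertise-peer-urls: 'https://${ip}:2380'
advertise-client-urls: 'https://${ip}:2379'
EOF
}

# Hypothetical helper: build the initial-cluster value from name=ip pairs.
etcd_initial_cluster() {
  local pair out=""
  for pair in "$@"; do
    # ${out:+,} adds a comma only when something has already been appended.
    out+="${out:+,}${pair%%=*}=https://${pair#*=}:2380"
  done
  printf '%s\n' "$out"
}

etcd_node_fields k8s-master02 192.168.126.130
etcd_initial_cluster \
  k8s-master01=192.168.126.129 \
  k8s-master02=192.168.126.130 \
  k8s-master03=192.168.126.131
```

Comparing this output with the file on each node is a quick way to catch an IP address that was copied from another node and not updated.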

4. Create the etcd service

[root@k8s-master01 ~]# vim /usr/lib/systemd/system/etcd.service

[Unit]

Description=Etcd Service

Documentation=https://coreos.com/etcd/docs/latest/

After=network.target

[Service]

Type=notify

ExecStart=/usr/local/bin/etcd --config-file=/etc/etcd/etcd.config.yml

Restart=on-failure

RestartSec=10

LimitNOFILE=65536

[Install]

WantedBy=multi-user.target

Alias=etcd3.service

5. Create the etcd certificate directory on all Master nodes

[root@k8s-master01 ~]# mkdir /etc/kubernetes/pki/etcd

[root@k8s-master01 ~]# ln -s /etc/etcd/ssl/* /etc/kubernetes/pki/etcd/

6. Start etcd

[root@k8s-master01 ~]# systemctl daemon-reload

[root@k8s-master01 ~]# systemctl enable --now etcd

Created symlink from /etc/systemd/system/etcd3.service to /usr/lib/systemd/system/etcd.service.

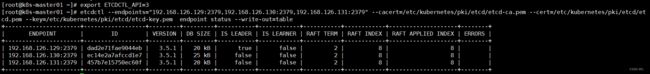

Created symlink from /etc/systemd/system/multi-user.target.wants/etcd.service to /usr/lib/systemd/system/etcd.service.

7. Check the etcd status

[root@k8s-master01 bin]# export ETCDCTL_API=3

[root@k8s-master01 bin]# etcdctl --endpoints="192.168.126.129:2379,192.168.126.130:2379,192.168.126.131:2379" --cacert=/etc/kubernetes/pki/etcd/etcd-ca.pem --cert=/etc/kubernetes/pki/etcd/etcd.pem --key=/etc/kubernetes/pki/etcd/etcd-key.pem endpoint status --write-out=table

+---------------------+------------------+---------+---------+-----------+------------+-----------+------------+--------------------+--------+

| ENDPOINT | ID | VERSION | DB SIZE | IS LEADER | IS LEARNER | RAFT TERM | RAFT INDEX | RAFT APPLIED INDEX | ERRORS |

+---------------------+------------------+---------+---------+-----------+------------+-----------+------------+--------------------+--------+

| 192.168.126.129:2379 | 20cb7e87b49ea5cb | 3.5.1 | 20 kB | false | false | 3 | 12 | 12 | |

| 192.168.126.130:2379 | 5c013a6e225b278d | 3.5.1 | 20 kB | true | false | 3 | 12 | 12 | |

| 192.168.126.131:2379 | 394ddfe89f7d414f | 3.5.1 | 20 kB | false | false | 3 | 12 | 12 | |

+---------------------+------------------+---------+---------+-----------+------------+-----------+------------+--------------------+--------+

VIII. High-Availability Configuration for Master Nodes

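High-availability configuration (note: if this is not a high-availability cluster, haproxy and keepalived do not need to be installed).

If you are installing on a cloud platform, the steps in this chapter can also be skipped: use the cloud provider's load balancer directly, such as Alibaba Cloud SLB or Tencent Cloud ELB.

On a public cloud, use the provider's own load balancer in place of haproxy and keepalived, because most public clouds do not support keepalived. In addition, on Alibaba Cloud the kubectl client cannot be placed on a Master node. Tencent Cloud is recommended, because Alibaba Cloud's SLB has a loopback problem (a server behind the SLB cannot connect back to the SLB), whereas Tencent Cloud has fixed this problem.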

1. Install keepalived and haproxy on all Master nodes

[root@k8s-master01 ~]# yum install keepalived haproxy -y

2. Configure HAProxy on all Master nodes (the configuration is identical)

[root@k8s-master01 ~]# vim /etc/haproxy/haproxy.cfg

global

maxconn 2000

ulimit-n 16384

log 127.0.0.1 local0 err

stats timeout 30s

defaults

log global

mode http

option httplog

timeout connect 5000

timeout client 50000

timeout server 50000

timeout http-request 15s

timeout http-keep-alive 15s

frontend k8s-master

bind 0.0.0.0:8443

bind 127.0.0.1:8443

mode tcp

option tcplog

tcp-request inspect-delay 5s

default_backend k8s-master

backend k8s-master

mode tcp

option tcplog

option tcp-check

balance roundrobin

default-server inter 10s downinter 5s rise 2 fall 2 slowstart 60s maxconn 250 maxqueue 256 weight 100

server k8s-master01 192.168.126.129:6443 check

server k8s-master02 192.168.126.130:6443 check

server k8s-master03 192.168.126.131:6443 check
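The backend section lists one server line per API server, so in a cluster with a different number of Master nodes only those lines change. A minimal sketch of generating them, assuming a hypothetical helper named haproxy_backend_servers that takes the port followed by name=ip pairs:

```shell
#!/usr/bin/env bash
# Hypothetical helper: emit one "server" line per API server for the backend section.
haproxy_backend_servers() {
  local port=$1 pair
  shift
  for pair in "$@"; do
    printf 'server %s %s:%s check\n' "${pair%%=*}" "${pair#*=}" "$port"
  done
}

haproxy_backend_servers 6443 \
  k8s-master01=192.168.126.129 \
  k8s-master02=192.168.126.130 \
  k8s-master03=192.168.126.131

# After editing the file, HAProxy's check mode can validate the syntax
# without starting the service:
#   haproxy -c -f /etc/haproxy/haproxy.cfg
```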

3. Configure KeepAlived on all Master nodes

3.1 Master01 configuration

[root@k8s-master01 pki]# vim /etc/keepalived/keepalived.conf

# Note: adjust the IP address and network interface (the interface parameter) for each node

! Configuration File for keepalived

global_defs {

router_id LVS_DEVEL

}

vrrp_script chk_apiserver {

script "/etc/keepalived/check_apiserver.sh"

interval 5

weight -5

fall 2

rise 1

}

vrrp_instance VI_1 {

state MASTER

interface ens33

mcast_src_ip 192.168.126.129

virtual_router_id 51

priority 101

nopreempt

advert_int 2

authentication {

auth_type PASS

auth_pass K8SHA_KA_AUTH

}

virtual_ipaddress {

192.168.126.200

}

track_script {

chk_apiserver

}

}

3.2 Master02 configuration

[root@k8s-master02 ~]# vim /etc/keepalived/keepalived.conf

! Configuration File for keepalived

global_defs {

router_id LVS_DEVEL

}

vrrp_script chk_apiserver {

script "/etc/keepalived/check_apiserver.sh"

interval 5

weight -5

fall 2

rise 1

}

vrrp_instance VI_1 {

state MASTER

interface ens33

mcast_src_ip 192.168.126.130

virtual_router_id 51

priority 101

nopreempt

advert_int 2

authentication {

auth_type PASS

auth_pass K8SHA_KA_AUTH

}

virtual_ipaddress {

192.168.126.200

}

track_script {

chk_apiserver

}

}

3.3 Master03 configuration

[root@k8s-master03 pki]# vim /etc/keepalived/keepalived.conf

# Note: adjust the IP address and network interface (the interface parameter) for each node

! Configuration File for keepalived

global_defs {

router_id LVS_DEVEL

}

vrrp_script chk_apiserver {

script "/etc/keepalived/check_apiserver.sh"

interval 5

weight -5

fall 2

rise 1

}

vrrp_instance VI_1 {

state MASTER

interface ens33

mcast_src_ip 192.168.126.131

virtual_router_id 51

priority 101

nopreempt

advert_int 2

authentication {

auth_type PASS

auth_pass K8SHA_KA_AUTH

}

virtual_ipaddress {

192.168.126.200

}

track_script {

chk_apiserver

}

}

4. Configure the health check script on all Master nodes

# Edit check_apiserver.sh

[root@k8s-master01 ~]# vim /etc/keepalived/check_apiserver.sh

#!/bin/bash

err=0

for k in $(seq 1 3)

do

check_code=$(pgrep haproxy)

if [[ $check_code == "" ]]; then

err=$(expr $err + 1)

sleep 1

continue

else

err=0

break

fi

done

if [[ $err != "0" ]]; then

echo "systemctl stop keepalived"

/usr/bin/systemctl stop keepalived

exit 1

else

exit 0

fi
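The script above retries pgrep haproxy up to three times, one second apart, and stops keepalived only if every attempt fails. The same retry pattern can be written as a small reusable function. This is a sketch under the assumption that a generic helper is wanted; the name retry and its argument order are choices made for this example.

```shell
#!/usr/bin/env bash
# retry <attempts> <delay-seconds> <command...>
# Returns 0 as soon as the command succeeds, 1 if every attempt fails.
retry() {
  local attempts=$1 delay=$2 i
  shift 2
  for ((i = 1; i <= attempts; i++)); do
    "$@" && return 0
    sleep "$delay"
  done
  return 1
}

# Same decision as the script above, shown here without stopping keepalived.
if retry 3 1 pgrep haproxy >/dev/null; then
  echo "haproxy is running"
else
  echo "haproxy is not running"
fi
```

In the real check script, the else branch is where `/usr/bin/systemctl stop keepalived` and `exit 1` would go, so that the VIP moves to another Master node.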

5. Make the script executable

[root@k8s-master01 ~]# chmod +x /etc/keepalived/check_apiserver.sh

6. Start haproxy and keepalived on all Master nodes

[root@k8s-master01 ~]# systemctl daemon-reload && systemctl enable --now haproxy

Created symlink from /etc/systemd/system/multi-user.target.wants/haproxy.service to /usr/lib/systemd/system/haproxy.service.

[root@k8s-master01 ~]# systemctl enable --now keepalived

Created symlink from /etc/systemd/system/multi-user.target.wants/keepalived.service to /usr/lib/systemd/system/keepalived.service.

7. Test the VIP

[root@k8s-master01 ~]# ping 192.168.126.200

PING 192.168.126.200 (192.168.126.200) 56(84) bytes of data.

64 bytes from 192.168.126.200: icmp_seq=1 ttl=64 time=0.539 ms

64 bytes from 192.168.126.200: icmp_seq=2 ttl=64 time=0.351 ms

^C

--- 192.168.126.200 ping statistics ---

2 packets transmitted, 2 received, 0% packet loss, time 1054ms

rtt min/avg/max/mdev = 0.351/0.445/0.539/0.094 ms

[root@k8s-master01 keepalived]# telnet 192.168.126.200 8443

Trying 192.168.126.200...

Connected to 192.168.126.200.

Escape character is '^]'.

Connection closed by foreign host.
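If telnet is not installed, the same TCP check can be approximated with bash's built-in /dev/tcp redirection. The sketch below assumes bash and the coreutils timeout command are available; port_open is a hypothetical helper name.

```shell
#!/usr/bin/env bash
# port_open <host> <port> [timeout-seconds]
# Succeeds if a TCP connection can be opened within the timeout.
port_open() {
  local host=$1 port=$2 wait=${3:-3}
  timeout "$wait" bash -c "exec 3<>/dev/tcp/${host}/${port}" 2>/dev/null
}

if port_open 192.168.126.200 8443; then
  echo "VIP 192.168.126.200:8443 is reachable"
else
  echo "VIP 192.168.126.200:8443 is not reachable"
fi
```

A successful connection only shows that HAProxy is listening on the VIP. Until kube-apiserver is running behind it, the connection is closed immediately, as in the telnet output above.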

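IX. Kubernetes Component Configuration

Create the required directories on all nodes:

[root@k8s-master01 ~]# mkdir -p /etc/kubernetes/manifests/ /etc/systemd/system/kubelet.service.d /var/lib/kubelet /var/log/kubernetes

1. Install the API server

Create the kube-apiserver service on all Master nodes. Note: if this is not a high-availability cluster, change 192.168.126.200 to Master01's address.

1.1 Master01 configuration

Note: this document uses 10.96.0.0/16 as the k8s Service CIDR. It must not overlap with the host network or the Pod CIDR; change it as needed.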

[root@k8s-master01 ~]# vim /usr/lib/systemd/system/kube-apiserver.service

[Unit]

Description=Kubernetes API Server

Documentation=https://github.com/kubernetes/kubernetes

After=network.target

[Service]

ExecStart=/usr/local/bin/kube-apiserver \

--v=2 \

--logtostderr=true \

--allow-privileged=true \

--bind-address=0.0.0.0 \

--secure-port=6443 \

--insecure-port=0 \

--advertise-address=192.168.126.129 \

--service-cluster-ip-range=10.96.0.0/16 \

--service-node-port-range=30000-32767 \

--etcd-servers=https://192.168.126.129:2379,https://192.168.126.130:2379,https://192.168.126.131:2379 \

--etcd-cafile=/etc/etcd/ssl/etcd-ca.pem \

--etcd-certfile=/etc/etcd/ssl/etcd.pem \

--etcd-keyfile=/etc/etcd/ssl/etcd-key.pem \

--client-ca-file=/etc/kubernetes/pki/ca.pem \

--tls-cert-file=/etc/kubernetes/pki/apiserver.pem \

--tls-private-key-file=/etc/kubernetes/pki/apiserver-key.pem \

--kubelet-client-certificate=/etc/kubernetes/pki/apiserver.pem \

--kubelet-client-key=/etc/kubernetes/pki/apiserver-key.pem \

--service-account-key-file=/etc/kubernetes/pki/sa.pub \

--service-account-signing-key-file=/etc/kubernetes/pki/sa.key \

--service-account-issuer=https://kubernetes.default.svc.cluster.local \

--kubelet-preferred-address-types=InternalIP,ExternalIP,Hostname \

--enable-admission-plugins=NamespaceLifecycle,LimitRanger,ServiceAccount,DefaultStorageClass,DefaultTolerationSeconds,NodeRestriction,ResourceQuota \

--authorization-mode=Node,RBAC \

--enable-bootstrap-token-auth=true \

--requestheader-client-ca-file=/etc/kubernetes/pki/front-proxy-ca.pem \

--proxy-client-cert-file=/etc/kubernetes/pki/front-proxy-client.pem \

--proxy-client-key-file=/etc/kubernetes/pki/front-proxy-client-key.pem \

--requestheader-allowed-names=aggregator \

--requestheader-group-headers=X-Remote-Group \

--requestheader-extra-headers-prefix=X-Remote-Extra- \

--requestheader-username-headers=X-Remote-User

# --token-auth-file=/etc/kubernetes/token.csv

Restart=on-failure

RestartSec=10s

LimitNOFILE=65535

[Install]

WantedBy=multi-user.target
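The note at the start of this step requires that the Service CIDR not overlap with the host network or the Pod CIDR. One way to check that before starting the service is sketched below in plain bash arithmetic. The Service CIDR 10.96.0.0/16 and Pod CIDR 172.16.0.0/12 come from this document; the host network 192.168.126.0/24 is an assumption (the document gives the host addresses but not the prefix length), and the function names are hypothetical.

```shell
#!/usr/bin/env bash
# Convert a dotted-quad IPv4 address to a 32-bit integer.
ip_to_int() {
  local a b c d
  local IFS=.
  read -r a b c d <<< "$1"
  echo $(( (a << 24) | (b << 16) | (c << 8) | d ))
}

# Print "first last" (as integers) for a CIDR such as 10.96.0.0/16.
cidr_range() {
  local ip=${1%/*} bits=${1#*/} base mask
  base=$(ip_to_int "$ip")
  mask=$(( (0xFFFFFFFF << (32 - bits)) & 0xFFFFFFFF ))
  echo "$(( base & mask )) $(( (base & mask) | (~mask & 0xFFFFFFFF) ))"
}

# Succeed (exit status 0) if the two CIDRs share at least one address.
cidrs_overlap() {
  local s1 e1 s2 e2
  read -r s1 e1 <<< "$(cidr_range "$1")"
  read -r s2 e2 <<< "$(cidr_range "$2")"
  (( s1 <= e2 && s2 <= e1 ))
}

service_cidr=10.96.0.0/16
pod_cidr=172.16.0.0/12
host_cidr=192.168.126.0/24   # assumed prefix length

for pair in "$service_cidr $pod_cidr" "$service_cidr $host_cidr" "$pod_cidr $host_cidr"; do
  read -r left right <<< "$pair"
  if cidrs_overlap "$left" "$right"; then
    echo "$left overlaps $right"
  else
    echo "$left and $right are disjoint"
  fi
done
```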

1.2 Master02 configuration

Note: this document uses 10.96.0.0/16 as the k8s Service CIDR. It must not overlap with the host network or the Pod CIDR; change it as needed.

[root@k8s-master02 ~]# vim /usr/lib/systemd/system/kube-apiserver.service

[Unit]

Description=Kubernetes API Server

Documentation=https://github.com/kubernetes/kubernetes

After=network.target

[Service]

ExecStart=/usr/local/bin/kube-apiserver \

--v=2 \

--logtostderr=true \

--allow-privileged=true \

--bind-address=0.0.0.0 \

--secure-port=6443 \

--insecure-port=0 \

--advertise-address=192.168.126.130 \

--service-cluster-ip-range=10.96.0.0/16 \

--service-node-port-range=30000-32767 \

--etcd-servers=https://192.168.126.129:2379,https://192.168.126.130:2379,https://192.168.126.131:2379 \

--etcd-cafile=/etc/etcd/ssl/etcd-ca.pem \

--etcd-certfile=/etc/etcd/ssl/etcd.pem \

--etcd-keyfile=/etc/etcd/ssl/etcd-key.pem \

--client-ca-file=/etc/kubernetes/pki/ca.pem \

--tls-cert-file=/etc/kubernetes/pki/apiserver.pem \

--tls-private-key-file=/etc/kubernetes/pki/apiserver-key.pem \

--kubelet-client-certificate=/etc/kubernetes/pki/apiserver.pem \

--kubelet-client-key=/etc/kubernetes/pki/apiserver-key.pem \

--service-account-key-file=/etc/kubernetes/pki/sa.pub \

--service-account-signing-key-file=/etc/kubernetes/pki/sa.key \

--service-account-issuer=https://kubernetes.default.svc.cluster.local \

--kubelet-preferred-address-types=InternalIP,ExternalIP,Hostname \

--enable-admission-plugins=NamespaceLifecycle,LimitRanger,ServiceAccount,DefaultStorageClass,DefaultTolerationSeconds,NodeRestriction,ResourceQuota \

--authorization-mode=Node,RBAC \

--enable-bootstrap-token-auth=true \

--requestheader-client-ca-file=/etc/kubernetes/pki/front-proxy-ca.pem \

--proxy-client-cert-file=/etc/kubernetes/pki/front-proxy-client.pem \

--proxy-client-key-file=/etc/kubernetes/pki/front-proxy-client-key.pem \

--requestheader-allowed-names=aggregator \

--requestheader-group-headers=X-Remote-Group \

--requestheader-extra-headers-prefix=X-Remote-Extra- \

--requestheader-username-headers=X-Remote-User

# --token-auth-file=/etc/kubernetes/token.csv

Restart=on-failure

RestartSec=10s

LimitNOFILE=65535

[Install]

WantedBy=multi-user.target

1.3 Master03 configuration

[root@k8s-master03 ~]# vim /usr/lib/systemd/system/kube-apiserver.service

[Unit]

Description=Kubernetes API Server

Documentation=https://github.com/kubernetes/kubernetes

After=network.target

[Service]

ExecStart=/usr/local/bin/kube-apiserver \

--v=2 \

--logtostderr=true \

--allow-privileged=true \

--bind-address=0.0.0.0 \

--secure-port=6443 \

--insecure-port=0 \

--advertise-address=192.168.126.131 \

--service-cluster-ip-range=10.96.0.0/16 \

--service-node-port-range=30000-32767 \

--etcd-servers=https://192.168.126.129:2379,https://192.168.126.130:2379,https://192.168.126.131:2379 \

--etcd-cafile=/etc/etcd/ssl/etcd-ca.pem \

--etcd-certfile=/etc/etcd/ssl/etcd.pem \

--etcd-keyfile=/etc/etcd/ssl/etcd-key.pem \

--client-ca-file=/etc/kubernetes/pki/ca.pem \

--tls-cert-file=/etc/kubernetes/pki/apiserver.pem \

--tls-private-key-file=/etc/kubernetes/pki/apiserver-key.pem \

--kubelet-client-certificate=/etc/kubernetes/pki/apiserver.pem \

--kubelet-client-key=/etc/kubernetes/pki/apiserver-key.pem \

--service-account-key-file=/etc/kubernetes/pki/sa.pub \

--service-account-signing-key-file=/etc/kubernetes/pki/sa.key \

--service-account-issuer=https://kubernetes.default.svc.cluster.local \

--kubelet-preferred-address-types=InternalIP,ExternalIP,Hostname \

--enable-admission-plugins=NamespaceLifecycle,LimitRanger,ServiceAccount,DefaultStorageClass,DefaultTolerationSeconds,NodeRestriction,ResourceQuota \

--authorization-mode=Node,RBAC \

--enable-bootstrap-token-auth=true \

--requestheader-client-ca-file=/etc/kubernetes/pki/front-proxy-ca.pem \

--proxy-client-cert-file=/etc/kubernetes/pki/front-proxy-client.pem \

--proxy-client-key-file=/etc/kubernetes/pki/front-proxy-client-key.pem \

--requestheader-allowed-names=aggregator \

--requestheader-group-headers=X-Remote-Group \

--requestheader-extra-headers-prefix=X-Remote-Extra- \

--requestheader-username-headers=X-Remote-User

# --token-auth-file=/etc/kubernetes/token.csv

Restart=on-failure

RestartSec=10s

LimitNOFILE=65535

[Install]

WantedBy=multi-user.target

1.4 Start kube-apiserver on all Master nodes

[root@k8s-master01 ~]# systemctl daemon-reload && systemctl enable --now kube-apiserver

Created symlink from /etc/systemd/system/multi-user.target.wants/kube-apiserver.service to /usr/lib/systemd/system/kube-apiserver.service.

1.5 Check the kube-apiserver status

[root@k8s-master01 ~]# systemctl status kube-apiserver

● kube-apiserver.service - Kubernetes API Server

Loaded: loaded (/usr/lib/systemd/system/kube-apiserver.service; enabled; vendor preset: disabled)

Active: active (running) since 四 2022-06-30 17:09:19 CST; 7s ago

Docs: https://github.com/kubernetes/kubernetes

Main PID: 15096 (kube-apiserver)

Tasks: 13

Memory: 238.7M

CGroup: /system.slice/kube-apiserver.service

└─15096 /usr/local/bin/kube-apiserver --v=2 --logtostderr=true --allow-privileged=true --bind-address=0.0.0.0 --secure-port=6443 --insecure-port=0 --advertise-address=192.168.13...

6月 30 17:09:26 k8s-master01 kube-apiserver[15096]: I0630 17:09:26.875147 15096 cluster_authentication_trust_controller.go:165] writing updated authentication info to kube-s...hentication

6月 30 17:09:26 k8s-master01 kube-apiserver[15096]: I0630 17:09:26.885094 15096 cache.go:39] Caches are synced for APIServiceRegistrationController controller

6月 30 17:09:26 k8s-master01 kube-apiserver[15096]: I0630 17:09:26.885523 15096 shared_informer.go:247] Caches are synced for crd-autoregister

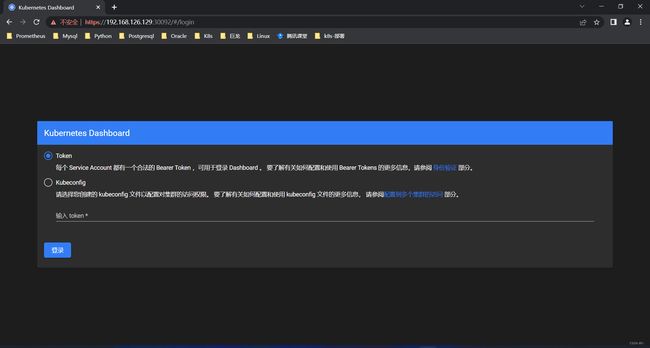

6月 30 17:09:26 k8s-master01 kube-apiserver[15096]: I0630 17:09:26.886995 15096 cache.go:39] Caches are synced for AvailableConditionController controller

6月 30 17:09:26 k8s-master01 kube-apiserver[15096]: I0630 17:09:26.888674 15096 genericapiserver.go:406] MuxAndDiscoveryComplete has all endpoints registered and discovery in...is complete

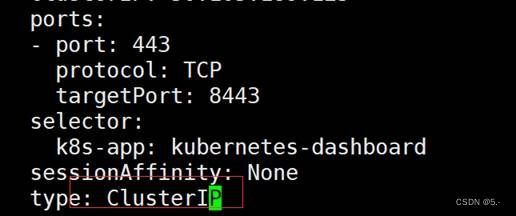

6月 30 17:09:26 k8s-master01 kube-apiserver[15096]: I0630 17:09:26.936652 15096 controller.go:611] quota admission added evaluator for: namespaces

6月 30 17:09:26 k8s-master01 kube-apiserver[15096]: I0630 17:09:26.939697 15096 cacher.go:799] cacher (*apiregistration.APIService): 1 objects queued in incoming channel.

6月 30 17:09:26 k8s-master01 kube-apiserver[15096]: I0630 17:09:26.939793 15096 cacher.go:799] cacher (*apiregistration.APIService): 2 objects queued in incoming channel.

6月 30 17:09:26 k8s-master01 kube-apiserver[15096]: I0630 17:09:26.941049 15096 strategy.go:233] "Successfully created FlowSchema" type="suggested" name="system-nodes"

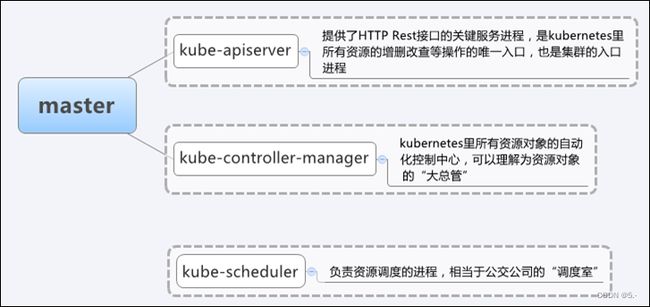

6月 30 17:09:27 k8s-master01 kube-apiserver[15096]: I0630 17:09:27.033187 15096 strategy.go:233] "Successfully created FlowSchema" type="suggested" name="system-node-high"

2. Install the ControllerManager

Configure the kube-controller-manager service on all Master nodes (the configuration is identical on every Master node).

Note: this document uses 172.16.0.0/12 as the k8s Pod CIDR. It must not overlap with the host network or the k8s Service CIDR; change it as needed.

2.1 Add the kube-controller-manager.service file on all Master nodes

[root@k8s-master01 ~]# vim /usr/lib/systemd/system/kube-controller-manager.service

[Unit]

Description=Kubernetes Controller Manager

Documentation=https://github.com/kubernetes/kubernetes

After=network.target

[Service]

ExecStart=/usr/local/bin/kube-controller-manager \

--v=2 \

--logtostderr=true \

--address=127.0.0.1 \

--root-ca-file=/etc/kubernetes/pki/ca.pem \

--cluster-signing-cert-file=/etc/kubernetes/pki/ca.pem \

--cluster-signing-key-file=/etc/kubernetes/pki/ca-key.pem \

--service-account-private-key-file=/etc/kubernetes/pki/sa.key \

--kubeconfig=/etc/kubernetes/controller-manager.kubeconfig \

--leader-elect=true \

--use-service-account-credentials=true \

--node-monitor-grace-period=40s \

--node-monitor-period=5s \

--pod-eviction-timeout=2m0s \

--controllers=*,bootstrapsigner,tokencleaner \

--allocate-node-cidrs=true \

--cluster-cidr=172.16.0.0/12 \

--requestheader-client-ca-file=/etc/kubernetes/pki/front-proxy-ca.pem \

--node-cidr-mask-size=24

Restart=always

RestartSec=10s

[Install]

WantedBy=multi-user.target
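With --cluster-cidr=172.16.0.0/12 and --node-cidr-mask-size=24, the controller manager carves the Pod CIDR into one /24 per node. The arithmetic behind that can be sketched as follows; the function names are hypothetical and exist only for this example.

```shell
#!/usr/bin/env bash
# node_subnets <cluster-cidr-prefix-length> <node-cidr-mask-size>
# Number of per-node subnets that fit in the cluster CIDR.
node_subnets() {
  echo $(( 1 << ($2 - $1) ))
}

# subnet_size <node-cidr-mask-size>
# Number of addresses in each per-node subnet.
subnet_size() {
  echo $(( 1 << (32 - $1) ))
}

echo "node subnets: $(node_subnets 12 24)"           # 4096
echo "addresses per node subnet: $(subnet_size 24)"  # 256
```

If the Pod CIDR is changed to a smaller range, this is a quick way to confirm that it still leaves enough /24 subnets for the planned number of nodes.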

2.2 Start kube-controller-manager on all Master nodes

[root@k8s-master01 ~]# systemctl daemon-reload

[root@k8s-master01 ~]# systemctl enable --now kube-controller-manager

Created symlink from /etc/systemd/system/multi-user.target.wants/kube-controller-manager.service to /usr/lib/systemd/system/kube-controller-manager.service.

2.3 Check the startup status

[root@k8s-master01 ~]# systemctl status kube-controller-manager

● kube-controller-manager.service - Kubernetes Controller Manager

Loaded: loaded (/usr/lib/systemd/system/kube-controller-manager.service; enabled; vendor preset: disabled)

Active: active (running) since 四 2022-06-30 17:11:30 CST; 25s ago

Docs: https://github.com/kubernetes/kubernetes

Main PID: 15269 (kube-controller)

Tasks: 10

Memory: 41.0M

CGroup: /system.slice/kube-controller-manager.service

└─15269 /usr/local/bin/kube-controller-manager --v=2 --logtostderr=true --address=127.0.0.1 --root-ca-file=/etc/kubernetes/pki/ca.pem --cluster-signing-cert-file=/etc/kubernetes...

6月 30 17:11:46 k8s-master01 kube-controller-manager[15269]: I0630 17:11:46.423920 15269 shared_informer.go:247] Caches are synced for resource quota

6月 30 17:11:46 k8s-master01 kube-controller-manager[15269]: I0630 17:11:46.462985 15269 shared_informer.go:247] Caches are synced for namespace

6月 30 17:11:46 k8s-master01 kube-controller-manager[15269]: I0630 17:11:46.470820 15269 shared_informer.go:247] Caches are synced for cronjob

6月 30 17:11:46 k8s-master01 kube-controller-manager[15269]: I0630 17:11:46.470881 15269 shared_informer.go:247] Caches are synced for service account

6月 30 17:11:46 k8s-master01 kube-controller-manager[15269]: I0630 17:11:46.492839 15269 shared_informer.go:247] Caches are synced for resource quota

6月 30 17:11:46 k8s-master01 kube-controller-manager[15269]: I0630 17:11:46.492882 15269 resource_quota_controller.go:458] synced quota controller

6月 30 17:11:46 k8s-master01 kube-controller-manager[15269]: I0630 17:11:46.940987 15269 shared_informer.go:247] Caches are synced for garbage collector

6月 30 17:11:46 k8s-master01 kube-controller-manager[15269]: I0630 17:11:46.941029 15269 garbagecollector.go:155] Garbage collector: all resource monitors have synced. Proceed...ct garbage

6月 30 17:11:46 k8s-master01 kube-controller-manager[15269]: I0630 17:11:46.992443 15269 shared_informer.go:247] Caches are synced for garbage collector

6月 30 17:11:46 k8s-master01 kube-controller-manager[15269]: I0630 17:11:46.992487 15269 garbagecollector.go:251] synced garbage collector

Hint: Some lines were ellipsized, use -l to show in full.

3. Install the Scheduler

Configure the kube-scheduler service on all Master nodes (the configuration is identical on every Master node).

3.1 Configure kube-scheduler.service on all Master nodes

[root@k8s-master02 ~]# vim /usr/lib/systemd/system/kube-scheduler.service

[Unit]

Description=Kubernetes Scheduler

Documentation=https://github.com/kubernetes/kubernetes

After=network.target

[Service]

ExecStart=/usr/local/bin/kube-scheduler \

--v=2 \

--logtostderr=true \

--address=127.0.0.1 \

--leader-elect=true \

--kubeconfig=/etc/kubernetes/scheduler.kubeconfig

Restart=always

RestartSec=10s

[Install]

WantedBy=multi-user.target

3.2 Start the scheduler on all Master nodes

[root@k8s-master01 ~]# systemctl daemon-reload

[root@k8s-master01 ~]# systemctl enable --now kube-scheduler

Created symlink from /etc/systemd/system/multi-user.target.wants/kube-scheduler.service to /usr/lib/systemd/system/kube-scheduler.service.

[root@k8s-master01 ~]# systemctl status kube-scheduler

● kube-scheduler.service - Kubernetes Scheduler

Loaded: loaded (/usr/lib/systemd/system/kube-scheduler.service; enabled; vendor preset: disabled)

Active: active (running) since 四 2022-06-30 17:12:58 CST; 10s ago

Docs: https://github.com/kubernetes/kubernetes

Main PID: 15390 (kube-scheduler)

Tasks: 7

Memory: 19.2M

CGroup: /system.slice/kube-scheduler.service

└─15390 /usr/local/bin/kube-scheduler --v=2 --logtostderr=true --address=127.0.0.1 --leader-elect=true --kubeconfig=/etc/kubernetes/scheduler.kubeconfig

6月 30 17:13:00 k8s-master01 kube-scheduler[15390]: reserve: {}

6月 30 17:13:00 k8s-master01 kube-scheduler[15390]: score: {}

6月 30 17:13:00 k8s-master01 kube-scheduler[15390]: schedulerName: default-scheduler

6月 30 17:13:00 k8s-master01 kube-scheduler[15390]: ------------------------------------Configuration File Contents End Here---------------------------------

6月 30 17:13:00 k8s-master01 kube-scheduler[15390]: I0630 17:13:00.502751 15390 server.go:139] "Starting Kubernetes Scheduler" version="v1.23.0"

6月 30 17:13:00 k8s-master01 kube-scheduler[15390]: I0630 17:13:00.524215 15390 tlsconfig.go:200] "Loaded serving cert" certName="Generated self signed cert" certDetail="\"localhost@165...

6月 30 17:13:00 k8s-master01 kube-scheduler[15390]: I0630 17:13:00.524655 15390 named_certificates.go:53] "Loaded SNI cert" index=0 certName="self-signed loopback" certDetail="\"apiserv...

6月 30 17:13:00 k8s-master01 kube-scheduler[15390]: I0630 17:13:00.524819 15390 secure_serving.go:200] Serving securely on [::]:10259

6月 30 17:13:00 k8s-master01 kube-scheduler[15390]: I0630 17:13:00.526477 15390 tlsconfig.go:240] "Starting DynamicServingCertificateController"

6月 30 17:13:00 k8s-master01 kube-scheduler[15390]: I0630 17:13:00.627135 15390 leaderelection.go:248] attempting to acquire leader lease kube-system/kube-scheduler...

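Hint: Some lines were ellipsized, use -l to show in full.

X. TLS Bootstrapping Configuration

1. Create the bootstrap kubeconfig on Master01

Note: if this is not a high-availability cluster, change 192.168.126.200:8443 to Master01's address and change 8443 to the apiserver port (6443 by default).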

[root@k8s-master01 ~]# cd /opt/k8s-ha-install/bootstrap/

[root@k8s-master01 bootstrap]# ls

bootstrap.secret.yaml

[root@k8s-master01 bootstrap]# kubectl config set-cluster kubernetes --certificate-authority=/etc/kubernetes/pki/ca.pem --embed-certs=true --server=https://192.168.126.200:8443 --kubeconfig=/etc/kubernetes/bootstrap-kubelet.kubeconfig

Cluster "kubernetes" set.

[root@k8s-master01 bootstrap]# kubectl config set-credentials tls-bootstrap-token-user --token=c8ad9c.2e4d610cf3e7426e --kubeconfig=/etc/kubernetes/bootstrap-kubelet.kubeconfig

User "tls-bootstrap-token-user" set.

[root@k8s-master01 bootstrap]# kubectl config set-context tls-bootstrap-token-user@kubernetes --cluster=kubernetes --user=tls-bootstrap-token-user --kubeconfig=/etc/kubernetes/bootstrap-kubelet.kubeconfig

Context "tls-bootstrap-token-user@kubernetes" created.

[root@k8s-master01 bootstrap]# kubectl config use-context tls-bootstrap-token-user@kubernetes --kubeconfig=/etc/kubernetes/bootstrap-kubelet.kubeconfig

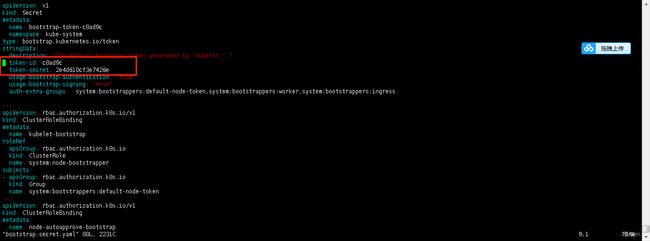

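Switched to context "tls-bootstrap-token-user@kubernetes".

Note: if you change the token-id and token-secret in bootstrap.secret.yaml, make sure the strings circled in red in the figure stay consistent with each other and keep the same length. The token passed to the set-credentials command above (c8ad9c.2e4d610cf3e7426e) must also match the strings you changed.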
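A bootstrap token has the form token-id.token-secret, where the ID is 6 characters and the secret is 16 characters, all lowercase letters or digits. Before editing bootstrap.secret.yaml, a replacement token can be checked against that format with a sketch like the one below; valid_bootstrap_token is a hypothetical helper name.

```shell
#!/usr/bin/env bash
# valid_bootstrap_token <token>
# Succeeds if the token is <6 chars>.<16 chars>, lowercase letters and digits only.
valid_bootstrap_token() {
  [[ $1 =~ ^[a-z0-9]{6}\.[a-z0-9]{16}$ ]]
}

token="c8ad9c.2e4d610cf3e7426e"
if valid_bootstrap_token "$token"; then
  echo "token-id: ${token%%.*}"
  echo "token-secret: ${token#*.}"
else
  echo "token does not match the expected format" >&2
fi
```

The two printed values are what must appear as token-id and token-secret in bootstrap.secret.yaml, and the token-id is also the suffix of the secret name bootstrap-token-c8ad9c.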

[root@k8s-master01 bootstrap]# mkdir -p /root/.kube ; cp /etc/kubernetes/admin.kubeconfig /root/.kube/config

2. Continue only if the cluster status can be queried normally; otherwise, check the k8s components for faults first

[root@k8s-master01 bootstrap]# kubectl get cs

Warning: v1 ComponentStatus is deprecated in v1.19+

NAME STATUS MESSAGE ERROR

controller-manager Healthy ok

etcd-1 Healthy {"health":"true","reason":""}

scheduler Healthy ok

etcd-0 Healthy {"health":"true","reason":""}

etcd-2 Healthy {"health":"true","reason":""}

3. Create the bootstrap secret

[root@k8s-master01 bootstrap]# kubectl create -f bootstrap.secret.yaml

secret/bootstrap-token-c8ad9c created

clusterrolebinding.rbac.authorization.k8s.io/kubelet-bootstrap created

clusterrolebinding.rbac.authorization.k8s.io/node-autoapprove-bootstrap created

clusterrolebinding.rbac.authorization.k8s.io/node-autoapprove-certificate-rotation created

clusterrole.rbac.authorization.k8s.io/system:kube-apiserver-to-kubelet created

clusterrolebinding.rbac.authorization.k8s.io/system:kube-apiserver created

XI. Node Configuration

1. Copy the certificates from Master01 to the Node nodes
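No commands are given for this step. The sketch below is one possible way to do it, not the author's exact procedure. It copies only the two files that the kubelet settings later in this chapter reference (/etc/kubernetes/pki/ca.pem and /etc/kubernetes/bootstrap-kubelet.kubeconfig); the file list, the node list, and the helper names run and copy_node_files are assumptions, and your cluster may need more files. It relies on the passwordless SSH login from Master01 set up earlier.

```shell
#!/usr/bin/env bash
# DRY_RUN=1 (the default here) only prints the commands instead of running them.
DRY_RUN=${DRY_RUN:-1}

run() {
  if [ "$DRY_RUN" = 1 ]; then
    echo "$*"
  else
    "$@"
  fi
}

# copy_node_files <node...>
# Assumed file list: the CA certificate and the bootstrap kubeconfig.
copy_node_files() {
  local node file
  for node in "$@"; do
    run ssh "$node" mkdir -p /etc/kubernetes/pki
    for file in pki/ca.pem bootstrap-kubelet.kubeconfig; do
      run scp "/etc/kubernetes/${file}" "${node}:/etc/kubernetes/${file}"
    done
  done
}

copy_node_files k8s-master02 k8s-master03 k8s-node01 k8s-node02
```

Run it once as shown to review the printed commands, then again with DRY_RUN=0 to perform the copy.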

2. Kubelet configuration

2.1 Create the required directories on all nodes

[root@k8s-master01 ~]# mkdir -p /var/lib/kubelet /var/log/kubernetes /etc/systemd/system/kubelet.service.d /etc/kubernetes/manifests/

2.2 Configure the kubelet service on all nodes

[root@k8s-master01 ~]# vim /usr/lib/systemd/system/kubelet.service

[Unit]

Description=Kubernetes Kubelet

Documentation=https://github.com/kubernetes/kubernetes

After=docker.service

Requires=docker.service

[Service]

ExecStart=/usr/local/bin/kubelet

Restart=always

StartLimitInterval=0

RestartSec=10

[Install]

WantedBy=multi-user.target

2.3 Configure the kubelet service drop-in file on all nodes (these settings can also be written into kubelet.service)

2.3.1 If the runtime is Containerd, use the following kubelet configuration

[root@k8s-master01 ~]# vim /etc/systemd/system/kubelet.service.d/10-kubelet.conf

[Service]

Environment="KUBELET_KUBECONFIG_ARGS=--bootstrap-kubeconfig=/etc/kubernetes/bootstrap-kubelet.kubeconfig --kubeconfig=/etc/kubernetes/kubelet.kubeconfig"

Environment="KUBELET_SYSTEM_ARGS=--network-plugin=cni --cni-conf-dir=/etc/cni/net.d --cni-bin-dir=/opt/cni/bin --container-runtime=remote --runtime-request-timeout=15m --container-runtime-endpoint=unix:///run/containerd/containerd.sock --cgroup-driver=systemd"

Environment="KUBELET_CONFIG_ARGS=--config=/etc/kubernetes/kubelet-conf.yml"

Environment="KUBELET_EXTRA_ARGS=--node-labels=node.kubernetes.io/node='' "

ExecStart=

ExecStart=/usr/local/bin/kubelet $KUBELET_KUBECONFIG_ARGS $KUBELET_CONFIG_ARGS $KUBELET_SYSTEM_ARGS $KUBELET_EXTRA_ARGS

2.3.2 If the runtime is Docker, use the following kubelet configuration

[root@k8s-master01 ~]# vim /etc/systemd/system/kubelet.service.d/10-kubelet.conf

[Service]

Environment="KUBELET_KUBECONFIG_ARGS=--bootstrap-kubeconfig=/etc/kubernetes/bootstrap-kubelet.kubeconfig --kubeconfig=/etc/kubernetes/kubelet.kubeconfig"

Environment="KUBELET_SYSTEM_ARGS=--network-plugin=cni --cni-conf-dir=/etc/cni/net.d --cni-bin-dir=/opt/cni/bin"

Environment="KUBELET_CONFIG_ARGS=--config=/etc/kubernetes/kubelet-conf.yml --pod-infra-container-image=registry.cn-hangzhou.aliyuncs.com/google_containers/pause:3.5"

Environment="KUBELET_EXTRA_ARGS=--node-labels=node.kubernetes.io/node='' "

ExecStart=

ExecStart=/usr/local/bin/kubelet $KUBELET_KUBECONFIG_ARGS $KUBELET_CONFIG_ARGS $KUBELET_SYSTEM_ARGS $KUBELET_EXTRA_ARGS

2.4 Create the kubelet configuration file

Note: if you changed the k8s Service CIDR, also change the clusterDNS setting in kubelet-conf.yml to the tenth address of the k8s Service CIDR, for example 10.96.0.10.

[root@k8s-master01 kubernetes]# vim /etc/kubernetes/kubelet-conf.yml

apiVersion: kubelet.config.k8s.io/v1beta1

kind: KubeletConfiguration

address: 0.0.0.0

port: 10250

readOnlyPort: 10255

authentication:

  anonymous:

    enabled: false

  webhook:

    cacheTTL: 2m0s

    enabled: true

  x509:

    clientCAFile: /etc/kubernetes/pki/ca.pem

authorization:

  mode: Webhook

  webhook:

    cacheAuthorizedTTL: 5m0s

    cacheUnauthorizedTTL: 30s

cgroupDriver: systemd

cgroupsPerQOS: true

clusterDNS:

- 10.96.0.10

clusterDomain: cluster.local

containerLogMaxFiles: 5

containerLogMaxSize: 10Mi

contentType: application/vnd.kubernetes.protobuf

cpuCFSQuota: true

cpuManagerPolicy: none

cpuManagerReconcilePeriod: 10s

enableControllerAttachDetach: true

enableDebuggingHandlers: true

enforceNodeAllocatable:

- pods

eventBurst: 10

eventRecordQPS: 5

evictionHard:

  imagefs.available: 15%

  memory.available: 100Mi

  nodefs.available: 10%

  nodefs.inodesFree: 5%

evictionPressureTransitionPeriod: 5m0s

failSwapOn: true

fileCheckFrequency: 20s

hairpinMode: promiscuous-bridge

healthzBindAddress: 127.0.0.1

healthzPort: 10248

httpCheckFrequency: 20s

imageGCHighThresholdPercent: 85

imageGCLowThresholdPercent: 80

imageMinimumGCAge: 2m0s

iptablesDropBit: 15

iptablesMasqueradeBit: 14

kubeAPIBurst: 10

kubeAPIQPS: 5

makeIPTablesUtilChains: true

maxOpenFiles: 1000000

maxPods: 110

nodeStatusUpdateFrequency: 10s

oomScoreAdj: -999

podPidsLimit: -1

registryBurst: 10

registryPullQPS: 5

resolvConf: /etc/resolv.conf

rotateCertificates: true

runtimeRequestTimeout: 2m0s

serializeImagePulls: true

staticPodPath: /etc/kubernetes/manifests

streamingConnectionIdleTimeout: 4h0m0s

syncFrequency: 1m0s

volumeStatsAggPeriod: 1m0s
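The clusterDNS value is the tenth address of the Service CIDR, so it has to be recomputed whenever the Service CIDR changes. A minimal sketch of that calculation in bash follows; ip_to_int, int_to_ip and nth_ip are hypothetical helper names.

```shell
#!/usr/bin/env bash
# Convert a dotted-quad IPv4 address to a 32-bit integer.
ip_to_int() {
  local a b c d
  local IFS=.
  read -r a b c d <<< "$1"
  echo $(( (a << 24) | (b << 16) | (c << 8) | d ))
}

# Convert a 32-bit integer back to dotted-quad notation.
int_to_ip() {
  local n=$1
  echo "$(( (n >> 24) & 255 )).$(( (n >> 16) & 255 )).$(( (n >> 8) & 255 )).$(( n & 255 ))"
}

# nth_ip <cidr> <n>: the n-th address counted from the network address.
nth_ip() {
  local base=${1%/*} bits=${1#*/} mask
  mask=$(( (0xFFFFFFFF << (32 - bits)) & 0xFFFFFFFF ))
  int_to_ip $(( ($(ip_to_int "$base") & mask) + $2 ))
}

nth_ip 10.96.0.0/16 10   # prints 10.96.0.10
```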

2.5 Start kubelet on all nodes

[root@k8s-master01 ~]# systemctl daemon-reload

[root@k8s-master01 ~]# systemctl enable --now kubelet此时系统日志/var/log/messages显示只有如下信息为正常

Unable to update cni config: no networks found in /etc/cni/net.d

If the log shows many errors, or a large number of errors you cannot interpret, the kubelet configuration is wrong and needs to be checked.
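To separate the expected CNI message from anything else kubelet logs, a small filter can help. This is a sketch only: the function name unexpected_kubelet_lines is hypothetical, and it assumes kubelet entries in the log contain the word "kubelet".

```shell
# Read log lines on stdin and print the kubelet lines that are NOT the
# expected "no networks found" CNI message. Hypothetical helper.
unexpected_kubelet_lines() {
  grep 'kubelet' | grep -v 'no networks found in /etc/cni/net.d' || true
}

# Usage on a node:
#   unexpected_kubelet_lines < /var/log/messages | tail -n 20
```

An empty result means only the expected message is present; any remaining lines are the ones worth reading before revisiting the kubelet configuration.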

2.6 Check the cluster status

[root@k8s-master01 ~]# kubectl get node

NAME           STATUS     ROLES    AGE   VERSION
k8s-master01   NotReady   <none>   36s   v1.23.0
k8s-master02   NotReady   <none>   35s   v1.23.0
k8s-node01     NotReady   <none>   36s   v1.23.0
k8s-node02     NotReady   <none>   35s   v1.23.0

3. Install kube-proxy

# Note: if this is not a high-availability cluster, change 192.168.126.200:8443 to the address of master01, and change 8443 to the apiserver port (6443 by default).
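The choice between the two endpoints can be captured in one place so that the later kubectl config commands stay unchanged. This is a minimal sketch: apiserver_url is a hypothetical helper, and the ports are the ones named in the note above (8443 with the VIP, 6443 as the apiserver default).

```shell
# Build the --server URL for kube-proxy.kubeconfig. Hypothetical helper.
#   $1: "ha" to use the VIP endpoint, anything else for a single master
#   $2: host or IP (the VIP for HA, otherwise the master01 address)
apiserver_url() (
  mode="$1"
  host="$2"
  if [ "$mode" = "ha" ]; then
    port=8443   # port used together with the VIP in this guide
  else
    port=6443   # kube-apiserver default secure port
  fi
  echo "https://${host}:${port}"
)

# Usage:
#   SERVER=$(apiserver_url ha 192.168.126.200)
#   kubectl config set-cluster kubernetes --server="$SERVER" ...
```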

3.1 Run the following steps on Master01 only

[root@k8s-master01 ~]# cd /opt/k8s-ha-install/

[root@k8s-master01 k8s-ha-install]# kubectl -n kube-system create serviceaccount kube-proxy

serviceaccount/kube-proxy created

[root@k8s-master01 k8s-ha-install]# kubectl create clusterrolebinding system:kube-proxy --clusterrole system:node-proxier --serviceaccount kube-system:kube-proxy

clusterrolebinding.rbac.authorization.k8s.io/system:kube-proxy created

[root@k8s-master01 k8s-ha-install]# SECRET=$(kubectl -n kube-system get sa/kube-proxy --output=jsonpath='{.secrets[0].name}')

[root@k8s-master01 k8s-ha-install]# JWT_TOKEN=$(kubectl -n kube-system get secret/$SECRET --output=jsonpath='{.data.token}' | base64 -d)

[root@k8s-master01 k8s-ha-install]# PKI_DIR=/etc/kubernetes/pki

[root@k8s-master01 k8s-ha-install]# K8S_DIR=/etc/kubernetes

[root@k8s-master01 k8s-ha-install]# kubectl config set-cluster kubernetes --certificate-authority=/etc/kubernetes/pki/ca.pem --embed-certs=true --server=https://192.168.126.200:8443 --kubeconfig=${K8S_DIR}/kube-proxy.kubeconfig

Cluster "kubernetes" set.

[root@k8s-master01 k8s-ha-install]# kubectl config set-credentials kubernetes --token=${JWT_TOKEN} --kubeconfig=/etc/kubernetes/kube-proxy.kubeconfig

User "kubernetes" set.

[root@k8s-master01 k8s-ha-install]# kubectl config set-context kubernetes --cluster=kubernetes --user=kubernetes --kubeconfig=/etc/kubernetes/kube-proxy.kubeconfig

Context "kubernetes" created.

[root@k8s-master01 k8s-ha-install]# kubectl config use-context kubernetes --kubeconfig=/etc/kubernetes/kube-proxy.kubeconfig

Switched to context "kubernetes".

3.2 Send the kubeconfig to the other nodes

[root@k8s-master01 k8s-ha-install]# for NODE in k8s-master02; do
    scp /etc/kubernetes/kube-proxy.kubeconfig $NODE:/etc/kubernetes/kube-proxy.kubeconfig
done

kube-proxy.kubeconfig 100% 3123 1.1MB/s 00:00

[root@k8s-master01 k8s-ha-install]# for NODE in k8s-node01 k8s-node02; do
    scp /etc/kubernetes/kube-proxy.kubeconfig $NODE:/etc/kubernetes/kube-proxy.kubeconfig
    scp kube-proxy/kube-proxy.conf $NODE:/etc/kubernetes/kube-proxy.conf
    scp kube-proxy/kube-proxy.service $NODE:/usr/lib/systemd/system/kube-proxy.service
done

kube-proxy.kubeconfig 100% 3123 1.3MB/s 00:00

kube-proxy.kubeconfig 100% 3123 325.9KB/s 00:00

3.3 Add the kube-proxy configuration and service files on all nodes

[root@k8s-master01 k8s-ha-install]# vim /usr/lib/systemd/system/kube-proxy.service

[Unit]

Description=Kubernetes Kube Proxy

Documentation=https://github.com/kubernetes/kubernetes

After=network.target

[Service]

ExecStart=/usr/local/bin/kube-proxy \

--config=/etc/kubernetes/kube-proxy.yaml \

--v=2

Restart=always

RestartSec=10s

[Install]

WantedBy=multi-user.target
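Step 3.2 copies a file named kube-proxy.conf, while this unit reads /etc/kubernetes/kube-proxy.yaml, so it can be worth confirming on each node that the file the unit points at actually exists before starting the service. The sketch below is an illustration only; unit_config_path is a hypothetical helper that reads the path from the --config= flag.

```shell
# Print the path passed to --config= in a systemd unit file.
# Hypothetical helper; prints nothing if the flag is absent.
unit_config_path() {
  sed -n 's/.*--config=\([^ ]*\).*/\1/p' "$1" | head -n 1
}

# Usage on a node:
#   cfg=$(unit_config_path /usr/lib/systemd/system/kube-proxy.service)
#   [ -f "$cfg" ] || echo "missing kube-proxy config: $cfg"
```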

3.4 Create the kube-proxy configuration

If you changed the cluster's Pod CIDR, set clusterCIDR in kube-proxy.yaml to your own Pod CIDR.
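Once the file exists, the clusterCIDR value can also be changed non-interactively, which is convenient when the same edit has to be repeated on every node. This is a sketch under assumptions: set_cluster_cidr is a hypothetical helper, it relies on GNU sed's -i option (as on CentOS 7), and it expects clusterCIDR to be a top-level key at the start of a line.

```shell
# Rewrite the top-level clusterCIDR key of a kube-proxy config file in place.
# Hypothetical helper.
#   $1: path to the config file
#   $2: new Pod CIDR
set_cluster_cidr() {
  sed -i "s#^clusterCIDR:.*#clusterCIDR: $2#" "$1"
}

# Usage:
#   set_cluster_cidr /etc/kubernetes/kube-proxy.yaml 172.16.0.0/12
#   grep '^clusterCIDR' /etc/kubernetes/kube-proxy.yaml
```

The "#" delimiter is used for the same reason as in the Calico sed command in this guide: the CIDR itself contains a "/".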

[root@k8s-master01 k8s-ha-install]# vim /etc/kubernetes/kube-proxy.yaml

apiVersion: kubeproxy.config.k8s.io/v1alpha1
bindAddress: 0.0.0.0
clientConnection:
  acceptContentTypes: ""
  burst: 10
  contentType: application/vnd.kubernetes.protobuf
  kubeconfig: /etc/kubernetes/kube-proxy.kubeconfig
  qps: 5
clusterCIDR: 172.16.0.0/12
configSyncPeriod: 15m0s
conntrack:
  max: null
  maxPerCore: 32768
  min: 131072
  tcpCloseWaitTimeout: 1h0m0s
  tcpEstablishedTimeout: 24h0m0s
enableProfiling: false
healthzBindAddress: 0.0.0.0:10256
hostnameOverride: ""
iptables:
  masqueradeAll: false
  masqueradeBit: 14
  minSyncPeriod: 0s
  syncPeriod: 30s
ipvs:
  masqueradeAll: true
  minSyncPeriod: 5s
  scheduler: "rr"
  syncPeriod: 30s
kind: KubeProxyConfiguration
metricsBindAddress: 127.0.0.1:10249
mode: "ipvs"
nodePortAddresses: null
oomScoreAdj: -999
portRange: ""
udpIdleTimeout: 250ms

3.5 Start kube-proxy on all nodes

[root@k8s-master01 k8s-ha-install]# systemctl daemon-reload

[root@k8s-master01 k8s-ha-install]# systemctl enable --now kube-proxy

Created symlink from /etc/systemd/system/multi-user.target.wants/kube-proxy.service to /usr/lib/systemd/system/kube-proxy.service.

[root@k8s-master01 k8s-ha-install]# systemctl status kube-proxy

● kube-proxy.service - Kubernetes Kube Proxy

Loaded: loaded (/usr/lib/systemd/system/kube-proxy.service; enabled; vendor preset: disabled)

Active: active (running) since 四 2022-06-30 17:29:20 CST; 9s ago

Docs: https://github.com/kubernetes/kubernetes

Main PID: 16554 (kube-proxy)

Tasks: 6

Memory: 15.2M

CGroup: /system.slice/kube-proxy.service

└─16554 /usr/local/bin/kube-proxy --config=/etc/kubernetes/kube-proxy.yaml --v=2

6月 30 17:29:21 k8s-master01 kube-proxy[16554]: I0630 17:29:21.506305 16554 config.go:317] "Starting service config controller"

6月 30 17:29:21 k8s-master01 kube-proxy[16554]: I0630 17:29:21.506346 16554 shared_informer.go:240] Waiting for caches to sync for service config

6月 30 17:29:21 k8s-master01 kube-proxy[16554]: I0630 17:29:21.506437 16554 config.go:226] "Starting endpoint slice config controller"

6月 30 17:29:21 k8s-master01 kube-proxy[16554]: I0630 17:29:21.506454 16554 shared_informer.go:240] Waiting for caches to sync for endpoint slice config

6月 30 17:29:21 k8s-master01 kube-proxy[16554]: I0630 17:29:21.533005 16554 service.go:304] "Service updated ports" service="default/kubernetes" portCount=1

6月 30 17:29:21 k8s-master01 kube-proxy[16554]: I0630 17:29:21.606501 16554 shared_informer.go:247] Caches are synced for service config

6月 30 17:29:21 k8s-master01 kube-proxy[16554]: I0630 17:29:21.606618 16554 proxier.go:1000] "Not syncing ipvs rules until Services and Endpoints have been received from master"

6月 30 17:29:21 k8s-master01 kube-proxy[16554]: I0630 17:29:21.606677 16554 proxier.go:1000] "Not syncing ipvs rules until Services and Endpoints have been received from master"

6月 30 17:29:21 k8s-master01 kube-proxy[16554]: I0630 17:29:21.606742 16554 shared_informer.go:247] Caches are synced for endpoint slice config

6月 30 17:29:21 k8s-master01 kube-proxy[16554]: I0630 17:29:21.606839 16554 service.go:419] "Adding new service port" portName="default/kubernetes:https" servicePort="192.168.0.1:443/TCP"

Hint: Some lines were ellipsized, use -l to show in full.
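Beyond systemctl status, it can be useful to confirm that kube-proxy really came up in ipvs mode, since it may fall back to iptables when the IPVS kernel modules are not loaded. The following is a sketch: proxy_mode_matches is a hypothetical helper, and the usage lines assume the metrics address 127.0.0.1:10249 from the configuration above and the ipvsadm tool installed earlier in this guide.

```shell
# Compare the mode reported by a running kube-proxy with the expected one.
# Hypothetical helper.
#   $1: reported mode, e.g. the output of: curl -s 127.0.0.1:10249/proxyMode
#   $2: expected mode (defaults to ipvs)
proxy_mode_matches() {
  [ "$1" = "${2:-ipvs}" ]
}

# Usage on a node:
#   if proxy_mode_matches "$(curl -s 127.0.0.1:10249/proxyMode)"; then
#     echo "kube-proxy is running in ipvs mode"
#   fi
#   ipvsadm -Ln    # list the virtual servers kube-proxy has programmed
```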

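XII. Install Calico

1. Introduction to Calico

Calico is an open-source networking and network security solution that connects containers, virtual machines, and hosts. It can be used on PaaS and IaaS platforms such as Kubernetes, OpenShift, Docker EE, and OpenStack.

2. Install the officially recommended version (recommended)

Run the following steps on master01 only:

2.1 Change the Pod CIDR in calico.yaml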

[root@k8s-master01 ~]# cd /opt/k8s-ha-install/calico/

[root@k8s-master01 calico]# ls

calico-etcd.yaml calico.yaml

[root@k8s-master01 calico]# cat calico.yaml

# Change Calico's network CIDR: replace the POD_CIDR placeholder with your own Pod CIDR

[root@k8s-master01 calico]# sed -i "s#POD_CIDR#172.16.0.0/12#g" calico.yaml

Check that the CIDR is now your own Pod CIDR:

[root@k8s-master01 calico]# grep "IPV4POOL_CIDR" calico.yaml -A 1

- name: CALICO_IPV4POOL_CIDR

value: "172.16.0.0/12"

2.2 Install Calico

[root@k8s-master01 calico]# kubectl apply -f calico.yaml

configmap/calico-config created

customresourcedefinition.apiextensions.k8s.io/bgpconfigurations.crd.projectcalico.org created

customresourcedefinition.apiextensions.k8s.io/bgppeers.crd.projectcalico.org created

customresourcedefinition.apiextensions.k8s.io/blockaffinities.crd.projectcalico.org created

customresourcedefinition.apiextensions.k8s.io/caliconodestatuses.crd.projectcalico.org created

customresourcedefinition.apiextensions.k8s.io/clusterinformations.crd.projectcalico.org created

customresourcedefinition.apiextensions.k8s.io/felixconfigurations.crd.projectcalico.org created

customresourcedefinition.apiextensions.k8s.io/globalnetworkpolicies.crd.projectcalico.org created

customresourcedefinition.apiextensions.k8s.io/globalnetworksets.crd.projectcalico.org created

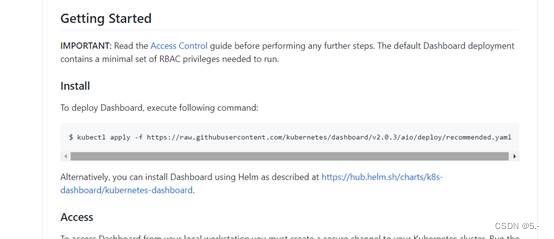

customresourcedefinition.apiextensions.k8s.io/hostendpoints.crd.projectcalico.org created