Manually Installing a Highly Available Kubernetes Cluster from Binaries (v1.23.4)

目录

一、环境信息

1.1、集群信息

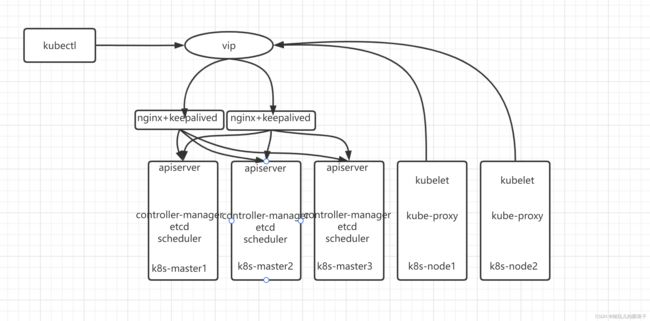

1.2 架构图

二、初始化准备

2.1 、设置主机名并添加HOSTS

2.2 配置免密登录

2.3 关闭selinux

2.4 关闭swap

2.5 关闭firewalld

2.6 修改内核参数

2.7 配置yum repo源

2.8 配置时间同步

2.9 安装Iptables

2.10 开启ipvs

2.11 安装编译环境和常用软件

2.12 安装docker

三、安装组件

3.1 创建CA 证书和密钥

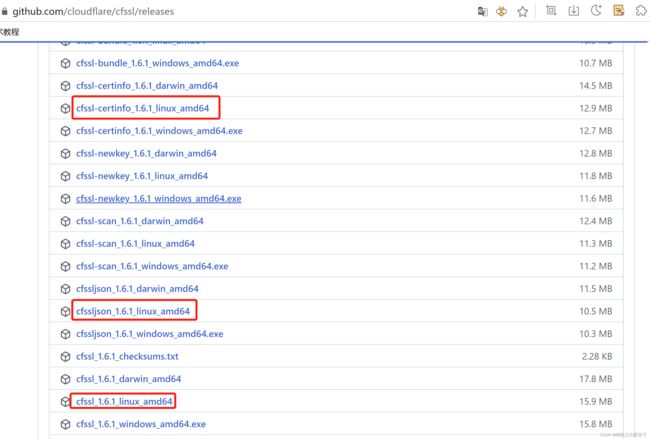

3.1.1 安装 CFSSL证书签发工具

3.1.2 创建CA

3.1.3 分发证书

3.2 部署高可用etcd集群

3.2.1 下载etcd

3.2.2 创建TLS 密钥和证书

3.2.3 创建配置文件

3.2.4 创建systemd unit文件

3.2.5 启动etcd

3.2.6 验证集群

3.3 安装K8s组件

3.3.1 下载二进制包

3.3.2 部署apiserver

3.3.3 安装kubectl工具

3.3.4 部署kube-controller-manager

3.3.5 部署kube-scheduler组件

3.3.6 安装kubelet

3.3.7 部署kube-proxy

3.3.8 安装calico网络插件

3.3.9 安装dns

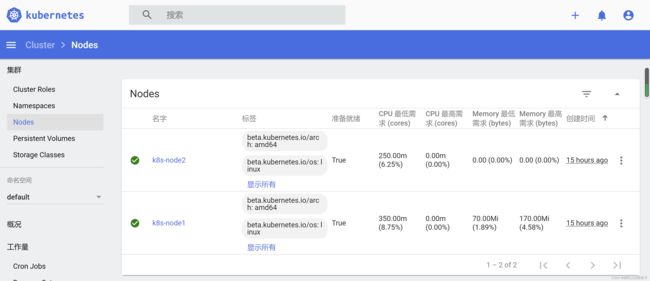

四、安装dashboard

4.1 下载yaml文件

4.2 部署dashboard

4.3 访问

五、配置高可用

5.1 安装nginx和keepalived

5.2 启动并验证

5.3 修改所有k8s指向apiserver的地址到vip

1. Environment

1.1 Cluster information

| Hostname | Role | IP | Components |
|---|---|---|---|
| k8s-master1 | master | 192.168.3.61 | nginx, keepalived, apiserver, controller-manager, etcd, scheduler |
| k8s-master2 | master | 192.168.3.62 | nginx, keepalived, apiserver, controller-manager, etcd, scheduler |
| k8s-master3 | master | 192.168.3.63 | apiserver, controller-manager, etcd, scheduler |
| k8s-node1 | node | 192.168.3.64 | kubelet, kube-proxy |
| k8s-node2 | node | 192.168.3.65 | kubelet, kube-proxy |
| vip | vip | 192.168.3.60 | |

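1.2 Architecture diagram

It's a rough diagram, but it will do.

2. Preparation

First, prepare the machines listed in 1.1 and configure their IP addresses.

2.1 Set hostnames and add hosts entries

Set each machine's hostname according to the table in 1.1 (k8s-master1 is shown here), then add the cluster entries to /etc/hosts: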

[root@localhost ~]# hostnamectl set-hostname k8s-master1

[root@localhost ~]#

[root@localhost ~]# cat /etc/hosts

192.168.3.61 k8s-master1

192.168.3.62 k8s-master2

192.168.3.63 k8s-master3

192.168.3.64 k8s-node1

192.168.3.65 k8s-node2
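Instead of editing /etc/hosts by hand on each of the five machines, you can append the entries with a small script. This is a minimal sketch that assumes the IP/hostname pairs from table 1.1. The function name `add_cluster_hosts` is hypothetical. It skips hostnames that are already present, so you can safely run it more than once.

```shell
#!/usr/bin/env bash
# Hypothetical helper: append the cluster's IP/hostname pairs to a hosts file,
# skipping any hostname that is already listed (safe to re-run).
add_cluster_hosts() {
  local file="${1:-/etc/hosts}"
  local entry name
  for entry in \
    "192.168.3.61 k8s-master1" \
    "192.168.3.62 k8s-master2" \
    "192.168.3.63 k8s-master3" \
    "192.168.3.64 k8s-node1" \
    "192.168.3.65 k8s-node2"; do
    name="${entry#* }"   # hostname: everything after the first space
    # -w matches whole words only, so k8s-node1 does not match k8s-node10
    if ! grep -qw -- "$name" "$file" 2>/dev/null; then
      printf '%s\n' "$entry" >> "$file"
    fi
  done
}
```

Run `add_cluster_hosts` (the default target is /etc/hosts) on every machine, after running `hostnamectl set-hostname` with that machine's own name.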

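2.2 Configure passwordless SSH login

Allow the three master nodes to log in to every machine without a password. Run the following on each of the three masters: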

[root@localhost ~]# ssh-keygen -t rsa

Generating public/private rsa key pair.

Enter file in which to save the key (/root/.ssh/id_rsa):

Created directory '/root/.ssh'.

Enter passphrase (empty for no passphrase):

Enter same passphrase again:

Your identification has been saved in /root/.ssh/id_rsa.

Your public key has been saved in /root/.ssh/id_rsa.pub.

The key fingerprint is:

SHA256:Rt+CFiJmUtfV6LmHQQ9LW7tJMbpxGql1XYti7q+jeNY root@k8s-node2

The key's randomart image is:

+---[RSA 3072]----+

| . .. ..o |

| . . . = = .|

| . + . o+ X =...|

| + . o +%+*... |

| So=%oo |

| o. =o+ |

| o. |

| .o E |

| .o...+. |

+----[SHA256]-----+

[root@localhost ~]#

[root@localhost ~]#

[root@localhost ~]# ll

总用量 4

-rw-------. 1 root root 1187 2月 20 15:41 anaconda-ks.cfg

[root@localhost ~]# ssh-copy-id -i .ssh/id_rsa.pub k8s-master1

/usr/bin/ssh-copy-id: INFO: Source of key(s) to be installed: ".ssh/id_rsa.pub"

The authenticity of host 'k8s-master1 (192.168.3.61)' can't be established.

ECDSA key fingerprint is SHA256:/z2wiIxMLuXcbG5132CVezhjJtQf9BEQSM77zSDGzoI.

Are you sure you want to continue connecting (yes/no/[fingerprint])? yes

/usr/bin/ssh-copy-id: INFO: attempting to log in with the new key(s), to filter out any that are already installed

/usr/bin/ssh-copy-id: INFO: 1 key(s) remain to be installed -- if you are prompted now it is to install the new keys

root@k8s-master1's password:

Number of key(s) added: 1

Now try logging into the machine, with: "ssh 'k8s-master1'"

and check to make sure that only the key(s) you wanted were added.

[root@localhost ~]# ssh-copy-id -i .ssh/id_rsa.pub k8s-master2

/usr/bin/ssh-copy-id: INFO: Source of key(s) to be installed: ".ssh/id_rsa.pub"

The authenticity of host 'k8s-master2 (192.168.3.62)' can't be established.

ECDSA key fingerprint is SHA256:/z2wiIxMLuXcbG5132CVezhjJtQf9BEQSM77zSDGzoI.

Are you sure you want to continue connecting (yes/no/[fingerprint])? yes

/usr/bin/ssh-copy-id: INFO: attempting to log in with the new key(s), to filter out any that are already installed

/usr/bin/ssh-copy-id: INFO: 1 key(s) remain to be installed -- if you are prompted now it is to install the new keys

root@k8s-master2's password:

Number of key(s) added: 1

Now try logging into the machine, with: "ssh 'k8s-master2'"

and check to make sure that only the key(s) you wanted were added.

[root@localhost ~]# ssh-copy-id -i .ssh/id_rsa.pub k8s-master3

/usr/bin/ssh-copy-id: INFO: Source of key(s) to be installed: ".ssh/id_rsa.pub"

The authenticity of host 'k8s-master3 (192.168.3.63)' can't be established.

ECDSA key fingerprint is SHA256:/z2wiIxMLuXcbG5132CVezhjJtQf9BEQSM77zSDGzoI.

Are you sure you want to continue connecting (yes/no/[fingerprint])? yes

/usr/bin/ssh-copy-id: INFO: attempting to log in with the new key(s), to filter out any that are already installed

/usr/bin/ssh-copy-id: INFO: 1 key(s) remain to be installed -- if you are prompted now it is to install the new keys

root@k8s-master3's password:

Number of key(s) added: 1

Now try logging into the machine, with: "ssh 'k8s-master3'"

and check to make sure that only the key(s) you wanted were added.

[root@localhost ~]# ssh-copy-id -i .ssh/id_rsa.pub k8s-node1

/usr/bin/ssh-copy-id: INFO: Source of key(s) to be installed: ".ssh/id_rsa.pub"

The authenticity of host 'k8s-node1 (192.168.3.64)' can't be established.

ECDSA key fingerprint is SHA256:/z2wiIxMLuXcbG5132CVezhjJtQf9BEQSM77zSDGzoI.

Are you sure you want to continue connecting (yes/no/[fingerprint])? yes

/usr/bin/ssh-copy-id: INFO: attempting to log in with the new key(s), to filter out any that are already installed

/usr/bin/ssh-copy-id: INFO: 1 key(s) remain to be installed -- if you are prompted now it is to install the new keys

root@k8s-node1's password:

Number of key(s) added: 1

Now try logging into the machine, with: "ssh 'k8s-node1'"

and check to make sure that only the key(s) you wanted were added.

[root@localhost ~]# ssh-copy-id -i .ssh/id_rsa.pub k8s-node2

/usr/bin/ssh-copy-id: INFO: Source of key(s) to be installed: ".ssh/id_rsa.pub"

The authenticity of host 'k8s-node2 (192.168.3.65)' can't be established.

ECDSA key fingerprint is SHA256:/z2wiIxMLuXcbG5132CVezhjJtQf9BEQSM77zSDGzoI.

Are you sure you want to continue connecting (yes/no/[fingerprint])? yes

/usr/bin/ssh-copy-id: INFO: attempting to log in with the new key(s), to filter out any that are already installed

/usr/bin/ssh-copy-id: INFO: 1 key(s) remain to be installed -- if you are prompted now it is to install the new keys

root@k8s-node2's password:

Number of key(s) added: 1

Now try logging into the machine, with: "ssh 'k8s-node2'"

and check to make sure that only the key(s) you wanted were added.

[root@localhost ~]# ssh k8s-node2

Last login: Sun Feb 20 18:23:50 2022 from 192.168.3.202

[root@k8s-node2 ~]# exit

注销

Connection to k8s-node2 closed.

[root@localhost ~]#
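The five `ssh-copy-id` calls above can be collapsed into a loop. This sketch assumes the hostnames from 1.1 already resolve through /etc/hosts. `push_ssh_key` and the `DRY_RUN` switch are hypothetical helpers. `ssh-copy-id` still asks once for each host's root password.

```shell
#!/usr/bin/env bash
# Run a command, or only print it when DRY_RUN=1 (hypothetical switch).
run() {
  if [ "${DRY_RUN:-0}" = 1 ]; then
    printf '%s\n' "$*"
  else
    "$@"
  fi
}

# Hypothetical helper: create a key pair without prompts if none exists yet,
# then install the public key on every cluster host.
push_ssh_key() {
  local key="${HOME}/.ssh/id_rsa"
  local host
  if [ ! -f "$key" ]; then
    # -N '' = empty passphrase, -q = quiet
    run ssh-keygen -t rsa -N '' -f "$key" -q
  fi
  for host in k8s-master1 k8s-master2 k8s-master3 k8s-node1 k8s-node2; do
    run ssh-copy-id -i "${key}.pub" "$host"
  done
}
```

Run `push_ssh_key` on each master. `DRY_RUN=1 push_ssh_key` only prints the commands it would run.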

2.3 Disable SELinux

Disable SELinux on all machines:

[root@localhost ~]# vi /etc/sysconfig/selinux

SELINUX=disabled    # change this line to disabled

[root@localhost ~]# setenforce 0

[root@localhost ~]# getenforce    # setenforce 0 switches to Permissive right away; SELINUX=disabled takes full effect after a reboot

Permissive
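You can make the same change without an editor. This sketch uses a hypothetical `disable_selinux_config` function. It edits /etc/selinux/config, which is what /etc/sysconfig/selinux normally links to on CentOS. It avoids the link itself because GNU `sed -i` run on a symlink replaces the link with a regular file.

```shell
#!/usr/bin/env bash
# Hypothetical helper: set SELINUX=disabled persistently by editing the real
# config file rather than the /etc/sysconfig/selinux symlink.
disable_selinux_config() {
  local file="${1:-/etc/selinux/config}"
  # Rewrite only the SELINUX= line; SELINUXTYPE= does not match this pattern
  sed -i 's/^SELINUX=.*/SELINUX=disabled/' "$file"
}
```

Typical use: `disable_selinux_config && setenforce 0`.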

2.4 Disable swap

[root@localhost ~]# swapoff -a

[root@localhost ~]# vi /etc/fstab    # comment out the swap entry so swap stays off after a reboot

#/dev/mapper/cl-swap none swap defaults 0 0

[root@localhost ~]# free -g

total used free shared buff/cache available

Mem: 3 0 2 0 0 3

Swap: 0 0 0

[root@localhost ~]#
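`swapoff -a` only lasts until the next reboot. The fstab edit is what keeps swap off permanently. The following sketch makes that edit without vi (GNU sed assumed). The hypothetical `comment_out_swap` function adds a `#` to the start of every active line that contains a whitespace-delimited `swap` field:

```shell
#!/usr/bin/env bash
# Hypothetical helper: comment out active swap entries in fstab.
comment_out_swap() {
  local file="${1:-/etc/fstab}"
  # Only lines not already starting with '#' are touched, so re-running is safe
  sed -ri 's/^([^#].*[[:space:]]swap[[:space:]].*)$/#\1/' "$file"
}
```

Typical use: `swapoff -a && comment_out_swap`, then confirm with `free -g` that Swap shows 0.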

2.5 Disable firewalld

[root@localhost ~]# systemctl stop firewalld

[root@localhost ~]# systemctl disable firewalld

Removed /etc/systemd/system/multi-user.target.wants/firewalld.service.

Removed /etc/systemd/system/dbus-org.fedoraproject.FirewallD1.service.

2.6 Set kernel parameters

[root@localhost ~]# modprobe br_netfilter

[root@localhost ~]# lsmod |grep br_netfilter

br_netfilter 24576 0

bridge 200704 1 br_netfilter

[root@localhost ~]# cat > /etc/sysctl.d/k8s.conf <<EOF

> net.bridge.bridge-nf-call-ip6tables = 1

> net.bridge.bridge-nf-call-iptables = 1

> net.ipv4.ip_forward = 1

> EOF

[root@localhost ~]# sysctl -p /etc/sysctl.d/k8s.conf

net.bridge.bridge-nf-call-ip6tables = 1

net.bridge.bridge-nf-call-iptables = 1

net.ipv4.ip_forward = 1

[root@localhost ~]#

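Notes

If the br_netfilter module is not loaded (modprobe br_netfilter), sysctl -p /etc/sysctl.d/k8s.conf fails with:

sysctl: cannot stat /proc/sys/net/bridge/bridge-nf-call-ip6tables: No such file or directory

sysctl: cannot stat /proc/sys/net/bridge/bridge-nf-call-iptables: No such file or directory

If net.bridge.bridge-nf-call-iptables is not enabled, docker info on CentOS (once Docker is installed) prints these warnings:

WARNING: bridge-nf-call-iptables is disabled

WARNING: bridge-nf-call-ip6tables is disabled

If net.ipv4.ip_forward = 1 is not set, initializing Kubernetes with kubeadm fails with: [ERROR FileContent--proc-sys-net-ipv4-ip_forward]: .....

net.ipv4.ip_forward controls packet forwarding:

Linux disables packet forwarding by default. Forwarding applies when a host has more than one network interface. A packet arrives on one interface, and the kernel sends it out through another interface based on the packet's destination IP. That interface then passes the packet on according to the routing table. This is normally a router's job. To let a Linux system forward packets like a router, set the kernel parameter net.ipv4.ip_forward, which controls whether forwarding is currently enabled.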
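You can script the checks described above by reading the values straight from /proc/sys. This is a sketch with a hypothetical `check_k8s_sysctls` function. When all three parameters are 1, it prints nothing and returns 0. Otherwise it reports each missing bridge entry (usually a sign that br_netfilter is not loaded) and each parameter that is not enabled.

```shell
#!/usr/bin/env bash
# Hypothetical helper: verify the three kernel parameters set in k8s.conf.
check_k8s_sysctls() {
  local root="${1:-/proc/sys}" key val rc=0
  for key in net/bridge/bridge-nf-call-iptables \
             net/bridge/bridge-nf-call-ip6tables \
             net/ipv4/ip_forward; do
    if [ ! -r "$root/$key" ]; then
      # The net/bridge entries only exist while br_netfilter is loaded
      echo "missing: $key"; rc=1; continue
    fi
    val=$(cat "$root/$key")
    if [ "$val" != 1 ]; then
      echo "not enabled: $key=$val"; rc=1
    fi
  done
  return "$rc"
}
```

Run `check_k8s_sysctls` with no arguments after `sysctl -p /etc/sysctl.d/k8s.conf`.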

2.7 Configure yum repositories

[root@localhost ~]# mkdir /etc/yum.repos.d/bak


[root@localhost ~]# mv /etc/yum.repos.d/CentOS-Linux-* /etc/yum.repos.d/bak/

[root@localhost ~]# curl -o /etc/yum.repos.d/CentOS-Base.repo https://mirrors.aliyun.com/repo/Centos-vault-8.5.2111.repo

% Total % Received % Xferd Average Speed Time Time Time Current

Dload Upload Total Spent Left Speed

100 2495 100 2495 0 0 14339 0 --:--:-- --:--:-- --:--:-- 14257

[root@localhost ~]# yum clean all

0 文件已删除

[root@localhost ~]# yum install lrzsz

CentOS-8.5.2111 - Base - mirrors.aliyun.com 2.4 MB/s | 4.6 MB 00:01

CentOS-8.5.2111 - Extras - mirrors.aliyun.com 22 kB/s | 10 kB 00:00

CentOS-8.5.2111 - AppStream - mirrors.aliyun.com 1.6 MB/s | 8.4 MB 00:05

依赖关系解决。

===============================================================================================================================

软件包 架构 版本 仓库 大小

===============================================================================================================================

安装:

lrzsz x86_64 0.12.20-43.el8 base 84 k

事务概要

===============================================================================================================================

安装 1 软件包

总下载:84 k

安装大小:190 k

确定吗?[y/N]: y

下载软件包:

lrzsz-0.12.20-43.el8.x86_64.rpm 495 kB/s | 84 kB 00:00

-------------------------------------------------------------------------------------------------------------------------------

总计 492 kB/s | 84 kB 00:00

运行事务检查

事务检查成功。

运行事务测试

事务测试成功。

运行事务

准备中 : 1/1

安装 : lrzsz-0.12.20-43.el8.x86_64 1/1

运行脚本: lrzsz-0.12.20-43.el8.x86_64 1/1

验证 : lrzsz-0.12.20-43.el8.x86_64 1/1

已安装:

lrzsz-0.12.20-43.el8.x86_64

完毕!

[root@localhost ~]#

2.8 Configure time synchronization

[root@localhost ~]# yum install -y chrony

上次元数据过期检查:0:03:17 前,执行于 2022年02月20日 星期日 03时29分28秒。

软件包 chrony-4.1-1.el8.x86_64 已安装。

依赖关系解决。

无需任何处理。

完毕!

[root@localhost ~]# vi /etc/chrony.conf

#pool 2.centos.pool.ntp.org iburst    # comment out the default pool

server ntp.aliyun.com iburst

[root@localhost ~]# systemctl restart chronyd.service

[root@localhost ~]# systemctl enable chronyd

[root@localhost ~]# chronyc sources -v

.-- Source mode '^' = server, '=' = peer, '#' = local clock.

/ .- Source state '*' = current best, '+' = combined, '-' = not combined,

| / 'x' = may be in error, '~' = too variable, '?' = unusable.

|| .- xxxx [ yyyy ] +/- zzzz

|| Reachability register (octal) -. | xxxx = adjusted offset,

|| Log2(Polling interval) --. | | yyyy = measured offset,

|| \ | | zzzz = estimated error.

|| | | \

MS Name/IP address Stratum Poll Reach LastRx Last sample

===============================================================================

^* 203.107.6.88 2 6 17 14 +343us[ +831us] +/- 20ms

[root@localhost ~]# date

2022年 02月 20日 星期日 03:35:01 EST

[root@localhost ~]# date -R    # the time zone is wrong (EST)

Sun, 20 Feb 2022 03:36:03 -0500

[root@localhost ~]# timedatectl set-timezone Asia/Shanghai    # set the time zone

[root@localhost ~]# date

2022年 02月 20日 星期日 16:36:30 CST

[root@localhost ~]#
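You can also edit chrony.conf non-interactively. This sketch assumes the stock file uses `pool` lines as shown above. `use_aliyun_ntp` is a hypothetical name. Running it twice does not add a second server line.

```shell
#!/usr/bin/env bash
# Hypothetical helper: comment out the default pool line(s) and add the
# Aliyun NTP server exactly once.
use_aliyun_ntp() {
  local file="${1:-/etc/chrony.conf}"
  sed -i 's/^pool /#pool /' "$file"
  grep -qxF 'server ntp.aliyun.com iburst' "$file" \
    || echo 'server ntp.aliyun.com iburst' >> "$file"
}
```

Typical use: `use_aliyun_ntp && systemctl restart chronyd`, then check with `chronyc sources -v`.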

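2.9 Install iptables

Install iptables-services, flush the rules, then stop and disable the service. Kubernetes creates the rules it needs by itself.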

[root@localhost ~]# yum install iptables-services -y

上次元数据过期检查:0:09:39 前,执行于 2022年02月20日 星期日 16时29分28秒。

依赖关系解决。

===============================================================================================================================

软件包 架构 版本 仓库 大小

===============================================================================================================================

安装:

iptables-services x86_64 1.8.4-20.el8 base 63 k

事务概要

===============================================================================================================================

安装 1 软件包

总下载:63 k

安装大小:20 k

下载软件包:

iptables-services-1.8.4-20.el8.x86_64.rpm 263 kB/s | 63 kB 00:00

-------------------------------------------------------------------------------------------------------------------------------

总计 261 kB/s | 63 kB 00:00

运行事务检查

事务检查成功。

运行事务测试

事务测试成功。

运行事务

准备中 : 1/1

安装 : iptables-services-1.8.4-20.el8.x86_64 1/1

运行脚本: iptables-services-1.8.4-20.el8.x86_64 1/1

验证 : iptables-services-1.8.4-20.el8.x86_64 1/1

已安装:

iptables-services-1.8.4-20.el8.x86_64

完毕!

[root@localhost ~]# service iptables stop && systemctl disable iptables

Redirecting to /bin/systemctl stop iptables.service

[root@localhost ~]# iptables -F

[root@localhost ~]#

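2.10 Enable IPVS

Without IPVS, kube-proxy forwards packets with iptables, which is less efficient, so enabling IPVS is recommended. The modules to load are listed in /etc/sysconfig/modules/ipvs.modules: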

[root@localhost ~]# cat /etc/sysconfig/modules/ipvs.modules

modprobe -- ip_vs

modprobe -- ip_vs_lc

modprobe -- ip_vs_wlc

modprobe -- ip_vs_rr

modprobe -- ip_vs_wrr

modprobe -- ip_vs_lblc

modprobe -- ip_vs_lblcr

modprobe -- ip_vs_dh

modprobe -- ip_vs_sh

modprobe -- ip_vs_nq

modprobe -- ip_vs_sed

modprobe -- ip_vs_ftp

modprobe -- nf_conntrack

[root@localhost ~]# chmod 755 /etc/sysconfig/modules/ipvs.modules

[root@localhost ~]# bash /etc/sysconfig/modules/ipvs.modules

[root@localhost ~]# lsmod | grep ip_vs

ip_vs_ftp 16384 0

ip_vs_sed 16384 0

ip_vs_nq 16384 0

ip_vs_dh 16384 0

ip_vs_lblcr 16384 0

ip_vs_lblc 16384 0

ip_vs_wlc 16384 0

ip_vs_lc 16384 0

ip_vs_sh 16384 0

ip_vs_wrr 16384 0

ip_vs_rr 16384 0

ip_vs 172032 22 ip_vs_wlc,ip_vs_rr,ip_vs_dh,ip_vs_lblcr,ip_vs_sh,ip_vs_nq,ip_vs_lblc,ip_vs_wrr,ip_vs_lc,ip_vs_sed,ip_vs_ftp

nf_nat 45056 2 nft_chain_nat,ip_vs_ftp

nf_conntrack 172032 3 nf_nat,nft_ct,ip_vs

nf_defrag_ipv6 20480 2 nf_conntrack,ip_vs

libcrc32c 16384 5 nf_conntrack,nf_nat,nf_tables,xfs,ip_vs
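`bash /etc/sysconfig/modules/ipvs.modules` loads the modules for the current boot only. Whether the system runs the files in /etc/sysconfig/modules/ automatically at boot depends on the distribution's init scripts. The systemd-native mechanism is /etc/modules-load.d/*.conf, which systemd-modules-load.service reads. This sketch persists both br_netfilter (from 2.6) and the IPVS modules that way. The file name k8s.conf and the function name are assumptions.

```shell
#!/usr/bin/env bash
# Hypothetical helper: list the modules to load at boot, one per line,
# in the format systemd-modules-load expects.
write_k8s_modules_conf() {
  local file="${1:-/etc/modules-load.d/k8s.conf}"
  printf '%s\n' br_netfilter \
    ip_vs ip_vs_lc ip_vs_wlc ip_vs_rr ip_vs_wrr ip_vs_lblc ip_vs_lblcr \
    ip_vs_dh ip_vs_sh ip_vs_nq ip_vs_sed ip_vs_ftp \
    nf_conntrack > "$file"
}
```

The file only takes effect at the next boot. For the current session, the modprobe commands above are enough.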

2.11 Install build tools and common utilities

[root@localhost ~]# yum install wget vim make gcc gcc-c++ ipvsadm telnet net-tools -y

上次元数据过期检查:0:26:42 前,执行于 2022年02月20日 星期日 16时29分28秒。

依赖关系解决。

===============================================================================================================================

软件包 架构 版本 仓库 大小

===============================================================================================================================

安装:

... ...

已升级:

libgcc-8.5.0-4.el8_5.x86_64 libgomp-8.5.0-4.el8_5.x86_64 libstdc++-8.5.0-4.el8_5.x86_64

已安装:

binutils-2.30-108.el8_5.1.x86_64 cpp-8.5.0-4.el8_5.x86_64 gcc-8.5.0-4.el8_5.x86_64

gcc-c++-8.5.0-4.el8_5.x86_64 glibc-devel-2.28-164.el8.x86_64 glibc-headers-2.28-164.el8.x86_64

gpm-libs-1.20.7-17.el8.x86_64 ipvsadm-1.31-1.el8.x86_64 isl-0.16.1-6.el8.x86_64

kernel-headers-4.18.0-348.7.1.el8_5.x86_64 libmetalink-0.1.3-7.el8.x86_64 libmpc-1.1.0-9.1.el8.x86_64

libpkgconf-1.4.2-1.el8.x86_64 libstdc++-devel-8.5.0-4.el8_5.x86_64 libxcrypt-devel-4.1.1-6.el8.x86_64

make-1:4.2.1-10.el8.x86_64 net-tools-2.0-0.52.20160912git.el8.x86_64 pkgconf-1.4.2-1.el8.x86_64

pkgconf-m4-1.4.2-1.el8.noarch pkgconf-pkg-config-1.4.2-1.el8.x86_64 telnet-1:0.17-76.el8.x86_64

vim-common-2:8.0.1763-16.el8.x86_64 vim-enhanced-2:8.0.1763-16.el8.x86_64 vim-filesystem-2:8.0.1763-16.el8.noarch

wget-1.19.5-10.el8.x86_64

完毕!

[root@localhost ~]#

2.12 Install Docker

You can use containerd instead; this guide uses Docker. After installing Docker, configure registry mirrors to speed up image pulls.

[root@localhost ~]# yum install -y yum-utils device-mapper-persistent-data lvm2

上次元数据过期检查:3:09:42 前,执行于 2022年02月20日 星期日 16时29分28秒。

软件包 device-mapper-persistent-data-0.9.0-4.el8.x86_64 已安装。

软件包 lvm2-8:2.03.12-10.el8.x86_64 已安装。

依赖关系解决。

===============================================================================================================================

软件包 架构 版本 仓库 大小

===============================================================================================================================

安装:

yum-utils noarch 4.0.21-3.el8 base 73 k

事务概要

===============================================================================================================================

安装 1 软件包

总下载:73 k

安装大小:22 k

下载软件包:

yum-utils-4.0.21-3.el8.noarch.rpm 333 kB/s | 73 kB 00:00

-------------------------------------------------------------------------------------------------------------------------------

总计 330 kB/s | 73 kB 00:00

运行事务检查

事务检查成功。

运行事务测试

事务测试成功。

运行事务

准备中 : 1/1

安装 : yum-utils-4.0.21-3.el8.noarch 1/1

运行脚本: yum-utils-4.0.21-3.el8.noarch 1/1

验证 : yum-utils-4.0.21-3.el8.noarch 1/1

已安装:

yum-utils-4.0.21-3.el8.noarch

完毕!

[root@localhost ~]# yum-config-manager --add-repo https://mirrors.aliyun.com/docker-ce/linux/centos/docker-ce.repo

添加仓库自:https://mirrors.aliyun.com/docker-ce/linux/centos/docker-ce.repo

[root@localhost ~]# sed -i 's+download.docker.com+mirrors.aliyun.com/docker-ce+' /etc/yum.repos.d/docker-ce.repo

[root@localhost ~]# yum -y install docker-ce

Docker CE Stable - x86_64 43 kB/s | 19 kB 00:00

依赖关系解决。

===============================================================================================================================

软件包 架构 版本 仓库 大小

===============================================================================================================================

安装:

docker-ce x86_64 3:20.10.12-3.el8 docker-ce-stable 22 M

安装依赖关系:

... ...

已安装:

checkpolicy-2.9-1.el8.x86_64 container-selinux-2:2.167.0-1.module_el8.5.0+911+f19012f9.noarch

containerd.io-1.4.12-3.1.el8.x86_64 docker-ce-3:20.10.12-3.el8.x86_64

docker-ce-cli-1:20.10.12-3.el8.x86_64 docker-ce-rootless-extras-20.10.12-3.el8.x86_64

docker-scan-plugin-0.12.0-3.el8.x86_64 fuse-overlayfs-1.7.1-1.module_el8.5.0+890+6b136101.x86_64

fuse3-3.2.1-12.el8.x86_64 fuse3-libs-3.2.1-12.el8.x86_64

libcgroup-0.41-19.el8.x86_64 libslirp-4.4.0-1.module_el8.5.0+890+6b136101.x86_64

policycoreutils-python-utils-2.9-16.el8.noarch python3-audit-3.0-0.17.20191104git1c2f876.el8.x86_64

python3-libsemanage-2.9-6.el8.x86_64 python3-policycoreutils-2.9-16.el8.noarch

python3-setools-4.3.0-2.el8.x86_64 slirp4netns-1.1.8-1.module_el8.5.0+890+6b136101.x86_64

完毕!

[root@localhost ~]#

[root@localhost ~]# mkdir /etc/docker

[root@localhost ~]# vim /etc/docker/daemon.json

{

"registry-mirrors":["https://registry.docker-cn.com","https://docker.mirrors.ustc.edu.cn","http://hub-mirror.c.163.com"],

"exec-opts": ["native.cgroupdriver=systemd"]

}

[root@localhost ~]# systemctl daemon-reload

[root@localhost ~]# systemctl restart docker

[root@localhost ~]# systemctl enable docker

[root@localhost ~]# docker version

Client: Docker Engine - Community

Version: 20.10.12

API version: 1.41

Go version: go1.16.12

Git commit: e91ed57

Built: Mon Dec 13 11:45:22 2021

OS/Arch: linux/amd64

Context: default

Experimental: true

Server: Docker Engine - Community

Engine:

Version: 20.10.12

API version: 1.41 (minimum version 1.12)

Go version: go1.16.12

Git commit: 459d0df

Built: Mon Dec 13 11:43:44 2021

OS/Arch: linux/amd64

Experimental: false

containerd:

Version: 1.4.12

GitCommit: 7b11cfaabd73bb80907dd23182b9347b4245eb5d

runc:

Version: 1.0.2

GitCommit: v1.0.2-0-g52b36a2

docker-init:

Version: 0.19.0

GitCommit: de40ad0

[root@localhost ~]# docker images

REPOSITORY TAG IMAGE ID CREATED SIZE

[root@localhost ~]# docker pull nginx

Using default tag: latest

latest: Pulling from library/nginx

a2abf6c4d29d: Pull complete

a9edb18cadd1: Pull complete

589b7251471a: Pull complete

186b1aaa4aa6: Pull complete

b4df32aa5a72: Pull complete

a0bcbecc962e: Pull complete

Digest: sha256:0d17b565c37bcbd895e9d92315a05c1c3c9a29f762b011a10c54a66cd53c9b31

Status: Downloaded newer image for nginx:latest

docker.io/library/nginx:latest

[root@localhost ~]# docker images

REPOSITORY TAG IMAGE ID CREATED SIZE

nginx latest 605c77e624dd 7 weeks ago 141MB

[root@localhost ~]#
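You can write the daemon.json above without an editor. The `native.cgroupdriver=systemd` option matters because the kubelet and the container runtime must use the same cgroup driver. This sketch assumes the kubelet is later configured with the systemd driver. `write_docker_daemon_json` is a hypothetical helper that reproduces the file shown above.

```shell
#!/usr/bin/env bash
# Hypothetical helper: write Docker's daemon.json with registry mirrors and
# the systemd cgroup driver.
write_docker_daemon_json() {
  local file="${1:-/etc/docker/daemon.json}"
  mkdir -p "$(dirname "$file")"
  cat > "$file" <<'EOF'
{
  "registry-mirrors": ["https://registry.docker-cn.com", "https://docker.mirrors.ustc.edu.cn", "http://hub-mirror.c.163.com"],
  "exec-opts": ["native.cgroupdriver=systemd"]
}
EOF
}
```

After `systemctl restart docker`, `docker info --format '{{.CgroupDriver}}'` should print `systemd`.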

3. Installing components

3.1 Create the CA certificate and key

3.1.1 Install the CFSSL certificate tools

Download the latest release from GitHub:

https://github.com/cloudflare/cfssl/releases

[root@k8s-master1 ~]# ll cfssl*

-rw-r--r-- 1 root root 16659824 2月 21 09:12 cfssl_1.6.1_linux_amd64

-rw-r--r-- 1 root root 13502544 2月 21 09:12 cfssl-certinfo_1.6.1_linux_amd64

-rw-r--r-- 1 root root 11029744 2月 21 09:12 cfssljson_1.6.1_linux_amd64

[root@k8s-master1 ~]# chmod +x cfssl*

[root@k8s-master1 ~]# cp cfssl_1.6.1_linux_amd64 /usr/local/bin/cfssl

[root@k8s-master1 ~]# cp cfssl-certinfo_1.6.1_linux_amd64 /usr/local/bin/cfssl-certinfo

[root@k8s-master1 ~]# cp cfssljson_1.6.1_linux_amd64 /usr/local/bin/cfssljson

[root@k8s-master1 ~]#
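You can also fetch the three binaries directly. This sketch assumes the GitHub release assets keep the names shown in the listing above (`<tool>_<version>_linux_amd64`). `cfssl_url` and `install_cfssl` are hypothetical helpers.

```shell
#!/usr/bin/env bash
CFSSL_VERSION="${CFSSL_VERSION:-1.6.1}"

# Hypothetical helper: build the release-asset URL for one tool
# ($1 = cfssl, cfssljson or cfssl-certinfo).
cfssl_url() {
  printf 'https://github.com/cloudflare/cfssl/releases/download/v%s/%s_%s_linux_amd64\n' \
    "$CFSSL_VERSION" "$1" "$CFSSL_VERSION"
}

# Hypothetical helper: download all three tools into a bin directory.
install_cfssl() {
  local dest="${1:-/usr/local/bin}" tool
  for tool in cfssl cfssljson cfssl-certinfo; do
    # -f: fail on HTTP errors, -L: follow GitHub's redirect to the asset
    curl -fL -o "$dest/$tool" "$(cfssl_url "$tool")" || return 1
    chmod +x "$dest/$tool"
  done
}
```

Typical use: `install_cfssl /usr/local/bin`, then `cfssl version` to confirm.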

3.1.2 Create the CA

[root@k8s-master1 ~]# cfssl print-defaults config > ca-config.json

[root@k8s-master1 ~]# cfssl print-defaults csr > ca-csr.json

[root@k8s-master1 ~]# vim ca-config.json

[root@k8s-master1 ~]# cat ca-config.json

{

"signing": {

"default": {

"expiry": "87600h"

},

"profiles": {

"kubernetes": {

"expiry": "87600h",

"usages": [

"signing",

"key encipherment",

"server auth",

"client auth"

]

}

}

}

}

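ca-config.json: you can define several profiles, each with its own expiry time, usages and other parameters. When signing a certificate, you then select one profile by name (for example, -profile=kubernetes below). In the kubernetes profile, an expiry of 87600h is about 10 years, and the usages mean:

- signing: certificates signed with this profile may be used for digital signatures. (ca.pem itself gets CA=TRUE because it is generated with -initca.)

- server auth: TLS servers can present certificates signed with this profile; clients verify them against this CA.

- client auth: TLS clients can present certificates signed with this profile; servers verify them against this CA.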
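The expiry values above are given in hours. 87600h works out to 3650 days, roughly 10 years if leap days are ignored. Here is a tiny sketch of the arithmetic with hypothetical helper names, in case you want a different expiry:

```shell
#!/usr/bin/env bash
# Hypothetical helpers: convert an hour-based expiry such as "87600h" into
# days and whole 365-day years (leap days ignored).
expiry_to_days() {
  local hours="${1%h}"          # strip the trailing "h"
  echo $(( hours / 24 ))
}

expiry_to_years() {
  echo $(( $(expiry_to_days "$1") / 365 ))
}
```

To confirm the actual validity of the generated CA, run `openssl x509 -in ca.pem -noout -dates`, which prints its notBefore/notAfter dates.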

[root@k8s-master1 ~]# vim ca-csr.json

[root@k8s-master1 ~]# cat ca-csr.json

{

"CN": "kubernetes",

"key": {

"algo": "rsa",

"size": 2048

},

"names": [

{

"C": "CN",

"ST": "Beijing",

"L": "Beijing",

"O": "k8s",

"OU": "system"

}

],

"ca": {

"expiry": "87600h"

}

}

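CN (Common Name): kube-apiserver extracts this field from a client certificate and uses it as the request's user name (User Name). Browsers historically used it to validate a website's identity; modern clients check the Subject Alternative Name list instead. O (Organization): kube-apiserver extracts this field and uses it as the group (Group) the requesting user belongs to.

Generate the CA certificate and private key: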

[root@k8s-master1 ~]# cfssl gencert -initca ca-csr.json | cfssljson -bare ca

2022/02/21 09:47:21 [INFO] generating a new CA key and certificate from CSR

2022/02/21 09:47:21 [INFO] generate received request

2022/02/21 09:47:21 [INFO] received CSR

2022/02/21 09:47:21 [INFO] generating key: rsa-2048

2022/02/21 09:47:22 [INFO] encoded CSR

2022/02/21 09:47:22 [INFO] signed certificate with serial number 562969161462549692386669206897984170659799392725

[root@k8s-master1 ~]# ll ca*

-rw-r--r-- 1 root root 387 2月 21 09:20 ca-config.json

-rw-r--r-- 1 root root 1045 2月 21 09:47 ca.csr

-rw-r--r-- 1 root root 257 2月 21 09:45 ca-csr.json

-rw------- 1 root root 1679 2月 21 09:47 ca-key.pem

-rw-r--r-- 1 root root 1310 2月 21 09:47 ca.pem

3.1.3 Distribute the certificates

Copy the generated CA certificate, key and configuration files to /etc/kubernetes/ssl on every machine:

[root@k8s-master1 ~]# mkdir -p /etc/kubernetes/ssl

[root@k8s-master1 ~]# cp ca* /etc/kubernetes/ssl

[root@k8s-master1 ~]# ssh k8s-master2 "mkdir -p /etc/kubernetes/ssl"

[root@k8s-master1 ~]# ssh k8s-master3 "mkdir -p /etc/kubernetes/ssl"

[root@k8s-master1 ~]# ssh k8s-node1 "mkdir -p /etc/kubernetes/ssl"

[root@k8s-master1 ~]# ssh k8s-node2 "mkdir -p /etc/kubernetes/ssl"

[root@k8s-master1 ~]# scp ca* k8s-master2:/etc/kubernetes/ssl/

ca-config.json 100% 387 316.3KB/s 00:00

ca.csr 100% 1045 1.3MB/s 00:00

ca-csr.json 100% 257 399.6KB/s 00:00

ca-key.pem 100% 1679 2.6MB/s 00:00

ca.pem 100% 1310 2.4MB/s 00:00

[root@k8s-master1 ~]# scp ca* k8s-master3:/etc/kubernetes/ssl/

ca-config.json 100% 387 334.4KB/s 00:00

ca.csr 100% 1045 1.4MB/s 00:00

ca-csr.json 100% 257 286.7KB/s 00:00

ca-key.pem 100% 1679 1.9MB/s 00:00

ca.pem 100% 1310 2.1MB/s 00:00

[root@k8s-master1 ~]# scp ca* k8s-node1:/etc/kubernetes/ssl/

ca-config.json 100% 387 365.7KB/s 00:00

ca.csr 100% 1045 1.1MB/s 00:00

ca-csr.json 100% 257 379.1KB/s 00:00

ca-key.pem 100% 1679 2.1MB/s 00:00

ca.pem 100% 1310 1.8MB/s 00:00

[root@k8s-master1 ~]# scp ca* k8s-node2:/etc/kubernetes/ssl/

ca-config.json 100% 387 416.2KB/s 00:00

ca.csr 100% 1045 1.6MB/s 00:00

ca-csr.json 100% 257 368.6KB/s 00:00

ca-key.pem 100% 1679 2.5MB/s 00:00

ca.pem 100% 1310 2.0MB/s 00:00

[root@k8s-master1 ~]#
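The mkdir/scp pairs above repeat the same pattern for each host, so you can run them in a loop. This sketch mirrors those manual steps and copies the same five files, including ca-key.pem. `distribute_ca` and `DRY_RUN` are hypothetical. Run it from the directory that holds the files.

```shell
#!/usr/bin/env bash
# Run a command, or only print it when DRY_RUN=1 (hypothetical switch).
run() {
  if [ "${DRY_RUN:-0}" = 1 ]; then
    printf '%s\n' "$*"
  else
    "$@"
  fi
}

# Hypothetical helper: create the target directory and copy the CA files
# to every host other than k8s-master1.
distribute_ca() {
  local host
  for host in k8s-master2 k8s-master3 k8s-node1 k8s-node2; do
    run ssh "$host" "mkdir -p /etc/kubernetes/ssl"
    run scp ca-config.json ca.csr ca-csr.json ca-key.pem ca.pem "$host:/etc/kubernetes/ssl/"
  done
}
```

`DRY_RUN=1 distribute_ca` prints the eight commands without running them.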

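3.2 Deploy a highly available etcd cluster

Kubernetes stores all of its data in etcd. Here we deploy a three-node etcd cluster that reuses the three Kubernetes master nodes. The members are named etcd1, etcd2 and etcd3, matching ETCD_NAME in the configuration below:

- etcd1: 192.168.3.61

- etcd2: 192.168.3.62

- etcd3: 192.168.3.63

3.2.1 Download etcd

Download the latest release from GitHub: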

https://github.com/etcd-io/etcd/releases

[root@k8s-master1 ~]# wget https://github.com/coreos/etcd/releases/download/v3.5.2/etcd-v3.5.2-linux-amd64.tar.gz

--2022-02-21 10:04:46-- https://github.com/coreos/etcd/releases/download/v3.5.2/etcd-v3.5.2-linux-amd64.tar.gz

正在解析主机 github.com (github.com)... 20.205.243.166

正在连接 github.com (github.com)|20.205.243.166|:443... 已连接。

已发出 HTTP 请求,正在等待回应... 301 Moved Permanently

位置:https://github.com/etcd-io/etcd/releases/download/v3.5.2/etcd-v3.5.2-linux-amd64.tar.gz [跟随至新的 URL]

--2022-02-21 10:04:47-- https://github.com/etcd-io/etcd/releases/download/v3.5.2/etcd-v3.5.2-linux-amd64.tar.gz

再次使用存在的到 github.com:443 的连接。

已发出 HTTP 请求,正在等待回应... 302 Found

位置:https://objects.githubusercontent.com/github-production-release-asset-2e65be/11225014/f4f31478-23ee-4680-b2f5-b9c9e6c22c10?X-Amz-Algorithm=AWS4-HMAC-SHA256&X-Amz-Credential=AKIAIWNJYAX4CSVEH53A%2F20220221%2Fus-east-1%2Fs3%2Faws4_request&X-Amz-Date=20220221T020447Z&X-Amz-Expires=300&X-Amz-Signature=d95f23da1a648ce9ffbf30b90c3ee8dc084ce808c4c2cdb58834f8579af59568&X-Amz-SignedHeaders=host&actor_id=0&key_id=0&repo_id=11225014&response-content-disposition=attachment%3B%20filename%3Detcd-v3.5.2-linux-amd64.tar.gz&response-content-type=application%2Foctet-stream [跟随至新的 URL]

--2022-02-21 10:04:47-- https://objects.githubusercontent.com/github-production-release-asset-2e65be/11225014/f4f31478-23ee-4680-b2f5-b9c9e6c22c10?X-Amz-Algorithm=AWS4-HMAC-SHA256&X-Amz-Credential=AKIAIWNJYAX4CSVEH53A%2F20220221%2Fus-east-1%2Fs3%2Faws4_request&X-Amz-Date=20220221T020447Z&X-Amz-Expires=300&X-Amz-Signature=d95f23da1a648ce9ffbf30b90c3ee8dc084ce808c4c2cdb58834f8579af59568&X-Amz-SignedHeaders=host&actor_id=0&key_id=0&repo_id=11225014&response-content-disposition=attachment%3B%20filename%3Detcd-v3.5.2-linux-amd64.tar.gz&response-content-type=application%2Foctet-stream

正在解析主机 objects.githubusercontent.com (objects.githubusercontent.com)... 185.199.109.133, 185.199.110.133, 185.199.111.133

正在连接 objects.githubusercontent.com (objects.githubusercontent.com)|185.199.109.133|:443... 已连接。

已发出 HTTP 请求,正在等待回应... 200 OK

长度:19403085 (19M) [application/octet-stream]

正在保存至: “etcd-v3.5.2-linux-amd64.tar.gz”

etcd-v3.5.2-linux-amd64.tar.gz 100%[======================================================>] 18.50M 30.3MB/s 用时 0.6s

2022-02-21 10:04:48 (30.3 MB/s) - 已保存 “etcd-v3.5.2-linux-amd64.tar.gz” [19403085/19403085])

[root@k8s-master1 ~]# tar -xvf etcd-v3.5.2-linux-amd64.tar.gz

etcd-v3.5.2-linux-amd64/

etcd-v3.5.2-linux-amd64/etcdutl

etcd-v3.5.2-linux-amd64/Documentation/

etcd-v3.5.2-linux-amd64/Documentation/dev-guide/

etcd-v3.5.2-linux-amd64/Documentation/dev-guide/apispec/

etcd-v3.5.2-linux-amd64/Documentation/dev-guide/apispec/swagger/

etcd-v3.5.2-linux-amd64/Documentation/dev-guide/apispec/swagger/v3election.swagger.json

etcd-v3.5.2-linux-amd64/Documentation/dev-guide/apispec/swagger/v3lock.swagger.json

etcd-v3.5.2-linux-amd64/Documentation/dev-guide/apispec/swagger/rpc.swagger.json

etcd-v3.5.2-linux-amd64/Documentation/README.md

etcd-v3.5.2-linux-amd64/README-etcdutl.md

etcd-v3.5.2-linux-amd64/README-etcdctl.md

etcd-v3.5.2-linux-amd64/etcdctl

etcd-v3.5.2-linux-amd64/etcd

etcd-v3.5.2-linux-amd64/READMEv2-etcdctl.md

etcd-v3.5.2-linux-amd64/README.md

[root@k8s-master1 ~]# cp -p etcd-v3.5.2-linux-amd64/etcd* /usr/local/bin/

[root@k8s-master1 ~]# scp -r etcd-v3.5.2-linux-amd64/etcd* k8s-master2:/usr/local/bin/

etcd 100% 22MB 159.0MB/s 00:00

etcdctl 100% 17MB 170.0MB/s 00:00

etcdutl 100% 15MB 164.7MB/s 00:00

[root@k8s-master1 ~]# scp -r etcd-v3.5.2-linux-amd64/etcd* k8s-master3:/usr/local/bin/

etcd 100% 22MB 145.2MB/s 00:00

etcdctl 100% 17MB 153.2MB/s 00:00

etcdutl 100% 15MB 143.0MB/s 00:00

[root@k8s-master1 ~]#

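3.2.2 Create the TLS key and certificate

To secure communication, TLS encrypts both the traffic between clients (such as etcdctl) and the etcd cluster and the traffic between etcd members.

Create the etcd certificate signing request. The hosts list contains the addresses that etcd is reached on: 127.0.0.1, the three members and the VIP.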

[root@k8s-master1 ~]# vim etcd-csr.json

[root@k8s-master1 ~]# cat etcd-csr.json

{

"CN": "etcd",

"hosts": [

"127.0.0.1",

"192.168.3.61",

"192.168.3.62",

"192.168.3.63",

"192.168.3.60"

],

"key": {

"algo": "rsa",

"size": 2048

},

"names": [{

"C": "CN",

"ST": "Beijing",

"L": "Beijing",

"O": "k8s",

"OU": "system"

}]

}

[root@k8s-master1 ~]# cfssl gencert -ca=/etc/kubernetes/ssl/ca.pem -ca-key=/etc/kubernetes/ssl/ca-key.pem -config=/etc/kubernetes/ssl/ca-config.json -profile=kubernetes etcd-csr.json | cfssljson -bare etcd

2022/02/21 10:23:03 [INFO] generate received request

2022/02/21 10:23:03 [INFO] received CSR

2022/02/21 10:23:03 [INFO] generating key: rsa-2048

2022/02/21 10:23:03 [INFO] encoded CSR

2022/02/21 10:23:03 [INFO] signed certificate with serial number 540683039793068200585882328120306956582063169708

[root@k8s-master1 ~]#

[root@k8s-master1 ~]# ls etcd*.pem

etcd-key.pem etcd.pem

[root@k8s-master1 ~]# mkdir -p /etc/etcd/ssl

[root@k8s-master1 ~]# mv etcd*.pem /etc/etcd/ssl/

[root@k8s-master1 ~]# cp ca*.pem /etc/etcd/ssl/

[root@k8s-master1 ~]# ssh k8s-master2 mkdir -p /etc/etcd/ssl

[root@k8s-master1 ~]# ssh k8s-master3 mkdir -p /etc/etcd/ssl

[root@k8s-master1 ~]# scp /etc/etcd/ssl/* k8s-master2:/etc/etcd/ssl/

ca-key.pem 100% 1679 1.0MB/s 00:00

ca.pem 100% 1310 1.7MB/s 00:00

etcd-key.pem 100% 1679 1.6MB/s 00:00

etcd.pem 100% 1444 1.5MB/s 00:00

[root@k8s-master1 ~]# scp /etc/etcd/ssl/* k8s-master3:/etc/etcd/ssl/

ca-key.pem 100% 1679 1.1MB/s 00:00

ca.pem 100% 1310 1.5MB/s 00:00

etcd-key.pem 100% 1679 2.0MB/s 00:00

etcd.pem 100% 1444 1.9MB/s 00:00

[root@k8s-master1 ~]#
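Every address in the `hosts` list ends up in the certificate's Subject Alternative Name extension. A connection to a member on an IP that is missing there fails TLS verification. Below is a sketch of a check over `openssl x509 -text` output. The hypothetical `missing_sans` function prints each expected IP that it does not find as an `IP Address:` entry.

```shell
#!/usr/bin/env bash
# Hypothetical helper: read "openssl x509 -noout -text" output on stdin and
# print each IP argument that is not listed as an "IP Address:" SAN entry.
missing_sans() {
  local text ip
  text=$(cat)
  for ip in "$@"; do
    # Split on commas and trim spaces, then require an exact entry so that
    # 192.168.3.6 is not satisfied by 192.168.3.61
    if ! printf '%s\n' "$text" | tr ',' '\n' \
        | sed 's/^[[:space:]]*//; s/[[:space:]]*$//' \
        | grep -qxF "IP Address:$ip"; then
      echo "$ip"
    fi
  done
}
```

Typical use: `openssl x509 -in /etc/etcd/ssl/etcd.pem -noout -text | missing_sans 127.0.0.1 192.168.3.61 192.168.3.62 192.168.3.63 192.168.3.60`. It prints nothing when all addresses are present.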

3.2.3 Create the configuration file

[root@k8s-master1 ~]# cat /etc/etcd/etcd.conf

#[Member]

ETCD_NAME="etcd1"

ETCD_DATA_DIR="/var/lib/etcd/default.etcd"

ETCD_LISTEN_PEER_URLS="https://192.168.3.61:2380"

ETCD_LISTEN_CLIENT_URLS="https://192.168.3.61:2379,http://127.0.0.1:2379"

#[Clustering]

ETCD_INITIAL_ADVERTISE_PEER_URLS="https://192.168.3.61:2380"

ETCD_ADVERTISE_CLIENT_URLS="https://192.168.3.61:2379"

ETCD_INITIAL_CLUSTER="etcd1=https://192.168.3.61:2380,etcd2=https://192.168.3.62:2380,etcd3=https://192.168.3.63:2380"

ETCD_INITIAL_CLUSTER_TOKEN="etcd-cluster"

ETCD_INITIAL_CLUSTER_STATE="new"

[root@k8s-master1 ~]# scp /etc/etcd/etcd.conf k8s-master2:/etc/etcd/

etcd.conf 100% 520 457.0KB/s 00:00

[root@k8s-master1 ~]# scp /etc/etcd/etcd.conf k8s-master3:/etc/etcd/

etcd.conf 100% 520 445.7KB/s 00:00

[root@k8s-master1 ~]#

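Important: on the other two nodes, edit the copied file. Change ETCD_NAME (to etcd2 or etcd3), and change the IP in ETCD_LISTEN_PEER_URLS, ETCD_LISTEN_CLIENT_URLS, ETCD_INITIAL_ADVERTISE_PEER_URLS and ETCD_ADVERTISE_CLIENT_URLS to that node's own IP. ETCD_INITIAL_CLUSTER stays the same on all three nodes. The fields are:

- ETCD_NAME: node name, unique within the cluster

- ETCD_DATA_DIR: data directory

- ETCD_LISTEN_PEER_URLS: address to listen on for traffic between cluster members

- ETCD_LISTEN_CLIENT_URLS: address to listen on for client traffic

- ETCD_INITIAL_ADVERTISE_PEER_URLS: peer address advertised to the rest of the cluster

- ETCD_ADVERTISE_CLIENT_URLS: client address advertised to clients

- ETCD_INITIAL_CLUSTER: addresses of all cluster members

- ETCD_INITIAL_CLUSTER_TOKEN: cluster token

- ETCD_INITIAL_CLUSTER_STATE: state when joining; new means a new cluster, existing means joining an existing cluster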
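Only ETCD_NAME and the node's own IP differ between members, so you can generate the file for each node instead of editing it by hand. This sketch uses a hypothetical `gen_etcd_conf` function that reproduces the etcd1 file above for any name/IP pair:

```shell
#!/usr/bin/env bash
# Hypothetical helper: print etcd.conf for one member ($1 = name, $2 = IP).
gen_etcd_conf() {
  local name="$1" ip="$2"
  cat <<EOF
#[Member]
ETCD_NAME="${name}"
ETCD_DATA_DIR="/var/lib/etcd/default.etcd"
ETCD_LISTEN_PEER_URLS="https://${ip}:2380"
ETCD_LISTEN_CLIENT_URLS="https://${ip}:2379,http://127.0.0.1:2379"
#[Clustering]
ETCD_INITIAL_ADVERTISE_PEER_URLS="https://${ip}:2380"
ETCD_ADVERTISE_CLIENT_URLS="https://${ip}:2379"
ETCD_INITIAL_CLUSTER="etcd1=https://192.168.3.61:2380,etcd2=https://192.168.3.62:2380,etcd3=https://192.168.3.63:2380"
ETCD_INITIAL_CLUSTER_TOKEN="etcd-cluster"
ETCD_INITIAL_CLUSTER_STATE="new"
EOF
}
```

Typical use: `gen_etcd_conf etcd2 192.168.3.62 | ssh k8s-master2 'cat > /etc/etcd/etcd.conf'`, and likewise for etcd3 on k8s-master3.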

After creating the configuration file, also create the data directory:

[root@k8s-master1 ~]# mkdir -p /var/lib/etcd/default.etcd

[root@k8s-master1 ~]# ssh k8s-master2 mkdir -p /var/lib/etcd/default.etcd

[root@k8s-master1 ~]# ssh k8s-master3 mkdir -p /var/lib/etcd/default.etcd

3.2.4 Create the systemd unit file

[root@k8s-master1 ~]# vim etcd.service

[root@k8s-master1 ~]# cat etcd.service

[Unit]

Description=Etcd Server

After=network.target

After=network-online.target

Wants=network-online.target

[Service]

Type=notify

EnvironmentFile=-/etc/etcd/etcd.conf

WorkingDirectory=/var/lib/etcd/

ExecStart=/usr/local/bin/etcd \

--cert-file=/etc/etcd/ssl/etcd.pem \

--key-file=/etc/etcd/ssl/etcd-key.pem \

--trusted-ca-file=/etc/etcd/ssl/ca.pem \

--peer-cert-file=/etc/etcd/ssl/etcd.pem \

--peer-key-file=/etc/etcd/ssl/etcd-key.pem \

--peer-trusted-ca-file=/etc/etcd/ssl/ca.pem \

--peer-client-cert-auth \

--client-cert-auth

Restart=on-failure

RestartSec=5

LimitNOFILE=65536

[Install]

WantedBy=multi-user.target

[root@k8s-master1 ~]# cp etcd.service /usr/lib/systemd/system/

[root@k8s-master1 ~]# scp etcd.service k8s-master2:/usr/lib/systemd/system/

etcd.service 100% 635 587.5KB/s 00:00

[root@k8s-master1 ~]# scp etcd.service k8s-master3:/usr/lib/systemd/system/

etcd.service 100% 635 574.7KB/s 00:00

[root@k8s-master1 ~]#

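3.2.5 Start etcd

Run the following on all three etcd nodes.

First run

systemctl daemon-reload

systemctl enable etcd.service

and then

systemctl start etcd

Start etcd on the three nodes at roughly the same time: a new member does not report ready until enough peers are up, so systemctl start etcd on the first node may appear to hang until the other members have been started.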
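Because of that wait, running the start command node by node in a plain loop can stall on the first node. A minimal sketch, assuming root SSH access from k8s-master1 to every master including itself (section 2.2): systemctl start --no-block queues the start job and returns immediately, so all three members come up together. The function name and the DRY_RUN switch are illustrative additions.

```shell
# Hosts taken from the cluster table in section 1.1.
ETCD_HOSTS=(k8s-master1 k8s-master2 k8s-master3)

# Enable etcd on every member and queue its start without waiting for it,
# so the first member does not block while it waits for its peers.
# DRY_RUN=1 only prints the ssh commands.
start_etcd_everywhere() {
  local host
  local cmd='systemctl daemon-reload && systemctl enable etcd && systemctl start --no-block etcd'
  for host in "${ETCD_HOSTS[@]}"; do
    if [[ "${DRY_RUN:-0}" == 1 ]]; then
      echo "ssh ${host} '${cmd}'"
    else
      ssh "$host" "$cmd"
    fi
  done
}
```

Afterwards, systemctl status etcd on each node should show active (running) once all members have joined.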

[root@k8s-master1 ~]# systemctl daemon-reload

[root@k8s-master1 ~]# systemctl enable etcd.service

Created symlink /etc/systemd/system/multi-user.target.wants/etcd.service → /usr/lib/systemd/system/etcd.service.


[root@k8s-master1 ~]# systemctl start etcd

[root@k8s-master1 ~]# systemctl status etcd

● etcd.service - Etcd Server

Loaded: loaded (/usr/lib/systemd/system/etcd.service; enabled; vendor preset: disabled)

Active: active (running) since Mon 2022-02-21 10:56:59 CST; 10s ago

Main PID: 112245 (etcd)

Tasks: 9 (limit: 23502)

Memory: 26.0M

CGroup: /system.slice/etcd.service

└─112245 /usr/local/bin/etcd --cert-file=/etc/etcd/ssl/etcd.pem --key-file=/etc/etcd/ssl/etcd-key.pem --trusted-ca->

Feb 21 10:57:01 k8s-master1 etcd[112245]: {"level":"info","ts":"2022-02-21T10:57:01.473+0800","caller":"rafthttp/peer_status.g>

Feb 21 10:57:01 k8s-master1 etcd[112245]: {"level":"info","ts":"2022-02-21T10:57:01.473+0800","caller":"rafthttp/stream.go:274>

Feb 21 10:57:01 k8s-master1 etcd[112245]: {"level":"info","ts":"2022-02-21T10:57:01.473+0800","caller":"rafthttp/stream.go:249>

Feb 21 10:57:01 k8s-master1 etcd[112245]: {"level":"info","ts":"2022-02-21T10:57:01.473+0800","caller":"rafthttp/stream.go:274>

Feb 21 10:57:01 k8s-master1 etcd[112245]: {"level":"info","ts":"2022-02-21T10:57:01.484+0800","caller":"etcdserver/server.go:7>

Feb 21 10:57:01 k8s-master1 etcd[112245]: {"level":"info","ts":"2022-02-21T10:57:01.484+0800","caller":"rafthttp/stream.go:412>

Feb 21 10:57:01 k8s-master1 etcd[112245]: {"level":"info","ts":"2022-02-21T10:57:01.484+0800","caller":"rafthttp/stream.go:412>

Feb 21 10:57:03 k8s-master1 etcd[112245]: {"level":"info","ts":"2022-02-21T10:57:03.798+0800","caller":"etcdserver/server.go:2>

Feb 21 10:57:03 k8s-master1 etcd[112245]: {"level":"info","ts":"2022-02-21T10:57:03.799+0800","caller":"membership/cluster.go:>

Feb 21 10:57:03 k8s-master1 etcd[112245]: {"level":"info","ts":"2022-02-21T10:57:03.79

3.2.6 Verify the cluster

After the etcd cluster is deployed, run the following command on any etcd node. Every endpoint should report "is healthy".

[root@k8s-master1 ~]# for ip in 192.168.3.61 192.168.3.62 192.168.3.63; do

> ETCDCTL_API=3 /usr/local/bin/etcdctl \

> --endpoints=https://${ip}:2379 \

> --cacert=/etc/etcd/ssl/ca.pem \

> --cert=/etc/etcd/ssl/etcd.pem \

> --key=/etc/etcd/ssl/etcd-key.pem \

> endpoint health; done

https://192.168.3.61:2379 is healthy: successfully committed proposal: took = 7.41116ms

https://192.168.3.62:2379 is healthy: successfully committed proposal: took = 9.36961ms

https://192.168.3.63:2379 is healthy: successfully committed proposal: took = 8.957572ms
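For scripting or monitoring, the output of the loop above can be reduced to a single pass/fail result. A minimal sketch that reads etcdctl endpoint health output on stdin and succeeds only when the expected number of endpoints report "is healthy". Unhealthy endpoints may be reported on stderr, so redirect it with 2>&1. check_etcd_health is a hypothetical name.

```shell
# check_etcd_health EXPECTED  (reads "etcdctl endpoint health" output on stdin)
# Succeeds only if exactly EXPECTED lines contain " is healthy:".
# Lines containing "is unhealthy:" do not match that pattern.
check_etcd_health() {
  local expected=$1 healthy
  healthy=$(grep -c ' is healthy:')
  if [ "$healthy" -eq "$expected" ]; then
    echo "etcd OK: ${healthy}/${expected} endpoints healthy"
  else
    echo "etcd NOT OK: ${healthy}/${expected} endpoints healthy" >&2
    return 1
  fi
}
```

Usage: append 2>&1 | check_etcd_health 3 after the done of the loop above.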

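3.3 Install the Kubernetes components

3.3.1 Download the binary packages

The latest release can be found on GitHub:

kubernetes/CHANGELOG at master · kubernetes/kubernetes · GitHub

Download the server tarball, extract it, and copy the binaries to the corresponding nodes: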

[root@k8s-master1 ~]# wget https://storage.googleapis.com/kubernetes-release/release/v1.23.4/kubernetes-server-linux-amd64.tar.gz -O kubernetes-server-linux-amd64.tar.gz

--2022-02-21 11:39:09-- https://storage.googleapis.com/kubernetes-release/release/v1.23.4/kubernetes-server-linux-amd64.tar.gz

Resolving storage.googleapis.com (storage.googleapis.com)... 142.251.43.16, 172.217.160.112, 172.217.160.80

Connecting to storage.googleapis.com (storage.googleapis.com)|142.251.43.16|:443... connected.

HTTP request sent, awaiting response... 200 OK

Length: 341392468 (326M) [application/x-tar]

Saving to: 'kubernetes-server-linux-amd64.tar.gz'

kubernetes-server-linux-amd64.t 100%[======================================================>] 325.58M 14.3MB/s in 24s

2022-02-21 11:39:34 (13.5 MB/s) - 'kubernetes-server-linux-amd64.tar.gz' saved [341392468/341392468]

[root@k8s-master1 ~]# tar zxvf kubernetes-server-linux-amd64.tar.gz

kubernetes/

kubernetes/server/

kubernetes/server/bin/

kubernetes/server/bin/kube-apiserver.tar

kubernetes/server/bin/kube-controller-manager.tar

...

[root@k8s-master1 ~]# cd kubernetes/server/bin/

[root@k8s-master1 bin]# ls

apiextensions-apiserver kube-apiserver.tar kubectl-convert kube-proxy.tar

kubeadm kube-controller-manager kubelet kube-scheduler

kube-aggregator kube-controller-manager.docker_tag kube-log-runner kube-scheduler.docker_tag

kube-apiserver kube-controller-manager.tar kube-proxy kube-scheduler.tar

kube-apiserver.docker_tag kubectl kube-proxy.docker_tag mounter

[root@k8s-master1 bin]# cp kube-apiserver kube-controller-manager kube-scheduler kubectl /usr/local/bin/

[root@k8s-master1 bin]# scp kube-apiserver kube-controller-manager kube-scheduler kubectl k8s-master2:/usr/local/bin

kube-apiserver 100% 125MB 87.6MB/s 00:01

kube-controller-manager 100% 116MB 129.0MB/s 00:00

kube-scheduler 100% 47MB 114.0MB/s 00:00

kubectl 100% 44MB 123.6MB/s 00:00

[root@k8s-master1 bin]# scp kube-apiserver kube-controller-manager kube-scheduler kubectl k8s-master3:/usr/local/bin

kube-apiserver 100% 125MB 137.7MB/s 00:00

kube-controller-manager 100% 116MB 147.2MB/s 00:00

kube-scheduler 100% 47MB 148.0MB/s 00:00

kubectl 100% 44MB 133.8MB/s 00:00

[root@k8s-master1 bin]# scp kubelet kube-proxy k8s-node1:/usr/local/bin/

kubelet 100% 119MB 165.2MB/s 00:00

kube-proxy 100% 42MB 143.0MB/s 00:00

[root@k8s-master1 bin]# scp kubelet kube-proxy k8s-node2:/usr/local/bin/

kubelet 100% 119MB 146.0MB/s 00:00

kube-proxy 100% 42MB 134.1MB/s 00:00

[root@k8s-master1 bin]#
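The copy commands above follow a fixed pattern: the four control-plane binaries go to every master and kubelet/kube-proxy go to every worker, per the table in 1.1. A minimal sketch of the same distribution as a loop, run from kubernetes/server/bin on k8s-master1. The run helper and the DRY_RUN switch are illustrative additions, not part of the original procedure.

```shell
MASTER_BINS=(kube-apiserver kube-controller-manager kube-scheduler kubectl)
NODE_BINS=(kubelet kube-proxy)

# Execute a command, or only print it when DRY_RUN=1.
run() { if [[ "${DRY_RUN:-0}" == 1 ]]; then echo "$*"; else "$@"; fi; }

distribute_binaries() {
  local host
  run cp "${MASTER_BINS[@]}" /usr/local/bin/          # local master (k8s-master1)
  for host in k8s-master2 k8s-master3; do             # other masters
    run scp "${MASTER_BINS[@]}" "${host}:/usr/local/bin/"
  done
  for host in k8s-node1 k8s-node2; do                 # worker nodes
    run scp "${NODE_BINS[@]}" "${host}:/usr/local/bin/"
  done
}
```

Running DRY_RUN=1 distribute_binaries first shows the five commands that would be executed.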

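3.3.2 Deploy kube-apiserver

The TLS bootstrapping mechanism

Once TLS authentication is enabled on the apiserver, the kubelet on every node needs a valid certificate signed by the CA that the apiserver uses before it can talk to the apiserver. With many nodes, issuing these client certificates by hand is a lot of work and makes scaling the cluster more complicated.

To simplify this, Kubernetes introduced TLS bootstrapping to issue client certificates automatically: the kubelet connects as a low-privilege user and requests a certificate, which is then signed dynamically on the cluster side (by the certificate signer in kube-controller-manager, which is why --cluster-signing-cert-file is configured there).

How TLS bootstrapping works

1. What TLS does

TLS encrypts the communication and prevents eavesdropping by a man in the middle. In addition, if the certificate is not trusted, no connection to the apiserver can be established at all, let alone a request for specific content.

2. What RBAC does

Once TLS has solved the communication problem, permissions are handled by RBAC (other authorization models, such as ABAC, can also be used). RBAC specifies which APIs a user or group (subject) may call. When combined with TLS client certificates, the apiserver uses the certificate's CN field as the user name and the O field as the group.

In summary: first, to talk to the apiserver you must use a certificate signed by the apiserver's CA, which establishes trust and allows the TLS connection; second, the certificate's CN and O fields supply the user and group that RBAC needs.

kubelet first-start flow

TLS bootstrapping lets the kubelet request a certificate from the apiserver and then use it to connect. But how does the kubelet connect the first time, before it has a certificate?

The apiserver configuration points to a token.csv file that defines a preset user. That user's token, together with the apiserver's CA certificate, is written into bootstrap.kubeconfig, which the kubelet uses. On its first request, the kubelet uses the CA in bootstrap.kubeconfig to establish a TLS connection to the apiserver, and presents the user token from bootstrap.kubeconfig to declare its RBAC identity.

token.csv format (token,user,UID,"group"): 3940fd7fbb391d1b4d861ad17a1f0613,kubelet-bootstrap,10001,"system:kubelet-bootstrap"

On the first start, the kubelet may be denied by the apiserver (typically 403 Forbidden when it creates its CSR). By default the kubelet declares its identity with the preset user token from bootstrap.kubeconfig and then creates a CSR, but unless we act, this user has no permissions at all, including the permission to create CSRs. A ClusterRoleBinding is therefore needed that binds the preset user kubelet-bootstrap to the built-in ClusterRole system:node-bootstrapper, allowing it to submit CSRs.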

Create the token.csv file

[root@k8s-master1 ~]# cat > token.csv << EOF

> $(head -c 16 /dev/urandom | od -An -t x | tr -d ' '),kubelet-bootstrap,10001,"system:kubelet-bootstrap"

> EOF

[root@k8s-master1 ~]# cat token.csv

3609f8980544889a3224dc8c57954976,kubelet-bootstrap,10001,"system:kubelet-bootstrap"
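A minimal sketch that generates the token.csv line and checks its shape (32 lowercase hex characters, user name, numeric UID, quoted group) before the apiserver reads it. The validation regex is an assumption based on the format shown above, not an official schema, and the function names are hypothetical.

```shell
# 16 random bytes -> 32 lowercase hex characters, then the fixed fields.
make_token_csv() {
  local token
  token=$(head -c 16 /dev/urandom | od -An -tx1 | tr -d ' \n')
  printf '%s,kubelet-bootstrap,10001,"system:kubelet-bootstrap"\n' "$token"
}

# Succeeds if $1 looks like: <32 hex>,<user>,<numeric uid>,"<group>"
valid_token_csv_line() {
  local re='^[0-9a-f]{32},[^,]+,[0-9]+,"[^"]+"$'
  [[ $1 =~ $re ]]
}
```

Usage: make_token_csv > token.csv, then valid_token_csv_line "$(cat token.csv)" && echo ok.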

Create the CSR request file

[root@k8s-master1 ~]# vim kube-apiserver-csr.json

[root@k8s-master1 ~]# cat kube-apiserver-csr.json

{

"CN": "kubernetes",

"hosts": [

"127.0.0.1",

"192.168.3.60",

"192.168.3.61",

"192.168.3.62",

"192.168.3.63",

"192.168.3.64",

"192.168.3.65",

"10.255.0.1",

"kubernetes",

"kubernetes.default",

"kubernetes.default.svc",

"kubernetes.default.svc.cluster",

"kubernetes.default.svc.cluster.local"

],

"key": {

"algo": "rsa",

"size": 2048

},

"names": [

{

"C": "CN",

"ST": "Beijing",

"L": "Beijing",

"O": "k8s",

"OU": "system"

}

]

}

[root@k8s-master1 ~]#

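# 10.255.0.1 is the IP of the kubernetes Service (allocated in advance; normally the first IP of the Service CIDR)

# svc.cluster.local can be changed if you use a different cluster domain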
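The kubernetes Service IP follows from --service-cluster-ip-range. A minimal IPv4-only sketch in pure bash that computes the n-th address of a CIDR: n=1 gives the kubernetes Service IP that must appear in the certificate's hosts list, and n=2 gives 10.255.0.2, the address kubelet.json uses for clusterDNS. The function names are hypothetical, and octets with leading zeros are not handled.

```shell
# "a.b.c.d" -> 32-bit integer
ip_to_int() {
  local a b c d
  IFS=. read -r a b c d <<<"$1"
  echo $(( (a << 24) | (b << 16) | (c << 8) | d ))
}

# 32-bit integer -> "a.b.c.d"
int_to_ip() {
  local n=$1
  echo "$(( (n >> 24) & 255 )).$(( (n >> 16) & 255 )).$(( (n >> 8) & 255 )).$(( n & 255 ))"
}

# nth_service_ip CIDR N -> network address of CIDR plus N
nth_service_ip() {
  local cidr=$1 n=$2
  local net=${cidr%/*} bits=${cidr#*/}
  local mask=$(( (0xFFFFFFFF << (32 - bits)) & 0xFFFFFFFF ))
  int_to_ip $(( ( $(ip_to_int "$net") & mask ) + n ))
}
```

Usage: nth_service_ip 10.255.0.0/16 1 prints 10.255.0.1.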

Generate the certificate

[root@k8s-master1 ~]# cfssl gencert -ca=/etc/kubernetes/ssl/ca.pem -ca-key=/etc/kubernetes/ssl/ca-key.pem -config=/etc/kubernetes/ssl/ca-config.json -profile=kubernetes kube-apiserver-csr.json | cfssljson -bare kube-apiserver

2022/02/21 12:16:40 [INFO] generate received request

2022/02/21 12:16:40 [INFO] received CSR

2022/02/21 12:16:40 [INFO] generating key: rsa-2048

2022/02/21 12:16:40 [INFO] encoded CSR

2022/02/21 12:16:40 [INFO] signed certificate with serial number 99077620124943441155416266615920435310043288274

Create the kube-apiserver configuration file

[root@k8s-master1 ~]# vim kube-apiserver.conf

[root@k8s-master1 ~]#

[root@k8s-master1 ~]#

[root@k8s-master1 ~]# cat kube-apiserver.conf

KUBE_APISERVER_OPTS="

--enable-admission-plugins=NamespaceLifecycle,NodeRestriction,LimitRanger,ServiceAccount,DefaultStorageClass,ResourceQuota \

--anonymous-auth=false \

--bind-address=192.168.3.61 \

--secure-port=6443 \

--advertise-address=192.168.3.61 \

--insecure-port=0 \

--authorization-mode=Node,RBAC \

--runtime-config=api/all=true \

--enable-bootstrap-token-auth \

--service-cluster-ip-range=10.255.0.0/16 \

--token-auth-file=/etc/kubernetes/token.csv \

--service-node-port-range=30000-50000 \

--tls-cert-file=/etc/kubernetes/ssl/kube-apiserver.pem \

--tls-private-key-file=/etc/kubernetes/ssl/kube-apiserver-key.pem \

--client-ca-file=/etc/kubernetes/ssl/ca.pem \

--kubelet-client-certificate=/etc/kubernetes/ssl/kube-apiserver.pem \

--kubelet-client-key=/etc/kubernetes/ssl/kube-apiserver-key.pem \

--service-account-key-file=/etc/kubernetes/ssl/ca-key.pem \

--service-account-signing-key-file=/etc/kubernetes/ssl/ca-key.pem \

--service-account-issuer=https://kubernetes.default.svc.cluster.local \

--etcd-cafile=/etc/etcd/ssl/ca.pem \

--etcd-certfile=/etc/etcd/ssl/etcd.pem \

--etcd-keyfile=/etc/etcd/ssl/etcd-key.pem \

--etcd-servers=https://192.168.3.61:2379,https://192.168.3.62:2379,https://192.168.3.63:2379 \

--enable-swagger-ui=true \

--allow-privileged=true \

--apiserver-count=3 \

--audit-log-maxage=30 \

--audit-log-maxbackup=3 \

--audit-log-maxsize=100 \

--audit-log-path=/var/log/kube-apiserver-audit.log \

--event-ttl=1h \

--alsologtostderr=true \

--logtostderr=false \

--log-dir=/var/log/kubernetes \

--v=4"

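--logtostderr: log to stderr instead of files (false here, so logs are written to --log-dir; --alsologtostderr also copies them to stderr)

--v: log verbosity level

--log-dir: log directory

--etcd-servers: etcd cluster endpoints

--bind-address: listen address

--secure-port: HTTPS port

--advertise-address: address advertised to the cluster

--allow-privileged: allow privileged containers

--service-cluster-ip-range: virtual IP range for Services

--enable-admission-plugins: admission control plugins

--authorization-mode: authorization modes; enables RBAC and Node authorization (nodes manage their own resources)

--enable-bootstrap-token-auth: enable bootstrap token authentication for TLS bootstrapping

--token-auth-file: bootstrap token file

--service-node-port-range: default port range for NodePort Services

--kubelet-client-xxx: client certificate the apiserver uses to access kubelets

--tls-xxx-file: the apiserver's HTTPS certificate

--etcd-xxxfile: certificates for connecting to the etcd cluster

--audit-log-xxx: audit log settings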

Create the systemd unit file for kube-apiserver

[root@k8s-master1 ~]# vim kube-apiserver.service

[root@k8s-master1 ~]#

[root@k8s-master1 ~]# cat kube-apiserver.service

[Unit]

Description=Kubernetes API Server

Documentation=https://github.com/kubernetes/kubernetes

After=etcd.service

Wants=etcd.service

[Service]

EnvironmentFile=-/etc/kubernetes/kube-apiserver.conf

ExecStart=/usr/local/bin/kube-apiserver $KUBE_APISERVER_OPTS

Restart=on-failure

[root@k8s-master1 ~]#

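Distribute the apiserver certificates, configuration file and systemd unit

On master2 and master3, change the IPs in kube-apiserver.conf (--bind-address and --advertise-address) to the node's own IP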

[root@k8s-master1 ~]# cp kube-apiserver*.pem /etc/kubernetes/ssl/

[root@k8s-master1 ~]# cp token.csv /etc/kubernetes/

[root@k8s-master1 ~]# cp kube-apiserver.conf /etc/kubernetes/

[root@k8s-master1 ~]# cp kube-apiserver.service /usr/lib/systemd/system/

[root@k8s-master1 ~]# scp kube-apiserver*.pem k8s-master2:/etc/kubernetes/ssl/

kube-apiserver-key.pem 100% 1679 1.7MB/s 00:00

kube-apiserver.pem 100% 1606 2.3MB/s 00:00

[root@k8s-master1 ~]# scp kube-apiserver*.pem k8s-master3:/etc/kubernetes/ssl/

kube-apiserver-key.pem 100% 1679 1.5MB/s 00:00

kube-apiserver.pem 100% 1606 2.1MB/s 00:00

[root@k8s-master1 ~]# scp token.csv kube-apiserver.conf k8s-master3:/etc/kubernetes/

token.csv 100% 84 55.8KB/s 00:00

kube-apiserver.conf 100% 1610 1.9MB/s 00:00

[root@k8s-master1 ~]# scp token.csv kube-apiserver.conf k8s-master2:/etc/kubernetes/

token.csv 100% 84 54.6KB/s 00:00

kube-apiserver.conf 100% 1610 1.7MB/s 00:00

[root@k8s-master1 ~]# scp kube-apiserver.service k8s-master2:/usr/lib/systemd/system/

kube-apiserver.service 100% 280 268.9KB/s 00:00

[root@k8s-master1 ~]# scp kube-apiserver.service k8s-master3:/usr/lib/systemd/system/

kube-apiserver.service 100% 280 273.9KB/s 00:00

[root@k8s-master1 ~]#
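Only --bind-address and --advertise-address carry the node's own IP; the --etcd-servers list must stay the same on every master, so a blanket "replace 192.168.3.61 everywhere" would be wrong. A minimal sketch that derives the file for another master from master1's copy, assuming master1's IP is 192.168.3.61 as above; apiserver_conf_for is a hypothetical name.

```shell
# apiserver_conf_for SRC IP
# Prints SRC with only --bind-address/--advertise-address switched to IP.
apiserver_conf_for() {
  local src=$1 ip=$2
  sed -E "s/(--(bind|advertise)-address=)192\.168\.3\.61/\1${ip}/" "$src"
}
```

Usage, run on k8s-master1: apiserver_conf_for kube-apiserver.conf 192.168.3.62 | ssh k8s-master2 'cat > /etc/kubernetes/kube-apiserver.conf'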

Start kube-apiserver

Run on all three master nodes

[root@k8s-master1 ~]# systemctl daemon-reload

[root@k8s-master1 ~]# systemctl enable kube-apiserver

The unit files have no installation config (WantedBy, RequiredBy, Also, Alias

settings in the [Install] section, and DefaultInstance for template units).

This means they are not meant to be enabled using systemctl.

Possible reasons for having this kind of units are:

1) A unit may be statically enabled by being symlinked from another unit's

.wants/ or .requires/ directory.

2) A unit's purpose may be to act as a helper for some other unit which has

a requirement dependency on it.

3) A unit may be started when needed via activation (socket, path, timer,

D-Bus, udev, scripted systemctl call, ...).

4) In case of template units, the unit is meant to be enabled with some

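instance name specified.

This warning appears because kube-apiserver.service above has no [Install] section, so systemctl enable has nothing to link: the service starts below, but it will not start automatically at boot (the status output shows "static"). To fix it, append the following two lines to the unit file (as in the etcd and kube-controller-manager units), then run systemctl daemon-reload and systemctl enable kube-apiserver again:

[Install]

WantedBy=multi-user.target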

[root@k8s-master1 ~]# systemctl start kube-apiserver

[root@k8s-master1 ~]# systemctl status kube-apiserver

● kube-apiserver.service - Kubernetes API Server

Loaded: loaded (/usr/lib/systemd/system/kube-apiserver.service; static; vendor preset: disabled)

Active: active (running) since Mon 2022-02-21 12:39:09 CST; 13s ago

Docs: https://github.com/kubernetes/kubernetes

Main PID: 147142 (kube-apiserver)

Tasks: 12 (limit: 23502)

Memory: 264.4M

CGroup: /system.slice/kube-apiserver.service

└─147142 /usr/local/bin/kube-apiserver --enable-admission-plugins=NamespaceLifecycle,NodeRestriction,LimitRanger,Se>

Feb 21 12:39:13 k8s-master1 kube-apiserver[147142]: I0221 12:39:13.591795 147142 httplog.go:129] "HTTP" verb="GET" URI="/api/>

Feb 21 12:39:13 k8s-master1 kube-apiserver[147142]: I0221 12:39:13.598945 147142 alloc.go:329] "

3.3.3 Install the kubectl tool

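kubectl is the client tool for operating on Kubernetes resources (create, delete, update, query, and so on).

When kubectl operates on resources, it first looks at the KUBECONFIG environment variable; if KUBECONFIG is not set, it uses ~/.kube/config.

You can set the KUBECONFIG environment variable:

[root@k8s-master1 ~]# export KUBECONFIG=/etc/kubernetes/admin.conf

kubectl then loads the file named by KUBECONFIG to decide which cluster's resources to manage.

Alternatively:

[root@k8s-master1 ~]# cp /etc/kubernetes/admin.conf ~/.kube/config

kubectl then loads ~/.kube/config whenever it runs. (The path /etc/kubernetes/admin.conf is only an example; this guide builds kube.config below and copies it to ~/.kube/config.)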
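The lookup order described above can be summarized in a few lines. A simplified sketch, assuming the --kubeconfig command-line flag (which takes precedence over both) is passed as the first argument. When KUBECONFIG holds a colon-separated list, kubectl merges the files; this hypothetical helper just reports the first entry.

```shell
# which_kubeconfig [FLAG_VALUE]
# Mirrors the precedence: --kubeconfig flag, then $KUBECONFIG, then ~/.kube/config.
which_kubeconfig() {
  local flag=${1:-}
  if [[ -n $flag ]]; then
    echo "$flag"
  elif [[ -n ${KUBECONFIG:-} ]]; then
    echo "${KUBECONFIG%%:*}"          # first entry of a colon-separated list
  else
    echo "$HOME/.kube/config"
  fi
}
```

To see what kubectl itself resolved, kubectl config view --minify prints the configuration of the current context.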

Create the CSR request file

[root@k8s-master1 ~]# vim admin-csr.json

[root@k8s-master1 ~]# cat admin-csr.json

{

"CN": "admin",

"hosts": [],

"key": {

"algo": "rsa",

"size": 2048

},

"names": [

{

"C": "CN",

"ST": "Beijing",

"L": "Beijing",

"O": "system:masters",

"OU": "system"

}

]

}

Generate the certificate

[root@k8s-master1 ~]# cfssl gencert -ca=ca.pem -ca-key=ca-key.pem -config=ca-config.json -profile=kubernetes admin-csr.json | cfssljson -bare admin

2022/02/21 12:53:07 [INFO] generate received request

2022/02/21 12:53:07 [INFO] received CSR

2022/02/21 12:53:07 [INFO] generating key: rsa-2048

2022/02/21 12:53:07 [INFO] encoded CSR

2022/02/21 12:53:07 [INFO] signed certificate with serial number 421998404594641165147193284791210831952845769831

2022/02/21 12:53:07 [WARNING] This certificate lacks a "hosts" field. This makes it unsuitable for

websites. For more information see the Baseline Requirements for the Issuance and Management

of Publicly-Trusted Certificates, v.1.1.6, from the CA/Browser Forum (https://cabforum.org);

specifically, section 10.2.3 ("Information Requirements").

[root@k8s-master1 ~]# cp admin*.pem /etc/kubernetes/ssl/

[root@k8s-master1 ~]#

Create the kubeconfig file

[root@k8s-master1 ~]# kubectl config set-cluster kubernetes --certificate-authority=/etc/kubernetes/ssl/ca.pem --embed-certs=true --server=https://192.168.3.61:6443 --kubeconfig=kube.config

Cluster "kubernetes" set.

[root@k8s-master1 ~]#

[root@k8s-master1 ~]# cat kube.config

apiVersion: v1

clusters:

- cluster:

certificate-authority-data: LS0tLS1CRUdJTiBDRVJUSUZJQ0FURS0tLS0tCk1JSURtakNDQW9LZ0F3SUJBZ0lVYWZvMDZDNzNHS0J2SHB1aEwvK2FQVGI1TU93d0RRWUpLb1pJaHZjTkFRRUwKQlFBd1pURUxNQWtHQTFVRUJoTUNRMDR4RURBT0JnTlZCQWdUQjBKbGFXcHBibWN4RURBT0JnTlZCQWNUQjBKbAphV3BwYm1jeEREQUtCZ05WQkFvVEEyczRjekVQTUEwR0ExVUVDeE1HYzNsemRHVnRNUk13RVFZRFZRUURFd3ByCmRXSmxjbTVsZEdWek1CNFhEVEl5TURJeU1UQXlNVGN3TUZvWERUTXlNREl4T1RBeU1UY3dNRm93WlRFTE1Ba0cKQTFVRUJoTUNRMDR4RURBT0JnTlZCQWdUQjBKbGFXcHBibWN4RURBT0JnTlZCQWNUQjBKbGFXcHBibWN4RERBSwpCZ05WQkFvVEEyczRjekVQTUEwR0ExVUVDeE1HYzNsemRHVnRNUk13RVFZRFZRUURFd3ByZFdKbGNtNWxkR1Z6Ck1JSUJJakFOQmdrcWhraUc5dzBCQVFFRkFBT0NBUThBTUlJQkNnS0NBUUVBekZ0bTJ4dU5PV3dJalJpdVg0cmkKekhUbFd6R3B4Y1RNWUcySG03a3dnNlZPTDJQUzdwRG94OEtncHFNZHlQQStxTnpQMGtTM2NncDE0SGhGT3I4cgpSWjl1Y2EvZjJIaTJNZE8wMUExTWRnS01OMG95aFBweUg4aituMlFLNDJka05HMGJYcFN4TWwyWUxrbDlCeFBSCk85T2R0RHh6MGxVOWs5U0J3RXF4S3lQSCtqWUpjNVdhOHA5NlQrMFRnSlZsNExlOU9OR0RNV0JCRHFBWk54dGsKR0NqbmtET0NzQVorek9PM1BtMnl5RTFRRks3K1ZmVWZlaHdVTE9sSlhlUHE0V1BtY252aUdmQnV0akdsRXE1UwpSZ2IrVXhNeEZtU0R1RHIxVU1DOUFMYkdDemN2bUdTZDhBcjE1Z2dUVUk1WC9yYjlnLzltenZ3VnhUcUM4Uy9YCmR3SURBUUFCbzBJd1FEQU9CZ05WSFE4QkFmOEVCQU1DQVFZd0R3WURWUjBUQVFIL0JBVXdBd0VCL3pBZEJnTlYKSFE0RUZnUVVsVmdpMm5VNS9TUDVSSXRxVEtzVGtjcE1QN2t3RFFZSktvWklodmNOQVFFTEJRQURnZ0VCQUpsTAppcFJaRHVvdEtYMitiQTBuUU5aTndsYVZNdDRMK0k5TmZGOVBJWWhSYnhrQTlnMkcrYnVLNDJqYmtuck1yNDhFCmdxQVltWCtFMnUxMVZtcGFaVFNReUdYVVhZQ1RjYldXamVOaHRTb2ttMFhvUkkvK2dSNk5KTDFiYUVxWElXRWEKZXZYSmhTVzFLNUFVVDRCekZFUUVwTFlVNWdpT2tiZXdNTXdGRVJLVkV3YkFEVVdDUVFTWkxZNTZJQXRaN0Z4NwpNTHB6SWZvT0Eya24zbmc5RmhmZVNsb2dlN2txUXNjdUdlVTVmV29OMVdzYVpRS3U3b0NHM2tmQm53cVYvNktuCmgrNzFuRkpIN1B3a3FPdWZJaUNDNWxVWHlZWGw1ZE9qcEJWK0JpbUJuWEdzOHFJTEZyR2s5cllKVW9IWmcvN2EKcG9TSEp6cEp1aEFrZ05SM1BmOD0KLS0tLS1FTkQgQ0VSVElGSUNBVEUtLS0tLQo=

server: https://192.168.3.61:6443

name: kubernetes

contexts: null

current-context: ""

kind: Config

preferences: {}

users: null

[root@k8s-master1 ~]#

Set the client authentication parameters

Create the user admin for kubectl in kube.config, pointing at the certificate and key just created

[root@k8s-master1 ~]# kubectl config set-credentials admin --client-certificate=/etc/kubernetes/ssl/admin.pem --client-key=/etc/kubernetes/ssl/admin-key.pem --embed-certs=true --kubeconfig=kube.config

User "admin" set.

Configure the context

[root@k8s-master1 ~]# kubectl config set-context kubernetes --cluster=kubernetes --user=admin --kubeconfig=kube.config # add the context

Context "kubernetes" created.

[root@k8s-master1 ~]# kubectl config use-context kubernetes --kubeconfig=kube.config # make the new context current (equivalent to switching contexts)

Switched to context "kubernetes".

[root@k8s-master1 ~]# mkdir ~/.kube -p

[root@k8s-master1 ~]# cp kube.config ~/.kube/config

[root@k8s-master1 ~]# kubectl cluster-info

Kubernetes control plane is running at https://192.168.3.61:6443

[root@k8s-master1 ~]# kubectl get ns

NAME STATUS AGE

default Active 44m

kube-node-lease Active 44m

kube-public Active 44m

kube-system Active 44m

[root@k8s-master1 ~]# kubectl get componentstatuses

Warning: v1 ComponentStatus is deprecated in v1.19+

NAME STATUS MESSAGE ERROR

controller-manager Unhealthy Get "https://127.0.0.1:10257/healthz": dial tcp 127.0.0.1:10257: connect: connection refused

scheduler Unhealthy Get "https://127.0.0.1:10259/healthz": dial tcp 127.0.0.1:10259: connect: connection refused

etcd-0 Healthy {"health":"true","reason":""}

etcd-1 Healthy {"health":"true","reason":""}

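etcd-2 Healthy {"health":"true","reason":""}

controller-manager and scheduler report Unhealthy here only because they have not been deployed yet; they are installed in 3.3.4 and 3.3.5, after which all components report Healthy.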

Copy the kubectl configuration

Copy the configuration to any machine you want to manage the cluster from

[root@k8s-master1 ~]# ssh k8s-master2 mkdir ~/.kube

[root@k8s-master1 ~]# ssh k8s-master3 mkdir ~/.kube

[root@k8s-master1 ~]# scp ~/.kube/config k8s-master2:/root/.kube/

config 100% 6200 6.4MB/s 00:00

[root@k8s-master1 ~]# scp ~/.kube/config k8s-master3:/root/.kube/

config 100% 6200 3.4MB/s 00:00

[root@k8s-master1 ~]#

Enable command auto-completion

[root@k8s-master1 ~]# yum install -y bash-completion

Installing:

bash-completion noarch 1:2.7-5.el8 base 274 k

Installed:

bash-completion-1:2.7-5.el8.noarch

Complete!

[root@k8s-master1 ~]# source <(kubectl completion bash)

[root@k8s-master1 ~]# echo "source <(kubectl completion bash)" >> ~/.bashrc

[root@k8s-master1 ~]#

3.3.4 Deploy kube-controller-manager

Create the CSR request file

[root@k8s-master1 ~]# cat kube-controller-manager-csr.json

{

"CN": "system:kube-controller-manager",

"key": {

"algo": "rsa",

"size": 2048

},

"hosts": [

"127.0.0.1",

"192.168.3.60",

"192.168.3.61",

"192.168.3.62",

"192.168.3.63"

],

"names": [

{

"C": "CN",

"ST": "Beijing",

"L": "Beijing",

"O": "system:kube-controller-manager",

"OU": "system"

}

]

}

Generate the certificate

[root@k8s-master1 ~]# cfssl gencert -ca=ca.pem -ca-key=ca-key.pem -config=ca-config.json -profile=kubernetes kube-controller-manager-csr.json | cfssljson -bare kube-controller-manager

2022/02/21 14:43:33 [INFO] generate received request

2022/02/21 14:43:33 [INFO] received CSR

2022/02/21 14:43:33 [INFO] generating key: rsa-2048

2022/02/21 14:43:33 [INFO] encoded CSR

2022/02/21 14:43:33 [INFO] signed certificate with serial number 496802849408140383404002518878408986795206976819

[root@k8s-master1 ~]#

Create the kubeconfig for kube-controller-manager

[root@k8s-master1 ~]# kubectl config set-cluster kubernetes --certificate-authority=ca.pem --embed-certs=true --server=https://192.168.3.61:6443 --kubeconfig=kube-controller-manager.kubeconfig # add the cluster entry

Cluster "kubernetes" set.

[root@k8s-master1 ~]# kubectl config set-credentials system:kube-controller-manager --client-certificate=kube-controller-manager.pem --client-key=kube-controller-manager-key.pem --embed-certs=true --kubeconfig=kube-controller-manager.kubeconfig # create the user

User "system:kube-controller-manager" set.

[root@k8s-master1 ~]#

[root@k8s-master1 ~]# kubectl config set-context system:kube-controller-manager --cluster=kubernetes --user=system:kube-controller-manager --kubeconfig=kube-controller-manager.kubeconfig # add the context

Context "system:kube-controller-manager" created.

[root@k8s-master1 ~]# kubectl config use-context system:kube-controller-manager --kubeconfig=kube-controller-manager.kubeconfig # switch to the context

Switched to context "system:kube-controller-manager".
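The same four kubectl config steps (set-cluster, set-credentials, set-context, use-context) repeat for admin, kube-controller-manager, kube-scheduler and the kubelet bootstrap file. A hypothetical make_kubeconfig helper that wraps them for certificate-based users, assuming the CA file and apiserver address used above. It names the context after the user, as done for the controller-manager (the admin kubeconfig above uses the context name kubernetes instead). DRY_RUN=1 prints the commands instead of running them.

```shell
APISERVER=https://192.168.3.61:6443   # assumed: same address as in the commands above
CA_FILE=ca.pem                        # assumed: CA in the current directory

# Execute a command, or only print it when DRY_RUN=1.
run() { if [[ "${DRY_RUN:-0}" == 1 ]]; then echo "$*"; else "$@"; fi; }

# make_kubeconfig OUT USER CERT KEY
make_kubeconfig() {
  local out=$1 user=$2 cert=$3 key=$4
  run kubectl config set-cluster kubernetes --certificate-authority="$CA_FILE" \
      --embed-certs=true --server="$APISERVER" --kubeconfig="$out"
  run kubectl config set-credentials "$user" --client-certificate="$cert" \
      --client-key="$key" --embed-certs=true --kubeconfig="$out"
  run kubectl config set-context "$user" --cluster=kubernetes --user="$user" --kubeconfig="$out"
  run kubectl config use-context "$user" --kubeconfig="$out"
}
```

Usage: make_kubeconfig kube-scheduler.kubeconfig system:kube-scheduler kube-scheduler.pem kube-scheduler-key.pem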

Create the configuration file kube-controller-manager.conf

[root@k8s-master1 ~]# vim kube-controller-manager.conf

[root@k8s-master1 ~]# cat kube-controller-manager.conf

KUBE_CONTROLLER_MANAGER_OPTS="

--secure-port=10257 \

--bind-address=127.0.0.1 \

--kubeconfig=/etc/kubernetes/kube-controller-manager.kubeconfig \

--service-cluster-ip-range=10.255.0.0/16 \

--cluster-name=kubernetes \

--cluster-signing-cert-file=/etc/kubernetes/ssl/ca.pem \

--cluster-signing-key-file=/etc/kubernetes/ssl/ca-key.pem \

--allocate-node-cidrs=true \

--cluster-cidr=10.0.0.0/16 \

--experimental-cluster-signing-duration=87600h \

--root-ca-file=/etc/kubernetes/ssl/ca.pem \

--service-account-private-key-file=/etc/kubernetes/ssl/ca-key.pem \

--leader-elect=true \

--feature-gates=RotateKubeletServerCertificate=true \

--controllers=*,bootstrapsigner,tokencleaner \

--horizontal-pod-autoscaler-use-rest-clients=true \

--horizontal-pod-autoscaler-sync-period=10s \

--tls-cert-file=/etc/kubernetes/ssl/kube-controller-manager.pem \

--tls-private-key-file=/etc/kubernetes/ssl/kube-controller-manager-key.pem \

--use-service-account-credentials=true \

--alsologtostderr=true \

--logtostderr=false \

--log-dir=/var/log/kubernetes \

--v=2"

Create the systemd unit file

[root@k8s-master1 ~]# cat kube-controller-manager.service

[Unit]

Description=Kubernetes Controller Manager

Documentation=https://github.com/kubernetes/kubernetes

[Service]

EnvironmentFile=-/etc/kubernetes/kube-controller-manager.conf

ExecStart=/usr/local/bin/kube-controller-manager $KUBE_CONTROLLER_MANAGER_OPTS

Restart=on-failure

RestartSec=5

[Install]

WantedBy=multi-user.target

Distribute the configuration

[root@k8s-master1 ~]# cp kube-controller-manager*.pem /etc/kubernetes/ssl/

[root@k8s-master1 ~]# cp kube-controller-manager.kubeconfig kube-controller-manager.conf /etc/kubernetes/

[root@k8s-master1 ~]# cp kube-controller-manager.service /usr/lib/systemd/system/

[root@k8s-master1 ~]# scp kube-controller-manager*.pem k8s-master2:/etc/kubernetes/ssl/

kube-controller-manager-key.pem 100% 1675 1.6MB/s 00:00

kube-controller-manager.pem 100% 1517 1.5MB/s 00:00

[root@k8s-master1 ~]# scp kube-controller-manager*.pem k8s-master3:/etc/kubernetes/ssl/

kube-controller-manager-key.pem 100% 1675 401.7KB/s 00:00

kube-controller-manager.pem 100% 1517 2.0MB/s 00:00

[root@k8s-master1 ~]# scp kube-controller-manager.kubeconfig kube-controller-manager.conf k8s-master3:/etc/kubernetes/

kube-controller-manager.kubeconfig 100% 6442 5.8MB/s 00:00

kube-controller-manager.conf 100% 1103 1.2MB/s 00:00

[root@k8s-master1 ~]# scp kube-controller-manager.kubeconfig kube-controller-manager.conf k8s-master2:/etc/kubernetes/

kube-controller-manager.kubeconfig 100% 6442 6.8MB/s 00:00

kube-controller-manager.conf 100% 1103 1.5MB/s 00:00

[root@k8s-master1 ~]# scp kube-controller-manager.service k8s-master3:/usr/lib/systemd/system/

kube-controller-manager.service 100% 325 337.5KB/s 00:00

[root@k8s-master1 ~]# scp kube-controller-manager.service k8s-master2:/usr/lib/systemd/system/

kube-controller-manager.service 100% 325 260.6KB/s 00:00

[root@k8s-master1 ~]#

Start the service

Run on all three master nodes

[root@k8s-master1 ~]# systemctl daemon-reload

[root@k8s-master1 ~]# systemctl enable kube-controller-manager

Created symlink /etc/systemd/system/multi-user.target.wants/kube-controller-manager.service → /usr/lib/systemd/system/kube-controller-manager.service.

[root@k8s-master1 ~]# systemctl start kube-controller-manager

[root@k8s-master1 ~]# systemctl status kube-controller-manager

● kube-controller-manager.service - Kubernetes Controller Manager

Loaded: loaded (/usr/lib/systemd/system/kube-controller-manager.service; enabled; vendor preset: disabled)

Active: active (running) since Mon 2022-02-21 15:08:30 CST; 4s ago

Docs: https://github.com/kubernetes/kubernetes

Main PID: 201388 (kube-controller)

Tasks: 7 (limit: 23502)

Memory: 22.2M

CGroup: /system.slice/kube-controller-manager.service

└─201388 /usr/local/bin/kube-controller-manager --port=0 --secure-port=10252 --bind-address=127.0.0.1 --kubeconfig=>

Feb 21 15:08:30 k8s-master1 kube-controller-manager[201388]: W0221 15:08:30.640107 201388 authentication.go:316] No authentic>

Feb 21 15:08:30 k8s-master1 kube-controller-manager[201388]: W0221 15

Error: unknown flag: --horizontal-pod-autoscaler-use-rest-clients

kube-controller-manager 1.23 no longer supports this flag. Remove it from kube-controller-manager.conf on all three masters and restart the service.
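To apply that fix without editing by hand on each master, a small sketch that deletes the option line from the config in place and keeps a .bak copy. It assumes, as in the file above, that each option sits on its own line; drop_flag is a hypothetical name.

```shell
# drop_flag FILE FLAG
# Deletes every line starting with "FLAG=" from FILE; the original is kept as FILE.bak.
drop_flag() {
  local file=$1 flag=$2
  sed -i.bak "/^${flag}=/d" "$file"
}
```

Usage, on each master: drop_flag /etc/kubernetes/kube-controller-manager.conf --horizontal-pod-autoscaler-use-rest-clients && systemctl restart kube-controller-manager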

3.3.5 Deploy kube-scheduler

Create the CSR request file

[root@k8s-master1 ~]# vim kube-scheduler-csr.json

[root@k8s-master1 ~]# cat kube-scheduler-csr.json

{

"CN": "system:kube-scheduler",

"hosts": [

"127.0.0.1",

"192.168.3.60",

"192.168.3.61",

"192.168.3.62",

"192.168.3.63"

],

"key": {

"algo": "rsa",

"size": 2048

},

"names": [

{

"C": "CN",

"ST": "Beijing",

"L": "Beijing",

"O": "system:kube-scheduler",

"OU": "system"

}

]

}

Generate the certificate

[root@k8s-master1 ~]# cfssl gencert -ca=ca.pem -ca-key=ca-key.pem -config=ca-config.json -profile=kubernetes kube-scheduler-csr.json | cfssljson -bare kube-scheduler

2022/02/21 15:21:14 [INFO] generate received request

2022/02/21 15:21:14 [INFO] received CSR

2022/02/21 15:21:14 [INFO] generating key: rsa-2048

2022/02/21 15:21:14 [INFO] encoded CSR

2022/02/21 15:21:14 [INFO] signed certificate with serial number 511389872259561079962075349736953558613113264353

Create the kubeconfig for kube-scheduler

[root@k8s-master1 ~]# kubectl config set-cluster kubernetes --certificate-authority=ca.pem --embed-certs=true --server=https://192.168.3.61:6443 --kubeconfig=kube-scheduler.kubeconfig

Cluster "kubernetes" set.

[root@k8s-master1 ~]# kubectl config set-credentials system:kube-scheduler --client-certificate=kube-scheduler.pem --client-key=kube-scheduler-key.pem --embed-certs=true --kubeconfig=kube-scheduler.kubeconfig

User "system:kube-scheduler" set.

[root@k8s-master1 ~]# kubectl config set-context system:kube-scheduler --cluster=kubernetes --user=system:kube-scheduler --kubeconfig=kube-scheduler.kubeconfig

Context "system:kube-scheduler" created.

[root@k8s-master1 ~]# kubectl config use-context system:kube-scheduler --kubeconfig=kube-scheduler.kubeconfig

Switched to context "system:kube-scheduler".

[root@k8s-master1 ~]#

Create the configuration file kube-scheduler.conf

[root@k8s-master1 ~]# vim kube-scheduler.conf

[root@k8s-master1 ~]# cat kube-scheduler.conf

KUBE_SCHEDULER_OPTS="--address=127.0.0.1 \

--kubeconfig=/etc/kubernetes/kube-scheduler.kubeconfig \

--leader-elect=true \

--alsologtostderr=true \

--logtostderr=false \

--log-dir=/var/log/kubernetes \

--v=2"

Create the systemd unit file

[root@k8s-master1 ~]# vim kube-scheduler.service

[root@k8s-master1 ~]# cat kube-scheduler.service

[Unit]

Description=Kubernetes Scheduler

Documentation=https://github.com/kubernetes/kubernetes

[Service]

EnvironmentFile=-/etc/kubernetes/kube-scheduler.conf

ExecStart=/usr/local/bin/kube-scheduler $KUBE_SCHEDULER_OPTS

Restart=on-failure

RestartSec=5

[Install]

WantedBy=multi-user.target

Distribute the configuration

[root@k8s-master1 ~]# cp kube-scheduler*.pem /etc/kubernetes/ssl/

[root@k8s-master1 ~]# cp kube-scheduler.kubeconfig kube-scheduler.conf /etc/kubernetes/

[root@k8s-master1 ~]# cp kube-scheduler.service /usr/lib/systemd/system/

[root@k8s-master1 ~]#

[root@k8s-master1 ~]# scp kube-scheduler*.pem k8s-master2:/etc/kubernetes/ssl/

kube-scheduler-key.pem 100% 1679 1.5MB/s 00:00

kube-scheduler.pem 100% 1493 1.9MB/s 00:00

[root@k8s-master1 ~]# scp kube-scheduler*.pem k8s-master3:/etc/kubernetes/ssl/

kube-scheduler-key.pem 100% 1679 1.5MB/s 00:00

kube-scheduler.pem 100% 1493 1.4MB/s 00:00

[root@k8s-master1 ~]# scp kube-scheduler.kubeconfig kube-scheduler.conf k8s-master2:/etc/kubernetes/

kube-scheduler.kubeconfig 100% 6378 5.4MB/s 00:00

kube-scheduler.conf 100% 209 248.9KB/s 00:00

[root@k8s-master1 ~]# scp kube-scheduler.kubeconfig kube-scheduler.conf k8s-master3:/etc/kubernetes/

kube-scheduler.kubeconfig 100% 6378 4.5MB/s 00:00

kube-scheduler.conf 100% 209 284.2KB/s 00:00

[root@k8s-master1 ~]# scp kube-scheduler.service k8s-master2:/usr/lib/systemd/system/

kube-scheduler.service 100% 293 192.4KB/s 00:00

[root@k8s-master1 ~]# scp kube-scheduler.service k8s-master3:/usr/lib/systemd/system/

kube-scheduler.service 100% 293 224.3KB/s 00:00

[root@k8s-master1 ~]#

Start the service

Run on all three master nodes

[root@k8s-master1 ~]# systemctl daemon-reload

[root@k8s-master1 ~]# systemctl enable kube-scheduler

Created symlink /etc/systemd/system/multi-user.target.wants/kube-scheduler.service → /usr/lib/systemd/system/kube-scheduler.service.

[root@k8s-master1 ~]# systemctl start kube-scheduler

[root@k8s-master1 ~]# systemctl status kube-scheduler

● kube-scheduler.service - Kubernetes Scheduler

Loaded: loaded (/usr/lib/systemd/system/kube-scheduler.service; enabled; vendor preset: disabled)

Active: active (running) since Mon 2022-02-21 15:29:49 CST; 5s ago

Docs: https://github.com/kubernetes/kubernetes

Main PID: 208525 (kube-scheduler)

Tasks: 9 (limit: 23502)

Memory: 13.8M

CGroup: /system.slice/kube-scheduler.service

└─208525 /usr/local/bin/kube-scheduler --address=127.0.0.1 --kubeconfig=/etc/kubernetes/kube-scheduler.kubeconfig ->

Feb 21 15:29:50 k8s-master1 kube-scheduler[208525]: score: {}

Feb 21 15:29:50 k8s-master1 kube-scheduler[208525]: schedulerName: default-scheduler

Feb 21 15:29:50 k8s-master1 kube-scheduler[208525]: ------------------------------------Configuration File Contents End Here-->

Feb 21 15:29:50 k8s-master1 kube-scheduler[208525]: I0221 15:29:50.081000 208525 server.go:139] "Starting Kubernetes Schedule>

Feb 21 15:29:50 k8s-master1 kube-scheduler[208525]: I0221 15:29:50.082408 208525 tlsconfig.go:200] "Loaded serving cert" cert>

Feb 21 15:29:50 k8s-master1 kube-scheduler[208525]: I0221 15:29:50.082

[root@k8s-master1 ~]# kubectl get componentstatuses

Warning: v1 ComponentStatus is deprecated in v1.19+

NAME STATUS MESSAGE ERROR

controller-manager Healthy ok

scheduler Healthy ok

etcd-2 Healthy {"health":"true","reason":""}

etcd-1 Healthy {"health":"true","reason":""}

etcd-0 Healthy {"health":"true","reason":""}
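Reading the `kubectl get componentstatuses` table by eye is fine for a one-off check, but when the install is scripted it can help to fail fast if any component is not Healthy. A minimal sketch, assuming the column layout shown above (NAME first, STATUS second); the helper name `all_components_healthy` is hypothetical. Since ComponentStatus is deprecated since v1.19, as the warning says, treat this only as a quick smoke test.

```shell
# all_components_healthy (hypothetical helper): read `kubectl get componentstatuses`
# output on stdin; return 0 only if at least one component row is present and
# every row has STATUS == Healthy.
all_components_healthy() {
  awk '
    $1 == "NAME" || $1 == "Warning:" || NF == 0 { next }  # skip header, warning, blank lines
    { total++; if ($2 == "Healthy") ok++ }
    END { if (total > 0 && ok == total) exit 0; exit 1 }
  '
}

# Usage on a master node (assumes kubectl is configured as in 3.3.3):
#   kubectl get componentstatuses 2>/dev/null | all_components_healthy && echo "control plane healthy"
```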

3.3.6 Install kubelet

Create kubelet-bootstrap.kubeconfig

[root@k8s-master1 ~]# BOOTSTRAP_TOKEN=$(awk -F "," '{print $1}' /etc/kubernetes/token.csv)

[root@k8s-master1 ~]# echo $BOOTSTRAP_TOKEN

3609f8980544889a3224dc8c57954976

[root@k8s-master1 ~]# kubectl config set-cluster kubernetes --certificate-authority=ca.pem --embed-certs=true --server=https://192.168.3.61:6443 --kubeconfig=kubelet-bootstrap.kubeconfig

Cluster "kubernetes" set.

[root@k8s-master1 ~]# kubectl config set-credentials kubelet-bootstrap --token=${BOOTSTRAP_TOKEN} --kubeconfig=kubelet-bootstrap.kubeconfig

User "kubelet-bootstrap" set.

[root@k8s-master1 ~]# kubectl config set-context default --cluster=kubernetes --user=kubelet-bootstrap --kubeconfig=kubelet-bootstrap.kubeconfig

Context "default" created.

[root@k8s-master1 ~]# kubectl config use-context default --kubeconfig=kubelet-bootstrap.kubeconfig

Switched to context "default".

[root@k8s-master1 ~]# kubectl create clusterrolebinding kubelet-bootstrap --clusterrole=system:node-bootstrapper --user=kubelet-bootstrap

clusterrolebinding.rbac.authorization.k8s.io/kubelet-bootstrap created

[root@k8s-master1 ~]#
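The `awk -F "," '{print $1}'` command above prints the first field of every line in token.csv, so BOOTSTRAP_TOKEN would silently contain several tokens if the file ever held more than one entry. A slightly stricter sketch that reads only the first line; the helper name is hypothetical, and the 32-hex-digit check is an assumption that only mirrors the token generated in this guide (static token files accept other formats).

```shell
# read_bootstrap_token (hypothetical helper): print the token (first comma-separated
# field) from the FIRST line of a static token file such as /etc/kubernetes/token.csv.
# The 32-hex-digit check only mirrors the token used in this guide.
read_bootstrap_token() {
  token=$(awk -F ',' 'NR == 1 { print $1; exit }' "$1") || return 1
  if printf '%s\n' "$token" | grep -Eq '^[0-9a-f]{32}$'; then
    printf '%s\n' "$token"
  else
    echo "unexpected token format in $1" >&2
    return 1
  fi
}

# Usage:
#   BOOTSTRAP_TOKEN=$(read_bootstrap_token /etc/kubernetes/token.csv) || exit 1
```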

Create the configuration file kubelet.json

[root@k8s-master1 ~]# vim kubelet.json

[root@k8s-master1 ~]# cat kubelet.json

{

"kind": "KubeletConfiguration",

"apiVersion": "kubelet.config.k8s.io/v1beta1",

"authentication": {

"x509": {

"clientCAFile": "/etc/kubernetes/ssl/ca.pem"

},

"webhook": {

"enabled": true,

"cacheTTL": "2m0s"

},

"anonymous": {

"enabled": false

}

},

"authorization": {

"mode": "Webhook",

"webhook": {

"cacheAuthorizedTTL": "5m0s",

"cacheUnauthorizedTTL": "30s"

}

},

"address": "192.168.3.61",

"port": 10250,

"readOnlyPort": 10255,

"cgroupDriver": "systemd",

"hairpinMode": "promiscuous-bridge",

"serializeImagePulls": false,

"clusterDomain": "cluster.local.",

"clusterDNS": ["10.255.0.2"]

}
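The kubelet's `cgroupDriver` is set to `systemd` here. With the Docker runtime used in this guide (dockershim is still built into v1.23), the kubelet typically fails to start with a "misconfiguration" error when Docker reports a different cgroup driver, so it is worth comparing the two on each node. A minimal sketch; the helper name is hypothetical, and the Docker value is assumed to come from `docker info --format '{{.CgroupDriver}}'`.

```shell
# cgroup_driver_matches (hypothetical helper): compare the "cgroupDriver" value in a
# kubelet.json file (arg 1) with the driver Docker reports (arg 2).
# Assumes the key and its value sit on one line, as in kubelet.json above.
cgroup_driver_matches() {
  kubelet_driver=$(sed -n 's/.*"cgroupDriver": *"\([^"]*\)".*/\1/p' "$1")
  if [ "$kubelet_driver" = "$2" ]; then
    return 0
  fi
  echo "kubelet uses '$kubelet_driver' but Docker uses '$2'" >&2
  return 1
}

# Usage on each node after the files are copied:
#   cgroup_driver_matches /etc/kubernetes/kubelet.json "$(docker info --format '{{.CgroupDriver}}')"
```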

Create the systemd unit file for the service

[root@k8s-master1 ~]# vim kubelet.service

[root@k8s-master1 ~]# cat kubelet.service

[Unit]

Description=Kubernetes Kubelet

Documentation=https://github.com/kubernetes/kubernetes

After=docker.service

Requires=docker.service

[Service]

WorkingDirectory=/var/lib/kubelet

ExecStart=/usr/local/bin/kubelet \

--bootstrap-kubeconfig=/etc/kubernetes/kubelet-bootstrap.kubeconfig \

--cert-dir=/etc/kubernetes/ssl \

--kubeconfig=/etc/kubernetes/kubelet.kubeconfig \

--config=/etc/kubernetes/kubelet.json \

--network-plugin=cni \

--pod-infra-container-image=kubernetes/pause \

--alsologtostderr=true \

--logtostderr=false \

--log-dir=/var/log/kubernetes \

--v=2

Restart=on-failure

RestartSec=5

[Install]

WantedBy=multi-user.target

Distribute the kubelet configuration

Copy the files to the worker nodes:

[root@k8s-master1 ~]# ssh k8s-node1 mkdir -p /etc/kubernetes/ssl

[root@k8s-master1 ~]# scp kubelet-bootstrap.kubeconfig kubelet.json k8s-node1:/etc/kubernetes/

kubelet-bootstrap.kubeconfig 100% 2102 1.4MB/s 00:00

kubelet.json 100% 802 1.1MB/s 00:00

[root@k8s-master1 ~]# scp ca.pem k8s-node1:/etc/kubernetes/ssl/

ca.pem 100% 1310 1.4MB/s 00:00

[root@k8s-master1 ~]# scp kubelet.service k8s-node1:/usr/lib/systemd/system/

kubelet.service 100% 661 543.0KB/s 00:00

[root@k8s-master1 ~]# ssh k8s-node2 mkdir -p /etc/kubernetes/ssl

[root@k8s-master1 ~]# scp kubelet-bootstrap.kubeconfig kubelet.json k8s-node2:/etc/kubernetes/

kubelet-bootstrap.kubeconfig 100% 2102 1.6MB/s 00:00

kubelet.json 100% 802 758.7KB/s 00:00

[root@k8s-master1 ~]# scp ca.pem k8s-node2:/etc/kubernetes/ssl/

ca.pem 100% 1310 1.4MB/s 00:00

[root@k8s-master1 ~]# scp kubelet.service k8s-node2:/usr/lib/systemd/system/

kubelet.service 100% 661 669.1KB/s 00:00

[root@k8s-master1 ~]#
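The same four commands are repeated for each worker node. A small dry-run sketch that prints them for any list of nodes, so the output can be reviewed first and then piped to `bash`; the helper name is hypothetical and it assumes the file names and target paths used above.

```shell
# print_kubelet_distribution (hypothetical helper): print, without running, the
# commands that copy the kubelet files to each worker node given as an argument.
print_kubelet_distribution() {
  for node in "$@"; do
    echo "ssh $node mkdir -p /etc/kubernetes/ssl"
    echo "scp kubelet-bootstrap.kubeconfig kubelet.json $node:/etc/kubernetes/"
    echo "scp ca.pem $node:/etc/kubernetes/ssl/"
    echo "scp kubelet.service $node:/usr/lib/systemd/system/"
  done
}

# Review, then execute from the directory holding the files:
#   print_kubelet_distribution k8s-node1 k8s-node2
#   print_kubelet_distribution k8s-node1 k8s-node2 | bash
```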

The "address" field in kubelet.json must be changed to each node's own IP address (192.168.3.64 on k8s-node1, 192.168.3.65 on k8s-node2).
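Instead of editing each copy by hand, the address can be substituted while the file is copied. A minimal sketch, assuming the `"address": "..."` pair sits on one line as in kubelet.json above; the helper name is hypothetical. Only the "address" key is rewritten, so "clusterDNS" and the other IPs are left alone.

```shell
# render_kubelet_json (hypothetical helper): print kubelet.json (arg 1) with the
# "address" value replaced by the node IP (arg 2). In the sed replacement, \1 is the
# captured '"address": "' prefix, followed directly by the new IP.
render_kubelet_json() {
  sed -E "s/(\"address\": *\")[0-9.]+\"/\1$2\"/" "$1"
}

# Usage, writing a per-node file straight to its final location:
#   render_kubelet_json kubelet.json 192.168.3.64 | ssh k8s-node1 'cat > /etc/kubernetes/kubelet.json'
#   render_kubelet_json kubelet.json 192.168.3.65 | ssh k8s-node2 'cat > /etc/kubernetes/kubelet.json'
```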

Start kubelet

[root@k8s-master1 ~]# ssh k8s-node1 mkdir -p /var/lib/kubelet /var/log/kubernetes

[root@k8s-master1 ~]# ssh k8s-node2 mkdir -p /var/lib/kubelet /var/log/kubernetes

[root@k8s-master1 ~]# ssh k8s-node1 systemctl daemon-reload

[root@k8s-master1 ~]# ssh k8s-node2 systemctl daemon-reload

[root@k8s-master1 ~]# ssh k8s-node1 systemctl enable kubelet

Created symlink /etc/systemd/system/multi-user.target.wants/kubelet.service → /usr/lib/systemd/system/kubelet.service.

[root@k8s-master1 ~]# ssh k8s-node2 systemctl enable kubelet

Created symlink /etc/systemd/system/multi-user.target.wants/kubelet.service → /usr/lib/systemd/system/kubelet.service.

[root@k8s-master1 ~]# ssh k8s-node1 systemctl start kubelet

[root@k8s-master1 ~]# ssh k8s-node2 systemctl start kubelet

[root@k8s-master1 ~]# ssh k8s-node1 systemctl status kubelet

● kubelet.service - Kubernetes Kubelet

Loaded: loaded (/usr/lib/systemd/system/kubelet.service; enabled; vendor preset: disabled)

Active: active (running) since Mon 2022-02-21 17:49:20 CST; 26s ago

Docs: https://github.com/kubernetes/kubernetes

Main PID: 19745 (kubelet)

Tasks: 9 (limit: 23502)

Memory: 18.5M

CGroup: /system.slice/kubelet.service

└─19745 /usr/local/bin/kubelet --bootstrap-kubeconfig=/etc/kubernetes/kubelet-bootstrap.kubeconfig --cert-dir=/etc/kubernetes/ssl --kubeconfig=/etc/kubernetes/kubelet.kubeconfig --config=/etc/kubernetes/kubelet.json --network-plugin=cni --pod-infra-container-image=kubernetes/pause --alsologtostderr=true --logtostderr=false --log-dir=/var/log/kubernetes --v=2
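The mkdir / daemon-reload / enable / start sequence above can be collapsed into one remote call per node; `systemctl enable --now` enables and starts a unit in a single step. A dry-run sketch (the helper name is hypothetical) that prints the commands for review before piping them to `bash`:

```shell
# print_kubelet_start (hypothetical helper): print one ssh command per node that
# creates kubelet's working and log directories, reloads systemd, and enables +
# starts kubelet.
print_kubelet_start() {
  for node in "$@"; do
    echo "ssh $node 'mkdir -p /var/lib/kubelet /var/log/kubernetes && systemctl daemon-reload && systemctl enable --now kubelet'"
  done
}

# Usage:
#   print_kubelet_start k8s-node1 k8s-node2 | bash
```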

If the pause image cannot be pulled automatically, pull it manually on the node:

docker pull kubernetes/pause

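Approve the CSRs

After it starts, each node's kubelet sends a CSR (certificate signing request) to the master.

Before approval the condition is Pending.

After approval the condition is Approved,Issued.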

[root@k8s-master1 ~]# kubectl get csr

NAME AGE SIGNERNAME REQUESTOR REQUESTEDDURATION CONDITION

node-csr-4fb--zcCoK1VH6pv0VAGTAcLem23M2aVmNhIcnNOiA4 3m8s kubernetes.io/kube-apiserver-client-kubelet kubelet-bootstrap Pending

node-csr-u2keQxwL2xiDPiynMhH8LXidjWJzqapQ1c1Lbk1qwM0 4m14s kubernetes.io/kube-apiserver-client-kubelet kubelet-bootstrap Pending

[root@k8s-master1 ~]# kubectl certificate approve node-csr-4fb--zcCoK1VH6pv0VAGTAcLem23M2aVmNhIcnNOiA4

certificatesigningrequest.certificates.k8s.io/node-csr-4fb--zcCoK1VH6pv0VAGTAcLem23M2aVmNhIcnNOiA4 approved

[root@k8s-master1 ~]# kubectl get csr

NAME AGE SIGNERNAME REQUESTOR REQUESTEDDURATION CONDITION

node-csr-4fb--zcCoK1VH6pv0VAGTAcLem23M2aVmNhIcnNOiA4 3m58s kubernetes.io/kube-apiserver-client-kubelet kubelet-bootstrap Approved,Issued

node-csr-u2keQxwL2xiDPiynMhH8LXidjWJzqapQ1c1Lbk1qwM0 5m4s kubernetes.io/kube-apiserver-client-kubelet kubelet-bootstrap Pending

[root@k8s-master1 ~]# kubectl certificate approve node-csr-u2keQxwL2xiDPiynMhH8LXidjWJzqapQ1c1Lbk1qwM0

certificatesigningrequest.certificates.k8s.io/node-csr-u2keQxwL2xiDPiynMhH8LXidjWJzqapQ1c1Lbk1qwM0 approved

[root@k8s-master1 ~]# kubectl get csr

NAME AGE SIGNERNAME REQUESTOR REQUESTEDDURATION CONDITION

node-csr-4fb--zcCoK1VH6pv0VAGTAcLem23M2aVmNhIcnNOiA4 4m15s kubernetes.io/kube-apiserver-client-kubelet kubelet-bootstrap Approved,Issued

node-csr-u2keQxwL2xiDPiynMhH8LXidjWJzqapQ1c1Lbk1qwM0 5m21s kubernetes.io/kube-apiserver-client-kubelet kubelet-bootstrap Approved,Issued

[root@k8s-master1 ~]# kubectl get node

NAME STATUS ROLES AGE VERSION

k8s-node1 NotReady 26s v1.23.4

k8s-node2 NotReady 7s v1.23.4

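[root@k8s-master1 ~]#

The nodes report NotReady at this point because no CNI network plugin has been installed yet; they become Ready once Calico is deployed in 3.3.8.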
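Approving each CSR by name, as done above, gets tedious as more nodes join. A sketch that picks the Pending requests out of `kubectl get csr` output, assuming CONDITION is the last column as shown above; the helper name is hypothetical. Review the list before approving, since the pipeline approves every pending request. `xargs -r` is a GNU extension that skips running the command when there is no input.

```shell
# pending_csr_names (hypothetical helper): read `kubectl get csr` output on stdin
# and print the NAME of every request whose CONDITION (last column) is Pending.
pending_csr_names() {
  awk 'NR > 1 && $NF == "Pending" { print $1 }'
}

# Usage on a master node:
#   kubectl get csr | pending_csr_names                                   # review first
#   kubectl get csr | pending_csr_names | xargs -r kubectl certificate approve
```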

3.3.7 Deploy kube-proxy

Create the CSR

[root@k8s-master1 ~]# vim kube-proxy-csr.json

[root@k8s-master1 ~]# cat kube-proxy-csr.json

{

"CN": "system:kube-proxy",

"key": {

"algo": "rsa",

"size": 2048

},

"names": [

{

"C": "CN",

"ST": "Beijing",

"L": "Beijing",

"O": "k8s",

"OU": "system"

}

]

}
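The CN `system:kube-proxy` is the important field: the API server uses the certificate CN as the user name, and Kubernetes ships a default ClusterRoleBinding, `system:node-proxier`, that grants the `system:node-proxier` role to exactly that user, so kube-proxy needs no extra RBAC binding. A quick sketch to confirm the CN in the CSR file before signing; the helper name is hypothetical and it assumes the `"CN": "..."` pair is on one line.

```shell
# csr_common_name (hypothetical helper): print the "CN" value from a cfssl CSR JSON file.
csr_common_name() {
  sed -n 's/.*"CN": *"\([^"]*\)".*/\1/p' "$1"
}

# Usage:
#   [ "$(csr_common_name kube-proxy-csr.json)" = "system:kube-proxy" ] || echo "wrong CN" >&2
# After signing, the issued certificate's subject can be checked with:
#   openssl x509 -in kube-proxy.pem -noout -subject
```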

Generate the certificate

[root@k8s-master1 ~]# cfssl gencert -ca=ca.pem -ca-key=ca-key.pem -config=ca-config.json -profile=kubernetes kube-proxy-csr.json | cfssljson -bare kube-proxy

2022/02/21 18:07:42 [INFO] generate received request

2022/02/21 18:07:42 [INFO] received CSR