1、spark读取文件

custid_df = spark.read.format("csv").\

option("sep", ",").\

option("header", True).\

option("encoding", "utf-8").\

schema("custid STRING").\

load("/tmp/YB_1340802061021181116357983338500_20220701195704994.csv")

custid_df.createOrReplaceTempView("custid_rdd_table")

spark.spark_df("""

insert into table dw_bu.dwd_base_groupid_info_outside_d partition(day)

select 'custgroupid001',custid,'2022-08-02' from custid_rdd_table

""")

spark.catalog.dropTempView("custid_rdd_table")

2、获取hive表schemal

from pyspark.sql.types import StructType, StructField, StringType, IntegerType

df = spark.sql("DESCRIBE dw_bu.ods_base_data_d_info_m_d")

table_schamel = ''

table_schamel = ''

for row in df.collect():

if "#" in row.col_name:

break

table_schamel = table_schamel + " " + row.col_name + " " + row.data_type + ' comment "' + row.comment + '",'

table_schamel = table_schamel[:-1]

print(table_schamel)

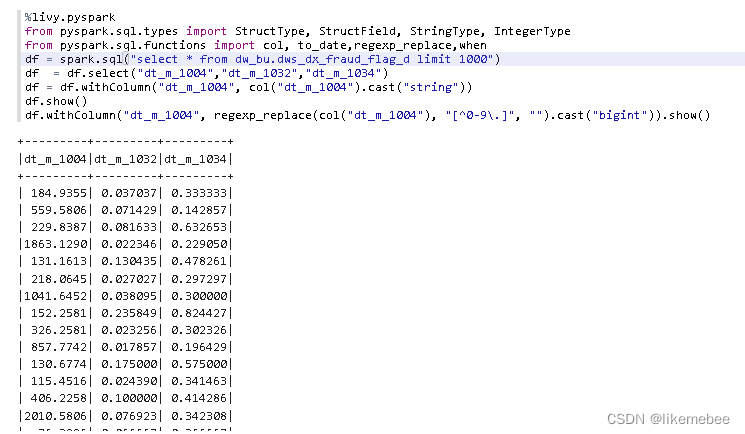

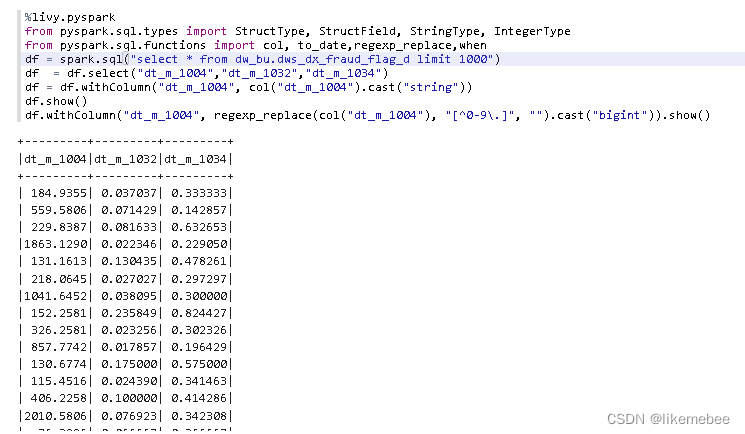

3、转换字段类型

from pyspark.sql.types import StructType, StructField, StringType, IntegerType

from pyspark.sql.functions import col, to_date,regexp_replace,when

df = spark.sql("select * from dw_bu.dws_dx_fraud_flag_d limit 1000")

df = df.select("dt_m_1004","dt_m_1032","dt_m_1034")

df = df.withColumn("dt_m_1004", col("dt_m_1004").cast("string"))

df.show()

df.withColumn("dt_m_1004", regexp_replace(col("dt_m_1004"), "[^0-9\.]", "").cast("bigint")).show()

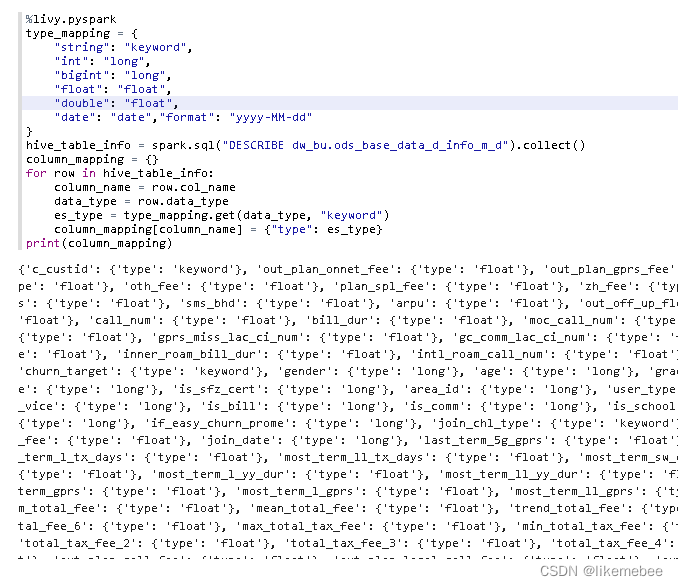

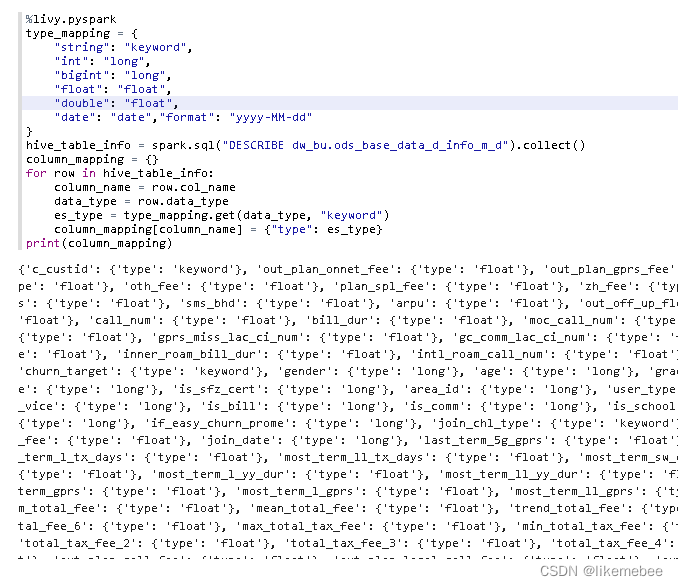

4、获取hive表对应spark索引

type_mapping = {

"string": "keyword",

"int": "long",

"bigint": "long",

"float": "float",

"double": "float",

"date": "date","format": "yyyy-MM-dd"

}

hive_table_info = spark.sql("DESCRIBE dw_bu.ods_base_data_d_info_m_d").collect()

column_mapping = {}

for row in hive_table_info:

column_name = row.col_name

data_type = row.data_type

es_type = type_mapping.get(data_type, "keyword")

column_mapping[column_name] = {"type": es_type}

print(column_mapping)

对某张表的字段加密

from pyspark.sql.functions import sha2

# 读取 Hive 表

table_name = "dw_bu.dwd_data_month_train_info"

df = spark.table(table_name)

# 加密字段并替换原值

df = df.withColumn("cx_app_govissuredcode", sha2(df["cx_app_govissuredcode"], 256))

# 保存修改后的结果回到 Hive 表

df.write.mode("overwrite").saveAsTable("dw_bu.dwd_data_month_train_info_bak")