A Step-by-Step Guide to Building a Local k8s Cluster (5): Installing k8s Monitoring with Prometheus + Grafana

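1. Prerequisite: a K8S cluster

Before working through this article, have a k8s cluster ready. If you do not have one, follow the earlier articles in this series to build it:

A Step-by-Step Guide to Building a Local k8s Cluster (1): Installing the Virtual Machines

A Step-by-Step Guide to Building a Local k8s Cluster (2): Installing Alpine

A Step-by-Step Guide to Building a Local k8s Cluster (3): Installing the k8s Cluster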

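2. Introduction to prometheus-operator and its components

Prometheus is the de facto standard for Kubernetes monitoring, with powerful features and a healthy ecosystem. However, it has no distributed mode, no data import or export, and no API for modifying monitoring targets or alerting rules, so using it usually means writing scripts and code to simplify operations. Prometheus Operator provides simple definitions for monitoring Kubernetes services and deployments and for managing Prometheus instances, which simplifies deploying, managing, and running Prometheus and Alertmanager clusters on Kubernetes.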

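- MetricServer: an aggregator of resource usage data for the Kubernetes cluster. It collects data for consumers inside the cluster, such as kubectl, the HPA, and the scheduler.

- PrometheusOperator: a controller that deploys and manages Prometheus and Alertmanager instances on Kubernetes through custom resources.

- NodeExporter: exposes the key metrics that describe the state of each node.

- KubeStateMetrics: collects data about the resource objects in the Kubernetes cluster, which alerting rules can then be written against.

- Prometheus: a system monitoring and alerting toolkit that stores the monitoring data. It collects metrics from the apiserver, scheduler, controller-manager, and kubelet using a pull model over HTTP.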

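3. prometheus-operator

3.1 prometheus-operator architecture diagram

3.2 Clone the repository

a. Repository: https://github.com/prometheus-operator/kube-prometheus

Choose the tag that matches the version of your k8s cluster. I use main here, the latest version.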

$ git clone https://github.com/prometheus-operator/kube-prometheus

$ cd kube-prometheus/manifests

$ touch replace.sh

$ chmod +x replace.sh
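If main turns out not to match your cluster, one way to check out a release branch instead is sketched below. The function name clone_kube_prometheus and the branch name in the usage line are placeholders of mine, not something this tutorial tested; the compatibility table in the kube-prometheus README lists which branch fits which Kubernetes version.

```shell
# Sketch: clone a single branch or tag of kube-prometheus instead of main.
# clone_kube_prometheus is a hypothetical helper name; $1 is the branch or tag.
clone_kube_prometheus() {
    ref=$1
    # --depth 1 fetches only the latest commit of that ref, which keeps the clone small.
    git clone --depth 1 --branch "$ref" \
        https://github.com/prometheus-operator/kube-prometheus
}
```

For example, `clone_kube_prometheus release-0.13` would fetch the release-0.13 branch; substitute whichever branch the README recommends for your cluster.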

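b. Add the following content to replace.sh. Its main purpose is to point the image addresses at an Alibaba Cloud mirror

#!/bin/sh

set -x

# Remove the NetworkPolicy manifests; otherwise they can block access to the services from outside the cluster

ls | grep networkPolicy | xargs rm -rf

# Replace the image addresses with the Alibaba Cloud mirror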

sed -i "s#quay.io/prometheus/#registry.cn-hangzhou.aliyuncs.com/chenby/#g" *.yaml

sed -i "s#quay.io/brancz/#registry.cn-hangzhou.aliyuncs.com/chenby/#g" *.yaml

sed -i "s#k8s.gcr.io/prometheus-adapter/#registry.cn-hangzhou.aliyuncs.com/chenby/#g" *.yaml

sed -i "s#quay.io/prometheus-operator/#registry.cn-hangzhou.aliyuncs.com/chenby/#g" *.yaml

sed -i "s#k8s.gcr.io/kube-state-metrics/#registry.cn-hangzhou.aliyuncs.com/chenby/#g" *.yaml

# Change the Services to type LoadBalancer and pin their node ports

# grafana-service.yaml

sed -i "/ports:/i\  type: LoadBalancer" grafana-service.yaml

sed -i "/targetPort: http/i\    nodePort: 31100" grafana-service.yaml

# prometheus-service.yaml

sed -i "/ports:/i\  type: LoadBalancer" prometheus-service.yaml

sed -i "/targetPort: web/i\    nodePort: 31200" prometheus-service.yaml

sed -i "/targetPort: reloader-web/i\    nodePort: 31300" prometheus-service.yaml

# alertmanager-service.yaml

sed -i "/ports:/i\  type: LoadBalancer" alertmanager-service.yaml

sed -i "/targetPort: web/i\    nodePort: 31400" alertmanager-service.yaml

sed -i "/targetPort: reloader-web/i\    nodePort: 31500" alertmanager-service.yaml

# Change the time zone

sed -i "s/default_timezone = UTC/default_timezone = Asia\/Shanghai/g" grafana-config.yaml
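To see what the sed insert commands in the script do, here is a small sketch that applies the same two edits to a throwaway file. The manifest below is a simplified stand-in that I wrote for illustration, not the real grafana-service.yaml, and the sketch assumes a sed that supports `-i` and the one-line `i\` form, such as GNU sed. The point is that the inserted lines must carry the same indentation as the lines they sit next to, or the YAML no longer parses.

```shell
# Sketch: apply the same sed inserts as replace.sh to a sample Service manifest.
# The manifest is a simplified stand-in, not the real grafana-service.yaml.
demo_dir=$(mktemp -d)
cat > "$demo_dir/grafana-service.yaml" <<'EOF'
apiVersion: v1
kind: Service
metadata:
  name: grafana
  namespace: monitoring
spec:
  ports:
  - name: http
    port: 3000
    targetPort: http
EOF

# Insert "type" before "ports:", indented 2 spaces so it is a sibling of "ports:".
sed -i "/ports:/i\  type: LoadBalancer" "$demo_dir/grafana-service.yaml"

# Insert "nodePort" before "targetPort:", indented 4 spaces so it belongs to the same port entry.
sed -i "/targetPort: http/i\    nodePort: 31100" "$demo_dir/grafana-service.yaml"

# Show the edited manifest.
cat "$demo_dir/grafana-service.yaml"
```

The printed manifest should show type: LoadBalancer lined up with ports: and nodePort: 31100 lined up with targetPort: http.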

c. Run the script

$ ./replace.sh
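After the script has run, it can be worth checking which images were not rewritten. The sketch below is one way to do that; list_unmirrored_images is a hypothetical helper name of mine, and it only looks at lines containing `image:`.

```shell
# Sketch: list image references that do not point at the mirror registry.
# list_unmirrored_images is a hypothetical helper; $1 is the manifests
# directory and $2 is the mirror prefix used in replace.sh.
list_unmirrored_images() {
    dir=$1
    mirror=$2
    # -h hides file names, -F treats the mirror prefix as a literal string.
    grep -h 'image:' "$dir"/*.yaml \
        | grep -v -F "$mirror" \
        | sed 's/^[[:space:]]*//' \
        | sort -u
}
```

From the manifests directory, `list_unmirrored_images . registry.cn-hangzhou.aliyuncs.com/chenby/` prints every image that will still be pulled from its original registry. Whether that is a problem depends on your network.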

d. Install the CRDs

$ kubectl create -f ./setup

customresourcedefinition.apiextensions.k8s.io/alertmanagerconfigs.monitoring.coreos.com created

customresourcedefinition.apiextensions.k8s.io/alertmanagers.monitoring.coreos.com created

customresourcedefinition.apiextensions.k8s.io/podmonitors.monitoring.coreos.com created

customresourcedefinition.apiextensions.k8s.io/probes.monitoring.coreos.com created

customresourcedefinition.apiextensions.k8s.io/prometheuses.monitoring.coreos.com created

customresourcedefinition.apiextensions.k8s.io/prometheusrules.monitoring.coreos.com created

customresourcedefinition.apiextensions.k8s.io/servicemonitors.monitoring.coreos.com created

customresourcedefinition.apiextensions.k8s.io/thanosrulers.monitoring.coreos.com created

namespace/monitoring created
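The next step creates objects of the custom resource types registered above, so it can fail if the CRDs are not yet established. The kube-prometheus README waits for them with kubectl wait; the sketch below wraps that call. The function name wait_for_crds and the default timeout are my own assumptions.

```shell
# Sketch: block until all CRDs report the Established condition.
# wait_for_crds is a hypothetical helper; $1 is an optional timeout (default 120s).
wait_for_crds() {
    timeout=${1:-120s}
    kubectl wait --for condition=Established --all CustomResourceDefinition \
        --timeout="$timeout"
}
```

Running `wait_for_crds` between the ./setup step and the next step returns once every CRD is ready, or fails after the timeout.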

e. Deploy the components

$ kubectl create -f ./

alertmanager.monitoring.coreos.com/main created

poddisruptionbudget.policy/alertmanager-main created

prometheusrule.monitoring.coreos.com/alertmanager-main-rules created

secret/alertmanager-main created

service/alertmanager-main created

serviceaccount/alertmanager-main created

servicemonitor.monitoring.coreos.com/alertmanager-main created

clusterrole.rbac.authorization.k8s.io/blackbox-exporter created

clusterrolebinding.rbac.authorization.k8s.io/blackbox-exporter created

configmap/blackbox-exporter-configuration created

deployment.apps/blackbox-exporter created

service/blackbox-exporter created

serviceaccount/blackbox-exporter created

servicemonitor.monitoring.coreos.com/blackbox-exporter created

secret/grafana-config created

secret/grafana-datasources created

configmap/grafana-dashboard-alertmanager-overview created

configmap/grafana-dashboard-apiserver created

configmap/grafana-dashboard-cluster-total created

configmap/grafana-dashboard-controller-manager created

configmap/grafana-dashboard-grafana-overview created

configmap/grafana-dashboard-k8s-resources-cluster created

configmap/grafana-dashboard-k8s-resources-namespace created

configmap/grafana-dashboard-k8s-resources-node created

configmap/grafana-dashboard-k8s-resources-pod created

configmap/grafana-dashboard-k8s-resources-workload created

configmap/grafana-dashboard-k8s-resources-workloads-namespace created

configmap/grafana-dashboard-kubelet created

configmap/grafana-dashboard-namespace-by-pod created

configmap/grafana-dashboard-namespace-by-workload created

configmap/grafana-dashboard-node-cluster-rsrc-use created

configmap/grafana-dashboard-node-rsrc-use created

configmap/grafana-dashboard-nodes-darwin created

configmap/grafana-dashboard-nodes created

configmap/grafana-dashboard-persistentvolumesusage created

configmap/grafana-dashboard-pod-total created

configmap/grafana-dashboard-prometheus-remote-write created

configmap/grafana-dashboard-prometheus created

configmap/grafana-dashboard-proxy created

configmap/grafana-dashboard-scheduler created

configmap/grafana-dashboard-workload-total created

configmap/grafana-dashboards created

deployment.apps/grafana created

prometheusrule.monitoring.coreos.com/grafana-rules created

service/grafana created

serviceaccount/grafana created

servicemonitor.monitoring.coreos.com/grafana created

prometheusrule.monitoring.coreos.com/kube-prometheus-rules created

clusterrole.rbac.authorization.k8s.io/kube-state-metrics created

clusterrolebinding.rbac.authorization.k8s.io/kube-state-metrics created

deployment.apps/kube-state-metrics created

prometheusrule.monitoring.coreos.com/kube-state-metrics-rules created

service/kube-state-metrics created

serviceaccount/kube-state-metrics created

servicemonitor.monitoring.coreos.com/kube-state-metrics created

prometheusrule.monitoring.coreos.com/kubernetes-monitoring-rules created

servicemonitor.monitoring.coreos.com/kube-apiserver created

servicemonitor.monitoring.coreos.com/coredns created

servicemonitor.monitoring.coreos.com/kube-controller-manager created

servicemonitor.monitoring.coreos.com/kube-scheduler created

servicemonitor.monitoring.coreos.com/kubelet created

clusterrole.rbac.authorization.k8s.io/node-exporter created

clusterrolebinding.rbac.authorization.k8s.io/node-exporter created

daemonset.apps/node-exporter created

prometheusrule.monitoring.coreos.com/node-exporter-rules created

service/node-exporter created

serviceaccount/node-exporter created

servicemonitor.monitoring.coreos.com/node-exporter created

clusterrole.rbac.authorization.k8s.io/prometheus-k8s created

clusterrolebinding.rbac.authorization.k8s.io/prometheus-k8s created

poddisruptionbudget.policy/prometheus-k8s created

prometheus.monitoring.coreos.com/k8s created

prometheusrule.monitoring.coreos.com/prometheus-k8s-prometheus-rules created

rolebinding.rbac.authorization.k8s.io/prometheus-k8s-config created

rolebinding.rbac.authorization.k8s.io/prometheus-k8s created

rolebinding.rbac.authorization.k8s.io/prometheus-k8s created

rolebinding.rbac.authorization.k8s.io/prometheus-k8s created

role.rbac.authorization.k8s.io/prometheus-k8s-config created

role.rbac.authorization.k8s.io/prometheus-k8s created

role.rbac.authorization.k8s.io/prometheus-k8s created

role.rbac.authorization.k8s.io/prometheus-k8s created

service/prometheus-k8s created

serviceaccount/prometheus-k8s created

servicemonitor.monitoring.coreos.com/prometheus-k8s created

apiservice.apiregistration.k8s.io/v1beta1.metrics.k8s.io created

clusterrole.rbac.authorization.k8s.io/prometheus-adapter created

clusterrole.rbac.authorization.k8s.io/system:aggregated-metrics-reader created

clusterrolebinding.rbac.authorization.k8s.io/prometheus-adapter created

clusterrolebinding.rbac.authorization.k8s.io/resource-metrics:system:auth-delegator created

clusterrole.rbac.authorization.k8s.io/resource-metrics-server-resources created

configmap/adapter-config created

deployment.apps/prometheus-adapter created

poddisruptionbudget.policy/prometheus-adapter created

rolebinding.rbac.authorization.k8s.io/resource-metrics-auth-reader created

service/prometheus-adapter created

serviceaccount/prometheus-adapter created

servicemonitor.monitoring.coreos.com/prometheus-adapter created

clusterrole.rbac.authorization.k8s.io/prometheus-operator created

clusterrolebinding.rbac.authorization.k8s.io/prometheus-operator created

deployment.apps/prometheus-operator created

prometheusrule.monitoring.coreos.com/prometheus-operator-rules created

service/prometheus-operator created

serviceaccount/prometheus-operator created

servicemonitor.monitoring.coreos.com/prometheus-operator created

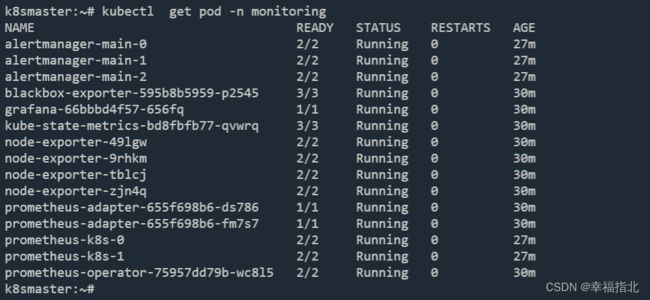

4. Verify the deployment

$ kubectl get pod -n monitoring
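All pods should eventually reach the Running status. With this many pods it is easy to miss one that is stuck, so the sketch below filters the same output down to the pods that are not running yet. not_running_pods is a hypothetical helper name, and it assumes the default column layout of kubectl get pod (NAME, READY, STATUS, ...).

```shell
# Sketch: print the pods in a namespace whose STATUS is not Running or Completed.
# not_running_pods is a hypothetical helper; $1 is the namespace (default: monitoring).
not_running_pods() {
    namespace=${1:-monitoring}
    # --no-headers drops the header row; column 3 of the default output is STATUS.
    kubectl get pod -n "$namespace" --no-headers \
        | awk '$3 != "Running" && $3 != "Completed" { print $1 " (" $3 ")" }'
}
```

An empty result from `not_running_pods` means nothing is stuck. A status such as ImagePullBackOff usually points at an image address that still needs replacing.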

5. Access the Grafana UI

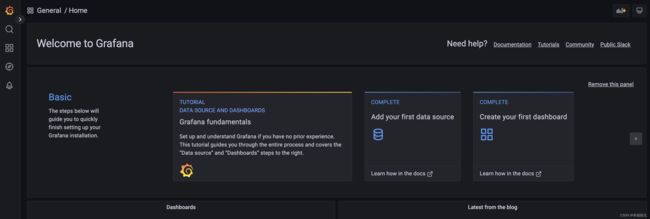

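5.1 Access Grafana

Open http://192.168.56.3:31100 in a browser, as shown below:

The default Grafana username/password is admin/admin. After the first login you will be prompted to change the password; just change it.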
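If the page does not load, it can help to check from a terminal whether Grafana is answering at all. The sketch below queries Grafana's /api/health endpoint with curl. grafana_is_healthy is a hypothetical helper name, and the URL in the usage line is the node address used above, so adjust it to your own node IP.

```shell
# Sketch: succeed if Grafana's health endpoint reports a working database.
# grafana_is_healthy is a hypothetical helper; $1 is the base URL of Grafana.
grafana_is_healthy() {
    base_url=$1
    # -f: fail on HTTP errors, -sS: quiet but still print errors, --max-time: give up after 5 s.
    curl -fsS --max-time 5 "$base_url/api/health" \
        | grep -q '"database"[[:space:]]*:[[:space:]]*"ok"'
}
```

For example: `grafana_is_healthy http://192.168.56.3:31100 && echo "Grafana is up"`.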

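5.2 Install dashboards

a. Official dashboards: https://grafana.com/grafana/dashboards

b. Install a host monitoring dashboard

On the page above, type linux into the search box, as shown below

c. Click a result to open it. You will get a link like this: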

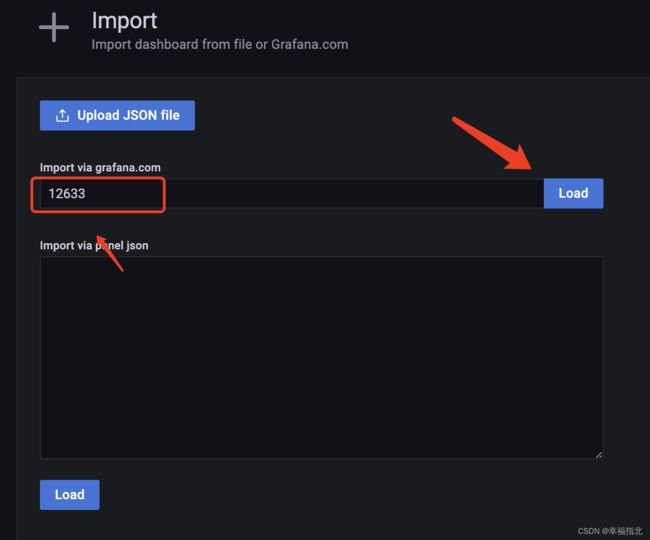

https://grafana.com/grafana/dashboards/12633

Copy the dashboard ID at the end of the link: 12633

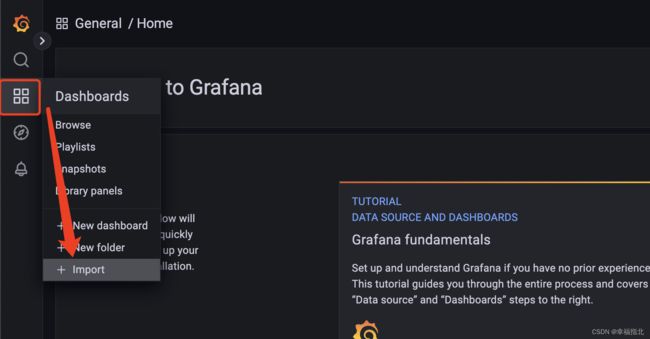

d. Then go back to our Grafana UI and proceed as follows

e. Enter the ID and click Load, as shown below:

f. Select the prometheus data source and click Import

5.3 Other recommended dashboards

1. https://grafana.com/grafana/dashboards/1860 Monitoring dashboard for all nodes

2. https://grafana.com/grafana/dashboards/15514 Cilium dashboard

3. https://grafana.com/grafana/dashboards/15661 Dashboard for each K8S node

6. References:

https://blog.csdn.net/qq_33921750/article/details/124395645

Conclusion

That concludes this tutorial. Thanks for reading!

If you run into any problems, feel free to leave a comment and discuss.