An H265 Web Player Built on metaRTC Embedded WebRTC (My Story with metaRTC): Final Part

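The 100 ms latency target has now been reached, and I am not going to push further for the moment. Along the way I often felt out of my depth, so I would rather build up more experience before setting out again.

My connection with metaRTC began with the goal of playing H265 in a web page. After searching everywhere, I found that metaRTC was the only project that could send H265-encoded video over WebRTC. I believe many others came with the same motivation, which is how we all ended up together. It is also how I got to know a group of experts: many senior audio/video developers hang out in the metaRTC group. I had opened a window for myself and saw a much wider world.

For a long time after discovering metaRTC I did no real research on it. metaRTC was updated quickly, and much of it was built on ffmpeg, which I did not know well. In the meantime I kept my head down working on my own pion-based server software. The open-source media server m7s (langhuihui/monibuca: Monibuca is a Modularized, Extensible framework for building Streaming Server (github.com)) was a great help to me. During that period I also built my own signaling system on kvs and a flutterwebrtc client, all of which laid the groundwork for understanding metaRTC in depth later.

In the group I only spoke up occasionally, mostly lurking and listening as the experts explained one new concept after another, and I learned a great deal. Eventually I realized I could not stay on the sidelines. Yang was just starting on the stable metaRTC 5.0 release, so I began working with the source code. I ported the signaling system I had built earlier on kvs, completed datachannel transport and multi-peer management, and, for an IPC application, built hardware-encoded audio/video transmission on an RV1126 embedded IPC (see metaRTC性能测试_superxxd的博客-CSDN博客). I also saw the group owner's formidable development ability first-hand and was greatly encouraged. But my long-standing dream, H265 playback in the browser, was still unfulfilled.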

On the evening of August 12 I posted in the group that I wanted to build an H265 web player based on metaRTC. Below is the lively chat from that evening.

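Judging from what I said at the time, I was clearly clueless: I did not even know that wasm cannot use hardware decoding, I had only heard of it. But because I believe the process of building something is worth more than the result, I set off without hesitation. Following my usual routine, I searched Baidu and GitHub and found that others had already done a great deal of work, so I built on it. I started testing directly in other people's projects to see how they worked and what the results looked like, then step by step implemented my own ideas for file transfer, decoding and playback, testing, and performance tuning. Along the way there were corrupted pictures, failed decodes, and blank output of every kind, and I was tempted to give up. But the process did prove more valuable than the result: it turns textbook knowledge into your own understanding and folds it into what you already know. I now finally have a player that works better than I expected. The initial source code is open at https://github.com/xiangxud/webrtc_H265player; stars, forks, issues, and PRs are welcome.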

I first validated the idea in my own Go project: I wrote the functions that send H265-encoded video over a datachannel, together with the frame-type parsing.

const (

//H265

// https://zhuanlan.zhihu.com/p/458497037

NALU_H265_VPS = 0x4001

NALU_H265_SPS = 0x4201

NALU_H265_PPS = 0x4401

NALU_H265_SEI = 0x4e01

NALU_H265_IFRAME = 0x2601

NALU_H265_PFRAME = 0x0201

HEVC_NAL_TRAIL_N = 0

HEVC_NAL_TRAIL_R = 1

HEVC_NAL_TSA_N = 2

HEVC_NAL_TSA_R = 3

HEVC_NAL_STSA_N = 4

HEVC_NAL_STSA_R = 5

HEVC_NAL_BLA_W_LP = 16

HEVC_NAL_BLA_W_RADL = 17

HEVC_NAL_BLA_N_LP = 18

HEVC_NAL_IDR_W_RADL = 19

HEVC_NAL_IDR_N_LP = 20

HEVC_NAL_CRA_NUT = 21

HEVC_NAL_RADL_N = 6

HEVC_NAL_RADL_R = 7

HEVC_NAL_RASL_N = 8

HEVC_NAL_RASL_R = 9

)

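// int type = (first byte of the NALU header & 0x7E) >> 1

// hvcC extradata is a header-description format. In the Annex-B format, VPS, SPS and PPS are treated like ordinary NAL units and separated by start codes, which is very simple. The Annex-B "extradata":

// start code+VPS+start code+SPS+start code+PPS

// For details of the formats, see the following blog post

// Author: 一川烟草i蓑衣

// Link: https://www.jianshu.com/p/909071e8f8c6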
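To make the relationship between the 16-bit constants and the `(first byte & 0x7E) >> 1` formula concrete, here is a minimal sketch. The helper name `nalType` is made up for this illustration and is not part of the project. It shows that each constant is simply the two NAL header bytes read big-endian, and that `NALU_H265_IFRAME` (0x2601) and `NALU_H265_PFRAME` (0x0201) correspond to `HEVC_NAL_IDR_W_RADL` and `HEVC_NAL_TRAIL_R` only, so a stream that uses other slice types would presumably need extra cases.

```go
package main

import "fmt"

// nalType extracts nal_unit_type from the first byte of the two-byte
// H.265 NAL unit header: forbidden_zero_bit (1 bit), nal_unit_type (6 bits),
// then the top bit of nuh_layer_id.
// Hypothetical helper, named for this example only.
func nalType(firstHeaderByte byte) uint8 {
	return (firstHeaderByte & 0x7E) >> 1
}

func main() {
	// The 16-bit values are the two header bytes read big-endian,
	// so the high byte is the one that carries the type.
	headers := []uint16{0x4001, 0x4201, 0x4401, 0x4e01, 0x2601, 0x0201}
	for _, h := range headers {
		fmt.Printf("0x%04x -> nal_unit_type %d\n", h, nalType(byte(h>>8)))
	}
}
```

The low byte 0x01 in every constant means layer 0 with `nuh_temporal_id_plus1` equal to 1, which is why matching on the full 16-bit value only works for single-layer streams without temporal sub-layers.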

func GetFrameTypeName(frametype uint16) (string, error) {

switch frametype {

case NALU_H265_VPS:

return "H265_FRAME_VPS", nil

case NALU_H265_SPS:

return "H265_FRAME_SPS", nil

case NALU_H265_PPS:

return "H265_FRAME_PPS", nil

case NALU_H265_SEI:

return "H265_FRAME_SEI", nil

case NALU_H265_IFRAME:

return "H265_FRAME_I", nil

case NALU_H265_PFRAME:

return "H265_FRAME_P", nil

default:

return "", errors.New("frametype unsupport")

}

}

func FindStartCode2(Buf []byte) bool {

// reports whether Buf begins with the 3-byte start code 0x000001

return len(Buf) >= 3 && Buf[0] == 0 && Buf[1] == 0 && Buf[2] == 1

}

func FindStartCode3(Buf []byte) bool {

// reports whether Buf begins with the 4-byte start code 0x00000001

return len(Buf) >= 4 && Buf[0] == 0 && Buf[1] == 0 && Buf[2] == 0 && Buf[3] == 1

}

func GetFrameType(pdata []byte) (uint8, uint16, error) {

var frametype uint16

destcount := 0

// naluendptr := 0

if FindStartCode2(pdata) {

destcount = 3

} else if FindStartCode3(pdata) {

destcount = 4

} else {

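return 0, 0, errors.New("start code not found")

}

// the two-byte NAL unit header must follow the start code

if len(pdata) < destcount+2 {

return 0, 0, errors.New("NAL unit header is truncated")

}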

temptype := (pdata[destcount] & 0x7E) >> 1

bytesBuffer := bytes.NewBuffer(pdata[destcount : destcount+2])

binary.Read(bytesBuffer, binary.BigEndian, &frametype)

fmt.Printf("temptype :%02x type is 0x%04x\n", temptype, frametype)

return temptype, frametype, nil

}
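`GetFrameType` only inspects the NAL unit at the very start of a buffer. When a buffer holds several units back to back (for example VPS, SPS and PPS ahead of a key frame), it can help to split it first. The following is a minimal sketch of such a splitter; `splitAnnexB` is a name invented here, and it assumes plain Annex-B input with 3-byte or 4-byte start codes.

```go
package main

import "fmt"

// splitAnnexB returns the NAL units found in buf with their start codes removed.
// Both 3-byte (00 00 01) and 4-byte (00 00 00 01) start codes are accepted.
// Hypothetical helper for illustration; not taken from the project.
func splitAnnexB(buf []byte) [][]byte {
	var nalus [][]byte
	start := -1 // index of the first byte of the current NAL unit, -1 before the first start code
	i := 0
	for i+3 <= len(buf) {
		if buf[i] == 0 && buf[i+1] == 0 && buf[i+2] == 1 {
			if start >= 0 {
				end := i
				// Trailing zeros belong to the next (4-byte) start code, not to the NAL unit.
				for end > start && buf[end-1] == 0 {
					end--
				}
				if end > start {
					nalus = append(nalus, buf[start:end])
				}
			}
			start = i + 3
			i += 3
			continue
		}
		i++
	}
	if start >= 0 && start < len(buf) {
		nalus = append(nalus, buf[start:])
	}
	return nalus
}

func main() {
	stream := []byte{
		0, 0, 0, 1, 0x40, 0x01, 0x0c, // VPS behind a 4-byte start code
		0, 0, 0, 1, 0x42, 0x01, 0x01, // SPS behind a 4-byte start code
		0, 0, 1, 0x44, 0x01, 0xc0, // PPS behind a 3-byte start code
	}
	for _, n := range splitAnnexB(stream) {
		fmt.Printf("type=%d len=%d\n", (n[0]&0x7E)>>1, len(n))
	}
}
```

The returned slices share memory with the input buffer, so they should be copied if the buffer is going to be reused.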

func H265DataChannelHandler(dc *webrtc.DataChannel, mediatype string) {

fmt.Printf("H265DataChannelHandler\n")

dc.OnOpen(func() {

nInSendH265Track++

fmt.Printf("dc.OnOpen %d\n", nInSendH265Track)

sendH265ImportFrame(dc, utils.NALU_H265_SEI)

sendH265ImportFrame(dc, utils.NALU_H265_VPS)

sendH265ImportFrame(dc, utils.NALU_H265_SPS)

sendH265ImportFrame(dc, utils.NALU_H265_PPS)

sendH265ImportFrame(dc, utils.NALU_H265_IFRAME)

if nInSendH265Track <= 1 {

go func() {

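fmt.Println("read stdin for h265")

fmt.Println("start thread for h265 ok")

// signal.Notify does not block when delivering, so the channel needs a buffer

sig := make(chan os.Signal, 1)

// SIGKILL cannot be caught, so it is not listed here

signal.Notify(sig, syscall.SIGINT, syscall.SIGTERM, syscall.SIGABRT, syscall.SIGQUIT)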

var file *os.File

var err error

if !USE_FILE_UPLOAD {

rk1126.ResumeH264()

} else {

fileName := "./h265_high.mp4"

file, err = os.Open(fileName)

if err != nil {

fmt.Println("Open the file failed,err:", err)

os.Exit(-1)

}

// defer only after the error check, once file is known to be valid

defer file.Close()

fmt.Println("open file ", fileName, " ok")

}

// // h264FrameDuration=

timestart := time.Now().UnixMilli()

ticker := time.NewTicker(h264FrameDuration)

defer ticker.Stop()

for {

select {

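case <-sig:

rk1126.PauseH264()

fmt.Println("h265 thread exit")

nInSendH265Track = 0

// a bare break here would only leave the select, not the for loop

return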

case <-sysvideochan:

rk1126.PauseH264()

fmt.Println("sysvideochan exit")

nInSendH265Track = 0

return

case <-ticker.C:

// default:

if nInSendH265Track <= 0 {

rk1126.PauseH264()

fmt.Println("no dc channel exit")

return

}

if USE_FILE_UPLOAD {

var arr [256]byte

var buf []byte

bufflen := 0

for {

// var arr [MAXPACKETSIZE]byte

n, err := file.Read(arr[:])

if err == io.EOF {

fmt.Println("file read finished")

file.Seek(0, 0)

continue

//break

}

if err != nil {

fmt.Println("file read failed", err)

os.Exit(-1)

}

buf = append(buf, arr[:n]...)

bufflen += n

if bufflen >= MAXPACKETSIZE {

break

}

}

h265 := &rk1126.Mediadata{}

h265.Data = buf

h265.Len = bufflen

timestart := time.Now().UnixMilli()

for _, vdc := range H265dcmap {

SendH265FrameData(vdc, h265, timestart)

}

time.Sleep(h264FrameDuration)

} else {

delayms := time.Now().UnixMilli() - timestart

fmt.Println("send H265 delay ", delayms)

// fmt.Println("GetH264Data start nInSendH264Track->", nInSendH264Track)

timestart = time.Now().UnixMilli()

h265 := rk1126.GetH264Data()

// data := h264.Data[0 : h264.Len-1]

if h265 != nil {

for _, vdc := range H265dcmap {

// fmt.Println("\r\nSendH265FrameData ", vdc)

SendH265FrameData(vdc, h265, h265.Timestamp.Milliseconds())

}

rk1126.VideoDone(h265)

// fmt.Println("\r\nh264 send ok")

} else {

fmt.Println("h265 data is nil")

}

}

}

}

fmt.Println("h265 thread exit")

nInSendH265Track = 0

}()

}

})

dc.OnMessage(func(msg webrtc.DataChannelMessage) {

msg_ := string(msg.Data)

fmt.Println(msg_)

})

dc.OnClose(func() {

fmt.Println("hd265 dc close")

nInSendH265Track--

for k, v := range H265dcmap {

if v == dc {

delete(H265dcmap, k)

}

}

if mediatype == "audio" {

nInSendAudioTrack--

if nInSendAudioTrack <= 0 {

// once every user has left, tell the capture routine to exit

fmt.Println("sysaudiochan exit")

if sysaudiochan != nil {

sysaudiochan <- struct{}{}

}

}

}

// syschan <- struct{}{}

})

}
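In the handler above, `nInSendH265Track` and `H265dcmap` are touched both from the data channel callbacks and from the sending goroutine. One possible way to keep that bookkeeping safe is to put it behind a mutex. The sketch below is only an illustration under that assumption: `registry`, `sender` and `fakeChannel` are names invented here, and `sender` is modeled on the `Send(data []byte) error` method of pion's `*webrtc.DataChannel`.

```go
package main

import (
	"fmt"
	"sync"
)

// sender is the small part of a data channel the broadcaster needs.
// pion's *webrtc.DataChannel has a Send method with this shape.
type sender interface {
	Send(data []byte) error
}

// registry is a hypothetical, mutex-protected replacement for a bare map plus counter.
type registry struct {
	mu    sync.Mutex
	peers map[string]sender
}

func newRegistry() *registry {
	return &registry{peers: make(map[string]sender)}
}

// add registers a peer and returns how many peers are now connected.
func (r *registry) add(id string, s sender) int {
	r.mu.Lock()
	defer r.mu.Unlock()
	r.peers[id] = s
	return len(r.peers)
}

// remove drops a peer and returns how many peers remain.
func (r *registry) remove(id string) int {
	r.mu.Lock()
	defer r.mu.Unlock()
	delete(r.peers, id)
	return len(r.peers)
}

// broadcast sends data to every peer and returns the number of successful sends.
// The peer list is copied first so that no lock is held during network I/O.
func (r *registry) broadcast(data []byte) int {
	r.mu.Lock()
	targets := make([]sender, 0, len(r.peers))
	for _, s := range r.peers {
		targets = append(targets, s)
	}
	r.mu.Unlock()

	sent := 0
	for _, s := range targets {
		if err := s.Send(data); err == nil {
			sent++
		}
	}
	return sent
}

// fakeChannel records what it was asked to send; it stands in for a real data channel.
type fakeChannel struct{ got [][]byte }

func (f *fakeChannel) Send(data []byte) error {
	f.got = append(f.got, data)
	return nil
}

func main() {
	r := newRegistry()
	r.add("a", &fakeChannel{})
	r.add("b", &fakeChannel{})
	fmt.Println("sent to", r.broadcast([]byte{1, 2, 3}), "peers")
	fmt.Println("peers left:", r.remove("a"))
}
```

With something like this, the value returned by `add` and `remove` could play the role of the peer counter, for example to decide when capture should start or pause.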

var vpsFrame rk1126.Mediadata

var spsFrame rk1126.Mediadata

var ppsFrame rk1126.Mediadata

var seiFrame rk1126.Mediadata

var keyFrame rk1126.Mediadata

func SaveFrameKeyData(pdata *rk1126.Mediadata, frametype uint16) {

switch frametype {

case utils.NALU_H265_VPS:

vpsFrame = *pdata

case utils.NALU_H265_SPS:

spsFrame = *pdata

case utils.NALU_H265_PPS:

ppsFrame = *pdata

case utils.NALU_H265_SEI:

seiFrame = *pdata

case utils.NALU_H265_IFRAME:

keyFrame = *pdata

default:

}

}

// send the cached important units (parameter sets, SEI, key frame)

func sendH265ImportFrame(dc *webrtc.DataChannel, frametype uint16) {

start := time.Now().UnixMilli()

switch frametype {

case utils.NALU_H265_VPS:

SendH265FrameData(dc, &vpsFrame, start)

case utils.NALU_H265_SPS:

SendH265FrameData(dc, &spsFrame, start)

case utils.NALU_H265_PPS:

SendH265FrameData(dc, &ppsFrame, start)

case utils.NALU_H265_SEI:

SendH265FrameData(dc, &seiFrame, start)

case utils.NALU_H265_IFRAME:

SendH265FrameData(dc, &keyFrame, start)

default:

}

}
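The cache above stores `*pdata` by value, which copies the struct but still shares the underlying byte slice with the capture buffer. If that buffer is recycled (for instance after `rk1126.VideoDone`), the cached unit could change underneath. A minimal sketch of a cache that keeps private copies and hands them out in decode order for a newly connected viewer might look like the following. `paramCache` and its methods are hypothetical names, and the numeric types are the `nal_unit_type` values from the constant table.

```go
package main

import "fmt"

const (
	nalIDRWRADL = 19 // HEVC_NAL_IDR_W_RADL
	nalVPS      = 32
	nalSPS      = 33
	nalPPS      = 34
)

// paramCache keeps private copies of the latest VPS, SPS, PPS and key frame.
// Hypothetical type for illustration; not the project's implementation.
type paramCache struct {
	vps, sps, pps, key []byte
}

// store copies nalu into the matching slot; other NAL types are ignored.
func (c *paramCache) store(nalType uint8, nalu []byte) {
	cp := make([]byte, len(nalu))
	copy(cp, nalu) // private copy, independent of the caller's buffer
	switch nalType {
	case nalVPS:
		c.vps = cp
	case nalSPS:
		c.sps = cp
	case nalPPS:
		c.pps = cp
	case nalIDRWRADL:
		c.key = cp
	}
}

// bootstrap returns the cached units in the order a decoder needs them
// (VPS, SPS, PPS, key frame), or nil if any of them has not been seen yet.
func (c *paramCache) bootstrap() [][]byte {
	if c.vps == nil || c.sps == nil || c.pps == nil || c.key == nil {
		return nil
	}
	return [][]byte{c.vps, c.sps, c.pps, c.key}
}

func main() {
	var c paramCache
	buf := []byte{0x40, 0x01, 0x0c}
	c.store(nalVPS, buf)
	buf[0] = 0xff // simulate the encoder reusing its buffer
	fmt.Printf("cached VPS still starts with 0x%02x\n", c.vps[0])

	c.store(nalSPS, []byte{0x42, 0x01})
	c.store(nalPPS, []byte{0x44, 0x01})
	c.store(nalIDRWRADL, []byte{0x26, 0x01})
	fmt.Println("units ready for a new viewer:", len(c.bootstrap()))
}
```

Returning nil until all four units are present avoids sending a new viewer a key frame that it cannot decode yet.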

func SendH265FrameData(dc *webrtc.DataChannel, h265frame *rk1126.Mediadata, timestamp int64) {

// fmt.Println("start SendH265FrameData ", dc)

if h265frame.Len > 0 && dc != nil && dc.ReadyState() == webrtc.DataChannelStateOpen {

var frametypestr string

data := h265frame.Data[0:h265frame.Len]

// data := base64.StdEncoding.EncodeToString(buf)

glength := len(data)

count := glength / MAXPACKETSIZE

rem := glength % MAXPACKETSIZE

temptype, frametype, err := utils.GetFrameType(data)

if err == nil {

SaveFrameKeyData(h265frame, frametype)

frametypestr, err = utils.GetFrameTypeName(frametype)

}

// string.split(",")

// string.split(":")

startstr := "h265 start ,FrameType:" + frametypestr + ",nalutype:" + strconv.Itoa(int(temptype)) + ",pts:" + strconv.FormatInt(timestamp, 10) + ",Packetslen:" + strconv.Itoa(glength) + ",packets:" + strconv.Itoa(count) + ",rem:" + strconv.Itoa(rem)

// startstr := fmt.Sprintf("h265 start ,FrameType:%s,pts:%lld,Packetslen:%d,packets:%d,rem:%d", frametypestr, h265frame.Timestamp.Milliseconds(), glength, count, rem)

dc.SendText(startstr)

fmt.Println("SendH265FrameData start ", startstr)

i := 0

for i = 0; i < count; i++ {

lenth := i * MAXPACKETSIZE

// dc.SendText("jpeg ID:" + strconv.Itoa(i))

dc.Send(data[lenth : lenth+MAXPACKETSIZE])

//fmt.Println("send len ", lenth, " :", data[lenth:lenth+MAXPACKETSIZE])

}

if rem != 0 {

// dc.SendText("jpeg ID:" + strconv.Itoa(i))

dc.Send(data[glength-rem : glength])

//fmt.Println("send len ", rem, " :", data[glength-rem:glength])

}

dc.SendText("h265 end")

//fmt.Println("send h265 end ")

}

}

The H265 frame stream is received and processed in JavaScript:

//webrtc datachannel send h265 stream

const START_STR="h265 start";

const FRAME_TYPE_STR="FrameType";

const PACKET_LEN_STR="Packetslen";

const PACKET_COUNT_STR="packets";

const PACKET_PTS="pts";

const PACKET_REM_STR="rem";

const KEY_FRAME_TYPE="H265_FRAME_I"

var frameType="";

var isKeyFrame=false;

var pts=0;

var h265DC;

var bWorking=false;

var h265dataFrame=[];

var h265data;

var dataIndex=0;

var h265datalen=0;

var packet=0;

var expectLength = 4;

var bFindFirstKeyFrame=false;

// startstr := "h265 start ,FrameType:" + frametypestr + ",Packetslen:" + strconv.Itoa(glength) + ",packets:" + strconv.Itoa(count) + ",rem:" + strconv.Itoa(rem)

function isString(str){

return (typeof str=='string')&&str.constructor==String;

}

function hexToStr(hex,encoding) {

var trimedStr = hex.trim();

var rawStr = trimedStr.substr(0, 2).toLowerCase() === "0x" ? trimedStr.substr(2) : trimedStr;

var len = rawStr.length;

if (len % 2 !== 0) {

alert("Illegal Format ASCII Code!");

return "";

}

var curCharCode;

var resultStr = [];

for (var i = 0; i < len; i = i + 2) {

curCharCode = parseInt(rawStr.substr(i, 2), 16);

resultStr.push(curCharCode);

}

// defaults to utf-8 when encoding is omitted

var bytesView = new Uint8Array(resultStr);

var str = new TextDecoder(encoding).decode(bytesView);

return str;

}

function deepCopy(arr) {

const newArr = []

for(let i in arr) {

console.log(arr[i])

if (typeof arr[i] === 'object') {

newArr[i] = deepCopy(arr[i])

} else {

newArr[i] = arr[i]

}

}

console.log(newArr)

return newArr

}

function dump_hex(h265data,h265datalen){

// console.log(h265data.toString());

var str="0x"

for (var i = 0; i < h265datalen; i ++ ) {

var byte =h265data.slice(i,i+1)[0];

str+=byte.toString(16)

str+=" "

// console.log((h265datalen+i).toString(16)+" ");

}

console.log(str);

}

function appendBuffer (buffer1, buffer2) {

var tmp = new Uint8Array(buffer1.byteLength + buffer2.byteLength);

tmp.set(new Uint8Array(buffer1), 0);

tmp.set(new Uint8Array(buffer2), buffer1.byteLength);

return tmp.buffer;

};

function reportStream(size){

}

function stopH265(){

if(h265DC){

h265DC.close();

}

}

var receivet1=new Date().getTime();

var bRecH265=false;

function initH265DC(pc,player) {

console.log("initH265DC",Date());

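h265DC = pc.createDataChannel("h265");

// make binary messages arrive as ArrayBuffer, which the byteLength/appendBuffer code below expects

h265DC.binaryType = "arraybuffer";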

// var ctx = canvas.getContext("2d");

h265DC.onmessage = function (event) {

// console.log(bRecH265,":",event.data)

if(bRecH265){

if(isString(event.data)) {

console.log("receive: "+event.data)

if(event.data.indexOf("h265 end")!=-1){

bRecH265=false;

// console.log("frame ok",":",event.data," len:"+h265datalen)

if(h265datalen>0){

// const framepacket=new Uint8Array(h265data)

const t2 = new Date().getTime()-receivet1;

if(frameType==="H265_FRAME_VPS"||frameType==="H265_FRAME_SPS"||frameType==="H265_FRAME_PPS"||frameType==="H265_FRAME_SEI"||frameType==="H265_FRAME_P")

console.log("receive time:"+t2+" len:"+h265datalen);

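if(frameType==="H265_FRAME_P"&&!bFindFirstKeyFrame){

// drop P frames until the first key frame, and clear the buffer so the next frame starts clean

h265data=null;

h265datalen=0;

packet=0;

return

}

if(frameType===KEY_FRAME_TYPE){

bFindFirstKeyFrame=true;

}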

// h265dataFrame.push(new Uint8Array(h265data))

var dataFrame=new Uint8Array(h265data)//deepCopy(h265data)//h265dataFrame.shift()

var data={

pts: pts,

size: h265datalen,

iskeyframe: isKeyFrame,

packet: dataFrame//

// new Uint8Array(h265data)//h265data//new Uint8Array(h265data)

};

var req = {

t: ksendPlayerVideoFrameReq,

l: h265datalen,

d: data

};

player.postMessage(req,[req.d.packet.buffer]);

h265data=null;

h265datalen=0;

packet=0;

receivet1=new Date().getTime();

}

return;

}

}else{

if (h265data != null) {

h265data=appendBuffer(h265data,event.data);

} else if (event.data.byteLength < expectLength) {

h265data = event.data.slice(0);

} else {

h265data=event.data;

}

h265datalen+=event.data.byteLength;

packet++;

console.log("packet: "+packet+": t len"+h265datalen)

return;

}

}

if(isString(event.data)) {

let startstring = event.data

// console.log("reveive: "+startstring)

if(startstring.indexOf("h265 start")!=-1){

console.log(event.data );

const startarray=startstring.split(",");

// startstr := "h265 start ,FrameType:" + frametypestr + ",Packetslen:" + strconv.Itoa(glength) + ",packets:" + strconv.Itoa(count) + ",rem:" + strconv.Itoa(rem)

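for(let i=0;i …

Next came the port to metaRTC. Because the signaling and capture parts were already done (see metaRTC p2p自建信令系统_superxxd的博客-CSDN博客), this was fairly easy. I had also implemented the datachannel interaction earlier (see metaRTC datachannel 实现 reply_superxxd的博客-CSDN博客). Since Go and C share the same lineage, the port was not difficult.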
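The JavaScript listing breaks off at the point where the receiver starts to parse the `h265 start` header. As a rough illustration of that step, written in Go to match the sender code rather than in JavaScript, the header line can be split on commas and colons as below. The `frameHeader` struct and `parseStartHeader` function are assumptions made for this sketch; only the field names (`FrameType`, `nalutype`, `pts`, `Packetslen`, `packets`, `rem`) come from the sender.

```go
package main

import (
	"fmt"
	"strconv"
	"strings"
)

// frameHeader mirrors the fields the sender writes into the "h265 start" line.
// Hypothetical type for illustration.
type frameHeader struct {
	FrameType string
	NaluType  int
	Pts       int64
	Length    int // total frame size in bytes (Packetslen)
	Packets   int // number of full-size chunks
	Rem       int // size of the final partial chunk, 0 if none
}

// parseStartHeader parses a line such as
// "h265 start ,FrameType:H265_FRAME_I,nalutype:19,pts:1234,Packetslen:70000,packets:1,rem:4464".
func parseStartHeader(s string) (frameHeader, error) {
	var h frameHeader
	fields := strings.Split(s, ",")
	if strings.TrimSpace(fields[0]) != "h265 start" {
		return h, fmt.Errorf("not a start header: %q", s)
	}
	for _, f := range fields[1:] {
		kv := strings.SplitN(f, ":", 2)
		if len(kv) != 2 {
			continue // tolerate fields without a value
		}
		var err error
		switch kv[0] {
		case "FrameType":
			h.FrameType = kv[1]
		case "nalutype":
			h.NaluType, err = strconv.Atoi(kv[1])
		case "pts":
			h.Pts, err = strconv.ParseInt(kv[1], 10, 64)
		case "Packetslen":
			h.Length, err = strconv.Atoi(kv[1])
		case "packets":
			h.Packets, err = strconv.Atoi(kv[1])
		case "rem":
			h.Rem, err = strconv.Atoi(kv[1])
		}
		if err != nil {
			return h, fmt.Errorf("bad field %q: %v", f, err)
		}
	}
	return h, nil
}

func main() {
	line := "h265 start ,FrameType:H265_FRAME_I,nalutype:19,pts:1234,Packetslen:70000,packets:1,rem:4464"
	h, err := parseStartHeader(line)
	if err != nil {
		fmt.Println("parse error:", err)
		return
	}
	fmt.Printf("%+v\n", h)
}
```

A receiver can use `Length` to check the reassembled frame, and `FrameType` to decide whether the frame is a key frame before handing it to the decoder.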

// metaRTC function that sends data over the datachannel

void g_ipc_rtcrecv_sendData(int peeruid,uint8* data,int len,int mediatype){

if(len<=0 || data==NULL) return;

YangFrame H265Frame={0};

//H265Frame.payload=MEMCALLOC(1,len);

//if(H265Frame.payload==NULL) {

// printf("H265Frame.payload MEMCALLOC fail\n");

// return;

//}

//IpcRtcSession* rtcHandle=(IpcRtcSession*)user;

for(int32_t i=0;i<rtcHandle->pushs.vec.vsize;i++){

YangPeerConnection* rtc=rtcHandle->pushs.vec.payload[i];

// find the peer with this uid

if(rtc->peer.streamconfig.uid==peeruid){

//memcpy(H265Frame.payload,data,len);

H265Frame.payload=data;

H265Frame.mediaType=mediatype;

H265Frame.nb=len;

H265Frame.pts=H265Frame.dts=GETTIME();

// the payload may be binary, so log its length rather than printing it as a string

printf("datachannel send out %d bytes\n",len);

rtc->on_message(&rtc->peer,&H265Frame);

break;

}

}

//SAFE_MEMFREE(H265Frame.payload);

}

#define MAXPACKETSIZE 65536

void SendH265FrameData(int peeruid, uint8* data,int len, int64 timestamp ) {

if(data!=NULL && len>0) {

char frametypestr[20]="";

char *endchar="h265 end";

char startstr[200+1];

int frametype=0,temptype=0;

int count=0,rem=0;

count = len / MAXPACKETSIZE;

rem = len % MAXPACKETSIZE;

// GetFrameType, SaveFrameKeyData and GetFrameTypeName are C ports of the Go helpers; the argument lists used here are assumptions

if(GetFrameType(data,&frametype)==0){

// assuming, as in the Go version, that frametype holds the two NAL header bytes, the high byte carries nal_unit_type

temptype=((frametype>>8)&0x7E)>>1;

SaveFrameKeyData(data,len,frametype);

GetFrameTypeName(frametype,frametypestr);

}

snprintf(startstr,sizeof(startstr),"h265 start ,FrameType:%s,nalutype:%d,pts:%lld,Packetslen:%d,packets:%d,rem:%d",frametypestr,temptype,(long long)timestamp,len,count,rem);

// YANG_DATA_CHANNEL_STRING = 51,

// YANG_DATA_CHANNEL_BINARY = 53,

g_ipc_rtcrecv_sendData(peeruid,(uint8*)startstr,(int)strlen(startstr),YANG_DATA_CHANNEL_STRING );

printf("SendH265FrameData start %s\n", startstr);

int i = 0,lenth=0;

for(i = 0; i < count; i++) {

lenth = i * MAXPACKETSIZE;

g_ipc_rtcrecv_sendData(peeruid,data+lenth,MAXPACKETSIZE,YANG_DATA_CHANNEL_BINARY );

}

if(rem != 0) {

g_ipc_rtcrecv_sendData(peeruid,data+len-rem,rem,YANG_DATA_CHANNEL_BINARY );

}

g_ipc_rtcrecv_sendData(peeruid,(uint8*)endchar,(int)strlen(endchar),YANG_DATA_CHANNEL_STRING );

}

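}

That is the core of the WebRTC player. metaRTC is an excellent embedded WebRTC package, and it is planned to support the QUIC protocol as well; our player should port quickly to that transport too.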
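Both the Go and the C senders use the same simple framing on the data channel: a text `h265 start` header, the frame cut into fixed-size binary chunks, and a text `h265 end` marker. A compact sketch of that round trip is shown below. The names `message`, `packetize` and `reassembler` are invented for this illustration, the header is shortened, and the chunk size is set to 4 bytes only so that the example stays small. It also relies on messages arriving in order and without loss, which is the default for a data channel created without extra options.

```go
package main

import (
	"fmt"
	"strings"
)

const maxPacketSize = 4 // tiny on purpose; the article's C code uses 65536

// message is one data channel message: text (header or trailer) or a binary chunk.
type message struct {
	text string
	data []byte
}

// packetize turns one frame into: start text, binary chunks, end text.
func packetize(frame []byte) []message {
	count := len(frame) / maxPacketSize
	rem := len(frame) % maxPacketSize
	msgs := []message{{text: fmt.Sprintf("h265 start ,Packetslen:%d,packets:%d,rem:%d", len(frame), count, rem)}}
	for off := 0; off < len(frame); off += maxPacketSize {
		end := off + maxPacketSize
		if end > len(frame) {
			end = len(frame)
		}
		msgs = append(msgs, message{data: frame[off:end]})
	}
	return append(msgs, message{text: "h265 end"})
}

// reassembler mirrors the receiver: it collects binary chunks between start and end.
type reassembler struct {
	receiving bool
	buf       []byte
}

// push feeds one message and returns a complete frame when "h265 end" arrives.
func (r *reassembler) push(m message) ([]byte, bool) {
	switch {
	case strings.HasPrefix(m.text, "h265 start"):
		r.receiving, r.buf = true, nil // a new frame always starts from an empty buffer
	case m.text == "h265 end" && r.receiving:
		r.receiving = false
		frame := r.buf
		r.buf = nil
		return frame, true
	case m.data != nil && r.receiving:
		r.buf = append(r.buf, m.data...)
	}
	return nil, false
}

func main() {
	frame := []byte{1, 2, 3, 4, 5, 6, 7, 8, 9, 10}
	var r reassembler
	for _, m := range packetize(frame) {
		if out, ok := r.push(m); ok {
			fmt.Println("reassembled", len(out), "bytes:", out)
		}
	}
}
```

Resetting the buffer on every `h265 start` means a frame whose `h265 end` was never seen cannot leak into the next one.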

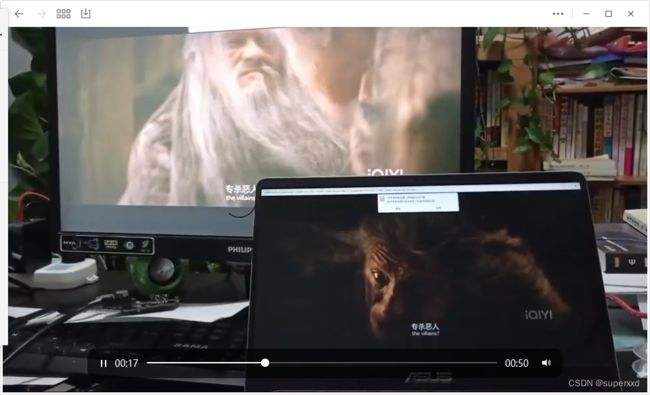

For the player's decoding and the rest of its implementation, see 基于webrtc的p2p H265播放器实现一_superxxd的博客-CSDN博客. The measured results can be seen on my Toutiao account:

用webrtc h265播放器体验新版《雪山飞孤》,打破传统,利-今日头条 (toutiao.com)