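Working with HDFS from Python

Why connect to HDFS from Python

Our goal is a big-data algorithm platform. After processing data and training models, some of the data needs to be stored on HDFS, so we can write it there directly through a Python interface.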

Tutorial

Python hdfs package (program module) - PyPI - Python中文网

Installation

pip install requests-kerberos

pip install kerberos

pip install avro

pip install hdfs

Problems during installation

cannot import name 'SCHEME_KEYS'

Reinstalling pip fixes this:

conda remove pip

conda install pip

Usage

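hdfscli mode

What is the benefit of click mode? It can be thought of as a cmd (command-line) mode for Python. For details, see the Options page of the Click Documentation (6.x).

You may want to look up the difference between click and args yourself.

Quickstart — HdfsCLI 2.5.8 documentation

Add a configuration file: vim ~/.hdfscli.cfg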

[global]

default.alias = dev

[dev.alias]

url = http://localhost:50070

user = root

hdfscli features

hdfscli --help

Usage:

hdfscli [interactive] [-a ALIAS] [-v...]

hdfscli download [-fsa ALIAS] [-v...] [-t THREADS] HDFS_PATH LOCAL_PATH

hdfscli upload [-sa ALIAS] [-v...] [-A | -f] [-t THREADS] LOCAL_PATH HDFS_PATH

hdfscli -L | -V | -h

Commands:

download      Download a file or folder from HDFS. If a single file is downloaded, - can be specified as LOCAL_PATH to stream it to standard out.

interactive   Start the client and expose it via the python interpreter (using iPython if available).

upload        Upload a file or folder to HDFS. - can be specified as LOCAL_PATH to read from standard in.

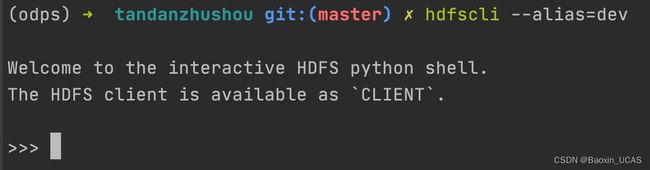
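Before starting the client, it can be useful to check which alias and URL the ~/.hdfscli.cfg file resolves to. The following is a minimal sketch using only the standard library's configparser. The config text is inlined rather than read from disk, and `resolve_alias` is a hypothetical helper written for this illustration, not part of the hdfs package.

```python
import configparser

# Same contents as the ~/.hdfscli.cfg example, inlined for illustration.
CONFIG_TEXT = """
[global]
default.alias = dev

[dev.alias]
url = http://localhost:50070
user = root
"""


def resolve_alias(config_text, alias=None):
    """Return (alias, url, user); falls back to default.alias when alias is None.

    Hypothetical helper, not an hdfs package function.
    """
    parser = configparser.ConfigParser()
    parser.read_string(config_text)
    if alias is None:
        alias = parser.get("global", "default.alias")
    section = alias + ".alias"
    url = parser.get(section, "url")
    user = parser.get(section, "user", fallback=None)
    return alias, url, user


print(resolve_alias(CONFIG_TEXT))
```

The hdfs package documents its own `Config` class for loading a client from an alias (for example `Config().get_client('dev')`), which is likely the better choice in real code. The sketch above only shows how the alias sections are laid out.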

Enter hdfscli

hdfscli --alias=dev

WebHDFS API

In HDFS, the commonly used commands are:

hadoop fs -ls

hadoop fs -cat

hadoop fs -put

hadoop fs -get

hadoop fs -mkdir

hadoop fs -cp

hadoop fs -mv

hadoop fs -stat

The same operations should be available from Python:

from hdfs import Client

client=Client("http://localhost:50070/")

dir(client)

['__class__', '__delattr__', '__dict__', '__dir__', '__doc__', '__eq__', '__format__', '__ge__', '__getattribute__', '__gt__', '__hash__', '__init__', '__init_subclass__', '__le__', '__lt__', '__module__', '__ne__', '__new__', '__reduce__', '__reduce_ex__', '__registry__', '__repr__', '__setattr__', '__sizeof__', '__str__', '__subclasshook__', '__weakref__', '_allow_snapshot', '_append', '_create', '_create_snapshot', '_delete', '_delete_snapshot', '_disallow_snapshot', '_get_acl_status', '_get_content_summary', '_get_file_checksum', '_get_file_status', '_get_home_directory', '_get_trash_root', '_list_status', '_lock', '_mkdirs', '_modify_acl_entries', '_open', '_proxy', '_remove_acl', '_remove_acl_entries', '_remove_default_acl', '_rename', '_rename_snapshot', '_request', '_session', '_set_acl', '_set_owner', '_set_permission', '_set_replication', '_set_times', '_timeout', '_urls', 'acl_status', 'allow_snapshot', 'checksum', 'content', 'create_snapshot', 'delete', 'delete_snapshot', 'disallow_snapshot', 'download', 'from_options', 'list', 'makedirs', 'parts', 'read', 'remove_acl', 'remove_acl_entries', 'remove_default_acl', 'rename', 'rename_snapshot', 'resolve', 'root', 'set_acl', 'set_owner', 'set_permission', 'set_replication', 'set_times', 'status', 'upload', 'url', 'urls', 'walk', 'write']

For details on how to use each method, refer directly to the package's Python documentation.
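As a rough guide, the `hadoop fs` commands listed earlier can be lined up with public method names from the `dir(client)` output. The mapping below is one reading of those names, not an official table, and `client_method_for` and `summarize_directory` are hypothetical helpers. The sketch takes any object with `list` and `status` methods, so it can be tried without a running cluster.

```python
# Assumed correspondence between `hadoop fs` subcommands and hdfs Client methods.
# The method names appear in dir(client); the pairing itself is an interpretation.
HADOOP_TO_CLIENT = {
    "-ls": "list",
    "-cat": "read",
    "-put": "upload",
    "-get": "download",
    "-mkdir": "makedirs",
    "-mv": "rename",
    "-stat": "status",
    "-cp": None,  # no obvious single-call equivalent in the listing
}


def client_method_for(subcommand):
    """Return the Client method name for a `hadoop fs` subcommand, or None."""
    return HADOOP_TO_CLIENT.get(subcommand)


def summarize_directory(client, hdfs_path):
    """Return {file_name: length_in_bytes} for the entries under hdfs_path.

    Uses client.list for names and client.status for each entry's metadata.
    """
    sizes = {}
    for name in client.list(hdfs_path):
        info = client.status(hdfs_path.rstrip("/") + "/" + name)
        sizes[name] = info["length"]
    return sizes


# With a reachable NameNode, usage would look like:
#   from hdfs import Client
#   client = Client("http://localhost:50070/")
#   print(summarize_directory(client, "/"))
```

Passing the client in as an argument keeps the helper independent of how the connection is created, which also makes it easy to exercise with a stand-in object in tests.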

Note: the URL here should end with a slash (/), as in the example above; otherwise an error is raised.
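To avoid depending on how the URL was typed, it can be normalized before it is passed to the client. The sketch below is an illustration only: `ensure_trailing_slash` and `webhdfs_url` are hypothetical helpers, and the second one just shows the shape of the WebHDFS REST URL (the `/webhdfs/v1/` prefix with an `op` parameter) that such a client ultimately requests.

```python
from urllib.parse import quote, urlencode


def ensure_trailing_slash(url):
    """Return url with exactly one trailing slash (hypothetical helper)."""
    return url.rstrip("/") + "/"


def webhdfs_url(base_url, hdfs_path, op, user=None):
    """Build a WebHDFS REST URL for the given path and operation.

    Illustrative only; the hdfs package builds these requests internally.
    """
    params = {"op": op}
    if user is not None:
        params["user.name"] = user
    base = ensure_trailing_slash(base_url)
    return base + "webhdfs/v1/" + quote(hdfs_path.lstrip("/")) + "?" + urlencode(params)


print(ensure_trailing_slash("http://localhost:50070"))
print(webhdfs_url("http://localhost:50070", "/tmp", "LISTSTATUS", user="root"))
```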

Other references

Reading and writing four different file formats on HDFS with Python - 简书