Installing Loki, a Lightweight Logging Tool for Kubernetes, and the Pitfalls Along the Way

Introduction to Loki

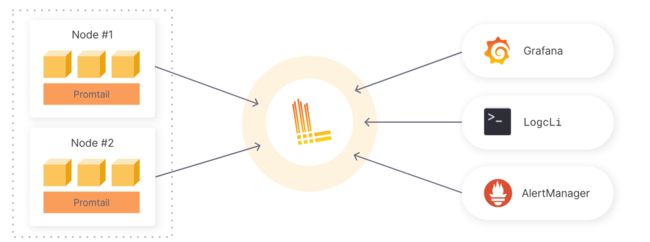

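Loki is a lightweight logging system from Grafana. Anyone familiar with ELK knows how costly it is to run, and using ELK purely for log search is overkill. The Loki stack uses the following components.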

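- Promtail

The log collection agent that ships container logs to Loki or a Grafana service. Its main jobs are discovering scrape targets and attaching labels to log streams before sending them to Loki. Promtail's service discovery is built on Prometheus's service discovery mechanism.

- Loki

A horizontally scalable, highly available, multi-tenant log aggregation system inspired by Prometheus. It uses the same service discovery mechanism as Prometheus and attaches labels to log streams instead of building a full-text index. Logs received from Promtail therefore carry the same label set as the application's metrics, which makes it easier to switch context between logs and metrics and avoids full-text indexing of the logs.

- Grafana

An open-source platform for monitoring and visualization that supports a wide range of data sources. In the Loki stack it displays time series data from sources such as Prometheus and Loki. It supports querying, visualization, and alerting, and can be used to create, explore, and share dashboards, encouraging a data-driven culture.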
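To make the label-based model more concrete, here is a minimal Python sketch of the JSON body accepted by Loki's push endpoint (`/loki/api/v1/push`, the same path Promtail is pointed at later in this post). It only builds the payload and sends nothing. The helper name `build_push_payload` and the label values are hypothetical and chosen purely for illustration.

```python
import json
import time


def build_push_payload(labels, lines, now_ns=None):
    """Build a JSON-serializable body for Loki's /loki/api/v1/push endpoint.

    A stream is identified by its label set; each entry is a pair of
    [timestamp in nanoseconds as a string, log line].
    """
    if now_ns is None:
        now_ns = time.time_ns()
    # Give every line a distinct, increasing timestamp.
    values = [[str(now_ns + i), line] for i, line in enumerate(lines)]
    return {"streams": [{"stream": dict(labels), "values": values}]}


if __name__ == "__main__":
    payload = build_push_payload(
        {"namespace": "logging", "app": "demo"},  # hypothetical labels
        ["service started", "listening on :8080"],
    )
    print(json.dumps(payload, indent=2))
```

Only the labels under `stream` are indexed; the log lines themselves are stored as they are, which is why Loki does not need a full-text index.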

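Installation

Loki

How to create applications on k8s is not repeated here. After receiving logs, Loki processes and organizes the log data. Because the stored data is stateful, a StatefulSet is recommended for the installation. Create a file named loki.yaml with the following content: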

apiVersion: v1
kind: ServiceAccount
metadata:
  name: loki
  namespace: logging
---
apiVersion: rbac.authorization.k8s.io/v1
kind: Role
metadata:
  name: loki
  namespace: logging
rules:
- apiGroups:
  - extensions
  resourceNames:
  - loki
  resources:
  - podsecuritypolicies
  verbs:
  - use
---
apiVersion: rbac.authorization.k8s.io/v1
kind: RoleBinding
metadata:
  name: loki
  namespace: logging
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: Role
  name: loki
subjects:
- kind: ServiceAccount
  name: loki

---

apiVersion: v1
kind: ConfigMap
metadata:
  name: loki
  namespace: logging
  labels:
    app: loki
data:
  loki.yaml: |
    auth_enabled: false
    ingester:
      chunk_idle_period: 3m
      chunk_block_size: 262144
      chunk_retain_period: 1m
      max_transfer_retries: 0
      lifecycler:
        ring:
          kvstore:
            store: inmemory
          replication_factor: 1
    limits_config:
      enforce_metric_name: false
      reject_old_samples: true
      reject_old_samples_max_age: 168h
    schema_config:
      configs:
      - from: "2022-05-15"
        store: boltdb-shipper
        object_store: filesystem
        schema: v11
        index:
          prefix: index_
          period: 24h
    server:
      http_listen_port: 3100
    storage_config:
      boltdb_shipper:
        active_index_directory: /data/loki/boltdb-shipper-active
        cache_location: /data/loki/boltdb-shipper-cache
        cache_ttl: 24h
        shared_store: filesystem
      filesystem:
        directory: /data/loki/chunks
    chunk_store_config:
      max_look_back_period: 0s
    table_manager:
      retention_deletes_enabled: true
      retention_period: 48h
    compactor:
      working_directory: /data/loki/boltdb-shipper-compactor
      shared_store: filesystem

---

apiVersion: apps/v1
kind: StatefulSet
metadata:
  name: loki
  namespace: logging
  labels:
    app: loki
spec:
  podManagementPolicy: OrderedReady
  replicas: 1
  selector:
    matchLabels:
      app: loki
  serviceName: loki
  updateStrategy:
    type: RollingUpdate
  template:
    metadata:
      labels:
        app: loki
    spec:
      serviceAccountName: loki
      securityContext:
        fsGroup: 10001
        runAsGroup: 10001
        runAsNonRoot: true
        runAsUser: 10001
      initContainers: []
      containers:
      - name: loki
        image: grafana/loki:2.3.0
        imagePullPolicy: IfNotPresent
        args:
        - -config.file=/etc/loki/loki.yaml
        volumeMounts:
        - name: config
          mountPath: /etc/loki
        - name: loki-storage
          mountPath: /data
        ports:
        - name: http-metrics
          containerPort: 3100
          protocol: TCP
        livenessProbe:
          httpGet:
            path: /ready
            port: http-metrics
            scheme: HTTP
          initialDelaySeconds: 45
          timeoutSeconds: 1
          periodSeconds: 10
          successThreshold: 1
          failureThreshold: 3
        readinessProbe:
          httpGet:
            path: /ready
            port: http-metrics
            scheme: HTTP
          initialDelaySeconds: 45
          timeoutSeconds: 1
          periodSeconds: 10
          successThreshold: 1
          failureThreshold: 3
        securityContext:
          readOnlyRootFilesystem: true
      terminationGracePeriodSeconds: 4800
      imagePullSecrets:
      - name: your-secret
      volumes:
      - name: config
        configMap:
          defaultMode: 420
          name: loki
  volumeClaimTemplates:
  - metadata:
      name: loki-storage
      labels:
        app: loki
    spec:
      accessModes:
      - ReadWriteOnce
      resources:
        requests:
          storage: 3Gi
      volumeMode: Filesystem

---

apiVersion: v1
kind: Service
metadata:
  name: loki
  namespace: logging
  labels:
    app: loki
spec:
  type: ClusterIP
  ports:
  - port: 3100
    protocol: TCP
    name: http-metrics
    targetPort: http-metrics
  selector:
    app: loki
---
apiVersion: v1
kind: Service
metadata:
  name: loki-outer
  namespace: logging
  labels:
    app: loki
spec:
  type: NodePort
  ports:
  - port: 3100
    protocol: TCP
    name: http-metrics
    targetPort: http-metrics
    nodePort: 32537
  selector:
    app: loki

---

apiVersion: v1
kind: PersistentVolume
metadata:
  name: loki-storage
spec:
  capacity:
    storage: 5Gi
  nfs:
    server: yourIP
    path: /data/loki
  accessModes:
  - ReadWriteOnce
  persistentVolumeReclaimPolicy: Retain
  volumeMode: Filesystem

Grafana

Grafana needs little introduction; it is simply the best (YYDS) and serves as the visualization UI here. Create loki-grafana.yml with the following content:

apiVersion: v1
kind: PersistentVolumeClaim
metadata:
  name: grafana-pvc
  namespace: logging
spec:
  accessModes:
  - ReadWriteOnce
  resources:
    requests:
      storage: 5Gi
  volumeName: grafana-storage
  volumeMode: Filesystem
---
apiVersion: v1
kind: PersistentVolume
metadata:
  name: grafana-storage
spec:
  capacity:
    storage: 10Gi
  nfs:
    server: yourIp
    path: /data/loki/grafana
  accessModes:
  - ReadWriteOnce
  persistentVolumeReclaimPolicy: Retain
  volumeMode: Filesystem

---

apiVersion: apps/v1
kind: Deployment
metadata:
  labels:
    app: grafana
  name: grafana
  namespace: logging
spec:
  selector:
    matchLabels:
      app: grafana
  template:
    metadata:
      labels:
        app: grafana
    spec:
      securityContext:
        fsGroup: 472
        supplementalGroups:
        - 0
      containers:
      - name: grafana
        image: grafana/grafana
        imagePullPolicy: IfNotPresent
        ports:
        - containerPort: 3000
          name: http-grafana
          protocol: TCP
        resources:
          requests:
            cpu: 500m
            memory: 1024Mi
          limits:
            cpu: 1000m
            memory: 2048Mi
        volumeMounts:
        - mountPath: /var/lib/grafana
          name: grafana-pv
      imagePullSecrets:
      - name: your-secret
      volumes:
      - name: grafana-pv
        persistentVolumeClaim:
          claimName: grafana-pvc

---

apiVersion: v1
kind: Service
metadata:
  name: grafana
  namespace: logging
spec:
  ports:
  - port: 3000
    protocol: TCP
    targetPort: http-grafana
    nodePort: 30339
  selector:
    app: grafana
  type: NodePort
---
# Note: the extensions/v1beta1 Ingress API was removed in Kubernetes 1.22;
# newer clusters need networking.k8s.io/v1 instead.
apiVersion: extensions/v1beta1
kind: Ingress
metadata:
  name: grafana-ui
  namespace: logging
  labels:
    k8s-app: grafana
spec:
  rules:
  - host: your web address
    http:
      paths:
      - path: /
        backend:
          serviceName: grafana
          servicePort: 3000

Promtail

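Promtail's main job is log collection. The Docker containers and their logs on each K8s host are stored independently and are bound to that host. To collect data from every node, the official recommendation is to install Promtail as a DaemonSet. Create loki-promtail.yml with the following content: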

apiVersion: v1
kind: ServiceAccount
metadata:
  name: loki-promtail
  labels:
    app: promtail
  namespace: logging
---
kind: ClusterRole
apiVersion: rbac.authorization.k8s.io/v1
metadata:
  labels:
    app: promtail
  name: promtail-clusterrole
  namespace: logging
rules:
- apiGroups: [""]
  resources:
  - nodes
  - nodes/proxy
  - services
  - endpoints
  - pods
  verbs: ["get", "watch", "list"]
---
kind: ClusterRoleBinding
apiVersion: rbac.authorization.k8s.io/v1
metadata:
  name: promtail-clusterrolebinding
  labels:
    app: promtail
  namespace: logging
subjects:
- kind: ServiceAccount
  name: loki-promtail
  namespace: logging
roleRef:
  kind: ClusterRole
  name: promtail-clusterrole
  apiGroup: rbac.authorization.k8s.io

---

apiVersion: v1
kind: ConfigMap
metadata:
  name: loki-promtail
  namespace: logging
  labels:
    app: promtail
data:
  promtail.yaml: |2-
    client:
      backoff_config:
        max_period: 5m
        max_retries: 10
        min_period: 500ms
      batchsize: 1048576
      batchwait: 1s
      external_labels: {}
      timeout: 10s
    positions:
      filename: /run/promtail/positions.yaml
    server:
      http_listen_port: 3101
    target_config:
      sync_period: 10s
    scrape_configs:
    - job_name: kubernetes-pods-name
      pipeline_stages:
      - docker: {}
      kubernetes_sd_configs:
      - role: pod
      relabel_configs:
      - source_labels:
        - __meta_kubernetes_pod_label_name
        target_label: __service__
      - source_labels:
        - __meta_kubernetes_pod_node_name
        target_label: __host__
      - action: drop
        regex: ''
        source_labels:
        - __service__
      - action: labelmap
        regex: __meta_kubernetes_pod_label_(.+)
      - action: replace
        replacement: $1
        separator: /
        source_labels:
        - __meta_kubernetes_namespace
        - __service__
        target_label: job
      - action: replace
        source_labels:
        - __meta_kubernetes_namespace
        target_label: namespace
      - action: replace
        source_labels:
        - __meta_kubernetes_pod_name
        target_label: pod
      - action: replace
        source_labels:
        - __meta_kubernetes_pod_container_name
        target_label: container
      - replacement: /var/log/pods/$1/**/*.log
        separator: _
        source_labels:
        - namespace
        - pod
        - __meta_kubernetes_pod_uid
        target_label: __path__
    - job_name: kubernetes-pods-app
      pipeline_stages:
      - docker: {}
      kubernetes_sd_configs:
      - role: pod
      relabel_configs:
      - action: drop
        regex: .+
        source_labels:
        - __meta_kubernetes_pod_label_name
      - source_labels:
        - __meta_kubernetes_pod_label_app
        target_label: __service__
      - source_labels:
        - __meta_kubernetes_pod_node_name
        target_label: __host__
      - action: drop
        regex: ''
        source_labels:
        - __service__
      - action: labelmap
        regex: __meta_kubernetes_pod_label_(.+)
      - action: replace
        replacement: $1
        separator: /
        source_labels:
        - __meta_kubernetes_namespace
        - __service__
        target_label: job
      - action: replace
        source_labels:
        - __meta_kubernetes_namespace
        target_label: namespace
      - action: replace
        source_labels:
        - __meta_kubernetes_pod_name
        target_label: pod
      - action: replace
        source_labels:
        - __meta_kubernetes_pod_container_name
        target_label: container
      - replacement: /var/log/pods/$1/**/*.log
        separator: _
        source_labels:
        - namespace
        - pod
        - __meta_kubernetes_pod_uid
        target_label: __path__
    - job_name: kubernetes-pods-direct-controllers
      pipeline_stages:
      - docker: {}
      kubernetes_sd_configs:
      - role: pod
      relabel_configs:
      - action: drop
        regex: .+
        separator: ''
        source_labels:
        - __meta_kubernetes_pod_label_name
        - __meta_kubernetes_pod_label_app
      - action: drop
        regex: '[0-9a-z-.]+-[0-9a-f]{8,10}'
        source_labels:
        - __meta_kubernetes_pod_controller_name
      - source_labels:
        - __meta_kubernetes_pod_controller_name
        target_label: __service__
      - source_labels:
        - __meta_kubernetes_pod_node_name
        target_label: __host__
      - action: drop
        regex: ''
        source_labels:
        - __service__
      - action: labelmap
        regex: __meta_kubernetes_pod_label_(.+)
      - action: replace
        replacement: $1
        separator: /
        source_labels:
        - __meta_kubernetes_namespace
        - __service__
        target_label: job
      - action: replace
        source_labels:
        - __meta_kubernetes_namespace
        target_label: namespace
      - action: replace
        source_labels:
        - __meta_kubernetes_pod_name
        target_label: pod
      - action: replace
        source_labels:
        - __meta_kubernetes_pod_container_name
        target_label: container
      - replacement: /var/log/pods/$1/**/*.log
        separator: _
        source_labels:
        - namespace
        - pod
        - __meta_kubernetes_pod_uid
        target_label: __path__
    - job_name: kubernetes-pods-indirect-controller
      pipeline_stages:
      - docker: {}
      kubernetes_sd_configs:
      - role: pod
      relabel_configs:
      - action: drop
        regex: .+
        separator: ''
        source_labels:
        - __meta_kubernetes_pod_label_name
        - __meta_kubernetes_pod_label_app
      - action: keep
        regex: '[0-9a-z-.]+-[0-9a-f]{8,10}'
        source_labels:
        - __meta_kubernetes_pod_controller_name
      - action: replace
        regex: '([0-9a-z-.]+)-[0-9a-f]{8,10}'
        source_labels:
        - __meta_kubernetes_pod_controller_name
        target_label: __service__
      - source_labels:
        - __meta_kubernetes_pod_node_name
        target_label: __host__
      - action: drop
        regex: ''
        source_labels:
        - __service__
      - action: labelmap
        regex: __meta_kubernetes_pod_label_(.+)
      - action: replace
        replacement: $1
        separator: /
        source_labels:
        - __meta_kubernetes_namespace
        - __service__
        target_label: job
      - action: replace
        source_labels:
        - __meta_kubernetes_namespace
        target_label: namespace
      - action: replace
        source_labels:
        - __meta_kubernetes_pod_name
        target_label: pod
      - action: replace
        source_labels:
        - __meta_kubernetes_pod_container_name
        target_label: container
      - replacement: /var/log/pods/$1/**/*.log
        separator: _
        source_labels:
        - namespace
        - pod
        - __meta_kubernetes_pod_uid
        target_label: __path__
    - job_name: kubernetes-pods-static
      pipeline_stages:
      - docker: {}
      kubernetes_sd_configs:
      - role: pod
      relabel_configs:
      - action: drop
        regex: ''
        source_labels:
        - __meta_kubernetes_pod_annotation_kubernetes_io_config_mirror
      - action: replace
        source_labels:
        - __meta_kubernetes_pod_label_component
        target_label: __service__
      - source_labels:
        - __meta_kubernetes_pod_node_name
        target_label: __host__
      - action: drop
        regex: ''
        source_labels:
        - __service__
      - action: labelmap
        regex: __meta_kubernetes_pod_label_(.+)
      - action: replace
        replacement: $1
        separator: /
        source_labels:
        - __meta_kubernetes_namespace
        - __service__
        target_label: job
      - action: replace
        source_labels:
        - __meta_kubernetes_namespace
        target_label: namespace
      - action: replace
        source_labels:
        - __meta_kubernetes_pod_name
        target_label: pod
      - action: replace
        source_labels:
        - __meta_kubernetes_pod_container_name
        target_label: container
      - replacement: /var/log/pods/$1/**/*.log
        separator: _
        source_labels:
        - namespace
        - pod
        - __meta_kubernetes_pod_uid
        target_label: __path__

---

apiVersion: apps/v1
kind: DaemonSet
metadata:
  name: loki-promtail
  namespace: logging
  labels:
    app: promtail
spec:
  selector:
    matchLabels:
      app: promtail
  updateStrategy:
    rollingUpdate:
      maxUnavailable: 1
    type: RollingUpdate
  template:
    metadata:
      labels:
        app: promtail
    spec:
      serviceAccountName: loki-promtail
      containers:
      - name: promtail
        image: grafana/promtail:2.3.0
        imagePullPolicy: IfNotPresent
        args:
        - -config.file=/etc/promtail/promtail.yaml
        - -client.url=http://loki:3100/loki/api/v1/push
        env:
        - name: HOSTNAME
          valueFrom:
            fieldRef:
              apiVersion: v1
              fieldPath: spec.nodeName
        volumeMounts:
        - mountPath: /etc/promtail
          name: config
        - mountPath: /run/promtail
          name: run
        - mountPath: /var/lib/docker/containers
          name: docker
          readOnly: true
        - mountPath: /var/log/pods
          name: pods
          readOnly: true
        ports:
        - containerPort: 3101
          name: http-metrics
          protocol: TCP
        securityContext:
          readOnlyRootFilesystem: true
          runAsGroup: 0
          runAsUser: 0
        readinessProbe:
          failureThreshold: 5
          httpGet:
            path: /ready
            port: http-metrics
            scheme: HTTP
          initialDelaySeconds: 10
          periodSeconds: 10
          successThreshold: 1
          timeoutSeconds: 1
      imagePullSecrets:
      - name: your-secret
      tolerations:
      - effect: NoSchedule
        key: node-role.kubernetes.io/master
        operator: Exists
      volumes:
      - name: config
        configMap:
          defaultMode: 420
          name: loki-promtail
      - name: run
        hostPath:
          path: /run/promtail
          type: ""
      - name: docker
        hostPath:
          path: /var/lib/docker/containers
      - name: pods
        hostPath:
          path: /var/log/pods

The step most likely to cause trouble during installation is configuring Promtail, so it helps to understand how it works first. Promtail's advantage is that when it collects Docker container logs directly, the official template picks them up automatically. It is non-intrusive to applications and needs no special configuration on their side.

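Using Promtail comes down to two key steps:

- Mount the k8s node's host directories into the container. Pay attention to Docker's working directory here: the default is /var/lib/docker/, and if it has been changed, the mounted path must be changed to match. Pod logs live at /var/log/pods/<pod_uid>/<container_name>/<num>.log before k8s 1.14, and at /var/log/pods/<namespace>_<pod_name>_<pod_uid>/<container_name>/<num>.log in 1.14 or later, where num is the number of times the container has restarted. Running ls -l shows that the log files under /var/log/pods/ are symlinks to files under /var/lib/docker/, so take care when mounting: the link targets must be mounted as well, otherwise the links cannot be resolved inside the container.

- Match the log file paths. When writing relabel_configs, set the labels according to the actual paths inside the container.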

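- action: replace
  source_labels:
  - __meta_kubernetes_pod_container_name
  target_label: container
- replacement: /var/log/pods/$1/**/*.log
  separator: _
  source_labels:
  - namespace
  - pod
  - __meta_kubernetes_pod_uid
  target_label: __path__

When the target label is __path__, replacement is expanded into the concrete file path. $1 refers to the first capture group of regex, which defaults to (.*) and is matched against the values of source_labels joined with separator. Here those values are namespace, pod, and the pod UID joined by _, so $1 becomes <namespace>_<pod>_<pod_uid>. The namespace and pod labels were already set by the preceding replace rules, so their actual values are spliced into the path.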
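The following minimal Python sketch emulates a single `replace` relabel rule to show how `__path__` is derived. It assumes the Prometheus-style defaults (separator `;`, regex `(.*)`, replacement `$1`) and a fully anchored match. The function name `relabel_replace` and the sample label values are hypothetical, and Python's `re` module only approximates the regex engine Promtail actually uses.

```python
import re


def relabel_replace(labels, source_labels, target_label,
                    separator=";", regex="(.*)", replacement="$1"):
    """Emulate one Prometheus-style `replace` relabel rule on a label dict."""
    # Join the source label values with the separator (missing labels are empty).
    joined = separator.join(labels.get(name, "") for name in source_labels)
    match = re.fullmatch(regex, joined)
    if match is None:
        return dict(labels)  # no match: labels are left unchanged
    # Translate $1-style references into Python's \g<1> template syntax.
    template = re.sub(r"\$(\d+)", r"\\g<\1>", replacement)
    result = dict(labels)
    result[target_label] = match.expand(template)
    return result


if __name__ == "__main__":
    labels = {
        "namespace": "logging",                    # hypothetical values
        "pod": "demo-0",
        "__meta_kubernetes_pod_uid": "1234-abcd",
    }
    out = relabel_replace(
        labels,
        ["namespace", "pod", "__meta_kubernetes_pod_uid"],
        "__path__",
        separator="_",
        replacement="/var/log/pods/$1/**/*.log",
    )
    print(out["__path__"])  # /var/log/pods/logging_demo-0_1234-abcd/**/*.log
```

If the resulting glob does not match the directory layout on the node, Promtail finds no files to tail, which is why the path template has to follow the real layout under /var/log/pods/.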

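Notes

Installing Loki on k8s is simple, and with helm it is simpler still. My environment is an intranet, so I downloaded the images offline and installed from a private registry inside the intranet. The process is easy, but the devil is in the details, and I hit plenty of pitfalls along the way. Knowing not only what to do but also why is what lets you avoid them. If you have followed several blog posts, the installation went through, and things still do not work, the cause is almost certainly your environment or configuration. I am recording this here in the hope that it helps anyone who runs into the same problems.

The options in the Loki and Promtail ConfigMaps are not covered in detail here. For the full configuration reference, see: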

- loki-cm: Configuration | Grafana Loki documentation

- promtail-cm: Configuration | Grafana Loki documentation

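Other references:

- Loki - Kubernetes 进阶训练营(第2期) [Kubernetes Advanced Training Camp, Season 2]

- Grafana学习(loki日志存在错误的label) | Z.S.K.'s Records [Learning Grafana: Loki logs with wrong labels]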