Java Backend Project in Practice: SpringBoot + Elasticsearch + Redis + MybatisPlus + binlog Listening + Authorization (JWT + Token + Redis)

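Table of Contents

- Part 0: Feature Design
- Part 1: Setting Up the SpringBoot Project
- Part 2: Setting Up the Elasticsearch Database
  - 0. Deploying the Elasticsearch and Kibana services
  - 1. Integrating Elasticsearch with SpringBoot
  - 2. Obtaining the data with a crawler
  - 3. Importing the data
- Part 3: Rate Limiting APIs with Redis
  - 0. Integrating Redis
  - 1. Importing the Redis module code
  - 2. Implementing rate limiting with AOP
- Part 4: Metrics Analysis Module
  - 1. Overview
  - 2. Retrieving the metrics
  - 3. Updating the metrics
- Part 5: Data Management Module
  - 0. Setting up the MySQL service
  - 1. Integrating MybatisPlus with MySQL
  - 2. Implementing CRUD
- Part 6: Synchronizing MySQL and Elasticsearch
  - 1. Overview
  - 2. Configuration
  - 3. binlog listening logic
- Part 7: Authorization
  - 1. Configuration
  - 2. Registration and login (obtaining a token)
  - 3. Permission interception with AOP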

Code repository: GitHub

This tutorial is easiest to follow with the source code open alongside it.

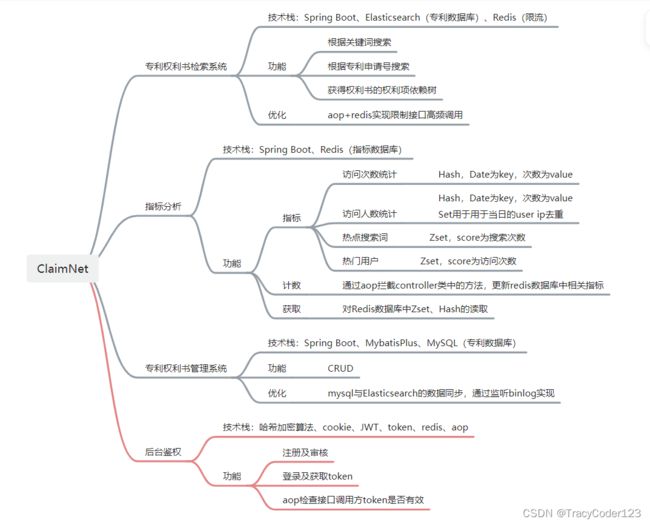

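Part 0: Feature Design

The system has three modules: data retrieval, metrics analysis, and data management. Data retrieval is for end users, and the other two modules are for administrators. Permission interception is implemented as well.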

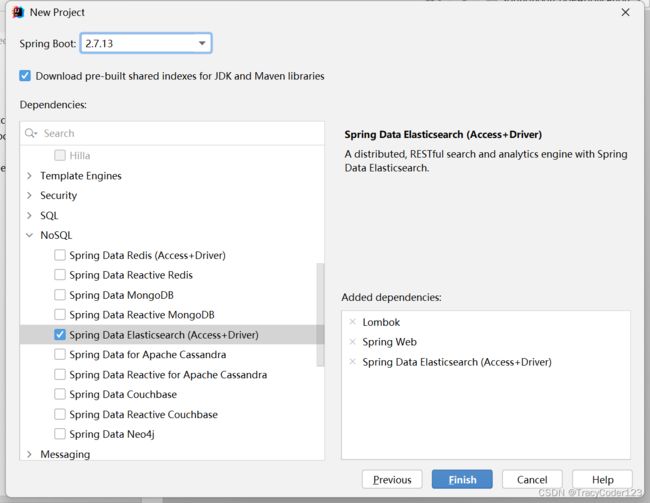

Part 1: Setting Up the SpringBoot Project

- Dependencies:

<?xml version="1.0" encoding="UTF-8"?>
<project xmlns="http://maven.apache.org/POM/4.0.0" xmlns:xsi="http://www.w3.org/2001/XMLSchema-instance"
         xsi:schemaLocation="http://maven.apache.org/POM/4.0.0 https://maven.apache.org/xsd/maven-4.0.0.xsd">
    <modelVersion>4.0.0</modelVersion>
    <parent>
        <groupId>org.springframework.boot</groupId>
        <artifactId>spring-boot-starter-parent</artifactId>
        <version>2.7.13</version>
        <relativePath/> <!-- lookup parent from repository -->
    </parent>
    <groupId>com.tracy</groupId>
    <artifactId>Search</artifactId>
    <version>0.0.1-SNAPSHOT</version>
    <name>Search</name>
    <description>Search</description>
    <properties>
        <java.version>1.8</java.version>
    </properties>
    <dependencies>
        <dependency>
            <groupId>org.springframework.boot</groupId>
            <artifactId>spring-boot-starter-data-elasticsearch</artifactId>
        </dependency>
        <dependency>
            <groupId>org.springframework.boot</groupId>
            <artifactId>spring-boot-starter-web</artifactId>
        </dependency>
        <dependency>
            <groupId>org.aspectj</groupId>
            <artifactId>aspectjweaver</artifactId>
            <version>1.9.7</version>
        </dependency>
        <dependency>
            <groupId>org.springframework.boot</groupId>
            <artifactId>spring-boot-starter-data-redis</artifactId>
        </dependency>
        <!-- https://mvnrepository.com/artifact/org.kie.modules/com-fasterxml-jackson -->
        <dependency>
            <groupId>org.kie.modules</groupId>
            <artifactId>com-fasterxml-jackson</artifactId>
            <version>6.5.0.Final</version>
            <type>pom</type>
        </dependency>
        <dependency>
            <groupId>org.projectlombok</groupId>
            <artifactId>lombok</artifactId>
            <optional>true</optional>
        </dependency>
        <dependency>
            <groupId>org.springframework.boot</groupId>
            <artifactId>spring-boot-starter-test</artifactId>
            <scope>test</scope>
        </dependency>
    </dependencies>
    <build>
        <plugins>
            <plugin>
                <groupId>org.springframework.boot</groupId>
                <artifactId>spring-boot-maven-plugin</artifactId>
                <configuration>
                    <excludes>
                        <exclude>
                            <groupId>org.projectlombok</groupId>
                            <artifactId>lombok</artifactId>
                        </exclude>
                    </excludes>
                </configuration>
            </plugin>
        </plugins>
    </build>
</project>

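- Project information

Part 2: Setting Up the Elasticsearch Database

0. Deploying the Elasticsearch and Kibana services

I covered this in an earlier tutorial: blog

Follow the steps there to bring up the Elasticsearch and Kibana services.

Once the services are running, create two indices named text and claim so they match the index names used in the backend code.

1. Integrating Elasticsearch with SpringBoot

I also covered the integration in an earlier tutorial: blog

2. Obtaining the data with a crawler

Crawl -> parse -> convert to JSON

The data comes from a crawler I wrote. It takes an existing list of patent application numbers and scrapes the matching claims from the EPO's Espacenet website. The crawled and cleaned data is in the json folder under the project's statics directory. To avoid copyright issues, only a few hundred records are included.

- The code: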

import json
import re

from selenium import webdriver
from selenium.webdriver.common.by import By
from selenium.webdriver.edge.service import Service
from scrapy.selector import Selector


def init():
    # 1 Initialize the browser
    option = webdriver.EdgeOptions()
    # Path to the Edge executable (for Chrome, use the absolute path of chrome.exe)
    option.binary_location = r'C:\Program Files (x86)\Microsoft\Edge\Application\msedge.exe'
    # Absolute path of the driver; Selenium 4 takes it through a Service object
    driver = webdriver.Edge(service=Service(r'D:\setup\edgedriver_win64\msedgedriver.exe'), options=option)
    return driver


# Crawl the claims of one patent and append them to path
def down(driver, patent_id, path):
    url = "https://worldwide.espacenet.com/patent/search?q=" + patent_id
    dict_claims = {
        "_id": patent_id,
        "_source": {}
    }
    # 2 Locate the elements
    try:
        driver.minimize_window()
        driver.get(url)
        driver.implicitly_wait(30)
        driver.find_element(by=By.CLASS_NAME, value='search')
        # time.sleep(1)
        driver.find_element(by=By.XPATH, value='//*[contains(@data-qa,"claimsTab_resultDescription")]').click()
        driver.find_element(by=By.XPATH, value='//*[contains(@class,"text-block__content--3_ryPSrw")]')
        # time.sleep(1)
        # 3 Extract the text of the claims element
        text = driver.find_element(by=By.XPATH, value='//body').get_attribute('innerHTML')
        html = Selector(text=text)
        text = html.xpath('//*[contains(@class, "text-block__content--3_ryPSrw")]')[0].xpath('string(.)').get()
        # 4 Split the text into numbered claims ("1. ...", "2. ...")
        pattern = r'[0-9]+\.\s'
        parts = re.split(pattern, text)
        for i in range(len(parts)):
            if len(parts[i]) != 0:
                dict_claims["_source"][str(i)] = parts[i].strip()
        # Append the record to path as one JSON line
        if len(dict_claims["_source"]) != 0:
            with open(file=path, mode='a', encoding='utf-8') as f:
                f.write(json.dumps(dict_claims) + "\n")
            print("[fetched successfully]")
    except Exception as e:
        # open(file='cited_ids_balanced_failed.txt', mode='a', encoding="utf-8").write(patent_id.strip()+"\n")
        print(e)


if __name__ == '__main__':
    path_original = 'data_cited.json'
    driver = init()
    with open(file='../2 读取专利号/cited_ids_balanced_failed.txt', mode='r', encoding="utf-8") as f_read:
        for line in f_read:
            patent_id = line.strip()
            print(patent_id)
            down(driver, patent_id, path_original)
    driver.quit()

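3. Importing the data

Run the following commands to import claim.json into the claim index and text.json into the text index.

0 Install npm

apt install npm

1 Install elasticdump

npm install elasticdump -g

2 Start the Elasticsearch service

3 Import the data from a JSON file

elasticdump --input ./<file>.json --output "http://<server-private-ip>:9200/<index>"

Part 3: Rate Limiting APIs with Redis

0. Integrating Redis

My earlier tutorial: blog

1. Importing the Redis module code

Copy the RedisConfig and AccessLimit classes from the repository into the project.

- Add the YAML configuration:

time is the length of the rate-limiting window in seconds, and access is the maximum number of calls each endpoint accepts within that window.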

spring:
  elasticsearch:
    rest:
      uris: http://<es-server-ip>:9200
  redis:
    host: <server-ip>
    database: 0
    port: 6379

# Custom configuration variables
redis:
  N: 1000
  time: 60
  access: 1000
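The AccessLimit class lives in the repository and is not shown here. To make the meaning of time and access concrete, the sketch below is an in-memory fixed-window counter. It assumes AccessLimit follows the common Redis pattern: INCR a per-endpoint key, and on the first hit EXPIRE that key after time seconds. In Spring Data Redis this is roughly opsForValue().increment(key) followed by expire(key, time, TimeUnit.SECONDS). FixedWindowLimiter is a hypothetical name. The current time is passed in so the behavior can be checked deterministically.

```java
import java.util.HashMap;
import java.util.Map;

/**
 * Hypothetical in-memory fixed-window limiter with the same semantics as
 * "INCR key; if the result is 1 then EXPIRE key <time>". Not the repository's AccessLimit.
 */
public class FixedWindowLimiter {
    private final long windowMillis;  // "time" from the YAML, converted to milliseconds
    private final int maxAccess;      // "access" from the YAML
    // key -> {window start in ms, calls seen in this window}
    private final Map<String, long[]> windows = new HashMap<>();

    public FixedWindowLimiter(int timeSeconds, int access) {
        this.windowMillis = timeSeconds * 1000L;
        this.maxAccess = access;
    }

    /** Returns true if the call identified by key is allowed at nowMillis. */
    public synchronized boolean tryAcquire(String key, long nowMillis) {
        long[] w = windows.get(key);
        if (w == null || nowMillis - w[0] >= windowMillis) {
            // First call, or the previous window has expired: like INCR on a missing key plus EXPIRE
            windows.put(key, new long[]{nowMillis, 1});
            return true;
        }
        w[1]++;                       // like INCR on an existing key
        return w[1] <= maxAccess;
    }
}
```

With time: 60 and access: 1000, the 1001st call to one endpoint inside a window is rejected. A fixed window can let through up to twice access calls around a window boundary. A sliding window, for example a ZSet of request timestamps, avoids that at the cost of more memory.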

2. Implementing rate limiting with AOP

package com.tracy.search.util;

import org.aspectj.lang.JoinPoint;
import org.aspectj.lang.annotation.Aspect;
import org.aspectj.lang.annotation.Before;
import org.springframework.beans.factory.annotation.Autowired;
import org.springframework.stereotype.Component;

@Aspect
@Component
public class AccessLimitAspect {

    @Autowired
    AccessLimit accessLimit;

    // Runs before every method of every class under the controller package
    @Before("execution(* com.tracy.search.controller..*.*(..))")
    public void checkLimit(JoinPoint joinPoint) {
        // One counter per endpoint, e.g. "com.tracy.search.controller.SearchController.home()"
        String signature = joinPoint.getTarget().getClass().getName() + "." + joinPoint.getSignature().getName() + "()";
        if (!accessLimit.accessLimit(signature)) {
            throw new SecurityException("Rate limit reached!");
        }
    }
}

Every endpoint under the controller package is intercepted and rate-limited.

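Part 4: Metrics Analysis Module

1. Overview

Redis and AOP are used to track the search system's visit count, unique visitors, hot search keywords, and most active users.

- Visits (VV): a Hash with the date as the field and the visit count as the value, counted per day.

- Unique visitors (UV): a Hash with the date as the field and the visitor count as the value, counted per day. A Set per day de-duplicates user IPs.

- Hot search keywords: a ZSet whose score is the number of searches.

- Hot users: a ZSet whose score is the number of visits.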
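The daily counters only work if every visit on the same day lands in the same hash field. The field should therefore identify a calendar day, not an instant, because a java.util.Date created with new Date() includes milliseconds. The in-memory sketch below models the layout above and uses a LocalDate string such as "2023-07-15" as the field. DailyStats and its methods are hypothetical names. The comments note which Redis command each step stands in for.

```java
import java.time.LocalDate;
import java.util.HashMap;
import java.util.HashSet;
import java.util.Map;
import java.util.Set;

/** Hypothetical in-memory model of the VV/UV hashes and the per-day IP set. */
public class DailyStats {
    private final Map<String, Long> vv = new HashMap<>();              // VV hash: day -> visits
    private final Map<String, Long> uv = new HashMap<>();              // UV hash: day -> distinct IPs
    private final Map<String, Set<String>> ipsByDay = new HashMap<>(); // per-day de-duplication set

    public void recordVisit(String ip, LocalDate day) {
        String field = day.toString();                 // "yyyy-MM-dd": one field per calendar day
        vv.merge(field, 1L, Long::sum);                // like HINCRBY VV <day> 1
        Set<String> ips = ipsByDay.computeIfAbsent(field, k -> new HashSet<>());
        if (ips.add(ip)) {                             // like SADD returning 1 for a new member
            uv.merge(field, 1L, Long::sum);            // like HINCRBY UV <day> 1
        }
    }

    public long visits(LocalDate day) {
        return vv.getOrDefault(day.toString(), 0L);
    }

    public long uniqueVisitors(LocalDate day) {
        return uv.getOrDefault(day.toString(), 0L);
    }
}
```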

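2. Retrieving the metrics

- Add the YAML configuration:

spring:
  elasticsearch:
    rest:
      uris: http://<es-server-ip>:9200
  redis:
    host: <server-ip>
    database: 0
    port: 6379

# Custom configuration variables
redis:
  N: 1000
  # maximum number of entries kept in the HotUser and HotKeyword rankings
  hotUser: 1000
  hotKeyword: 1000
  time: 60
  access: 1000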

- controller:

package com.tracy.search.controller;

import com.tracy.search.service.AnalysisService;
import org.springframework.beans.factory.annotation.Autowired;
import org.springframework.web.bind.annotation.*;

import java.util.Date;
import java.util.HashMap;
import java.util.List;

@RestController
@RequestMapping("/analysis")
public class AnalysisController {

    @Autowired
    AnalysisService analysisService;

    @GetMapping("/hotUser")
    public List<String> hotUser() {
        return analysisService.hot("HotUser");
    }

    @GetMapping("/hotKeyword")
    public List<String> hotKeyword() {
        return analysisService.hot("HotKeyword");
    }

    @GetMapping("/vv")
    public HashMap<Date, Integer> vv(@RequestBody List<Date> dates) {
        return analysisService.v(dates, "VV");
    }

    @GetMapping("/uv")
    public HashMap<Date, Integer> uv(@RequestBody List<Date> dates) {
        return analysisService.v(dates, "UV");
    }
}

- service:

package com.tracy.search.service;

import java.util.Date;
import java.util.HashMap;
import java.util.List;

public interface AnalysisService {

    List<String> hot(String type);

    HashMap<Date, Integer> v(List<Date> dates, String type);
}

package com.tracy.search.service;

import org.springframework.beans.factory.annotation.Autowired;
import org.springframework.beans.factory.annotation.Value;
import org.springframework.data.redis.core.RedisTemplate;
import org.springframework.stereotype.Service;

import java.util.*;

@Service
public class AnalysisServiceImpl implements AnalysisService {

    @Autowired
    RedisTemplate<String, Object> redisTemplate;

    @Value("${redis.hotUser}")
    int userCount;

    @Value("${redis.hotKeyword}")
    int keywordCount;

    @Override
    public List<String> hot(String type) {
        List<String> res = new ArrayList<>();
        // Use the size configured for this ranking
        int count = "HotUser".equals(type) ? userCount : keywordCount;
        // reverseRange returns members by descending score, so the hottest come first
        Set<Object> zsets = redisTemplate.opsForZSet().reverseRange(type, 0, count - 1);
        if (zsets != null) {
            for (Object obj : zsets) {
                res.add((String) obj);
            }
        }
        return res;
    }

    @Override
    public HashMap<Date, Integer> v(List<Date> dates, String type) {
        HashMap<Date, Integer> res = new HashMap<>();
        for (Date date : dates) {
            Object count = redisTemplate.opsForHash().get(type, date);
            // A day without visits has no field; report it as 0
            res.put(date, count == null ? 0 : ((Number) count).intValue());
        }
        return res;
    }
}
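In a Redis ZSet, ZRANGE returns members from the lowest score up. A "hot" list therefore needs ZREVRANGE, which is reverseRange in Spring Data Redis, to get the highest scores first. The sketch below is an in-memory stand-in for that ordering. Leaderboard is a hypothetical class. Ties are broken by member in descending lexicographic order, which is how ZREVRANGE orders equal scores.

```java
import java.util.ArrayList;
import java.util.Comparator;
import java.util.HashMap;
import java.util.List;
import java.util.Map;

/** Hypothetical in-memory stand-in for a ZSet used as a leaderboard. */
public class Leaderboard {
    private final Map<String, Double> scores = new HashMap<>();

    /** Like ZINCRBY key 1 member: creates the member with score 1 if it is absent. */
    public void increment(String member) {
        scores.merge(member, 1.0, Double::sum);
    }

    /** Like ZREVRANGE key 0 n-1: highest score first, equal scores by member descending. */
    public List<String> top(int n) {
        List<Map.Entry<String, Double>> entries = new ArrayList<>(scores.entrySet());
        Comparator<Map.Entry<String, Double>> byScoreThenMember =
                Map.Entry.<String, Double>comparingByValue()
                        .thenComparing(Map.Entry.<String, Double>comparingByKey());
        entries.sort(byScoreThenMember.reversed());
        List<String> result = new ArrayList<>();
        for (int i = 0; i < Math.min(n, entries.size()); i++) {
            result.add(entries.get(i).getKey());
        }
        return result;
    }
}
```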

3. Updating the metrics

An AOP aspect intercepts controller methods and updates the corresponding metrics.

package com.tracy.search.aop;

import org.aspectj.lang.JoinPoint;
import org.aspectj.lang.annotation.Aspect;
import org.aspectj.lang.annotation.Before;
import org.springframework.beans.factory.annotation.Value;
import org.springframework.data.redis.core.RedisTemplate;
import org.springframework.stereotype.Component;

import javax.annotation.Resource;
import javax.servlet.http.HttpServletRequest;
import java.time.LocalDate;
import java.time.ZoneId;
import java.util.Date;

// @Aspect and @Component are both needed for Spring to apply the advice below
@Aspect
@Component
public class AnalysisAspect {

    @Resource
    RedisTemplate<String, Object> redisTemplate;

    @Value("${redis.hotUser}")
    int userCount;

    @Value("${redis.hotKeyword}")
    int keywordCount;

    // Every call to home() updates UV and VV (the first argument of home() is the HttpServletRequest)
    @Before("execution(* com.tracy.search.controller.SearchController.home(..))")
    public void updateIndex1(JoinPoint joinPoint) {
        HttpServletRequest request = (HttpServletRequest) joinPoint.getArgs()[0];
        String user_ip = request.getRemoteAddr();
        uv_vv_update(user_ip);
    }

    // Every search method in SearchController updates the hot keywords and hot users.
    // Types in a pointcut expression must be fully qualified (java.util.List, not List).
    @Before("execution(java.util.List com.tracy.search.controller.SearchController.*(..))")
    public void updateIndex2(JoinPoint joinPoint) {
        HttpServletRequest request = (HttpServletRequest) joinPoint.getArgs()[0];
        String user_ip = request.getRemoteAddr();
        String word = (String) joinPoint.getArgs()[1];
        hot_keyword_user_update(word, "HotKeyword");
        hot_keyword_user_update(user_ip, "HotUser");
    }

    // Hot keyword / hot user statistics
    public void hot_keyword_user_update(String word, String type) {
        // ZINCRBY creates the member with score 1 if it does not exist yet
        redisTemplate.opsForZSet().incrementScore(type, word, 1);
        int limit = type.equals("HotUser") ? userCount : keywordCount;
        Long size = redisTemplate.opsForZSet().size(type);
        if (size != null && size > limit) {
            // Cap the ranking size by removing the member with the lowest score
            redisTemplate.opsForZSet().popMin(type);
        }
    }

    // Visit count (VV) and unique visitor (UV) statistics
    public void uv_vv_update(String user_ip) {
        ZoneId zone = ZoneId.systemDefault();
        LocalDate day = LocalDate.now(zone);
        // Midnight of today is the hash field, so all of today's visits share one field
        // (a raw new Date() includes milliseconds and would create a new field on every call)
        Date today = Date.from(day.atStartOfDay(zone).toInstant());
        Date tomorrow = Date.from(day.plusDays(1).atStartOfDay(zone).toInstant());

        // Today's visit count + 1
        redisTemplate.opsForHash().increment("VV", today, 1);

        // SADD returns 1 only if the IP was not yet in today's set, i.e. a new visitor today
        String today_uv_key = day + "UV";
        Long added = redisTemplate.opsForSet().add(today_uv_key, user_ip);
        if (added != null && added > 0) {
            redisTemplate.opsForHash().increment("UV", today, 1);
        }
        // The de-duplication set expires at the start of tomorrow
        redisTemplate.expireAt(today_uv_key, tomorrow);
    }
}
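The set used to de-duplicate today's IPs should expire at the start of the next calendar day. Computing "tomorrow" as the current time plus 24 hours would expire it in the middle of tomorrow instead. Below is a minimal java.time sketch of the next-midnight calculation. DayBoundary is a hypothetical helper, and the time zone is an assumption you pass in.

```java
import java.time.LocalDate;
import java.time.ZoneId;
import java.time.ZonedDateTime;
import java.util.Date;

/** Hypothetical helper for "expire at the start of tomorrow". */
public final class DayBoundary {
    private DayBoundary() {}

    /** Start of the calendar day after now, in now's time zone. */
    public static ZonedDateTime nextMidnight(ZonedDateTime now) {
        LocalDate tomorrow = now.toLocalDate().plusDays(1);
        // atStartOfDay(zone) also copes with zones where a DST change skips 00:00
        return tomorrow.atStartOfDay(now.getZone());
    }

    /** The same instant as a java.util.Date, e.g. for RedisTemplate.expireAt(key, date). */
    public static Date nextMidnightDate(ZoneId zone) {
        return Date.from(nextMidnight(ZonedDateTime.now(zone)).toInstant());
    }
}
```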

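Part 5: Data Management Module

0. Setting up the MySQL service

After creating the database, run the SQL file in the project's static directory to import the table schema and data.

1. Integrating MybatisPlus with MySQL

I also covered MybatisPlus integration in an earlier tutorial: blog

- Dependencies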

<dependency>
    <groupId>com.baomidou</groupId>
    <artifactId>mybatis-plus-boot-starter</artifactId>
    <!-- Spring Boot does not manage this version, so it must be given explicitly -->
    <version>3.5.3.1</version>
</dependency>
<!-- mybatis-plus-boot-starter already brings in MyBatis and mybatis-spring,
     so a separate mybatis-spring-boot-starter (which would also need a version) is not needed -->
<dependency>
    <groupId>mysql</groupId>
    <artifactId>mysql-connector-java</artifactId>
    <version>8.0.32</version>
</dependency>
<!-- PageHelper pagination plugin -->
<dependency>
    <groupId>com.github.pagehelper</groupId>
    <artifactId>pagehelper-spring-boot-starter</artifactId>
    <version>1.4.4</version>
</dependency>

- YAML configuration

spring:
  elasticsearch:
    rest:
      uris: http://<es-server-ip>:9200
  redis:
    host: <server-ip>
    database: 0
    port: 6379
  datasource:
    driver-class-name: com.mysql.cj.jdbc.Driver
    url: jdbc:mysql://localhost:3306/<database>?serverTimezone=UTC&useUnicode=true&characterEncoding=utf-8
    username: root
    password: <password>

# MyBatis-Plus settings
mybatis-plus:
  # Package scanned for type aliases: every class in it gets an alias
  type-aliases-package: com.tracy.search.entity
  # XML mapper location; classpath here corresponds to the resources directory.
  # Declare custom methods in a Mapper interface and bind them to XML statements to write SQL by hand
  mapper-locations: classpath*:mapper/*.xml
  configuration:
    # Map underscore column names to camelCase fields, for handwritten SQL and built-in methods alike (enabled by default)
    map-underscore-to-camel-case: true
    # Print the generated SQL
    log-impl: org.apache.ibatis.logging.stdout.StdOutImpl

# Custom configuration variables
redis:
  N: 1000
  hotUser: 1000
  hotKeyword: 1000
  time: 60
  access: 1000

- Configuration class

package com.tracy.search.config;

import com.baomidou.mybatisplus.annotation.DbType;
import com.baomidou.mybatisplus.extension.plugins.MybatisPlusInterceptor;
import com.baomidou.mybatisplus.extension.plugins.inner.PaginationInnerInterceptor;
import org.mybatis.spring.annotation.MapperScan;
import org.springframework.context.annotation.Bean;
import org.springframework.context.annotation.Configuration;

@Configuration
@MapperScan("com.tracy.search.repository") // scan the DAO (mapper) package
public class MybatisPlusConfig {

    /**
     * Register the pagination plugin
     */
    @Bean
    public MybatisPlusInterceptor mybatisPlusInterceptor() {
        MybatisPlusInterceptor interceptor = new MybatisPlusInterceptor();
        // New-style pagination plugin; first- and second-level caches follow MyBatis's rules
        interceptor.addInnerInterceptor(new PaginationInnerInterceptor(DbType.MYSQL));
        return interceptor;
    }
}

2. Implementing CRUD

- controller

package com.tracy.search.controller;

import com.baomidou.mybatisplus.extension.plugins.pagination.Page;
import com.tracy.search.entity.Text;
import com.tracy.search.service.ManageService;
import org.springframework.beans.factory.annotation.Autowired;
import org.springframework.web.bind.annotation.*;

import java.util.List;

@RestController
@RequestMapping("/manage")
public class ManageController {

    @Autowired
    ManageService manageService;

    // Insert a single record
    @PostMapping("/insert")
    public Boolean insert(@RequestBody Text text) {
        return manageService.save(text);
    }

    // Delete one record or a batch of records by ID
    @PostMapping("/delete")
    public Boolean delete(@RequestBody List<Integer> ids) {
        boolean res = true;
        for (Integer id : ids) {
            if (!manageService.removeById(id)) {
                res = false;
            }
        }
        return res;
    }

    // Update a record by ID
    @PostMapping("/update")
    public Boolean update(@RequestBody Text text) {
        return manageService.updateById(text);
    }

    // Conditional, paginated query; pageNum and pageSize are query parameters with defaults,
    // so a missing value does not cause a NullPointerException
    @PostMapping("/query")
    public Page<Text> query(@RequestBody Text text,
                            @RequestParam(defaultValue = "1") Integer pageNum,
                            @RequestParam(defaultValue = "10") Integer pageSize) {
        Page<Text> textPage = new Page<>(pageNum, pageSize);
        return manageService.query(textPage, text);
    }
}

- service

package com.tracy.search.service;

import com.baomidou.mybatisplus.extension.plugins.pagination.Page;
import com.baomidou.mybatisplus.extension.service.IService;
import com.tracy.search.entity.Text;

public interface ManageService extends IService<Text> {

    Page<Text> query(Page<Text> textPage, Text text);
}

package com.tracy.search.service;

import com.baomidou.mybatisplus.core.conditions.query.QueryWrapper;
import com.baomidou.mybatisplus.extension.plugins.pagination.Page;
import com.baomidou.mybatisplus.extension.service.impl.ServiceImpl;
import com.tracy.search.entity.Text;
import com.tracy.search.repository.TextDao;
import org.springframework.beans.factory.annotation.Autowired;
import org.springframework.stereotype.Service;
import org.springframework.transaction.annotation.Transactional;

@Service
@Transactional
public class ManageServiceImpl extends ServiceImpl<TextDao, Text> implements ManageService {

    @Autowired
    TextDao textDao;

    @Override
    public Page<Text> query(Page<Text> textPage, Text text) {
        // Each condition is applied only when the field is set:
        // exact application number, and records dated on or after the given date
        return textDao.selectPage(textPage, new QueryWrapper<Text>()
                .eq(text.getApplicationNo() != null, "application_no", text.getApplicationNo())
                .ge(text.getDate() != null, "date", text.getDate()));
    }
}

- dao

package com.tracy.search.repository;

import com.baomidou.mybatisplus.core.mapper.BaseMapper;
import com.tracy.search.entity.Text;
import org.springframework.stereotype.Repository;

// Extends MyBatis-Plus's BaseMapper, which provides basic CRUD and pagination methods
@Repository
public interface TextDao extends BaseMapper<Text> {
}

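Part 6: Synchronizing MySQL and Elasticsearch

1. Overview

- Requirement

Inserting, deleting, or updating records in the data management module leaves MySQL and Elasticsearch inconsistent, so the two must be synchronized. The approach used here is to listen to the MySQL binlog and replay each change in Elasticsearch.

2. Configuration

- Edit the MySQL configuration file my.ini (my.cnf on Linux) in the [mysqld] section. On MySQL 5.7 the server will not start with the binlog enabled unless server-id is also set:

[mysqld]
log_bin=mysql-bin
binlog-format=ROW
server-id=1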

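MySQL's binlog-format parameter controls the format of the binary log. There are three formats: STATEMENT, ROW, and MIXED.

- STATEMENT format

STATEMENT logs the SQL statements themselves rather than the changes to individual rows. It was the default before MySQL 5.7.7; ROW has been the default since then. For an UPDATE or DELETE, only the statement text is logged, not the rows it changed. The log is small, but statements with nondeterministic results (for example ones using UUID() or LIMIT without ORDER BY) can produce different data when replayed.

- ROW format

ROW logs the change to each row instead of the SQL statement. For an UPDATE it records each row before and after the change, and for a DELETE it records the deleted rows. The log is larger, but it describes exactly what changed, which keeps copies of the data consistent. A binlog listener like the one in this project depends on it, because the row events it handles (WriteRowsEventData, UpdateRowsEventData, DeleteRowsEventData) are only produced for row-based logging.

- MIXED format

MIXED combines the two. The server logs statements by default and switches to row-based logging for statements that are unsafe to replay as SQL. The log stays smaller than with ROW while remaining consistent, but the format a given change ends up in depends on the statement.

Choose based on your requirements: ROW when consistency matters most, STATEMENT when log size matters most, and MIXED as a compromise. This project needs ROW, because the synchronization code reads the row data.

After editing the configuration file, restart the MySQL service and check that the settings took effect:

show variables like 'log_bin';
show variables like 'binlog_format';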

- Dependencies

<!-- binlog -->
<dependency>
    <groupId>com.github.shyiko</groupId>
    <artifactId>mysql-binlog-connector-java</artifactId>
    <version>0.21.0</version>
</dependency>

- YAML configuration

spring:
  elasticsearch:
    rest:
      uris: http://<es-server-ip>:9200
  redis:
    host: <server-ip>
    database: 0
    port: 6379
  datasource:
    driver-class-name: com.mysql.cj.jdbc.Driver
    url: jdbc:mysql://localhost:3306/<database>?serverTimezone=UTC&useUnicode=true&characterEncoding=utf-8
    username: root
    password: <password>

# Settings for synchronizing MySQL to Elasticsearch
sync:
  mysqlHost: localhost
  mysqlPort: 3306
  base: ClaimNet
  table: text
  esHost: <es-server-ip>
  esPort: 9200
  index: text

# MyBatis-Plus settings
mybatis-plus:
  # Package scanned for type aliases: every class in it gets an alias
  type-aliases-package: com.tracy.search.entity
  # XML mapper location; classpath here corresponds to the resources directory.
  # Declare custom methods in a Mapper interface and bind them to XML statements to write SQL by hand
  mapper-locations: classpath*:mapper/*.xml
  configuration:
    # Map underscore column names to camelCase fields, for handwritten SQL and built-in methods alike (enabled by default)
    map-underscore-to-camel-case: true
    # Print the generated SQL
    log-impl: org.apache.ibatis.logging.stdout.StdOutImpl

# Custom configuration variables
redis:
  N: 1000
  hotUser: 1000
  hotKeyword: 1000
  time: 60
  access: 1000

- Sync configuration

package com.tracy.search.config;

import com.github.shyiko.mysql.binlog.BinaryLogClient;
import com.github.shyiko.mysql.binlog.event.deserialization.EventDeserializer;
import org.springframework.beans.factory.annotation.Value;
import org.springframework.context.annotation.Bean;
import org.springframework.context.annotation.Configuration;

import java.util.concurrent.TimeUnit;

@Configuration
public class SyncConfig {

    @Value("${sync.mysqlHost}")
    private String mysqlHostname;

    @Value("${sync.mysqlPort}")
    private Integer mysqlPort;

    @Value("${spring.datasource.username}")
    private String mysqlUsername;

    @Value("${spring.datasource.password}")
    private String mysqlPassword;

    @Bean
    public BinaryLogClient binaryLogClient() {
        BinaryLogClient binaryLogClient = new BinaryLogClient(mysqlHostname, mysqlPort, mysqlUsername, mysqlPassword);
        // Keep-alive interval of the binlog client
        binaryLogClient.setKeepAliveInterval(TimeUnit.SECONDS.toMillis(1));
        // Enable the binlog client's keep-alive mechanism
        binaryLogClient.setKeepAlive(true);
        // Deserializer for binlog events: DATE/TIME columns as long, CHAR/BINARY columns as byte[]
        EventDeserializer eventDeserializer = new EventDeserializer();
        eventDeserializer.setCompatibilityMode(
                EventDeserializer.CompatibilityMode.DATE_AND_TIME_AS_LONG,
                EventDeserializer.CompatibilityMode.CHAR_AND_BINARY_AS_BYTE_ARRAY
        );
        binaryLogClient.setEventDeserializer(eventDeserializer);
        return binaryLogClient;
    }
}

3. binlog listening logic

package com.tracy.search.sync;

import java.io.IOException;
import java.util.HashMap;
import java.util.Map;

import com.github.shyiko.mysql.binlog.BinaryLogClient;
import com.github.shyiko.mysql.binlog.event.*;
import com.tracy.search.service.SyncService;
import org.springframework.beans.factory.annotation.Autowired;
import org.springframework.beans.factory.annotation.Value;
import org.springframework.stereotype.Component;

@Component
public class MysqlElasticsearchSync {

    @Autowired
    BinaryLogClient binaryLogClient;

    @Autowired
    SyncService syncService;

    @Value("${sync.base}")
    String basename;

    @Value("${sync.table}")
    String tablename;

    // Row events only carry a numeric table ID; TABLE_MAP events map it to "database.table"
    private static final Map<Long, String> tableMap = new HashMap<>();

    public void start() throws IOException {
        String table_name = basename + "." + tablename;
        // Register the binlog event listener
        binaryLogClient.registerEventListener(event -> {
            EventData data = event.getData();
            if (data instanceof TableMapEventData) {
                // TABLE_MAP event: remember which table this ID refers to
                TableMapEventData tableMapEventData = (TableMapEventData) data;
                long tableId = tableMapEventData.getTableId();
                String tableName = tableMapEventData.getDatabase() + "." + tableMapEventData.getTable();
                tableMap.put(tableId, tableName);
            } else if (data instanceof WriteRowsEventData) {
                // Insert event
                WriteRowsEventData eventData = (WriteRowsEventData) data;
                if (table_name.equals(tableMap.get(eventData.getTableId()))) {
                    try {
                        syncService.handleInsert(eventData);
                    } catch (Exception e) {
                        e.printStackTrace();
                    }
                }
            } else if (data instanceof DeleteRowsEventData) {
                // Delete event
                DeleteRowsEventData eventData = (DeleteRowsEventData) data;
                if (table_name.equals(tableMap.get(eventData.getTableId()))) {
                    try {
                        syncService.handleDelete(eventData);
                    } catch (Exception e) {
                        e.printStackTrace();
                    }
                }
            } else if (data instanceof UpdateRowsEventData) {
                // Update event
                UpdateRowsEventData eventData = (UpdateRowsEventData) data;
                if (table_name.equals(tableMap.get(eventData.getTableId()))) {
                    try {
                        syncService.handleUpdate(eventData);
                    } catch (Exception e) {
                        e.printStackTrace();
                    }
                }
            }
        });
        // Blocks and keeps reading the binlog stream
        binaryLogClient.connect();
    }
}
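Row events such as WriteRowsEventData carry only a numeric table ID. The TABLE_MAP event that precedes them maps that ID to a database and table name, which is why the listener keeps tableMap. The self-contained simulation below shows that routing, with plain method calls standing in for the library's event types. TableIdRouter and its methods are hypothetical.

```java
import java.util.ArrayList;
import java.util.HashMap;
import java.util.List;
import java.util.Map;

/** Hypothetical simulation of routing row events by the table ID announced in TABLE_MAP events. */
public class TableIdRouter {
    private final Map<Long, String> tableMap = new HashMap<>();
    private final String watched;                     // e.g. "ClaimNet.text"
    private final List<String> handled = new ArrayList<>();

    public TableIdRouter(String watched) {
        this.watched = watched;
    }

    /** Stands in for a TableMapEventData: remember what the ID means. */
    public void onTableMap(long tableId, String database, String table) {
        tableMap.put(tableId, database + "." + table);
    }

    /** Stands in for a row event: only the watched table is handled, everything else is skipped. */
    public void onRows(long tableId, String kind) {
        if (watched.equals(tableMap.get(tableId))) {
            handled.add(kind);
        }
    }

    public List<String> handled() {
        return handled;
    }
}
```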

package com.tracy.search.service;

import com.github.shyiko.mysql.binlog.event.DeleteRowsEventData;
import com.github.shyiko.mysql.binlog.event.UpdateRowsEventData;
import com.github.shyiko.mysql.binlog.event.WriteRowsEventData;

public interface SyncService {

    void handleInsert(WriteRowsEventData eventData);

    void handleDelete(DeleteRowsEventData eventData);

    void handleUpdate(UpdateRowsEventData eventData);
}

package com.tracy.search.service;

import com.github.shyiko.mysql.binlog.event.DeleteRowsEventData;
import com.github.shyiko.mysql.binlog.event.UpdateRowsEventData;
import com.github.shyiko.mysql.binlog.event.WriteRowsEventData;
import com.tracy.search.entity.Text;
import com.tracy.search.repository.TextRepository;
import org.springframework.beans.factory.annotation.Autowired;
import org.springframework.stereotype.Service;

import java.io.Serializable;
import java.nio.charset.StandardCharsets;
import java.util.Map;

@Service
public class SyncServiceImpl implements SyncService {

    @Autowired
    TextRepository textRepository;

    // Converts one binlog row to a Text; the column order must match the text table.
    // With CHAR_AND_BINARY_AS_BYTE_ARRAY (see SyncConfig), text columns arrive as byte[],
    // so they are decoded instead of being cast to String.
    private Text toText(Serializable[] row) {
        return new Text(toLong(row[0]), toStr(row[1]), toStr(row[2]), toStr(row[3]),
                toStr(row[4]), toStr(row[5]), toStr(row[6]));
    }

    // INT columns arrive as Integer and BIGINT as Long
    private static Long toLong(Serializable v) {
        return v == null ? null : ((Number) v).longValue();
    }

    private static String toStr(Serializable v) {
        if (v == null) {
            return null;
        }
        if (v instanceof byte[]) {
            return new String((byte[]) v, StandardCharsets.UTF_8);
        }
        return String.valueOf(v);
    }

    // One statement can change several rows, so every row in the event is handled
    @Override
    public void handleInsert(WriteRowsEventData eventData) {
        for (Serializable[] row : eventData.getRows()) {
            textRepository.save(toText(row));
        }
    }

    @Override
    public void handleDelete(DeleteRowsEventData eventData) {
        for (Serializable[] row : eventData.getRows()) {
            // Assumes TextRepository's ID type is Long
            textRepository.deleteById(toLong(row[0]));
        }
    }

    @Override
    public void handleUpdate(UpdateRowsEventData eventData) {
        // Each entry is (row before the update, row after the update); index the new values
        for (Map.Entry<Serializable[], Serializable[]> entry : eventData.getRows()) {
            textRepository.save(toText(entry.getValue()));
        }
    }
}
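SyncConfig sets EventDeserializer.CompatibilityMode.CHAR_AND_BINARY_AS_BYTE_ARRAY, so CHAR and VARCHAR columns arrive in the row array as byte[]. It also sets DATE_AND_TIME_AS_LONG, so temporal columns arrive as Long. Casting such a column straight to String therefore fails with a ClassCastException. The standalone conversion helper below assumes the table uses a UTF-8 character set. RowValues is a hypothetical class.

```java
import java.io.Serializable;
import java.nio.charset.StandardCharsets;

/** Hypothetical helpers for reading columns out of a binlog row (Serializable[]). */
public final class RowValues {
    private RowValues() {}

    /** byte[] is decoded as UTF-8; null stays null; anything else goes through String.valueOf. */
    public static String asString(Serializable value) {
        if (value == null) {
            return null;
        }
        if (value instanceof byte[]) {
            return new String((byte[]) value, StandardCharsets.UTF_8);
        }
        return String.valueOf(value);
    }

    /** INT arrives as Integer and BIGINT as Long; Number covers both. */
    public static Long asLong(Serializable value) {
        return value == null ? null : ((Number) value).longValue();
    }
}
```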

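- Start the binlog listener when the application starts

package com.tracy.search;

import com.tracy.search.sync.MysqlElasticsearchSync;
import org.springframework.boot.SpringApplication;
import org.springframework.boot.autoconfigure.SpringBootApplication;
import org.springframework.context.ConfigurableApplicationContext;
import org.springframework.transaction.annotation.EnableTransactionManagement;

import java.io.IOException;

@EnableTransactionManagement
@SpringBootApplication
public class SearchApplication {

    public static void main(String[] args) throws IOException {
        ConfigurableApplicationContext context = SpringApplication.run(SearchApplication.class, args);
        // Take the bean from the Spring context: an instance created with `new` has no injected
        // fields (binaryLogClient, syncService, ...) and would throw a NullPointerException.
        // connect() blocks, so the main thread keeps listening after the application has started.
        context.getBean(MysqlElasticsearchSync.class).start();
    }
}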

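Part 7: Authorization

For some background on authorization, read my earlier post first: blog

This part builds authorization for the admin backend out of cookies, JWT, tokens, Redis, AOP, and a password hashing algorithm.

In short, a user who wants to access the admin backend first registers on the login page, and the super administrator decides whether to approve the registration. Once approved, the user can log in and access the backend. So that users do not have to enter their password for every request, a token keeps access valid until it expires, after which the user logs in again to obtain a new token.

1. Configuration

- YAML

auth:
  # token lifetime in seconds
  token_alive: 7200
  # secret used to sign tokens; use a long random value and keep it private
  secret_key: tracy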

- Dependencies

<!-- JWT library -->
<dependency>
    <groupId>io.jsonwebtoken</groupId>
    <artifactId>jjwt-api</artifactId>
    <version>0.11.2</version>
</dependency>
<dependency>
    <groupId>io.jsonwebtoken</groupId>
    <artifactId>jjwt-impl</artifactId>
    <version>0.11.2</version>
    <scope>runtime</scope>
</dependency>
<dependency>
    <groupId>io.jsonwebtoken</groupId>
    <artifactId>jjwt-jackson</artifactId>
    <version>0.11.2</version>
    <scope>runtime</scope>
</dependency>

2. Registration and login (obtaining a token)

- controller

package com.tracy.search.controller;

import com.tracy.search.entity.User;
import com.tracy.search.service.UserService;
import com.tracy.search.util.Result;
import org.springframework.beans.factory.annotation.Autowired;
import org.springframework.web.bind.annotation.PostMapping;
import org.springframework.web.bind.annotation.RequestBody;
import org.springframework.web.bind.annotation.RestController;

@RestController
public class AuthController {

    @Autowired
    UserService userService;

    @PostMapping("/register")
    public Result<User> register(@RequestBody User user) {
        return userService.register(user);
    }

    @PostMapping("/login")
    public Result<User> login(@RequestBody User user) {
        return userService.login(user);
    }
}

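Add the following to ManageController, together with imports for User, UserService, Result, and javax.servlet.http.HttpServletRequest:

@Autowired
UserService userService;

// The super administrator approves a pending registration
@PostMapping("/AcceptRegister")
public Result<User> acc_register(HttpServletRequest request, @RequestBody User user) {
    return userService.acc_register(user);
}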

- service

package com.tracy.search.service;

import com.tracy.search.entity.User;
import com.tracy.search.util.Result;

public interface UserService {

    Result<User> register(User user);

    Result<User> acc_register(User user);

    Result<User> login(User user);
}

package com.tracy.search.service;

import com.baomidou.mybatisplus.core.conditions.query.QueryWrapper;
import com.baomidou.mybatisplus.extension.service.impl.ServiceImpl;
import com.tracy.search.entity.User;
import com.tracy.search.repository.UserDao;
import com.tracy.search.util.Encode;
import com.tracy.search.util.Result;
import com.tracy.search.util.JWT;
import org.springframework.beans.factory.annotation.Autowired;
import org.springframework.beans.factory.annotation.Value;
import org.springframework.data.redis.core.RedisTemplate;
import org.springframework.stereotype.Service;
import org.springframework.transaction.annotation.Transactional;

import java.util.ArrayList;
import java.util.List;
import java.util.concurrent.TimeUnit;

@Service
@Transactional
public class UserServiceImpl extends ServiceImpl<UserDao, User> implements UserService {

    @Autowired
    UserDao userDao;

    @Autowired
    RedisTemplate<String, Object> redisTemplate;

    @Value("${auth.token_alive}")
    Integer token_alive;

    @Override
    public Result<User> register(User user) {
        // Exact match on the username
        if (userDao.selectOne(new QueryWrapper<User>().eq("username", user.getUsername())) != null) {
            return new Result<>(1, "Username already exists", null);
        }
        user.setPassword(Encode.en(user.getPassword()));
        userDao.insert(user);
        return new Result<>(0, "", null);
    }

    @Override
    public Result<User> acc_register(User user) {
        user.setAccept(true);
        userDao.updateById(user);
        return new Result<>(0, "", null);
    }

    @Override
    public Result<User> login(User user) {
        // Check that the user exists, the password is correct, and the registration was approved
        user.setPassword(Encode.en(user.getPassword()));
        if (userDao.selectOne(new QueryWrapper<User>()
                .eq("username", user.getUsername())
                .eq("password", user.getPassword())
                .eq("accept", 1)) != null) {
            // Generate a token, cache it in Redis with the same lifetime, and return it
            user.setToken(JWT.generate(user.getUsername()));
            redisTemplate.opsForValue().set(user.getUsername(), user.getToken(), token_alive, TimeUnit.SECONDS);
            // Do not send the password hash back to the client
            user.setPassword(null);
            List<User> data = new ArrayList<>();
            data.add(user);
            return new Result<>(0, "", data);
        } else {
            return new Result<>(1, "User does not exist or the password is wrong", null);
        }
    }
}

- dao

package com.tracy.search.repository;

import com.baomidou.mybatisplus.core.mapper.BaseMapper;
import com.tracy.search.entity.User;
import org.springframework.stereotype.Repository;

@Repository
public interface UserDao extends BaseMapper<User> {
}

- Password hashing utility class

package com.tracy.search.util;

import java.nio.charset.StandardCharsets;
import java.security.MessageDigest;
import java.security.NoSuchAlgorithmException;

public class Encode {

    // One-way SHA-256 hash of the password, returned as 64 lowercase hex characters
    // (the password column must hold at least 64 characters).
    // The output is deterministic, so register() and login() can compare hashes directly.
    public static String en(String password) {
        try {
            byte[] digest = MessageDigest.getInstance("SHA-256")
                    .digest(password.getBytes(StandardCharsets.UTF_8));
            StringBuilder sb = new StringBuilder();
            for (byte b : digest) {
                sb.append(String.format("%02x", b));
            }
            return sb.toString();
        } catch (NoSuchAlgorithmException e) {
            // Every Java platform is required to support SHA-256
            throw new IllegalStateException(e);
        }
    }
}
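Any unsalted, fast hash stored in the user table has two weaknesses: identical passwords produce identical hashes, and an attacker can test guesses very quickly. A common alternative is a salted, deliberately slow key-derivation function such as PBKDF2, which ships with the JDK. The sketch below shows one way to do it; it is not the project's method. PasswordHasher is hypothetical, and the iteration count is only illustrative, so check current OWASP guidance before choosing one. Because the salt is random, the same password hashes differently each time. Login would therefore have to load the user by username and call verify(), rather than matching the password column in the query.

```java
import java.security.MessageDigest;
import java.security.NoSuchAlgorithmException;
import java.security.SecureRandom;
import java.security.spec.InvalidKeySpecException;
import java.util.Base64;
import javax.crypto.SecretKeyFactory;
import javax.crypto.spec.PBEKeySpec;

/** Hypothetical salted PBKDF2-HMAC-SHA256 password hasher using only the JDK. */
public final class PasswordHasher {
    private static final int ITERATIONS = 100_000;   // illustrative; tune for your hardware
    private static final int KEY_BITS = 256;
    private static final SecureRandom RANDOM = new SecureRandom();

    private PasswordHasher() {}

    /** Returns "iterations:saltBase64:hashBase64", which fits in one VARCHAR column. */
    public static String hash(char[] password) {
        byte[] salt = new byte[16];
        RANDOM.nextBytes(salt);                       // a fresh random salt per password
        byte[] dk = derive(password, salt, ITERATIONS);
        Base64.Encoder b64 = Base64.getEncoder();
        return ITERATIONS + ":" + b64.encodeToString(salt) + ":" + b64.encodeToString(dk);
    }

    /** Re-derives the hash with the stored salt and iteration count and compares it. */
    public static boolean verify(char[] password, String stored) {
        String[] parts = stored.split(":");
        int iterations = Integer.parseInt(parts[0]);
        byte[] salt = Base64.getDecoder().decode(parts[1]);
        byte[] expected = Base64.getDecoder().decode(parts[2]);
        byte[] actual = derive(password, salt, iterations);
        return MessageDigest.isEqual(expected, actual);  // constant-time comparison
    }

    private static byte[] derive(char[] password, byte[] salt, int iterations) {
        PBEKeySpec spec = new PBEKeySpec(password, salt, iterations, KEY_BITS);
        try {
            SecretKeyFactory factory = SecretKeyFactory.getInstance("PBKDF2WithHmacSHA256");
            return factory.generateSecret(spec).getEncoded();
        } catch (NoSuchAlgorithmException | InvalidKeySpecException e) {
            throw new IllegalStateException(e);
        } finally {
            spec.clearPassword();                     // wipe the spec's copy of the password
        }
    }
}
```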

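- JWT generation and parsing utility class:

package com.tracy.search.util;

import io.jsonwebtoken.Claims;
import io.jsonwebtoken.Jwts;
import io.jsonwebtoken.SignatureAlgorithm;
import io.jsonwebtoken.security.Keys;
import org.springframework.beans.factory.annotation.Value;
import org.springframework.stereotype.Component;

import javax.crypto.SecretKey;
import java.nio.charset.StandardCharsets;
import java.security.MessageDigest;
import java.security.NoSuchAlgorithmException;
import java.util.Date;

// Spring cannot inject @Value into static fields, so this class is a bean and
// the configured values are copied into the static fields through setters.
@Component
public class JWT {

    // Signing key derived from the private secret
    private static SecretKey key;
    // Token lifetime in seconds
    private static long tokenAliveSeconds;

    @Value("${auth.secret_key}")
    public void setSecretKey(String secret) throws NoSuchAlgorithmException {
        // HS256 requires a key of at least 256 bits; a SHA-256 digest of the secret is always 32 bytes
        byte[] digest = MessageDigest.getInstance("SHA-256").digest(secret.getBytes(StandardCharsets.UTF_8));
        JWT.key = Keys.hmacShaKeyFor(digest);
    }

    @Value("${auth.token_alive}")
    public void setTokenAlive(long seconds) {
        JWT.tokenAliveSeconds = seconds;
    }

    public static String generate(String username) {
        Date now = new Date();
        // token_alive is in seconds, while Date works in milliseconds
        Date expirationDate = new Date(now.getTime() + tokenAliveSeconds * 1000L);
        return Jwts.builder()
                .setSubject(username)
                .setIssuedAt(now)
                .setExpiration(expirationDate)
                .signWith(key, SignatureAlgorithm.HS256)
                .compact();
    }

    // Throws a JwtException (e.g. ExpiredJwtException) if the token is invalid or expired
    public static Claims parse(String token) {
        return Jwts.parserBuilder()
                .setSigningKey(key)
                .build()
                .parseClaimsJws(token)
                .getBody();
    }
}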
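To see what jjwt produces, here is a bare-bones HS256 JWT built with only the JDK. The token is base64url(header) + "." + base64url(payload) + "." + base64url(HMAC-SHA256 of the first two parts). Two details matter for the configuration above. First, the exp claim is in seconds since the epoch, while java.util.Date arithmetic is in milliseconds. Second, HS256 expects a key of at least 256 bits. MiniJwt is a hypothetical illustration with a hand-built JSON payload, so the subject must not contain quotes. The real project should keep using jjwt.

```java
import java.nio.charset.StandardCharsets;
import java.security.InvalidKeyException;
import java.security.MessageDigest;
import java.security.NoSuchAlgorithmException;
import java.util.Base64;
import javax.crypto.Mac;
import javax.crypto.spec.SecretKeySpec;

/** Hypothetical minimal HS256 JWT with only "sub" and "exp" claims, for illustration. */
public final class MiniJwt {
    private static final Base64.Encoder ENC = Base64.getUrlEncoder().withoutPadding();
    private static final Base64.Decoder DEC = Base64.getUrlDecoder();
    private static final String HEADER =
            ENC.encodeToString("{\"alg\":\"HS256\",\"typ\":\"JWT\"}".getBytes(StandardCharsets.UTF_8));

    private MiniJwt() {}

    public static String sign(String subject, long nowSeconds, long ttlSeconds, byte[] key) {
        long exp = nowSeconds + ttlSeconds;  // "exp" is in seconds since the epoch
        String json = "{\"sub\":\"" + subject + "\",\"exp\":" + exp + "}";
        String signingInput = HEADER + "." + ENC.encodeToString(json.getBytes(StandardCharsets.UTF_8));
        return signingInput + "." + ENC.encodeToString(hmac(signingInput, key));
    }

    /** Returns the subject if the signature matches and the token has not expired, else null. */
    public static String verify(String token, long nowSeconds, byte[] key) {
        String[] parts = token.split("\\.");
        if (parts.length != 3) {
            return null;
        }
        byte[] expected = hmac(parts[0] + "." + parts[1], key);
        if (!MessageDigest.isEqual(expected, DEC.decode(parts[2]))) {
            return null;                     // wrong key or tampered token
        }
        String json = new String(DEC.decode(parts[1]), StandardCharsets.UTF_8);
        // Naive field extraction, valid only for the fixed payload shape written by sign()
        String sub = json.substring(json.indexOf("\"sub\":\"") + 7, json.indexOf("\",\"exp\""));
        long exp = Long.parseLong(json.substring(json.indexOf("\"exp\":") + 6, json.length() - 1));
        return nowSeconds < exp ? sub : null;
    }

    private static byte[] hmac(String data, byte[] key) {
        try {
            Mac mac = Mac.getInstance("HmacSHA256");
            mac.init(new SecretKeySpec(key, "HmacSHA256"));
            return mac.doFinal(data.getBytes(StandardCharsets.UTF_8));
        } catch (NoSuchAlgorithmException | InvalidKeyException e) {
            throw new IllegalStateException(e);
        }
    }
}
```

A token is accepted only while the current time is before exp. This follows RFC 7519, under which a JWT must not be accepted on or after its expiration time.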

- Result wrapper utility class

package com.tracy.search.util;

import lombok.AllArgsConstructor;
import lombok.Data;
import lombok.NoArgsConstructor;

import java.util.List;

@Data
@NoArgsConstructor
@AllArgsConstructor
public class Result<T> {

    private int code; // 0 = success, 1 = failure

    private String message;

    private List<T> data;
}

3. Permission interception with AOP

package com.tracy.search.aop;

import com.tracy.search.util.JWT;
import io.jsonwebtoken.Claims;
import io.jsonwebtoken.JwtException;
import org.aspectj.lang.annotation.Aspect;
import org.aspectj.lang.annotation.Before;
import org.springframework.beans.factory.annotation.Autowired;
import org.springframework.data.redis.core.RedisTemplate;
import org.springframework.stereotype.Component;
import org.springframework.web.context.request.RequestContextHolder;
import org.springframework.web.context.request.ServletRequestAttributes;

import javax.servlet.http.Cookie;
import javax.servlet.http.HttpServletRequest;
import java.util.Date;

@Aspect
@Component
public class AuthAspect {

    @Autowired
    RedisTemplate<String, Object> redisTemplate;

    @Before("execution(* com.tracy.search.controller.ManageController.*(..)) || " +
            "execution(* com.tracy.search.controller.AnalysisController.*(..))")
    public void checkToken() {
        // Get the current request from Spring; most intercepted methods do not take
        // an HttpServletRequest as their first argument
        HttpServletRequest request =
                ((ServletRequestAttributes) RequestContextHolder.currentRequestAttributes()).getRequest();
        Cookie[] cookies = request.getCookies();
        // Read the token and username cookies (getCookies() returns null when there are none)
        String token = null;
        String username = null;
        if (cookies != null) {
            for (Cookie c : cookies) {
                if (c.getName().equals("token")) {
                    token = c.getValue();
                }
                if (c.getName().equals("username")) {
                    username = c.getValue();
                }
            }
        }
        // No token: reject the request
        if (token == null || username == null) {
            throw new SecurityException("Please log in");
        }
        // The token matches the one cached in Redis at login: accept
        if (token.equals(redisTemplate.opsForValue().get(username))) {
            return;
        }
        // Otherwise validate the JWT itself; parse() throws if the signature is wrong or the token has expired
        try {
            Claims claims = JWT.parse(token);
            if (!username.equals(claims.getSubject()) || claims.getExpiration().before(new Date())) {
                throw new SecurityException("Please log in");
            }
        } catch (JwtException | IllegalArgumentException e) {
            throw new SecurityException("Please log in");
        }
    }
}