无人机影像配准并发布(共线方程)

无人机影像 + DEM 计算四个角点坐标(刚性变换)

-

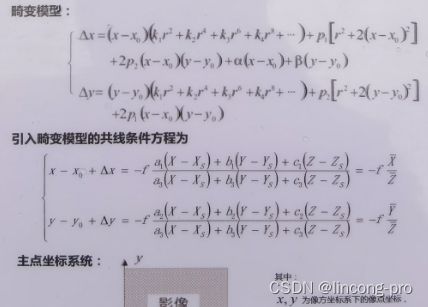

像空间坐标(x,y,-f)

-

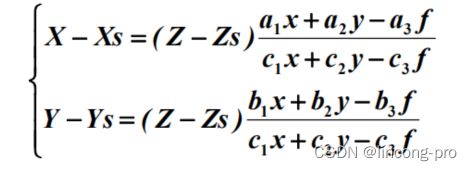

已知(x,y),求解(X,Y, Z)或者(Lat,Lon)

这里的Z是DEM上获取的坐标和Zs为相机坐标的高程,如果均为已知的情况下,则可以求解(X,Y),这里的(X,Y,Z)为地固地心坐标,单位为米。平地的情况只需要获取行高即可求解(X,Y),接着使用proj库将地固地心坐标转化为经纬度坐标即可。 -

地理配准

这里直接采用**gdal_translate和gdal_wrap**,gdal_translate转换过程如下,大概就是将jpg进行地理配准。请注意,GDAL的影像起点是左上角,但是我们的相机模型是左下角,所以需要变换Y轴。转换之后的tif只有专业的QGIS之类的软件才能读取。

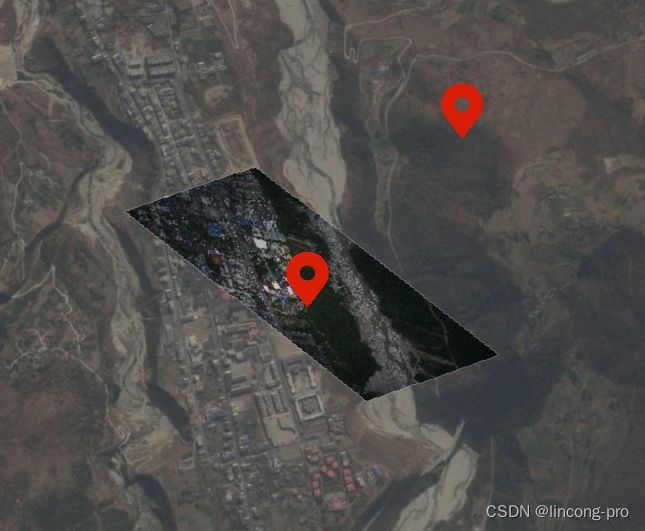

下面是QGIS读取的效果。但是为了geoserver能够识别,还需要转换,这时候需要

gdal_wrap,这个时候就很关键,我们需要设置其为透明。gdalwarp -r cubic -ovr AUTO -dstalpha D:\code\roadProj\public\demo\DSC00002\test.tif D:\code\roadProj\public\demo\DSC00002\test3_geotiff.tifgdal_translate.exe -of GTiff -gcp 0 5304 102.1265090139 29.6453703982 -gcp 7952 5304 102.1164515460 29.6474820822 -gcp 7952 0 102.1131839750 29.6445496193 -gcp 0 0 102.1233217949 29.6424804051 -ovr AUTO -co GCPs_Creation=YES D:\code\roadProj\public\demo\DSC00002\DSC00002.jpg D:\code\roadProj\public\demo\DSC00002\test.tif- geoserver发布,具体操作比较简单

代码逻辑

下面是求解影像四个角点经纬度的简单思路,主要还是共线方程,代码中的1000还是得根据距离地面的高度,即需要DEM的高程值才能求解得到较为精确的精度。

具体实现分为相机模型(固定的参数不部分),大疆无人机是WGS84椭球,EPSG:4978是地心地固的转换参数。

struct CameraModel {

double f = 7538.508; // 像素为单位

double u0 = 3982.417; // 像素为单位

double v0 = 2671.637;

double pixelSize = 4.5e-6; // 米为单位

double k1 = 2.470920e-9; // 径向畸变系数

double k2 = -2.767172e-16;

double k3 = 2.479935e-23;

double k4 = -6.583598e-31;

double p1 = 1.388595e-8; // 偏心畸变系数

double p2 = 1.781812e-7;

double alpha = -4.697031e-4; // CCD非正方形比例系数

double beta = -1.300023e-4;

double width = 7952; // 影像的高度和宽度

double height = 5304;

};```

```cpp

///

/// \brief The ComputeBoundingBox class

/// 计算影像的包围盒的经纬度坐标 + 高程,然后贴地

///

class ComputeBoundingBox {

public:

ComputeBoundingBox() {

transTool.init("EPSG:4326",

"EPSG:4978"); // 椭球坐标->地心坐标 XYZ

}

///

/// \brief resetR

/// \param phi 俯仰角

/// \param omega 横滚角

/// \param kappa 旋转角

///

void resetR(double phi, double omega, double kappa) {

this->phi = degreeToRadian(phi);

this->omega = degreeToRadian(omega);

this->kappa = degreeToRadian(kappa);

}

///

/// \brief resetCamera

/// \param lat 维度

/// \param lon 经度

/// \param height 高程

///

void resetCamera(const QString &imgNumber, double lat, double lon, double height) {

this->imgNumber = imgNumber;

this->cameraLat = lat;

this->cameraLon = lon;

this->height = height;

}

///

/// \brief compute 计算相机位置 + 旋转矩阵

///

void compute();

private:

///

/// \brief computeLatlon 根据像点坐标计算经纬度坐标(共线方程的逻辑)

/// @param vector2d uv x轴,y轴坐标

///

Eigen::Vector2d computeLatlon(Eigen::Vector2d &uv);

///

/// \brief degreeToRadian 度转弧度

/// \param degree

/// \return

///

double degreeToRadian(double degree) { return degree * M_PI / 180.0; }

// double computeDeltaX();

// double computeDeltaY();

Eigen::Vector3d cameraGeo;

Eigen::Matrix3d rMatrix;

Transform transTool;

CameraModel intrinsic; // 内参数矩阵

QString imgNumber; // 影像编号

double cameraLat; // 相机外参数矩阵

double cameraLon;

double height;

double phi;

double omega;

double kappa;

};

Eigen::Vector2d ComputeBoundingBox::computeLatlon(Eigen::Vector2d &uv) {

double u = uv.x();

double v = uv.y();

Eigen::Vector3d cameraSpace(u, v, -this->intrinsic.f); // 像空间坐标

double r = qSqrt(pow(u - this->intrinsic.u0, 2) + pow(v - this->intrinsic.v0, 2));

// (x-x0) * (k1*r^2 + k2*r^4 + k3*r^6 + k4*r8) + p1*(r^2 + 2*(x-x)^2) + 2p2*(x-x0)(y-y0) + alpha*(x-x0) +

// beta*(y-y0)

double deltaX = (u - this->intrinsic.u0) * (this->intrinsic.k1 * pow(r, 2) + this->intrinsic.k2 * pow(r, 4) +

this->intrinsic.k3 * pow(r, 6) + this->intrinsic.k4 * pow(r, 8)) +

this->intrinsic.p1 * (pow(r, 2) + 2 * pow((u - this->intrinsic.u0), 2)) +

2 * this->intrinsic.p2 * (u - this->intrinsic.u0) * (v - this->intrinsic.v0) +

this->intrinsic.alpha * (u - this->intrinsic.u0) + this->intrinsic.beta * (v - this->intrinsic.v0);

double deltaY = (v - this->intrinsic.v0) * (this->intrinsic.k1 * pow(r, 2) + this->intrinsic.k2 * pow(r, 4) +

this->intrinsic.k3 * pow(r, 6) + this->intrinsic.k4 * pow(r, 8)) +

this->intrinsic.p2 * (pow(r, 2) + 2 * pow((v - this->intrinsic.v0), 2)) +

2 * this->intrinsic.p1 * (u - this->intrinsic.u0) * (v - this->intrinsic.v0);

Eigen::Vector3d cameraOffset(deltaX - this->intrinsic.u0, deltaY - this->intrinsic.v0,

0); // 像点坐标偏移

Eigen::Vector3d cameraSpaceTrue = cameraSpace + cameraOffset; // 实际的像点坐标[最后一位该如何求解]

Eigen::Vector3d worldCoordBa =

this->rMatrix * cameraSpaceTrue * this->intrinsic.pixelSize; // (xBa, yBa, zBa) pixelSize这个参数多余

worldCoordBa =

Eigen::Vector3d(worldCoordBa.x() / worldCoordBa.z() * 1000, worldCoordBa.y() / worldCoordBa.z() * 1000, 0);

// qDebug() << worldCoordBa.x() << " " << worldCoordBa.y() << " " << worldCoordBa.z();

Eigen::Vector3d worldCoord = worldCoordBa + this->cameraGeo; // 真正的坐标

// std::cout << worldCoord.x() << " " << worldCoord.y() << " " << worldCoord.z();

// PJ_COORD latlonh = proj_coord(cameraLon, cameraLat, height, 0);

PJ_COORD geoxyz = proj_coord(worldCoord.x(), worldCoord.y(), worldCoord.z(), 0); // 地心坐标

PJ_COORD latlon = this->transTool.backward(geoxyz);

// std::cout << "lat:" << latlon.lpz.lam << " ,lon:" << latlon.lpz.phi << ", " << latlon.lpz.z;

return Eigen::Vector2d(latlon.lp.phi, latlon.lp.lam); // lat and lon

}

void ComputeBoundingBox::compute() {

PJ_COORD latlonh = proj_coord(cameraLon, cameraLat, height, 0);

PJ_COORD geoxyz = transTool.forward(latlonh);

this->cameraGeo = Eigen::Vector3d(geoxyz.xyz.x, geoxyz.xyz.y, geoxyz.xyz.z); // 地心坐标

// 计算绕X轴旋转的旋转矩阵 Rx(φ)

Eigen::Matrix3d Rx;

Rx << 1, 0, 0, 0, std::cos(phi), -std::sin(phi), 0, std::sin(phi), std::cos(phi);

// 计算绕Y轴旋转的旋转矩阵 Ry(ω)

Eigen::Matrix3d Ry;

Ry << std::cos(omega), 0, std::sin(omega), 0, 1, 0, -std::sin(omega), 0, std::cos(omega);

// 计算绕Z轴旋转的旋转矩阵 Rz(κ)

Eigen::Matrix3d Rz;

Rz << std::cos(kappa), -std::sin(kappa), 0, std::sin(kappa), std::cos(kappa), 0, 0, 0, 1;

// 计算总的旋转矩阵 R_total = Rz(κ) * Ry(ω) * Rx(φ)

this->rMatrix = Rz * Ry * Rx;

// 像素点畸变纠正

Eigen::Vector2d lb(0, 0); // 左下角为起点

Eigen::Vector2d rb(this->intrinsic.width, 0); // 右下角坐标

Eigen::Vector2d rt(this->intrinsic.width, this->intrinsic.height); // 右上角坐标

Eigen::Vector2d lt(0, this->intrinsic.height); // 左上角成果

std::vector<Eigen::Vector2d> latlonVec = {lb, rb, rt, lt};

qDebug() << "####################" << this->imgNumber << "########################";

for (Eigen::Vector2d &pixelCoord : latlonVec) {

pixelCoord = this->computeLatlon(pixelCoord);

qDebug() << "lat: " << QString::number(pixelCoord.x(), 'f', 10)

<< ",lon:" << QString::number(pixelCoord.y(), 'f', 10);

}

}