137. Hive: HQL Runtime Errors (continuously updated)

I. Adding a timestamp column to an int column

(1) Scenario

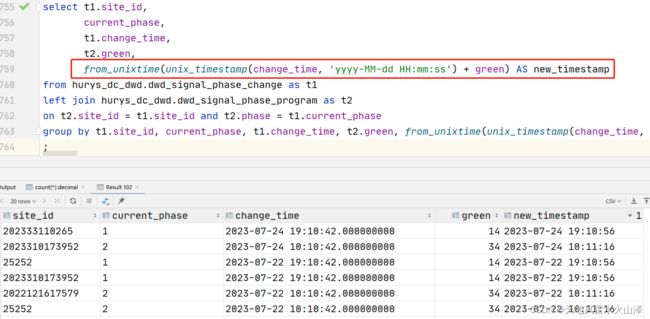

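change_time is a timestamp column holding the start time of one traffic-light cycle (green first, then yellow, then red). green is an int column holding the green-light duration in seconds. The goal is to compute the end time of the green light, i.e. change_time + green.

(2) A timestamp column and an int column cannot be added directly. Convert change_time to a Unix timestamp (seconds) first, add green to it, then convert the sum back to a date-time

Example: from_unixtime(unix_timestamp(change_time, 'yyyy-MM-dd HH:mm:ss') + green) AS new_timestamp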
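The conversion above can be sketched as a complete query. This is only a minimal illustration: the CTE name signal_cycle and its single sample row are hypothetical stand-ins for the real table, and only change_time and green come from the scenario. Note that from_unixtime returns a string, so an explicit cast is needed if a timestamp type is required downstream.

```sql
-- Hypothetical sample data standing in for the real table (assumption).
with signal_cycle as (
    select cast('2023-08-01 08:00:00' as timestamp) as change_time,  -- cycle start
           30 as green                                               -- green duration in seconds
)
select
    change_time,
    green,
    -- timestamp -> epoch seconds, add the green duration, convert back to a date-time string
    from_unixtime(unix_timestamp(change_time, 'yyyy-MM-dd HH:mm:ss') + green) as green_end_time,
    -- same value cast back to a timestamp type, if a string is not acceptable
    cast(from_unixtime(unix_timestamp(change_time, 'yyyy-MM-dd HH:mm:ss') + green) as timestamp) as green_end_ts
from signal_cycle;
```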

(3) SQL statement

Success!

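II. Using a with clause together with insert

(1) Scenario

In the DWS layer, a multi-level query is written with nested with clauses, and the result is then written with insert. If the insert is placed directly above the with clause, the statement fails with the error below.

(2) Error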

org.apache.hadoop.hive.ql.parse.ParseException:line 2:0 cannot recognize input near 'with' 'a1' 'as' in statement

(3) Solution

Place the insert after the with clause and before the select.
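The ordering rule can be sketched in a stripped-down form. The table names src_table and target_table and the columns id and name are hypothetical and used only for illustration; the failing variant is left commented out.

```sql
-- Hypothetical tables src_table / target_table (assumption, not from the original job).

-- Fails to parse: insert written above the with clause.
-- insert overwrite table target_table
-- with a1 as (select id, name from src_table)
-- select id, name from a1;

-- Parses: with clause first, then insert, then the select that reads the CTE.
with a1 as (
    select id, name
    from src_table
)
insert overwrite table target_table
select id, name
from a1;
```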

(4) SQL statement

    with a1 as(
        select
            b1.site_id,
            b1.phase_id,
            b1.phase_start,
            b1.program_id,
            b1.lane_direction,
            b1.device_direction,
            b1.min_gree_end,
            b1.phase_end,
            b1.team_id,
            b1.name,
            t8.device_no,
            t9.lane_num lane_no
        from dws_pass as b1
        left join hurys_dc_dwd.dwd_radar_config as t8
            on t8.direction=b1.device_direction and t8.device_no=b1.device_no      -- get the actual radar device number column
        left join hurys_dc_dwd.dwd_radar_lane as t9
            on t9.device_no=b1.device_no and t9.lane_direction=b1.lane_direction   -- get the lane number column
        group by b1.site_id, b1.phase_id, b1.phase_start, b1.program_id, b1.lane_direction,
                 b1.device_direction, b1.min_gree_end, b1.phase_end, b1.team_id, b1.name,
                 t8.device_no, t9.lane_num
    )
    insert overwrite table dws_pass_sparetime_1hour partition(day)
    select
        a1.site_id,
        phase_id,
        program_id,
        phase_start,
        min_gree_end,
        phase_end,
        a1.device_no,
        team_id,
        name,
        t10.create_time,
        concat(substr(create_time, 1, 14), '00:00') start_time,
        a1.lane_no,
        section_no,
        coil_no,
        device_direction direction,
        lane_direction,
        target_id,
        target_type,
        drive_in_time,
        day
    from a1
    left join hurys_dc_dwd.dwd_pass as t10
        on t10.device_no=a1.device_no
        and t10.lane_no=a1.lane_no
        and t10.create_time between a1.min_gree_end and a1.phase_end
    group by a1.site_id, phase_id, program_id, phase_start, min_gree_end, phase_end,
             a1.device_no, team_id, name, t10.create_time, a1.lane_no, section_no, coil_no,
             device_direction, lane_direction, target_id, target_type, drive_in_time, day
    ;