[Text Information Processing] Web Text Access and Processing + Word Segmentation

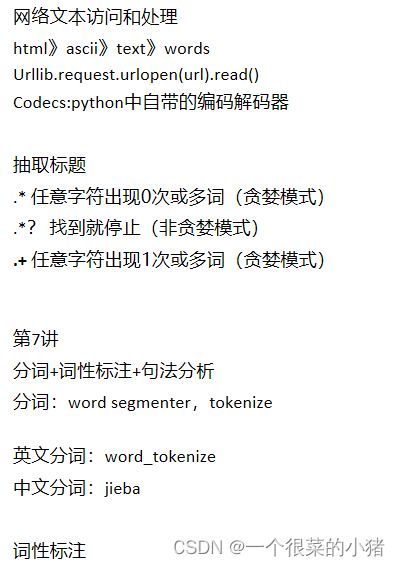

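I. Web Text Access and Processing

1. re.findall()

Returns a list of all non-overlapping substrings of string that match pattern. Its definition in the standard library: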

```python
# Excerpt from the standard-library re module (relies on the internal _compile helper)
def findall(pattern, string, flags=0):
    """Return a list of all non-overlapping matches in the string.

    If one or more capturing groups are present in the pattern, return
    a list of groups; this will be a list of tuples if the pattern
    has more than one group.

    Empty matches are included in the result."""
    return _compile(pattern, flags).findall(string)
```
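The three return shapes described in the docstring can be seen side by side in a short sketch. The sample string and variable names below are made up for illustration; the calls are plain re.findall.

```python
import re

text = "2022-03-24 and 2023-01-05"

# No capturing group: each element is the whole matched substring
whole = re.findall(r"\d{4}-\d{2}-\d{2}", text)
print(whole)  # ['2022-03-24', '2023-01-05']

# One group: each element is the text captured by that group
years = re.findall(r"(\d{4})-\d{2}-\d{2}", text)
print(years)  # ['2022', '2023']

# Several groups: each element is a tuple of the captured groups
parts = re.findall(r"(\d{4})-(\d{2})-(\d{2})", text)
print(parts)  # [('2022', '03', '24'), ('2023', '01', '05')]

# Empty matches are included: x* matches the empty string at every position
empties = re.findall(r"x*", "ab")
print(empties)  # ['', '', '']
```

This is why the title and link patterns in the next example wrap the interesting part in parentheses: with one group, findall returns just the captured text rather than the surrounding tags.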

2. Code

```python
import codecs
import re
import urllib.request

url = "https://www.gdufs.edu.cn/info/1397/59442.htm"

# read() returns the raw response body as bytes
html_text = urllib.request.urlopen(url).read()
print(type(html_text), html_text)

# Decode the bytes into a str as UTF-8
html_text_new = codecs.decode(html_text, 'utf-8')
print(type(html_text_new), html_text_new)

# Extract the page title
# p1 = '<title>(.*?)</title>'
# If .*? is used instead, slice the result afterwards
p1 = '<title>(.+?)</title>'
title1 = re.findall(p1, html_text_new)
print(title1[0])

# Extract all URLs linked from the page
# p2 = 'HREF="(.*?)"'
p2 = 'href="(https.+?)"'
http = re.findall(p2, html_text_new)
print(http)
```
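Because the live page can change or become unreachable, the same two patterns can be tried offline. The sketch below runs them on a hypothetical inline HTML string (not the real GDUFS page) and adds two flags the original code does not use: re.S so that . also matches newlines inside a multi-line title, and re.I so that TITLE/title and HREF/href match alike. It also shows why the patterns use the non-greedy +?.

```python
import re

# Hypothetical HTML fragment standing in for a downloaded page
html = """<html><head>
<TITLE>Sample
Page</TITLE></head>
<body>
<p><a href="https://example.com/a">A</a> <a HREF="https://example.com/c">C</a></p>
<p><a href="/local/b.htm">B</a></p>
</body></html>"""

# re.S: . also matches "\n"; re.I: case-insensitive tag names
title = re.findall(r"<title>(.+?)</title>", html, re.S | re.I)
print(title)  # ['Sample\nPage']

# Non-greedy +? stops at the first closing quote, so each link is separate;
# the relative link does not start with "https" and is skipped
links = re.findall(r'href="(https.+?)"', html, re.I)
print(links)  # ['https://example.com/a', 'https://example.com/c']

# Greedy + runs on to the last quote on the line, swallowing both links
greedy = re.findall(r'href="(https.+)"', html, re.I)
print(greedy)  # ['https://example.com/a">A</a> <a HREF="https://example.com/c']
```

Regular expressions are fine for quick extraction like this; for messy real-world HTML an actual parser (for example the standard library's html.parser) is usually more robust.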

II. English Tokenization (word_tokenize)

1. Basic Usage

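```python
import nltk.tokenize

# Both functions need NLTK's Punkt model; download it once with
# nltk.download('punkt') (newer NLTK releases use 'punkt_tab')

# Sentence splitting (txt is the input string)
nltk.tokenize.sent_tokenize(txt)

# Word tokenization
nltk.tokenize.word_tokenize(txt)
```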
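sent_tokenize is backed by NLTK's pre-trained Punkt model rather than a simple "split at every full stop" rule. The sketch below uses a hypothetical naive_sent_split helper (not part of NLTK) to show what such a rule does and where it breaks, which is the problem Punkt is designed to handle.

```python
import re

def naive_sent_split(text):
    # Hypothetical helper: split after ".", "!" or "?" followed by whitespace.
    # This is NOT how NLTK's Punkt model works.
    return re.split(r"(?<=[.!?])\s+", text.strip())

ok = naive_sent_split("GDUFS hosted a visit. The two sides exchanged views.")
print(ok)  # ['GDUFS hosted a visit.', 'The two sides exchanged views.']

# Abbreviations break the rule: "Dr." is wrongly treated as a sentence end
bad = naive_sent_split("Dr. Smith arrived. He spoke briefly.")
print(bad)  # ['Dr.', 'Smith arrived.', 'He spoke briefly.']
```

For plain news text like the example below the naive rule happens to work, but Punkt's learned knowledge of abbreviations is what keeps cases like "Dr. Smith" in one sentence.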

2. Code

```python
import nltk.tokenize

txt = 'On the morning of March 24, Ruben Espinoza, Consul General of the Consulate General of Peru in Guangzhou, together with his delegation, visited GDUFS. Jiao Fangtai, vice president of the university, welcomed the guests in the VIP Hall of the administration building of the Baiyunshan campus. The two sides exchanged views on talent training and cultural exchange.'

chinesetext = '这是一个很小的测试'  # not used below

# Sentence splitting
sents = nltk.tokenize.sent_tokenize(txt)
print(sents)
print(len(sents))

# Word tokenization, sentence by sentence
for sent in sents:
    words = nltk.tokenize.word_tokenize(sent)
    print(words)
```

Output:

```text
['On the morning of March 24, Ruben Espinoza, Consul General of the Consulate General of Peru in Guangzhou, together with his delegation, visited GDUFS.', 'Jiao Fangtai, vice president of the university, welcomed the guests in the VIP Hall of the administration building of the Baiyunshan campus.', 'The two sides exchanged views on talent training and cultural exchange.']
3
['On', 'the', 'morning', 'of', 'March', '24', ',', 'Ruben', 'Espinoza', ',', 'Consul', 'General', 'of', 'the', 'Consulate', 'General', 'of', 'Peru', 'in', 'Guangzhou', ',', 'together', 'with', 'his', 'delegation', ',', 'visited', 'GDUFS', '.']
['Jiao', 'Fangtai', ',', 'vice', 'president', 'of', 'the', 'university', ',', 'welcomed', 'the', 'guests', 'in', 'the', 'VIP', 'Hall', 'of', 'the', 'administration', 'building', 'of', 'the', 'Baiyunshan', 'campus', '.']
['The', 'two', 'sides', 'exchanged', 'views', 'on', 'talent', 'training', 'and', 'cultural', 'exchange', '.']
```
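The output shows the core behaviour of word tokenization: words and punctuation marks become separate tokens. A rough approximation with a single regular expression is sketched below; simple_word_tokenize is a hypothetical helper and a simplified stand-in, not NLTK's Treebank-based word_tokenize.

```python
import re

def simple_word_tokenize(sentence):
    # A run of word characters, or any single character that is neither
    # a word character nor whitespace (so each punctuation mark is a token).
    # Simplified stand-in for illustration, not NLTK's algorithm.
    return re.findall(r"\w+|[^\w\s]", sentence)

tokens = simple_word_tokenize(
    "The two sides exchanged views on talent training and cultural exchange.")
print(tokens)
# ['The', 'two', 'sides', 'exchanged', 'views', 'on', 'talent',
#  'training', 'and', 'cultural', 'exchange', '.']

# Contractions expose the difference: the regex splits at the apostrophe
contraction = simple_word_tokenize("They don't agree.")
print(contraction)  # ['They', 'don', "'", 't', 'agree', '.']
```

On the last sentence of the example the regex reproduces NLTK's tokens exactly, but word_tokenize applies extra Penn Treebank rules, for instance splitting "don't" into "do" and "n't" instead of breaking it at the apostrophe.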

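III. Chinese Word Segmentation (jieba)

1. Exercise

Read the file 练习1.txt into the program, segment it with jieba, and write the segmentation result to result.txt.

Contents of 练习1.txt: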

近日,软科发布2022年中国高校文科重大项目50强排行榜,我校立项文科类国家级重大项目5项,在全国高校排名第23,广东省并列第2。立项项目包括阐释党的十九届六中全会精神国家社科基金重大项目1项、国家社科基金年度重大项目3项、教育部哲学社会科学研究重大课题攻关项目1项,涉及学科包括外国文学、应用经济、法学、新闻学与传播学、国际问题研究等学科。“十四五”以来,学校已立项国家级重大项目12项。

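```python
import jieba
import jieba.posseg  # used for part-of-speech tagging in section 2

# Read the text into the program
with open("练习1.txt", "r", encoding='utf-8') as f:
    txt = f.read()
print(txt)

# Segment with jieba; jieba.cut returns a generator, so convert it to a list
token = list(jieba.cut(txt))
print(token)
print(type(token))

# Join the tokens with "/", keeping a trailing "/" after the last token
result = "/".join(token) + "/"

# Write the segmentation result to result.txt
with open("result.txt", "w", encoding='utf-8') as f2:
    f2.write(result)
```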

Contents of result.txt:

近日/,/软科/发布/2022/年/中国/高校/文科/重大项目/50/强/排行榜/,/我校/立项/文科类/国家级/重大项目/5/项/,/在/全国/高校/排名第/23/,/广东省/并列/第/2/。/立项/项目/包括/阐释/党/的/十九/届/六中全会/精神/国家/社科/基金/重大项目/1/项/、/国家/社科/基金/年度/重大项目/3/项/、/教育部/哲学/社会科学/研究/重大/课题/攻关项目/1/项/,/涉及/学科/包括/外国文学/、/应用/经济/、/法学/、/新闻学/与/传播学/、/国际/问题/研究/等/学科/。/“/十四五/”/以来/,/学校/已/立项/国家级/重大项目/12/项/。/
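jieba's README describes its default mode as: build a directed acyclic graph (DAG) of every dictionary word that can start at each character (using a prefix dictionary), then use dynamic programming to find the maximum-probability path based on word frequencies; unknown words are additionally handled with an HMM. The sketch below reproduces only the DAG and dynamic-programming steps, with a tiny hypothetical dictionary (FREQ) and hypothetical helpers build_dag and segment; it omits the HMM and is not jieba's actual code.

```python
import math

# Hypothetical toy dictionary: word -> frequency (jieba ships a large real one)
FREQ = {
    "国家": 50, "国家级": 20, "级": 5,
    "重大": 30, "项目": 40, "重大项目": 25,
    "国": 10, "家": 10, "重": 5, "大": 10, "项": 5, "目": 5,
}
TOTAL = sum(FREQ.values())

def build_dag(sentence):
    # dag[k] lists every end index i such that sentence[k:i+1] is a dictionary
    # word; a single character is always allowed as a fallback
    n = len(sentence)
    dag = {}
    for k in range(n):
        ends = [i for i in range(k, n) if sentence[k:i + 1] in FREQ]
        dag[k] = ends or [k]
    return dag

def segment(sentence):
    dag = build_dag(sentence)
    n = len(sentence)
    log_total = math.log(TOTAL)
    # route[k] = (best log-probability of sentence[k:], end index of its first word)
    route = {n: (0.0, 0)}
    for k in range(n - 1, -1, -1):
        route[k] = max(
            (math.log(FREQ.get(sentence[k:i + 1], 1)) - log_total + route[i + 1][0], i)
            for i in dag[k]
        )
    # Follow the best path from left to right
    words, k = [], 0
    while k < n:
        end = route[k][1]
        words.append(sentence[k:end + 1])
        k = end + 1
    return words

words = segment("国家级重大项目")
print(words)  # ['国家级', '重大项目']
```

With these toy frequencies the best path is 国家级/重大项目, the same split that appears in result.txt above; changing the frequencies changes which path wins, which is why jieba lets users add custom dictionary entries.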

2. Segmentation with Part-of-Speech Tags

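```python
import jieba.posseg

# posseg.cut yields pair objects, each holding a word and its POS flag
fenci = jieba.posseg.cut("这是一个小小的测试")
print(list(fenci))
```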

Output:

```text
[pair('这', 'r'), pair('是', 'v'), pair('一个', 'm'), pair('小小的', 'z'), pair('测试', 'vn')]
```
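A typical next step is to format or filter the tagged words. To stay runnable without jieba installed, the sketch below works on plain (word, flag) tuples copied from the output above; with jieba itself, each pair object carries the same data in its word and flag attributes. Treating every flag that starts with "v" as verb-like (v, vn, ...) is an assumption about jieba's tag set made for this illustration.

```python
from collections import Counter

# (word, flag) tuples copied from the output above
tagged = [("这", "r"), ("是", "v"), ("一个", "m"), ("小小的", "z"), ("测试", "vn")]

# Render in the common word/flag format
line = " ".join(f"{w}/{f}" for w, f in tagged)
print(line)  # 这/r 是/v 一个/m 小小的/z 测试/vn

# Keep verb-like tokens (flags beginning with "v")
verbs = [w for w, f in tagged if f.startswith("v")]
print(verbs)  # ['是', '测试']

# Count how often each tag occurs
tag_counts = Counter(f for _, f in tagged)
print(tag_counts["v"])  # 1
```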