动手学深度学习v2笔记 —— 线性回归 + 基础优化算法

二 动手学深度学习v2 —— 线性回归 + 基础优化算法

目录:

- 线性回归

- 基础优化方法

1. 线性回归

总结

- 线性回归是对n维输入的加权,外加偏差

- 使用平方损失来衡量预测值和真实值的差异

- 线性回归有显示解

- 线性回归可以看作是单层神经网络

3. 总结

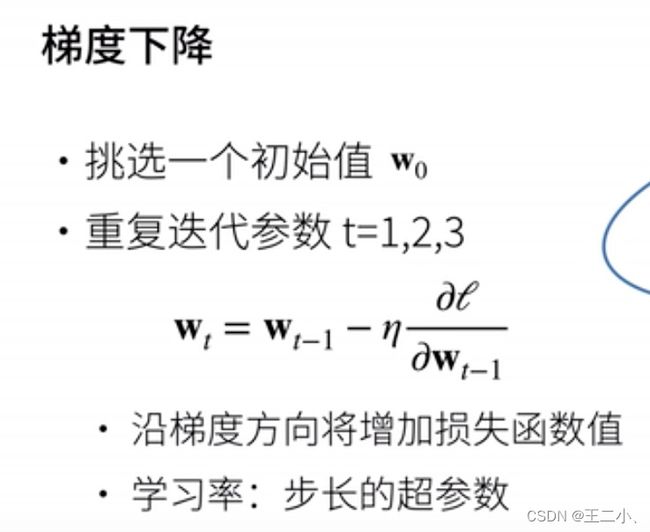

- 梯度下降通过不断沿着反梯度方向更新参数求解

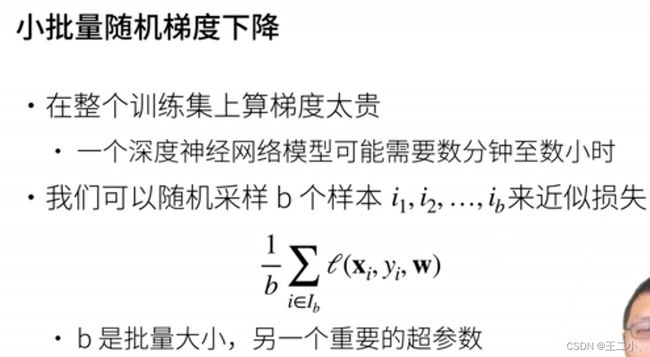

- 小批量随机梯度下降是深度学习默认的求解算法

- 两个重要的超参数是批量大小和学习率

4. 线性回归的从零开始实现

import torch

import random

def synthetic_data(w, b, num):

"生成 y = Xw + b + 噪声"

''' 根据带有噪声的线性模型构造一个人造数据集。 我们使用线性模型参数 = [2,−3.4]⊤

w=[2,−3.4]⊤、 = 4.2和噪声项ϵ生成数据集及其标签: = + + '''

X = torch.normal(0, 1, (num, len(w)))

y = torch.matmul(X, w) + b

y += torch.normal(0, 0.01, y.shape)

return X, y.reshape(-1, 1)

true_w = torch.tensor([2, -3.4])

true_b = 4.2

num_examples = 1000

batch_size = 10

features, labels = synthetic_data(true_w, true_b, num_examples)

def data_iter(batch_size, features, labels):

num_examples = len(features)

indices = list(range(num_examples))

random.shuffle(indices)

for i in range(0, num_examples, batch_size):

num_indices = torch.tensor(indices[i: min(i +

batch_size, num_examples)])

yield features[num_indices], labels[num_indices]

def linreg(X, w, b):

return torch.matmul(X, w) + b

def squared_loss(y_hat, y):

return (y_hat - y.reshape(y_hat.shape))**2/2

def sgd(params, lr, batch_size):

with torch.no_grad():

for param in params:

param -= lr * param.grad / batch_size

param.grad.zero_()

w = torch.normal(0, 0.01, size=(2, 1), requires_grad=True)

b = torch.zeros(1, requires_grad=True)

loss = squared_loss

net = linreg

lr = 0.03

epoches = 3

for epoch in range(epoches):

for X, y in data_iter(batch_size, features, labels):

l = loss(net(X, w, b), y)

l.sum().backward()

sgd([w, b], lr, batch_size)

with torch.no_grad():

train_l = loss(net(features, w, b), labels)

print(f'epoch: {epoch + 1}, loss {float(train_l.mean()):f}')

5. 线性回归的简洁实现

import torch

from d2l import torch as d2l

from torch.utils import data

true_w = torch.tensor([2, -3.4])

true_b = 4.2

features, labels = d2l.synthetic_data(true_w, true_b, 1000)

def load_array(batch_size, data_array, is_train=True):

dataset = data.TensorDataset(*data_array)

return data.DataLoader(dataset, batch_size, shuffle=is_train)

batch_size = 10

data_iter = load_array(batch_size, (features, labels))

from torch import nn

net = nn.Sequential(nn.Linear(2, 1))

net[0].weight.data.normal_(0, 0.01)

net[0].bias.data.fill_(0)

loss = nn.MSELoss()

trainer = torch.optim.SGD(net.parameters(), lr = 0.03)

num_epoches = 3

iter_temp = iter(data_iter)

for epoch in range(num_epoches):

for X, y in next(iter_temp):

l = loss(net(X), y)

l.backward()

trainer.step()

trainer.zero_grad()

l = loss(net(features), labels)

print(f'epoch {epoch + 1}, loss {l:f}')