[OpenStack (Train) Installation and Deployment (Part 11)] Testing the server-related commands and analyzing the logs

Table of contents

- This article is published by the official account 【开发小鸽】. You are welcome to follow it!

- 1. Testing the server-related commands

- (1) List the server-related commands

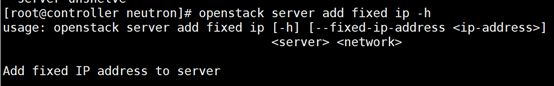

- (2)server add fixed ip

- (3)server add floating ip

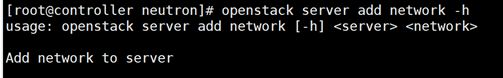

- (4)server add network

- (5)server add port

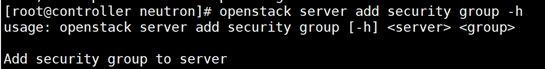

- (6)server add security group

- (7)server add volume

- (8)server backup create

- (9)server create

- (10)server delete

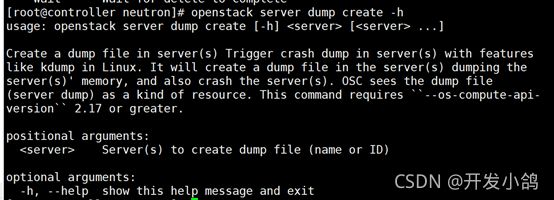

- (11) server dump create

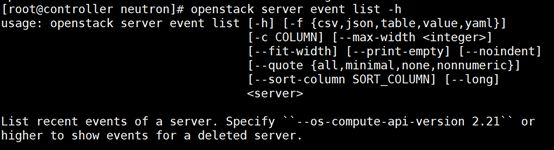

- (12)server event list

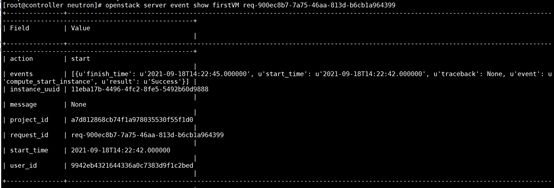

- (13)server event show

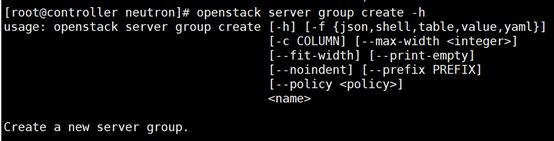

- (14)server group create

- (15)server group delete

- (16)server group list

- (17)server group show

- (18)server image create

- (19)server list

- (20)server lock

- (21)server migrate

- (22)server pause

- (23)server reboot

- (24)server rebuild

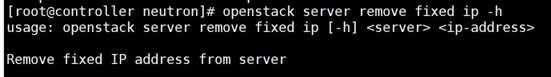

- (25)server remove fixed ip

- (26)server remove floating ip

- (27)server remove network

- (28)server remove port

- (29)server remove security group

- (30)server remove volume

- (31)server rescue

- (32)server resize

- (33)server resize confirm

- (34)server resize revert

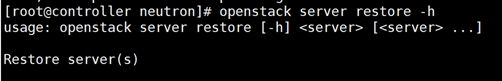

- (35)server restore

- (36)server resume

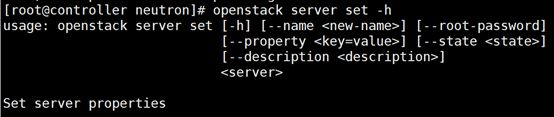

- (37)server set

- (38)server shelve

- (39)server show

- (40)server ssh

- (41)server start

- (42)server stop

- (43)server suspend

- (44)server unlock

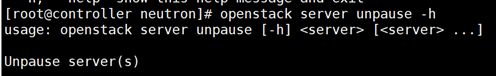

- (45)server unpause

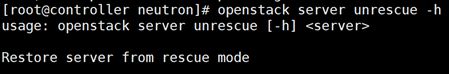

- (46)server unrescue

- (47)server unset

- (48)server unshelve

1. Testing the server-related commands

(1) List the server-related commands

[root@controller neutron]# openstack server -h

Command "server" matches:

server add fixed ip

server add floating ip

server add network

server add port

server add security group

server add volume

server backup create

server create

server delete

server dump create

server event list

server event show

server group create

server group delete

server group list

server group show

server image create

server list

server lock

server migrate

server pause

server reboot

server rebuild

server remove fixed ip

server remove floating ip

server remove network

server remove port

server remove security group

server remove volume

server rescue

server resize

server resize confirm

server resize revert

server restore

server resume

server set

server shelve

server show

server ssh

server start

server stop

server suspend

server unlock

server unpause

server unrescue

server unset

server unshelve

(2)server add fixed ip

Adds a fixed IP address to a server instance; the argument is the ID of the network.

(3)server add floating ip

Adds a floating IP address to a server instance.

(4)server add network

(5)server add port

(6)server add security group

(7)server add volume

(8)server backup create

Creates a backup image of a server instance; in effect, an image is created from the server instance.

(9)server create

(10)server delete

(11) server dump create

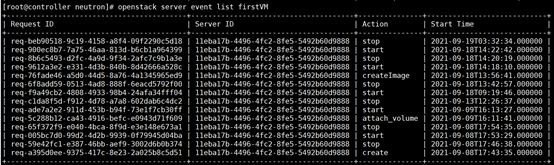

(12)server event list

(13)server event show

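Shows the details of a specific request made against a specific server instance:

After listing the events of a server and picking out the ID of the request we are interested in, we can query the details of that request:

For example, for the start operation on the instance firstVM, we can look up the details of that operation.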

(14)server group create

Creates a server group, that is, a group that several server instances are placed in.

(15)server group delete

(16)server group list

(17)server group show

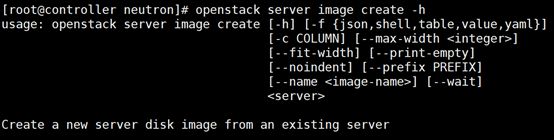

(18)server image create

(19)server list

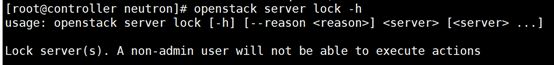

(20)server lock

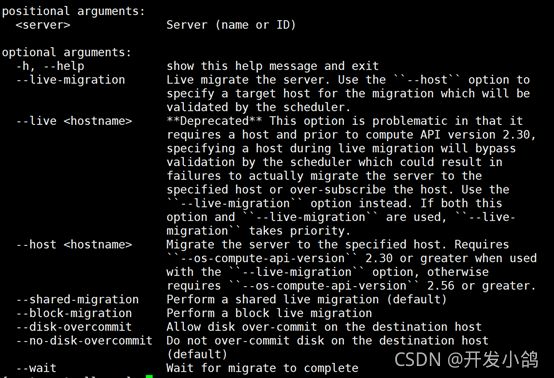

(21)server migrate

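Migrates a server instance to a different compute node, that is, another compute node is chosen as the migration target:

The main option is:

--live-migration: live migration

The first attempt used the following command:

openstack server migrate --live-migration sixthVM

nova-api.log shows: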

2021-09-24 14:16:42.586 2289 INFO nova.api.openstack.wsgi [req-de76dedd-c998-4325-9546-0034cfab78e3 9942eb4321644336a0c7383d9f1c2bed a7d812868cb74f1a978035530f55f1d0 - default default] HTTP exception thrown: 实例 sixthVM 没有找到。

2021-09-24 14:16:42.589 2289 INFO nova.osapi_compute.wsgi.server [req-de76dedd-c998-4325-9546-0034cfab78e3 9942eb4321644336a0c7383d9f1c2bed a7d812868cb74f1a978035530f55f1d0 - default default] 192.168.112.146 "GET /v2.1/servers/sixthVM HTTP/1.1" status: 404 len: 517 time: 0.2916870

2021-09-24 14:16:42.730 2289 INFO nova.osapi_compute.wsgi.server [req-69b8762b-d341-42ae-a19c-8709e2dbb3c8 9942eb4321644336a0c7383d9f1c2bed a7d812868cb74f1a978035530f55f1d0 - default default] 192.168.112.146 "GET /v2.1/servers?name=sixthVM HTTP/1.1" status: 200 len: 701 time: 0.1353440

2021-09-24 14:16:43.186 2289 INFO nova.osapi_compute.wsgi.server [req-7e77e0ef-7c2b-4b1b-a17e-475ad37a9ec9 9942eb4321644336a0c7383d9f1c2bed a7d812868cb74f1a978035530f55f1d0 - default default] 192.168.112.146 "GET /v2.1/servers/ac91ed2c-6c0e-4865-afcf-2b1b9fcb5485 HTTP/1.1" status: 200 len: 1959 time: 0.4523001

2021-09-24 14:16:46.180 2289 INFO nova.api.openstack.wsgi [req-ba341f5a-8855-4a67-8d69-585ae4dc2184 9942eb4321644336a0c7383d9f1c2bed a7d812868cb74f1a978035530f55f1d0 - default default] HTTP exception thrown: 找不到有效主机,原因是 没有足够的主机可用。

2021-09-24 14:16:46.181 2289 INFO nova.osapi_compute.wsgi.server [req-ba341f5a-8855-4a67-8d69-585ae4dc2184 9942eb4321644336a0c7383d9f1c2bed a7d812868cb74f1a978035530f55f1d0 - default default] 192.168.112.146 "POST /v2.1/servers/ac91ed2c-6c0e-4865-afcf-2b1b9fcb5485/action HTTP/1.1" status: 400 len: 600 time: 2.9914739
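The four requests in this excerpt are easier to read once the method, path and status are pulled out of each line. The sketch below does that with a regular expression; the request parts are copied from the log above with the prefixes trimmed, and the function name `summarize` is made up for this illustration. The 404 on `/servers/sixthVM` followed by a `?name=sixthVM` query suggests that the client first tries the argument as an ID and then falls back to a name lookup, but that reading is an assumption based only on the order of the log lines.

```python
import re

# Request parts copied from the nova-api.log excerpt (log prefixes trimmed).
LOG_LINES = [
    '"GET /v2.1/servers/sixthVM HTTP/1.1" status: 404 len: 517 time: 0.2916870',
    '"GET /v2.1/servers?name=sixthVM HTTP/1.1" status: 200 len: 701 time: 0.1353440',
    '"GET /v2.1/servers/ac91ed2c-6c0e-4865-afcf-2b1b9fcb5485 HTTP/1.1" '
    'status: 200 len: 1959 time: 0.4523001',
    '"POST /v2.1/servers/ac91ed2c-6c0e-4865-afcf-2b1b9fcb5485/action HTTP/1.1" '
    'status: 400 len: 600 time: 2.9914739',
]

REQUEST_RE = re.compile(
    r'"(?P<method>[A-Z]+) (?P<path>\S+) HTTP/[\d.]+" status: (?P<status>\d+)'
)


def summarize(lines):
    """Return (method, path, status) for every line that contains a request."""
    result = []
    for line in lines:
        match = REQUEST_RE.search(line)
        if match:
            result.append(
                (match.group("method"), match.group("path"), int(match.group("status")))
            )
    return result


for method, path, status in summarize(LOG_LINES):
    print(status, method, path)
```

Only the final POST (status 400) is the migration request itself; the earlier 404 belongs to the name resolution and is not the failure being investigated.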

An error occurred: no valid host was found. Why?

Next, look at nova-conductor.log:

2021-09-24 14:16:46.096 2403 WARNING nova.scheduler.utils [req-ba341f5a-8855-4a67-8d69-585ae4dc2184 9942eb4321644336a0c7383d9f1c2bed a7d812868cb74f1a978035530f55f1d0 - default default] Failed to compute_task_migrate_server: 找不到有效主机,原因是 没有足够的主机可用。。

Traceback (most recent call last):

File "/usr/lib/python2.7/site-packages/oslo_messaging/rpc/server.py", line 235, in inner

return func(*args, **kwargs)

File "/usr/lib/python2.7/site-packages/nova/scheduler/manager.py", line 214, in select_destinations

allocation_request_version, return_alternates)

File "/usr/lib/python2.7/site-packages/nova/scheduler/filter_scheduler.py", line 96, in select_destinations

allocation_request_version, return_alternates)

File "/usr/lib/python2.7/site-packages/nova/scheduler/filter_scheduler.py", line 265, in _schedule

claimed_instance_uuids)

File "/usr/lib/python2.7/site-packages/nova/scheduler/filter_scheduler.py", line 302, in _ensure_sufficient_hosts

raise exception.NoValidHost(reason=reason)

NoValidHost: \u627e\u4e0d\u5230\u6709\u6548\u4e3b\u673a\uff0c\u539f\u56e0\u662f \u6ca1\u6709\u8db3\u591f\u7684\u4e3b\u673a\u53ef\u7528\u3002\u3002

: NoValidHost_Remote: \u627e\u4e0d\u5230\u6709\u6548\u4e3b\u673a\uff0c\u539f\u56e0\u662f \u6ca1\u6709\u8db3\u591f\u7684\u4e3b\u673a\u53ef\u7528\u3002\u3002

2021-09-24 14:16:46.098 2403 WARNING nova.scheduler.utils [req-ba341f5a-8855-4a67-8d69-585ae4dc2184 9942eb4321644336a0c7383d9f1c2bed a7d812868cb74f1a978035530f55f1d0 - default default] [instance: ac91ed2c-6c0e-4865-afcf-2b1b9fcb5485] Setting instance to ACTIVE state.: NoValidHost_Remote: \u627e\u4e0d\u5230\u6709\u6548\u4e3b\u673a\uff0c\u539f\u56e0\u662f \u6ca1\u6709\u8db3\u591f\u7684\u4e3b\u673a\u53ef\u7528\u3002\u3002

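Next, look at nova-scheduler.log:

The host filter ignored the host computer outright. The only remaining candidate, localhost.localdomain, was then rejected by ComputeFilter because it "has not been heard from in a while", which left 0 hosts: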

2021-09-24 14:16:45.100 2690 INFO nova.scheduler.host_manager [req-ba341f5a-8855-4a67-8d69-585ae4dc2184 9942eb4321644336a0c7383d9f1c2bed a7d812868cb74f1a978035530f55f1d0 - default default] Host filter ignoring hosts: computer

2021-09-24 14:16:45.102 2690 WARNING nova.scheduler.filters.compute_filter [req-ba341f5a-8855-4a67-8d69-585ae4dc2184 9942eb4321644336a0c7383d9f1c2bed a7d812868cb74f1a978035530f55f1d0 - default default] (localhost.localdomain, localhost.localdomain) ram: 3258MB disk: 32768MB io_ops: 0 instances: 0 has not been heard from in a while

2021-09-24 14:16:45.102 2690 INFO nova.filters [req-ba341f5a-8855-4a67-8d69-585ae4dc2184 9942eb4321644336a0c7383d9f1c2bed a7d812868cb74f1a978035530f55f1d0 - default default] Filter ComputeFilter returned 0 hosts

2021-09-24 14:16:45.102 2690 INFO nova.filters [req-ba341f5a-8855-4a67-8d69-585ae4dc2184 9942eb4321644336a0c7383d9f1c2bed a7d812868cb74f1a978035530f55f1d0 - default default] Filtering removed all hosts for the request with instance ID 'ac91ed2c-6c0e-4865-afcf-2b1b9fcb5485'. Filter results: ['AvailabilityZoneFilter: (start: 1, end: 1)', 'ComputeFilter: (start: 1, end: 0)']
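The last scheduler line already says which filter emptied the candidate list. As a minimal sketch, the "Filter results" text can be parsed to find the first filter whose `end` count is 0; the string is copied from the log line above, and the helper name `first_empty_filter` is invented for this example.

```python
import re

# Copied from the "Filtering removed all hosts" line in nova-scheduler.log.
FILTER_RESULTS = (
    "Filter results: ['AvailabilityZoneFilter: (start: 1, end: 1)', "
    "'ComputeFilter: (start: 1, end: 0)']"
)

STEP_RE = re.compile(r"(\w+): \(start: (\d+), end: (\d+)\)")


def first_empty_filter(text):
    """Return (filter name, hosts before it ran) for the first filter that left 0 hosts."""
    for name, start, end in STEP_RE.findall(text):
        if int(end) == 0:
            return name, int(start)
    return None


print(first_empty_filter(FILTER_RESULTS))
```

Here the answer is ComputeFilter, which matches the warning that localhost.localdomain has not been heard from in a while.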

Try again, this time with a cold migration:

openstack server migrate sixthVM

nova-api.log shows:

2021-09-24 15:23:30.305 2289 INFO nova.api.openstack.wsgi [req-5a10aa8f-e1cf-46db-8a10-a0626fe33d04 9942eb4321644336a0c7383d9f1c2bed a7d812868cb74f1a978035530f55f1d0 - default default] HTTP exception thrown: 实例 sixthVM 没有找到。

2021-09-24 15:23:30.307 2289 INFO nova.osapi_compute.wsgi.server [req-5a10aa8f-e1cf-46db-8a10-a0626fe33d04 9942eb4321644336a0c7383d9f1c2bed a7d812868cb74f1a978035530f55f1d0 - default default] 192.168.112.146 "GET /v2.1/servers/sixthVM HTTP/1.1" status: 404 len: 517 time: 0.2010651

2021-09-24 15:23:30.406 2289 INFO nova.osapi_compute.wsgi.server [req-812f1dce-e613-4b09-8aad-5882881ba4a9 9942eb4321644336a0c7383d9f1c2bed a7d812868cb74f1a978035530f55f1d0 - default default] 192.168.112.146 "GET /v2.1/servers?name=sixthVM HTTP/1.1" status: 200 len: 701 time: 0.0959730

2021-09-24 15:23:30.712 2289 INFO nova.osapi_compute.wsgi.server [req-982b31b4-1b66-4731-9499-2d2831d21061 9942eb4321644336a0c7383d9f1c2bed a7d812868cb74f1a978035530f55f1d0 - default default] 192.168.112.146 "GET /v2.1/servers/ac91ed2c-6c0e-4865-afcf-2b1b9fcb5485 HTTP/1.1" status: 200 len: 1959 time: 0.3029451

2021-09-24 15:23:33.855 2289 INFO nova.api.openstack.wsgi [req-1b899c05-41da-4e75-b808-514d351dbc79 9942eb4321644336a0c7383d9f1c2bed a7d812868cb74f1a978035530f55f1d0 - default default] HTTP exception thrown: 找不到有效主机,原因是 冷迁移过程中发现无效主机。

2021-09-24 15:23:33.856 2289 INFO nova.osapi_compute.wsgi.server [req-1b899c05-41da-4e75-b808-514d351dbc79 9942eb4321644336a0c7383d9f1c2bed a7d812868cb74f1a978035530f55f1d0 - default default] 192.168.112.146 "POST /v2.1/servers/ac91ed2c-6c0e-4865-afcf-2b1b9fcb5485/action HTTP/1.1" status: 400 len: 612 time: 3.1414089

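nova-scheduler.log shows:

The main problem is that the filter removed the computer node: when a VM is migrated, the first thing the filtering should do is exclude the computer node that the instance was already scheduled to: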

2021-09-24 15:23:33.148 2692 INFO nova.scheduler.host_manager [req-1b899c05-41da-4e75-b808-514d351dbc79 9942eb4321644336a0c7383d9f1c2bed a7d812868cb74f1a978035530f55f1d0 - default default] Host filter ignoring hosts: computer

2021-09-24 15:23:33.154 2692 WARNING nova.scheduler.filters.compute_filter [req-1b899c05-41da-4e75-b808-514d351dbc79 9942eb4321644336a0c7383d9f1c2bed a7d812868cb74f1a978035530f55f1d0 - default default] (localhost.localdomain, localhost.localdomain) ram: 3258MB disk: 32768MB io_ops: 0 instances: 0 has not been heard from in a while

2021-09-24 15:23:33.155 2692 INFO nova.filters [req-1b899c05-41da-4e75-b808-514d351dbc79 9942eb4321644336a0c7383d9f1c2bed a7d812868cb74f1a978035530f55f1d0 - default default] Filter ComputeFilter returned 0 hosts

2021-09-24 15:23:33.155 2692 INFO nova.filters [req-1b899c05-41da-4e75-b808-514d351dbc79 9942eb4321644336a0c7383d9f1c2bed a7d812868cb74f1a978035530f55f1d0 - default default] Filtering removed all hosts for the request with instance ID 'ac91ed2c-6c0e-4865-afcf-2b1b9fcb5485'. Filter results: ['AvailabilityZoneFilter: (start: 1, end: 1)', 'ComputeFilter: (start: 1, end: 0)']
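The analysis above follows one request ID (req-1b899c05-...) from nova-api.log into nova-scheduler.log. The sketch below shows that correlation step on a few shortened copies of the log lines: the timestamps, logger names and request IDs are from the excerpts, while the messages are abbreviated and the function name `trace` is hypothetical.

```python
import re

# Shortened copies of lines from the excerpts (messages abbreviated).
LOGS = {
    "nova-api.log": [
        "2021-09-24 15:23:30.307 2289 INFO nova.osapi_compute.wsgi.server "
        "[req-5a10aa8f-e1cf-46db-8a10-a0626fe33d04] GET /v2.1/servers/sixthVM status: 404",
        "2021-09-24 15:23:33.856 2289 INFO nova.osapi_compute.wsgi.server "
        "[req-1b899c05-41da-4e75-b808-514d351dbc79] POST action status: 400",
    ],
    "nova-scheduler.log": [
        "2021-09-24 15:23:33.155 2692 INFO nova.filters "
        "[req-1b899c05-41da-4e75-b808-514d351dbc79] Filter ComputeFilter returned 0 hosts",
    ],
}

LINE_RE = re.compile(
    r"^(?P<ts>\S+ \S+) \d+ \w+ \S+ \[(?P<req>req-[0-9a-f-]+)[^\]]*\] (?P<msg>.*)$"
)


def trace(logs, request_id):
    """Collect (timestamp, file, message) for one request ID, ordered by time."""
    hits = []
    for filename, lines in logs.items():
        for line in lines:
            match = LINE_RE.match(line)
            if match and match.group("req") == request_id:
                hits.append((match.group("ts"), filename, match.group("msg")))
    return sorted(hits)


for ts, filename, msg in trace(LOGS, "req-1b899c05-41da-4e75-b808-514d351dbc79"):
    print(ts, filename, msg)
```

Sorting by the timestamp string is enough here because all lines use the same fixed-width format.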

nova-conductor.log shows:

2021-09-24 15:23:33.790 2401 WARNING nova.scheduler.utils [req-1b899c05-41da-4e75-b808-514d351dbc79 9942eb4321644336a0c7383d9f1c2bed a7d812868cb74f1a978035530f55f1d0 - default default] Failed to compute_task_migrate_server: 找不到有效主机,原因是 没有足够的主机可用。。

Traceback (most recent call last):

File "/usr/lib/python2.7/site-packages/oslo_messaging/rpc/server.py", line 235, in inner

return func(*args, **kwargs)

File "/usr/lib/python2.7/site-packages/nova/scheduler/manager.py", line 214, in select_destinations

allocation_request_version, return_alternates)

File "/usr/lib/python2.7/site-packages/nova/scheduler/filter_scheduler.py", line 96, in select_destinations

allocation_request_version, return_alternates)

File "/usr/lib/python2.7/site-packages/nova/scheduler/filter_scheduler.py", line 265, in _schedule

claimed_instance_uuids)

File "/usr/lib/python2.7/site-packages/nova/scheduler/filter_scheduler.py", line 302, in _ensure_sufficient_hosts

raise exception.NoValidHost(reason=reason)

# The text below is written as Unicode escape sequences (\uXXXX); decoded, it says that no valid host could be found:

: NoValidHost_Remote: \u627e\u4e0d\u5230\u6709\u6548\u4e3b\u673a\uff0c\u539f\u56e0\u662f \u6ca1\u6709\u8db3\u591f\u7684\u4e3b\u673a\u53ef\u7528\u3002\u3002

2021-09-24 15:23:33.792 2401 WARNING nova.scheduler.utils [req-1b899c05-41da-4e75-b808-514d351dbc79 9942eb4321644336a0c7383d9f1c2bed a7d812868cb74f1a978035530f55f1d0 - default default] [instance: ac91ed2c-6c0e-4865-afcf-2b1b9fcb5485] Setting instance to ACTIVE state.: NoValidHost_Remote: \u627e\u4e0d\u5230\u6709\u6548\u4e3b\u673a\uff0c\u539f\u56e0\u662f \u6ca1\u6709\u8db3\u591f\u7684\u4e3b\u673a\u53ef\u7528\u3002\u3002
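The `\uXXXX` sequences in the conductor log are escaped Unicode code points, and they can be turned back into readable text. A minimal sketch using only the standard library, with the escaped string copied from the `NoValidHost_Remote` line above:

```python
# Escaped message copied from the NoValidHost_Remote log line.
escaped = (
    r"\u627e\u4e0d\u5230\u6709\u6548\u4e3b\u673a\uff0c\u539f\u56e0\u662f "
    r"\u6ca1\u6709\u8db3\u591f\u7684\u4e3b\u673a\u53ef\u7528\u3002\u3002"
)

# The input is pure ASCII, so it can be encoded as ASCII and then decoded
# with the "unicode_escape" codec to resolve the \uXXXX sequences.
decoded = escaped.encode("ascii").decode("unicode_escape")
print(decoded)
```

The decoded text is the same localized "no valid host found" message that nova-api.log prints directly in Chinese.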

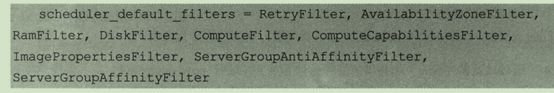

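Open the nova.conf configuration file, /etc/nova/nova.conf, and add the following scheduler driver:

scheduler_driver=nova.scheduler.filterscheduler.FilterScheduler

Configure the available filters; by default all filters can be used:

scheduler_available_filters = nova.scheduler.filters.all_filters

You can also specify which filters are actually applied; they run in the order listed:

RetryFilter, which filters out nodes that were already tried during scheduling, is left out of the setting below. What each filter does is described in the OpenStack PDF documentation. Note that the nova-conductor.log excerpt in the resize section of this article reports scheduler_default_filters as deprecated in favor of enabled_filters in the filter_scheduler group.

scheduler_default_filters = AvailabilityZoneFilter, RamFilter, DiskFilter, ComputeFilter

Restart the Controller node and the Compute node.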

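Retry the live migration of the virtual machine:

openstack server migrate --live-migration sixthVM

This fails with an error, so the server is deleted.

A new virtual machine is then started and a cold migration is attempted:

openstack server migrate fifthVM

It fails as well.

nova-api.log shows a large number of ERROR entries.

The errors are essentially message timeouts, which points to neutron networking, so neutron.conf is adjusted:

When neutron synchronizes routing information, it fetches the information for all routers from neutron-server. This can take a long time (around 130 s, depending on the amount of network resources). /etc/neutron/neutron.conf has an option, rpc_response_timeout, that sets the RPC timeout. Its default is 60 s, which is what causes the timeout exception. The fix is to set rpc_response_timeout=180.

Restart both virtual machines.

Start the new virtual machine again and retry the cold migration:

openstack server migrate fifthVM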

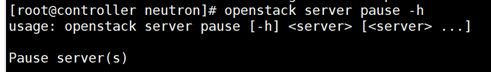

(22)server pause

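Pauses a server instance for a short time. Its state is kept in the host's memory; a later unpause operation reads the state back from memory and the instance continues running. While paused, its status is PAUSED.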

(23)server reboot

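A soft reboot restarts the guest operating system; the server instance itself keeps running throughout.

A hard reboot restarts the server instance itself, which is equivalent to powering it off and on again.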

(24)server rebuild

Rebuilds a server instance: the current server is restored from a previously saved snapshot image.

(25)server remove fixed ip

(26)server remove floating ip

(27)server remove network

(28)server remove port

(29)server remove security group

(30)server remove volume

(31)server rescue

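When the operating system is broken and cannot boot, a boot disk is needed to bring the system up so that recovery can be attempted and as much data as possible can be saved.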

(32)server resize

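Changes the flavor (size) of a server instance by copying the contents of the original server into a new one. Before the resize, nova-scheduler selects a suitable compute node for the instance again: if it picks a different node, a migration is required; if it picks the same node, the operation is a plain resize.

So first create a new flavor:

openstack flavor create --id 4 --ram 128 --disk 1 --vcpus 1 m1.nano.new

Once the flavor exists, run the resize:

openstack server resize --flavor m1.nano.new fifthVM

Once again the result is an MQ message timeout ERROR.

nova-api.log: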

2021-09-25 14:19:47.564 2249 ERROR nova.api.openstack.wsgi [req-adb018d8-afb7-4668-869c-179b90ea5dbf 9942eb4321644336a0c7383d9f1c2bed a7d812868cb74f1a978035530f55f1d0 - default default] Unexpected exception in API method: MessagingTimeout: Timed out waiting for a reply to message ID ddf0ff7c5a874baa963a728feaaf4c2a

2021-09-25 14:19:47.564 2249 ERROR nova.api.openstack.wsgi Traceback (most recent call last):

2021-09-25 14:19:47.564 2249 ERROR nova.api.openstack.wsgi File "/usr/lib/python2.7/site-packages/nova/api/openstack/wsgi.py", line 671, in wrapped

2021-09-25 14:19:47.564 2249 ERROR nova.api.openstack.wsgi return f(*args, **kwargs)

2021-09-25 14:19:47.564 2249 ERROR nova.api.openstack.wsgi File "/usr/lib/python2.7/site-packages/nova/api/validation/__init__.py", line 110, in wrapper

2021-09-25 14:19:47.564 2249 ERROR nova.api.openstack.wsgi return func(*args, **kwargs)

2021-09-25 14:19:47.564 2249 ERROR nova.api.openstack.wsgi File "/usr/lib/python2.7/site-packages/nova/api/openstack/compute/servers.py", line 1045, in _action_resize

2021-09-25 14:19:47.564 2249 ERROR nova.api.openstack.wsgi self._resize(req, id, flavor_ref, **kwargs)

2021-09-25 14:19:47.564 2249 ERROR nova.api.openstack.wsgi File "/usr/lib/python2.7/site-packages/nova/api/openstack/compute/servers.py", line 964, in _resize

2021-09-25 14:19:47.564 2249 ERROR nova.api.openstack.wsgi self.compute_api.resize(context, instance, flavor_id, **kwargs)

2021-09-25 14:19:47.564 2249 ERROR nova.api.openstack.wsgi File "/usr/lib/python2.7/site-packages/nova/compute/api.py", line 225, in inner

2021-09-25 14:19:47.564 2249 ERROR nova.api.openstack.wsgi return function(self, context, instance, *args, **kwargs)

2021-09-25 14:19:47.564 2249 ERROR nova.api.openstack.wsgi File "/usr/lib/python2.7/site-packages/nova/compute/api.py", line 152, in inner

2021-09-25 14:19:47.564 2249 ERROR nova.api.openstack.wsgi return f(self, context, instance, *args, **kw)

2021-09-25 14:19:47.564 2249 ERROR nova.api.openstack.wsgi File "/usr/lib/python2.7/site-packages/nova/compute/api.py", line 215, in wrapped

2021-09-25 14:19:47.564 2249 ERROR nova.api.openstack.wsgi return function(self, context, instance, *args, **kwargs)

2021-09-25 14:19:47.564 2249 ERROR nova.api.openstack.wsgi File "/usr/lib/python2.7/site-packages/nova/compute/api.py", line 3796, in resize

2021-09-25 14:19:47.564 2249 ERROR nova.api.openstack.wsgi request_spec=request_spec)

2021-09-25 14:19:47.564 2249 ERROR nova.api.openstack.wsgi File "/usr/lib/python2.7/site-packages/nova/conductor/api.py", line 96, in resize_instance

2021-09-25 14:19:47.564 2249 ERROR nova.api.openstack.wsgi request_spec=request_spec, host_list=host_list)

2021-09-25 14:19:47.564 2249 ERROR nova.api.openstack.wsgi File "/usr/lib/python2.7/site-packages/nova/conductor/rpcapi.py", line 340, in migrate_server

2021-09-25 14:19:47.564 2249 ERROR nova.api.openstack.wsgi return cctxt.call(context, 'migrate_server', **kw)

# The RPC request is sent here; both timeouts that are set come from nova.conf

2021-09-25 14:19:47.564 2249 ERROR nova.api.openstack.wsgi File "/usr/lib/python2.7/site-packages/oslo_messaging/rpc/client.py", line 181, in call

2021-09-25 14:19:47.564 2249 ERROR nova.api.openstack.wsgi transport_options=self.transport_options)

2021-09-25 14:19:47.564 2249 ERROR nova.api.openstack.wsgi File "/usr/lib/python2.7/site-packages/oslo_messaging/transport.py", line 129, in _send

2021-09-25 14:19:47.564 2249 ERROR nova.api.openstack.wsgi transport_options=transport_options)

2021-09-25 14:19:47.564 2249 ERROR nova.api.openstack.wsgi File "/usr/lib/python2.7/site-packages/oslo_messaging/_drivers/amqpdriver.py", line 674, in send

2021-09-25 14:19:47.564 2249 ERROR nova.api.openstack.wsgi transport_options=transport_options)

2021-09-25 14:19:47.564 2249 ERROR nova.api.openstack.wsgi File "/usr/lib/python2.7/site-packages/oslo_messaging/_drivers/amqpdriver.py", line 662, in _send

2021-09-25 14:19:47.564 2249 ERROR nova.api.openstack.wsgi call_monitor_timeout)

2021-09-25 14:19:47.564 2249 ERROR nova.api.openstack.wsgi File "/usr/lib/python2.7/site-packages/oslo_messaging/_drivers/amqpdriver.py", line 551, in wait

2021-09-25 14:19:47.564 2249 ERROR nova.api.openstack.wsgi message = self.waiters.get(msg_id, timeout=timeout)

2021-09-25 14:19:47.564 2249 ERROR nova.api.openstack.wsgi File "/usr/lib/python2.7/site-packages/oslo_messaging/_drivers/amqpdriver.py", line 429, in get

2021-09-25 14:19:47.564 2249 ERROR nova.api.openstack.wsgi 'to message ID %s' % msg_id)

2021-09-25 14:19:47.564 2249 ERROR nova.api.openstack.wsgi MessagingTimeout: Timed out waiting for a reply to message ID ddf0ff7c5a874baa963a728feaaf4c2a

2021-09-25 14:19:47.564 2249 ERROR nova.api.openstack.wsgi

2021-09-25 14:19:47.566 2249 INFO nova.api.openstack.wsgi [req-adb018d8-afb7-4668-869c-179b90ea5dbf 9942eb4321644336a0c7383d9f1c2bed a7d812868cb74f1a978035530f55f1d0 - default default] HTTP exception thrown: 发生意外 API 错误。请在 http://bugs.launchpad.net/nova/ 处报告此错误,并且附上 Nova API 日志(如果可能)。

<class 'oslo_messaging.exceptions.MessagingTimeout'>

2021-09-25 14:19:47.567 2249 INFO nova.osapi_compute.wsgi.server [req-adb018d8-afb7-4668-869c-179b90ea5dbf 9942eb4321644336a0c7383d9f1c2bed a7d812868cb74f1a978035530f55f1d0 - default default] 192.168.112.146 "POST /v2.1/servers/a2c57cfb-7dd9-42b6-b1e2-454d07cfbe77/action HTTP/1.1" status: 500 len: 755 time: 61.0104420

2021-09-25 14:19:50.621 2249 INFO oslo_messaging._drivers.amqpdriver [-] No calling threads waiting for msg_id : ddf0ff7c5a874baa963a728feaaf4c2a
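The `time:` field of the failing POST is worth a second look: the request ran for just over a minute before the 500 was returned. The sketch below extracts that duration and compares it with the 60 s default that this article quotes for rpc_response_timeout; treating that default as the limit in effect on this deployment is an assumption, and `request_seconds` is a name invented for the example.

```python
import re

# Request part copied from the nova-api.log line with status 500.
API_LINE = (
    '"POST /v2.1/servers/a2c57cfb-7dd9-42b6-b1e2-454d07cfbe77/action HTTP/1.1" '
    "status: 500 len: 755 time: 61.0104420"
)

# Assumption: the default rpc_response_timeout of 60 s is in effect.
ASSUMED_RPC_TIMEOUT_S = 60


def request_seconds(line):
    """Return the value of the 'time:' field as a float, or None if it is absent."""
    match = re.search(r"time: (\d+(?:\.\d+)?)", line)
    return float(match.group(1)) if match else None


elapsed = request_seconds(API_LINE)
hit_timeout = elapsed is not None and elapsed > ASSUMED_RPC_TIMEOUT_S
print(elapsed, hit_timeout)
```

A duration that sits right above the configured limit is a typical sign that the caller gave up waiting for a reply, rather than the callee failing quickly.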

nova-scheduler.log:

No entries.

nova-conductor.log:

2021-09-25 14:18:47.656 2407 WARNING oslo_config.cfg [req-adb018d8-afb7-4668-869c-179b90ea5dbf 9942eb4321644336a0c7383d9f1c2bed a7d812868cb74f1a978035530f55f1d0 - default default] Deprecated: Option "scheduler_default_filters" from group "DEFAULT" is deprecated. Use option "enabled_filters" from group "filter_scheduler".

2021-09-25 14:19:50.517 2407 WARNING nova.scheduler.utils [req-adb018d8-afb7-4668-869c-179b90ea5dbf 9942eb4321644336a0c7383d9f1c2bed a7d812868cb74f1a978035530f55f1d0 - default default] Failed to compute_task_migrate_server: Timed out waiting for a reply to message ID 187df0b0f95a4e27919321830142d187: MessagingTimeout: Timed out waiting for a reply to message ID 187df0b0f95a4e27919321830142d187

# The instance is set back to the ACTIVE state after the message timeout

2021-09-25 14:19:50.522 2407 WARNING nova.scheduler.utils [req-adb018d8-afb7-4668-869c-179b90ea5dbf 9942eb4321644336a0c7383d9f1c2bed a7d812868cb74f1a978035530f55f1d0 - default default] [instance: a2c57cfb-7dd9-42b6-b1e2-454d07cfbe77] Setting instance to ACTIVE state.: MessagingTimeout: Timed out waiting for a reply to message ID 187df0b0f95a4e27919321830142d187

# Timed out waiting for the reply

2021-09-25 14:19:50.616 2407 ERROR oslo_messaging.rpc.server [req-adb018d8-afb7-4668-869c-179b90ea5dbf 9942eb4321644336a0c7383d9f1c2bed a7d812868cb74f1a978035530f55f1d0 - default default] Exception during message handling: MessagingTimeout: Timed out waiting for a reply to message ID 187df0b0f95a4e27919321830142d187

2021-09-25 14:19:50.616 2407 ERROR oslo_messaging.rpc.server Traceback (most recent call last):

2021-09-25 14:19:50.616 2407 ERROR oslo_messaging.rpc.server File "/usr/lib/python2.7/site-packages/oslo_messaging/rpc/server.py", line 165, in _process_incoming

2021-09-25 14:19:50.616 2407 ERROR oslo_messaging.rpc.server res = self.dispatcher.dispatch(message)

2021-09-25 14:19:50.616 2407 ERROR oslo_messaging.rpc.server File "/usr/lib/python2.7/site-packages/oslo_messaging/rpc/dispatcher.py", line 274, in dispatch

2021-09-25 14:19:50.616 2407 ERROR oslo_messaging.rpc.server return self._do_dispatch(endpoint, method, ctxt, args)

2021-09-25 14:19:50.616 2407 ERROR oslo_messaging.rpc.server File "/usr/lib/python2.7/site-packages/oslo_messaging/rpc/dispatcher.py", line 194, in _do_dispatch

2021-09-25 14:19:50.616 2407 ERROR oslo_messaging.rpc.server result = func(ctxt, **new_args)

2021-09-25 14:19:50.616 2407 ERROR oslo_messaging.rpc.server File "/usr/lib/python2.7/site-packages/oslo_messaging/rpc/server.py", line 235, in inner

2021-09-25 14:19:50.616 2407 ERROR oslo_messaging.rpc.server return func(*args, **kwargs)

2021-09-25 14:19:50.616 2407 ERROR oslo_messaging.rpc.server File "/usr/lib/python2.7/site-packages/nova/conductor/manager.py", line 95, in wrapper

2021-09-25 14:19:50.616 2407 ERROR oslo_messaging.rpc.server return fn(self, context, *args, **kwargs)

2021-09-25 14:19:50.616 2407 ERROR oslo_messaging.rpc.server File "/usr/lib/python2.7/site-packages/nova/compute/utils.py", line 1372, in decorated_function

2021-09-25 14:19:50.616 2407 ERROR oslo_messaging.rpc.server return function(self, context, *args, **kwargs)

2021-09-25 14:19:50.616 2407 ERROR oslo_messaging.rpc.server File "/usr/lib/python2.7/site-packages/nova/conductor/manager.py", line 299, in migrate_server

2021-09-25 14:19:50.616 2407 ERROR oslo_messaging.rpc.server host_list)

# The exception first appears at this step

2021-09-25 14:19:50.616 2407 ERROR oslo_messaging.rpc.server File "/usr/lib/python2.7/site-packages/nova/conductor/manager.py", line 381, in _cold_migrate

2021-09-25 14:19:50.616 2407 ERROR oslo_messaging.rpc.server updates, ex, request_spec)

2021-09-25 14:19:50.616 2407 ERROR oslo_messaging.rpc.server File "/usr/lib/python2.7/site-packages/oslo_utils/excutils.py", line 220, in __exit__

2021-09-25 14:19:50.616 2407 ERROR oslo_messaging.rpc.server self.force_reraise()

2021-09-25 14:19:50.616 2407 ERROR oslo_messaging.rpc.server File "/usr/lib/python2.7/site-packages/oslo_utils/excutils.py", line 196, in force_reraise

2021-09-25 14:19:50.616 2407 ERROR oslo_messaging.rpc.server six.reraise(self.type_, self.value, self.tb)

2021-09-25 14:19:50.616 2407 ERROR oslo_messaging.rpc.server File "/usr/lib/python2.7/site-packages/nova/conductor/manager.py", line 350, in _cold_migrate

2021-09-25 14:19:50.616 2407 ERROR oslo_messaging.rpc.server task.execute()

2021-09-25 14:19:50.616 2407 ERROR oslo_messaging.rpc.server File "/usr/lib/python2.7/site-packages/nova/conductor/tasks/base.py", line 27, in wrap

2021-09-25 14:19:50.616 2407 ERROR oslo_messaging.rpc.server self.rollback()

2021-09-25 14:19:50.616 2407 ERROR oslo_messaging.rpc.server File "/usr/lib/python2.7/site-packages/oslo_utils/excutils.py", line 220, in __exit__

2021-09-25 14:19:50.616 2407 ERROR oslo_messaging.rpc.server self.force_reraise()

2021-09-25 14:19:50.616 2407 ERROR oslo_messaging.rpc.server File "/usr/lib/python2.7/site-packages/oslo_utils/excutils.py", line 196, in force_reraise

2021-09-25 14:19:50.616 2407 ERROR oslo_messaging.rpc.server six.reraise(self.type_, self.value, self.tb)

2021-09-25 14:19:50.616 2407 ERROR oslo_messaging.rpc.server File "/usr/lib/python2.7/site-packages/nova/conductor/tasks/base.py", line 24, in wrap

2021-09-25 14:19:50.616 2407 ERROR oslo_messaging.rpc.server return original(self)

2021-09-25 14:19:50.616 2407 ERROR oslo_messaging.rpc.server File "/usr/lib/python2.7/site-packages/nova/conductor/tasks/base.py", line 42, in execute

2021-09-25 14:19:50.616 2407 ERROR oslo_messaging.rpc.server return self._execute()

2021-09-25 14:19:50.616 2407 ERROR oslo_messaging.rpc.server File "/usr/lib/python2.7/site-packages/nova/conductor/tasks/migrate.py", line 340, in _execute

2021-09-25 14:19:50.616 2407 ERROR oslo_messaging.rpc.server selection = self._schedule()

2021-09-25 14:19:50.616 2407 ERROR oslo_messaging.rpc.server File "/usr/lib/python2.7/site-packages/nova/conductor/tasks/migrate.py", line 376, in _schedule

2021-09-25 14:19:50.616 2407 ERROR oslo_messaging.rpc.server return_objects=True, return_alternates=True)

2021-09-25 14:19:50.616 2407 ERROR oslo_messaging.rpc.server File "/usr/lib/python2.7/site-packages/nova/scheduler/client/query.py", line 42, in select_destinations

2021-09-25 14:19:50.616 2407 ERROR oslo_messaging.rpc.server instance_uuids, return_objects, return_alternates)

2021-09-25 14:19:50.616 2407 ERROR oslo_messaging.rpc.server File "/usr/lib/python2.7/site-packages/nova/scheduler/rpcapi.py", line 160, in select_destinations

2021-09-25 14:19:50.616 2407 ERROR oslo_messaging.rpc.server return cctxt.call(ctxt, 'select_destinations', **msg_args)

2021-09-25 14:19:50.616 2407 ERROR oslo_messaging.rpc.server File "/usr/lib/python2.7/site-packages/oslo_messaging/rpc/client.py", line 181, in call

2021-09-25 14:19:50.616 2407 ERROR oslo_messaging.rpc.server transport_options=self.transport_options)

2021-09-25 14:19:50.616 2407 ERROR oslo_messaging.rpc.server File "/usr/lib/python2.7/site-packages/oslo_messaging/transport.py", line 129, in _send

2021-09-25 14:19:50.616 2407 ERROR oslo_messaging.rpc.server transport_options=transport_options)

2021-09-25 14:19:50.616 2407 ERROR oslo_messaging.rpc.server File "/usr/lib/python2.7/site-packages/oslo_messaging/_drivers/amqpdriver.py", line 674, in send

2021-09-25 14:19:50.616 2407 ERROR oslo_messaging.rpc.server transport_options=transport_options)

2021-09-25 14:19:50.616 2407 ERROR oslo_messaging.rpc.server File "/usr/lib/python2.7/site-packages/oslo_messaging/_drivers/amqpdriver.py", line 662, in _send

2021-09-25 14:19:50.616 2407 ERROR oslo_messaging.rpc.server call_monitor_timeout)

2021-09-25 14:19:50.616 2407 ERROR oslo_messaging.rpc.server File "/usr/lib/python2.7/site-packages/oslo_messaging/_drivers/amqpdriver.py", line 551, in wait

2021-09-25 14:19:50.616 2407 ERROR oslo_messaging.rpc.server message = self.waiters.get(msg_id, timeout=timeout)

2021-09-25 14:19:50.616 2407 ERROR oslo_messaging.rpc.server File "/usr/lib/python2.7/site-packages/oslo_messaging/_drivers/amqpdriver.py", line 429, in get

2021-09-25 14:19:50.616 2407 ERROR oslo_messaging.rpc.server 'to message ID %s' % msg_id)

2021-09-25 14:19:50.616 2407 ERROR oslo_messaging.rpc.server MessagingTimeout: Timed out waiting for a reply to message ID 187df0b0f95a4e27919321830142d187

2021-09-25 14:19:50.616 2407 ERROR oslo_messaging.rpc.server

The code that makes the RPC call contains the following lines. The timeout arguments are taken from nova.conf, so we try increasing both timeout options:

cctxt = self.client.prepare(

version=version, call_monitor_timeout=CONF.rpc_response_timeout,

timeout=CONF.long_rpc_timeout)

So two options are added to nova.conf:

rpc_response_timeout=300

long_rpc_timeout=300
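Before restarting, it can help to confirm which values a config file actually contains. The sketch below reads the two options with Python's `configparser` from a small sample text; placing them under `[DEFAULT]` is an assumption that matches how the code above reads them (`CONF.rpc_response_timeout`, `CONF.long_rpc_timeout`), and a real nova.conf may contain constructs that `configparser` rejects. It is also worth checking nova's configuration reference for the default of long_rpc_timeout, since it may already be larger than 300.

```python
import configparser

# Hypothetical nova.conf fragment with the two options from this article.
SAMPLE_CONF = """
[DEFAULT]
rpc_response_timeout = 300
long_rpc_timeout = 300
"""

OPTIONS = ("rpc_response_timeout", "long_rpc_timeout")


def read_timeouts(text):
    """Return the timeout options found in the [DEFAULT] section as integers."""
    parser = configparser.ConfigParser(interpolation=None)
    parser.read_string(text)
    defaults = parser.defaults()
    return {name: int(defaults[name]) for name in OPTIONS if name in defaults}


print(read_timeouts(SAMPLE_CONF))
```

An option missing from the returned dictionary is simply not set in the file, in which case the service falls back to its built-in default.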

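Restart the Controller node.

Retry the resize:

openstack server resize --flavor m1.nano.new fourthVM

It seems to take a very long time. Why? In nova-api.log the same entries keep repeating, which suggests that something has gone wrong.

The nova-compute.log on the Compute node also shows the same entries repeating: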

2021-09-25 18:39:40.963 2129 INFO nova.compute.manager [-] [instance: 91cf5aa9-4605-4c99-bf0d-f603c6c988df] During sync_power_state the instance has a pending task (resize_prep). Skip.

2021-09-25 18:40:42.936 2129 INFO nova.compute.resource_tracker [req-52189c54-1223-47ae-a960-6b76acd33a45 - - - - -] [instance: 91cf5aa9-4605-4c99-bf0d-f603c6c988df] Updating resource usage from migration 348fdf7d-00b5-46fa-ad7b-704941ebb736

2021-09-25 18:40:42.982 2129 INFO nova.compute.resource_tracker [req-52189c54-1223-47ae-a960-6b76acd33a45 - - - - -] Instance ee0eb8cd-560e-47b6-9c32-fec87a55ffb4 has allocations against this compute host but is not found in the database.

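This looks database-related. The last log line says that the instance has allocations against this compute host but is not found in the database, possibly because the database was not updated in time.

Error: host compute is not mapped to any cell

Compute node log: Instance xxx has allocations against this compute host but is not found in the database.

Fix: add the compute node to the cell database:

su -s /bin/sh -c "nova-manage cell_v2 discover_hosts --verbose" nova

Retrying the resize from the dashboard gives the same endless repetition and waiting: the VM stays in the resize_prep state while the same entries keep repeating in nova-api.log and nova-compute.log. To get the VM out of this state, edit the database directly, as follows:

First query the current state in the database:

use nova;

select task_state,vm_state,power_state,display_name,deleted from instances where display_name="fourthVM";

Then update the row:

update instances set task_state = NULL, vm_state = 'stopped', power_state = 4 where display_name="fourthVM" and deleted=0;

After this, the fourthVM VM is reported as shutdown.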
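Because this edits nova's database by hand, it is worth rehearsing the statement on throwaway data first. The sketch below replays the same UPDATE against an in-memory SQLite table; SQLite stands in for the real nova database, the table holds only the five columns used above, and both sample rows (including the soft-deleted one) are invented for the illustration.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.execute(
    "CREATE TABLE instances ("
    "display_name TEXT, task_state TEXT, vm_state TEXT, "
    "power_state INTEGER, deleted INTEGER)"
)
conn.executemany(
    "INSERT INTO instances VALUES (?, ?, ?, ?, ?)",
    [
        ("fourthVM", "resize_prep", "active", 1, 0),  # the stuck instance
        ("fourthVM", None, "deleted", 0, 7),          # hypothetical soft-deleted row
    ],
)


def reset_stuck_instance(connection, name):
    """Apply the UPDATE from the article; return the number of rows changed."""
    cursor = connection.execute(
        "UPDATE instances SET task_state = NULL, vm_state = 'stopped', "
        "power_state = 4 WHERE display_name = ? AND deleted = 0",
        (name,),
    )
    connection.commit()
    return cursor.rowcount


changed = reset_stuck_instance(conn, "fourthVM")
rows = conn.execute(
    "SELECT task_state, vm_state, power_state, deleted FROM instances "
    "ORDER BY deleted"
).fetchall()
print(changed, rows)
```

The `deleted = 0` condition is what keeps the statement from touching old rows that share the same display name.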

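The two options are added to nova.conf once more:

rpc_response_timeout=300

long_rpc_timeout=300

In addition, all scheduler settings are commented out, and the Controller node and the Compute node are restarted.

Retry the resize of the VM:

openstack server resize --flavor m1.nano.new fourthVM

It still fails, so the resize and migrate operations are left for another attempt later.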

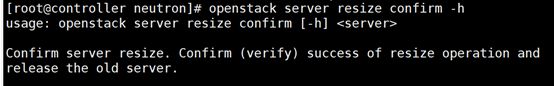

(33)server resize confirm

Confirms a resize of a server instance: the flavor change is accepted as complete and the old server is released.

(34)server resize revert

Reverts a resize of a server instance: the new server is released and the old server is started again.

(35)server restore

Restores a server, that is, brings back a server that was soft-deleted.

(36)server resume

Resumes a server instance after it was interrupted by suspend. A paused instance is brought back with server unpause instead.

(37)server set

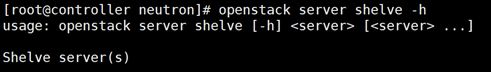

(38)server shelve

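After a suspend, the server instance is in the shutdown state, but the hypervisor still reserves resources for it on the host. To release those resources, use shelve: it saves the server instance as an image in Glance and then deletes the instance from the host.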

(39)server show

Shows the details of a server instance.

(40)server ssh

Connects to a server instance over SSH; either IPv4 or IPv6 can be selected.

(41)server start

Starts a server instance.

(42)server stop

Stops a server instance.

(43)server suspend

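Suspends a server instance for a longer period. Its state is saved to disk; the server status becomes SUSPENDED and its power state is reported as shutdown.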

(44)server unlock

(45)server unpause

(46)server unrescue

(47)server unset

(48)server unshelve

Restores a server instance that was shelved. The earlier shelve operation saved an image of the server instance, so unshelve in effect boots a new server instance from that image.
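The pause, suspend and shelve descriptions in this article differ mainly in where the instance state is kept and which command undoes the operation. As a compact recap, the sketch below puts that into a lookup table; the wording of the entries follows the descriptions in this article, the command pairs come from the command list at the top, and `undo_command` is a helper invented for this example.

```python
# Where each operation keeps the instance state, and the command that undoes it.
STATE_OPERATIONS = {
    "pause": {"state_kept_in": "memory of the host", "undo": "server unpause"},
    "suspend": {"state_kept_in": "disk of the host", "undo": "server resume"},
    "shelve": {"state_kept_in": "image in Glance", "undo": "server unshelve"},
}


def undo_command(operation):
    """Return the CLI command that reverses the given operation."""
    entry = STATE_OPERATIONS[operation]
    return "openstack " + entry["undo"] + " <server>"


for name, entry in STATE_OPERATIONS.items():
    print(name, "->", entry["state_kept_in"], "| undo:", undo_command(name))
```

Moving down the table, each operation frees more host resources and takes longer to undo.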