Setting up a Kubernetes cluster on Linux (one master, two workers)

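Contents

1. Environment preparation

1.1 Disable swap (a Kubernetes requirement)

1.2 Flush iptables rules (on all three machines)

1.3 Set the hostnames (on all three machines)

1.4 Hostname resolution and passwordless SSH login

2. Install k8s

2.1 Add the yum repository

2.2 Build the yum cache (cache the rpm packages downloaded later so the other machines can reuse them)

2.3 Let iptables see bridged traffic (on all three nodes)

2.4 Enable IP forwarding (on all three nodes)

2.5 Back on the master node

2.6 Initialize the cluster (obtain the images)

2.7 Join the other nodes to the cluster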

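Kubernetes is an open-source system for managing containerized applications across multiple hosts in a cloud platform. Its goal is to make deploying containerized applications simple and powerful. It provides mechanisms for deploying, scheduling, updating and maintaining applications.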

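Kubernetes can run containerized applications on a cluster of physical or virtual machines. It provides a "container-centric infrastructure" that meets several common requirements of running applications in production.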

Kubernetes official website (Chinese):

https://kubernetes.io/zh/

Kubernetes Chinese community:

https://www.kubernetes.org.cn/

1. Environment preparation

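My server environment consists of three hosts. m72 (10.220.15.72) is the master, and s73 (10.220.15.73) and s74 (10.220.15.74) are the worker nodes.

Every host must meet these requirements:
- Docker is installed.
- The firewall is disabled, since Kubernetes usually runs on a LAN.
- SELinux is disabled, to ensure file access works.
- The clock is synchronized.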

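1.1 Disable swap (a Kubernetes requirement)

Note: swap must be disabled on every node. Otherwise kubelet refuses to start and the node cannot join the cluster.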

# Check whether swap is enabled

[root@m72 ~]# free -h

              total        used        free      shared  buff/cache   available
Mem:            62G        647M         54G        1.0G        7.9G         60G
Swap:           15G          0B         15G

Swap is enabled (15G). To turn it off, run:

# Turn off swap (takes effect immediately, but does not persist across reboots)

[root@m72 ~]# swapoff -a

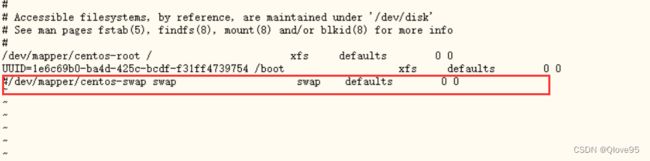

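# Edit the swap configuration in /etc/fstab

[root@m72 ~]# vim /etc/fstab

Comment out the swap entry (the line whose file system type is swap) so that swap stays off after a reboot.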
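The same fstab edit can also be scripted. Below is a minimal sketch. It assumes the usual fstab layout, where the third field of a swap entry is `swap`. `comment_out_swap` is a hypothetical helper name, and it writes a `.bak` copy before changing the file.

```shell
#!/usr/bin/env bash
# Sketch: comment out every active swap entry in an fstab-style file.
# comment_out_swap is a hypothetical helper name, not part of any tool.
set -euo pipefail

comment_out_swap() {
  local fstab="${1:-/etc/fstab}"
  # Keep a backup copy next to the original file.
  cp "$fstab" "$fstab.bak"
  # Prefix "#" to every non-comment line whose third field is "swap";
  # already commented lines are left alone, so running it twice is safe.
  awk '!/^[ \t]*#/ && $3 == "swap" { print "#" $0; next } { print }' \
    "$fstab.bak" > "$fstab"
}

# Usage on each node (as root):
#   swapoff -a
#   comment_out_swap /etc/fstab
#   free -h    # the Swap line should now show 0B total
```

Using awk on fields, rather than a regex on the whole line, avoids matching the word "swap" when it appears only in a device name.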

1.2 Flush iptables rules (on all three machines)

Flush the iptables rules, then reload systemd and restart the Docker service:

[root@m72 ~]# iptables -F

[root@m72 ~]# systemctl daemon-reload

[root@m72 ~]# systemctl restart docker

1.3 Set the hostnames (on all three machines)

Give each machine its own hostname. Here the master is m72 and the two worker nodes are s73 and s74:

[root@m72 ~]# hostnamectl set-hostname m72

[root@s73 ~]# hostnamectl set-hostname s73

[root@s74 ~]# hostnamectl set-hostname s74

1.4 Hostname resolution and passwordless SSH login

Edit the hosts file on the master:

[root@m72 ~]# vim /etc/hosts

127.0.0.1 localhost localhost.localdomain localhost4 localhost4.localdomain4

::1 localhost localhost.localdomain localhost6 localhost6.localdomain6

10.220.15.72 m72

10.220.15.73 s73

10.220.15.74 s74

Copy the hosts file to the other two nodes:

[root@m72 ~]# scp /etc/hosts root@s73:/etc/hosts

[root@m72 ~]# scp /etc/hosts root@s74:/etc/hosts
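Every node needs the same three entries, so the hosts file can also be updated with a small idempotent helper instead of by hand. This sketch assumes one `IP name` pair per line. `add_host_entry` is a hypothetical name, and it appends an entry only when the hostname is not already present.

```shell
#!/usr/bin/env bash
# Sketch: append an "IP hostname" line to a hosts file unless the hostname
# is already listed there. add_host_entry is a hypothetical helper name.
set -euo pipefail

add_host_entry() {
  local hosts_file="$1" ip="$2" name="$3"
  # awk exits 0 if a non-comment line already contains the hostname
  # in any field after the IP address.
  if awk -v n="$name" '
       !/^[ \t]*#/ { for (i = 2; i <= NF; i++) if ($i == n) found = 1 }
       END { exit !found }
     ' "$hosts_file"; then
    return 0
  fi
  printf '%s %s\n' "$ip" "$name" >> "$hosts_file"
}

# Usage on m72 (as root), with the addresses used in this article:
#   add_host_entry /etc/hosts 10.220.15.72 m72
#   add_host_entry /etc/hosts 10.220.15.73 s73
#   add_host_entry /etc/hosts 10.220.15.74 s74
```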

Generate an SSH key pair on the master:

[root@m72 ~]# ssh-keygen -t rsa

Generating public/private rsa key pair.

Enter file in which to save the key (/root/.ssh/id_rsa):

Enter passphrase (empty for no passphrase):

Enter same passphrase again:

Your identification has been saved in /root/.ssh/id_rsa.

Your public key has been saved in /root/.ssh/id_rsa.pub.

The key fingerprint is:

SHA256:ZEdN2IJIihMOhSrVavfgY81EDm6mJw1clOIg7hLwzpg root@m72

The key's randomart image is:

+---[RSA 2048]----+

|.ooo.o.. ..=. |

|=ooo=.o ..o o |

|=*+=.+ o .. |

|+.B.* oo . |

|oB O * S |

|E.= * + |

|. + . |

| |

| |

+----[SHA256]-----+

Copy the public key to the other two nodes:

[root@m72 ~]# ssh-copy-id s73

[root@m72 ~]# ssh-copy-id s74

2. Install k8s

We install k8s with kubeadm, the deployment tool developed by the Kubernetes project. It makes the installation much faster.

2.1 Add the yum repository

We use the Alibaba Cloud (Aliyun) yum mirror:

https://mirrors.aliyun.com/kubernetes/yum/repos/kubernetes-el7-x86_64/

Create a kubernetes repo file:

vim /etc/yum.repos.d/kubernetes.repo

Add the following content and save it:

[kubernetes]

name=kubernetes

baseurl=https://mirrors.aliyun.com/kubernetes/yum/repos/kubernetes-el7-x86_64/

enabled=1

gpgcheck=0

repo_gpgcheck=0

gpgkey=http://mirrors.aliyun.com/kubernetes/yum/doc/yum-key.gpg http://mirrors.aliyun.com/kubernetes/yum/doc/rpm-package-key.gpg
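The repo file can also be written non-interactively with a heredoc. This sketch reproduces the content above, using `enabled=1`, the option name yum actually reads. `write_k8s_repo` is a hypothetical helper name, and its default path is the one used in this article.

```shell
#!/usr/bin/env bash
# Sketch: write the kubernetes.repo file without opening an editor.
# write_k8s_repo is a hypothetical helper name.
set -euo pipefail

write_k8s_repo() {
  local repo_file="${1:-/etc/yum.repos.d/kubernetes.repo}"
  # The quoted 'EOF' keeps the heredoc literal (no variable expansion).
  cat > "$repo_file" <<'EOF'
[kubernetes]
name=kubernetes
baseurl=https://mirrors.aliyun.com/kubernetes/yum/repos/kubernetes-el7-x86_64/
enabled=1
gpgcheck=0
repo_gpgcheck=0
gpgkey=http://mirrors.aliyun.com/kubernetes/yum/doc/yum-key.gpg http://mirrors.aliyun.com/kubernetes/yum/doc/rpm-package-key.gpg
EOF
}

# Usage (as root): write_k8s_repo && yum makecache
```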

2.2 Build the yum cache (cache the rpm packages downloaded later so the other machines can reuse them)

[root@m72 ~]# yum makecache

Loaded plugins: fastestmirror

Loading mirror speeds from cached hostfile

* base: mirrors.bfsu.edu.cn

* extras: mirrors.aliyun.com

* updates: mirrors.aliyun.com

base | 3.6 kB 00:00:00

docker-ce-stable | 3.5 kB 00:00:00

extras | 2.9 kB 00:00:00

kubernetes | 1.4 kB 00:00:00

updates | 2.9 kB 00:00:00

......

kubernetes 797/797

kubernetes 797/797

Metadata Cache Created

Copy kubernetes.repo to the other two machines:

[root@m72 /]# scp /etc/yum.repos.d/kubernetes.repo s73:/etc/yum.repos.d/

[root@m72 /]# scp /etc/yum.repos.d/kubernetes.repo s74:/etc/yum.repos.d/
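This article copies files to each worker node several times: the hosts file, the repo file, and later the images. Below is a sketch of a loop over the workers. It assumes passwordless SSH is already set up as in 1.4. `WORKERS`, `DRY_RUN` and `push_to_workers` are hypothetical names. With `DRY_RUN=1` the loop only prints the scp commands.

```shell
#!/usr/bin/env bash
# Sketch: copy one file from the master to every worker node with scp.
# WORKERS, DRY_RUN and push_to_workers are hypothetical names; the node
# names s73/s74 are the ones used in this article.
set -euo pipefail

WORKERS=(s73 s74)

push_to_workers() {
  local src="$1" dest="$2" node
  for node in "${WORKERS[@]}"; do
    if [[ "${DRY_RUN:-0}" == 1 ]]; then
      # Dry run: only print the command that would be executed.
      echo "scp $src $node:$dest"
    else
      scp "$src" "$node:$dest"
    fi
  done
}

# Usage on m72 (as root):
#   push_to_workers /etc/yum.repos.d/kubernetes.repo /etc/yum.repos.d/
#   DRY_RUN=1 push_to_workers /etc/hosts /etc/hosts   # preview only
```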

2.3 Let iptables see bridged traffic (on all three nodes)

Create a custom file k8s.conf:

[root@m72 /]# vim /etc/sysctl.d/k8s.conf

# Add the following content

net.bridge.bridge-nf-call-iptables = 1

net.bridge.bridge-nf-call-ip6tables = 1

# Apply the configuration

[root@m72 /]# sysctl -p /etc/sysctl.d/k8s.conf

Configure the other two machines the same way.
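The `net.bridge.*` keys exist only after the `br_netfilter` kernel module is loaded. If `sysctl -p` reports `cannot stat /proc/sys/net/bridge/...: No such file or directory`, load the module first. Below is a minimal sketch that writes the same k8s.conf; its usage comment shows the module handling. `write_k8s_sysctl` is a hypothetical helper name.

```shell
#!/usr/bin/env bash
# Sketch: write the bridge netfilter sysctl settings from this section.
# write_k8s_sysctl is a hypothetical helper name.
set -euo pipefail

write_k8s_sysctl() {
  local conf="${1:-/etc/sysctl.d/k8s.conf}"
  cat > "$conf" <<'EOF'
net.bridge.bridge-nf-call-iptables = 1
net.bridge.bridge-nf-call-ip6tables = 1
EOF
}

# Usage on each node (as root):
#   modprobe br_netfilter                              # load the module now
#   echo br_netfilter > /etc/modules-load.d/k8s.conf   # and again at boot
#   write_k8s_sysctl
#   sysctl -p /etc/sysctl.d/k8s.conf
```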

2.4 Enable IP forwarding (on all three nodes)

[root@m72 /]# echo net.ipv4.ip_forward = 1 >> /etc/sysctl.conf

# Apply the configuration

[root@m72 /]# sysctl -p

Configure the other two machines the same way.
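Note that `echo ... >> /etc/sysctl.conf` appends a new line every time it runs. Below is a sketch of an idempotent alternative: it replaces an existing `key = value` entry, or appends one if the key is missing. `set_sysctl_key` is a hypothetical helper name, and it ignores lines starting with `#`.

```shell
#!/usr/bin/env bash
# Sketch: set "key = value" in a sysctl-style file, replacing an existing
# uncommented entry for the key instead of appending a duplicate.
# set_sysctl_key is a hypothetical helper name.
set -euo pipefail

set_sysctl_key() {
  local file="$1" key="$2" value="$3" tmp
  tmp=$(mktemp)
  awk -v k="$key" -v v="$value" '
    {
      line = $0
      sub(/^[ \t]+/, "", line)          # ignore leading whitespace
      name = line
      sub(/[ \t]*=.*$/, "", name)       # text before the first "="
      if (line !~ /^#/ && index(line, "=") && name == k) {
        if (!found) print k " = " v     # write the new value once
        found = 1
        next
      }
      print
    }
    END { if (!found) print k " = " v }  # key missing: append it
  ' "$file" > "$tmp"
  # Copy back with cat so the original file keeps its owner and mode.
  cat "$tmp" > "$file"
  rm -f "$tmp"
}

# Usage on each node (as root):
#   set_sysctl_key /etc/sysctl.conf net.ipv4.ip_forward 1
#   sysctl -p
```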

2.5 Back on the master node

[root@m72 /]# vim /etc/yum.conf

Set keepcache=1 to enable caching, so that yum keeps the rpm packages it downloads.
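The same yum.conf change can be made without an editor. This sketch assumes GNU sed and a `[main]` section, as in CentOS 7's default yum.conf. `set_keepcache` is a hypothetical helper name.

```shell
#!/usr/bin/env bash
# Sketch: turn on yum's package cache (keepcache=1) in a yum.conf-style file.
# set_keepcache is a hypothetical helper name; GNU sed is assumed for -i and "a".
set -euo pipefail

set_keepcache() {
  local conf="${1:-/etc/yum.conf}"
  if grep -q '^keepcache=' "$conf"; then
    # Overwrite the existing setting (CentOS 7 ships keepcache=0).
    sed -i 's/^keepcache=.*/keepcache=1/' "$conf"
  else
    # No setting yet: add it right after the [main] section header.
    sed -i '/^\[main\]/a keepcache=1' "$conf"
  fi
}

# Usage on m72 (as root): set_keepcache && grep keepcache /etc/yum.conf
```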

Download and install the rpm packages:

[root@m72 /]# yum -y install kubelet-1.15.1 kubeadm-1.15.1 kubectl-1.15.1

When the installation finishes, check that the rpm packages were cached:

[root@m72 /]# cd /var/cache/yum/x86_64/7/kubernetes/packages/

[root@m72 packages]# ll

total 67204

-rw-r--r--. 1 root root 7401938 Mar 18 06:26 4d300a7655f56307d35f127d99dc192b6aa4997f322234e754f16aaa60fd8906-cri-tools-1.23.0-0.x86_64.rpm

-rw-r--r--. 1 root root 9290806 Jan 4 2021 aa386b8f2cac67415283227ccb01dc043d718aec142e32e1a2ba6dbd5173317b-kubeadm-1.15.1-0.x86_64.rpm

-rw-r--r--. 1 root root 19487362 Jan 4 2021 db7cb5cb0b3f6875f54d10f02e625573988e3e91fd4fc5eef0b1876bb18604ad-kubernetes-cni-0.8.7-0.x86_64.rpm

-rw-r--r--. 1 root root 9920226 Jan 4 2021 f27b0d7e1770ae83c9fce9ab30a5a7eba4453727cdc53ee96dc4542c8577a464-kubectl-1.15.1-0.x86_64.rpm

-rw-r--r--. 1 root root 22704558 Jan 4 2021 f5edc025972c2d092ac41b05877c89b50cedaa7177978d9e5e49b5a2979dbc85-kubelet-1.15.1-0.x86_64.rpm

Enable kubelet to start at boot:

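[root@m72 packages]# systemctl enable kubelet.service

2.6 Initialize the cluster (obtain the images)

Because of network restrictions in mainland China, the images cannot be pulled directly from Google's registry (k8s.gcr.io). Instead, upload the image archive to the cluster and import it.

Link: Baidu Netdisk

Extraction code: kuxd

Write a script that imports the images automatically:

vim images-import.sh

Add the following content: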

#!/bin/bash
# Extract into /root; the archive unpacks to /root/kubeadm-basic.images
set -e
tar -zxvf /root/kubeadm-basic.images.tar.gz -C /root
cd /root/kubeadm-basic.images
# Load every image archive in the directory into Docker
for i in *
do
    docker load -i "$i"
done

Run the script, then check the Docker image list to confirm that the import succeeded:

[root@m72 ~]# sh images-import.sh

# Check whether the import succeeded

[root@m72 ~]# docker images

REPOSITORY                           TAG       IMAGE ID       CREATED       SIZE
k8s.gcr.io/kube-apiserver            v1.15.1   68c3eb07bfc3   2 years ago   207MB
k8s.gcr.io/kube-controller-manager   v1.15.1   d75082f1d121   2 years ago   159MB
k8s.gcr.io/kube-proxy                v1.15.1   89a062da739d   2 years ago   82.4MB
k8s.gcr.io/kube-scheduler            v1.15.1   b0b3c4c404da   2 years ago   81.1MB
k8s.gcr.io/coredns                   1.3.1     eb516548c180   3 years ago   40.3MB
k8s.gcr.io/etcd                      3.3.10    2c4adeb21b4f   3 years ago   258MB
k8s.gcr.io/pause                     3.1       da86e6ba6ca1   4 years ago   742kB

Once all the Docker images have been imported, initialize the cluster:

kubeadm init --kubernetes-version=v1.15.1 --pod-network-cidr=10.244.0.0/16 --service-cidr=10.96.0.0/12 --ignore-preflight-errors=Swap

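# --kubernetes-version: the Kubernetes version to deploy (check the installed version with: kubelet --version)

# --pod-network-cidr: the Pod network range; 10.244.0.0/16 matches the default "Network" in the flannel configuration (kube-flannel.yml) used below

# --service-cidr: the Service (cluster IP) range; 10.96.0.0/12 is also kubeadm's default

# --ignore-preflight-errors=Swap: ignore only the Swap preflight check, not all errors

After it runs, you should see output like the following. Note down the kubeadm join command (with its token) printed at the end, because the other nodes need it to join the cluster.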

[init] Using Kubernetes version: v1.15.1

......

[addons] Applied essential addon: kube-proxy

Your Kubernetes control-plane has initialized successfully!

To start using your cluster, you need to run the following as a regular user:

mkdir -p $HOME/.kube

sudo cp -i /etc/kubernetes/admin.conf $HOME/.kube/config

sudo chown $(id -u):$(id -g) $HOME/.kube/config

You should now deploy a pod network to the cluster.

Run "kubectl apply -f [podnetwork].yaml" with one of the options listed at:

https://kubernetes.io/docs/concepts/cluster-administration/addons/

Then you can join any number of worker nodes by running the following on each as root:

kubeadm join 10.220.15.72:6443 --token cralff.5gzyqjx8jirtta2c \

--discovery-token-ca-cert-hash sha256:d72a9456b0ccb8e2bbdf58ae735410249ec9d6dfb641aa9e38e60e336de6d5bc

As the output above instructs, create the directory and set its ownership:

[root@m72 ~]# mkdir -p $HOME/.kube

[root@m72 ~]# sudo cp -i /etc/kubernetes/admin.conf $HOME/.kube/config

[root@m72 ~]# sudo chown $(id -u):$(id -g) $HOME/.kube/config

Check the nodes:

[root@m72 ~]# kubectl get nodes

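NAME   STATUS     ROLES    AGE     VERSION
m72    NotReady   master   9m54s   v1.15.1

The node shows NotReady because the flannel network add-on is still missing. Without a Pod network, Pods cannot communicate with each other.

Download the kube-flannel.yml file. If the download is blocked, create the file locally instead.

wget https://raw.githubusercontent.com/coreos/flannel/master/Documentation/kube-flannel.yml

The content used here is the flannel v0.11.0 version (the file on the master branch may have changed since):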

---
apiVersion: policy/v1beta1
kind: PodSecurityPolicy
metadata:
  name: psp.flannel.unprivileged
  annotations:
    seccomp.security.alpha.kubernetes.io/allowedProfileNames: docker/default
    seccomp.security.alpha.kubernetes.io/defaultProfileName: docker/default
    apparmor.security.beta.kubernetes.io/allowedProfileNames: runtime/default
    apparmor.security.beta.kubernetes.io/defaultProfileName: runtime/default
spec:
  privileged: false
  volumes:
    - configMap
    - secret
    - emptyDir
    - hostPath
  allowedHostPaths:
    - pathPrefix: "/etc/cni/net.d"
    - pathPrefix: "/etc/kube-flannel"
    - pathPrefix: "/run/flannel"
  readOnlyRootFilesystem: false
  # Users and groups
  runAsUser:
    rule: RunAsAny
  supplementalGroups:
    rule: RunAsAny
  fsGroup:
    rule: RunAsAny
  # Privilege Escalation
  allowPrivilegeEscalation: false
  defaultAllowPrivilegeEscalation: false
  # Capabilities
  allowedCapabilities: ['NET_ADMIN']
  defaultAddCapabilities: []
  requiredDropCapabilities: []
  # Host namespaces
  hostPID: false
  hostIPC: false
  hostNetwork: true
  hostPorts:
  - min: 0
    max: 65535
  # SELinux
  seLinux:
    # SELinux is unused in CaaSP
    rule: 'RunAsAny'

---
kind: ClusterRole
apiVersion: rbac.authorization.k8s.io/v1beta1
metadata:
  name: flannel
rules:
  - apiGroups: ['extensions']
    resources: ['podsecuritypolicies']
    verbs: ['use']
    resourceNames: ['psp.flannel.unprivileged']
  - apiGroups:
      - ""
    resources:
      - pods
    verbs:
      - get
  - apiGroups:
      - ""
    resources:
      - nodes
    verbs:
      - list
      - watch
  - apiGroups:
      - ""
    resources:
      - nodes/status
    verbs:
      - patch

---
kind: ClusterRoleBinding
apiVersion: rbac.authorization.k8s.io/v1beta1
metadata:
  name: flannel
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: ClusterRole
  name: flannel
subjects:
- kind: ServiceAccount
  name: flannel
  namespace: kube-system

---
apiVersion: v1
kind: ServiceAccount
metadata:
  name: flannel
  namespace: kube-system

---
kind: ConfigMap
apiVersion: v1
metadata:
  name: kube-flannel-cfg
  namespace: kube-system
  labels:
    tier: node
    app: flannel
data:
  cni-conf.json: |
    {
      "name": "cbr0",
      "cniVersion": "0.3.1",
      "plugins": [
        {
          "type": "flannel",
          "delegate": {
            "hairpinMode": true,
            "isDefaultGateway": true
          }
        },
        {
          "type": "portmap",
          "capabilities": {
            "portMappings": true
          }
        }
      ]
    }
  net-conf.json: |
    {
      "Network": "10.244.0.0/16",
      "Backend": {
        "Type": "vxlan"
      }
    }

---
apiVersion: apps/v1
kind: DaemonSet
metadata:
  name: kube-flannel-ds-amd64
  namespace: kube-system
  labels:
    tier: node
    app: flannel
spec:
  selector:
    matchLabels:
      app: flannel
  template:
    metadata:
      labels:
        tier: node
        app: flannel
    spec:
      affinity:
        nodeAffinity:
          requiredDuringSchedulingIgnoredDuringExecution:
            nodeSelectorTerms:
              - matchExpressions:
                  - key: beta.kubernetes.io/os
                    operator: In
                    values:
                      - linux
                  - key: beta.kubernetes.io/arch
                    operator: In
                    values:
                      - amd64
      hostNetwork: true
      tolerations:
      - operator: Exists
        effect: NoSchedule
      serviceAccountName: flannel
      initContainers:
      - name: install-cni
        image: quay.io/coreos/flannel:v0.11.0-amd64
        command:
        - cp
        args:
        - -f
        - /etc/kube-flannel/cni-conf.json
        - /etc/cni/net.d/10-flannel.conflist
        volumeMounts:
        - name: cni
          mountPath: /etc/cni/net.d
        - name: flannel-cfg
          mountPath: /etc/kube-flannel/
      containers:
      - name: kube-flannel
        image: quay.io/coreos/flannel:v0.11.0-amd64
        command:
        - /opt/bin/flanneld
        args:
        - --ip-masq
        - --kube-subnet-mgr
        resources:
          requests:
            cpu: "100m"
            memory: "50Mi"
          limits:
            cpu: "100m"
            memory: "50Mi"
        securityContext:
          privileged: false
          capabilities:
            add: ["NET_ADMIN"]
        env:
        - name: POD_NAME
          valueFrom:
            fieldRef:
              fieldPath: metadata.name
        - name: POD_NAMESPACE
          valueFrom:
            fieldRef:
              fieldPath: metadata.namespace
        volumeMounts:
        - name: run
          mountPath: /run/flannel
        - name: flannel-cfg
          mountPath: /etc/kube-flannel/
      volumes:
        - name: run
          hostPath:
            path: /run/flannel
        - name: cni
          hostPath:
            path: /etc/cni/net.d
        - name: flannel-cfg
          configMap:
            name: kube-flannel-cfg

---
apiVersion: apps/v1
kind: DaemonSet
metadata:
  name: kube-flannel-ds-arm64
  namespace: kube-system
  labels:
    tier: node
    app: flannel
spec:
  selector:
    matchLabels:
      app: flannel
  template:
    metadata:
      labels:
        tier: node
        app: flannel
    spec:
      affinity:
        nodeAffinity:
          requiredDuringSchedulingIgnoredDuringExecution:
            nodeSelectorTerms:
              - matchExpressions:
                  - key: beta.kubernetes.io/os
                    operator: In
                    values:
                      - linux
                  - key: beta.kubernetes.io/arch
                    operator: In
                    values:
                      - arm64
      hostNetwork: true
      tolerations:
      - operator: Exists
        effect: NoSchedule
      serviceAccountName: flannel
      initContainers:
      - name: install-cni
        image: quay.io/coreos/flannel:v0.11.0-arm64
        command:
        - cp
        args:
        - -f
        - /etc/kube-flannel/cni-conf.json
        - /etc/cni/net.d/10-flannel.conflist
        volumeMounts:
        - name: cni
          mountPath: /etc/cni/net.d
        - name: flannel-cfg
          mountPath: /etc/kube-flannel/
      containers:
      - name: kube-flannel
        image: quay.io/coreos/flannel:v0.11.0-arm64
        command:
        - /opt/bin/flanneld
        args:
        - --ip-masq
        - --kube-subnet-mgr
        resources:
          requests:
            cpu: "100m"
            memory: "50Mi"
          limits:
            cpu: "100m"
            memory: "50Mi"
        securityContext:
          privileged: false
          capabilities:
            add: ["NET_ADMIN"]
        env:
        - name: POD_NAME
          valueFrom:
            fieldRef:
              fieldPath: metadata.name
        - name: POD_NAMESPACE
          valueFrom:
            fieldRef:
              fieldPath: metadata.namespace
        volumeMounts:
        - name: run
          mountPath: /run/flannel
        - name: flannel-cfg
          mountPath: /etc/kube-flannel/
      volumes:
        - name: run
          hostPath:
            path: /run/flannel
        - name: cni
          hostPath:
            path: /etc/cni/net.d
        - name: flannel-cfg
          configMap:
            name: kube-flannel-cfg

---
apiVersion: apps/v1
kind: DaemonSet
metadata:
  name: kube-flannel-ds-arm
  namespace: kube-system
  labels:
    tier: node
    app: flannel
spec:
  selector:
    matchLabels:
      app: flannel
  template:
    metadata:
      labels:
        tier: node
        app: flannel
    spec:
      affinity:
        nodeAffinity:
          requiredDuringSchedulingIgnoredDuringExecution:
            nodeSelectorTerms:
              - matchExpressions:
                  - key: beta.kubernetes.io/os
                    operator: In
                    values:
                      - linux
                  - key: beta.kubernetes.io/arch
                    operator: In
                    values:
                      - arm
      hostNetwork: true
      tolerations:
      - operator: Exists
        effect: NoSchedule
      serviceAccountName: flannel
      initContainers:
      - name: install-cni
        image: quay.io/coreos/flannel:v0.11.0-arm
        command:
        - cp
        args:
        - -f
        - /etc/kube-flannel/cni-conf.json
        - /etc/cni/net.d/10-flannel.conflist
        volumeMounts:
        - name: cni
          mountPath: /etc/cni/net.d
        - name: flannel-cfg
          mountPath: /etc/kube-flannel/
      containers:
      - name: kube-flannel
        image: quay.io/coreos/flannel:v0.11.0-arm
        command:
        - /opt/bin/flanneld
        args:
        - --ip-masq
        - --kube-subnet-mgr
        resources:
          requests:
            cpu: "100m"
            memory: "50Mi"
          limits:
            cpu: "100m"
            memory: "50Mi"
        securityContext:
          privileged: false
          capabilities:
            add: ["NET_ADMIN"]
        env:
        - name: POD_NAME
          valueFrom:
            fieldRef:
              fieldPath: metadata.name
        - name: POD_NAMESPACE
          valueFrom:
            fieldRef:
              fieldPath: metadata.namespace
        volumeMounts:
        - name: run
          mountPath: /run/flannel
        - name: flannel-cfg
          mountPath: /etc/kube-flannel/
      volumes:
        - name: run
          hostPath:
            path: /run/flannel
        - name: cni
          hostPath:
            path: /etc/cni/net.d
        - name: flannel-cfg
          configMap:
            name: kube-flannel-cfg

---
apiVersion: apps/v1
kind: DaemonSet
metadata:
  name: kube-flannel-ds-ppc64le
  namespace: kube-system
  labels:
    tier: node
    app: flannel
spec:
  selector:
    matchLabels:
      app: flannel
  template:
    metadata:
      labels:
        tier: node
        app: flannel
    spec:
      affinity:
        nodeAffinity:
          requiredDuringSchedulingIgnoredDuringExecution:
            nodeSelectorTerms:
              - matchExpressions:
                  - key: beta.kubernetes.io/os
                    operator: In
                    values:
                      - linux
                  - key: beta.kubernetes.io/arch
                    operator: In
                    values:
                      - ppc64le
      hostNetwork: true
      tolerations:
      - operator: Exists
        effect: NoSchedule
      serviceAccountName: flannel
      initContainers:
      - name: install-cni
        image: quay.io/coreos/flannel:v0.11.0-ppc64le
        command:
        - cp
        args:
        - -f
        - /etc/kube-flannel/cni-conf.json
        - /etc/cni/net.d/10-flannel.conflist
        volumeMounts:
        - name: cni
          mountPath: /etc/cni/net.d
        - name: flannel-cfg
          mountPath: /etc/kube-flannel/
      containers:
      - name: kube-flannel
        image: quay.io/coreos/flannel:v0.11.0-ppc64le
        command:
        - /opt/bin/flanneld
        args:
        - --ip-masq
        - --kube-subnet-mgr
        resources:
          requests:
            cpu: "100m"
            memory: "50Mi"
          limits:
            cpu: "100m"
            memory: "50Mi"
        securityContext:
          privileged: false
          capabilities:
            add: ["NET_ADMIN"]
        env:
        - name: POD_NAME
          valueFrom:
            fieldRef:
              fieldPath: metadata.name
        - name: POD_NAMESPACE
          valueFrom:
            fieldRef:
              fieldPath: metadata.namespace
        volumeMounts:
        - name: run
          mountPath: /run/flannel
        - name: flannel-cfg
          mountPath: /etc/kube-flannel/
      volumes:
        - name: run
          hostPath:
            path: /run/flannel
        - name: cni
          hostPath:
            path: /etc/cni/net.d
        - name: flannel-cfg
          configMap:
            name: kube-flannel-cfg

---
apiVersion: apps/v1
kind: DaemonSet
metadata:
  name: kube-flannel-ds-s390x
  namespace: kube-system
  labels:
    tier: node
    app: flannel
spec:
  selector:
    matchLabels:
      app: flannel
  template:
    metadata:
      labels:
        tier: node
        app: flannel
    spec:
      affinity:
        nodeAffinity:
          requiredDuringSchedulingIgnoredDuringExecution:
            nodeSelectorTerms:
              - matchExpressions:
                  - key: beta.kubernetes.io/os
                    operator: In
                    values:
                      - linux
                  - key: beta.kubernetes.io/arch
                    operator: In
                    values:
                      - s390x
      hostNetwork: true
      tolerations:
      - operator: Exists
        effect: NoSchedule
      serviceAccountName: flannel
      initContainers:
      - name: install-cni
        image: quay.io/coreos/flannel:v0.11.0-s390x
        command:
        - cp
        args:
        - -f
        - /etc/kube-flannel/cni-conf.json
        - /etc/cni/net.d/10-flannel.conflist
        volumeMounts:
        - name: cni
          mountPath: /etc/cni/net.d
        - name: flannel-cfg
          mountPath: /etc/kube-flannel/
      containers:
      - name: kube-flannel
        image: quay.io/coreos/flannel:v0.11.0-s390x
        command:
        - /opt/bin/flanneld
        args:
        - --ip-masq
        - --kube-subnet-mgr
        resources:
          requests:
            cpu: "100m"
            memory: "50Mi"
          limits:
            cpu: "100m"
            memory: "50Mi"
        securityContext:
          privileged: false
          capabilities:
            add: ["NET_ADMIN"]
        env:
        - name: POD_NAME
          valueFrom:
            fieldRef:
              fieldPath: metadata.name
        - name: POD_NAMESPACE
          valueFrom:
            fieldRef:
              fieldPath: metadata.namespace
        volumeMounts:
        - name: run
          mountPath: /run/flannel
        - name: flannel-cfg
          mountPath: /etc/kube-flannel/
      volumes:
        - name: run
          hostPath:
            path: /run/flannel
        - name: cni
          hostPath:
            path: /etc/cni/net.d
        - name: flannel-cfg
          configMap:
            name: kube-flannel-cfg

Apply the manifest and check the nodes again:

[root@m72 ~]# kubectl apply -f kube-flannel.yml
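The flannel Pods need some time to start, so the node may still show NotReady right after `kubectl apply`. Below is a sketch of a readiness check. It reads `kubectl get nodes --no-headers` output from stdin, so it can be tried without a cluster. `all_nodes_ready` is a hypothetical helper name, and it treats any STATUS other than exactly `Ready` as not ready.

```shell
#!/usr/bin/env bash
# Sketch: succeed only if every node listed by
# `kubectl get nodes --no-headers` (read from stdin) has STATUS "Ready".
# all_nodes_ready is a hypothetical helper name.
set -euo pipefail

all_nodes_ready() {
  # Field 2 is STATUS; fail on an empty listing or any non-Ready node.
  awk 'NF { total++; if ($2 != "Ready") bad++ } END { exit (total == 0 || bad > 0) }'
}

# Usage on m72: poll for up to about 5 minutes, then show the nodes.
#   for _ in $(seq 1 60); do
#     kubectl get nodes --no-headers | all_nodes_ready && break
#     sleep 5
#   done
#   kubectl get nodes
```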

[root@m72 ~]# kubectl get nodes

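NAME   STATUS   ROLES    AGE   VERSION
m72    Ready    master   38m   v1.15.1

2.7 Join the other nodes to the cluster

Run the following on each of the other nodes:

yum -y install kubelet-1.15.1 kubeadm-1.15.1 kubectl-1.15.1

Enable kubelet to start at boot:

systemctl enable kubelet.service

Create a directory for the images:

mkdir /root/images

On the master node, copy the image files over: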

[root@m72 ~]# cd kubeadm-basic.images

[root@m72 kubeadm-basic.images]# scp * s73:/root/images/

[root@m72 kubeadm-basic.images]# scp * s74:/root/images/

Back on worker node s73, load these images into Docker:

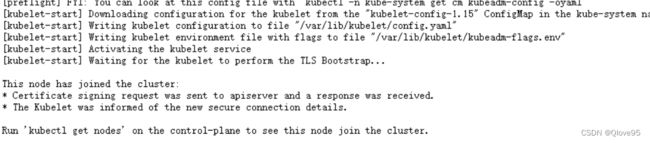

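docker load -i xxx.tar

Repeat this for each image file in /root/images. Finally, on s73, run the join command that kubeadm init printed earlier: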

kubeadm join 10.220.15.72:6443 --token cralff.5gzyqjx8jirtta2c \

--discovery-token-ca-cert-hash sha256:d72a9456b0ccb8e2bbdf58ae735410249ec9d6dfb641aa9e38e60e336de6d5bc

Once it reports that the node has joined, check from the master node:

[root@m72 ~]# kubectl get nodes

NAME   STATUS   ROLES    AGE     VERSION
m72    Ready    master   3h7m    v1.15.1
s73    Ready    <none>   8m32s   v1.15.1

Node s73 has joined successfully. Join s74 the same way, and the cluster deployment is complete.
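`kubeadm init` prints the join command only once, and its bootstrap token expires after 24 hours by default. If you saved the init output to a file, the sketch below pulls the command back out. One way to save it is `kubeadm init ... | tee kubeadm-init.log`; the log file name is hypothetical. If the token has already expired, run `kubeadm token create --print-join-command` on the master to print a fresh join command. `extract_join_cmd` is a hypothetical helper name.

```shell
#!/usr/bin/env bash
# Sketch: print each "kubeadm join ..." command found in saved kubeadm init
# output, with backslash-continued lines joined into one line.
# extract_join_cmd is a hypothetical helper name.
set -euo pipefail

extract_join_cmd() {
  awk '
    /^[ \t]*kubeadm join / { grab = 1; cmd = "" }
    grab {
      line = $0
      sub(/^[ \t]+/, "", line)             # drop leading indentation
      sub(/[ \t]+$/, "", line)             # drop trailing whitespace
      cont = (line ~ /\\$/)                # a trailing backslash continues the command
      if (cont) sub(/[ \t]*\\$/, "", line)
      cmd = (cmd == "" ? line : cmd " " line)
      if (!cont) { print cmd; grab = 0 }
    }
  ' "$1"
}

# Usage on m72:
#   kubeadm init ... | tee kubeadm-init.log   # keep a copy of the output
#   extract_join_cmd kubeadm-init.log
```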