Setting Up a Highly Available Kubernetes 1.24 Cluster (Binary Installation)

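Background:

Installing a cluster with kubeadm is simpler and needs less configuration, but a binary installation is still recommended for production. With the binary method, kube-apiserver, kube-controller-manager, kube-scheduler, kube-proxy and kubelet all run as host processes (systemd services). kubeadm instead runs the control-plane components as static Pods and kube-proxy as a DaemonSet, that is, as containers; kubelet is a systemd service in both cases. Because the components run directly as processes, they do not depend on the container runtime to stay up, which is why the binary method is considered more stable. In short, the binary method offers:

- Stability: the binary deployment is more stable.

- Flexibility: many settings can be customized.

- Maintainability: management is simpler.

This article describes how to install a highly available cluster using the binary method.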

Setting up the highly available cluster

See the kubeasz project: https://github.com/easzlab/kubeasz

Environment planning

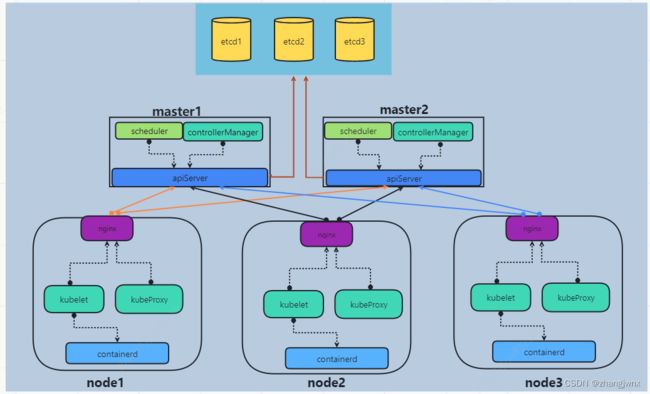

High-availability architecture diagram

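Node configuration

The nodes required for the highly available cluster are configured as follows:

| Role | IP | Spec | Description |
|---|---|---|---|
| Deployment node (1) | 192.168.2.145 | 2c/4g | Runs the ansible/ezctl commands |
| master (3) | 192.168.2.131/132/133 | 4c/8g RAM/50g disk minimum | k8s control plane; does not run business Pods |
| node (n) | 192.168.2.134/135/136/xxx | 8c/32g RAM/200g disk minimum | Runs the business containers |
| etcd (3) | 192.168.2.137/138/139 | 4c/16g/50g disk (SSD) | Backend key-value database that stores the k8s cluster data; can be co-located with the masters |
| harbor (2) | 192.168.2.140/141 | 4c/8g RAM/1t disk | Two nodes forming a highly available private image registry |
| keepalived+haproxy (2) | 192.168.2.142/143 | 2c/4g | Management VIPs for the master and etcd nodes |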

Base environment initialization

Skip this step if the systems have already been initialized.

1. Rename the network interface to eth0:

root@ubuntu:~# vim /etc/default/grub

GRUB_CMDLINE_LINUX="net.ifnames=0 biosdevname=0"

root@ubuntu:~# update-grub

root@ubuntu:~# reboot

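2. Change the hostname

vim /etc/hostname

<hostname>    # replace the file content with the new hostname, e.g. ubuntu01

Save and exit with :wq (alternatively, hostnamectl set-hostname <hostname> applies the change immediately)

3. Install the network tools

apt install -y net-tools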

4. Configure a static IP address

-----------------------------------------------------------------

ls /etc/netplan

# a 01-netcfg.yaml file exists by default

vim /etc/netplan/01-netcfg.yaml

# This file describes the network interfaces available on your system
# For more information, see netplan(5).
network:
  version: 2
  renderer: networkd
  ethernets:
    ens33:                           # use eth0 here if the NIC was renamed in step 1
      dhcp4: no
      addresses: [192.168.2.131/24]  # set according to your own network plan
      gateway4: 192.168.2.2          # set according to your own network plan
      nameservers:
        addresses: [114.114.114.114, 8.8.8.8]

netplan try      # test the configuration
netplan apply    # apply the modified file

--------------------------------------------------------------
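When several nodes need the same layout, you can generate the file per node instead of editing it by hand. The following is a minimal sketch under a few assumptions: `render_netplan` is a hypothetical helper, the DNS servers are copied from the file above, and you pass in the interface name (eth0 after step 1, ens33 otherwise).

```bash
#!/usr/bin/env bash
# Hypothetical helper: print a static-IP netplan config for one interface.
# Usage: render_netplan <iface> <address/prefix> <gateway>
render_netplan() {
  if [ "$#" -ne 3 ]; then
    echo "usage: render_netplan <iface> <address/prefix> <gateway>" >&2
    return 1
  fi
  local iface="$1" addr="$2" gw="$3"
  cat <<EOF
network:
  version: 2
  renderer: networkd
  ethernets:
    ${iface}:
      dhcp4: no
      addresses: [${addr}]
      gateway4: ${gw}
      nameservers:
        addresses: [114.114.114.114, 8.8.8.8]
EOF
}
```

For example, `render_netplan eth0 192.168.2.132/24 192.168.2.2 > /etc/netplan/01-netcfg.yaml && netplan try` prepares the second master.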

5. Set the time zone

timedatectl set-timezone Asia/Shanghai

hwclock -w    # write the system time to the hardware clock

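High-availability load balancing

Kernel tuning

vim /etc/sysctl.conf

# allow services to bind an IPv4 address that does not exist on this host; needed when a service binds the VIP
net.ipv4.ip_nonlocal_bind = 1
# allow services to bind an IPv6 address that does not exist on this host; needed when a service binds the VIP
net.ipv6.ip_nonlocal_bind = 1
# enable IPv4 packet forwarding in the kernel
net.ipv4.ip_forward = 1
# enable IPv6 packet forwarding (the key is net.ipv6.conf.all.forwarding; net.ipv6.ip_forward does not exist)
net.ipv6.conf.all.forwarding = 1

sysctl -p    # load the new settings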
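You can also put the same settings in a drop-in file instead of editing /etc/sysctl.conf. This is a minimal sketch under our own assumptions: the helper names and the file name 99-ha-lb.conf are hypothetical and not part of kubeasz. `render_ha_sysctl` prints the four keys. `apply_ha_sysctl` writes them to the file and loads only that file with `sysctl -p <file>`.

```bash
#!/usr/bin/env bash
# Hypothetical helpers; the drop-in path is an assumption, not a kubeasz convention.

# Print the kernel parameters needed by keepalived + haproxy.
render_ha_sysctl() {
  cat <<'EOF'
# allow binding the VIP even on the node that does not currently hold it
net.ipv4.ip_nonlocal_bind = 1
net.ipv6.ip_nonlocal_bind = 1
# enable packet forwarding
net.ipv4.ip_forward = 1
net.ipv6.conf.all.forwarding = 1
EOF
}

# Write the parameters to a drop-in file and load just that file (requires root).
apply_ha_sysctl() {
  local target="${1:-/etc/sysctl.d/99-ha-lb.conf}"
  render_ha_sysctl > "${target}" || return 1
  sysctl -p "${target}"
}
```

Run `apply_ha_sysctl` as root on both load-balancer nodes, then check a value with `sysctl net.ipv4.ip_nonlocal_bind`.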

apt install -y haproxy keepalived

cp /usr/share/doc/keepalived/samples/keepalived.conf.vrrp /etc/keepalived/keepalived.conf

Key keepalived settings (in /etc/keepalived/keepalived.conf)

-------------------------------------------------------------

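# top level: define the health check before the vrrp_instance that tracks it
vrrp_script chk_haproxy {
    # succeeds while a haproxy process exists (killall comes from the psmisc package)
    script "killall -0 haproxy"
    # run every 2 seconds
    interval 2
    # lower the priority by 2 when the check fails; MASTER priority minus 2 must end up
    # below the BACKUP priority, otherwise the VIPs will not move
    weight -2
    # 3 consecutive failures mark the check as failed, 1 success marks it healthy again
    fall 3
    rise 1
}

# inside vrrp_instance { ... }; replace ens33 with eth0 if the NIC was renamed
    virtual_ipaddress {
        # optional label. should be of the form "realdev:sometext" for
        # compatibility with ifconfig.
        192.168.2.188 dev ens33 label ens33:1
        192.168.2.189 dev ens33 label ens33:2
    }

    track_script {
        chk_haproxy
    }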

-------------------------------------------------------------

systemctl restart keepalived.service && systemctl enable keepalived.service    # restart now and start at boot
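The `weight` in the health check only causes a failover if the lowered MASTER priority drops below the BACKUP priority. The sketch below renders a complete keepalived.conf for each load-balancer node and includes a small check for that condition. `failover_happens` and `render_keepalived_conf` are hypothetical helpers. virtual_router_id 51, auth_pass 1111, priorities 100/80 and weight -30 are illustrative values, not taken from the original.

```bash
#!/usr/bin/env bash
# Hypothetical helpers for the two LB nodes. Illustrative values: virtual_router_id 51,
# auth_pass 1111, priorities 100/80, weight -30.

# Returns 0 if a failed check (priority + weight) drops MASTER below BACKUP.
failover_happens() {
  local master_prio="$1" backup_prio="$2" weight="$3"
  (( master_prio + weight < backup_prio ))
}

# Print keepalived.conf for one node: render_keepalived_conf MASTER|BACKUP <priority> [iface]
render_keepalived_conf() {
  local state="$1" prio="$2" iface="${3:-ens33}"
  cat <<EOF
vrrp_script chk_haproxy {
    script "killall -0 haproxy"
    interval 2
    weight -30
    fall 3
    rise 1
}

vrrp_instance VI_1 {
    state ${state}
    interface ${iface}
    virtual_router_id 51
    priority ${prio}
    advert_int 1
    authentication {
        auth_type PASS
        auth_pass 1111
    }
    virtual_ipaddress {
        192.168.2.188 dev ${iface} label ${iface}:1
        192.168.2.189 dev ${iface} label ${iface}:2
    }
    track_script {
        chk_haproxy
    }
}
EOF
}
```

For example, use `render_keepalived_conf MASTER 100` on 192.168.2.142 and `render_keepalived_conf BACKUP 80` on 192.168.2.143. With the weight -2 from the snippet above, `failover_happens 100 80 -2` fails. In that case the VIPs would stay on a node whose haproxy has died.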

Key haproxy settings (appended to /etc/haproxy/haproxy.cfg)

--------------------------------------------------------------

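# the backends should be the harbor hosts (192.168.2.140/141 in the node plan); adjust the IPs to your environment
listen harbor-80
    bind 192.168.2.188:80
    mode tcp
    balance source    # the balancing algorithm must be source-address hashing (source), so each client sticks to one Harbor
    server ubuntu01 192.168.2.131:80 check inter 3s fall 3 rise 1
    server ubuntu02 192.168.2.132:80 check inter 3s fall 3 rise 1

listen harbor-443
    bind 192.168.2.188:443
    mode tcp
    balance source    # the balancing algorithm must be source-address hashing (source)
    server ubuntu01 192.168.2.131:443 check inter 3s fall 3 rise 1
    server ubuntu02 192.168.2.132:443 check inter 3s fall 3 rise 1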

-------------------------------------------------------------

systemctl restart haproxy.service && systemctl enable haproxy.service    # restart now and start at boot
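The haproxy snippet above only covers Harbor. Suppose the second VIP, 192.168.2.189, is meant to front the kube-apiservers. That is an assumption, since the original does not show this part. In that case you could generate a matching listen section as shown below. `render_apiserver_listen` is hypothetical and uses `roundrobin`, because apiserver requests do not need source stickiness.

```bash
#!/usr/bin/env bash
# Hypothetical helper: print an haproxy listen section that spreads kube-apiserver
# traffic arriving on a VIP across the masters.
# render_apiserver_listen <vip> <master-ip>...
render_apiserver_listen() {
  local vip="$1"
  shift
  local i=1 ip
  printf 'listen k8s-apiserver-6443\n'
  printf '    bind %s:6443\n' "${vip}"
  printf '    mode tcp\n'
  printf '    balance roundrobin\n'
  for ip in "$@"; do
    printf '    server master%02d %s:6443 check inter 3s fall 3 rise 1\n' "${i}" "${ip}"
    i=$((i + 1))
  done
}
```

For example, `render_apiserver_listen 192.168.2.189 192.168.2.131 192.168.2.132 192.168.2.133 >> /etc/haproxy/haproxy.cfg` adds the section. The backup node can bind the VIP only because of `net.ipv4.ip_nonlocal_bind = 1`. The VIP also has to be listed in MASTER_CERT_HOSTS so that the apiserver certificate is valid for it.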

Highly available Harbor deployment over HTTPS

See the author's earlier post: https://blog.csdn.net/m0_50589374/article/details/125926978

Binary deployment with ansible

Base environment preparation

# run the following on the deployment node

apt install -y git ansible sshpass    # install git, ansible and sshpass

ssh-keygen    # generate an SSH key pair

vim sync_key.sh

----------------------------------------------------------

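#!/bin/bash
# Host list: every host managed from the deployment node (replace xxxxxx with the remaining hosts)
IPList="
192.168.2.131
192.168.2.132
192.168.2.133
192.168.2.134
192.168.2.135
192.168.2.136
xxxxxx
"

for node in ${IPList}; do
  # '<host-password>' is a placeholder for the root password of the target hosts;
  # ssh-copy-id options must come before the host name
  sshpass -p '<host-password>' ssh-copy-id -o StrictHostKeyChecking=no "${node}"
  echo "${node}: SSH key copied"
  # -f replaces an existing /usr/bin/python instead of failing
  ssh "${node}" ln -sfv /usr/bin/python3 /usr/bin/python
  echo "${node}: /usr/bin/python3 symlink created"
done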

-----------------------------------------------------------

chmod +x sync_key.sh    # make the script executable

bash sync_key.sh    # run the key distribution script

Download the kubeasz project

export release=3.3.1

wget https://github.com/easzlab/kubeasz/releases/download/${release}/ezdown

chmod +x ./ezdown

./ezdown -D

root@ubuntu01:~# ll /etc/kubeasz
total 140
drwxrwxr-x 13 root root 4096 Jul 24 16:00 ./
drwxr-xr-x 93 root root 4096 Jul 26 08:30 ../
-rw-rw-r-- 1 root root 20304 Jul 3 20:37 ansible.cfg
drwxr-xr-x 3 root root 4096 Jul 24 11:25 bin/    # pre-built binaries of the k8s components
drwxr-xr-x 3 root root 4096 Jul 24 16:00 clusters/
drwxrwxr-x 8 root root 4096 Jul 3 20:51 docs/
drwxr-xr-x 2 root root 4096 Jul 24 11:35 down/    # add-on components
drwxrwxr-x 2 root root 4096 Jul 3 20:51 example/
-rwxrwxr-x 1 root root 25012 Jul 3 20:37 ezctl*
-rwxrwxr-x 1 root root 25266 Jul 3 20:37 ezdown*
drwxrwxr-x 3 root root 4096 Jul 3 20:51 .github/
-rw-rw-r-- 1 root root 301 Jul 3 20:37 .gitignore
drwxrwxr-x 10 root root 4096 Jul 3 20:51 manifests/
drwxrwxr-x 2 root root 4096 Jul 3 20:51 pics/
drwxrwxr-x 2 root root 4096 Jul 25 15:53 playbooks/
-rw-rw-r-- 1 root root 5058 Jul 3 20:37 README.md
drwxrwxr-x 22 root root 4096 Jul 3 20:51 roles/
drwxrwxr-x 2 root root 4096 Jul 3 20:51 tools/

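Create a cluster instance

# run kubeasz in a container

./ezdown -S

# create the cluster

docker exec -it kubeasz ezctl new <cluster-name>

Configuration files:

'/etc/kubeasz/clusters/<cluster-name>/hosts' and '/etc/kubeasz/clusters/<cluster-name>/config.yml'

Edit the hosts file according to the node plan above, together with the other main cluster-level options. You can change the settings of the other cluster components in config.yml.

Configuration details

Edit the hosts file: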

# 'etcd' cluster should have odd member(s) (1,3,5,...)
[etcd]
192.168.2.137
192.168.2.138
192.168.2.139

# master node(s)
[kube_master]
192.168.2.131
192.168.2.132
192.168.2.133

# work node(s)
[kube_node]
192.168.2.134
192.168.2.135

# [optional] harbor server, a private docker registry
# 'NEW_INSTALL': 'true' to install a harbor server; 'false' to integrate with existed one
[harbor]
#192.168.1.8 NEW_INSTALL=false

# [optional] loadbalance for accessing k8s from outside
[ex_lb]
#192.168.1.6 LB_ROLE=backup EX_APISERVER_VIP=192.168.1.250 EX_APISERVER_PORT=8443
#192.168.1.7 LB_ROLE=master EX_APISERVER_VIP=192.168.1.250 EX_APISERVER_PORT=8443

# [optional] ntp server for the cluster
[chrony]
#192.168.1.1

[all:vars]
# --------- Main Variables ---------------
# Secure port for apiservers
SECURE_PORT="6443"

# Cluster container-runtime supported: docker, containerd
# if k8s version >= 1.24, docker is not supported
CONTAINER_RUNTIME="containerd"

# Network plugins supported: calico, flannel, kube-router, cilium, kube-ovn
CLUSTER_NETWORK="calico"

# Service proxy mode of kube-proxy: 'iptables' or 'ipvs'
PROXY_MODE="ipvs"

# K8S Service CIDR, not overlap with node(host) networking (Service address range)
SERVICE_CIDR="10.100.0.0/16"

# Cluster CIDR (Pod CIDR), not overlap with node(host) networking (Pod address range; plan it in advance)
CLUSTER_CIDR="10.200.0.0/16"

# NodePort Range (widen it if you run out of ports)
NODE_PORT_RANGE="30000-52767"

# Cluster DNS Domain
CLUSTER_DNS_DOMAIN="cluster.local"

# -------- Additional Variables (don't change the default value right now) ---
# Binaries Directory
bin_dir="/usr/local/bin"

# Deploy Directory (kubeasz workspace)
base_dir="/etc/kubeasz"

# Directory for a specific cluster
cluster_dir="{{ base_dir }}/clusters/<cluster-name>"

# CA and other components cert/key Directory
ca_dir="/etc/kubernetes/ssl"
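The hosts file requires SERVICE_CIDR and CLUSTER_CIDR to stay clear of the node network. You can check this before deploying. This is a minimal IPv4-only sketch: all four functions are hypothetical helpers, and 192.168.2.0/24 in the usage is the node network assumed from the plan above.

```bash
#!/usr/bin/env bash
# Hypothetical helpers: check that the host, Service and Pod CIDRs do not overlap (IPv4 only).

# Dotted IPv4 address -> 32-bit integer
ip_to_int() {
  local IFS=.
  local -a o
  read -r -a o <<< "$1"
  echo $(( (o[0] << 24) | (o[1] << 16) | (o[2] << 8) | o[3] ))
}

# CIDR -> "first last" address as integers
cidr_range() {
  local ip="${1%/*}" len="${1#*/}"
  local n mask first
  n=$(ip_to_int "${ip}")
  mask=$(( len == 0 ? 0 : (0xFFFFFFFF << (32 - len)) & 0xFFFFFFFF ))
  first=$(( n & mask ))
  echo "${first} $(( first + (1 << (32 - len)) - 1 ))"
}

# Return 0 if two CIDRs share at least one address
cidrs_overlap() {
  local a1 a2 b1 b2
  read -r a1 a2 <<< "$(cidr_range "$1")"
  read -r b1 b2 <<< "$(cidr_range "$2")"
  (( a1 <= b2 && b1 <= a2 ))
}

# check_cluster_cidrs <host-net> <SERVICE_CIDR> <CLUSTER_CIDR>: prints "ok" or fails
check_cluster_cidrs() {
  local -a nets=("$1" "$2" "$3")
  local i j
  for i in 0 1; do
    for j in $(seq $((i + 1)) 2); do
      if cidrs_overlap "${nets[i]}" "${nets[j]}"; then
        echo "overlap: ${nets[i]} ${nets[j]}" >&2
        return 1
      fi
    done
  done
  echo "ok"
}
```

For example, `check_cluster_cidrs 192.168.2.0/24 10.100.0.0/16 10.200.0.0/16` prints `ok` for the values used in this guide.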

Edit the config.yml file:

############################
# prepare
############################
# optional offline installation of system packages (offline|online)
INSTALL_SOURCE: "online"

# optional OS security hardening, see github.com/dev-sec/ansible-collection-hardening
OS_HARDEN: false

############################
# role:deploy
############################
# default: ca will expire in 100 years
# default: certs issued by the ca will expire in 50 years
# certificate validity periods
CA_EXPIRY: "876000h"
CERT_EXPIRY: "438000h"

# kubeconfig parameters
CLUSTER_NAME: "cluster1"
CONTEXT_NAME: "context-{{ CLUSTER_NAME }}"

# k8s version
K8S_VER: "1.24.2"

############################
# role:etcd
############################
# a separate WAL directory avoids disk I/O contention and improves performance
ETCD_DATA_DIR: "/var/lib/etcd"
ETCD_WAL_DIR: ""

############################
# role:runtime [containerd,docker]
############################
# ------------------------------------------- containerd
# [.] enable registry mirrors
ENABLE_MIRROR_REGISTRY: true

# [containerd] sandbox (pause) image; pushing it to your local harbor registry is recommended
SANDBOX_IMAGE: "easzlab.io.local:5000/easzlab/pause:3.7"
#SANDBOX_IMAGE: "reg.zhangjw.com/baseimages/pause:3.7"

# [containerd] persistent storage directory for containers
CONTAINERD_STORAGE_DIR: "/var/lib/containerd"

# ------------------------------------------- docker
# [docker] container storage directory
DOCKER_STORAGE_DIR: "/var/lib/docker"

# [docker] enable the RESTful API
ENABLE_REMOTE_API: false

# [docker] trusted HTTP registries; add your own private registry here
INSECURE_REG: '["http://easzlab.io.local:5000","reg.zhangjw.com"]'

############################
# role:kube-master
############################
# certificate SANs for the k8s master nodes; several IPs and domain names can be added (e.g. a public IP and domain)
# add the VIP of the HA load balancer here
MASTER_CERT_HOSTS:
  - "10.1.1.1"
  - "k8s.easzlab.io"
  #- "www.test.com"

# prefix length of the pod subnet on each node (determines how many pod IPs a node can be assigned)
# if flannel runs with --kube-subnet-mgr, it reads this setting to assign a pod subnet to each node
# https://github.com/coreos/flannel/issues/847
NODE_CIDR_LEN: 24

############################
# role:kube-node
############################
# Kubelet root directory
KUBELET_ROOT_DIR: "/var/lib/kubelet"

# maximum number of pods per node; can be raised depending on the node's capacity
MAX_PODS: 110

# resources reserved for the kube components (kubelet, kube-proxy, dockerd, etc.)
# see templates/kubelet-config.yaml.j2 for the values
KUBE_RESERVED_ENABLED: "no"

# upstream k8s advises against enabling system-reserved casually, unless long-term monitoring shows how the system uses resources;
# the reservation also needs to grow as the system runs longer; see templates/kubelet-config.yaml.j2 for the values
# the system reservation is sized for a 4c/8g VM with a minimal set of system services; increase it on high-end physical machines
# also, apiserver and other components briefly use a lot of resources during installation, so reserve at least 1g of memory
SYS_RESERVED_ENABLED: "no"

############################
# role:network [flannel,calico,cilium,kube-ovn,kube-router]
############################
# ------------------------------------------- flannel
# [flannel] flannel backend: "host-gw", "vxlan", etc.
FLANNEL_BACKEND: "vxlan"
DIRECT_ROUTING: false

# [flannel] flanneld_image: "quay.io/coreos/flannel:v0.10.0-amd64"
flannelVer: "v0.15.1"
flanneld_image: "easzlab.io.local:5000/easzlab/flannel:{{ flannelVer }}"

# ------------------------------------------- calico
# [calico] CALICO_IPV4POOL_IPIP="off" improves network performance, but nodes in different subnets can then no longer reach each other; see docs/setup/calico.md for the constraints
CALICO_IPV4POOL_IPIP: "Always"

# [calico] host IP used by calico-node; BGP peers are established over this address; set it manually or let it be auto-detected
IP_AUTODETECTION_METHOD: "can-reach={{ groups['kube_master'][0] }}"

# [calico] calico network backend: bird, vxlan, none
CALICO_NETWORKING_BACKEND: "bird"

# [calico] whether calico uses route reflectors
# recommended for clusters larger than 50 nodes
CALICO_RR_ENABLED: false

# CALICO_RR_NODES: nodes acting as route reflectors; defaults to the cluster master nodes if unset
# CALICO_RR_NODES: ["192.168.1.1", "192.168.1.2"]
CALICO_RR_NODES: []

# [calico] supported calico versions: [v3.3.x] [v3.4.x] [v3.8.x] [v3.15.x]
calico_ver: "v3.19.4"

# [calico] calico major version
calico_ver_main: "{{ calico_ver.split('.')[0] }}.{{ calico_ver.split('.')[1] }}"

# ------------------------------------------- cilium
# [cilium] image version
cilium_ver: "1.11.6"
cilium_connectivity_check: true
cilium_hubble_enabled: false
cilium_hubble_ui_enabled: false

# ------------------------------------------- kube-ovn
# [kube-ovn] node for the OVN DB and OVN Control Plane; defaults to the first master node
OVN_DB_NODE: "{{ groups['kube_master'][0] }}"

# [kube-ovn] offline image tarball
kube_ovn_ver: "v1.5.3"

# ------------------------------------------- kube-router
# [kube-router] public clouds have restrictions, so ipinip usually has to stay on; in your own environment it can be set to "subnet"
OVERLAY_TYPE: "full"

# [kube-router] NetworkPolicy support switch
FIREWALL_ENABLE: true

# [kube-router] kube-router image version
kube_router_ver: "v0.3.1"
busybox_ver: "1.28.4"

############################
# role:cluster-addon
############################
# install coredns automatically
dns_install: "yes"
corednsVer: "1.9.3"
ENABLE_LOCAL_DNS_CACHE: false
dnsNodeCacheVer: "1.21.1"
# local dns cache address
LOCAL_DNS_CACHE: "169.254.20.10"

# install metrics server automatically
metricsserver_install: "yes"
metricsVer: "v0.5.2"

# install dashboard automatically
dashboard_install: "yes"
dashboardVer: "v2.5.1"
dashboardMetricsScraperVer: "v1.0.8"

# install prometheus automatically
prom_install: "no"
prom_namespace: "monitor"
prom_chart_ver: "35.5.1"

# install nfs-provisioner automatically
nfs_provisioner_install: "no"
nfs_provisioner_namespace: "kube-system"
nfs_provisioner_ver: "v4.0.2"
nfs_storage_class: "managed-nfs-storage"
nfs_server: "192.168.1.10"
nfs_path: "/data/nfs"

# install network-check automatically
network_check_enabled: false
network_check_schedule: "*/5 * * * *"

############################
# role:harbor
############################
# harbor version, full version number
HARBOR_VER: "v2.1.3"
HARBOR_DOMAIN: "harbor.easzlab.io.local"
HARBOR_TLS_PORT: 8443

# if set 'false', you need to put certs named harbor.pem and harbor-key.pem in directory 'down'
HARBOR_SELF_SIGNED_CERT: true

# install extra component
HARBOR_WITH_NOTARY: false
HARBOR_WITH_TRIVY: false
HARBOR_WITH_CLAIR: false
HARBOR_WITH_CHARTMUSEUM: true
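NODE_CIDR_LEN and CLUSTER_CIDR together bound the cluster size. With CLUSTER_CIDR 10.200.0.0/16 and NODE_CIDR_LEN 24, the Pod range splits into 2^(24-16) = 256 node subnets of 2^(32-24) = 256 addresses each. That leaves comfortable headroom above MAX_PODS 110. The sketch below does this arithmetic, assuming per-node subnet allocation as done by kube-controller-manager, which flannel's --kube-subnet-mgr relies on (see the comment above). Calico's own IPAM hands out smaller address blocks and may not follow this split exactly. `pod_cidr_capacity` is a hypothetical helper.

```bash
#!/usr/bin/env bash
# Hypothetical helper: per-node subnet arithmetic for CLUSTER_CIDR and NODE_CIDR_LEN.
# pod_cidr_capacity <CLUSTER_CIDR prefix length> <NODE_CIDR_LEN>
pod_cidr_capacity() {
  local cluster_prefix="$1" node_len="$2"
  if (( node_len < cluster_prefix || node_len > 32 )); then
    echo "NODE_CIDR_LEN must be between the CLUSTER_CIDR prefix length and 32" >&2
    return 1
  fi
  # number of per-node subnets that fit into the cluster CIDR
  local nodes=$(( 1 << (node_len - cluster_prefix) ))
  # addresses in each per-node subnet
  local per_node=$(( 1 << (32 - node_len) ))
  echo "max_nodes=${nodes} addresses_per_node=${per_node}"
}
```

`pod_cidr_capacity 16 24` prints `max_nodes=256 addresses_per_node=256`. Raising NODE_CIDR_LEN to 26 allows more nodes but only 64 addresses per node, which is below MAX_PODS 110.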

Edit the containerd configuration template:

Note: in the lines you add, replace the registry address with your own private registry.

vim /etc/kubeasz/roles/containerd/templates/config.toml.j2

{% if ENABLE_MIRROR_REGISTRY %}
[plugins."io.containerd.grpc.v1.cri".registry.mirrors."docker.io"]
  endpoint = ["https://docker.mirrors.ustc.edu.cn", "http://hub-mirror.c.163.com"]
[plugins."io.containerd.grpc.v1.cri".registry.mirrors."gcr.io"]
  endpoint = ["https://gcr.mirrors.ustc.edu.cn"]
[plugins."io.containerd.grpc.v1.cri".registry.mirrors."k8s.gcr.io"]
  endpoint = ["https://gcr.mirrors.ustc.edu.cn/google-containers/"]
[plugins."io.containerd.grpc.v1.cri".registry.mirrors."quay.io"]
  endpoint = ["https://quay.mirrors.ustc.edu.cn"]
# add the following lines at this position, adjusted to your own registry address
[plugins."io.containerd.grpc.v1.cri".registry.mirrors."reg.zhangjw.com"]
  endpoint = ["https://reg.zhangjw.com"]
# TLS and credential settings belong under registry.configs, not registry.mirrors
[plugins."io.containerd.grpc.v1.cri".registry.configs."reg.zhangjw.com".tls]
  insecure_skip_verify = true
[plugins."io.containerd.grpc.v1.cri".registry.configs."reg.zhangjw.com".auth]
  username = "admin"
  password = "Harbor12345"
{% endif %}
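If more than one Harbor domain has to be trusted, you can generate the three tables per registry. This is a minimal sketch: `render_registry_toml` is hypothetical, and reg.example.com in the usage is a placeholder. It keeps `insecure_skip_verify = true` as in the template above, which is only appropriate for self-signed lab certificates.

```bash
#!/usr/bin/env bash
# Hypothetical helper: print the containerd CRI registry entries for one private registry.
# render_registry_toml <registry-host> <username> <password>
render_registry_toml() {
  local host="$1" user="$2" pass="$3"
  local p='plugins."io.containerd.grpc.v1.cri".registry'
  cat <<EOF
[${p}.mirrors."${host}"]
  endpoint = ["https://${host}"]
[${p}.configs."${host}".tls]
  insecure_skip_verify = true
[${p}.configs."${host}".auth]
  username = "${user}"
  password = "${pass}"
EOF
}
```

The output of, for example, `render_registry_toml reg.example.com admin '<password>'` would be pasted inside the `{% if ENABLE_MIRROR_REGISTRY %}` block before the runtime step is run.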

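Deploy the k8s cluster

# configuring a command alias is recommended for convenience

echo "alias dk='docker exec -it kubeasz'" >> /root/.bashrc

source /root/.bashrc

# one-step installation, equivalent to running docker exec -it kubeasz ezctl setup k8s-01 all

dk ezctl setup k8s-01 all    # for a first installation, installing step by step is recommended

# or install step by step; run dk ezctl help setup for details on each step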

# dk ezctl setup k8s-01 01

# dk ezctl setup k8s-01 02

# dk ezctl setup k8s-01 03

# dk ezctl setup k8s-01 04

The components installed by each step are:

--------------------------------------------------------------

Usage: ezctl setup <cluster> <step>

available steps:

01 prepare to prepare CA/certs & kubeconfig & other system settings

02 etcd to setup the etcd cluster

03 container-runtime to setup the container runtime(docker or containerd)

04 kube-master to setup the master nodes

05 kube-node to setup the worker nodes

06 network to setup the network plugin

07 cluster-addon to setup other useful plugins

90 all to run 01~07 all at once

10 ex-lb to install external loadbalance for accessing k8s from outside

11 harbor to install a new harbor server or to integrate with an existed one

--------------------------------------------------------------
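You can script the step-by-step route so that the run stops at the first failing step. This is a sketch only: `run_setup_steps` is a hypothetical helper, and you pass in as arguments the command that reaches ezctl.

```bash
#!/usr/bin/env bash
# Hypothetical helper: run the kubeasz setup steps 01-07 one by one and stop at
# the first failure. Everything after the cluster name is the command prefix
# used to reach ezctl (for example: docker exec -i kubeasz).
run_setup_steps() {
  local cluster="$1"
  shift
  if [ "$#" -eq 0 ]; then
    echo "usage: run_setup_steps <cluster> <command prefix...>" >&2
    return 2
  fi
  local step
  for step in 01 02 03 04 05 06 07; do
    echo ">>> ezctl setup ${cluster} ${step}"
    if ! "$@" ezctl setup "${cluster}" "${step}"; then
      echo "step ${step} failed; fix the cause and resume from this step" >&2
      return 1
    fi
  done
}
```

For example: `run_setup_steps k8s-01 docker exec -i kubeasz`. This uses `-i` rather than the `-it` from the alias, because `-t` needs a terminal, which non-interactive runs such as cron or CI do not have.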

01 Basic system settings

# base system configuration: loading kernel modules, ulimits, journald and kernel parameter tuning

dk ezctl setup <cluster-name> 01

02 Deploy the etcd cluster

dk ezctl setup <cluster-name> 02

# verify the etcd service on an etcd server

export NODE_IPS="192.168.2.137 192.168.2.138 192.168.2.139"

for ip in ${NODE_IPS}; do
  etcdctl --endpoints=https://${ip}:2379 \
    --cacert=/etc/kubernetes/ssl/ca.pem \
    --cert=/etc/kubernetes/ssl/etcd.pem \
    --key=/etc/kubernetes/ssl/etcd-key.pem \
    endpoint health
done

https://192.168.2.137:2379 is healthy: successfully committed proposal: took = 10.651582ms

https://192.168.2.138:2379 is healthy: successfully committed proposal: took = 74.296019ms

https://192.168.2.139:2379 is healthy: successfully committed proposal: took = 14.587119ms

Note: output like the above means the etcd cluster is healthy; anything else indicates a problem.
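Besides health, `etcdctl endpoint status` shows which member is the leader, and it accepts all members in a single `--endpoints` list. This is a small sketch: `etcd_endpoints` is a hypothetical helper that builds that list from the member IPs, and the certificate paths are the ones used above.

```bash
#!/usr/bin/env bash
# Hypothetical helper: join etcd member IPs into a comma-separated --endpoints list.
etcd_endpoints() {
  local ip out=""
  for ip in "$@"; do
    out="${out:+${out},}https://${ip}:2379"
  done
  echo "${out}"
}

# Usage on an etcd server:
#   etcdctl --endpoints="$(etcd_endpoints ${NODE_IPS})" \
#     --cacert=/etc/kubernetes/ssl/ca.pem --cert=/etc/kubernetes/ssl/etcd.pem \
#     --key=/etc/kubernetes/ssl/etcd-key.pem endpoint status --write-out=table
```

The table output contains an IS LEADER column, which should show exactly one `true` across the three members.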

03 Deploy the containerd runtime

dk ezctl setup <cluster-name> 03

# verify

root@ubuntu01:~# containerd -v

containerd github.com/containerd/containerd v1.6.4 212e8b6fa2f44b9c21b2798135fc6fb7c53efc16

Note: output like the above means containerd is installed; anything else indicates a problem.

04 Deploy the master nodes

dk ezctl setup <cluster-name> 04

# verify

root@ubuntu01:~# kubectl get node

NAME STATUS ROLES AGE VERSION

192.168.2.131 Ready,SchedulingDisabled master 3d2h v1.24.2

192.168.2.132 Ready,SchedulingDisabled master 3d2h v1.24.2

192.168.2.133 Ready,SchedulingDisabled master 3d2h v1.24.2

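Note: the masters are installed successfully when their STATUS is Ready. SchedulingDisabled is expected, because the masters are cordoned so that they do not run business Pods.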

05 Deploy the worker nodes

dk ezctl setup <cluster-name> 05

# verify

root@ubuntu01:~# kubectl get node

NAME STATUS ROLES AGE VERSION

192.168.2.131 Ready,SchedulingDisabled master 3d2h v1.24.2

192.168.2.132 Ready,SchedulingDisabled master 3d2h v1.24.2

192.168.2.133 Ready,SchedulingDisabled master 3d2h v1.24.2

192.168.2.134 Ready node 3d2h v1.24.2

192.168.2.135 Ready node 3d2h v1.24.2

Note: the worker nodes are installed successfully when their STATUS is Ready.

06 Deploy the calico network plugin

dk ezctl setup <cluster-name> 06

# verify the network

root@ubuntu01:~# calicoctl node status

Calico process is running.

IPv4 BGP status

+---------------+-------------------+-------+----------+-------------+
|  PEER ADDRESS |     PEER TYPE     | STATE |  SINCE   |    INFO     |
+---------------+-------------------+-------+----------+-------------+
| 192.168.2.132 | node-to-node mesh | up    | 13:17:43 | Established |
| 192.168.2.133 | node-to-node mesh | up    | 14:10:34 | Established |
+---------------+-------------------+-------+----------+-------------+

IPv6 BGP status

No IPv6 peers found.

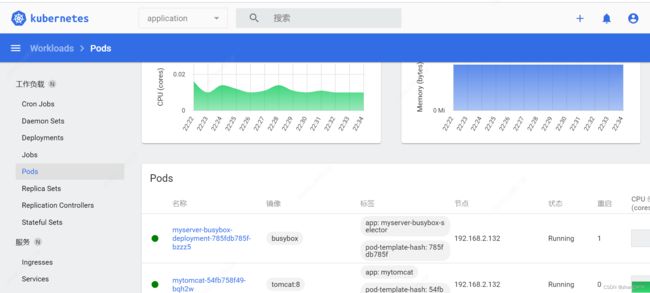

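07 Deploy the add-on components

These include CoreDNS (Service name resolution), metrics-server (resource usage of nodes and Pods) and the dashboard (a graphical management tool).

dk ezctl setup <cluster-name> 07

# verify the components

root@ubuntu01:~# kubectl get pod -A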

kube-system coredns-84b58f6b4-mjv7q 1/1 Running 2 (67m ago) 3d2h

kube-system dashboard-metrics-scraper-864d79d497-p4dp4 1/1 Running 0 60m

kube-system kubernetes-dashboard-5fc74cf5c6-p858p 1/1 Running 22 (65m ago) 3d2h

kube-system metrics-server-69797698d4-8rzkv 1/1 Running 0 60m

Note: the components are deployed successfully when all Pods are Running. Otherwise, find the cause with kubectl describe pod <podName> -n <namespace>.

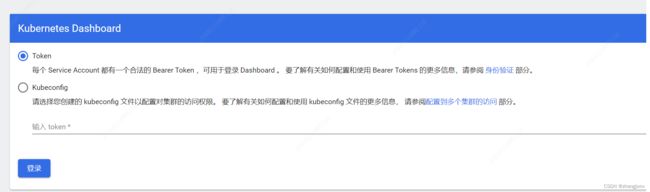

1. Verify the dashboard (get the login token of the admin-user service account)

root@ubuntu01:~# kubectl describe secrets admin-user -n kube-system

Name: admin-user

Namespace: kube-system

Labels: <none>

Annotations: kubernetes.io/service-account.name: admin-user

kubernetes.io/service-account.uid: f2db0eaf-43c5-47ea-b4fb-7d6e7d44c0cc

Type: kubernetes.io/service-account-token

Data

====

token: eyJhbGciOiJSUzI1NiIsImtpZCI6IkFpZXZzbmtoRDZxS251bmFoc25FNFMybU94d2RZblRCdFpVNzJ5eW1Nek0ifQ.eyJpc3MiOiJrdWJlcm5ldGVzL3NlcnZpY2VhY2NvdW50Iiwia3ViZXJuZXRlcy5pby9zZXJ2aWNlYWNjb3VudC9uYW1lc3BhY2UiOiJrdWJlLXN5c3RlbSIsImt1YmVybmV0ZXMuaW8vc2VydmljZWFjY291bnQvc2VjcmV0Lm5hbWUiOiJhZG1pbi11c2VyIiwia3ViZXJuZXRlcy5pby9zZXJ2aWNlYWNjb3VudC9zZXJ2aWNlLWFjY291bnQubmFtZSI6ImFkbWluLXVzZXIiLCJrdWJlcm5ldGVzLmlvL3NlcnZpY2VhY2NvdW50L3NlcnZpY2UtYWNjb3VudC51aWQiOiJmMmRiMGVhZi00M2M1LTQ3ZWEtYjRmYi03ZDZlN2Q0NGMwY2MiLCJzdWIiOiJzeXN0ZW06c2VydmljZWFjY291bnQ6a3ViZS1zeXN0ZW06YWRtaW4tdXNlciJ9.RM1nJ7pxpWVOmGWoAJ0nfsecn4Ush7rtWL8xTP-DoBR0MqkANWY5QO5bgxlKe4hAZTh8NHrLq35EUG5EqZnzySsg15kxJnVA1dZmwwIqYvXrglcHgZTTbQX3fO6BzoXkbb9c2BN3UcuNGeA4Dp0eHPE2LRyckvA6nuOPJHtBNvczmD2_e0SA1ENWAKS02zBHQ21uWlTGgMYoVzkvp1Au0HJw1PdaeqvucGgkDehJkmqEfLWMrxtJt1sXQt1WrQbYvsGZQj8guOL-CnCSIMGQ4Y_JBQ-htDzlIXkALAatN-PxTCBgVJNB1eWuJSGktKvyVDU9ldYH-yy4nqhWv7Y-gg

ca.crt: 1302 bytes

namespace: 11 bytes
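The token above is a JWT whose middle segment is base64url-encoded JSON, so you can inspect it locally before pasting it into the dashboard login page. This is a sketch: `jwt_payload` is hypothetical and uses `base64` from GNU coreutils. It only decodes the payload and does not verify the signature. On Kubernetes 1.24 you can also request a short-lived token for the same account with `kubectl create token admin-user -n kube-system`.

```bash
#!/usr/bin/env bash
# Hypothetical helper: print the JSON payload of a JWT (no signature check).
# Needs base64 from GNU coreutils.
jwt_payload() {
  local seg
  # take the middle segment and map base64url characters back to standard base64
  seg=$(cut -d. -f2 <<< "$1" | tr '_-' '/+')
  # base64url omits padding; restore it to a multiple of 4 characters
  while (( ${#seg} % 4 )); do
    seg="${seg}="
  done
  base64 -d <<< "${seg}"
}
```

For example: `jwt_payload "$(kubectl get secret admin-user -n kube-system -o jsonpath='{.data.token}' | base64 -d)"`. The decoded claims should name the admin-user service account in kube-system.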

2. Verify metrics-server

# resource usage of the nodes

root@ubuntu01:~# kubectl top node

NAME CPU(cores) CPU% MEMORY(bytes) MEMORY%

192.168.2.131 373m 18% 1582Mi 43%

192.168.2.132 326m 16% 1131Mi 31%

192.168.2.133 184m 9% 490Mi 13%

# resource usage of a specific Pod

root@ubuntu01:~# kubectl top pod mytomcat-54fb758f49-5rzcs -n application

NAME CPU(cores) MEMORY(bytes)

mytomcat-54fb758f49-5rzcs 2m 82Mi

root@ubuntu01:~#

Note: if resource usage is returned as above, metrics-server is installed correctly.

3. Verify CoreDNS

root@ubuntu01:/app# kubectl exec -it myserver-centos-deployment-5564dff7bb-k4v8f -n application -- bash

[root@myserver-centos-deployment-5564dff7bb-k4v8f /]# ping www.baidu.com

PING www.wshifen.com (103.235.46.40) 56(84) bytes of data.

64 bytes from 103.235.46.40 (103.235.46.40): icmp_seq=1 ttl=127 time=74.4 ms

64 bytes from 103.235.46.40 (103.235.46.40): icmp_seq=2 ttl=127 time=76.9 ms

64 bytes from 103.235.46.40 (103.235.46.40): icmp_seq=3 ttl=127 time=75.0 ms

64 bytes from 103.235.46.40 (103.235.46.40): icmp_seq=4 ttl=127 time=77.3 ms

[root@myserver-centos-deployment-5564dff7bb-k4v8f /]# nslookup kubernetes

Server: 10.100.0.2

Address: 10.100.0.2#53

** server can't find kubernetes: NXDOMAIN

[root@myserver-centos-deployment-5564dff7bb-k4v8f /]# nslookup kubernetes.default.svc.cluster.local

Server: 10.100.0.2

Address: 10.100.0.2#53

Name: kubernetes.default.svc.cluster.local

Address: 10.100.0.1

# list the Service IPs

root@ubuntu01:/app# kubectl get svc -A

NAMESPACE NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

application myserver-nginx-service NodePort 10.100.159.146 <none> 80:30004/TCP,443:30443/TCP 2d13h

application mytomcat NodePort 10.100.226.164 <none> 8080:30012/TCP 2d11h

default kubernetes ClusterIP 10.100.0.1 <none> 443/TCP 3d3h

kube-system dashboard-metrics-scraper ClusterIP 10.100.175.99 <none> 8000/TCP 3d3h

kube-system kube-dns ClusterIP 10.100.0.2 <none> 53/UDP,53/TCP,9153/TCP 3d3h

kube-system kubernetes-dashboard NodePort 10.100.169.151 <none> 443:33271/TCP 3d3h

kube-system metrics-server ClusterIP 10.100.133.221 <none> 443/TCP 3d3h

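This confirms that CoreDNS name resolution works. kube-dns answers from 10.100.0.2, and kubernetes.default.svc.cluster.local resolves to 10.100.0.1, the ClusterIP of the kubernetes Service. The NXDOMAIN for the short name kubernetes is expected: the Pod runs in the application namespace, so the search list expands the name to kubernetes.application.svc.cluster.local, which does not exist. Use kubernetes.default or the full name instead.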
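The following sketch models that search-list expansion. It assumes the usual Pod resolv.conf generated by kubelet: the search list `<ns>.svc.cluster.local svc.cluster.local cluster.local` with `ndots:5`. Extra search domains inherited from the node are ignored. `dns_candidates` is a hypothetical helper that prints the names in the order the resolver tries them.

```bash
#!/usr/bin/env bash
# Hypothetical helper: list the names a Pod's resolver tries, in order, for a query.
# Assumes "search <ns>.svc.<domain> svc.<domain> <domain>" and "options ndots:5".
dns_candidates() {
  local name="$1" ns="$2" domain="${3:-cluster.local}"
  # a trailing dot marks an absolute name: the search list is not used
  if [[ "${name}" == *. ]]; then
    echo "${name}"
    return 0
  fi
  local dots="${name//[^.]/}"
  # names with at least ndots (5) dots are tried as-is first
  if (( ${#dots} >= 5 )); then
    echo "${name}"
  fi
  local suffix
  for suffix in "${ns}.svc.${domain}" "svc.${domain}" "${domain}"; do
    echo "${name}.${suffix}"
  done
  # names with fewer dots are tried as-is last
  if (( ${#dots} < 5 )); then
    echo "${name}"
  fi
}
```

For `dns_candidates kubernetes application`, none of the four candidates exists, hence NXDOMAIN. For `kubernetes.default`, the second candidate, kubernetes.default.svc.cluster.local, matches.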

Deploy a graphical management dashboard

# install rancher

docker run -d --privileged --name rancher -v /data/rancher_data:/var/lib/rancher --restart=unless-stopped -p 80:80 -p 443:443 rancher/rancher:stable

# install kuboard (an alternative to rancher; both publish host port 80, so run only one of them per host or change the host port;
# KUBOARD_ENDPOINT must be the address of the host running kuboard)

sudo docker run -d \
  --restart=unless-stopped \
  --name=kuboard \
  -p 80:80/tcp \
  -p 10081:10081/tcp \
  -e KUBOARD_ENDPOINT="http://192.168.2.131:80" \
  -e KUBOARD_AGENT_SERVER_TCP_PORT="10081" \
  -v /root/kuboard-data:/data \
  swr.cn-east-2.myhuaweicloud.com/kuboard/kuboard:v3

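Scaling cluster nodes

Cluster management mainly covers adding and removing master and worker nodes, and monitoring and alerting on the cluster state.

Add a master node

Main steps:

# passwordless SSH login

$ ssh-copy-id <new-master-ip>

# some operating systems need a python symlink

$ ssh <new-master-ip> ln -s /usr/bin/python3 /usr/bin/python

# add the node

$ ezctl add-master <cluster-name> <new-master-ip>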

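Service verification:

# check the master services on the new node

$ systemctl status kube-apiserver

$ systemctl status kube-controller-manager

$ systemctl status kube-scheduler

A component is healthy when its status shows active (running).

Remove a master node

$ ezctl del-master <cluster-name> <master-ip-to-remove>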

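Add a worker node

# passwordless SSH login

$ ssh-copy-id <new-node-ip>

# some operating systems need a python symlink

$ ssh <new-node-ip> ln -s /usr/bin/python3 /usr/bin/python

# add the node

$ ezctl add-node <cluster-name> <new-node-ip>

Service verification:

# check the status of the new node

$ kubectl get node

# check the calico Pod on the new node; Running means success

$ kubectl get pod -n kube-system

Remove a worker node

$ ezctl del-node <cluster-name> <node-ip-to-remove>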
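The add-master and add-node procedures share the same three commands, so you can wrap them in one helper. This is a sketch: `add_k8s_host` is hypothetical, and it defaults to a dry run that only prints the commands. It assumes `ezctl` is reachable the same way as in the commands above (for example inside the kubeasz container). The delete commands are not wrapped.

```bash
#!/usr/bin/env bash
# Hypothetical helper wrapping the add-master / add-node steps above.
# DRY_RUN=1 (the default) only prints the commands; DRY_RUN=0 runs them.
# add_k8s_host <cluster-name> <ip> [node|master]
add_k8s_host() {
  local cluster="$1" ip="$2" role="${3:-node}" p=""
  case "${role}" in
    node|master) ;;
    *) echo "role must be node or master" >&2; return 1 ;;
  esac
  if [ "${DRY_RUN:-1}" = "1" ]; then p=echo; fi
  ${p} ssh-copy-id "${ip}" || return 1
  ${p} ssh "${ip}" ln -sf /usr/bin/python3 /usr/bin/python || return 1
  ${p} ezctl "add-${role}" "${cluster}" "${ip}"
}
```

For example, `add_k8s_host k8s-01 192.168.2.136` previews the steps for a new worker, and `DRY_RUN=0 add_k8s_host k8s-01 192.168.2.136` runs them.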

Version upgrade

Download the upgrade packages

Example: upgrading from 1.24.2 to 1.24.3

Download links are listed in the CHANGELOG: https://github.com/kubernetes/kubernetes/blob/master/CHANGELOG

1. Download kubernetes.tar.gz

https://dl.k8s.io/v1.24.3/kubernetes.tar.gz

2. Download kubernetes-client-linux-amd64.tar.gz

https://dl.k8s.io/v1.24.3/kubernetes-client-linux-amd64.tar.gz

3. Download kubernetes-server-linux-amd64.tar.gz

https://dl.k8s.io/v1.24.3/kubernetes-server-linux-amd64.tar.gz

4. Download kubernetes-node-linux-amd64.tar.gz

https://dl.k8s.io/v1.24.3/kubernetes-node-linux-amd64.tar.gz
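The four packages differ only in version and architecture, and the CHANGELOG lists a SHA512 hash for each file. You can therefore script both the download and the verification. This is a sketch: `k8s_release_urls` and `verify_sha512` are hypothetical helpers. The expected hash is whatever you copy from the CHANGELOG for that file.

```bash
#!/usr/bin/env bash
# Hypothetical helpers for fetching and checking the release packages.

# Print the four package URLs for a version (e.g. v1.24.3) and architecture.
k8s_release_urls() {
  local ver="$1" arch="${2:-amd64}" pkg
  for pkg in kubernetes.tar.gz \
      "kubernetes-client-linux-${arch}.tar.gz" \
      "kubernetes-server-linux-${arch}.tar.gz" \
      "kubernetes-node-linux-${arch}.tar.gz"; do
    echo "https://dl.k8s.io/${ver}/${pkg}"
  done
}

# Return 0 if <file> matches the SHA512 hash copied from the CHANGELOG.
verify_sha512() {
  local file="$1" expected="$2"
  echo "${expected}  ${file}" | sha512sum -c --status -
}

# Example:
#   for u in $(k8s_release_urls v1.24.3); do wget -q "$u"; done
#   verify_sha512 kubernetes-server-linux-amd64.tar.gz '<sha512 from the CHANGELOG>'
```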

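Upgrade the master nodes

1. Upgrade the masters one at a time, and first take the master being upgraded out of load balancing. For example, before upgrading 192.168.2.131, comment out its backend so that no requests are forwarded to it. kube-lb is a local nginx proxy listening on 127.0.0.1:6443 on each node, so make this change on every node that runs it.

vim /etc/kube-lb/conf/kube-lb.conf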

--------------------------------------------------------------------------------

user root;
worker_processes 1;

error_log /etc/kube-lb/logs/error.log warn;

events {
    worker_connections 3000;
}

stream {
    upstream backend {
        #server 192.168.2.131:6443 max_fails=2 fail_timeout=3s;
        server 192.168.2.132:6443 max_fails=2 fail_timeout=3s;
        server 192.168.2.133:6443 max_fails=2 fail_timeout=3s;
    }

    server {
        listen 127.0.0.1:6443;
        proxy_connect_timeout 1s;
        proxy_pass backend;
    }
}

----------------------------------------------------------------------------

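2. Reload the nginx (kube-lb) configuration

systemctl reload kube-lb.service

3. Stop the services

systemctl stop kube-apiserver kube-controller-manager kube-proxy kube-scheduler kubelet

4. Copy the new binaries (from kubernetes/server/bin/ in the extracted kubernetes-server-linux-amd64.tar.gz)

scp kube-apiserver kube-controller-manager kube-proxy kube-scheduler kubelet kubectl masterIP:/usr/local/bin/

5. Start the services

systemctl start kube-apiserver kube-controller-manager kube-proxy kube-scheduler kubelet

6. Check the node with kubectl get node, then uncomment its server line in kube-lb.conf and run systemctl reload kube-lb.service again before moving on to the next master.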
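Commenting the backend out by hand on every node is easy to get wrong, so you can script the edit with sed. This is a sketch: `lb_backend` is hypothetical and assumes GNU sed and the kube-lb.conf layout shown above. It is idempotent, so disabling an already-disabled server changes nothing. Dots in the IP are not escaped in the regex. That is harmless here, because the match is pinned by `:6443` and the following space.

```bash
#!/usr/bin/env bash
# Hypothetical helper: comment out (disable) or restore (enable) one apiserver
# backend line in kube-lb.conf.
# lb_backend disable|enable <ip> [conf]
lb_backend() {
  local action="$1" ip="$2" conf="${3:-/etc/kube-lb/conf/kube-lb.conf}"
  case "${action}" in
    disable) sed -i -E "s/^([[:space:]]*)(server ${ip}:6443 )/\1#\2/" "${conf}" ;;
    enable)  sed -i -E "s/^([[:space:]]*)#(server ${ip}:6443 )/\1\2/" "${conf}" ;;
    *) echo "usage: lb_backend disable|enable <ip> [conf]" >&2; return 1 ;;
  esac
}
```

On every node, run `lb_backend disable 192.168.2.131 && systemctl reload kube-lb.service` before the upgrade. Afterwards, run `lb_backend enable 192.168.2.131 && systemctl reload kube-lb.service`.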

Upgrade the worker nodes

1. Evict the Pods from the node

kubectl drain nodeIP --ignore-daemonsets --delete-emptydir-data

2. Stop the services

systemctl stop kubelet kube-proxy

3. Copy the binaries needed for the upgrade

scp kubelet kube-proxy nodeIP:/usr/local/bin/

4. Start the services and enable them at boot

systemctl enable --now kubelet kube-proxy

5. Make the node schedulable again

kubectl uncordon nodeIP
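The five worker-node steps can be chained so that a failure stops the sequence before the node is uncordoned. This is a sketch: `upgrade_node` is hypothetical and defaults to a dry run. It assumes the node name equals its IP, as in the `kubectl get node` output above, and that the new binaries are in `kubernetes/server/bin` of the extracted server package.

```bash
#!/usr/bin/env bash
# Hypothetical helper: drain, update and uncordon one worker node.
# DRY_RUN=1 (the default) only prints the commands; DRY_RUN=0 runs them.
# upgrade_node <node-ip> [binary-dir]
upgrade_node() {
  local node="$1" bin_dir="${2:-kubernetes/server/bin}" p=""
  if [ "${DRY_RUN:-1}" = "1" ]; then p=echo; fi
  ${p} kubectl drain "${node}" --ignore-daemonsets --delete-emptydir-data || return 1
  ${p} ssh "${node}" systemctl stop kubelet kube-proxy || return 1
  ${p} scp "${bin_dir}/kubelet" "${bin_dir}/kube-proxy" "${node}:/usr/local/bin/" || return 1
  ${p} ssh "${node}" systemctl enable --now kubelet kube-proxy || return 1
  ${p} kubectl uncordon "${node}"
}
```

Upgrade the nodes one after another, for example `DRY_RUN=0 upgrade_node 192.168.2.134`. Wait until the node shows the new VERSION in `kubectl get node` before continuing.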