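Hadoop Big Data Framework Study (4): Hive Environment Deployment and Usage

I recently deployed and tested the big data frameworks of the Hadoop ecosystem hands-on, and used them together with the vector big data analysis products of the ArcGIS platform for spatial data mining and analysis. This blog series organizes and summarizes that work in detail, for mutual exchange and learning.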

A. MySQL Installation and Configuration

1. Download the MySQL repository package

[root@node1 ~]# wget http://dev.mysql.com/get/mysql57-community-release-el7-8.noarch.rpm

2. Install the MySQL repository

[root@node1 ~]# yum localinstall mysql57-community-release-el7-8.noarch.rpm

3. Check that the repository was installed successfully

[root@node1 ~]# yum repolist enabled | grep "mysql.*-community.*"

!mysql-connectors-community/x86_64 MySQL Connectors Community 45

!mysql-tools-community/x86_64 MySQL Tools Community 57

!mysql57-community/x86_64 MySQL 5.7 Community Server 247

4. Install the MySQL server

[root@node1 ~]# yum install mysql-community-server

5. Start MySQL

[root@node1 ~]# systemctl start mysqld

6. Check the MySQL service status

[root@node1 ~]# systemctl status mysqld

● mysqld.service - MySQL Server

Loaded: loaded (/usr/lib/systemd/system/mysqld.service; enabled; vendor preset: disabled)

Active: active (running) since Thu 2018-02-22 10:07:40 CST; 2 days ago

Docs: man:mysqld(8)

http://dev.mysql.com/doc/refman/en/using-systemd.html

Process: 1597 ExecStart=/usr/sbin/mysqld --daemonize --pid-file=/var/run/mysqld/mysqld.pid $MYSQLD_OPTS (code=exited, status=0/SUCCESS)

Process: 1109 ExecStartPre=/usr/bin/mysqld_pre_systemd (code=exited, status=0/SUCCESS)

Main PID: 1601 (mysqld)

CGroup: /system.slice/mysqld.service

└─1601 /usr/sbin/mysqld --daemonize --pid-file=/var/run/mysql...

Feb 22 10:07:26 node1.gisxy.com systemd[1]: Starting MySQL Server...

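Feb 22 10:07:40 node1.gisxy.com systemd[1]: Started MySQL Server.

7. Change the password policy and the default character set (utf8)

Add the validate_password_policy setting to /etc/my.cnf to specify the password policy:

# Choose 0 (LOW), 1 (MEDIUM), or 2 (STRONG)

#validate_password_policy=0

If no password policy is needed, add the following setting to disable it:

validate_password = off

To change the default character set:

[mysqld]

character_set_server=utf8

init_connect='SET NAMES utf8'

Restart MySQL so the changes take effect, then check the resulting configuration file:

[root@node1 ~]# systemctl restart mysqld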

[root@node1 ~]# cat /etc/my.cnf

# For advice on how to change settings please see

# http://dev.mysql.com/doc/refman/5.7/en/server-configuration-defaults.html

[mysqld]

character_set_server=utf8

init_connect='SET NAMES utf8'

#

# Remove leading # and set to the amount of RAM for the most important data

# cache in MySQL. Start at 70% of total RAM for dedicated server, else 10%.

# innodb_buffer_pool_size = 128M

#

# Remove leading # to turn on a very important data integrity option: logging

# changes to the binary log between backups.

# log_bin

#

# Remove leading # to set options mainly useful for reporting servers.

# The server defaults are faster for transactions and fast SELECTs.

# Adjust sizes as needed, experiment to find the optimal values.

# join_buffer_size = 128M

# sort_buffer_size = 2M

# read_rnd_buffer_size = 2M

datadir=/var/lib/mysql

socket=/var/lib/mysql/mysql.sock

# Disabling symbolic-links is recommended to prevent assorted security risks

symbolic-links=0

log-error=/var/log/mysqld.log

pid-file=/var/run/mysqld/mysqld.pid

#validate_password_policy=0

validate_password = off

8. Look up the default password generated for the root user

[root@node1 ~]# grep 'temporary password' /var/log/mysqld.log

2018-02-13T04:28:13.451771Z 1 [Note] A temporary password is generated for root@localhost: 7_L3ftL%drj6

9. Log in with the default password and change it

[root@node1 ~]# mysql -u root -p

Enter password:

Welcome to the MySQL monitor. Commands end with ; or \g.

Your MySQL connection id is 3

Server version: 5.7.21 MySQL Community Server (GPL)

Copyright (c) 2000, 2018, Oracle and/or its affiliates. All rights reserved.

Oracle is a registered trademark of Oracle Corporation and/or its

affiliates. Other names may be trademarks of their respective

owners.

Type 'help;' or '\h' for help. Type '\c' to clear the current input statement.

mysql> set password for 'root'@'localhost'=password('arcgis');

10. Configure remote connections

mysql> grant all privileges on *.* to 'hadoop'@'%' identified by 'arcgis';

Query OK, 0 rows affected, 1 warning (0.00 sec)

This grants all privileges (all privileges) on all tables of all databases (*.*) to the hadoop user connecting from any IP (%), with the password arcgis. To allow access from one specific machine only, replace % with that machine's IP address.
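To make the last point concrete, here is a minimal sketch of the same grant limited to a single client machine. The address 192.168.0.153 is only an illustrative assumption (it is one of the client IPs that shows up in the metastore log later in this post), and the file name grant_hadoop.sql is hypothetical. The script only writes the statement to a file so it can be reviewed before being passed to mysql.

```shell
#!/usr/bin/env bash
# Sketch: generate a GRANT statement restricted to one client host.
# ALLOWED_HOST and SQL_FILE are illustrative assumptions, not values from the original setup.
ALLOWED_HOST="192.168.0.153"
SQL_FILE="${TMPDIR:-/tmp}/grant_hadoop.sql"

cat > "$SQL_FILE" <<EOF
-- Same privileges as the '%' grant, but only for connections from ${ALLOWED_HOST}
GRANT ALL PRIVILEGES ON *.* TO 'hadoop'@'${ALLOWED_HOST}' IDENTIFIED BY 'arcgis';
EOF

cat "$SQL_FILE"
# After reviewing the file, apply it with: mysql -u root -p < "$SQL_FILE"
```

Note that Hive itself connects to MySQL from node1, so in a setup like this one the metastore host would also need a matching grant.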

B. Hive Deployment and Configuration

1. Download apache-hive-2.3.2-bin.tar.gz:

http://mirror.bit.edu.cn/apache/hive/stable-2/apache-hive-2.3.2-bin.tar.gz

2. Copy the Hive archive to /home/hadoop/hadoop/ and extract it there

3. Edit the configuration files

[hadoop@node1 hadoop]$ cd apache-hive-2.3.2-bin/conf

[hadoop@node1 conf]$ cp hive-env.sh.template hive-env.sh

a.hive-env.sh

[hadoop@node1 conf]$ cat hive-env.sh

# The heap size of the jvm stared by hive shell script can be controlled via:

# export HADOOP_HEAPSIZE=1024

# Set HADOOP_HOME to point to a specific hadoop install directory

HADOOP_HOME=/home/hadoop/hadoop/hadoop-2.7.5

# Hive Configuration Directory can be controlled by:

export HIVE_CONF_DIR=/home/hadoop/hadoop/apache-hive-2.3.2-bin/conf

# Folder containing extra libraries required for hive compilation/execution can be controlled by:

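export HIVE_AUX_JARS_PATH=/home/hadoop/hadoop/apache-hive-2.3.2-bin/lib

b.hive-site.xml

[hadoop@node1 conf]$ cat hive-site.xml

<configuration>
  <property>
    <name>hive.metastore.warehouse.dir</name>
    <value>/user/hive/warehouse</value>
  </property>
  <property>
    <name>hive.exec.scratchdir</name>
    <value>/tmp/hive</value>
  </property>
  <property>
    <name>javax.jdo.option.ConnectionURL</name>
    <value>jdbc:mysql://node1.gisxy.com:3306/hive?createDatabaseIfNotExist=true&amp;characterEncoding=UTF-8&amp;useSSL=false</value>
  </property>
  <property>
    <name>javax.jdo.option.ConnectionDriverName</name>
    <value>com.mysql.jdbc.Driver</value>
  </property>
  <property>
    <name>javax.jdo.option.ConnectionUserName</name>
    <value>hadoop</value>
  </property>
  <property>
    <name>javax.jdo.option.ConnectionPassword</name>
    <value>arcgis</value>
  </property>
  <property>
    <name>hive.metastore.schema.verification</name>
    <value>false</value>
  </property>
  <property>
    <name>hive.metastore.local</name>
    <value>false</value>
  </property>
  <property>
    <name>hive.metastore.uris</name>
    <value>thrift://node1.gisxy.com:9083</value>
  </property>
</configuration>

Note: the metastore log later in this post repeatedly warns "HiveConf of name hive.metastore.local does not exist". Hive 2.3.2 no longer recognizes this property, so it can be left out.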

4. Create the HDFS directories and make sure their permissions are correct

[hadoop@node1 conf]$ hadoop fs -mkdir -p /user/hive/warehouse

[hadoop@node1 conf]$ hadoop fs -mkdir -p /tmp/hive
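The step title mentions permissions, but the commands above only create the directories. The Hive getting-started guide suggests making such directories group-writable; the sketch below assumes that convention suits this cluster. The function name and the DRY_RUN switch are conveniences added here. By default it only prints the commands; set DRY_RUN=0 on a node where the hadoop command is available to actually run them.

```shell
#!/usr/bin/env bash
# Sketch: make the Hive warehouse and scratch directories group-writable.
# DRY_RUN=1 (the default) only prints each command instead of running it.

run() {
  if [ "${DRY_RUN:-1}" = "1" ]; then
    echo "+ $*"
  else
    "$@"
  fi
}

fix_hive_dir_permissions() {
  # The two paths match hive.metastore.warehouse.dir and hive.exec.scratchdir.
  for dir in /user/hive/warehouse /tmp/hive; do
    run hadoop fs -chmod g+w "$dir"
  done
}

fix_hive_dir_permissions
```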

5. Copy the MySQL JDBC driver jar (mysql-connector-java-5.1.44-bin.jar) into the $HIVE_HOME/lib directory

6. Initialize the metastore database

[hadoop@node1 bin]$ ./schematool -initSchema -dbType mysql

7. Start hiveserver2 and the metastore, then test

[hadoop@node1 bin]$ ./hive --service hiveserver2

which: no hbase in (/usr/local/jdk1.8.0_151/bin:/usr/local/bin:/usr/bin:/usr/local/sbin:/usr/sbin:/home/hadoop/hadoop/hadoop-2.7.5/sbin:/home/hadoop/hadoop/hadoop-2.7.5/bin:/home/hadoop/hadoop/scala-2.11.11/bin:/home/hadoop/.local/bin:/home/hadoop/bin)

2018-03-16 09:48:35: Starting HiveServer2

[hadoop@node1 bin]$ ./hive --service metastore

2018-03-16 09:50:18: Starting Hive Metastore Server

./ext/metastore.sh: line 29: export: ` -Dproc_metastore -Dlog4j.configurationFile=hive-log4j2.properties -Djava.util.logging.config.file=/home/hadoop/hadoop/apache-hive-2.3.2-bin/conf/parquet-logging.properties ': not a valid identifier

2018-03-16T09:50:21,309 INFO [main] org.apache.hadoop.hive.conf.HiveConf - Found configuration file file:/home/hadoop/hadoop/apache-hive-2.3.2-bin/conf/hive-site.xml

2018-03-16T09:50:22,611 WARN [main] org.apache.hadoop.util.NativeCodeLoader - Unable to load native-hadoop library for your platform... using builtin-java classes where applicable

2018-03-16T09:50:22,766 WARN [main] org.apache.hadoop.hive.conf.HiveConf - HiveConf of name hive.metastore.local does not exist

2018-03-16T09:50:22,992 WARN [main] org.apache.hadoop.hive.conf.HiveConf - HiveConf of name hive.metastore.local does not exist

2018-03-16T09:50:24,475 INFO [main] org.apache.hadoop.hive.metastore.HiveMetaStore - STARTUP_MSG:

/************************************************************

STARTUP_MSG: Starting HiveMetaStore

STARTUP_MSG: host = node1.gisxy.com/192.168.0.171

STARTUP_MSG: args = []

STARTUP_MSG: version = 2.3.2

STARTUP_MSG: classpath = …………………………………………

************************************************************/

2018-03-16T09:50:24,537 INFO [main] org.apache.hadoop.hive.metastore.HiveMetaStore - Starting hive metastore on port 9083

2018-03-16T09:50:24,846 WARN [main] org.apache.hadoop.hive.conf.HiveConf - HiveConf of name hive.metastore.local does not exist

2018-03-16T09:50:24,846 INFO [main] org.apache.hadoop.hive.metastore.HiveMetaStore - 0: Opening raw store with implementation class:org.apache.hadoop.hive.metastore.ObjectStore

2018-03-16T09:50:26,468 WARN [main] org.apache.hadoop.hive.conf.HiveConf - HiveConf of name hive.metastore.local does not exist

2018-03-16T09:50:28,909 INFO [main] org.apache.hadoop.hive.metastore.HiveMetaStore - Added admin role in metastore

2018-03-16T09:50:28,912 INFO [main] org.apache.hadoop.hive.metastore.HiveMetaStore - Added public role in metastore

2018-03-16T09:50:28,975 INFO [main] org.apache.hadoop.hive.metastore.HiveMetaStore - No user is added in admin role, since config is empty

2018-03-16T09:50:29,284 INFO [main] org.apache.hadoop.hive.metastore.HiveMetaStore - Starting DB backed MetaStore Server with SetUGI enabled

2018-03-16T09:50:29,309 INFO [main] org.apache.hadoop.hive.metastore.HiveMetaStore - Started the new metaserver on port [9083]...

2018-03-16T09:50:29,309 INFO [main] org.apache.hadoop.hive.metastore.HiveMetaStore - Options.minWorkerThreads = 200

2018-03-16T09:50:29,309 INFO [main] org.apache.hadoop.hive.metastore.HiveMetaStore - Options.maxWorkerThreads = 1000

2018-03-16T09:50:29,309 INFO [main] org.apache.hadoop.hive.metastore.HiveMetaStore - TCP keepalive = true

2018-03-16T09:50:49,758 INFO [pool-7-thread-1] org.apache.hadoop.hive.metastore.HiveMetaStore - 1: source:192.168.0.171 get_all_functions

2018-03-16T09:50:49,982 WARN [pool-7-thread-1] org.apache.hadoop.hive.conf.HiveConf - HiveConf of name hive.metastore.local does not exist

2018-03-16T09:50:49,982 INFO [pool-7-thread-1] org.apache.hadoop.hive.metastore.HiveMetaStore - 1: Opening raw store with implementation class:org.apache.hadoop.hive.metastore.ObjectStore

2018-03-16T09:50:50,203 INFO [pool-7-thread-1] org.apache.hadoop.hive.metastore.HiveMetaStore - 1: source:192.168.0.171 get_all_databases

2018-03-16T09:50:50,272 INFO [pool-7-thread-1] org.apache.hadoop.hive.metastore.HiveMetaStore - 1: source:192.168.0.171 get_all_tables: db=db_hive_test

2018-03-16T09:50:50,367 INFO [pool-7-thread-1] org.apache.hadoop.hive.metastore.HiveMetaStore - 1: source:192.168.0.171 get_multi_table : db=db_hive_test tbls=student,test

2018-03-16T09:50:50,699 INFO [pool-7-thread-1] org.apache.hadoop.hive.metastore.HiveMetaStore - 1: source:192.168.0.171 get_all_tables: db=default

2018-03-16T09:50:50,709 INFO [pool-7-thread-1] org.apache.hadoop.hive.metastore.HiveMetaStore - 1: source:192.168.0.171 get_multi_table : db=default tbls=

2018-03-16T09:53:03,203 INFO [pool-7-thread-2] org.apache.hadoop.hive.metastore.HiveMetaStore - 2: source:192.168.0.153 get_all_databases

2018-03-16T09:53:03,340 WARN [pool-7-thread-2] org.apache.hadoop.hive.conf.HiveConf - HiveConf of name hive.metastore.local does not exist

2018-03-16T09:53:03,341 INFO [pool-7-thread-2] org.apache.hadoop.hive.metastore.HiveMetaStore - 2: Opening raw store with implementation class:org.apache.hadoop.hive.metastore.ObjectStore

2018-03-16T09:53:03,433 INFO [pool-7-thread-2] org.apache.hadoop.hive.metastore.HiveMetaStore - 2: source:192.168.0.153 get_functions: db=db_hive_test pat=*

2018-03-16T09:53:03,476 INFO [pool-7-thread-2] org.apache.hadoop.hive.metastore.HiveMetaStore - 2: source:192.168.0.153 get_functions: db=default pat=*

2018-03-16T09:53:04,455 INFO [pool-7-thread-2] org.apache.hadoop.hive.metastore.HiveMetaStore - 2: source:192.168.0.153 get_database: default

2018-03-16T09:53:04,522 INFO [pool-7-thread-2] org.apache.hadoop.hive.metastore.HiveMetaStore - 2: source:192.168.0.153 get_database: default

2018-03-16T09:53:04,539 INFO [pool-7-thread-2] org.apache.hadoop.hive.metastore.HiveMetaStore - 2: source:192.168.0.153 get_database: default

2018-03-16T09:53:09,713 INFO [pool-7-thread-3] org.apache.hadoop.hive.metastore.HiveMetaStore - 3: source:192.168.0.153 get_all_databases

2018-03-16T09:53:09,888 WARN [pool-7-thread-3] org.apache.hadoop.hive.conf.HiveConf - HiveConf of name hive.metastore.local does not exist

2018-03-16T09:53:09,889 INFO [pool-7-thread-3] org.apache.hadoop.hive.metastore.HiveMetaStore - 3: Opening raw store with implementation class:org.apache.hadoop.hive.metastore.ObjectStore

2018-03-16T09:53:09,950 INFO [pool-7-thread-3] org.apache.hadoop.hive.metastore.HiveMetaStore - 3: source:192.168.0.153 get_functions: db=db_hive_test pat=*

2018-03-16T09:53:09,959 INFO [pool-7-thread-3] org.apache.hadoop.hive.metastore.HiveMetaStore - 3: source:192.168.0.153 get_functions: db=default pat=*

2018-03-16T09:53:10,927 INFO [pool-7-thread-3] org.apache.hadoop.hive.metastore.HiveMetaStore - 3: source:192.168.0.153 get_database: default

2018-03-16T09:53:10,984 INFO [pool-7-thread-3] org.apache.hadoop.hive.metastore.HiveMetaStore - 3: source:192.168.0.153 get_database: default

2018-03-16T09:53:10,998 INFO [pool-7-thread-3] org.apache.hadoop.hive.metastore.HiveMetaStore - 3: source:192.168.0.153 get_database: default

2018-03-16T09:53:11,153 INFO [pool-7-thread-3] org.apache.hadoop.hive.metastore.HiveMetaStore - 3: source:192.168.0.153 get_tables: db=default pat=.*

The process list now includes the Hive daemons, which show up as RunJar:

[hadoop@node1 ~]$ jps

25858 RunJar

6643 DFSZKFailoverController

6772 ResourceManager

7338 NameNode

25662 RunJar

28335 Jps
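Both services above were started in the foreground, so each one occupies a terminal. A minimal sketch of starting them in the background instead is shown here. It assumes the install path used in this post; the log directory, the launcher file name, and the fixed 10-second wait are assumptions made for illustration. The metastore is started first because hive-site.xml points hive.metastore.uris at it, which seems the safer order.

```shell
#!/usr/bin/env bash
# Sketch: write a small launcher that starts the metastore and HiveServer2 in the background.
# LAUNCHER, LOG_DIR, and the sleep duration are illustrative assumptions.
LAUNCHER="${TMPDIR:-/tmp}/start-hive.sh"

cat > "$LAUNCHER" <<'EOF'
#!/usr/bin/env bash
HIVE_HOME="${HIVE_HOME:-/home/hadoop/hadoop/apache-hive-2.3.2-bin}"
LOG_DIR="${LOG_DIR:-$HOME/hive-logs}"
mkdir -p "$LOG_DIR"

# Start the metastore first; HiveServer2 is configured to talk to it on port 9083.
nohup "$HIVE_HOME/bin/hive" --service metastore > "$LOG_DIR/metastore.log" 2>&1 &
sleep 10   # crude wait, not a real readiness check

nohup "$HIVE_HOME/bin/hive" --service hiveserver2 > "$LOG_DIR/hiveserver2.log" 2>&1 &
echo "Started; verify with jps (two RunJar processes are expected)."
EOF

chmod +x "$LAUNCHER"
# Only check the launcher's syntax here; run it manually on the Hive node.
bash -n "$LAUNCHER" && echo "launcher syntax OK: $LAUNCHER"
```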

a. Using the hive command

[hadoop@node1 bin]$ ./hive

which: no hbase in (/usr/local/jdk1.8.0_151/bin:/usr/local/sbin:/usr/local/bin:/usr/sbin:/usr/bin:/home/hadoop/hadoop/hadoop-2.7.5/sbin:/home/hadoop/hadoop/hadoop-2.7.5/bin:/home/hadoop/hadoop/scala-2.11.11/bin:/root/bin)

Logging initialized using configuration in jar:file:/home/hadoop/hadoop/apache-hive-2.3.2-bin/lib/hive-common-2.3.2.jar!/hive-log4j2.properties Async: true

Hive-on-MR is deprecated in Hive 2 and may not be available in the future versions. Consider using a different execution engine (i.e. spark, tez) or using Hive 1.X releases.

hive> show databases;

OK

default

Time taken: 5.325 seconds, Fetched: 2 row(s)

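hive> create database db_hive_test;

hive> use db_hive_test;

hive> create table test(id int,name string) row format delimited fields terminated by ',' lines terminated by '\n';

Create a new txt file and enter the records (the text must use the same delimiter that was set when the table was created, otherwise the query returns piles of NULLs):

1000,gisxy

1001,zhangsan

1002,lisi

1003,wangwu

Load the data and view it: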

hive>load data local inpath '/home/hadoop/hadoop/hadoop275_tmp/hive_test.txt' into table db_hive_test.test;

Loading data to table db_hive_test.test

OK

Time taken: 0.496 seconds
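Because a delimiter mismatch between the file and the table definition produces NULLs, it can help to check the file before running a load like the one above. The following is a minimal sketch: it writes the sample records to a scratch file (the path is an assumption, not the one used in this post) and uses awk to confirm that every line has exactly two comma-separated fields. Blank lines are flagged as well.

```shell
#!/usr/bin/env bash
# Sketch: write the sample records and verify the delimiter before loading into Hive.
# DATA_FILE is a scratch location chosen for illustration.
DATA_FILE="${TMPDIR:-/tmp}/hive_test.txt"

cat > "$DATA_FILE" <<'EOF'
1000,gisxy
1001,zhangsan
1002,lisi
1003,wangwu
EOF

# awk -F',' splits on the same delimiter as "fields terminated by ','".
# Any line whose field count is not 2 is reported and makes the exit status non-zero.
check_delimiters() {
  awk -F',' 'NF != 2 { printf "line %d has %d field(s): %s\n", NR, NF, $0; bad = 1 }
             END { exit bad + 0 }' "$1"
}

if check_delimiters "$DATA_FILE"; then
  echo "all lines match the table's delimiter"
fi
```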

hive> select * from test;

OK

1000 gisxy

1001 zhangsan

1002 lisi

1003 wangwu

Time taken: 0.1 seconds, Fetched: 5 row(s)

View the table's detailed information:

hive> desc formatted test;

OK

# col_name data_type comment

id int

name string

# Detailed Table Information

Database: db_hive_test

Owner: hadoop

CreateTime: Sun Feb 25 12:54:21 CST 2018

LastAccessTime: UNKNOWN

Retention: 0

Location: hdfs://HA/user/hive/warehouse/db_hive_test.db/test

Table Type: MANAGED_TABLE

Table Parameters:

numFiles 1

numRows 0

rawDataSize 0

totalSize 69

transient_lastDdlTime 1519534473

# Storage Information

SerDe Library: org.apache.hadoop.hive.serde2.lazy.LazySimpleSerDe

InputFormat: org.apache.hadoop.mapred.TextInputFormat

OutputFormat: org.apache.hadoop.hive.ql.io.HiveIgnoreKeyTextOutputFormat

Compressed: No

Num Buckets: -1

Bucket Columns: []

Sort Columns: []

Storage Desc Params:

field.delim ,

line.delim \n

serialization.format ,

Time taken: 0.063 seconds, Fetched: 32 row(s)

hive> show functions;

OK

!

!=

$sum0

%

&

*

+

-

/

<

<=

<=>

<>

=

==

>

>=

^

abs

acos

add_months

aes_decrypt

aes_encrypt

and

array

array_contains

ascii

asin

assert_true

atan

avg

base64

between

bin

bloom_filter

bround

cardinality_violation

case

cbrt

ceil

ceiling

char_length

character_length

chr

coalesce

collect_list

collect_set

compute_stats

concat

concat_ws

context_ngrams

conv

corr

cos

count

covar_pop

covar_samp

crc32

create_union

cume_dist

current_database

current_date

current_timestamp

current_user

date_add

date_format

date_sub

datediff

day

dayofmonth

dayofweek

decode

degrees

dense_rank

div

e

elt

encode

ewah_bitmap

ewah_bitmap_and

ewah_bitmap_empty

ewah_bitmap_or

exp

explode

extract_union

factorial

field

find_in_set

first_value

floor

floor_day

floor_hour

floor_minute

floor_month

floor_quarter

floor_second

floor_week

floor_year

format_number

from_unixtime

from_utc_timestamp

get_json_object

get_splits

greatest

grouping

hash

hex

histogram_numeric

hour

if

in

in_bloom_filter

in_file

index

initcap

inline

instr

internal_interval

isnotnull

isnull

java_method

json_tuple

lag

last_day

last_value

lcase

lead

least

length

levenshtein

like

ln

locate

log

log10

log2

logged_in_user

lower

lpad

ltrim

map

map_keys

map_values

mask

mask_first_n

mask_hash

mask_last_n

mask_show_first_n

mask_show_last_n

matchpath

max

md5

min

minute

mod

month

months_between

named_struct

negative

next_day

ngrams

noop

noopstreaming

noopwithmap

noopwithmapstreaming

not

ntile

nullif

nvl

octet_length

or

parse_url

parse_url_tuple

percent_rank

percentile

percentile_approx

pi

pmod

posexplode

positive

pow

power

printf

quarter

radians

rand

rank

reflect

reflect2

regexp

regexp_extract

regexp_replace

regr_avgx

regr_avgy

regr_count

regr_intercept

regr_r2

regr_slope

regr_sxx

regr_sxy

regr_syy

repeat

replace

replicate_rows

reverse

rlike

round

row_number

rpad

rtrim

second

sentences

sha

sha1

sha2

shiftleft

shiftright

shiftrightunsigned

sign

sin

size

sort_array

sort_array_by

soundex

space

split

sq_count_check

sqrt

stack

std

stddev

stddev_pop

stddev_samp

str_to_map

struct

substr

substring

substring_index

sum

tan

to_date

to_unix_timestamp

to_utc_timestamp

translate

trim

trunc

ucase

unbase64

unhex

unix_timestamp

upper

uuid

var_pop

var_samp

variance

version

weekofyear

when

windowingtablefunction

xpath

xpath_boolean

xpath_double

xpath_float

xpath_int

xpath_long

xpath_number

xpath_short

xpath_string

year

|

~

Time taken: 5.418 seconds, Fetched: 271 row(s)

hive> desc function extended split;

OK

split(str, regex) - Splits str around occurances that match regex

Example:

> SELECT split('oneAtwoBthreeC', '[ABC]') FROM src LIMIT 1;

["one", "two", "three"]

Function class:org.apache.hadoop.hive.ql.udf.generic.GenericUDFSplit

Function type:BUILTIN

Time taken: 0.273 seconds, Fetched: 6 row(s)

hive> quit;

Other commands:

Drop a table

DROP TABLE [IF EXISTS] table_name;

Rename a table

ALTER TABLE name RENAME TO new_name

Add new columns

ALTER TABLE name ADD COLUMNS (col_spec[, col_spec ...])

Drop a column

Hive has no ALTER TABLE ... DROP COLUMN statement. To drop a column, use REPLACE COLUMNS (shown at the end of this list) and list only the columns to keep.

Change a column

ALTER TABLE name CHANGE [COLUMN] column_name new_name new_type

Replace columns

ALTER TABLE name REPLACE COLUMNS (col_spec[, col_spec ...])
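The statements above can be tried safely on a throwaway table. The sketch below collects a few of them in a script file that could be passed to hive -f. The table scratch_demo, the columns age and full_name, and the file name are made-up names for illustration; only db_hive_test comes from this post.

```shell
#!/usr/bin/env bash
# Sketch: collect a few DDL examples in a file for "hive -f".
# scratch_demo, age, full_name, and HQL_FILE are hypothetical names.
HQL_FILE="${TMPDIR:-/tmp}/alter_examples.hql"

cat > "$HQL_FILE" <<'EOF'
USE db_hive_test;
CREATE TABLE IF NOT EXISTS scratch_demo (id INT, name STRING);
ALTER TABLE scratch_demo RENAME TO scratch_demo2;
ALTER TABLE scratch_demo2 ADD COLUMNS (age INT);
ALTER TABLE scratch_demo2 CHANGE name full_name STRING;
-- Keep only id and full_name, which removes age from the table schema
ALTER TABLE scratch_demo2 REPLACE COLUMNS (id INT, full_name STRING);
DROP TABLE IF EXISTS scratch_demo2;
EOF

cat "$HQL_FILE"
# On the Hive node, from $HIVE_HOME/bin: ./hive -f "$HQL_FILE"
```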

b. The beeline client

[hadoop@node1 bin]$ ./beeline

Beeline version 2.3.2 by Apache Hive

beeline> !connect jdbc:hive2://node1.gisxy.com:10000/default

Connecting to jdbc:hive2://node1.gisxy.com:10000/default

Enter username for jdbc:hive2://node1.gisxy.com:10000/default: hadoop

Enter password for jdbc:hive2://node1.gisxy.com:10000/default: ******

Connected to: Apache Hive (version 2.3.2)

Driver: Hive JDBC (version 2.3.2)

Transaction isolation: TRANSACTION_REPEATABLE_READ

0: jdbc:hive2://node1.gisxy.com:10000/default> show databases;

+----------------+
| database_name  |
+----------------+
| db_hive_test   |
| default        |
+----------------+

2 rows selected (0.615 seconds)

0: jdbc:hive2://node1.gisxy.com:10000/default> use db_hive_test;

No rows affected (0.221 seconds)

0: jdbc:hive2://node1.gisxy.com:10000/default> show tables;

+-----------+
| tab_name  |
+-----------+
| test      |
+-----------+

1 row selected (0.363 seconds)

0: jdbc:hive2://node1.gisxy.com:10000/default> !quit

Closing: 0: jdbc:hive2://node1.gisxy.com:10000/default
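beeline can also run a statement without an interactive session. The sketch below uses beeline's -u (JDBC URL), -n (user name), and -e (query) options with the URL and user from the session above. The wrapper function and the DRY_RUN switch are additions for illustration, and the password option is left out on the assumption that HiveServer2 is running without authentication.

```shell
#!/usr/bin/env bash
# Sketch: run a single HiveQL statement through beeline non-interactively.
# DRY_RUN=1 (the default) only prints the command line.
JDBC_URL="jdbc:hive2://node1.gisxy.com:10000/default"
HIVE_USER="hadoop"

beeline_query() {
  if [ "${DRY_RUN:-1}" = "1" ]; then
    echo "+ beeline -u $JDBC_URL -n $HIVE_USER -e \"$1\""
  else
    beeline -u "$JDBC_URL" -n "$HIVE_USER" -e "$1"
  fi
}

beeline_query "show databases;"
```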

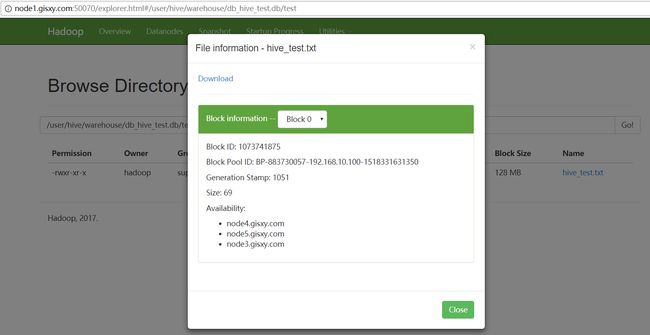

c. View in HDFS
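The table data can also be inspected from the command line. This is a minimal sketch: the directory is the Location reported by desc formatted test earlier (without the hdfs://HA prefix), and the file name hive_test.txt assumes the loaded file kept its original name. As before, DRY_RUN=1 only prints the commands.

```shell
#!/usr/bin/env bash
# Sketch: list and print the table's data file in HDFS.
# TABLE_DIR is taken from the "Location" field of "desc formatted test".
TABLE_DIR="/user/hive/warehouse/db_hive_test.db/test"

hdfs_cmd() {
  if [ "${DRY_RUN:-1}" = "1" ]; then
    echo "+ hadoop fs $*"
  else
    hadoop fs "$@"
  fi
}

hdfs_cmd -ls "$TABLE_DIR"
# Assumption: the file name is unchanged after "load data local inpath".
hdfs_cmd -cat "$TABLE_DIR/hive_test.txt"
```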

d. View in MySQL

[hadoop@node1 bin]$ mysql -u hadoop -p

Enter password:

Welcome to the MySQL monitor. Commands end with ; or \g.

Your MySQL connection id is 25

Server version: 5.7.21 MySQL Community Server (GPL)

Copyright (c) 2000, 2018, Oracle and/or its affiliates. All rights reserved.

Oracle is a registered trademark of Oracle Corporation and/or its

affiliates. Other names may be trademarks of their respective owners.

Type 'help;' or '\h' for help. Type '\c' to clear the current input statement.

mysql> show databases;

+--------------------+
| Database           |
+--------------------+
| information_schema |
| hive               |
| mysql              |
| performance_schema |
| sys                |
+--------------------+

5 rows in set (0.00 sec)

mysql> use hive

Reading table information for completion of table and column names

You can turn off this feature to get a quicker startup with -A

Database changed

mysql> show tables;

+---------------------------+
| Tables_in_hive            |
+---------------------------+
| AUX_TABLE                 |
| BUCKETING_COLS            |
| CDS                       |
| COLUMNS_V2                |
| COMPACTION_QUEUE          |
| COMPLETED_COMPACTIONS     |
| COMPLETED_TXN_COMPONENTS  |
| DATABASE_PARAMS           |
| DBS                       |
| DB_PRIVS                  |
| DELEGATION_TOKENS         |
| FUNCS                     |
| FUNC_RU                   |
| GLOBAL_PRIVS              |
| HIVE_LOCKS                |
| IDXS                      |
| INDEX_PARAMS              |
| KEY_CONSTRAINTS           |
| MASTER_KEYS               |
| NEXT_COMPACTION_QUEUE_ID  |
| NEXT_LOCK_ID              |
| NEXT_TXN_ID               |
| NOTIFICATION_LOG          |
| NOTIFICATION_SEQUENCE     |
| NUCLEUS_TABLES            |
| PARTITIONS                |
| PARTITION_EVENTS          |
| PARTITION_KEYS            |
| PARTITION_KEY_VALS        |
| PARTITION_PARAMS          |
| PART_COL_PRIVS            |
| PART_COL_STATS            |
| PART_PRIVS                |
| ROLES                     |
| ROLE_MAP                  |
| SDS                       |
| SD_PARAMS                 |
| SEQUENCE_TABLE            |
| SERDES                    |
| SERDE_PARAMS              |
| SKEWED_COL_NAMES          |
| SKEWED_COL_VALUE_LOC_MAP  |
| SKEWED_STRING_LIST        |
| SKEWED_STRING_LIST_VALUES |
| SKEWED_VALUES             |
| SORT_COLS                 |
| TABLE_PARAMS              |
| TAB_COL_STATS             |
| TBLS                      |
| TBL_COL_PRIVS             |
| TBL_PRIVS                 |
| TXNS                      |
| TXN_COMPONENTS            |
| TYPES                     |
| TYPE_FIELDS               |
| VERSION                   |
| WRITE_SET                 |
+---------------------------+

57 rows in set (0.00 sec)

mysql> select * from TBLS;

+--------+-------------+-------+------------------+--------+-----------+-------+----------+---------------+--------------------+--------------------+--------------------+
| TBL_ID | CREATE_TIME | DB_ID | LAST_ACCESS_TIME | OWNER  | RETENTION | SD_ID | TBL_NAME | TBL_TYPE      | VIEW_EXPANDED_TEXT | VIEW_ORIGINAL_TEXT | IS_REWRITE_ENABLED |
+--------+-------------+-------+------------------+--------+-----------+-------+----------+---------------+--------------------+--------------------+--------------------+
|     14 |  1519534461 |     6 |                0 | hadoop |         0 |    14 | test     | MANAGED_TABLE | NULL               | NULL               |                    |
+--------+-------------+-------+------------------+--------+-----------+-------+----------+---------------+--------------------+--------------------+--------------------+

1 rows in set (0.00 sec)

mysql> exit;
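The TBLS row above only carries a DB_ID, not the database name. A minimal sketch of a query that joins TBLS with DBS to show which Hive database each table belongs to follows. The DBS column names (DB_ID, NAME) follow the Hive metastore schema as I understand it and are not shown in this post, so treat them as an assumption; the file name is hypothetical.

```shell
#!/usr/bin/env bash
# Sketch: write a metastore query that lists each Hive table with its database name.
# QUERY_FILE is a hypothetical scratch path; DBS.NAME / DBS.DB_ID are assumed column names.
QUERY_FILE="${TMPDIR:-/tmp}/hive_tables.sql"

cat > "$QUERY_FILE" <<'EOF'
-- List every Hive table together with the database it belongs to
SELECT d.NAME AS db_name, t.TBL_NAME, t.TBL_TYPE
FROM TBLS t
JOIN DBS d ON t.DB_ID = d.DB_ID
ORDER BY d.NAME, t.TBL_NAME;
EOF

cat "$QUERY_FILE"
# On node1: mysql -u hadoop -p hive < "$QUERY_FILE"
```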