CanalClient Freezing Its Host Service: Root Cause Analysis


Background

When the database undergoes a large batch of updates, the service that embeds the Canal client stops responding.

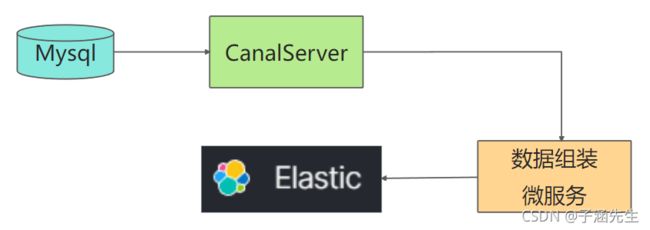

Technical Architecture

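First, let me explain why we introduced Canal. Data objects in Java are related to one another, for example through composition and aggregation, and we use Elasticsearch for data retrieval in complex scenarios. We therefore need a microservice that assembles the data and writes it into ES. Suppose object A changes: the description of A embedded in object B changes too, so we have to update every index that contains A, and in real code there are a great many entry points that trigger such index updates. With more complex object structures, assembling the documents for the ES index becomes very complicated and the code becomes hard to maintain. So we adopted Canal to decouple business logic from document updates, which is really the classic cache-consistency problem.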
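To make the fan-out concrete, here is a minimal sketch, assuming a hypothetical `IndexRouter` that maps each source table to the ES documents embedding its rows. `IndexRouter`, `RowChange`, and the table and document names are illustrative only; they are not part of Canal or canal-client-spring-boot-starter. Business code only writes MySQL; the sync service receives row changes and decides which documents to rebuild.

```java
import java.util.Collections;
import java.util.HashMap;
import java.util.Map;
import java.util.Set;
import java.util.function.Function;

// Hypothetical sketch: IndexRouter and RowChange are illustrative names,
// not part of Canal or canal-client-spring-boot-starter.
final class IndexRouter {

    /** Minimal stand-in for one changed row delivered by a Canal client. */
    static final class RowChange {
        final String table;
        final long id;

        RowChange(String table, long id) {
            this.table = table;
            this.id = id;
        }
    }

    // For each source table: given a row id, which ES documents embed that row.
    private final Map<String, Function<Long, Set<String>>> dependents = new HashMap<>();

    /** Registers how a change in `table` fans out to index documents. */
    void register(String table, Function<Long, Set<String>> affectedDocs) {
        dependents.put(table, affectedDocs);
    }

    /** Returns the ids of the documents that must be rebuilt for this change. */
    Set<String> route(RowChange change) {
        Function<Long, Set<String>> fanOut = dependents.get(change.table);
        return fanOut == null ? Collections.<String>emptySet() : fanOut.apply(change.id);
    }
}
```

Adding a new dependency between objects then means registering one more fan-out function, instead of touching every business entry point that modifies object A.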

In the initial architecture we experimentally adopted the third-party canal-client-spring-boot-starter and quickly confirmed that the approach worked.

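A large batch of data updates will inevitably exceed what a single microservice node can handle, and sure enough, problems appeared during bulk updates.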

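This post analyzes what exactly made the service unresponsive. We first checked CPU and memory usage, and both looked normal. At the time we suspected two things:

- a thread deadlock;

- GC pauses that were too long.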
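The first suspect can be checked without reading a full thread dump. The sketch below uses the standard `ThreadMXBean.findDeadlockedThreads()` to list threads caught in a lock-ownership cycle; the `DeadlockCheck` class name is our own.

```java
import java.lang.management.ManagementFactory;
import java.lang.management.ThreadInfo;
import java.lang.management.ThreadMXBean;
import java.util.ArrayList;
import java.util.List;

// Hypothetical helper: in-process counterpart of the
// "Found one Java-level deadlock" section that jstack prints.
final class DeadlockCheck {
    private DeadlockCheck() {}

    /** Names of threads caught in a lock-ownership cycle; empty if there is none. */
    static List<String> deadlockedThreadNames() {
        ThreadMXBean mx = ManagementFactory.getThreadMXBean();
        // Covers both object monitors and ownable synchronizers such as ReentrantLock.
        long[] ids = mx.findDeadlockedThreads(); // null when no deadlock exists
        List<String> names = new ArrayList<>();
        if (ids == null) {
            return names;
        }
        for (ThreadInfo info : mx.getThreadInfo(ids)) {
            if (info != null) { // a thread may have exited in the meantime
                names.add(info.getThreadName());
            }
        }
        return names;
    }
}
```

Threads parked while waiting for a free pooled connection do not form such a cycle, so this check reports nothing for pool starvation.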

GC Status

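Checking the process's GC status showed no problem either. Across 10 samples taken 2 seconds apart, none of the counters moved (18 young GCs totaling 0.324 s, 4 full GCs totaling 0.635 s, 0.959 s of GC time overall), so long GC pauses were ruled out.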

```
[root@localhost zhelifa]# jstat -gcutil [pid] 2000 10
  S0     S1     E      O      M     CCS    YGC   YGCT   FGC   FGCT    GCT
 56.36   0.00  49.47  24.91  93.59  90.90   18   0.324    4   0.635   0.959
 56.36   0.00  49.47  24.91  93.59  90.90   18   0.324    4   0.635   0.959
 56.36   0.00  49.47  24.91  93.59  90.90   18   0.324    4   0.635   0.959
 56.36   0.00  49.47  24.91  93.59  90.90   18   0.324    4   0.635   0.959
 56.36   0.00  49.47  24.91  93.59  90.90   18   0.324    4   0.635   0.959
 56.36   0.00  49.47  24.91  93.59  90.90   18   0.324    4   0.635   0.959
 56.36   0.00  49.47  24.91  93.59  90.90   18   0.324    4   0.635   0.959
 56.36   0.00  49.47  24.91  93.59  90.90   18   0.324    4   0.635   0.959
 56.36   0.00  49.47  24.91  93.59  90.90   18   0.324    4   0.635   0.959
 56.36   0.00  49.47  24.91  93.59  90.90   18   0.324    4   0.635   0.959
```
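The same check can be run from inside the JVM through the standard `GarbageCollectorMXBean` API. This is a rough analogue of the YGC/FGC/GCT columns, not a replacement for `jstat`; `GcSampler` is a hypothetical name, and `getCollectionTime()` reports milliseconds whereas jstat reports seconds.

```java
import java.lang.management.GarbageCollectorMXBean;
import java.lang.management.ManagementFactory;

// Hypothetical helper: rough in-process analogue of jstat's YGC/FGC/GCT columns.
final class GcSampler {
    private GcSampler() {}

    /** Total collections across all collectors (YGC + FGC in jstat terms). */
    static long totalCollections() {
        long n = 0;
        for (GarbageCollectorMXBean gc : ManagementFactory.getGarbageCollectorMXBeans()) {
            long c = gc.getCollectionCount(); // -1 if undefined for this collector
            if (c > 0) n += c;
        }
        return n;
    }

    /** Accumulated GC time in milliseconds (jstat's GCT column is in seconds). */
    static long totalCollectionTimeMillis() {
        long t = 0;
        for (GarbageCollectorMXBean gc : ManagementFactory.getGarbageCollectorMXBeans()) {
            long ms = gc.getCollectionTime(); // -1 if undefined for this collector
            if (ms > 0) t += ms;
        }
        return t;
    }

    public static void main(String[] args) throws InterruptedException {
        // Same cadence as `jstat -gcutil <pid> 2000 10`: 10 samples, 2 s apart,
        // one entry per collector (typically one young and one old collector).
        for (int i = 0; i < 10; i++) {
            StringBuilder line = new StringBuilder();
            for (GarbageCollectorMXBean gc : ManagementFactory.getGarbageCollectorMXBeans()) {
                line.append(String.format("%s: count=%d timeMs=%d  ",
                        gc.getName(), gc.getCollectionCount(), gc.getCollectionTime()));
            }
            System.out.println(line);
            Thread.sleep(2000);
        }
    }
}
```

If the counts stay flat across samples while the service is unresponsive, as in the output above, GC pauses are not what is blocking it.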

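Canal Server Log

On the server side, Canal hit `Connection reset by peer` while writing a response to the client at 192.168.30.4 (the server listens on 192.168.6.51:11111), meaning the client end had reset the connection: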

```
2021-10-12 17:46:28.688 [New I/O server worker #1-3] ERROR c.a.otter.canal.server.netty.handler.SessionHandler - something goes wrong with channel:[id: 0x15324335, /192.168.30.4:11715 => /192.168.6.51:11111], exception=java.io.IOException: Connection reset by peer
	at sun.nio.ch.FileDispatcherImpl.write0(Native Method)
	at sun.nio.ch.SocketDispatcher.write(SocketDispatcher.java:47)
	at sun.nio.ch.IOUtil.writeFromNativeBuffer(IOUtil.java:93)
	at sun.nio.ch.IOUtil.write(IOUtil.java:51)
	at sun.nio.ch.SocketChannelImpl.write(SocketChannelImpl.java:470)
	at org.jboss.netty.channel.socket.nio.SocketSendBufferPool$PooledSendBuffer.transferTo(SocketSendBufferPool.java:243)
	at org.jboss.netty.channel.socket.nio.NioWorker.write0(NioWorker.java:470)
	at org.jboss.netty.channel.socket.nio.NioWorker.writeFromUserCode(NioWorker.java:388)
	at org.jboss.netty.channel.socket.nio.NioServerSocketPipelineSink.handleAcceptedSocket(NioServerSocketPipelineSink.java:137)
	at org.jboss.netty.channel.socket.nio.NioServerSocketPipelineSink.eventSunk(NioServerSocketPipelineSink.java:76)
	at org.jboss.netty.channel.Channels.write(Channels.java:611)
	at org.jboss.netty.channel.Channels.write(Channels.java:578)
	at com.alibaba.otter.canal.server.netty.NettyUtils.write(NettyUtils.java:48)
	at com.alibaba.otter.canal.server.netty.handler.SessionHandler.messageReceived(SessionHandler.java:202)
	at org.jboss.netty.handler.timeout.IdleStateAwareChannelHandler.handleUpstream(IdleStateAwareChannelHandler.java:48)
	at org.jboss.netty.handler.timeout.IdleStateHandler.messageReceived(IdleStateHandler.java:276)
	at org.jboss.netty.channel.Channels.fireMessageReceived(Channels.java:302)
	at org.jboss.netty.handler.codec.replay.ReplayingDecoder.unfoldAndfireMessageReceived(ReplayingDecoder.java:526)
	at org.jboss.netty.handler.codec.replay.ReplayingDecoder.callDecode(ReplayingDecoder.java:507)
	at org.jboss.netty.handler.codec.replay.ReplayingDecoder.messageReceived(ReplayingDecoder.java:444)
	at org.jboss.netty.channel.Channels.fireMessageReceived(Channels.java:274)
	at org.jboss.netty.channel.Channels.fireMessageReceived(Channels.java:261)
	at org.jboss.netty.channel.socket.nio.NioWorker.read(NioWorker.java:350)
	at org.jboss.netty.channel.socket.nio.NioWorker.processSelectedKeys(NioWorker.java:281)
	at org.jboss.netty.channel.socket.nio.NioWorker.run(NioWorker.java:201)
	at org.jboss.netty.util.internal.IoWorkerRunnable.run(IoWorkerRunnable.java:46)
	at java.util.concurrent.ThreadPoolExecutor.runWorker(ThreadPoolExecutor.java:1149)
	at java.util.concurrent.ThreadPoolExecutor$Worker.run(ThreadPoolExecutor.java:624)
```

Inspecting the Thread Dump with jstack

```
jstack -l [pid] > jstack.log
```

The dump showed many Canal client execution threads in the WAITING (parking) state, all waiting on the same object <0x00000006ca337b70>:

```
"canal-execute-thread-8" #1912 prio=5 os_prio=0 tid=0x00007f4e1418d800 nid=0x39ff waiting on condition [0x00007f4dc4b4e000]
   java.lang.Thread.State: WAITING (parking)
	at sun.misc.Unsafe.park(Native Method)
	- parking to wait for <0x00000006ca337b70> (a java.util.concurrent.locks.AbstractQueuedSynchronizer$ConditionObject)
	at java.util.concurrent.locks.LockSupport.park(LockSupport.java:175)
	at java.util.concurrent.locks.AbstractQueuedSynchronizer$ConditionObject.await(AbstractQueuedSynchronizer.java:2039)
	at com.alibaba.druid.pool.DruidDataSource.takeLast(DruidDataSource.java:2002)
	at com.alibaba.druid.pool.DruidDataSource.getConnectionInternal(DruidDataSource.java:1539)
	at com.alibaba.druid.pool.DruidDataSource.getConnectionDirect(DruidDataSource.java:1326)
	at com.alibaba.druid.pool.DruidDataSource.getConnection(DruidDataSource.java:1306)
	at com.alibaba.druid.pool.DruidDataSource.getConnection(DruidDataSource.java:1296)
	at com.alibaba.druid.pool.DruidDataSource.getConnection(DruidDataSource.java:109)
	at org.springframework.jdbc.datasource.DataSourceTransactionManager.doBegin(DataSourceTransactionManager.java:263)
	at org.springframework.transaction.support.AbstractPlatformTransactionManager.getTransaction(AbstractPlatformTransactionManager.java:376)
	at org.springframework.transaction.interceptor.TransactionAspectSupport.createTransactionIfNecessary(TransactionAspectSupport.java:572)
	at org.springframework.transaction.interceptor.TransactionAspectSupport.invokeWithinTransaction(TransactionAspectSupport.java:360)
	at org.springframework.transaction.interceptor.TransactionInterceptor.invoke(TransactionInterceptor.java:99)
	at org.springframework.aop.framework.ReflectiveMethodInvocation.proceed(ReflectiveMethodInvocation.java:186)
	at org.springframework.aop.framework.CglibAopProxy$CglibMethodInvocation.proceed(CglibAopProxy.java:747)
	at org.springframework.aop.framework.CglibAopProxy$DynamicAdvisedInterceptor.intercept(CglibAopProxy.java:689)
	at com.keyou.evm.lpm.service.es.ElSearchStoreServiceImpl$$EnhancerBySpringCGLIB$$4dc5f842.synchronous()
	at com.keyou.evm.lpm.sync.StoreHandler.insert(StoreHandler.java:44)
	at com.keyou.evm.lpm.sync.StoreHandler.insert(StoreHandler.java:23)
	at top.javatool.canal.client.handler.impl.RowDataHandlerImpl.handlerRowData(RowDataHandlerImpl.java:35)
	at top.javatool.canal.client.handler.impl.RowDataHandlerImpl.handlerRowData(RowDataHandlerImpl.java:16)
	at top.javatool.canal.client.handler.AbstractMessageHandler.handleMessage(AbstractMessageHandler.java:48)
	at top.javatool.canal.client.handler.impl.AsyncMessageHandlerImpl.lambda$handleMessage$0(AsyncMessageHandlerImpl.java:30)
	at top.javatool.canal.client.handler.impl.AsyncMessageHandlerImpl$$Lambda$1571/194399623.run(Unknown Source)
	at org.springframework.cloud.sleuth.instrument.async.TraceRunnable.run(TraceRunnable.java:67)
	at java.util.concurrent.ThreadPoolExecutor.runWorker(ThreadPoolExecutor.java:1149)
	at java.util.concurrent.ThreadPoolExecutor$Worker.run(ThreadPoolExecutor.java:624)
	at java.lang.Thread.run(Thread.java:748)

   Locked ownable synchronizers:
	- <0x00000006d11c0eb0> (a java.util.concurrent.ThreadPoolExecutor$Worker)
```
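Spotting many threads parked on the same object by eye is error-prone in a large dump. This minimal sketch uses only the standard `ThreadMXBean.dumpAllThreads` and `ThreadInfo.getLockName()` to group WAITING and TIMED_WAITING threads by the object they wait on; `WaitGrouper` is a hypothetical name. `getLockName()` identifies the object by class name and identity hash code, not by the address jstack prints, so the keys will not literally match `<0x00000006ca337b70>`.

```java
import java.lang.management.ManagementFactory;
import java.lang.management.ThreadInfo;
import java.util.ArrayList;
import java.util.List;
import java.util.Map;
import java.util.TreeMap;

// Hypothetical helper: groups waiting threads by the object they are parked on,
// the programmatic version of scanning a jstack dump for a shared "<0x...>" address.
final class WaitGrouper {
    private WaitGrouper() {}

    /** Maps each blocking object ("class@identityHash") to the names of threads waiting on it. */
    static Map<String, List<String>> waitersByLock() {
        Map<String, List<String>> groups = new TreeMap<>();
        for (ThreadInfo info : ManagementFactory.getThreadMXBean().dumpAllThreads(false, false)) {
            if (info == null) continue;
            Thread.State state = info.getThreadState();
            String lock = info.getLockName(); // null if the thread is not blocked or waiting
            boolean waiting = state == Thread.State.WAITING || state == Thread.State.TIMED_WAITING;
            if (waiting && lock != null) {
                groups.computeIfAbsent(lock, k -> new ArrayList<>()).add(info.getThreadName());
            }
        }
        return groups;
    }

    public static void main(String[] args) {
        // Print the most crowded wait targets first: pool starvation shows up
        // as one condition object with many waiters.
        waitersByLock().entrySet().stream()
                .sorted((a, b) -> b.getValue().size() - a.getValue().size())
                .limit(5)
                .forEach(e -> System.out.println(e.getValue().size() + " waiting on " + e.getKey()));
    }
}
```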

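Analysis: canal-client hands messages to a thread pool (`AsyncMessageHandlerImpl` in the stack above). Our ES sync method runs inside a Spring transaction (`TransactionInterceptor` → `DataSourceTransactionManager.doBegin`), so every worker borrows a connection from the `DruidDataSource` before doing any work. During a bulk update these workers occupy a large share of the pool's connections, leaving many threads in the WAITING and TIMED_WAITING states. Since the Canal client runs inside the host service, its workers most likely compete with the host's own requests for the same pool, which explains why the whole service stops responding.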
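The difference between waiting forever and failing fast can be shown with a toy pool built on `ArrayBlockingQueue`. `ToyPool` is a hypothetical illustration, not Druid's implementation: `take()` is an untimed wait, like the `ConditionObject.await` frame in the dump above (reported as WAITING), while `poll(timeout, unit)` gives up after the timeout (reported as TIMED_WAITING).

```java
import java.util.concurrent.ArrayBlockingQueue;
import java.util.concurrent.BlockingQueue;
import java.util.concurrent.TimeUnit;

// Hypothetical toy pool (not Druid): a bounded queue of "connection" ids.
final class ToyPool {
    private final BlockingQueue<Integer> idle;

    ToyPool(int size) {
        idle = new ArrayBlockingQueue<>(size);
        for (int i = 0; i < size; i++) {
            idle.add(i);
        }
    }

    /** Parks (WAITING) until a connection is returned, possibly forever. */
    Integer borrowBlocking() throws InterruptedException {
        return idle.take();
    }

    /** Parks (TIMED_WAITING) at most timeoutMillis; returns null if the pool stays empty. */
    Integer borrow(long timeoutMillis) throws InterruptedException {
        return idle.poll(timeoutMillis, TimeUnit.MILLISECONDS);
    }

    /** Returns a connection to the pool, waking one waiting borrower. */
    void giveBack(Integer conn) {
        idle.add(conn);
    }
}
```

If the sync workers hold every connection, any other caller on the untimed path parks indefinitely, which from the outside looks exactly like a frozen service; the timed path at least fails fast and lets the caller report an error.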

Solutions

Temporary fix:

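- Tune the connection pool's locking and reclamation policy: use a non-fair lock and automatically reclaim connections that have been held too long (abandoned connections);

- Druid uses a non-fair lock by default. However, our configuration file sets `maxWait`, which causes `setMaxWait()` to create a fair lock in place of the non-fair one.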

Reference: https://github.com/alibaba/druid/issues/1160
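The lock swap described above can be modeled in a few lines. `LockModeModel` is a simplified, hypothetical model of the behavior reported in the issue, not Druid's source code. To our understanding, the real `DruidDataSource` also has a `setUseUnfairLock(boolean)` setter that, once set explicitly, keeps `maxWait` from switching the lock to fair; verify this against the Druid version you use. The `ReentrantLock` Javadoc warns that fair locks accessed by many threads may show much lower overall throughput than the default non-fair mode, which is why the fair lock hurts under a burst.

```java
import java.util.concurrent.locks.ReentrantLock;

// Hypothetical, simplified model of the behavior described in druid issue #1160.
// This is NOT Druid's implementation.
final class LockModeModel {
    private ReentrantLock lock = new ReentrantLock(); // non-fair by default
    private Boolean useUnfairLock;                    // null = never configured explicitly
    private long maxWaitMillis = -1;

    /** Explicit choice of lock mode; afterwards maxWait no longer changes it. */
    void setUseUnfairLock(boolean unfair) {
        this.useUnfairLock = unfair;
        this.lock = new ReentrantLock(!unfair);
    }

    /** A positive maxWait swaps in a fair lock unless the mode was chosen explicitly. */
    void setMaxWait(long maxWaitMillis) {
        this.maxWaitMillis = maxWaitMillis;
        if (maxWaitMillis > 0 && useUnfairLock == null && !lock.isFair()) {
            lock = new ReentrantLock(true); // fair: FIFO hand-off, lower throughput under contention
        }
    }

    boolean isFair() {
        return lock.isFair();
    }
}
```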

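```properties
# Whether to automatically reclaim connections held past the timeout (abandoned connections)
removeAbandoned=true
# Timeout, in seconds
removeAbandonedTimeout=180
```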
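What `removeAbandoned` and `removeAbandonedTimeout` ask the pool to do can be sketched as follows: record when each connection was borrowed and reclaim any connection held longer than the timeout. `AbandonTracker` is hypothetical and omits actually closing the connections; the current time is passed in explicitly so the logic is easy to test.

```java
import java.util.ArrayList;
import java.util.HashMap;
import java.util.List;
import java.util.Map;

// Hypothetical sketch of abandoned-connection reclamation; not Druid's implementation.
final class AbandonTracker {
    private final long timeoutMillis;
    private final Map<Integer, Long> borrowedAt = new HashMap<>();

    AbandonTracker(long timeoutSeconds) {
        this.timeoutMillis = timeoutSeconds * 1000;
    }

    /** Remember when a connection left the pool. */
    synchronized void onBorrow(int connId, long nowMillis) {
        borrowedAt.put(connId, nowMillis);
    }

    /** A returned connection is no longer a candidate for reclamation. */
    synchronized void onReturn(int connId) {
        borrowedAt.remove(connId);
    }

    /** Returns ids held longer than the timeout and stops tracking them (the caller closes them). */
    synchronized List<Integer> reclaim(long nowMillis) {
        List<Integer> abandoned = new ArrayList<>();
        borrowedAt.entrySet().removeIf(e -> {
            boolean expired = nowMillis - e.getValue() > timeoutMillis;
            if (expired) {
                abandoned.add(e.getKey());
            }
            return expired;
        });
        return abandoned;
    }
}
```

The timeout has to exceed the longest legitimate transaction: a connection reclaimed while a slow sync transaction is still using it will break that transaction.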

Final solution:

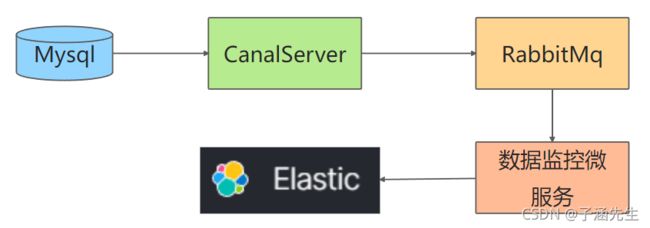

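The root cause of all the problems above is that a single node cannot absorb a large burst of data updates all at once. The final solution therefore has to be an MQ for peak shaving and valley filling: buffer the burst and consume it at a steady rate.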

This is also why the Canal server natively supports many kinds of MQ; without one, the client can hardly withstand the impact of large-scale changes on the MySQL primary.
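The peak-shaving idea can be illustrated in-process with a bounded buffer drained by a fixed number of consumers, so syncing never uses more than that many DB connections at once, however large the burst. This is only an analogy: `PeakShaver` is hypothetical, and a real MQ of the kind Canal Server integrates with adds durability and runs outside the host JVM, which an `ArrayBlockingQueue` does not.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.concurrent.ArrayBlockingQueue;
import java.util.concurrent.BlockingQueue;
import java.util.concurrent.CountDownLatch;
import java.util.concurrent.atomic.AtomicInteger;

// Hypothetical in-process analogy for MQ peak shaving.
final class PeakShaver {
    private PeakShaver() {}

    /** Pushes `events` items through a bounded buffer drained by `consumers` threads; returns peak concurrency. */
    static int run(int events, int consumers, int capacity) throws InterruptedException {
        BlockingQueue<Integer> buffer = new ArrayBlockingQueue<>(capacity);
        AtomicInteger inFlight = new AtomicInteger();
        AtomicInteger peak = new AtomicInteger();
        CountDownLatch done = new CountDownLatch(events);
        List<Thread> workers = new ArrayList<>();
        for (int c = 0; c < consumers; c++) {
            Thread worker = new Thread(() -> {
                try {
                    while (true) {
                        buffer.take();                         // next change event
                        int now = inFlight.incrementAndGet();  // stands for "holding a DB connection"
                        peak.accumulateAndGet(now, Math::max);
                        Thread.sleep(1);                       // simulated sync work
                        inFlight.decrementAndGet();
                        done.countDown();
                    }
                } catch (InterruptedException e) {
                    Thread.currentThread().interrupt();        // shutdown requested
                }
            }, "sync-consumer-" + c);
            worker.setDaemon(true);
            worker.start();
            workers.add(worker);
        }
        for (int i = 0; i < events; i++) {
            buffer.put(i); // blocks while the buffer is full: back-pressure on the producer
        }
        done.await();
        workers.forEach(Thread::interrupt);
        return peak.get();
    }
}
```

Here the producer is slowed down when the buffer fills; with an external MQ the broker absorbs the backlog instead, and consumers pull at their own pace without touching the host service's connection pool.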

Takeaways

As this case shows, no technology stack ever runs in isolation.

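You can find videos on every kind of technology on the major learning sites. But to do architecture well, you need a thorough understanding of a technology's ecosystem; otherwise you will be on the back foot when maintaining the system later.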

Thanks for reading!

If you are interested in my articles, feel free to leave your questions so we can discuss them and improve together!
