16. K8s Security Management and Resource Limits

Lab environment:

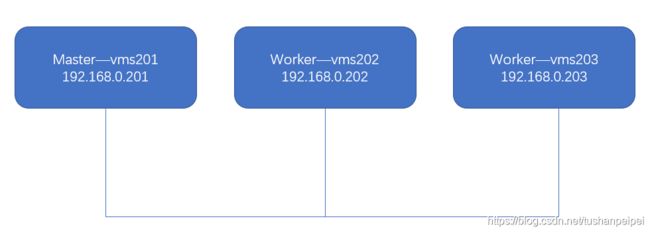

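A K8s cluster has been deployed as shown in the figure: one master and two worker nodes.

Access control overview:

The apiserver is the gateway of the K8s cluster and the only entry point for accessing and managing resource objects. Every other component that needs cluster resources goes through this gateway. That includes core components such as kube-controller-manager, kube-scheduler, kubelet and kube-proxy, add-ons such as CoreDNS, and the kubectl command used so far. All of these clients read or change cluster state, and have their data stored, through the apiserver. The apiserver validates every request: it authenticates the caller, checks that the caller is permitted to perform the operation, and checks that the operation complies with cluster-wide policies. Data can be read from, or written to, the backend store etcd only when all checks pass and the object definition is valid.

Client authentication is performed by one or more authentication plugins configured on the apiserver. When a request arrives, the apiserver calls its configured authentication plugins in turn until one of them identifies the requester.

Authorization is performed by one or more authorization plugins. They decide whether an authenticated user may perform the requested operation, such as creating, deleting or modifying a given object. Requests that pass authorization and modify objects must then also pass through one or more admission control plugins.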
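The request pipeline described above (authentication, then authorization, then admission) can be sketched as a minimal, simplified Python model. The `Request`/`User` types and the plugin signatures are hypothetical and chosen only for illustration. The real apiserver plugins have richer interfaces: for example, authorizers can also return "no opinion", and admission plugins may modify objects as well as reject them.

```python
from dataclasses import dataclass, field
from typing import Callable, List, Optional, Tuple


@dataclass
class Request:
    token: str
    verb: str        # e.g. "list", "create"
    resource: str    # e.g. "pods"
    namespace: str


@dataclass
class User:
    name: str
    groups: List[str] = field(default_factory=list)


# Hypothetical plugin signatures, used only in this sketch.
Authenticator = Callable[[Request], Optional[User]]
Authorizer = Callable[[User, Request], bool]
AdmissionPlugin = Callable[[User, Request], Optional[str]]  # rejection reason or None

MUTATING_VERBS = {"create", "update", "patch", "delete"}


def handle(req: Request,
           authenticators: List[Authenticator],
           authorizers: List[Authorizer],
           admission: List[AdmissionPlugin]) -> Tuple[int, str]:
    # 1. Authentication: try each plugin in turn until one identifies the caller.
    user = None
    for authenticate in authenticators:
        user = authenticate(req)
        if user is not None:
            break
    if user is None:
        return 401, "Unauthorized"

    # 2. Authorization: the request proceeds if some authorizer allows it.
    if not any(authorize(user, req) for authorize in authorizers):
        return 403, "Forbidden"

    # 3. Admission control: only requests that change objects are checked,
    #    and every admission plugin must accept them.
    if req.verb in MUTATING_VERBS:
        for plugin in admission:
            reason = plugin(user, req)
            if reason is not None:
                return 403, reason
    return 200, "OK"
```

The 401 and 403 results correspond to the `Unauthorized` and `Forbidden` errors that kubectl prints in the token experiments of this section.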

I. Authentication:

K8s supports the following authentication methods:

- token

- kubeconfig

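- oauth (third-party authentication)

- basic-auth (username/password authentication; deprecated, and removed as of v1.19)

Token authentication:

First, start another machine, vms204, outside the K8s cluster to act as the kubectl client, and make sure it can reach the cluster nodes. Install kubectl on it and enable Tab completion: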

yum install -y kubectl-1.21.0-0 --disableexcludes=kubernetes

vim /etc/profile

Add the following line to the file, then source it:

# /etc/profile

source <(kubectl completion bash)

[root@vms204 ~]# source /etc/profile

On the K8s master, enable token authentication. First generate a random token:

[root@vms201 ~]# openssl rand -hex 10

99b9df881e6e39007fc3

Save the token, a user name and a user ID, separated by commas, in a CSV file under /etc/kubernetes/pki/:

[root@vms201 security]# cat /etc/kubernetes/pki/bb.csv

99b9df881e6e39007fc3,admin2,3

Edit the apiserver manifest and add the token parameter under command, pointing to /etc/kubernetes/pki/bb.csv:

[root@vms201 security]# vim /etc/kubernetes/manifests/kube-apiserver.yaml

- --token-auth-file=/etc/kubernetes/pki/bb.csv

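Restart kubelet so that the kube-apiserver static Pod is recreated with the new flag. After that, token authentication is enabled on the master: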

[root@vms201 security]# systemctl restart kubelet

Back on vms204, connect to the master:

[root@vms204 ~]# kubectl -s https://192.168.0.201:6443 --token="99b9df881e6e39007fc3" --insecure-skip-tls-verify=true get pods -n kube-system

Error from server (Forbidden): pods is forbidden: User "admin2" cannot list resource "pods" in API group "" in the namespace "kube-system"

Authentication now succeeds, but the user has no permissions. A wrong token produces the following result instead, which means authentication failed:

[root@vms204 ~]# kubectl -s https://192.168.0.201:6443 --token="99b9df881e6e39007" --insecure-skip-tls-verify=true get pods -n kube-system

error: You must be logged in to the server (Unauthorized)
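The bb.csv file used above is a static token file. Its documented line format is `token,user,uid`, optionally followed by a quoted, comma-separated list of groups. The Python sketch below parses that format and looks up a token. It shows why the wrong token produced `Unauthorized` (no identity was found), while the correct one got as far as authorization. `load_token_file` and `authenticate` are hypothetical helpers, not the apiserver's own code.

```python
import csv
import io


def load_token_file(text: str) -> dict:
    """Parse static token file lines of the form token,user,uid[,"group1,group2"]."""
    users = {}
    for row in csv.reader(io.StringIO(text)):
        if not row:                      # skip blank lines
            continue
        if len(row) < 3:
            raise ValueError(f"expected at least token,user,uid: {row}")
        token, name, uid = (f.strip() for f in row[:3])
        # The optional 4th column is a quoted, comma-separated group list.
        groups = [g.strip() for g in row[3].split(",") if g.strip()] if len(row) > 3 else []
        users[token] = {"user": name, "uid": uid, "groups": groups}
    return users


def authenticate(users: dict, bearer_token: str):
    """Return the identity for a token, or None (the apiserver then answers 401)."""
    return users.get(bearer_token)
```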

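kubeconfig authentication:

About kubeconfig files: no file is literally named "kubeconfig". Any file used for authentication is called a kubeconfig file. For example, if aa.txt contains authentication information, aa.txt is a kubeconfig file.

After K8s is installed, the system generates a file with administrator privileges over the cluster, admin.conf, in /etc/kubernetes/: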

[root@vms201 security]# ls /etc/kubernetes/

admin.conf controller-manager.conf kubelet.conf manifests pki scheduler.conf

After switching from the administrator account to another account, K8s information can no longer be viewed:

[root@vms201 security]# su testuser

[testuser@vms201 security]$ kubectl get nodes

The connection to the server localhost:8080 was refused - did you specify the right host or port?

This is because a regular user's default kubeconfig file is ~/.kube/config, which does not exist by default. We can copy root's admin.conf into testuser's home directory and change its owner:

[root@vms201 ~]# cp /etc/kubernetes/admin.conf ~testuser/

[root@vms201 ~]# chown testuser:testuser ~testuser/admin.conf

Switch back to testuser and set the environment variable. Because this regular user now has the admin.conf file (which may be renamed), it can manage K8s:

[testuser@vms201 ~]$ export KUBECONFIG=admin.conf

[testuser@vms201 ~]$ kubectl get nodes

NAME STATUS ROLES AGE VERSION

vms201.rhce.cc Ready control-plane,master 10d v1.21.0

vms202.rhce.cc Ready <none> 10d v1.21.0

vms203.rhce.cc Ready <none> 10d v1.21.0

Instead of setting the environment variable, we can copy admin.conf to ~/.kube/config, which also allows managing K8s:

[testuser@vms201 ~]$ unset KUBECONFIG

[testuser@vms201 ~]$ cp admin.conf .kube/config

[testuser@vms201 ~]$ kubectl get nodes

NAME STATUS ROLES AGE VERSION

vms201.rhce.cc Ready control-plane,master 10d v1.21.0

vms202.rhce.cc Ready <none> 10d v1.21.0

vms203.rhce.cc Ready <none> 10d v1.21.0

This shows how important admin.conf is: we only need to copy it to vms204:

[root@vms201 /]# scp /etc/kubernetes/admin.conf 192.168.0.204:~

root@192.168.0.204's password:

admin.conf 100% 5593 8.6MB/s 00:00

Then use this file on vms204 to access the K8s cluster:

[root@vms204 ~]# kubectl --kubeconfig=admin.conf get nodes

NAME STATUS ROLES AGE VERSION

vms201.rhce.cc Ready control-plane,master 10d v1.21.0

vms202.rhce.cc Ready <none> 10d v1.21.0

vms203.rhce.cc Ready <none> 10d v1.21.0

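The command line does not specify the cluster address, because admin.conf already contains the cluster information and the administrator's certificate. However, handing out admin.conf is a serious security risk because it grants far too many permissions. Instead, we can create a dedicated kubeconfig file for the client.

First, request a certificate on the master node. Create a private key named test.key: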

[root@vms201 security]# openssl genrsa -out test.key 2048

Generating RSA private key, 2048 bit long modulus

.................................+++

..............+++

e is 65537 (0x10001)

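Use the private key test.key to generate a certificate signing request, test.csr. CN=testuser is important because it becomes the user name. O=cka2021 becomes the user's group.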

[root@vms201 security]# openssl req -new -key test.key -out test.csr -subj "/CN=testuser/O=cka2021"

Base64-encode the certificate signing request:

[root@vms201 security]# cat test.csr | base64 | tr -d "\n"
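The `tr -d "\n"` step matters because GNU `base64` wraps its output at 76 characters, while the `request` field needs a single line. As an assumed Python equivalent, `base64.b64encode` never inserts line breaks. The decode helper mirrors the later `base64 -d` step that extracts the issued certificate. Both function names are hypothetical.

```python
import base64


def encode_csr(pem_bytes: bytes) -> str:
    """Equivalent of `base64 | tr -d "\\n"`: standard Base64 on one line."""
    return base64.b64encode(pem_bytes).decode("ascii")


def decode_certificate(b64_text: str) -> bytes:
    """Equivalent of `base64 -d` on the CSR's .status.certificate field."""
    return base64.b64decode(b64_text)


# Usage (not executed here): encode_csr(open("test.csr", "rb").read())
```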

Write the YAML file for the CertificateSigningRequest. The main change is the request: field, whose value is the base64 output from the previous step.

[root@vms201 security]# cat csr.yaml

apiVersion: certificates.k8s.io/v1beta1
kind: CertificateSigningRequest
metadata:
  name: testuser
spec:
  groups:
  - system:authenticated
  #signerName: kubernetes.io/legacy-aa   # note: this line is commented out
  request: LS0tLS1CRUdJTiBDRVJUSUZJQ0FURSBSRVFVRVNULS0tLS0KTUlJQ2FqQ0NBVklDQVFBd0pURVJNQThHQTFVRUF3d0lkR1Z6ZEhWelpYSXhFREFPQmdOVkJBb01CMk5yWVRJdwpNakV3Z2dFaU1BMEdDU3FHU0liM0RRRUJBUVVBQTRJQkR3QXdnZ0VLQW9JQkFRQ2M5NnVVNURoR2xJTkhtNWUrCmFHTDQzVWJmUFltYTVSUFFsZ3hza0VVTkp0ZG9pTnFHeXVPWW1TY0wvNGM4V2w5RkJPdjUwdVJoUjhBRmk2REcKL1dDV3dhbW1CMnFkd0VobXpBczBaVWlQRWdTaDZVT3hnTUxxcW5nakdhT1hZeWdmb2QxTFNweXZrbjFncG5oOQpaYllwUU9hSzJlSzJyampOUE9YRnFQN21BSm5wZlZ1NStvbFpseGZLelVWOGh3Z1FFUmkwRFZLY3FYVEpzYVQwCmNDL1VFR2R3N080N2VyWHp4SkYzN3V1NUtpbWpzYzZ6dGc0MGhvQW9XSyt6d0gyenArKzBWWUtZRlV3SUFoYXkKMG1weERCZ3hwZzlQU1RZSnlBZzNYcEpWbE5MM1BUUnhTVDY2WDJpcTRxSS85ZXNmZXU2a3ZCaEV5UW9FbTB3OApZaDJCQWdNQkFBR2dBREFOQmdrcWhraUc5dzBCQVFzRkFBT0NBUUVBWnFhOVlzVDVUYzdlaWN2c0xTVjRHQXNxClkzUy9QK3hNKzdIUzA0azAwMXgwV041SkJObmgrTk4zZTdZUXl0SnpTcUFROTZMT2wrTWJ3MEE1ZHpkenV5SDQKdHBDSUpSczBJU2orRTFpSEg5dmFBaXliNWVUYzhQZ0FyRkoydWREblZPenU4ckkwY1dlbzdKYzBCRWYyTUlzcwpzcWU3dVVWR3AzOWtoTWR1SDAyTEMvcEhjUk9nRnZJSFNYdm84bXdxWnZBZnZoeFpiZVJ3K0d5Y1JPQzdaZU8zCm82QStmdEJuRnphZWtCZkMvRW44UVgvcFJPcVZhM0ZqbE1RQlRPa211b2ZMaTBIQVJhWWo3Y3JJOW1QSVJxQlEKbVBsclN4SU00ZW9Hd0pEWDJXYWZFTzRSVWhxV2JGc20yZHFhV2hnQUNHc2dDVHl2Nk1pZ0dqdnRnTlhvQmc9PQotLS0tLUVORCBDRVJUSUZJQ0FURSBSRVFVRVNULS0tLS0K
  usages:
  - client auth

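Apply the YAML file to submit the request. Its status is Pending. (certificates.k8s.io/v1beta1 still works on this v1.21 cluster but is removed in v1.22. With certificates.k8s.io/v1, spec.signerName is required; for client certificates it is kubernetes.io/kube-apiserver-client.)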

[root@vms201 security]# kubectl apply -f csr.yaml

Warning: certificates.k8s.io/v1beta1 CertificateSigningRequest is deprecated in v1.19+, unavailable in v1.22+; use certificates.k8s.io/v1 CertificateSigningRequest

certificatesigningrequest.certificates.k8s.io/testuser created

[root@vms201 security]# kubectl get csr

NAME AGE SIGNERNAME REQUESTOR CONDITION

testuser 4s kubernetes.io/legacy-unknown kubernetes-admin Pending

Approve the certificate:

[root@vms201 security]# kubectl certificate approve testuser

certificatesigningrequest.certificates.k8s.io/testuser approved

Check the status of the request:

[root@vms201 security]# kubectl get csr

NAME AGE SIGNERNAME REQUESTOR CONDITION

testuser 111s kubernetes.io/legacy-unknown kubernetes-admin Approved,Issued

Export the issued certificate:

kubectl get csr/testuser -o jsonpath='{.status.certificate}' | base64 -d > testuser.crt

View the certificate:

[root@vms201 security]# cat testuser.crt

Copy the CA certificate:

[root@vms201 security]# cp /etc/kubernetes/pki/ca.crt .

[root@vms201 security]# ls

ca.crt csr.yaml test.csr test.key testuser.crt

Create the kubeconfig file. It contains three main sections: clusters, contexts (which associate a cluster with a user) and users. Start with the cluster section:

[root@vms201 security]# kubectl config --kubeconfig=kc1 set-cluster cluster1 --server=https://192.168.0.201:6443 --certificate-authority=ca.crt --embed-certs=true

Cluster "cluster1" set.

[root@vms201 security]# cat kc1

apiVersion: v1
clusters:
- cluster:
    certificate-authority-data: xxxxxx
    server: https://192.168.0.201:6443
  name: cluster1
contexts: null
current-context: ""
kind: Config
preferences: {}
users: null

Here --embed-certs=true means the certificate contents are written into this kubeconfig file. Next, set the user section:

[root@vms201 security]# kubectl config --kubeconfig=kc1 set-credentials testuser --client-certificate=testuser.crt --client-key=test.key --embed-certs=true

User "testuser" set.

[root@vms201 security]# cat kc1

apiVersion: v1
clusters:
- cluster:
    certificate-authority-data: xxxxxxx
    server: https://192.168.0.201:6443
  name: cluster1
contexts: null
current-context: ""
kind: Config
preferences: {}
users:
- name: testuser
  user:
    client-certificate-data: yyyyy
    client-key-data: zzzzzz

Set the context section:

[root@vms201 security]# kubectl config --kubeconfig=kc1 set-context context1 --cluster=cluster1 --namespace=default --user=testuser

Context "context1" created.

Edit kc1 and set current-context to "context1". (Alternatively, run kubectl config --kubeconfig=kc1 use-context context1.)

Copy the kubeconfig file to vms204:
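The structure these kubectl config commands build, including the current-context edit, can be sketched in Python. `build_kubeconfig` and `resolve` are hypothetical helpers. The layout follows the kc1 output shown above, and the example values (server address, names) are taken from this section.

```python
import base64
import json


def _b64(data: bytes) -> str:
    return base64.b64encode(data).decode("ascii")


def build_kubeconfig(server: str, ca_pem: bytes, cert_pem: bytes, key_pem: bytes,
                     cluster: str = "cluster1", user: str = "testuser",
                     context: str = "context1", namespace: str = "default") -> dict:
    """Assemble the same clusters/users/contexts layout as the kubectl config commands."""
    return {
        "apiVersion": "v1",
        "kind": "Config",
        "preferences": {},
        # --embed-certs=true: PEM data is stored base64-encoded in the *-data fields.
        "clusters": [{"name": cluster, "cluster": {
            "server": server, "certificate-authority-data": _b64(ca_pem)}}],
        "users": [{"name": user, "user": {
            "client-certificate-data": _b64(cert_pem),
            "client-key-data": _b64(key_pem)}}],
        # A context ties one cluster to one user, plus a default namespace.
        "contexts": [{"name": context, "context": {
            "cluster": cluster, "user": user, "namespace": namespace}}],
        # Without this, kubectl does not know which context to use unless --context is given.
        "current-context": context,
    }


def resolve(cfg: dict):
    """Follow current-context to the server URL and the user's credentials."""
    ctx = next(c["context"] for c in cfg["contexts"] if c["name"] == cfg["current-context"])
    cluster = next(c["cluster"] for c in cfg["clusters"] if c["name"] == ctx["cluster"])
    creds = next(u["user"] for u in cfg["users"] if u["name"] == ctx["user"])
    return cluster["server"], creds
```

The sketch assumes, without having verified it here, that writing `json.dumps(build_kubeconfig(...))` to a file produces a kubeconfig kubectl can read, on the grounds that its YAML loader also accepts JSON.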

[root@vms201 security]# scp kc1 192.168.0.204:~

root@192.168.0.204's password:

kc1 100% 5516 4.8MB/s 00:00

On vms204:

[root@vms204 ~]# kubectl --kubeconfig=kc1 get nodes

Error from server (Forbidden): nodes is forbidden: User "testuser" cannot list resource "nodes" in API group "" at the cluster scope

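Logging in with kc1 succeeds, but the permissions are insufficient. On the master, grant permissions to testuser. Because kc1 contains testuser's certificate and private key, anyone using kc1 acts as testuser and has testuser's permissions. (Authorization is covered in detail in section II.)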

[root@vms201 security]# kubectl create clusterrolebinding test1 --clusterrole=cluster-admin --user=testuser

clusterrolebinding.rbac.authorization.k8s.io/test1 created

Back on vms204, check again:

[root@vms204 ~]# export KUBECONFIG=kc1

[root@vms204 ~]# kubectl get nodes

NAME STATUS ROLES AGE VERSION

vms201.rhce.cc Ready control-plane,master 10d v1.21.0

vms202.rhce.cc Ready <none> 10d v1.21.0

vms203.rhce.cc Ready <none> 10d v1.21.0

Delete the binding:

[root@vms201 security]# kubectl delete clusterrolebinding test1

clusterrolebinding.rbac.authorization.k8s.io "test1" deleted

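II. Authorization:

As the authentication examples show, a user who authenticates successfully still cannot do anything without permissions, so users must be authorized.

Authorization modes:

- --authorization-mode=Node,RBAC: the default (as configured by kubeadm);

- --authorization-mode=AlwaysAllow: allow all requests;

- --authorization-mode=AlwaysDeny: deny all requests (does not apply to the cluster's own administrator);

- --authorization-mode=ABAC: Attribute-Based Access Control; very inflexible and now largely abandoned;

- --authorization-mode=RBAC: Role-Based Access Control (the recommended mode);

- --authorization-mode=Node: the Node authorizer is mainly used when the kubelet on each worker node accesses the apiserver; other requests are generally authorized by RBAC.

The authorization mode can be viewed and changed in /etc/kubernetes/manifests/kube-apiserver.yaml.

To view the permissions granted by the built-in admin ClusterRole: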

kubectl describe clusterrole admin

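RBAC authorization overview:

Permissions are not granted to users directly, because that would be inconvenient. With RBAC, permissions are collected in a Role, which is namespaced. The Role is then bound to a user through a RoleBinding, and the user obtains the Role's permissions.

Because a Role is namespaced, the bound user has its permissions only in that namespace. A ClusterRole, by contrast, is cluster-wide and does not belong to any namespace. A ClusterRole can be bound to a user with RoleBindings in several namespaces. The user then has the ClusterRole's permissions only in the namespaces where those RoleBindings exist.

If a ClusterRole is bound to a user with a ClusterRoleBinding, the user has the ClusterRole's permissions in every namespace.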
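The scoping rules above can be summarized in a small Python sketch. `Binding` and `roles_in_effect` are hypothetical names. The model ignores groups, ServiceAccounts and cluster-scoped resources such as nodes, which only a ClusterRoleBinding can grant. It only shows which roles apply to a user in a given namespace.

```python
from dataclasses import dataclass
from typing import Iterable, Optional, Set


@dataclass(frozen=True)
class Binding:
    kind: str                        # "RoleBinding" or "ClusterRoleBinding"
    role_kind: str                   # "Role" or "ClusterRole"
    role_name: str
    user: str
    namespace: Optional[str] = None  # only RoleBindings live in a namespace


def roles_in_effect(bindings: Iterable[Binding], user: str, namespace: str) -> Set[str]:
    """Names of the roles whose rules apply to `user` in `namespace`."""
    roles = set()
    for b in bindings:
        if b.user != user:
            continue
        if b.kind == "ClusterRoleBinding":
            # Cluster-wide: the ClusterRole applies in every namespace.
            roles.add(b.role_name)
        elif b.kind == "RoleBinding" and b.namespace == namespace:
            # Namespaced: a Role or ClusterRole applies only in the binding's namespace.
            roles.add(b.role_name)
    return roles
```

In the real API, a ClusterRoleBinding can only reference a ClusterRole, whereas a RoleBinding may reference either a Role in its own namespace or a ClusterRole.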

Method 1: Role experiment

Define the role and generate its YAML file:

[root@vms201 security]# kubectl create role role1 --verb=get,list --resource=pods --dry-run=client -o yaml > role1.yaml

Create role1 from the YAML file:

[root@vms201 security]# kubectl apply -f role1.yaml

role.rbac.authorization.k8s.io/role1 created

[root@vms201 security]# kubectl get role

NAME CREATED AT

role1 2021-07-12T03:51:04Z

View the details: role1 grants get and list on pods.

[root@vms201 security]# kubectl describe role role1

Name: role1

Labels: <none>

Annotations: <none>

PolicyRule:

Resources Non-Resource URLs Resource Names Verbs

--------- ----------------- -------------- -----

pods [] [] [get list]

Create a RoleBinding that binds role1 to testuser:

[root@vms201 security]# kubectl create rolebinding rbind1 --role=role1 --user=testuser

rolebinding.rbac.authorization.k8s.io/rbind1 created

[root@vms201 security]# kubectl get rolebinding

NAME ROLE AGE

rbind1 Role/role1 17s

On vms204, list the pods in the namespace where the permission was granted (security). The pods are visible:

[root@vms204 ~]# kubectl get pods -n security

NAME READY STATUS RESTARTS AGE

pod1 1/1 Running 0 9s

If we omit -n security, kubectl uses the default namespace set in the context (default), and the request is rejected for lack of permissions:

[root@vms204 ~]# kubectl --kubeconfig=kc1 get pods

Error from server (Forbidden): pods is forbidden: User "testuser" cannot list resource "pods" in API group "" in the namespace "default"

Creating a pod fails because the role has no create permission:

[root@vms204 ~]# kubectl run -it nginx --image=nginx -n security

Error from server (Forbidden): pods is forbidden: User "testuser" cannot create resource "pods" in API group "" in the namespace "security"

Listing services fails too, because the role grants no permissions on services:

[root@vms204 ~]# kubectl get svc -n security

Error from server (Forbidden): services is forbidden: User "testuser" cannot list resource "services" in API group "" in the namespace "security"

Back on the master, edit the role file to grant get, list, create and delete on both pods and services:

[root@vms201 security]# cat role1.yaml

apiVersion: rbac.authorization.k8s.io/v1
kind: Role
metadata:
  creationTimestamp: null
  name: role1
rules:
- apiGroups:
  - ""
  resources:
  - pods
  - services
  verbs:
  - get
  - list
  - create
  - delete

[root@vms201 security]# kubectl apply -f role1.yaml

role.rbac.authorization.k8s.io/role1 configured

Test again on vms204:

[root@vms204 ~]# kubectl expose --name=svc1 pod pod1 --port=80 --target-port=80 --selector=run=pod1 -n security

service/svc1 exposed

[root@vms204 ~]# kubectl get svc -n security

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

svc1 ClusterIP 10.100.144.246 <none> 80/TCP 13s

[root@vms204 ~]# kubectl delete pod pod1 -n security

pod "pod1" deleted

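Deployments need special handling. Their apiVersion is apps/v1 (API group apps, version v1). Pods and services use apiVersion v1, which belongs to the core API group and has no group name. So a role that covers deployments must be written as follows: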

[root@vms201 security]# cat role1.yaml

apiVersion: rbac.authorization.k8s.io/v1
kind: Role
metadata:
  creationTimestamp: null
  name: role1
rules:
- apiGroups:
  - "apps"
  - ""
  resources:
  - pods
  - services
  - deployments
  verbs:
  - get
  - list
  - create
  - delete

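The deployment's API group "apps" is added under apiGroups ("" denotes the core group). In this role, pods, services and deployments all get the get, list, create and delete permissions. To give different resources different permissions, write the role as follows: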

[root@vms201 security]# cat role1.yaml

apiVersion: rbac.authorization.k8s.io/v1
kind: Role
metadata:
  creationTimestamp: null
  name: role1
rules:
- apiGroups:
  - "apps"
  resources:
  - deployments
  verbs:
  - list
  - get
  - create
- apiGroups:
  - ""
  resources:
  - pods
  - services
  verbs:
  - get
  - list
  - create
  - delete

This file gives deployments only list, create and get, while pods and services keep all four permissions from before.
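RBAC rule matching can be pictured with a small Python sketch. A request is allowed when any rule lists its API group (`""` for the core group, `apps` for deployments), its resource and its verb. Subresources such as `deployments/scale` are separate resource names, which is why scaling later needs its own rule. `PolicyRule`, `rule_allows` and `role_allows` are hypothetical names, and the real authorizer also handles resourceNames and non-resource URLs.

```python
from dataclasses import dataclass
from typing import Iterable, Tuple


@dataclass(frozen=True)
class PolicyRule:
    api_groups: Tuple[str, ...]
    resources: Tuple[str, ...]
    verbs: Tuple[str, ...]


def rule_allows(rule: PolicyRule, group: str, resource: str, verb: str) -> bool:
    """True if this rule covers `verb` on group/resource ("*" acts as a wildcard)."""
    def hit(values, value):
        return "*" in values or value in values
    return hit(rule.api_groups, group) and hit(rule.resources, resource) and hit(rule.verbs, verb)


def role_allows(rules: Iterable[PolicyRule], group: str, resource: str, verb: str) -> bool:
    # RBAC is purely additive: one matching rule is enough, and there are no deny rules.
    return any(rule_allows(r, group, resource, verb) for r in rules)


# role1 from the YAML above, expressed as rules.
role1 = [
    PolicyRule(("apps",), ("deployments",), ("list", "get", "create")),
    PolicyRule(("",), ("pods", "services"), ("get", "list", "create", "delete")),
]
```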

After applying the role, test deployments on vms204:

[root@vms204 ~]# kubectl apply -f web1.yaml -n security

deployment.apps/web1 created

[root@vms204 ~]# kubectl get deployments.apps -n security

NAME READY UP-TO-DATE AVAILABLE AGE

web1 3/3 3 3 44s

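Scaling fails, however: the role lacks the patch permission on the deployments/scale subresource. Adding a rule to role1.yaml that grants patch on deployments/scale in the apps group fixes this: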

[root@vms204 ~]# kubectl scale deployment web1 --replicas=4 -n security

Error from server (Forbidden): deployments.apps "web1" is forbidden: User "testuser" cannot patch resource "deployments/scale" in API group "apps" in the namespace "security"

Clean up after the test:

[root@vms201 security]# kubectl delete -f role1.yaml

role.rbac.authorization.k8s.io "role1" deleted

[root@vms201 security]# kubectl delete deployments.apps web1

deployment.apps "web1" deleted

[root@vms201 security]# kubectl delete rolebindings rbind1

rolebinding.rbac.authorization.k8s.io "rbind1" deleted

Method 2: ClusterRole experiment

Create the ClusterRole YAML file on the master. The syntax is the same as for a Role; only kind changes to ClusterRole.

[root@vms201 security]# kubectl create clusterrole crole1 --verb=get,list,create,delete --resource=deploy,pod,svc --dry-run=client -o yaml > crole1.yaml

[root@vms201 security]# cat crole1.yaml

apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRole
metadata:
  creationTimestamp: null
  name: crole1
rules:
- apiGroups:
  - ""
  resources:
  - pods
  - services
  verbs:
  - get
  - list
  - create
  - delete
- apiGroups:
  - apps
  resources:
  - deployments
  verbs:
  - get
  - list
  - create
  - delete

Create the ClusterRole and view it:

[root@vms201 security]# kubectl apply -f crole1.yaml

clusterrole.rbac.authorization.k8s.io/crole1 created

[root@vms201 security]# kubectl get clusterrole | grep crole1

crole1 2021-07-12T05:00:09Z

Create a RoleBinding that references the ClusterRole:

[root@vms201 security]# kubectl create rolebinding rbind1 --clusterrole=crole1 --user=testuser

rolebinding.rbac.authorization.k8s.io/rbind1 created

Verify on vms204: the permissions take effect in the RoleBinding's namespace.

[root@vms204 ~]# kubectl get deployments.apps -n security

No resources found in security namespace.

[root@vms204 ~]# kubectl get svc -n security

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

svc1 ClusterIP 10.100.144.246 <none> 80/TCP 47m

[root@vms204 ~]# kubectl get pods -n security

NAME READY STATUS RESTARTS AGE

pod1 1/1 Running 0 49m

Outside the RoleBinding's namespace, they do not apply:

[root@vms204 ~]# kubectl get pods

Error from server (Forbidden): pods is forbidden: User "testuser" cannot list resource "pods" in API group "" in the namespace "default"

On the master, delete the RoleBinding rbind1 and create a ClusterRoleBinding instead:

[root@vms201 security]# kubectl delete rolebindings rbind1

rolebinding.rbac.authorization.k8s.io "rbind1" deleted

[root@vms201 security]# kubectl create clusterrolebinding cbind1 --clusterrole=crole1 --user=testuser

clusterrolebinding.rbac.authorization.k8s.io/cbind1 created

Test again on vms204: testuser now has the permissions in every namespace.

[root@vms204 ~]# kubectl get pods

NAME READY STATUS RESTARTS AGE

pod1-vms202.rhce.cc 1/1 Running 20 7d21h

[root@vms204 ~]# kubectl get pods -n kube-system

NAME READY STATUS RESTARTS AGE

calico-kube-controllers-78d6f96c7b-h6p86 1/1 Running 23 6d1h

calico-node-hstwf 1/1 Running 22 10d

calico-node-ldfhc 1/1 Running 28 10d

calico-node-sdvzv 1/1 Running 29 10d

coredns-545d6fc579-596hb 1/1 Running 22 10d

coredns-545d6fc579-7mb9v 1/1 Running 22 10d

etcd-vms201.rhce.cc 1/1 Running 25 10d

kube-apiserver-vms201.rhce.cc 1/1 Running 1 13h

kube-controller-manager-vms201.rhce.cc 1/1 Running 27 10d

kube-proxy-28x9h 1/1 Running 22 10d

kube-proxy-7qzdd 1/1 Running 27 10d

kube-proxy-m9c2c 1/1 Running 29 10d

kube-scheduler-vms201.rhce.cc 1/1 Running 26 10d

metrics-server-bcfb98c76-nqc2t 1/1 Running 23 6d1h

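III. ServiceAccount (sa)

There are two types of accounts:

- user account (ua): used by people to log in to the system remotely

- service account (sa)

A ServiceAccount lets processes inside a Pod call the Kubernetes API or other external services. Consider a web tool for managing K8s: it runs as a program in a Pod, and that program needs permissions to manage the cluster. We can create an SA, grant it the required permissions and associate it with the program. The program then has the SA's permissions and can manage K8s.

Create sa1 on the master: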

[root@vms201 security]# kubectl create sa sa1

serviceaccount/sa1 created

[root@vms201 security]# kubectl get secrets | grep sa1

sa1-token-98889 kubernetes.io/service-account-token 3 32s

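Each new SA gets a Secret named <sa-name>-token-xxxxx, and each such Secret contains a token. (This is the behavior of the v1.21 cluster used here. Since v1.24 these Secrets are no longer created automatically, and a token can be requested with kubectl create token sa1.)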

[root@vms201 security]# kubectl describe secrets sa1-token-98889

Name: sa1-token-98889

Namespace: security

Labels: <none>

Annotations: kubernetes.io/service-account.name: sa1

kubernetes.io/service-account.uid: b6022cd9-15c4-4937-a0a8-f8cd4961bf95

Type: kubernetes.io/service-account-token

Data

====

ca.crt: 1066 bytes

namespace: 8 bytes

token: eyJhbGciOiJSUzI1NiIsImtpZCI6Imx1Y2xIXzRXQ2NjUGVpV1VXVFFxTnNsWHk3MVU5dklhX0k0TkpkeHY5UTgifQ.eyJpc3MiOiJrdWJlcm5ldGVzL3NlcnZpY2VhY2NvdW50Iiwia3ViZXJuZXRlcy5pby9zZXJ2aWNlYWNjb3VudC9uYW1lc3BhY2UiOiJzZWN1cml0eSIsImt1YmVybmV0ZXMuaW8vc2VydmljZWFjY291bnQvc2VjcmV0Lm5hbWUiOiJzYTEtdG9rZW4tOTg4ODkiLCJrdWJlcm5ldGVzLmlvL3NlcnZpY2VhY2NvdW50L3NlcnZpY2UtYWNjb3VudC5uYW1lIjoic2ExIiwia3ViZXJuZXRlcy5pby9zZXJ2aWNlYWNjb3VudC9zZXJ2aWNlLWFjY291bnQudWlkIjoiYjYwMjJjZDktMTVjNC00OTM3LWEwYTgtZjhjZDQ5NjFiZjk1Iiwic3ViIjoic3lzdGVtOnNlcnZpY2VhY2NvdW50OnNlY3VyaXR5OnNhMSJ9.sNjctDHDFJ6W3X-v5-0--QOXqoAMS13cd2DaZQ6juHFbEPA9AUKuuD4JRU4CeNgSYNB8TZRnlfGrE5LMzefuKDda6EpinoO4_Kjwd9a5TcjgMTp3AHXOdI4pfOrVUWJzyNZ_BfRJ0777JyEpaUlWicgDDTBmEaID7bzsx0ATtGQG-2ySi9VCv_3fLRBPLBDtww0V6urb1jeMXqPZNNB6IUnsG0RzUiM02RkMy6V-O2_iKJGuOxcuC9-5lEEhEMdMvlYCC0El1U1xAc9AM9mpX8apL9yyw2b4q6PGd0YCtmOWsGgF2PKUEk4jkOt1dXmYGoeJwtnadESdMOhlrvGaFg
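The token shown above is a JSON Web Token: three base64url segments separated by dots, with JSON claims in the middle one. Decoding that middle segment, without verifying the signature, reveals a `sub` claim of the form `system:serviceaccount:<namespace>:<name>`. That is the user name RBAC sees, which is why the binding below refers to the SA as `security:sa1`. A minimal Python sketch follows; `jwt_claims` and `service_account_username` are hypothetical helpers.

```python
import base64
import json


def jwt_claims(token: str) -> dict:
    """Decode (NOT verify) the payload segment of a JWT."""
    payload = token.split(".")[1]
    payload += "=" * (-len(payload) % 4)   # base64url in JWTs drops the "=" padding
    return json.loads(base64.urlsafe_b64decode(payload))


def service_account_username(namespace: str, name: str) -> str:
    """User name under which RBAC evaluates a ServiceAccount token."""
    return f"system:serviceaccount:{namespace}:{name}"
```

Decoding is only useful for inspection. The apiserver verifies the signature, so a hand-edited token is rejected.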

Syntax for granting permissions to an SA:

kubectl create clusterrolebinding <binding-name> --clusterrole=<role-name> --serviceaccount=<namespace>:<sa-name>

Grant the permissions. Requests made with the token in sa1's Secret then carry them:

[root@vms201 security]# kubectl create clusterrolebinding cbind2 --clusterrole=cluster-admin --serviceaccount=security:sa1

clusterrolebinding.rbac.authorization.k8s.io/cbind2 created

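Once sa1 is authorized, we can create a pod that uses its permissions by setting serviceAccount: sa1 (a deprecated alias of serviceAccountName). In essence, this gives sa1's token to the pod.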

[root@vms201 security]# cat pod1.yaml

apiVersion: v1
kind: Pod
metadata:
  creationTimestamp: null
  labels:
    run: pod1
  name: pod1
spec:
  terminationGracePeriodSeconds: 0
  containers:
  - image: nginx
    imagePullPolicy: IfNotPresent
    name: pod1
    resources: {}
    ports:
    - name: http
      containerPort: 80
      protocol: TCP
      hostPort: 80
  serviceAccount: sa1
  dnsPolicy: ClusterFirst
  restartPolicy: Always

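IV. Managing K8s with Kuboard

Most K8s operations are done with the kubectl command. A dashboard tool can greatly simplify K8s management; this chapter uses Kuboard.

Kuboard is a free dashboard tool that is easy to install. Once installed, day-to-day K8s management can be done from its web UI.

Installation steps:

1. Make sure metrics-server is installed.

2. Download the YAML file: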

wget https://kuboard.cn/install-script/kuboard.yaml

3. Pull the required image:

[root@vms201 security]# grep image kuboard.yaml

image: eipwork/kuboard:latest

imagePullPolicy: Always

Pull the image eipwork/kuboard:latest on all three machines:

docker pull eipwork/kuboard:latest

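4. Modify the downloaded kuboard.yaml and apply it:

The file defines the full set of objects, including the SAs and ClusterRoleBindings. Change imagePullPolicy to IfNotPresent so that the locally pulled image is used.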

[root@vms201 security]# kubectl create -f kuboard.yaml

deployment.apps/kuboard created

service/kuboard created

serviceaccount/kuboard-user created

clusterrolebinding.rbac.authorization.k8s.io/kuboard-user created

serviceaccount/kuboard-viewer created

clusterrolebinding.rbac.authorization.k8s.io/kuboard-viewer created

After creation, check the created objects:

[root@vms201 security]# kubectl get pods -n kube-system | grep kuboard

kuboard-78dccb7d9f-r6sql 1/1 Running 0 118s

[root@vms201 security]# kubectl get svc -n kube-system | grep kuboard

kuboard NodePort 10.101.126.151 <none> 80:32567/TCP 2m4s

[root@vms201 security]# kubectl get deploy -n kube-system | grep kuboard

kuboard 1/1 1 1 2m15s

[root@vms201 security]# kubectl get sa -n kube-system | grep kuboard

kuboard-user 1 2m36s

kuboard-viewer 1 2m36s

[root@vms201 security]# kubectl get clusterrolebindings -n kube-system | grep kuboard

kuboard-user ClusterRole/cluster-admin 3m1s

kuboard-viewer ClusterRole/view 3m1s

Testing:

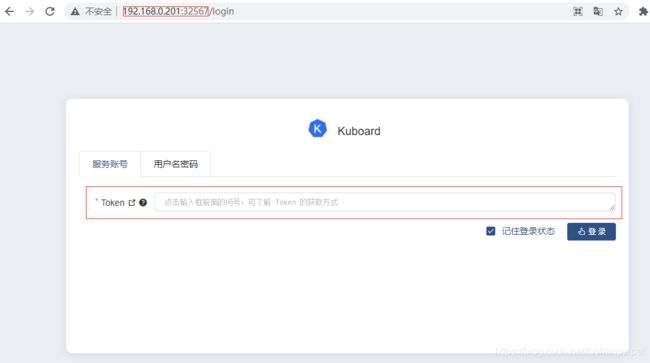

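From a browser on the physical host outside the cluster, open the Service using the IP address of any node in the cluster and port 32567. The following page appears:

Log in with a token. On the master, get the token with the following command and paste it into the login page: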

[root@vms201 security]# echo $(kubectl -n kube-system get secret $(kubectl -n kube-system get secret | grep kuboard-user | awk '{print $1}') -o go-template='{{.data.token}}' | base64 -d)

eyJhbGciOiJSUzI1NiIsImtpZCI6Imx1Y2xIXzRXQ2NjUGVpV1VXVFFxTnNsWHk3MVU5dklhX0k0TkpkeHY5UTgifQ.eyJpc3MiOiJrdWJlcm5ldGVzL3NlcnZpY2VhY2NvdW50Iiwia3ViZXJuZXRlcy5pby9zZXJ2aWNlYWNjb3VudC9uYW1lc3BhY2UiOiJrdWJlLXN5c3RlbSIsImt1YmVybmV0ZXMuaW8vc2VydmljZWFjY291bnQvc2VjcmV0Lm5hbWUiOiJrdWJvYXJkLXVzZXItdG9rZW4tcmg2enoiLCJrdWJlcm5ldGVzLmlvL3NlcnZpY2VhY2NvdW50L3NlcnZpY2UtYWNjb3VudC5uYW1lIjoia3Vib2FyZC11c2VyIiwia3ViZXJuZXRlcy5pby9zZXJ2aWNlYWNjb3VudC9zZXJ2aWNlLWFjY291bnQudWlkIjoiYzEyMTQxYWEtNmNmNi00YTViLWEwYmMtN2YwMTg3MGZiYjhlIiwic3ViIjoic3lzdGVtOnNlcnZpY2VhY2NvdW50Omt1YmUtc3lzdGVtOmt1Ym9hcmQtdXNlciJ9.go5c7ddngXn4EpCgmpjX3LVrQJ7pOdJIKMP5lFyoflcdXNY0NrJT6TEIRKCt-z7Av-dTRD4vmXAXCiOuILkJeeaItxo2BY_sNQI-lyZnMiXraPhnyRUEoA0BCTodjP4Lc7Vxf0e0L6406SS6O0M9_DRXLe4KgwTmZLVXAiBMojlVuA8jtDbKvGNffZS2C57JjN25rAyaU-ac9YK3Jd1-jK7SVxsLKHSKUgfQkrIgDXe_tDrmWFKHkzSOLd8EaOvHLEltE0Jw4vZxGQxGqttaMIhMQ1ZbxGfjiON1ar68guGGpBnLdvq2MTkDJhqCJt-Ovp7y3NVpbqy7Qf2fEjj1kQ

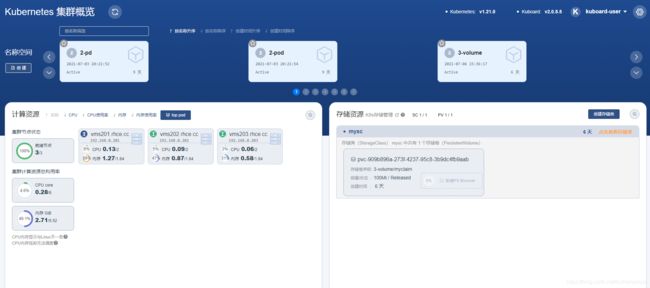

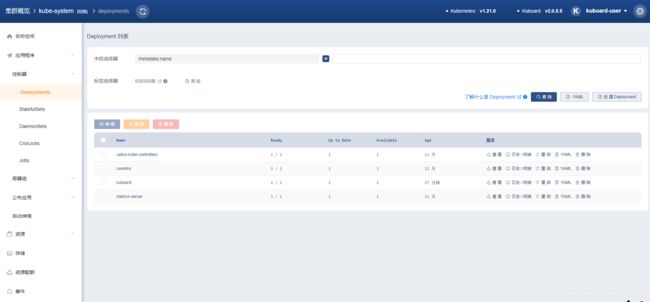

This opens the following page:

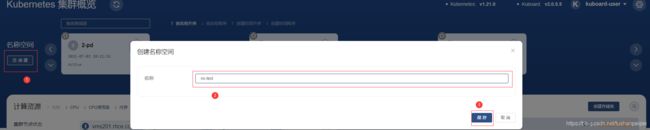

For example, create a namespace from the dashboard:

After applying, the new namespace appears:

Click the namespace to manage its resources:

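V. Resource Limits

K8s can constrain the containers in a Pod along two dimensions:

- Resource requests: the minimum resources the Pod needs. The Pod runs only on a node that can satisfy them. For example, a Pod might need at least 2G of memory and 1 CPU core.

- Resource limits: a Pod's memory usage may grow while it runs, and the limit is the maximum it may use.

Preparation:

Create a test pod: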

[root@vms201 security]# cat testpod.yaml

apiVersion: v1
kind: Pod
metadata:
  creationTimestamp: null
  labels:
    run: podresource
  name: podresource
spec:
  terminationGracePeriodSeconds: 0
  containers:
  - image: centos
    command: ["sh","-c","sleep 10000000"]
    imagePullPolicy: IfNotPresent
    name: podresource
    resources: {}
  dnsPolicy: ClusterFirst
  restartPolicy: Always
status: {}

[root@vms201 security]# kubectl apply -f testpod.yaml

pod/podresource created

Copy the memory test tool memload into the container and install it:

[root@vms201 security]# kubectl cp memload-7.0-1.r29766.x86_64.rpm podresource:/opt

[root@vms201 security]# kubectl exec -it podresource -- bash

[root@podresource /]# rpm -ivh /opt/memload-7.0-1.r29766.x86_64.rpm

Verifying... ################################# [100%]

Preparing... ################################# [100%]

Updating / installing...

1:memload-7.0-1.r29766 ################################# [100%]

Allocate 1024 MiB of memory:

[root@podresource /]# memload 1024

Attempting to allocate 1024 Mebibytes of resident memory...

The pod is running on vms203:

[root@vms201 security]# kubectl get pods -o wide

NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES

podresource 1/1 Running 0 3m14s 10.244.185.115 vms203.rhce.cc <none> <none>

On vms203, check the memory: used memory has risen to 1527M, and only 184M is available.

[root@vms203 ~]# free -m

total used free shared buff/cache available

Mem: 1984 1527 88 17 368 184

Swap: 0 0 0

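How, then, can we limit a container's memory usage and keep the node's resource consumption within a reasonable range? This is what resource control is for.

1. Limiting resources with the resources field:

Edit the pod's YAML file: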

[root@vms201 security]# cat testpod.yaml

apiVersion: v1
kind: Pod
metadata:
  creationTimestamp: null
  labels:
    run: podresource
  name: podresource
spec:
  terminationGracePeriodSeconds: 0
  containers:
  - image: centos
    command: ["sh","-c","sleep 10000000"]
    imagePullPolicy: IfNotPresent
    name: podresource
    resources:
      requests:
        memory: 4Gi
  dnsPolicy: ClusterFirst
  restartPolicy: Always
status: {}

Under resources, require at least 4Gi of memory. After applying, the pod stays Pending:

[root@vms201 security]# kubectl apply -f testpod.yaml

pod/podresource created

[root@vms201 security]# kubectl get pods

NAME READY STATUS RESTARTS AGE

podresource 0/1 Pending 0 27s

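This is because the nodes have only about 2G of memory each, so none can satisfy the request. Next, modify the YAML file to request 100m of CPU (0.1 core) and to limit memory to 512Mi: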
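The scheduler compares requests, not limits, against a node's free allocatable capacity. That is why the 4Gi request stayed Pending on 2G nodes. The sketch below parses Kubernetes-style quantities (`100m`, `512Mi`, `4Gi`) and performs that comparison. It is a simplification under stated assumptions: only common suffixes are handled (no exponent forms), the node figures in the test are assumed, and the real scheduler checks much more. `parse_quantity` and `fits` are hypothetical names.

```python
from decimal import Decimal

# Suffixes used in Kubernetes quantities: binary (Ki, Mi, Gi, Ti),
# decimal (k, M, G, T) and milli ("m", mostly seen with CPU).
SUFFIXES = {
    "Ki": 2**10, "Mi": 2**20, "Gi": 2**30, "Ti": 2**40,
    "k": 10**3, "M": 10**6, "G": 10**9, "T": 10**12,
    "m": Decimal("0.001"),
}


def parse_quantity(q: str) -> Decimal:
    """Convert '512Mi', '4Gi', '100m' or '2' to a plain number (bytes or cores)."""
    for suffix, factor in SUFFIXES.items():
        if q.endswith(suffix):
            return Decimal(q[: -len(suffix)]) * factor
    return Decimal(q)


def fits(allocatable: dict, already_requested: dict, pod_requests: dict) -> bool:
    """Scheduler-style check: each requested amount must fit in what is left on the node."""
    for resource, amount in pod_requests.items():
        free = (parse_quantity(allocatable.get(resource, "0"))
                - parse_quantity(already_requested.get(resource, "0")))
        if parse_quantity(amount) > free:
            return False
    return True
```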

[root@vms201 security]# cat testpod.yaml

apiVersion: v1
kind: Pod
metadata:
  creationTimestamp: null
  labels:
    run: podresource
  name: podresource
spec:
  terminationGracePeriodSeconds: 0
  containers:
  - image: centos
    command: ["sh","-c","sleep 10000000"]
    imagePullPolicy: IfNotPresent
    name: podresource
    resources:
      requests:
        cpu: 100m
      limits:
        memory: 512Mi
  dnsPolicy: ClusterFirst
  restartPolicy: Always
status: {}

[root@vms201 security]# kubectl apply -f testpod.yaml

pod/podresource created

Test with the memory tool in the same way:

[root@podresource /]# memload 1024

Attempting to allocate 1024 Mebibytes of resident memory...

Killed

The process tried to use more than 512Mi of memory, so it was OOM-killed. Allocating 300M, by contrast, works normally:

[root@podresource /]# memload 300

Attempting to allocate 300 Mebibytes of resident memory...

2. Limiting resources with a LimitRange:

Prepare the YAML file (a template can be found by searching the official website for limit.yaml):

[root@vms201 security]# cat limit.yaml

apiVersion: v1
kind: LimitRange
metadata:
  name: mem-limit-range
spec:
  limits:
  - max:
      memory: 512Mi
    min:
      memory: 256Mi
    type: Container

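This sets the maximum memory per container to 512Mi and the minimum to 256Mi, and applies to the current namespace, security. Because no defaults are specified, the max (512Mi) is also used as the default request and limit, as the Default Request and Default Limit columns show. Apply it and check: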

[root@vms201 security]# kubectl apply -f limit.yaml

limitrange/mem-limit-range created

[root@vms201 security]# kubectl describe -f limit.yaml

Name: mem-limit-range

Namespace: security

Type Resource Min Max Default Request Default Limit Max Limit/Request Ratio

---- -------- --- --- --------------- ------------- -----------------------

Container memory 256Mi 512Mi 512Mi 512Mi -

Create the test pod without any resource settings:

[root@vms201 security]# cat testpod.yaml

apiVersion: v1
kind: Pod
metadata:
  creationTimestamp: null
  labels:
    run: podresource
  name: podresource
spec:
  terminationGracePeriodSeconds: 0
  containers:
  - image: centos
    command: ["sh","-c","sleep 10000000"]
    imagePullPolicy: IfNotPresent
    name: podresource
    resources: {}
  dnsPolicy: ClusterFirst
  restartPolicy: Always
status: {}

[root@vms201 security]# kubectl apply -f testpod.yaml

pod/podresource created

Allocate 1024M in the same way and check the result:

[root@podresource /]# memload 1024

Attempting to allocate 1024 Mebibytes of resident memory...

Killed

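The allocation fails. The pod declared no limit, so the LimitRange applied its default limit of 512Mi, and the process was killed when it exceeded that limit.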

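3. Using both a LimitRange and the resources field

If a container does not declare requests and limits in resources, the values from the LimitRange are used.

If a container's resources declare a request or limit below the LimitRange's min or above its max, the pod cannot be created. In addition, a container's request may never exceed its limit.

If the values in resources fall within the LimitRange, the pod is created and the values from resources are applied.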
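The three rules above, plus the defaulting seen earlier, can be sketched for a single resource: memory, in MiB. This is a simplified model under stated assumptions. `apply_defaults` and `violations` are hypothetical helpers. The step that copies a limit into a missing request mirrors Kubernetes API defaulting. The request-versus-limit check really belongs to Pod validation rather than to the LimitRange itself.

```python
from typing import List, Optional, Tuple


def apply_defaults(request: Optional[int], limit: Optional[int],
                   default_request: int, default_limit: int) -> Tuple[int, int]:
    """Fill in missing memory request/limit (MiB) the way this section describes."""
    # A container that sets only a limit gets that limit as its request.
    if request is None and limit is not None:
        request = limit
    # The LimitRange defaults fill in whatever is still missing.
    if limit is None:
        limit = default_limit
    if request is None:
        request = default_request
    return request, limit


def violations(request: int, limit: int, lr_min: int, lr_max: int) -> List[str]:
    """Reasons the pod would be rejected; an empty list means it is admitted."""
    problems = []
    if request < lr_min:
        problems.append(f"request {request}Mi < min {lr_min}Mi")
    if limit > lr_max:
        problems.append(f"limit {limit}Mi > max {lr_max}Mi")
    if request > limit:
        problems.append(f"request {request}Mi > limit {limit}Mi")
    return problems
```

With min 256, max 512 and both defaults at 512 (the values from the describe output above), a pod without resources ends up with a 512Mi limit. A pod with a 300Mi request and 500Mi limit passes. A 1024Mi limit is rejected.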

With the LimitRange unchanged, modify the pod's YAML to request 300Mi of memory and limit it to 500Mi, which is within the LimitRange:

[root@vms201 security]# cat testpod.yaml

apiVersion: v1
kind: Pod
metadata:
  creationTimestamp: null
  labels:
    run: podresource
  name: podresource
spec:
  terminationGracePeriodSeconds: 0
  containers:
  - image: centos
    command: ["sh","-c","sleep 10000000"]
    imagePullPolicy: IfNotPresent
    name: podresource
    resources:
      requests:
        memory: 300Mi
      limits:
        memory: 500Mi
  dnsPolicy: ClusterFirst
  restartPolicy: Always
status: {}

[root@vms201 security]# kubectl apply -f testpod.yaml

pod/podresource created

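The pod is created successfully.

4. Limiting resources with a ResourceQuota

A ResourceQuota can limit memory, CPU and other resources. It is more often used to cap the number of objects (e.g. services, pods) that can be created in a namespace.

Write resourcequota.yaml and create the ResourceQuota: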

[root@vms201 security]# cat resourcequota.yaml

apiVersion: v1
kind: ResourceQuota
metadata:
  name: compute-resources
spec:
  hard:
    pods: "4"
    services: "2"

[root@vms201 security]# kubectl apply -f resourcequota.yaml

resourcequota/compute-resources created

[root@vms201 security]# kubectl describe resourcequotas

Name: compute-resources

Namespace: security

Resource Used Hard

-------- ---- ----

pods 1 4

services 1 2

This limits the namespace to at most 4 pods and 2 services, and one of each exists now. Next, try to create more services:

[root@vms201 security]# kubectl expose --name=svc2 pod podresource --port=80

service/svc2 exposed

[root@vms201 security]# kubectl expose --name=svc3 pod podresource --port=80

Error from server (Forbidden): services "svc3" is forbidden: exceeded quota: compute-resources, requested: services=1, used: services=2, limited: services=2

Creating the third service fails with an error saying that the number of services exceeds the quota.
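The quota check behind that error can be sketched in a few lines of Python. The request is rejected when current usage plus the requested amount would exceed the hard limit. `quota_check` is a hypothetical helper. Its message format is copied from the error output above rather than from the apiserver's source, and it only models object counts.

```python
from typing import Dict, Optional


def quota_check(name: str, hard: Dict[str, int], used: Dict[str, int],
                requested: Dict[str, int]) -> Optional[str]:
    """Return None if the request fits the quota, else an error message."""
    for resource, amount in requested.items():
        if resource not in hard:
            continue  # resources without a hard limit are not constrained by this quota
        current = used.get(resource, 0)
        if current + amount > hard[resource]:
            return (f"exceeded quota: {name}, requested: {resource}={amount}, "
                    f"used: {resource}={current}, limited: {resource}={hard[resource]}")
    return None
```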

References:

Lao Duan CKA course (《老段CKA课程》)