Kubeadm

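- I. Kubeadm deployment
  - 1. Environment preparation
  - 2. Install Docker on all nodes
  - 3. Install kubeadm, kubelet and kubectl on all nodes
  - 4. Deploy the K8S cluster
    - 4.1 Configure the master01 node
    - 4.2 Configure the worker nodes
- II. Kubeadm high-availability deployment
  - 1. Environment preparation
  - 2. Install Docker on all nodes
  - 3. Install kubeadm, kubelet and kubectl on all nodes
  - 4. Install and configure the high-availability components
  - 5. Deploy the K8S cluster
- Summary
  - The kubeadm deployment process
  - Renewing the certificates of a kubeadm-deployed K8S cluster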

I. Kubeadm deployment

| Hostname | IP address | Services deployed |
| --- | --- | --- |
| master01 (2C/4G, at least 2 CPU cores required) | 192.168.145.15 | docker, kubeadm, kubelet, kubectl, flannel |
| node01 (2C/2G) | 192.168.145.30 | docker, kubeadm, kubelet, kubectl, flannel |
| node02 (2C/2G) | 192.168.145.45 | docker, kubeadm, kubelet, kubectl, flannel |

1. Install Docker and kubeadm on all nodes.

2. Deploy the Kubernetes master.

3. Deploy the container network plugin.

4. Deploy the Kubernetes nodes and join them to the Kubernetes cluster.

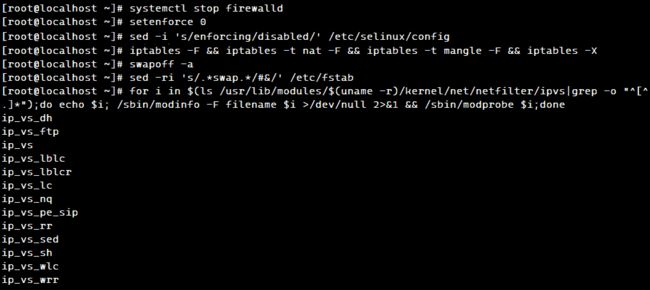

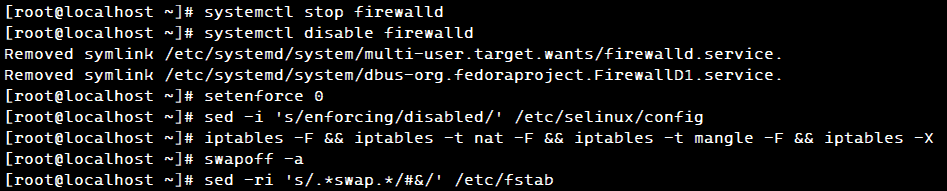

1. Environment preparation

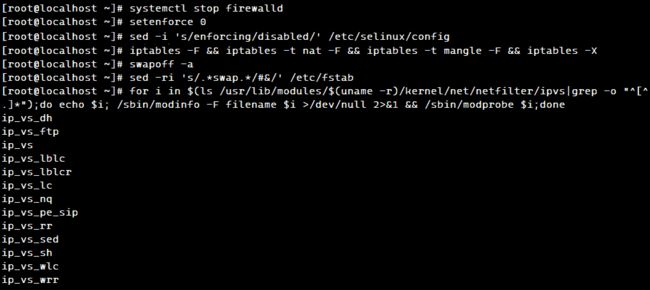

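```bash
# Stop and disable the firewall
systemctl stop firewalld
systemctl disable firewalld
# Disable SELinux now and after reboot
setenforce 0
sed -i 's/enforcing/disabled/' /etc/selinux/config
# Flush all iptables rules
iptables -F && iptables -t nat -F && iptables -t mangle -F && iptables -X
# Turn swap off now and comment it out in /etc/fstab so it stays off after reboot
# (by default kubelet refuses to start while swap is enabled)
swapoff -a
sed -ri 's/.*swap.*/#&/' /etc/fstab
```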

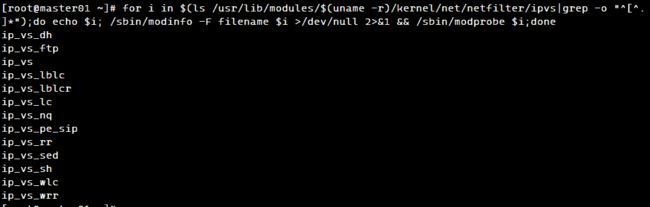

```bash
# Load all IPVS kernel modules (kube-proxy is switched to ipvs mode later)
for i in $(ls /usr/lib/modules/$(uname -r)/kernel/net/netfilter/ipvs | grep -o "^[^.]*"); do
    echo $i
    /sbin/modinfo -F filename $i >/dev/null 2>&1 && /sbin/modprobe $i
done
```
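Before moving on, it can help to confirm that swap is really off and that the IPVS modules were loaded. The sketch below is an illustrative addition, not part of the original procedure: the function names `swap_is_off` and `missing_modules` are hypothetical, and each takes the file to read as an argument (defaulting to /proc/swaps, or passed /proc/modules) so it can also be tried against sample files.

```shell
#!/usr/bin/env bash
# Hypothetical preflight helpers; not part of kubeadm.

# swap_is_off [FILE]: succeed when FILE (default /proc/swaps) lists no swap
# devices, i.e. nothing follows its header line. Returns 2 if FILE is unreadable.
swap_is_off() {
  local file="${1:-/proc/swaps}"
  [ -r "$file" ] || return 2
  ! awk 'NR > 1 && NF > 0' "$file" | grep -q .
}

# missing_modules FILE MODULE...: print every MODULE that does not appear in
# the first column of FILE (the layout of /proc/modules).
missing_modules() {
  local file="$1" m
  shift
  for m in "$@"; do
    awk -v m="$m" '$1 == m { found = 1 } END { exit !found }' "$file" || echo "$m"
  done
}

# Usage on a node (commented out so the file can be sourced safely):
#   swap_is_off || echo "swap is still on"
#   missing_modules /proc/modules ip_vs ip_vs_rr ip_vs_wrr ip_vs_sh
#   [ "$(nproc)" -ge 2 ] || echo "kubeadm needs at least 2 CPUs on control-plane nodes"
```

An empty result from `missing_modules` means every listed module is loaded.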

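```bash
hostnamectl set-hostname master01   # on master01
hostnamectl set-hostname node01     # on node01
hostnamectl set-hostname node02     # on node02

# On all nodes: add the cluster hosts to /etc/hosts
vim /etc/hosts
192.168.145.15 master01
192.168.145.30 node01
192.168.145.45 node02
```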
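Editing /etc/hosts by hand on every node is easy to repeat by mistake. As a sketch, assuming the same "IP hostname" format shown above, the hypothetical helper `ensure_host_entry` appends a mapping only when that exact IP/name pair is not already present, so it can be re-run safely.

```shell
#!/usr/bin/env bash
# Hypothetical helper; not part of the original procedure.

# ensure_host_entry FILE IP NAME: append "IP NAME" to FILE unless a line that
# maps exactly this IP to this NAME already exists (idempotent).
ensure_host_entry() {
  local file="$1" ip="$2" name="$3"
  if ! awk -v ip="$ip" -v name="$name" '
      $1 == ip { for (i = 2; i <= NF; i++) if ($i == name) found = 1 }
      END { exit !found }' "$file" 2>/dev/null; then
    printf '%s %s\n' "$ip" "$name" >> "$file"
  fi
}

# Usage on each node (commented out):
#   ensure_host_entry /etc/hosts 192.168.145.15 master01
#   ensure_host_entry /etc/hosts 192.168.145.30 node01
#   ensure_host_entry /etc/hosts 192.168.145.45 node02
```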


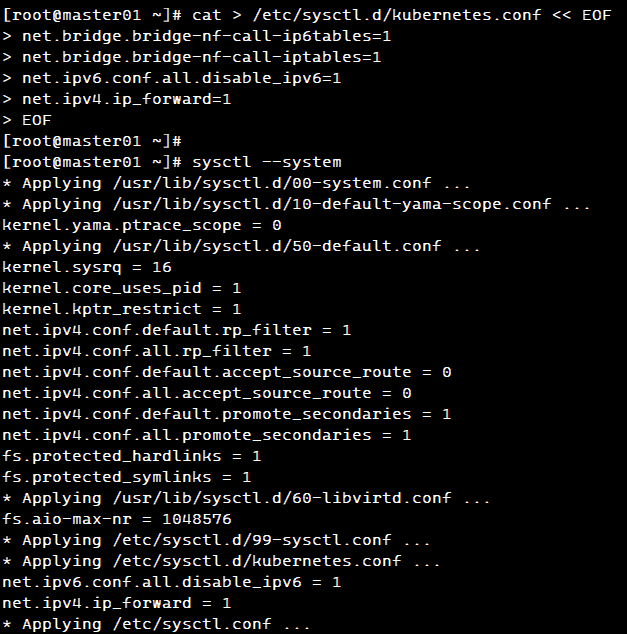

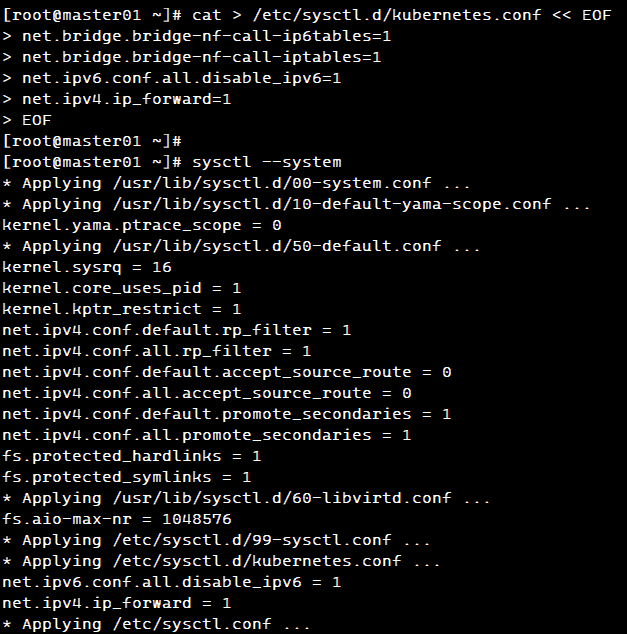

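```bash
# Kernel parameters: pass bridged traffic to ip6tables/iptables, disable IPv6, enable IP forwarding
cat > /etc/sysctl.d/kubernetes.conf << EOF
net.bridge.bridge-nf-call-ip6tables=1
net.bridge.bridge-nf-call-iptables=1
net.ipv6.conf.all.disable_ipv6=1
net.ipv4.ip_forward=1
EOF

# The net.bridge.* keys only exist once the br_netfilter module is loaded
modprobe br_netfilter
# Apply the settings from all sysctl configuration directories, including /etc/sysctl.d/
sysctl --system
```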
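To confirm that the values really took effect, the file can be compared with the running kernel. This is a sketch under the assumption that each key maps to a file under /proc/sys with dots turned into slashes (how sysctl keys are laid out); the names `sysctl_mismatches` and `_trim` are hypothetical, and the /proc/sys root is a parameter so the check can be exercised against a fake directory.

```shell
#!/usr/bin/env bash
# Hypothetical helper: compare a sysctl.d file with the live kernel values.

# _trim STRING: print STRING without leading/trailing whitespace.
_trim() {
  local s="$1"
  s="${s#"${s%%[![:space:]]*}"}"
  s="${s%"${s##*[![:space:]]}"}"
  printf '%s' "$s"
}

# sysctl_mismatches CONF [PROC_SYS]: for every "key = value" line in CONF,
# read the live value under PROC_SYS (default /proc/sys) and print
# "key: expected X, got Y" whenever the two differ.
sysctl_mismatches() {
  local conf="$1" root="${2:-/proc/sys}"
  local key value path actual
  while IFS='=' read -r key value || [ -n "$key" ]; do
    key="$(_trim "$key")"
    value="$(_trim "$value")"
    case "$key" in ''|'#'*|';'*) continue ;; esac   # skip blanks and comments
    path="$root/$(printf '%s' "$key" | tr . /)"      # net.ipv4.ip_forward -> net/ipv4/ip_forward
    if [ ! -r "$path" ]; then
      echo "$key: expected $value, got <missing>"
      continue
    fi
    actual="$(_trim "$(cat "$path")")"
    [ "$actual" = "$value" ] || echo "$key: expected $value, got $actual"
  done < "$conf"
}

# Usage on a node (commented out):
#   sysctl_mismatches /etc/sysctl.d/kubernetes.conf
```

No output means every key matches. A "<missing>" entry for a net.bridge.* key usually means br_netfilter is not loaded.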

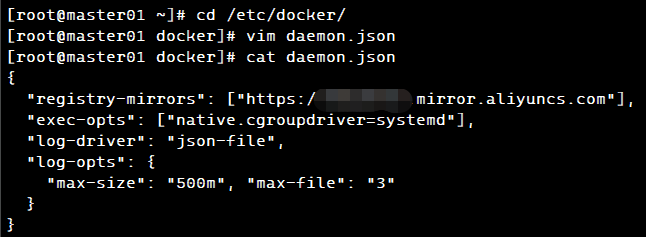

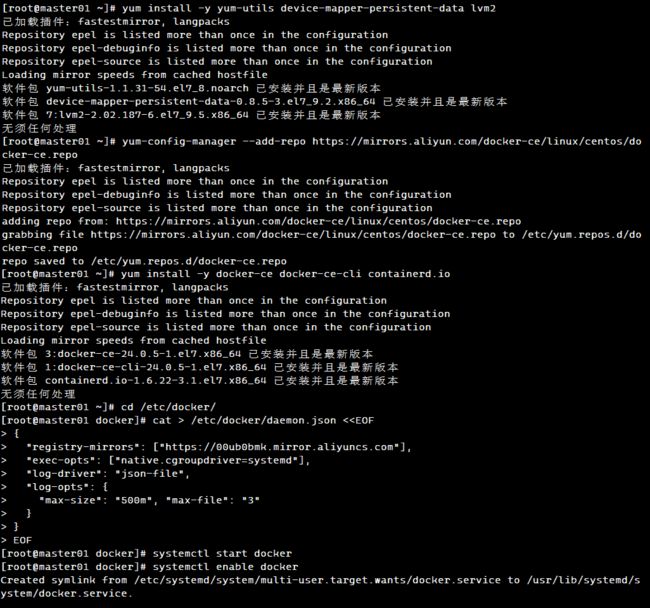

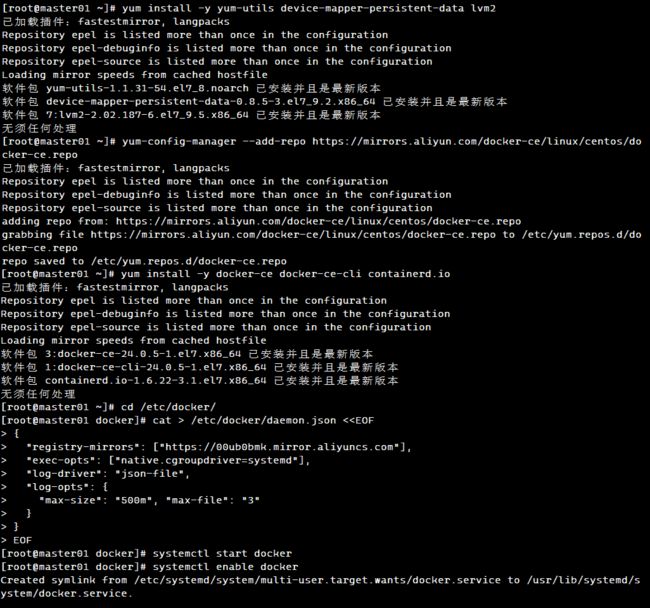

2. Install Docker on all nodes

```bash
# Install the dependencies, add the docker-ce yum repository, then install Docker
yum install -y yum-utils device-mapper-persistent-data lvm2
yum-config-manager
yum install -y docker-ce docker-ce-cli containerd.io
```

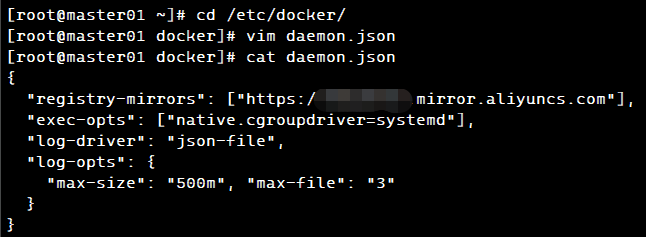

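```bash
# Registry mirror, the systemd cgroup driver recommended for kubelet,
# and log rotation (at most 3 files of 500 MB per container)
mkdir -p /etc/docker
cat > /etc/docker/daemon.json <<EOF
{
  "registry-mirrors": ["https://00ub0bmk.mirror.aliyuncs.com"],
  "exec-opts": ["native.cgroupdriver=systemd"],
  "log-driver": "json-file",
  "log-opts": {
    "max-size": "500m", "max-file": "3"
  }
}
EOF
```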

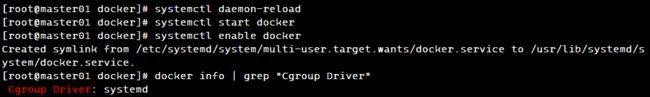

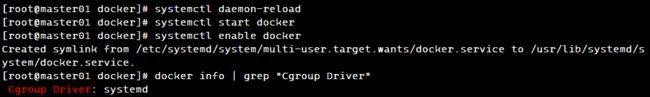

```bash
systemctl daemon-reload
systemctl start docker.service
systemctl enable docker.service

# Confirm the cgroup driver
docker info | grep "Cgroup Driver"
# Expected output: Cgroup Driver: systemd
```
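In a provisioning script the same check can fail loudly instead of relying on someone reading the grep output. A minimal sketch, assuming the " Cgroup Driver: <name>" line format printed by docker info; `cgroup_driver` and `require_systemd_driver` are hypothetical names that read the docker info text from stdin.

```shell
#!/usr/bin/env bash
# Hypothetical helpers that parse "docker info" output from stdin.

# cgroup_driver: print the value of the "Cgroup Driver" field
# (for example "systemd" or "cgroupfs").
cgroup_driver() {
  awk -F': *' '/^[[:space:]]*Cgroup Driver:/ { print $2; exit }'
}

# require_systemd_driver: succeed only when the reported driver is systemd.
require_systemd_driver() {
  [ "$(cgroup_driver)" = "systemd" ]
}

# Usage on a node (commented out):
#   docker info 2>/dev/null | require_systemd_driver || echo "check /etc/docker/daemon.json"
```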

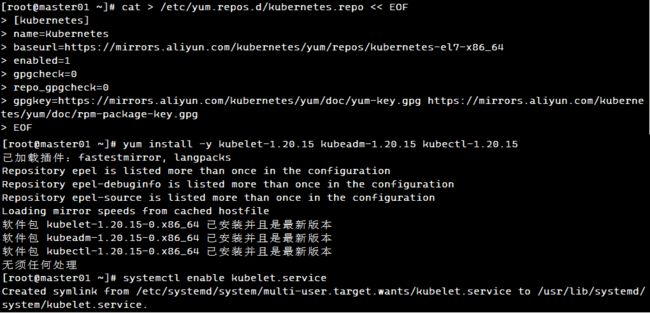

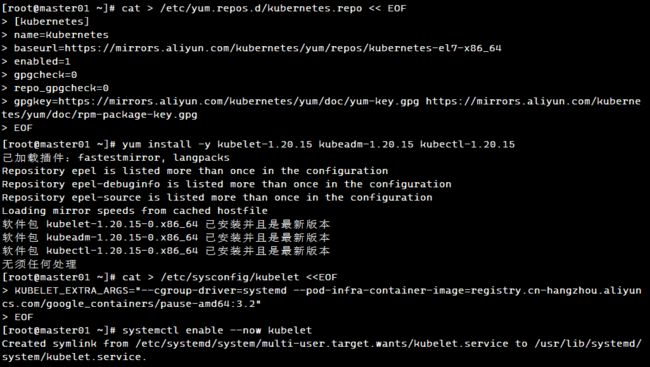

3. Install kubeadm, kubelet and kubectl on all nodes

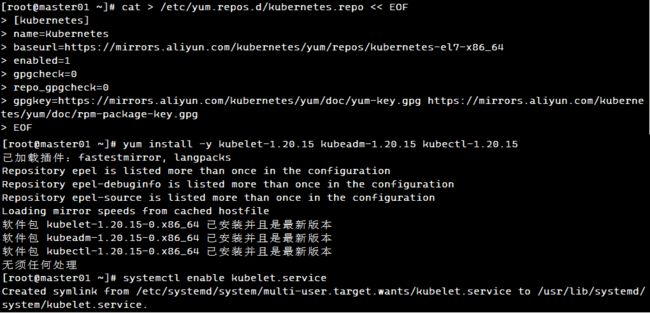

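```bash
# Add the Kubernetes yum repository
cat > /etc/yum.repos.d/kubernetes.repo << EOF
[kubernetes]
name=Kubernetes
baseurl=https:
enabled=1
gpgcheck=0
repo_gpgcheck=0
gpgkey=https:
EOF

# Install pinned versions, the same v1.20.15 that kubeadm-config.yaml deploys
yum install -y kubelet-1.20.15 kubeadm-1.20.15 kubectl-1.20.15

# Start kubelet at boot
systemctl enable kubelet.service
```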
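Because the three packages are pinned individually, a quick consistency check can catch a node where one of them ended up at a different version. This is a hypothetical helper (`versions_consistent`) written as a sketch: it only compares strings after dropping a leading "v", and the version-printing commands in the usage comment are assumptions about v1.20 output formats.

```shell
#!/usr/bin/env bash
# Hypothetical helper; not part of kubeadm.

# versions_consistent TARGET VERSION...: succeed when every VERSION equals
# TARGET once a leading "v" is dropped ("v1.20.15" matches "1.20.15").
versions_consistent() {
  local target="${1#v}" v
  shift
  for v in "$@"; do
    if [ "${v#v}" != "$target" ]; then
      echo "version mismatch: $v (expected $target)" >&2
      return 1
    fi
  done
}

# Usage on a node (commented out; output formats assumed for v1.20):
#   versions_consistent 1.20.15 \
#     "$(kubeadm version -o short)" \
#     "$(kubelet --version | awk '{print $2}')" \
#     "$(kubectl version --client --short | awk '{print $NF}')"
```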

4. Deploy the K8S cluster

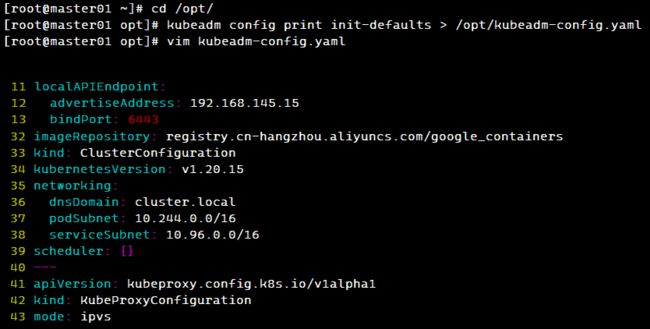

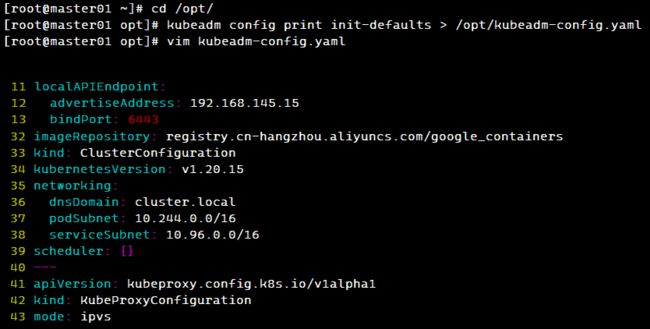

4.1 Configure the master01 node

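```bash
# List the images kubeadm needs
kubeadm config images list
# Write the default init configuration to /opt/kubeadm-config.yaml
kubeadm config print init-defaults > /opt/kubeadm-config.yaml
cd /opt/
```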

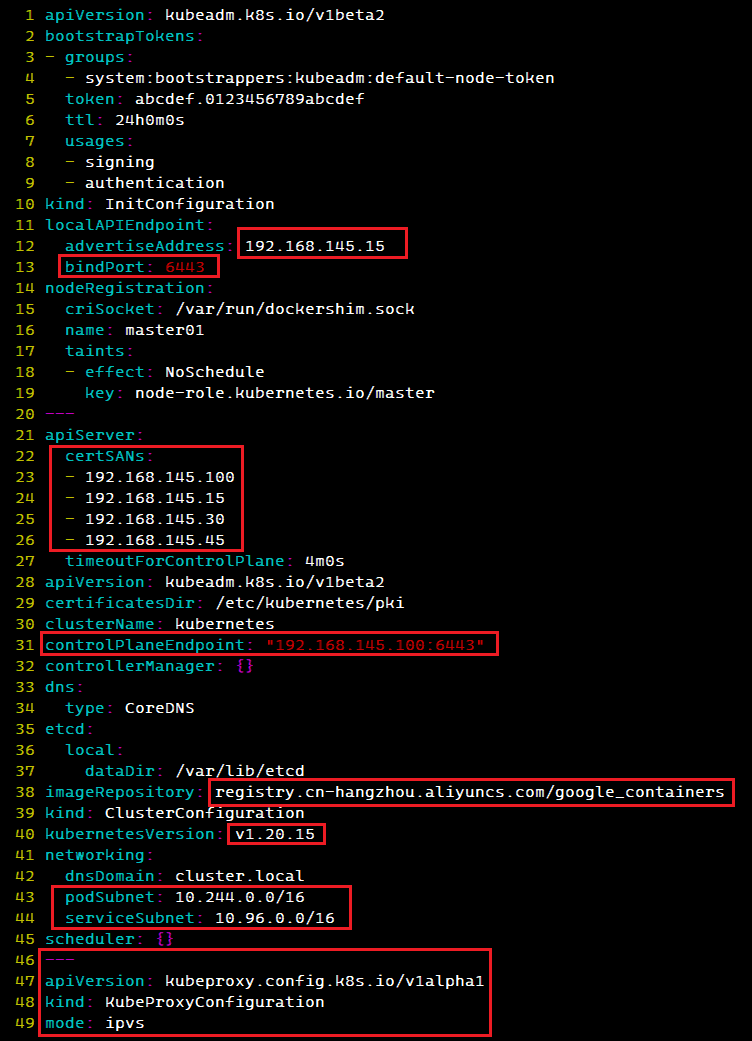

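```bash
vim kubeadm-config.yaml
```

```yaml
......
localAPIEndpoint:
  advertiseAddress: 192.168.145.15   # IP address of master01
  bindPort: 6443
......
imageRepository: registry.cn-hangzhou.aliyuncs.com/google_containers   # Aliyun mirror of the control-plane images
kind: ClusterConfiguration
kubernetesVersion: v1.20.15          # same version as the installed packages
networking:
  dnsDomain: cluster.local
  podSubnet: 10.244.0.0/16           # Pod CIDR; matches flannel's default network 10.244.0.0/16
  serviceSubnet: 10.96.0.0/16
scheduler: {}
---
# Appended at the end of the file: run kube-proxy in IPVS mode
apiVersion: kubeproxy.config.k8s.io/v1alpha1
kind: KubeProxyConfiguration
mode: ipvs
```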
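Appending the KubeProxyConfiguration by hand has two pitfalls: it must be a separate YAML document introduced by a "---" line, and repeating the step duplicates it. The sketch below uses a hypothetical helper, `append_kubeproxy_ipvs`, that handles both; it assumes the file uses the unindented "kind: ..." layout that kubeadm config print init-defaults produces.

```shell
#!/usr/bin/env bash
# Hypothetical helper; not part of kubeadm.

# append_kubeproxy_ipvs FILE: append a KubeProxyConfiguration document with
# "mode: ipvs" to a kubeadm config FILE, separated by "---", unless FILE
# already contains a KubeProxyConfiguration.
append_kubeproxy_ipvs() {
  local file="$1"
  # Already present: leave the file alone so the step can be re-run safely
  if grep -q '^kind: KubeProxyConfiguration' "$file"; then
    return 0
  fi
  # Make sure the separator starts on its own line
  if [ -s "$file" ] && [ -n "$(tail -c 1 "$file")" ]; then
    echo >> "$file"
  fi
  cat >> "$file" <<'EOF'
---
apiVersion: kubeproxy.config.k8s.io/v1alpha1
kind: KubeProxyConfiguration
mode: ipvs
EOF
}

# Usage on master01 (commented out):
#   append_kubeproxy_ipvs /opt/kubeadm-config.yaml
```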

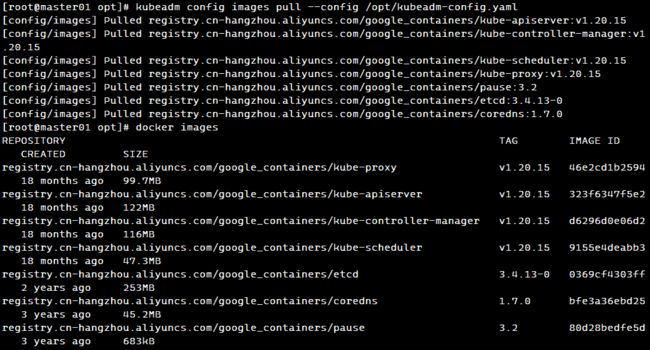

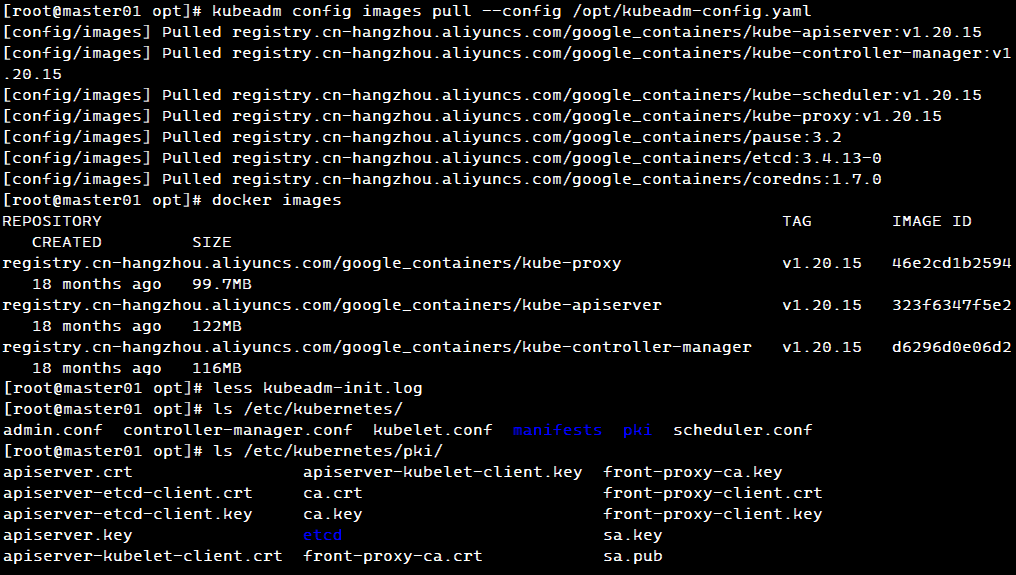

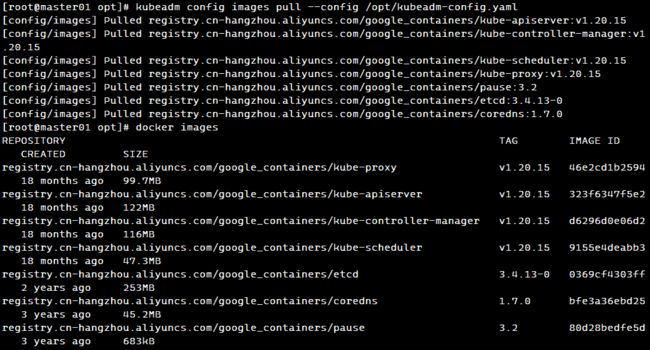

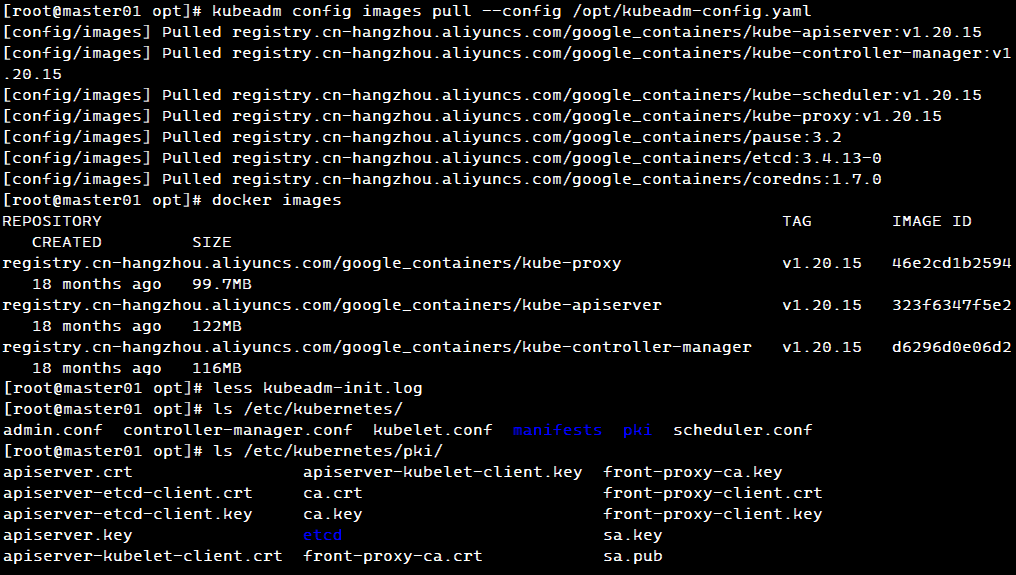

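```bash
# Pull the control-plane images in advance, from the imageRepository set in kubeadm-config.yaml
kubeadm config images pull --config kubeadm-config.yaml
docker images

# Initialize the control plane and keep a copy of the output (it ends with the join command for the nodes)
kubeadm init --config=kubeadm-config.yaml | tee kubeadm-init.log
less kubeadm-init.log

# Certificates and kubeconfig files generated by kubeadm
ls /etc/kubernetes/
ls /etc/kubernetes/pki
```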

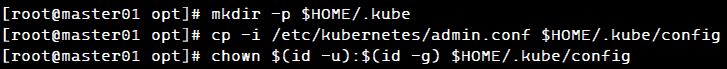

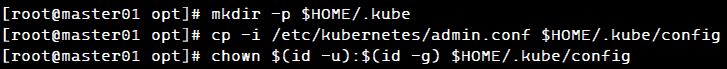

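kubectl can perform management operations only after the API server has authenticated and authorized it. A kubeadm-deployed cluster generates an authentication configuration file with administrator privileges, /etc/kubernetes/admin.conf, which kubectl can load from its default path "$HOME/.kube/config".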

```bash
mkdir -p $HOME/.kube
cp -i /etc/kubernetes/admin.conf $HOME/.kube/config
chown $(id -u):$(id -g) $HOME/.kube/config
```
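The default path is only the fallback: kubectl's --kubeconfig flag takes precedence, and when the KUBECONFIG environment variable is set, kubectl reads the colon-separated files it lists instead of $HOME/.kube/config. The hypothetical function `kubeconfig_files` below sketches that lookup for the environment-variable case only (it does not model the --kubeconfig flag), which can help explain why kubectl sometimes talks to an unexpected cluster.

```shell
#!/usr/bin/env bash
# Hypothetical helper illustrating kubectl's kubeconfig lookup (flag not modeled).

# kubeconfig_files: print, one per line, the kubeconfig files kubectl would
# read: the entries of $KUBECONFIG when it is set and non-empty, otherwise
# the default $HOME/.kube/config.
kubeconfig_files() {
  if [ -n "${KUBECONFIG:-}" ]; then
    printf '%s\n' "$KUBECONFIG" | tr ':' '\n' | sed '/^$/d'
  else
    printf '%s\n' "$HOME/.kube/config"
  fi
}

# Usage (commented out):
#   kubeconfig_files
```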

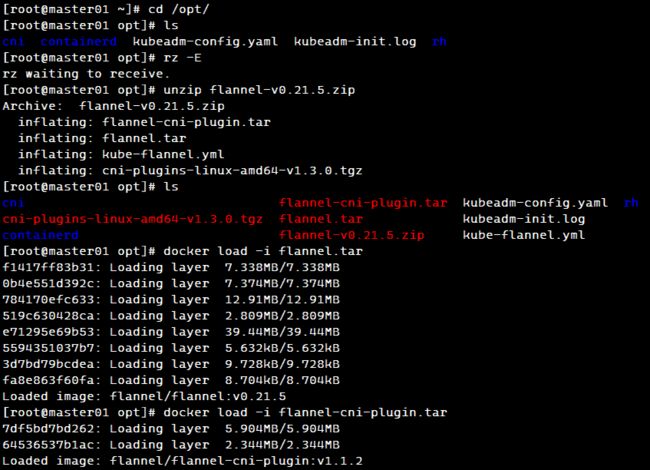

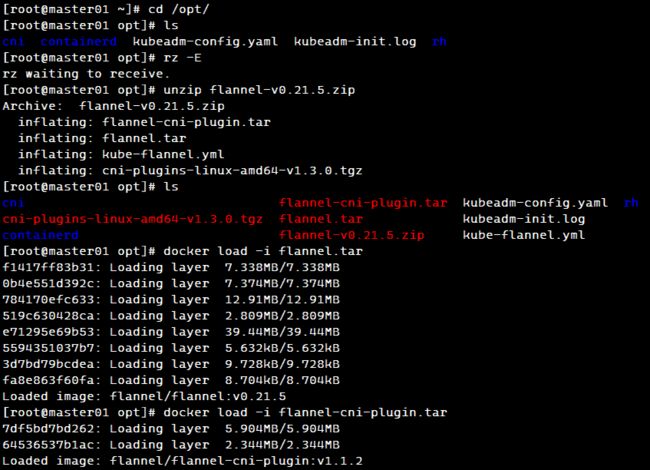

```bash
# Unpack the flannel bundle in /opt and load the flannel images
cd /opt/
unzip flannel-v0.21.5.zip
docker load -i flannel.tar
docker load -i flannel-cni-plugin.tar
```

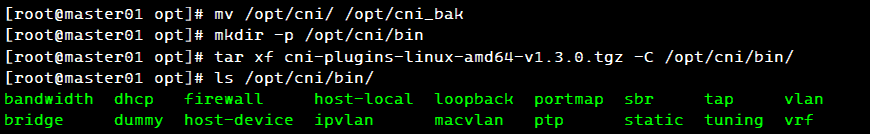

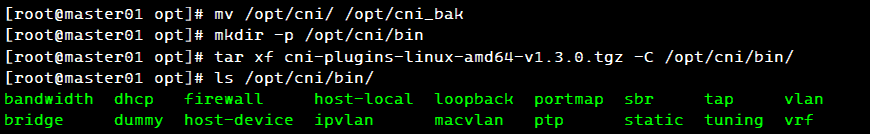

```bash
# Keep the old /opt/cni as a backup and install the CNI plugins v0.8.6 binaries
mv /opt/cni /opt/cni_bak
mkdir -p /opt/cni/bin
tar xf cni-plugins-linux-amd64-v0.8.6.tgz -C /opt/cni/bin
```
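If Pods later get stuck in ContainerCreating, a missing plugin binary in /opt/cni/bin is a common cause. The sketch below lists which expected binaries are absent. The hypothetical function name `missing_cni_plugins` and the plugin list in the usage comment are assumptions: flannel's CNI configuration delegates to standard plugins such as bridge, host-local and portmap, loopback is used for the Pod's lo interface, and the flannel binary itself is copied in by the kube-flannel DaemonSet after kube-flannel.yml is applied.

```shell
#!/usr/bin/env bash
# Hypothetical helper; not part of flannel or the CNI plugins package.

# missing_cni_plugins DIR PLUGIN...: print each PLUGIN that is not an
# executable file in DIR.
missing_cni_plugins() {
  local dir="$1" p
  shift
  for p in "$@"; do
    [ -x "$dir/$p" ] || echo "$p"
  done
}

# Usage on a node (commented out; plugin list is an assumption):
#   missing_cni_plugins /opt/cni/bin bridge host-local loopback portmap flannel
```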

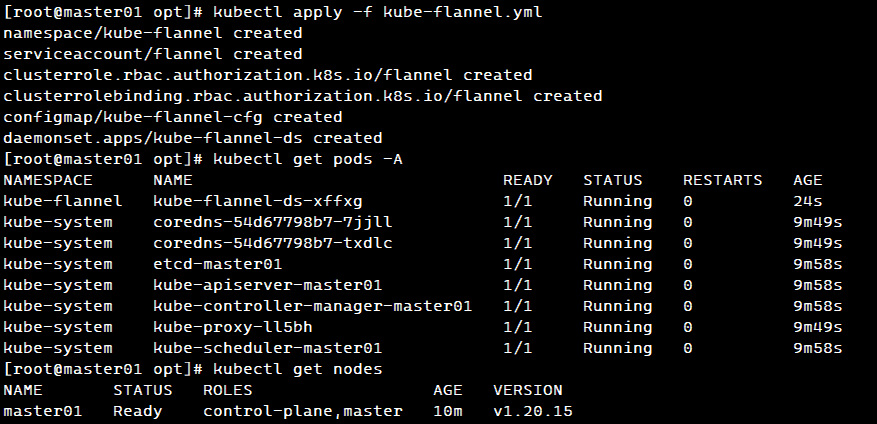

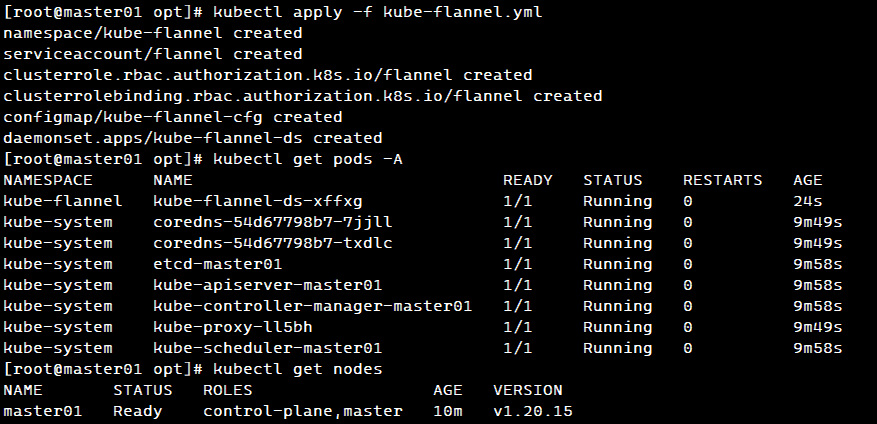

```bash
kubectl apply -f kube-flannel.yml
```

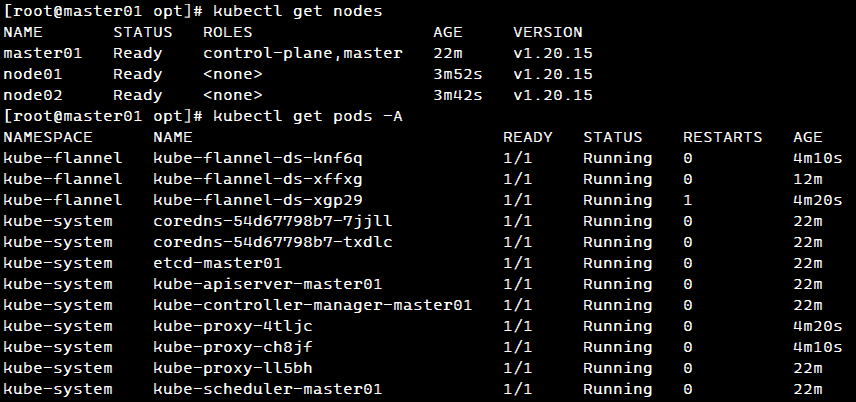

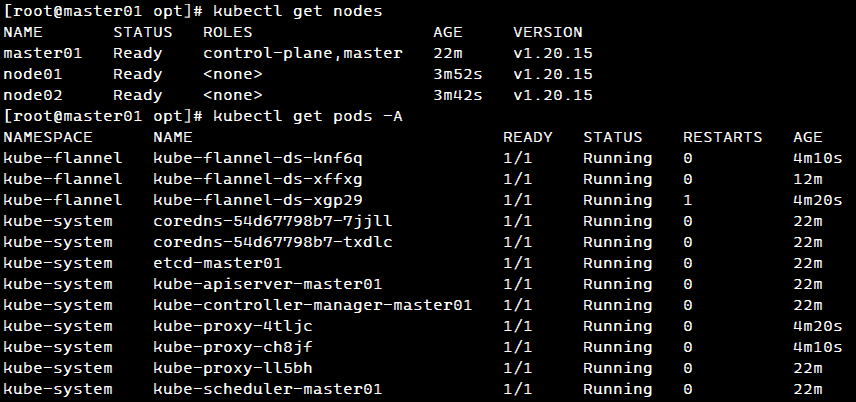

```bash
kubectl get nodes
kubectl get pods -A
```

```
NAMESPACE      NAME                               READY   STATUS    RESTARTS   AGE
kube-flannel   kube-flannel-ds-xffxg              1/1     Running   0          24s
kube-system    coredns-54d67798b7-7jjll           1/1     Running   0          9m49s
kube-system    coredns-54d67798b7-txdlc           1/1     Running   0          9m49s
kube-system    etcd-master01                      1/1     Running   0          9m58s
kube-system    kube-apiserver-master01            1/1     Running   0          9m58s
kube-system    kube-controller-manager-master01   1/1     Running   0          9m58s
kube-system    kube-proxy-ll5bh                   1/1     Running   0          9m49s
kube-system    kube-scheduler-master01            1/1     Running   0          9m58s
```
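On a larger cluster, scanning this table by eye gets tedious. A sketch of a filter that prints only the Pods needing attention, assuming the column layout of kubectl get pods -A shown above (header line included, NAMESPACE first); `unhealthy_pods` is a hypothetical name.

```shell
#!/usr/bin/env bash
# Hypothetical helper: flag Pods that are not fully up.

# unhealthy_pods: read "kubectl get pods -A" output (with its header line) on
# stdin and print NAMESPACE/NAME for every Pod whose STATUS is not Running or
# whose READY column shows fewer ready containers than it has (e.g. 0/1).
# Completed Job Pods are reported as well, since their STATUS is not Running.
unhealthy_pods() {
  awk 'NR > 1 && NF >= 4 {
    split($3, ready, "/")
    if ($4 != "Running" || ready[1] != ready[2]) print $1 "/" $2
  }'
}

# Usage on master01 (commented out):
#   kubectl get pods -A | unhealthy_pods
```

For the healthy output above the filter prints nothing.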

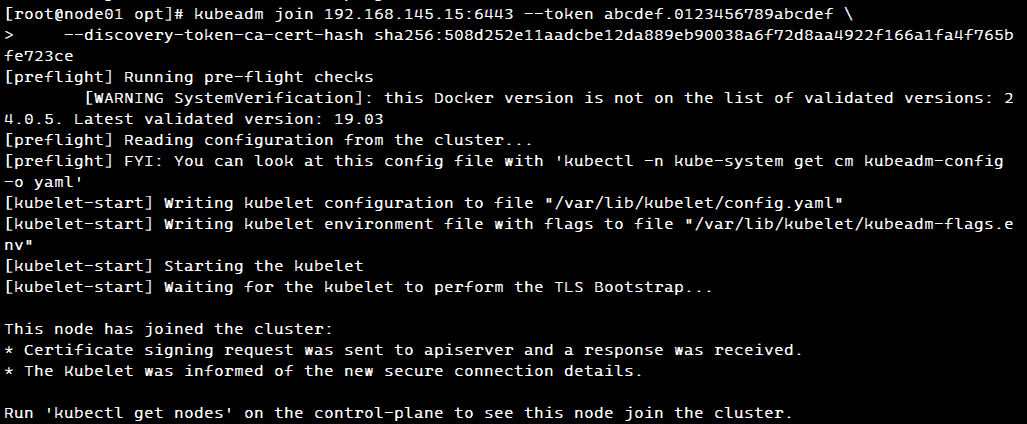

4.2 Configure the worker nodes

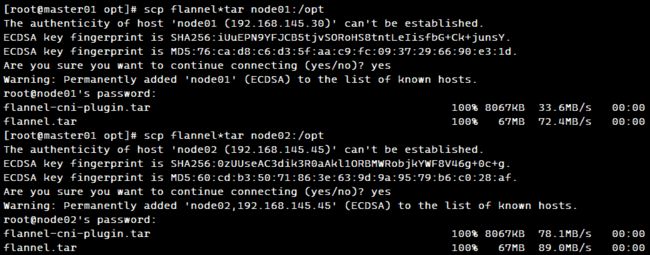

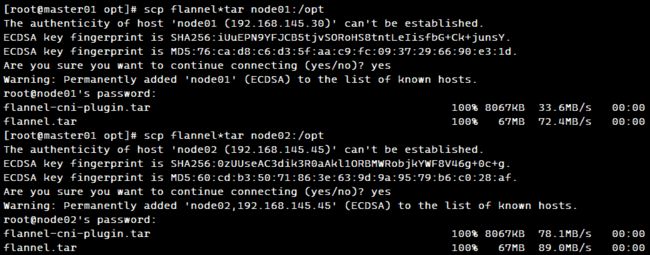

```bash
# On master01: copy the flannel images to the worker nodes
scp flannel*tar node01:/opt
scp flannel*tar node02:/opt
```

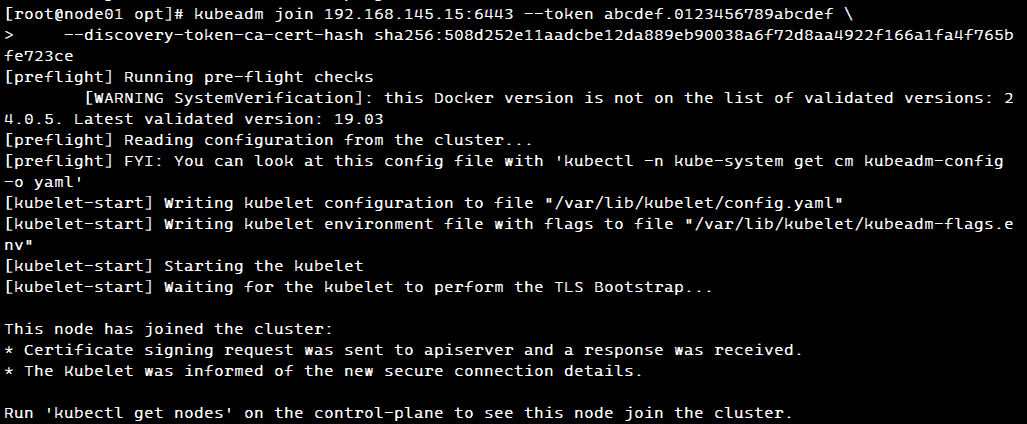

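```bash
# On node01 and node02: run the join command printed at the end of kubeadm-init.log
kubeadm join 192.168.145.15:6443 --token <token> \
    --discovery-token-ca-cert-hash sha256:<hash>
```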
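The token in the join command comes from kubeadm init's output, saved above in kubeadm-init.log. Bootstrap tokens expire after 24 hours by default; after that, running kubeadm token create --print-join-command on the master prints a fresh join command. Within that window, the hypothetical helper `last_join_command` below is one way to pull the last join command out of the log, merging its backslash-continued lines into one; it is a sketch that assumes the command starts on a line beginning with "kubeadm join".

```shell
#!/usr/bin/env bash
# Hypothetical helper; not part of kubeadm.

# last_join_command LOG: print the last "kubeadm join ..." command in LOG as
# a single line, merging lines that end with a backslash.
last_join_command() {
  awk '
    /^[[:space:]]*kubeadm join / { cmd = ""; collecting = 1 }
    collecting {
      line = $0
      sub(/^[[:space:]]+/, "", line)             # drop indentation
      cont = sub(/[[:space:]]*\\$/, "", line)    # 1 if the line ends with "\"
      cmd = (cmd == "" ? line : cmd " " line)
      if (!cont) { last = cmd; collecting = 0 }
    }
    END { if (last != "") print last }
  ' "$1"
}

# Usage on master01 (commented out):
#   last_join_command /opt/kubeadm-init.log
```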

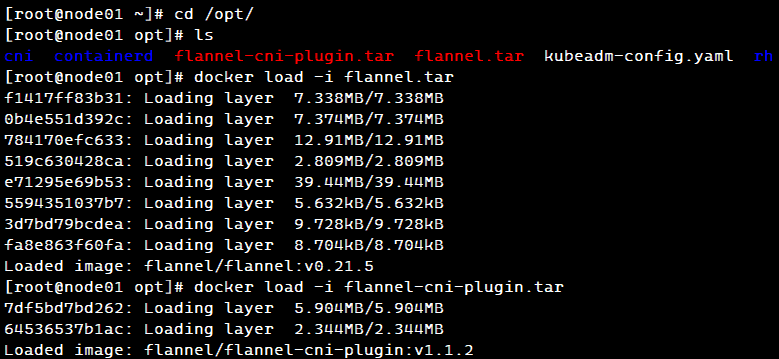

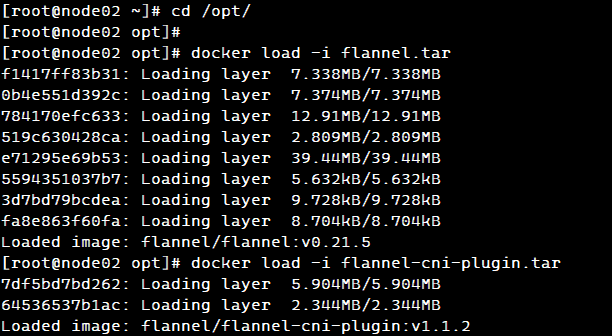

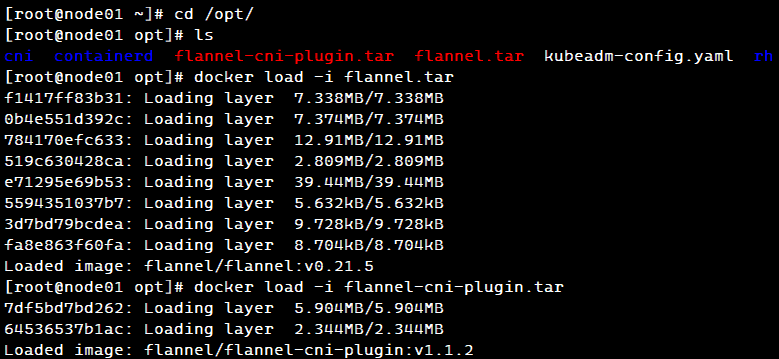

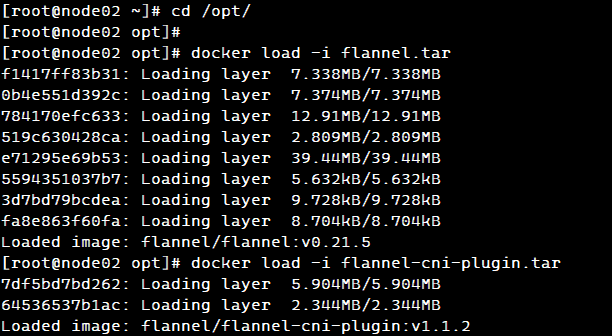

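```bash
# On node01 and node02: load the flannel images
cd /opt/
docker load -i flannel.tar
docker load -i flannel-cni-plugin.tar

# On master01: check that all nodes are Ready and all Pods are Running
kubectl get nodes
kubectl get pods -A
```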

II. Kubeadm high-availability deployment

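The master nodes need at least 2 CPU cores.

● The newest version is not necessarily the best: compared with older versions its core features are stable, but newly added features and APIs are relatively unstable.

● Once you have learned the high-availability deployment for one version, the steps for other versions are much the same.

● Upgrade the hosts to CentOS 7.9 where possible.

● Upgrade the kernel to a stable 4.19+ release.

● When choosing a Kubernetes version, prefer a patch release of 5 or higher, such as 1.xx.5 (these are generally the more stable releases).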

| Hostname | IP address | Services deployed |
| --- | --- | --- |
| master01 (2C/4G, at least 2 CPU cores required) | 192.168.145.15 | docker, kubeadm, kubelet, kubectl, flannel |
| master02 (2C/4G, at least 2 CPU cores required) | 192.168.145.30 | docker, kubeadm, kubelet, kubectl, flannel |
| master03 (2C/4G, at least 2 CPU cores required) | 192.168.145.45 | docker, kubeadm, kubelet, kubectl, flannel |
| node01 (2C/2G) | 192.168.145.60 | docker, kubeadm, kubelet, kubectl, flannel |
| node02 (2C/2G) | 192.168.145.75 | docker, kubeadm, kubelet, kubectl, flannel |

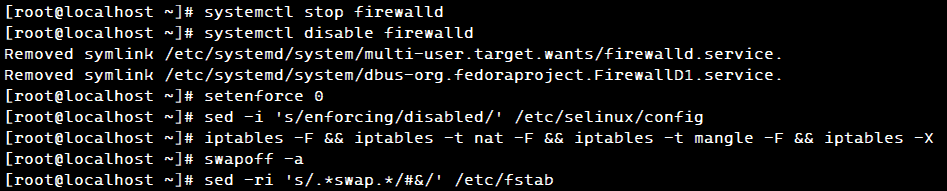

1. Environment preparation

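```bash
# Stop and disable the firewall
systemctl stop firewalld
systemctl disable firewalld
# Disable SELinux now and after reboot
setenforce 0
sed -i 's/enforcing/disabled/' /etc/selinux/config
# Flush all iptables rules
iptables -F && iptables -t nat -F && iptables -t mangle -F && iptables -X
# Turn swap off now and keep it off after reboot
swapoff -a
sed -ri 's/.*swap.*/#&/' /etc/fstab
```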

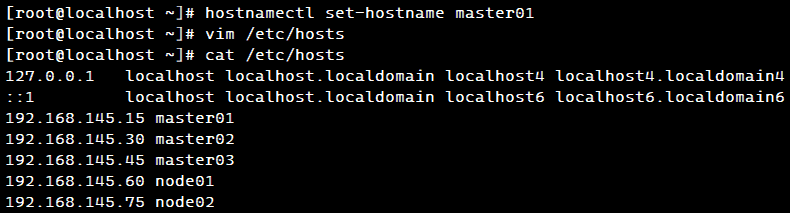

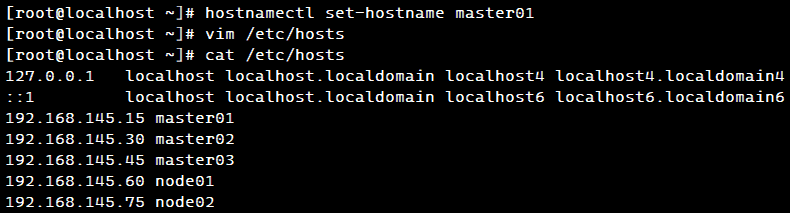

```bash
hostnamectl set-hostname master01   # on master01
hostnamectl set-hostname master02   # on master02
hostnamectl set-hostname master03   # on master03
hostnamectl set-hostname node01     # on node01
hostnamectl set-hostname node02     # on node02

# On all nodes: add the cluster hosts to /etc/hosts
vim /etc/hosts
192.168.145.15 master01
192.168.145.30 master02
192.168.145.45 master03
192.168.145.60 node01
192.168.145.75 node02
```

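```bash
# Time synchronization: use the Asia/Shanghai time zone and sync against time2.aliyun.com
yum -y install ntpdate
ln -sf /usr/share/zoneinfo/Asia/Shanghai /etc/localtime
echo 'Asia/Shanghai' >/etc/timezone
ntpdate time2.aliyun.com
systemctl enable --now crond

# Re-sync every 30 minutes: add this line with crontab -e
crontab -e
*/30 * * * * /usr/sbin/ntpdate time2.aliyun.com
```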

```bash
# Raise the open-file, process and locked-memory limits for all users
vim /etc/security/limits.conf
* soft nofile 65536
* hard nofile 131072
* soft nproc 65535
* hard nproc 655350
* soft memlock unlimited
* hard memlock unlimited
```

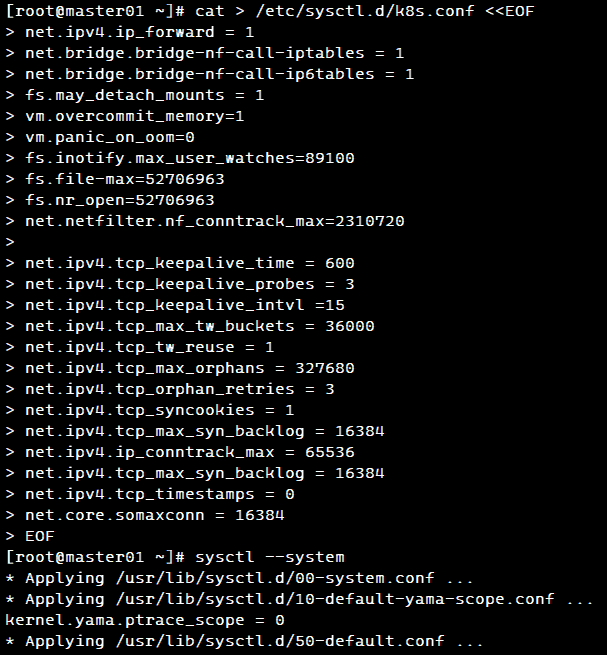

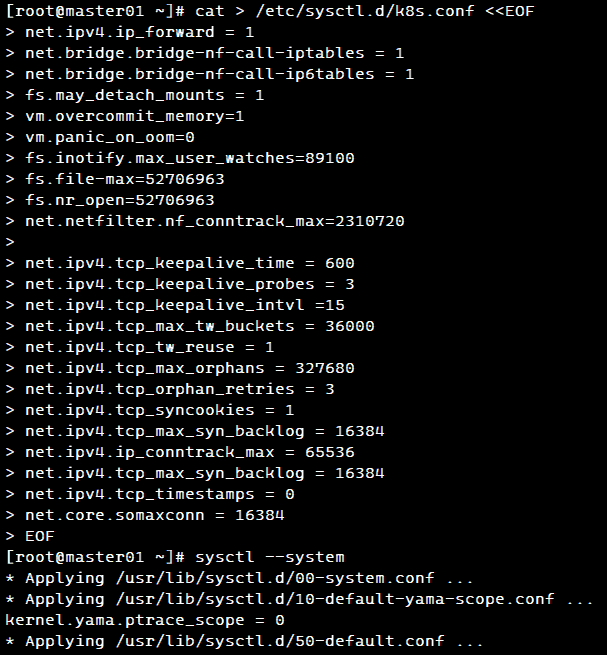

```bash
cat > /etc/sysctl.d/k8s.conf <<EOF
net.ipv4.ip_forward = 1
net.bridge.bridge-nf-call-iptables = 1
net.bridge.bridge-nf-call-ip6tables = 1
fs.may_detach_mounts = 1
vm.overcommit_memory = 1
vm.panic_on_oom = 0
fs.inotify.max_user_watches = 89100
fs.file-max = 52706963
fs.nr_open = 52706963
net.netfilter.nf_conntrack_max = 2310720
net.ipv4.tcp_keepalive_time = 600
net.ipv4.tcp_keepalive_probes = 3
net.ipv4.tcp_keepalive_intvl = 15
net.ipv4.tcp_max_tw_buckets = 36000
net.ipv4.tcp_tw_reuse = 1
net.ipv4.tcp_max_orphans = 327680
net.ipv4.tcp_orphan_retries = 3
net.ipv4.tcp_syncookies = 1
net.ipv4.tcp_max_syn_backlog = 16384
net.ipv4.ip_conntrack_max = 65536
net.ipv4.tcp_timestamps = 0
net.core.somaxconn = 16384
EOF

# The net.bridge.* keys only exist once the br_netfilter module is loaded
modprobe br_netfilter
# Apply the settings from all sysctl configuration directories, including /etc/sysctl.d/
sysctl --system
```
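In a long sysctl file like this one, the same key is easy to list twice. sysctl applies the lines in order, so the later value silently wins. A small sketch that reports repeated keys; `duplicate_sysctl_keys` is a hypothetical name and the parser assumes simple "key = value" lines.

```shell
#!/usr/bin/env bash
# Hypothetical helper; not part of procps.

# duplicate_sysctl_keys FILE: print every key that is assigned more than once
# in FILE (a sysctl.d-style "key = value" file), once per key.
duplicate_sysctl_keys() {
  awk -F'=' '
    /^[[:space:]]*$/ || /^[[:space:]]*[#;]/ { next }   # blank lines and comments
    {
      key = $1
      gsub(/[[:space:]]/, "", key)
      if (seen[key]++ == 1) print key   # report on the second occurrence only
    }
  ' "$1"
}

# Usage on a node (commented out):
#   duplicate_sysctl_keys /etc/sysctl.d/k8s.conf
```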

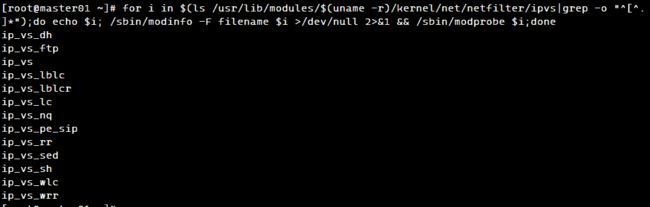

```bash
# Load all IPVS kernel modules
for i in $(ls /usr/lib/modules/$(uname -r)/kernel/net/netfilter/ipvs | grep -o "^[^.]*"); do
    echo $i
    /sbin/modinfo -F filename $i >/dev/null 2>&1 && /sbin/modprobe $i
done
```

2. Install Docker on all nodes

```bash
# Install the dependencies, add the docker-ce yum repository, then install Docker
yum install -y yum-utils device-mapper-persistent-data lvm2
yum-config-manager
yum install -y docker-ce docker-ce-cli containerd.io
```

```bash
# Registry mirror, systemd cgroup driver and container log rotation
mkdir -p /etc/docker
cat > /etc/docker/daemon.json <<EOF
{
  "registry-mirrors": ["https://00ub0bmk.mirror.aliyuncs.com"],
  "exec-opts": ["native.cgroupdriver=systemd"],
  "log-driver": "json-file",
  "log-opts": {
    "max-size": "500m", "max-file": "3"
  }
}
EOF

systemctl daemon-reload
systemctl restart docker.service
systemctl enable docker.service
```

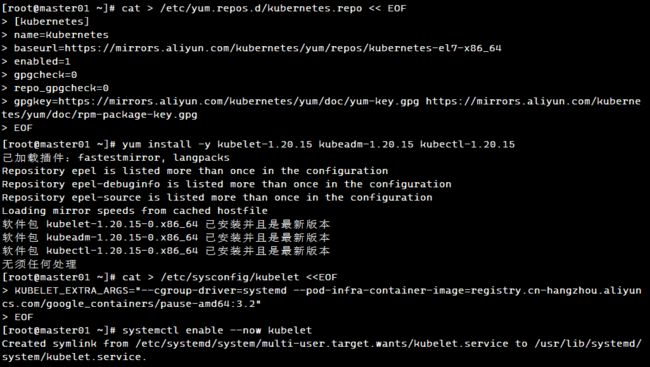

3. Install kubeadm, kubelet and kubectl on all nodes

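```bash
# Add the Kubernetes yum repository
cat > /etc/yum.repos.d/kubernetes.repo << EOF
[kubernetes]
name=Kubernetes
baseurl=https:
enabled=1
gpgcheck=0
repo_gpgcheck=0
gpgkey=https:
EOF

yum install -y kubelet-1.20.15 kubeadm-1.20.15 kubectl-1.20.15

# kubelet: use the systemd cgroup driver and pull the pause image from the Aliyun mirror
cat > /etc/sysconfig/kubelet <<EOF
KUBELET_EXTRA_ARGS="--cgroup-driver=systemd --pod-infra-container-image=registry.cn-hangzhou.aliyuncs.com/google_containers/pause-amd64:3.2"
EOF

# kubelet keeps restarting until kubeadm init/join gives it a configuration; this is expected
systemctl enable --now kubelet
```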

4. Install and configure the high-availability components

```bash
yum -y install haproxy keepalived

cat > /etc/haproxy/haproxy.cfg << EOF
global
    log 127.0.0.1 local0 info
    log 127.0.0.1 local1 warning
    chroot /var/lib/haproxy
    pidfile /var/run/haproxy.pid
    maxconn 4000
    user haproxy
    group haproxy
    daemon
    stats socket /var/lib/haproxy/stats

defaults
    mode tcp
    log global
    option tcplog
    option dontlognull
    option redispatch
    retries 3
    timeout queue 1m
    timeout connect 10s
    timeout client 1m
    timeout server 1m
    timeout check 10s
    maxconn 3000

# HTTP health endpoint: /monitor on port 33305
frontend monitor-in
    bind *:33305
    mode http
    option httplog
    monitor-uri /monitor

# API server entry point on port 16443
frontend k8s-master
    bind *:16443
    mode tcp
    option tcplog
    default_backend k8s-master

# Round-robin over the three kube-apiservers; check every 10 s,
# mark a server down after 2 failed checks and up again after 2 successful ones
backend k8s-master
    mode tcp
    option tcplog
    option tcp-check
    balance roundrobin
    server k8s-master1 192.168.145.15:6443 check inter 10000 fall 2 rise 2 weight 1
    server k8s-master2 192.168.145.30:6443 check inter 10000 fall 2 rise 2 weight 1
    server k8s-master3 192.168.145.45:6443 check inter 10000 fall 2 rise 2 weight 1
EOF
```
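The backend needs one server line per master, so adding a master later means editing all copies of haproxy.cfg consistently. A sketch that generates those lines from a list of master IPs, reusing the health-check settings of the backend above; `haproxy_server_lines` is a hypothetical name. Before restarting HAProxy, haproxy -c -f /etc/haproxy/haproxy.cfg checks the configuration syntax.

```shell
#!/usr/bin/env bash
# Hypothetical helper; not part of HAProxy.

# haproxy_server_lines IP...: print one "server" line per kube-apiserver,
# named k8s-master1, k8s-master2, ... with the backend's check settings.
haproxy_server_lines() {
  local i=1 ip
  for ip in "$@"; do
    printf '    server k8s-master%d %s:6443 check inter 10000 fall 2 rise 2 weight 1\n' "$i" "$ip"
    i=$((i + 1))
  done
}

# Usage (commented out):
#   haproxy_server_lines 192.168.145.15 192.168.145.30 192.168.145.45
```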

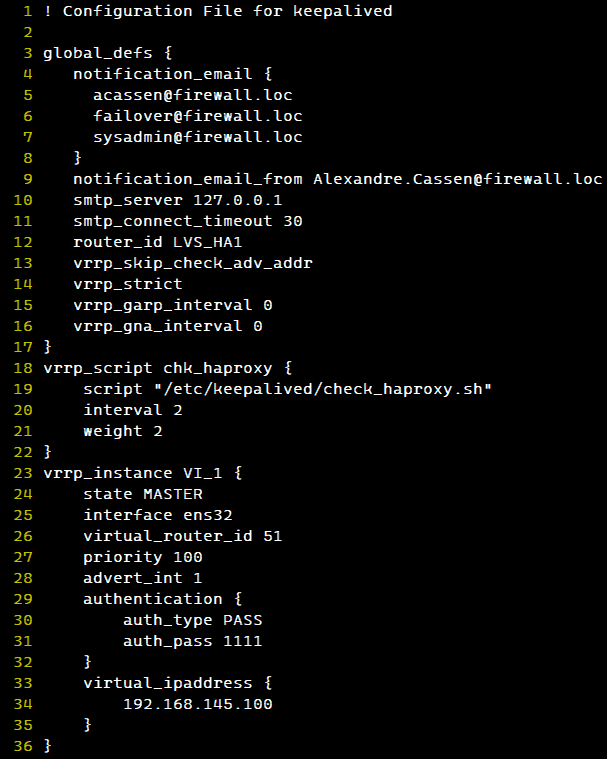

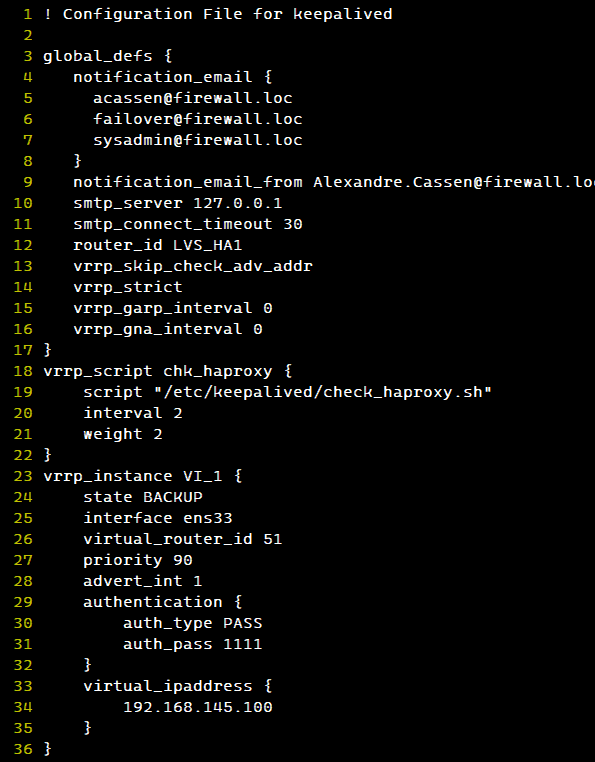

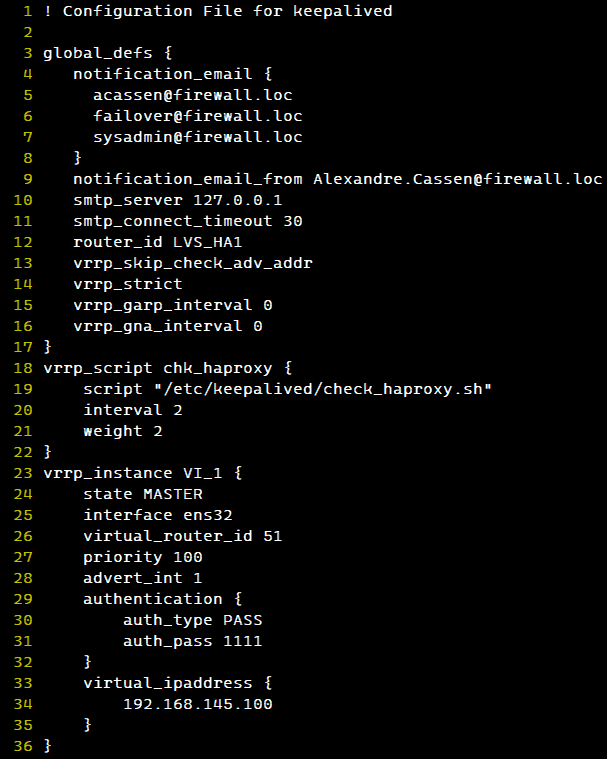

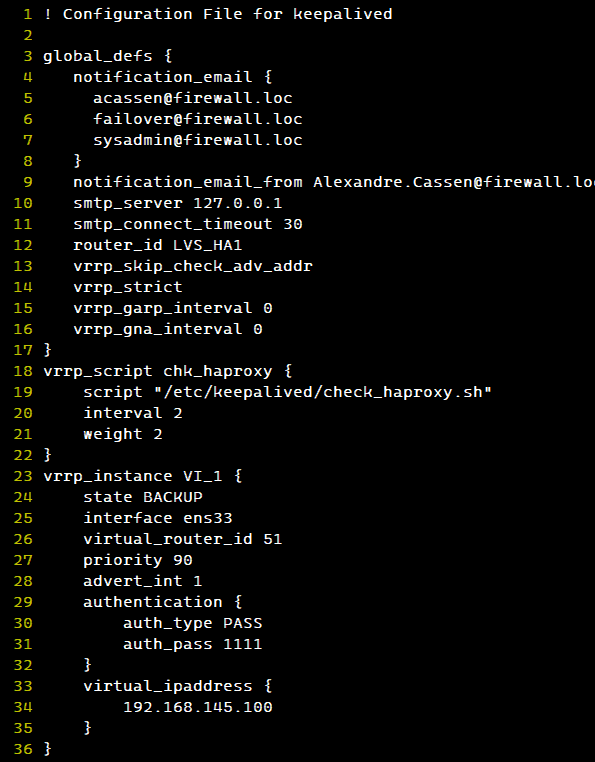

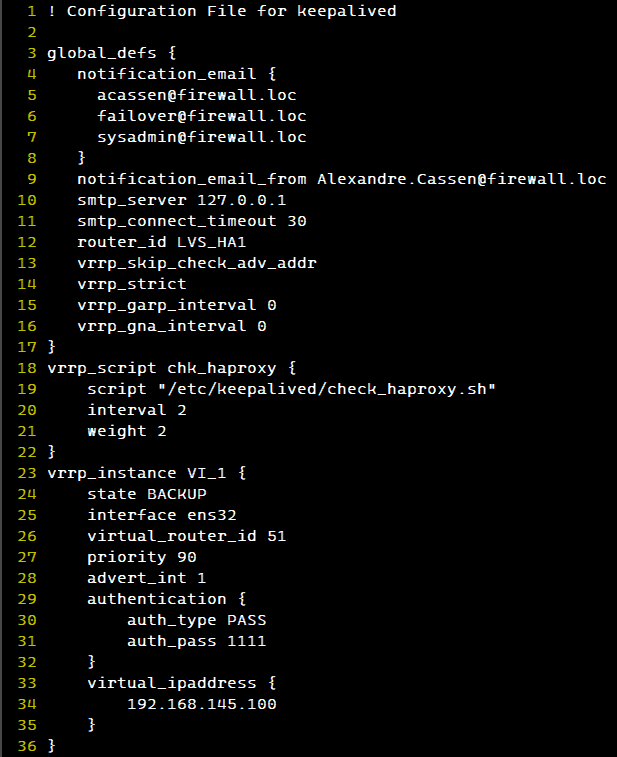

```bash
cd /etc/keepalived/
vim keepalived.conf
```

```
! Configuration File for keepalived
global_defs {
    router_id LVS_HA1
}

vrrp_script chk_haproxy {
    script "/etc/keepalived/check_haproxy.sh"
    interval 2
    weight 2
}

vrrp_instance VI_1 {
    state MASTER
    interface ens33
    virtual_router_id 51
    priority 100
    advert_int 1
    virtual_ipaddress {
        192.168.145.100
    }
    track_script {
        chk_haproxy
    }
}
```
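The configuration above is the MASTER instance. A common keepalived convention, assumed here rather than shown in the text, is that the other masters use the same virtual_router_id and VIP but state BACKUP and a lower priority, so the VIP fails over to them. The hypothetical function `render_keepalived_conf` sketches that by printing the same layout with per-host values; router IDs such as LVS_HA2 and the priority 90 in the usage comment are illustrative.

```shell
#!/usr/bin/env bash
# Hypothetical helper: print a keepalived.conf with the layout shown above.

# render_keepalived_conf ROUTER_ID STATE PRIORITY [IFACE] [VIP]
render_keepalived_conf() {
  local router_id="$1" state="$2" priority="$3"
  local iface="${4:-ens33}" vip="${5:-192.168.145.100}"
  case "$state" in
    MASTER|BACKUP) ;;
    *) echo "state must be MASTER or BACKUP, got: $state" >&2; return 1 ;;
  esac
  cat <<EOF
! Configuration File for keepalived
global_defs {
    router_id ${router_id}
}

vrrp_script chk_haproxy {
    script "/etc/keepalived/check_haproxy.sh"
    interval 2
    weight 2
}

vrrp_instance VI_1 {
    state ${state}
    interface ${iface}
    virtual_router_id 51
    priority ${priority}
    advert_int 1
    virtual_ipaddress {
        ${vip}
    }
    track_script {
        chk_haproxy
    }
}
EOF
}

# Usage on master02 (commented out; assumed BACKUP with a lower priority):
#   render_keepalived_conf LVS_HA2 BACKUP 90 > /etc/keepalived/keepalived.conf
```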

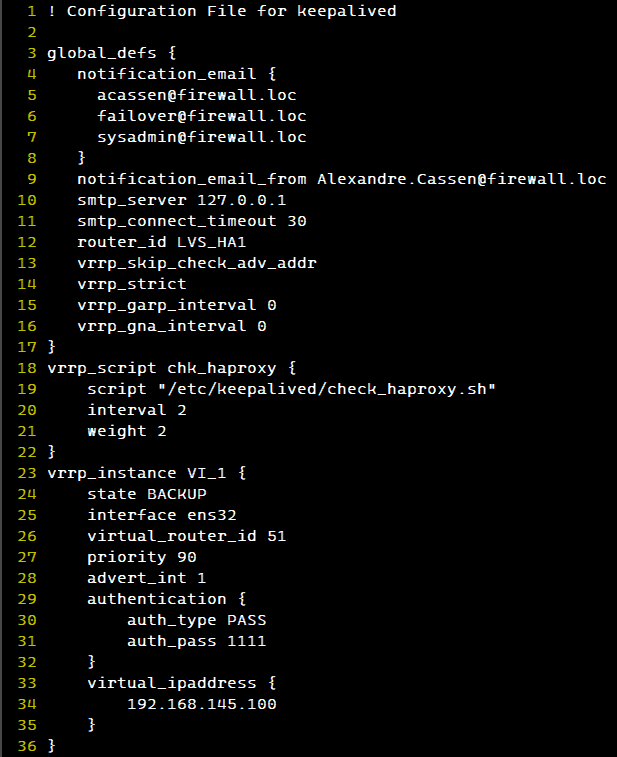

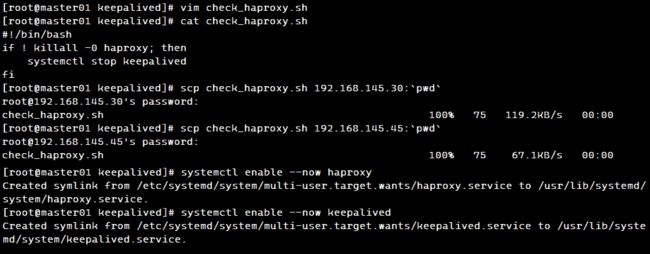

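```bash
vim check_haproxy.sh
```

```bash
#!/bin/bash
# If HAProxy is no longer running, stop keepalived so that the VIP moves to another master
if ! killall -0 haproxy; then
    systemctl stop keepalived
fi
```

```bash
# keepalived executes the script, so it must be executable
chmod +x /etc/keepalived/check_haproxy.sh
systemctl enable --now haproxy
systemctl enable --now keepalived
```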

5. Deploy the K8S cluster

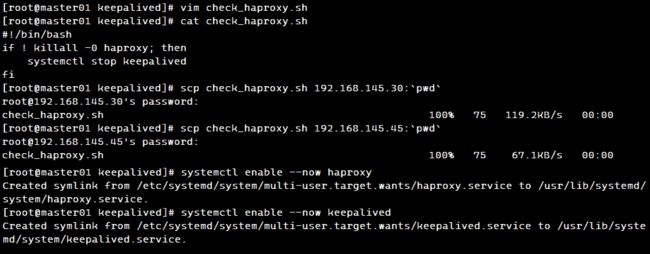

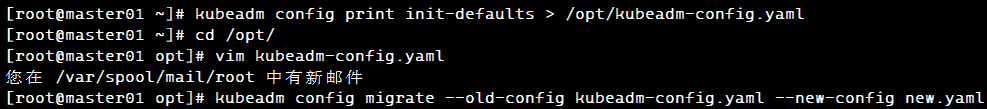

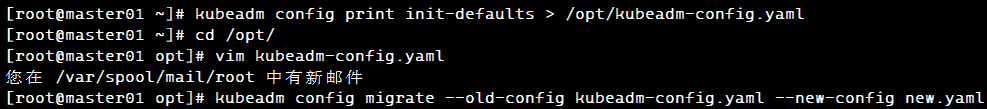

```bash
# On master01: generate the default configuration and edit it
kubeadm config print init-defaults > /opt/kubeadm-config.yaml
cd /opt/
vim kubeadm-config.yaml
```

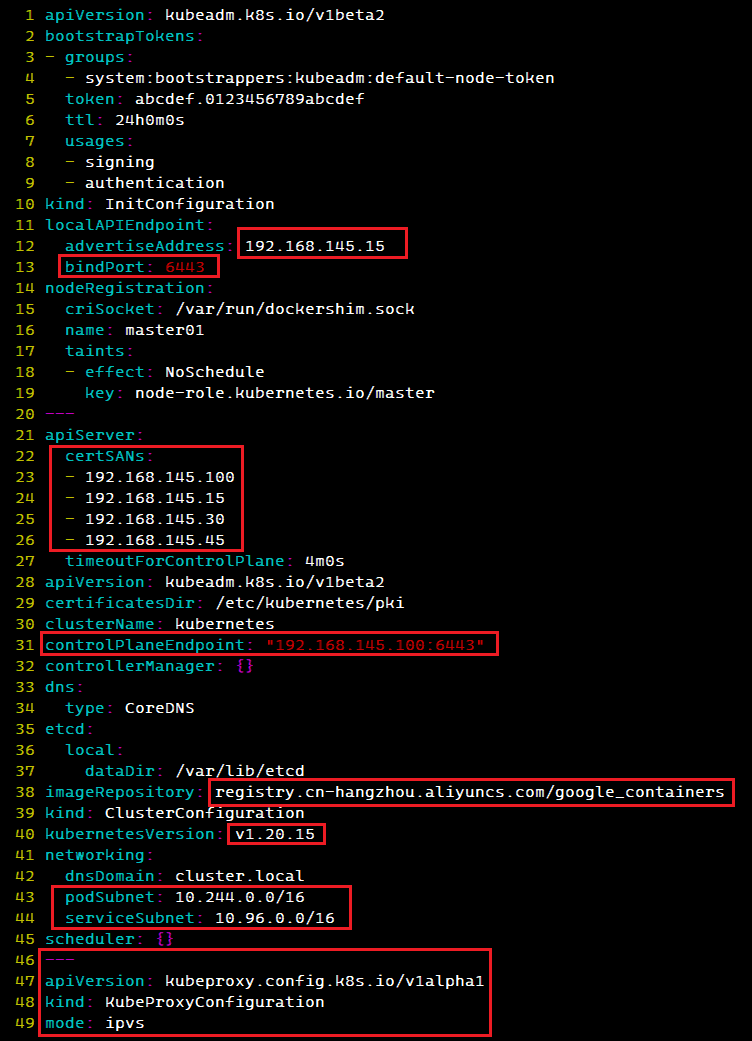

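```yaml
......
localAPIEndpoint:
  advertiseAddress: 192.168.145.15   # IP address of master01
  bindPort: 6443
......
apiServer:
  certSANs:                          # every address clients may use to reach the API server
  - 192.168.145.100
  - 192.168.145.15
  - 192.168.145.30
  - 192.168.145.45
......
clusterName: kubernetes
controlPlaneEndpoint: "192.168.145.100:6443"   # the keepalived VIP
controllerManager: {}
......
imageRepository: registry.cn-hangzhou.aliyuncs.com/google_containers
kind: ClusterConfiguration
kubernetesVersion: v1.20.15
networking:
  dnsDomain: cluster.local
  podSubnet: "10.244.0.0/16"
  serviceSubnet: 10.96.0.0/16
scheduler: {}
---
apiVersion: kubeproxy.config.k8s.io/v1alpha1
kind: KubeProxyConfiguration
mode: ipvs
```

Note that with controlPlaneEndpoint set to 192.168.145.100:6443, clients connect straight to the kube-apiserver of whichever master currently holds the VIP, so the HAProxy frontend on port 16443 is not used. To load-balance through HAProxy, use 192.168.145.100:16443 instead.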

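```bash
# Convert the configuration to the API version of this kubeadm release
kubeadm config migrate --old-config kubeadm-config.yaml --new-config new.yaml

# Copy the migrated file to the other nodes
for i in master02 master03 node01 node02; do scp /opt/new.yaml $i:/opt/; done
```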
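Since kubeadm config migrate rewrites the file, it is worth confirming that the VIP survived in both places that matter before running kubeadm init: in certSANs (otherwise the API server certificate is not valid for the VIP) and in controlPlaneEndpoint. The hypothetical function `check_ha_config` sketches this with awk; it assumes the certSANs entries are written as a "- item" YAML list, as in the file above.

```shell
#!/usr/bin/env bash
# Hypothetical helper; not part of kubeadm.

# check_ha_config FILE VIP: report (on stderr) and fail unless VIP is listed
# under certSANs and controlPlaneEndpoint is VIP:<port>.
check_ha_config() {
  local file="$1" vip="$2" rc=0
  if ! awk -v vip="$vip" '
      /^[[:space:]]*certSANs:/ { in_sans = 1; next }
      in_sans && /^[[:space:]]*-/ {
        v = $0; sub(/^[[:space:]]*-/, "", v); gsub(/["[:space:]]/, "", v)
        if (v == vip) found = 1
        next
      }
      in_sans { in_sans = 0 }
      END { exit !found }' "$file"; then
    echo "certSANs does not contain $vip" >&2
    rc=1
  fi
  if ! awk -v vip="$vip" '
      /^[[:space:]]*controlPlaneEndpoint:/ {
        v = $0; sub(/^[[:space:]]*controlPlaneEndpoint:/, "", v); gsub(/["[:space:]]/, "", v)
        if (index(v, vip ":") == 1) ok = 1
      }
      END { exit !ok }' "$file"; then
    echo "controlPlaneEndpoint is not $vip:<port>" >&2
    rc=1
  fi
  return $rc
}

# Usage on master01 (commented out):
#   check_ha_config /opt/new.yaml 192.168.145.100
```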

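```bash
# Pull the images using the migrated configuration
kubeadm config images pull --config /opt/new.yaml

# On master01: initialize the first control-plane node;
# --upload-certs shares the control-plane certificates with the masters that join later
kubeadm init --config new.yaml --upload-certs | tee kubeadm-init.log
```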

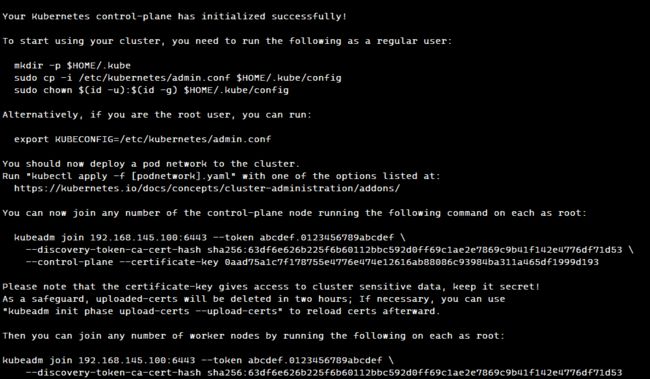

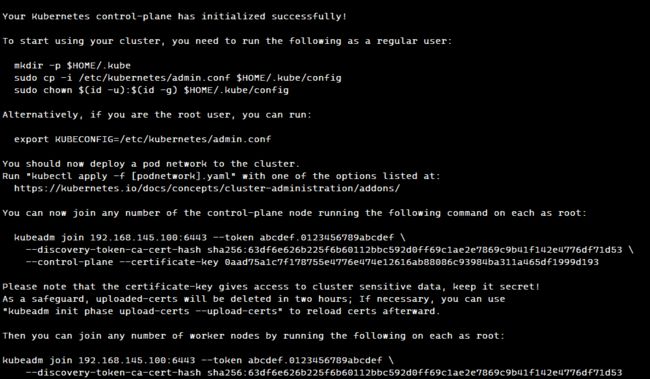

```
Your Kubernetes control-plane has initialized successfully!

To start using your cluster, you need to run the following as a regular user:

  mkdir -p $HOME/.kube
  sudo cp -i /etc/kubernetes/admin.conf $HOME/.kube/config
  sudo chown $(id -u):$(id -g) $HOME/.kube/config

Alternatively, if you are the root user, you can run:

  export KUBECONFIG=/etc/kubernetes/admin.conf

You should now deploy a pod network to the cluster.
Run "kubectl apply -f [podnetwork].yaml" with one of the options listed at:
  https:

You can now join any number of the control-plane node running the following command on each as root:

  kubeadm join 192.168.145.100:6443

Please note that the certificate-key gives access to cluster sensitive data, keep it secret!
As a safeguard, uploaded-certs will be deleted in two hours; If necessary, you can use
"kubeadm init phase upload-certs --upload-certs" to reload certs afterward.

Then you can join any number of worker nodes by running the following on each as root:

kubeadm join 192.168.145.100:6443
```

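```bash
# On master02 and master03: run the control-plane join command from the output above
kubeadm join 192.168.145.100:6443 --token <token> \
    --discovery-token-ca-cert-hash sha256:<hash> \
    --control-plane --certificate-key <certificate-key>

# On node01 and node02: run the worker join command from the output above
kubeadm join 192.168.145.100:6443 --token <token> \
    --discovery-token-ca-cert-hash sha256:<hash>
```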

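```bash
# If the initialization fails, clean up the node first
kubeadm reset -f
ipvsadm --clear
rm -rf ~/.kube
```

Then run the initialization again.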

```bash
# Configure kubectl
mkdir -p $HOME/.kube
cp -i /etc/kubernetes/admin.conf $HOME/.kube/config
chown $(id -u):$(id -g) $HOME/.kube/config

# Edit the scheduler and controller-manager static Pod manifests, then restart kubelet
vim /etc/kubernetes/manifests/kube-scheduler.yaml
vim /etc/kubernetes/manifests/kube-controller-manager.yaml
......
systemctl restart kubelet
```

Upload the flannel image archive flannel.tar and the network plugin package cni-plugins-linux-amd64-v0.8.6.tgz to /opt on all nodes, and upload kube-flannel.yml to the master node.

```bash
# On all nodes: load the flannel images and install the CNI plugins
cd /opt
unzip flannel-v0.21.5.zip
docker load -i flannel.tar
docker load -i flannel-cni-plugin.tar
mv /opt/cni /opt/cni_bak
mkdir -p /opt/cni/bin
tar xf cni-plugins-linux-amd64-v0.8.6.tgz -C /opt/cni/bin

# On the master: deploy flannel
kubectl apply -f kube-flannel.yml

mkdir -p $HOME/.kube
cp -i /etc/kubernetes/admin.conf $HOME/.kube/config
chown $(id -u):$(id -g) $HOME/.kube/config
kubectl apply -f kube-flannel.yml
```

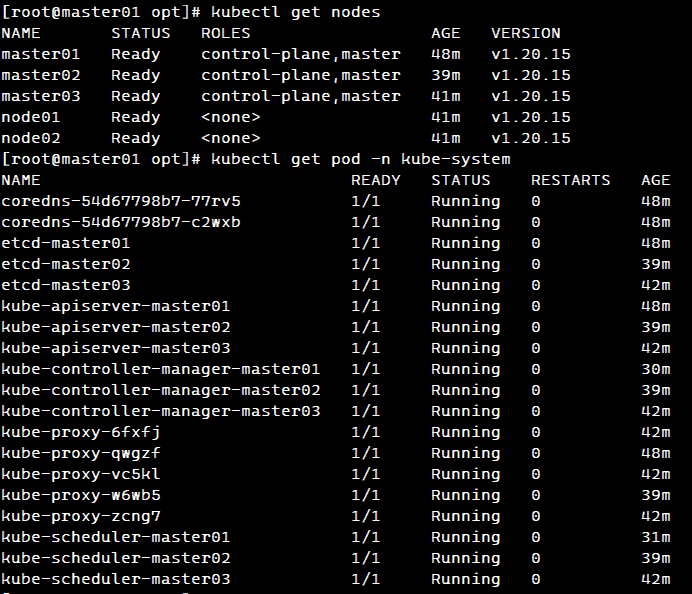

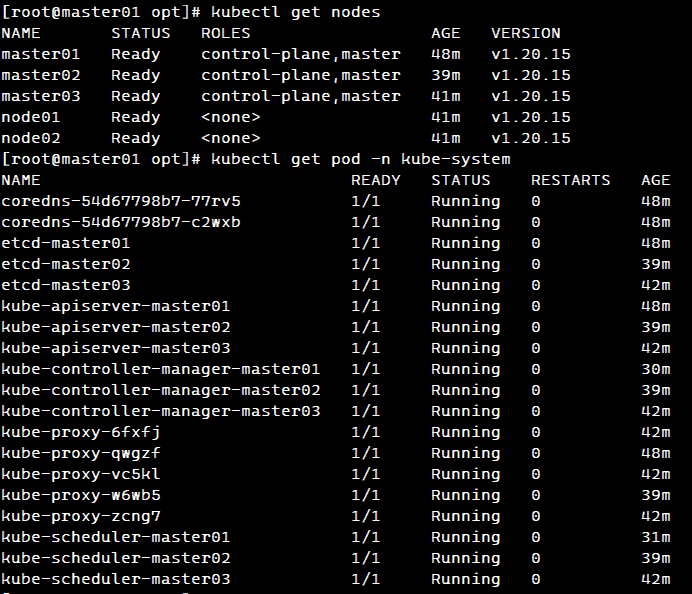

```bash
kubectl get nodes
kubectl get pod -n kube-system
```

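Summary

The kubeadm deployment process

1) Initialize all nodes and install the Docker engine plus kubeadm, kubelet and kubectl.

2) Generate the cluster initialization configuration file and modify it.

3) Use kubeadm init with that configuration file to create the K8S master (control-plane) node.

4) Install a CNI network plugin (flannel, calico, etc.).

5) On the other nodes, use kubeadm join to add them to the cluster as worker nodes.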

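Renewing the certificates of a kubeadm-deployed K8S cluster

1) Back up the old certificates and kubeconfig files

```bash
mkdir /etc/kubernetes.bak
cp -r /etc/kubernetes/pki/ /etc/kubernetes.bak
cp /etc/kubernetes/*.conf /etc/kubernetes.bak
```

2) Regenerate the certificates

```bash
# From kubeadm v1.20 the same command is also available as: kubeadm certs renew all
kubeadm alpha certs renew all
```

3) Regenerate the kubeconfig files

```bash
kubeadm init phase kubeconfig all
```

4) Restart kubelet and the Pods of the other K8S components

```bash
systemctl restart kubelet
# Move the static Pod manifests away so kubelet stops the control-plane Pods,
# wait for kubelet to notice (it rescans the directory every 20 s by default),
# then move them back so the Pods start again with the new certificates
mkdir -p /tmp/k8s-manifests
mv /etc/kubernetes/manifests/*.yaml /tmp/k8s-manifests/
sleep 20
mv /tmp/k8s-manifests/*.yaml /etc/kubernetes/manifests/
```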
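To check the result, kubeadm provides kubeadm certs check-expiration (kubeadm alpha certs check-expiration on older releases). For a single certificate file, openssl x509 -noout -enddate -in FILE prints a "notAfter=..." line. The hypothetical function `days_until_expiry` sketches turning that line into whole days remaining; it assumes GNU date, whose -d option parses the openssl date format, and accepts an optional "now" timestamp so the arithmetic can be checked deterministically.

```shell
#!/usr/bin/env bash
# Hypothetical helper; requires GNU date for "date -d".

# days_until_expiry LINE [NOW]: LINE is the "notAfter=..." output of
# "openssl x509 -noout -enddate"; NOW is a Unix timestamp (default: the
# current time). Prints the whole days remaining (negative once expired).
days_until_expiry() {
  local end now
  end="$(date -u -d "${1#notAfter=}" +%s)" || return 1
  now="${2:-$(date -u +%s)}"
  echo $(( (end - now) / 86400 ))
}

# Usage on a master (commented out):
#   days_until_expiry "$(openssl x509 -noout -enddate -in /etc/kubernetes/pki/apiserver.crt)"
```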