186. [kubernetes] Installing a Kubernetes Cluster from Binaries (Part 2)

1. Download the Kubernetes server binaries

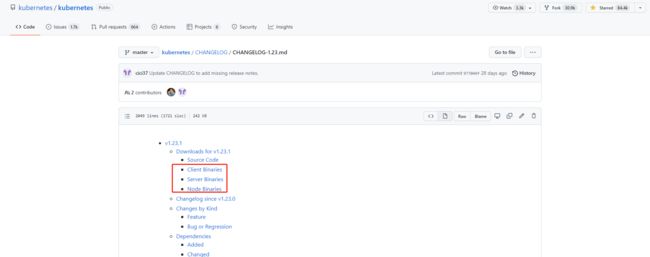

Download the Kubernetes binaries from GitHub: open the Releases page, click the CHANGELOG link, and download the archive listed under Server Binaries.

The main service binaries are listed below:

| File | Description |
|---|---|
| kube-apiserver | kube-apiserver main binary |
| kube-apiserver.docker_tag | Tag of the kube-apiserver Docker image |
| kube-apiserver.tar | kube-apiserver Docker image archive |
| kube-controller-manager | kube-controller-manager main binary |
| kube-controller-manager.docker_tag | Tag of the kube-controller-manager Docker image |
| kube-controller-manager.tar | kube-controller-manager Docker image archive |
| kube-scheduler | kube-scheduler main binary |
| kube-scheduler.docker_tag | Tag of the kube-scheduler Docker image |
| kube-scheduler.tar | kube-scheduler Docker image archive |
| kubelet | kubelet main binary |
| kube-proxy | kube-proxy main binary |
| kube-proxy.docker_tag | Tag of the kube-proxy Docker image |
| kube-proxy.tar | kube-proxy Docker image archive |
| kubectl | Command-line client |
| kubeadm | Command-line tool for installing Kubernetes clusters |
| apiextensions-apiserver | Extension API server that implements custom resource objects |
| kube-aggregator | Aggregation API server |

On the Kubernetes master node, the services to deploy are etcd, kube-apiserver, kube-controller-manager, and kube-scheduler.

On each worker node, the services to deploy are docker, kubelet, and kube-proxy.

Copy the Kubernetes executables to /usr/bin, then create a systemd unit file for each service under /usr/lib/systemd/system. That completes the software installation.
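Copying the binaries can be scripted. The helper below is a hypothetical sketch (install_k8s_bins is not part of Kubernetes); it assumes the server archive has already been extracted, and for the official kubernetes-server-linux-amd64.tar.gz the binaries normally sit in kubernetes/server/bin.

```shell
# Hypothetical helper: copy selected Kubernetes binaries from an extracted
# release directory into a target directory (e.g. /usr/bin).
# Usage: install_k8s_bins <src-dir> <dest-dir> <binary>...
install_k8s_bins() {
  local src_dir=$1 dest_dir=$2
  shift 2
  local bin
  for bin in "$@"; do
    # Fail early if a requested binary is not in the extracted archive
    if [ ! -f "$src_dir/$bin" ]; then
      echo "missing binary: $src_dir/$bin" >&2
      return 1
    fi
    # install copies the file and sets mode 0755 in one step
    install -m 0755 "$src_dir/$bin" "$dest_dir/$bin"
  done
}
```

On the master you would pass kube-apiserver kube-controller-manager kube-scheduler kubectl; on a worker node, kubelet kube-proxy. Using install instead of cp means a binary extracted without the execute bit still ends up runnable.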

2. Deploy the kube-apiserver service

Set up the CA-related certificates that kube-apiserver needs. Prepare a master_ssl.cnf file for generating an x509 v3 certificate:

[ req ]
req_extensions = v3_req
distinguished_name = req_distinguished_name

[ req_distinguished_name ]

[ v3_req ]
basicConstraints = CA:FALSE
keyUsage = nonRepudiation, digitalSignature, keyEncipherment
subjectAltName = @alt_names

[ alt_names ]
DNS.1 = kubernetes
DNS.2 = kubernetes.default
DNS.3 = kubernetes.default.svc
DNS.4 = kubernetes.default.svc.cluster.local
DNS.5 = k8s-1
DNS.6 = k8s-2
DNS.7 = k8s-3
IP.1 = 169.169.0.1
IP.2 = 172.16.0.10
IP.3 = 172.16.0.100

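Note: DNS.5 is the hostname of node 1, DNS.6 that of node 2, and so on. IP.1 does not need to change: 169.169.0.1 is the ClusterIP of the kubernetes Service, which is the first address of the --service-cluster-ip-range (169.169.0.0/16) passed to kube-apiserver below. IP.2 is the master node's IP. IP.3 is an unused IP on the same subnet (one that does not answer ping); it becomes the keepalived virtual IP in step 7.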
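Because IP.1 has to equal the first address of the service CIDR, it can be derived instead of typed by hand. A pure-shell sketch (first_service_ip is a hypothetical helper, IPv4 only):

```shell
# Hypothetical helper: print the first usable address of an IPv4 CIDR, which is
# the ClusterIP Kubernetes assigns to the "kubernetes" Service.
# Usage: first_service_ip <a.b.c.d/prefix>
first_service_ip() {
  local cidr ip prefix a b c d rest n mask first
  cidr=$1
  ip=${cidr%/*}
  prefix=${cidr#*/}
  # Split the dotted quad with parameter expansion
  a=${ip%%.*}; rest=${ip#*.}
  b=${rest%%.*}; rest=${rest#*.}
  c=${rest%%.*}; d=${rest#*.}
  # Pack into a 32-bit integer, clear the host bits, then add 1
  n=$(( ($a << 24) | ($b << 16) | ($c << 8) | $d ))
  mask=$(( (0xFFFFFFFF << (32 - $prefix)) & 0xFFFFFFFF ))
  first=$(( ($n & $mask) + 1 ))
  echo "$(( ($first >> 24) & 255 )).$(( ($first >> 16) & 255 )).$(( ($first >> 8) & 255 )).$(( $first & 255 ))"
}
```

first_service_ip 169.169.0.0/16 prints 169.169.0.1, matching IP.1; with kubeadm's default range 10.96.0.0/12 it would print 10.96.0.1.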

Then use openssl to create the kube-apiserver server certificate signed by the CA, consisting of apiserver.key and apiserver.crt, and save both files under /etc/kubernetes/pki:

openssl genrsa -out apiserver.key 2048

openssl req -new -key apiserver.key -config master_ssl.cnf -subj "/CN=172.16.0.10" -out apiserver.csr

openssl x509 -req -in apiserver.csr -CA /etc/kubernetes/pki/ca.crt -CAkey /etc/kubernetes/pki/ca.key -CAcreateserial -days 36500 -extensions v3_req -extfile master_ssl.cnf -out apiserver.crt
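Before moving on, it is worth confirming that apiserver.crt really carries every entry from alt_names, since a missing SAN only surfaces later as a TLS error. A small sketch (show_sans is a hypothetical helper that relies only on openssl x509 -text):

```shell
# Hypothetical helper: print the Subject Alternative Name list of a certificate.
# Usage: show_sans <cert.pem>
show_sans() {
  local cert=$1
  # -text dumps the decoded certificate; the SAN entries are on the line
  # right after the "X509v3 Subject Alternative Name" header
  openssl x509 -in "$cert" -noout -text \
    | grep -A1 'X509v3 Subject Alternative Name' \
    | tail -n 1 \
    | sed 's/^[[:space:]]*//'
}
```

Running show_sans /etc/kubernetes/pki/apiserver.crt should list DNS:kubernetes through DNS:k8s-3 plus IP Address:169.169.0.1, 172.16.0.10 and 172.16.0.100. openssl verify -CAfile /etc/kubernetes/pki/ca.crt apiserver.crt additionally checks that the certificate chains to the CA.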

Create the systemd unit file /usr/lib/systemd/system/kube-apiserver.service for kube-apiserver:

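cat > /usr/lib/systemd/system/kube-apiserver.service<

The configuration file /etc/kubernetes/apiserver sets all of kube-apiserver's startup parameters through the environment variable KUBE_API_ARGS. The parameters, including the CA security settings, are: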

KUBE_API_ARGS="--secure-port=6443 \
--tls-cert-file=/etc/kubernetes/pki/apiserver.crt \
--tls-private-key-file=/etc/kubernetes/pki/apiserver.key \
--client-ca-file=/etc/kubernetes/pki/ca.crt \
--apiserver-count=1 \
--endpoint-reconciler-type=master-count \
--etcd-servers=https://172.16.0.10:2379,https://172.16.0.11:2379 \
--etcd-cafile=/etc/kubernetes/pki/ca.crt \
--etcd-certfile=/etc/etcd/pki/etcd_client.crt \
--etcd-keyfile=/etc/etcd/pki/etcd_client.key \
--service-cluster-ip-range=169.169.0.0/16 \
--service-node-port-range=30000-32767 \
--allow-privileged=true \
--v=0 \
--external-hostname=172.16.0.10 \
--anonymous-auth=false \
--service-account-issuer=https://admin \
--service-account-key-file=/etc/kubernetes/pki/apiserver.key \
--service-account-signing-key-file=/etc/kubernetes/pki/apiserver.key"

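The version installed here is Kubernetes v1.23.1, which failed to start with the parameters used by older versions. Following the error messages, I added --external-hostname, --anonymous-auth, --service-account-issuer, --service-account-key-file, and --service-account-signing-key-file. Their values may not follow best practice and will be adjusted if problems come up later; for now, the service starts successfully.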
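The heredoc body of the cat command at the start of this step did not survive in this post. As a hedged sketch, assuming the kube-apiserver unit follows the same layout as the kube-scheduler unit shown in step 6, a hypothetical helper write_k8s_unit can generate it (and the kube-controller-manager unit in step 5, whose heredoc is likewise missing):

```shell
# Hypothetical helper: write a systemd unit that starts a Kubernetes binary with
# flags taken from an EnvironmentFile. Layout assumed from the kube-scheduler unit.
# Usage: write_k8s_unit <binary> <env-file> <env-var> <description> <unit-dir>
write_k8s_unit() {
  local bin=$1 env_file=$2 var=$3 desc=$4 unit_dir=$5
  # Unquoted heredoc: $bin etc. expand now; \$ leaves a literal $ in the file
  # so that systemd expands the variable when the service starts
  cat > "$unit_dir/$bin.service" <<EOF
[Unit]
Description=$desc
Documentation=https://github.com/kubernetes/kubernetes

[Service]
EnvironmentFile=$env_file
ExecStart=/usr/bin/$bin \$$var
Restart=always

[Install]
WantedBy=multi-user.target
EOF
}
```

For example: write_k8s_unit kube-apiserver /etc/kubernetes/apiserver KUBE_API_ARGS "Kubernetes API Server" /usr/lib/systemd/system, followed by systemctl daemon-reload so systemd picks up new or changed units. An unbraced $VAR used as its own word in ExecStart is split on whitespace into separate arguments, which is what lets a single variable carry all the flags.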

Once the configuration files are ready, start kube-apiserver and enable it at boot:

systemctl restart kube-apiserver && systemctl enable kube-apiserver

Verification result:

3. Create the client certificate signed by the CA

- kube-controller-manager, kube-scheduler, kubelet, and kube-proxy connect to kube-apiserver as clients, so they need a client certificate signed by the CA. Here a single certificate is created for all of them:

openssl genrsa -out client.key 2048
openssl req -new -key client.key -subj "/CN=admin" -out client.csr
openssl x509 -req -in client.csr -CA /etc/kubernetes/pki/ca.crt -CAkey /etc/kubernetes/pki/ca.key -CAcreateserial -out client.crt -days 36500

The CN in the -subj argument is set to admin; it identifies the client connecting to kube-apiserver, which uses the certificate's CN as the user name.

- Save the generated client.key and client.crt files to /etc/kubernetes/pki:

cp client.key client.crt /etc/kubernetes/pki/
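A quick sanity check of the new client certificate, as a sketch (check_client_cert is a hypothetical helper): it confirms that the certificate chains to ca.crt and prints its subject, whose CN is the user name kube-apiserver will see.

```shell
# Hypothetical helper: verify a client certificate against the CA, then print
# its subject line. Returns non-zero if verification fails.
# Usage: check_client_cert <ca.crt> <client.crt>
check_client_cert() {
  local ca=$1 cert=$2
  # openssl verify exits non-zero when the chain does not validate
  openssl verify -CAfile "$ca" "$cert" >/dev/null || return 1
  openssl x509 -in "$cert" -noout -subject
}
```

Run it as check_client_cert /etc/kubernetes/pki/ca.crt /etc/kubernetes/pki/client.crt. The exact subject formatting (for example subject=CN = admin versus subject= /CN=admin) differs between OpenSSL versions.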

4. Create the kubeconfig file that clients use to connect to kube-apiserver

- Create a single kubeconfig file that kube-controller-manager, kube-scheduler, kubelet, and kube-proxy use to connect to kube-apiserver; later it also serves as the configuration file for the kubectl command-line tool.

- The kubeconfig file mainly sets the URL for reaching kube-apiserver (here the keepalived virtual IP and HAProxy port set up in step 7) and the required CA certificate parameters:

apiVersion: v1
kind: Config
clusters:
- name: default
  cluster:
    server: https://172.16.0.100:9443
    certificate-authority: /etc/kubernetes/pki/ca.crt
users:
- name: admin
  user:
    client-certificate: /etc/kubernetes/pki/client.crt
    client-key: /etc/kubernetes/pki/client.key
contexts:
- context:
    cluster: default
    user: admin
  name: default
current-context: default

- Save the file as /etc/kubernetes/kubeconfig, the path referenced by the --kubeconfig flags below.
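If the API server address or certificate paths differ on your machines, the kubeconfig can be rendered from parameters. A hedged sketch (write_kubeconfig is a hypothetical helper whose defaults are the values used in this post):

```shell
# Hypothetical helper: write the kubeconfig shown above with overridable values.
# Usage: write_kubeconfig <out-file> [server-url] [ca] [client-cert] [client-key]
write_kubeconfig() {
  local out=$1
  local server=${2:-https://172.16.0.100:9443}
  local ca=${3:-/etc/kubernetes/pki/ca.crt}
  local cert=${4:-/etc/kubernetes/pki/client.crt}
  local key=${5:-/etc/kubernetes/pki/client.key}
  # Unquoted heredoc so the shell variables above are substituted
  cat > "$out" <<EOF
apiVersion: v1
kind: Config
clusters:
- name: default
  cluster:
    server: $server
    certificate-authority: $ca
users:
- name: admin
  user:
    client-certificate: $cert
    client-key: $key
contexts:
- context:
    cluster: default
    user: admin
  name: default
current-context: default
EOF
}
```

write_kubeconfig /etc/kubernetes/kubeconfig reproduces the file above. Passing https://172.16.0.10:6443 as the second argument would point clients straight at the master before HAProxy is running; that variation is an assumption for testing, not a step from this post. kubectl config set-cluster, set-credentials, and set-context can build an equivalent file.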

5. Deploy the kube-controller-manager service

- Create the systemd unit file /usr/lib/systemd/system/kube-controller-manager.service for kube-controller-manager:

cat > /usr/lib/systemd/system/kube-controller-manager.service<

- The configuration file /etc/kubernetes/controller-manager sets all of kube-controller-manager's startup parameters, including the CA security settings, through the environment variable KUBE_CONTROLLER_MANAGER_ARGS:

KUBE_CONTROLLER_MANAGER_ARGS="--kubeconfig=/etc/kubernetes/kubeconfig \
--leader-elect=false \
--service-cluster-ip-range=169.169.0.0/16 \
--service-account-private-key-file=/etc/kubernetes/pki/apiserver.key \
--root-ca-file=/etc/kubernetes/pki/ca.crt \
--v=0"

- Once the configuration file is ready, start kube-controller-manager on the master host and enable it at boot:

systemctl restart kube-controller-manager && systemctl enable kube-controller-manager

6. Deploy the kube-scheduler service

- Create the systemd unit file /usr/lib/systemd/system/kube-scheduler.service for kube-scheduler:

[Unit]
Description=Kubernetes Scheduler
Documentation=https://github.com/kubernetes/kubernetes

[Service]
EnvironmentFile=/etc/kubernetes/scheduler
ExecStart=/usr/bin/kube-scheduler $KUBE_SCHEDULER_ARGS
Restart=always

[Install]
WantedBy=multi-user.target

- The configuration file /etc/kubernetes/scheduler sets all of kube-scheduler's startup parameters, including the CA security settings, through the environment variable KUBE_SCHEDULER_ARGS:

KUBE_SCHEDULER_ARGS="--kubeconfig=/etc/kubernetes/kubeconfig \
--leader-elect=false \
--v=0"

- Once the configuration file is ready, start kube-scheduler on the master host and enable it at boot:

systemctl restart kube-scheduler && systemctl enable kube-scheduler

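7. Deploy a highly available load balancer with HAProxy and keepalived

- The environment here has only two nodes, so this step just walks through the procedure end to end.

- Deploy the HAProxy instance. Prepare the HAProxy configuration file haproxy.cfg: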

global
    log 127.0.0.1 local2
    chroot /var/lib/haproxy
    pidfile /var/run/haproxy.pid
    maxconn 4096
    no strict-limits
    user haproxy
    group haproxy
    daemon
    stats socket /var/lib/haproxy/stats

defaults
    mode http
    log global
    option httplog
    option dontlognull
    option http-server-close
    option forwardfor except 127.0.0.0/8
    option redispatch
    retries 3
    timeout http-request 10s
    timeout queue 1m
    timeout connect 10s
    timeout client 1m
    timeout server 1m
    timeout http-keep-alive 10s
    timeout check 10s
    maxconn 3000

frontend kube-apiserver
    mode tcp
    bind *:9443
    option tcplog
    default_backend kube-apiserver

listen stats
    mode http
    bind *:8888
    stats auth admin:password
    stats refresh 5s
    stats realm HAProxy\ Statistics
    stats uri /stats
    log 127.0.0.1 local3 err

backend kube-apiserver
    mode tcp
    balance roundrobin
    server k8s-master1 172.16.0.10:6443 check

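- In the last line, change k8s-master1 to the master node's hostname and 172.16.0.10 to the master node's IP.

- Run HAProxy as a Docker container using the haproxytech/haproxy-debian image, mounting haproxy.cfg into the container's /usr/local/etc/haproxy directory. The start command is: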

docker run -d --name k8s-haproxy --net=host --restart=always -v ${PWD}/haproxy.cfg:/usr/local/etc/haproxy/haproxy.cfg:ro haproxytech/haproxy-debian
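With more than one master, the backend section needs one server line per kube-apiserver. A hedged sketch that prints those lines from name=ip pairs (haproxy_backend_servers is a hypothetical helper; port 6443 matches the --secure-port used above):

```shell
# Hypothetical helper: print one HAProxy "server" line per master node.
# Usage: haproxy_backend_servers <name=ip>...
haproxy_backend_servers() {
  local pair name ip
  for pair in "$@"; do
    name=${pair%%=*}   # text before the first "="
    ip=${pair#*=}      # text after the first "="
    printf '    server %s %s:6443 check\n' "$name" "$ip"
  done
}
```

For example, haproxy_backend_servers k8s-master1=172.16.0.10 k8s-master2=172.16.0.11 prints two lines to paste under backend kube-apiserver; the second master name and address are illustrative only. The check keyword keeps HAProxy health-checking each server so roundrobin skips a master that is down.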

- Deploy the keepalived instance. Edit keepalived.conf as follows:

! Configuration File for keepalived
global_defs {
    router_id LVS_1
}

vrrp_script checkhaproxy
{
    script "/usr/bin/check-haproxy.sh"
    interval 2
    weight -30
}

vrrp_instance VI_1 {
    state MASTER
    interface enp7s0f1
    virtual_router_id 51
    priority 100
    advert_int 1
    virtual_ipaddress {
        172.16.0.100/24 dev enp7s0f1
    }
    authentication {
        auth_type PASS
        auth_pass password
    }
    track_script {
        checkhaproxy
    }
}

Note: the NIC name on my machine is enp7s0f1. It appears twice in the file above; change both occurrences to your actual NIC name.
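Replacing the NIC name with a single substitution avoids missing one of the two occurrences. A minimal sketch (set_keepalived_iface is a hypothetical helper; it relies on GNU sed's -i):

```shell
# Hypothetical helper: replace every occurrence of the sample NIC name in
# keepalived.conf with the local one.
# Usage: set_keepalived_iface <conf-file> <new-iface> [old-iface]
set_keepalived_iface() {
  local conf=$1 iface=$2 old=${3:-enp7s0f1}
  # The g flag replaces all matches on a line, not just the first
  sed -i "s/${old}/${iface}/g" "$conf"
}
```

To find the interface that holds the master's address, something like ip -o -4 addr show | awk '$4 ~ /^172\.16\.0\.10\//{print $2}' should print its name, which you can then pass as the second argument.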

- Create check-haproxy.sh, save it to /usr/bin, and make it executable (chmod +x), since keepalived runs it directly:

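#!/bin/bash
# Program:
#   Health check for keepalived: succeed only if something listens on TCP port 9443 (HAProxy)
# History:
#   2022/01/14 version:0.0.1
PATH=/bin:/sbin:/usr/bin:/usr/sbin:/usr/local/bin:/usr/local/sbin:~/bin
export PATH

# Count listening TCP sockets on port 9443 (matching ":9443 " avoids hits such as 19443)
count=$(netstat -lnt | grep -c ':9443 ')
if [ "${count}" -gt 0 ]
then
    exit 0
else
    exit 1
fi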

- Run keepalived as a Docker container using the osixia/keepalived image, mounting keepalived.conf into the container's /container/service/keepalived/assets directory. The start command is:

docker run -d --name k8s-keepalived --restart=always --net=host --cap-add=NET_ADMIN --cap-add=NET_BROADCAST --cap-add=NET_RAW -v ${PWD}/keepalived.conf:/container/service/keepalived/assets/keepalived.conf -v ${PWD}/check-haproxy.sh:/usr/bin/check-haproxy.sh osixia/keepalived --copy-service

- Use curl to verify that kube-apiserver is reachable through HAProxy at 172.16.0.100:9443:

[root@qijing1 client]# curl -v -k https://172.16.0.100:9443

# Output:
* About to connect() to 172.16.0.100 port 9443 (#0)
* Trying 172.16.0.100...
* Connected to 172.16.0.100 (172.16.0.100) port 9443 (#0)
* Initializing NSS with certpath: sql:/etc/pki/nssdb
* skipping SSL peer certificate verification
* NSS: client certificate not found (nickname not specified)
* SSL connection using TLS_ECDHE_RSA_WITH_AES_128_GCM_SHA256
* Server certificate:
* subject: CN=172.16.0.10
* start date: 1月 13 12:46:58 2022 GMT
* expire date: 12月 20 12:46:58 2121 GMT
* common name: 172.16.0.10
* issuer: CN=172.16.0.10
> GET / HTTP/1.1
> User-Agent: curl/7.29.0
> Host: 172.16.0.100:9443
> Accept: */*
>
< HTTP/1.1 401 Unauthorized
< Audit-Id: 6bdfd440-0a1d-4102-9ad3-4d9c636792ce
< Cache-Control: no-cache, private
< Content-Type: application/json
< Date: Fri, 14 Jan 2022 02:39:26 GMT
< Content-Length: 157
<
{
"kind": "Status",
"apiVersion": "v1",
"metadata": {},
"status": "Failure",
"message": "Unauthorized",
"reason": "Unauthorized",
"code": 401
* Connection #0 to host 172.16.0.100 left intact
}

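The TCP connection is established and the server answers with HTTP 401 Unauthorized, which shows that the backend kube-apiserver is reachable through 172.16.0.100. The request is rejected only because curl presented no client certificate while anonymous access is disabled by --anonymous-auth=false. At this point, all three services the master needs are up and running.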
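To script the same check, a hedged sketch can map the status code printed by curl -sk -o /dev/null -w '%{http_code}' to a verdict (classify_apiserver_status is a hypothetical helper). curl prints 000 when it receives no HTTP response at all.

```shell
# Hypothetical helper: interpret the HTTP status returned through the VIP.
# Usage: classify_apiserver_status <http-code>
classify_apiserver_status() {
  case "$1" in
    200) echo "reachable, authenticated" ;;
    # 401/403 still prove the path VIP -> HAProxy -> kube-apiserver works
    401|403) echo "reachable, request rejected by authn/authz" ;;
    000) echo "unreachable" ;;
    *) echo "unexpected status $1" ;;
  esac
}
```

Usage: classify_apiserver_status "$(curl -sk -o /dev/null -w '%{http_code}' https://172.16.0.100:9443)". Adding --cacert /etc/kubernetes/pki/ca.crt --cert /etc/kubernetes/pki/client.crt --key /etc/kubernetes/pki/client.key (and dropping -k) is expected to move the result out of the 401 case, since the certificate from step 3 then authenticates the request.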