二进制搭建 Kubernetes(一)

目录

一、 环境准备

二、操作系统初始化配置

2.1 关闭防火墙

2.2 关闭selinux

2.3 关闭swap

2.4 根据规划设置主机名

2.5 在master添加hosts

2.6 调整内核参数

2.7 时间同步

三、 部署 etcd 集群

3.1 准备cfssl证书生成工具

3.2 生成Etcd证书

四、部署 docker引擎

五、部署 Master 组件

一、 环境准备

k8s集群master01:192.168.130.140 kube-apiserver kube-controller-manager kube-scheduler etcd

k8s集群node1:192.168.130.151 kubelet kube-proxy docker flannel

k8s集群node2:192.168.130.152 kubelet kube-proxy docker flannel

二、操作系统初始化配置

2.1 关闭防火墙

systemctl stop firewalld

systemctl disable firewalld三台机子都关闭防火墙

清理iptables 的相关 规则 ,三台机同时操作

2.2 关闭selinux

setenforce 0

sed -i 's/enforcing/disabled/' /etc/selinux/config2.3 关闭swap

swapoff -a

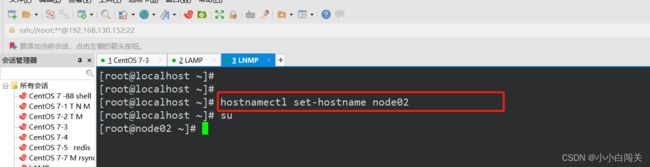

sed -ri 's/.*swap.*/#&/' /etc/fstab2.4 根据规划设置主机名

hostnamectl set-hostname master01

hostnamectl set-hostname node01

hostnamectl set-hostname node022.5 在master添加hosts

cat >> /etc/hosts << EOF

192.168.130.140 master01

192.168.130.151 node01

192.168.130.152 node02

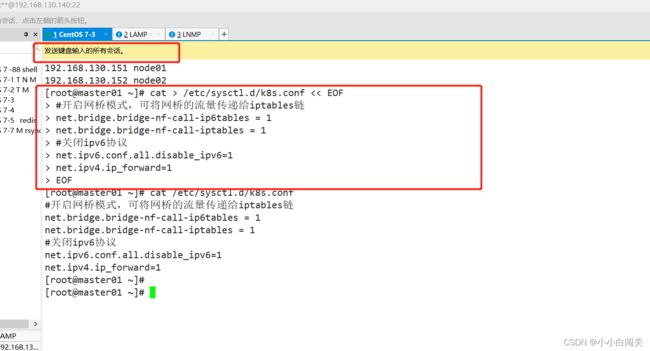

EOF2.6 调整内核参数

cat > /etc/sysctl.d/k8s.conf << EOF

#开启网桥模式,可将网桥的流量传递给iptables链

net.bridge.bridge-nf-call-ip6tables = 1

net.bridge.bridge-nf-call-iptables = 1

#关闭ipv6协议

net.ipv6.conf.all.disable_ipv6=1

net.ipv4.ip_forward=1

EOF

sysctl --system2.7 时间同步

yum install ntpdate -y

ntpdate time.windows.com三、 部署 etcd 集群

在 master01 节点上操作

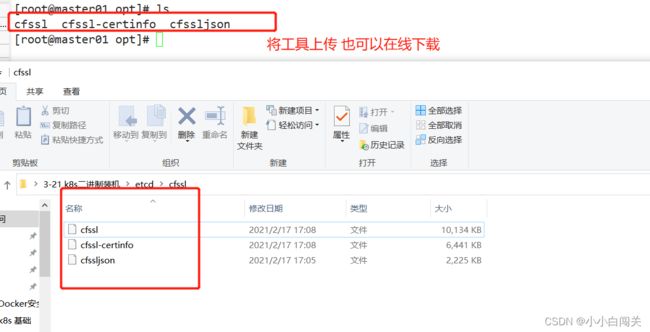

3.1 准备cfssl证书生成工具

wget https://pkg.cfssl.org/R1.2/cfssl_linux-amd64 -O /usr/local/bin/cfssl

wget https://pkg.cfssl.org/R1.2/cfssljson_linux-amd64 -O /usr/local/bin/cfssljson

wget https://pkg.cfssl.org/R1.2/cfssl-certinfo_linux-amd64 -O /usr/local/bin/cfssl-certinfo

chmod +x /usr/local/bin/cfssl*3.2 生成Etcd证书

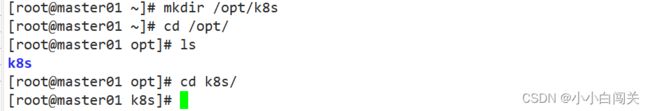

mkdir /opt/k8s

cd /opt/k8s/上传 etcd-cert.sh 和 etcd.sh 到 /opt/k8s/ 目录中

chmod +x etcd-cert.sh etcd.sh创建用于生成CA证书、etcd 服务器证书以及私钥的目录

mkdir /opt/k8s/etcd-cert

mv etcd-cert.sh etcd-cert/

cd /opt/k8s/etcd-cert/

./etcd-cert.sh#!/bin/bash

#配置证书生成策略,让 CA 软件知道颁发有什么功能的证书,生成用来签发其他组件证书的根证书

cat > ca-config.json < ca-csr.json <:使用 CSRJSON 文件生成生成新的证书和私钥。如果不添加管道符号,会直接把所有证书内容输出到屏幕。

#注意:CSRJSON 文件用的是相对路径,所以 cfssl 的时候需要 csr 文件的路径下执行,也可以指定为绝对路径。

#cfssljson 将 cfssl 生成的证书(json格式)变为文件承载式证书,-bare 用于命名生成的证书文件。

#-----------------------

#生成 etcd 服务器证书和私钥

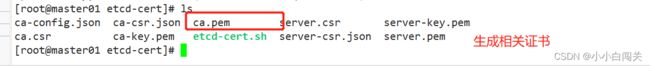

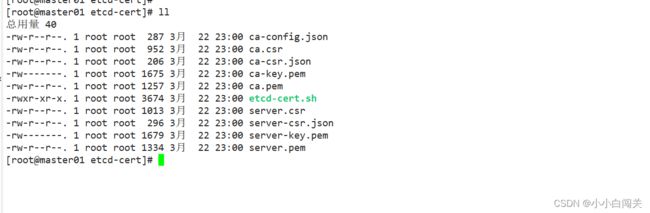

cat > server-csr.json < ls

ca-config.json ca-csr.json ca.pem server.csr server-key.pem

ca.csr ca-key.pem etcd-cert.sh server-csr.json server.pem#上传 etcd-v3.4.9-linux-amd64.tar.gz 到 /opt/k8s 目录中,启动etcd服务

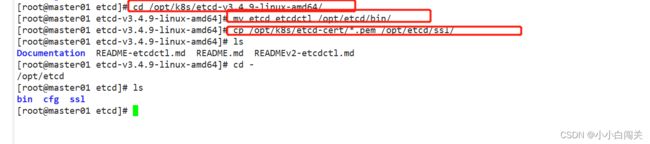

cd /opt/k8s/

tar zxvf etcd-v3.4.9-linux-amd64.tar.gz

mkdir -p /opt/etcd/{cfg,bin,ssl}

cd /opt/k8s/etcd-v3.4.9-linux-amd64/

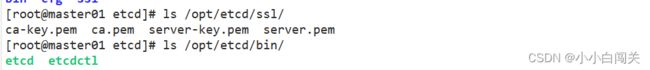

mv etcd etcdctl /opt/etcd/bin/

cp /opt/k8s/etcd-cert/*.pem /opt/etcd/ssl/

cd /opt/k8s/

./etcd.sh etcd01 192.168.80.10 etcd02=https://192.168.80.11:2380,etcd03=https://192.168.80.12:2380

ps -ef | grep etcd

scp -r /opt/etcd/ [email protected]:/opt/

scp -r /opt/etcd/ [email protected]:/opt/

scp /usr/lib/systemd/system/etcd.service [email protected]:/usr/lib/systemd/system/

scp /usr/lib/systemd/system/etcd.service [email protected]:/usr/lib/systemd/system/创建etcd目录以及它的子目录

#!/bin/bash

#example: ./etcd.sh etcd01 192.168.80.10 etcd02=https://192.168.80.11:2380,etcd03=https://192.168.80.12:2380

#创建etcd配置文件/opt/etcd/cfg/etcd

ETCD_NAME=$1

WORK_DIR=/opt/etcd

cat > $WORK_DIR/cfg/etcd < /usr/lib/systemd/system/etcd.service < 在 node01 节点上操作

vim /opt/etcd/cfg/etcd

#[Member]

ETCD_NAME="etcd02" #修改

ETCD_DATA_DIR="/var/lib/etcd/default.etcd"

ETCD_LISTEN_PEER_URLS="https://192.168.80.11:2380" #修改

ETCD_LISTEN_CLIENT_URLS="https://192.168.80.11:2379" #修改

#[Clustering]

ETCD_INITIAL_ADVERTISE_PEER_URLS="https://192.168.80.11:2380" #修改

ETCD_ADVERTISE_CLIENT_URLS="https://192.168.80.11:2379" #修改

ETCD_INITIAL_CLUSTER="etcd01=https://192.168.80.10:2380,etcd02=https://192.168.80.11:2380,etcd03=https://192.168.80.12:2380"

ETCD_INITIAL_CLUSTER_TOKEN="etcd-cluster"

ETCD_INITIAL_CLUSTER_STATE="new"

systemctl start etcd

systemctl enable etcd

systemctl status etcd在 node02 节点上操作

vim /opt/etcd/cfg/etcd

#[Member]

ETCD_NAME="etcd03" #修改

ETCD_DATA_DIR="/var/lib/etcd/default.etcd"

ETCD_LISTEN_PEER_URLS="https://192.168.80.12:2380" #修改

ETCD_LISTEN_CLIENT_URLS="https://192.168.80.12:2379" #修改

#[Clustering]

ETCD_INITIAL_ADVERTISE_PEER_URLS="https://192.168.80.12:2380" #修改

ETCD_ADVERTISE_CLIENT_URLS="https://192.168.80.12:2379" #修改

ETCD_INITIAL_CLUSTER="etcd01=https://192.168.80.10:2380,etcd02=https://192.168.80.11:2380,etcd03=https://192.168.80.12:2380"

ETCD_INITIAL_CLUSTER_TOKEN="etcd-cluster"

ETCD_INITIAL_CLUSTER_STATE="new"

systemctl start etcd

systemctl enable etcd

systemctl status etcd检查etcd群集状态

ETCDCTL_API=3 /opt/etcd/bin/etcdctl --cacert=/opt/etcd/ssl/ca.pem --cert=/opt/etcd/ssl/server.pem --key=/opt/etcd/ssl/server-key.pem --endpoints="https://192.168.80.10:2379,https://192.168.80.11:2379,https://192.168.80.12:2379" endpoint health --write-out=table

ETCDCTL_API=3 /opt/etcd/bin/etcdctl --cacert=/opt/etcd/ssl/ca.pem --cert=/opt/etcd/ssl/server.pem --key=/opt/etcd/ssl/server-key.pem --endpoints="https://192.168.80.10:2379,https://192.168.80.11:2379,https://192.168.80.12:2379" --write-out=table member list四、部署 docker引擎

//所有 node 节点部署docker引擎

yum install -y yum-utils device-mapper-persistent-data lvm2

yum-config-manager --add-repo https://mirrors.aliyun.com/docker-ce/linux/centos/docker-ce.repo

yum install -y docker-ce docker-ce-cli containerd.io

systemctl start docker.service

systemctl enable docker.service 五、部署 Master 组件

在 master01 节点上操作

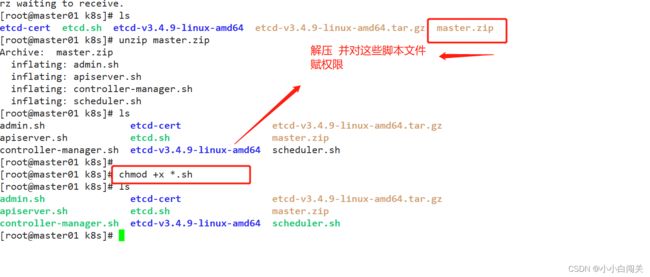

上传 master.zip 和 k8s-cert.sh 到 /opt/k8s 目录中,解压 master.zip 压缩包

cd /opt/k8s/

unzip master.zip

chmod +x *.sh

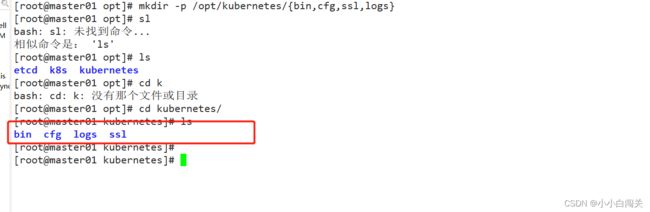

mkdir -p /opt/kubernetes/{bin,cfg,ssl,logs}创建用于生成CA证书、相关组件的证书和私钥的目录

mkdir /opt/k8s/k8s-cert

mv /opt/k8s/k8s-cert.sh /opt/k8s/k8s-cert

cd /opt/k8s/k8s-cert/

./k8s-cert.shls *pem

admin-key.pem apiserver-key.pem ca-key.pem kube-proxy-key.pem

admin.pem apiserver.pem ca.pem kube-proxy.pem

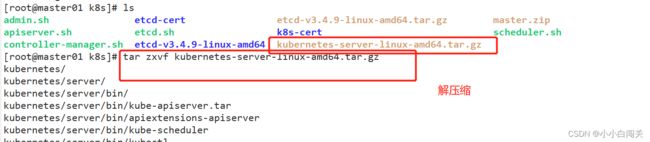

cp ca*pem apiserver*pem /opt/kubernetes/ssl/上传 kubernetes-server-linux-amd64.tar.gz 到 /opt/k8s/ 目录中,解压 kubernetes 压缩包

cd /opt/k8s/

tar zxvf kubernetes-server-linux-amd64.tar.gz

cd /opt/k8s/kubernetes/server/bin

cp kube-apiserver kubectl kube-controller-manager kube-scheduler /opt/kubernetes/bin/

ln -s /opt/kubernetes/bin/* /usr/local/bin/#创建 bootstrap token 认证文件,apiserver 启动时会调用,然后就相当于在集群内创建了一个这个用户,接下来就可以用 RBAC 给他授权

cd /opt/k8s/

vim token.sh

#!/bin/bash

#获取随机数前16个字节内容,以十六进制格式输出,并删除其中空格

BOOTSTRAP_TOKEN=$(head -c 16 /dev/urandom | od -An -t x | tr -d ' ')

#生成 token.csv 文件,按照 Token序列号,用户名,UID,用户组 的格式生成

cat > /opt/kubernetes/cfg/token.csv < 安全端口6443用于接收HTTPS请求,用于基于Token文件或客户端证书等认证

启动 scheduler 服务

./scheduler.sh

ps aux | grep kube-scheduler启动 controller-manager 服务

./controller-manager.sh

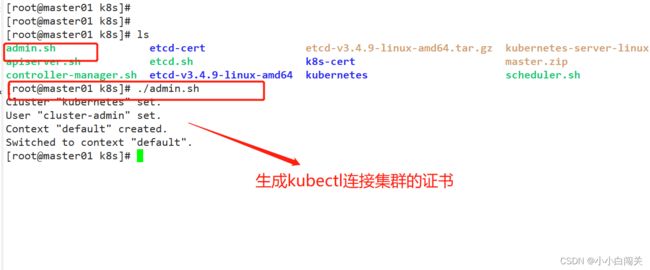

ps aux | grep kube-controller-manager生成kubectl连接集群的证书

./admin.sh

kubectl create clusterrolebinding cluster-system-anonymous --clusterrole=cluster-admin --user=system:anonymous![]()

通过kubectl工具查看当前集群组件状态

kubectl get cs

NAME STATUS MESSAGE ERROR

controller-manager Healthy ok

scheduler Healthy ok

etcd-2 Healthy {"health":"true"}

etcd-1 Healthy {"health":"true"}

etcd-0 Healthy {"health":"true"} 查看版本信息

kubectl version