Kubernetes (K8S): Pod Lifecycle and Probes

The Pod lifecycle in K8S and the ExecAction, TCPSocketAction, and HTTPGetAction probe handlers

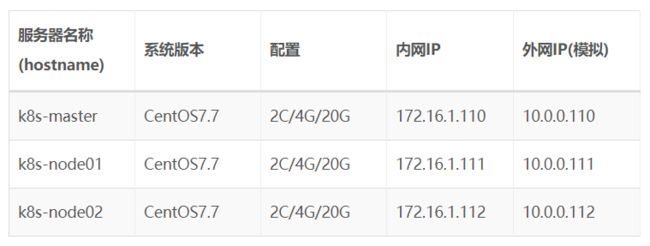

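Host configuration plan

Pod container lifecycle

About the Pause container

Every Pod runs a special container called the Pause container; the other containers are application containers. The application containers share the Pause container's network stack and mounted Volumes, so communication and data exchange among them are more efficient. You can take advantage of this in your design by placing a group of closely related service processes in the same Pod; containers in the same Pod can reach each other over localhost.

In Kubernetes, the pause container mainly provides the following for the application containers:

PID namespace: applications in the Pod can see each other's process IDs (in current Kubernetes releases this applies only when the Pod sets shareProcessNamespace: true).

Network namespace: the containers in the Pod share the same IP address and port range.

IPC namespace: the containers in the Pod can communicate using SystemV IPC or POSIX message queues.

UTS namespace: the containers in the Pod share one hostname.

Volumes (shared storage): each container in the Pod can access the Volumes defined at the Pod level.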

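Container probes

A probe is a diagnostic that the kubelet runs periodically against a container. To run the diagnostic, the kubelet calls a Handler implemented by the container. There are three types of handler:

ExecAction: runs the specified command inside the container. The diagnostic succeeds if the command exits with return code 0.

TCPSocketAction: performs a TCP check against the container's IP address on the specified port. The diagnostic succeeds if the port is open.

HTTPGetAction: performs an HTTP GET request against the container's IP address on the specified port and path. The diagnostic succeeds if the response status code is greater than or equal to 200 and less than 400.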
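The success rules of the three handlers can be sketched in a few lines of Python. This is only an illustration of the rules stated above, not the kubelet's implementation; the function names `exec_probe`, `tcp_probe`, and `http_status_ok` are hypothetical.

```python
import socket
import subprocess
import sys


def exec_probe(command):
    """ExecAction-style rule: success means the command exits with code 0."""
    return subprocess.run(command, capture_output=True).returncode == 0


def tcp_probe(host, port, timeout=1.0):
    """TCPSocketAction-style rule: success means the TCP connection opens."""
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        return False


def http_status_ok(status_code):
    """HTTPGetAction-style rule: 200 <= status < 400 counts as success."""
    return 200 <= status_code < 400


# Usage: a command that exits with 0 passes; a 404 response does not.
print(exec_probe([sys.executable, "-c", "raise SystemExit(0)"]))
print(http_status_ok(404))
```

The 1-second default for `timeout` mirrors the default timeoutSeconds described later in this article.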

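Each probe produces one of three results:

Success: the container passed the diagnostic.

Failure: the container failed the diagnostic.

Unknown: the diagnostic itself failed, so no action is taken.

The kubelet can optionally run three kinds of probes on a running container and react to them:

livenessProbe: indicates whether the container is running. If the liveness probe fails, the kubelet kills the container, and the container is subject to its restart policy. If the container does not provide a liveness probe, the default state is Success.

readinessProbe: indicates whether the container is ready to serve requests (that is, to accept incoming traffic). If the readiness probe fails, the endpoints controller removes the Pod's IP address from the endpoints of all Services that match the Pod. The readiness state before the initial delay defaults to Failure. If the container does not provide a readiness probe, the default state is Success.

startupProbe: indicates whether the application in the container has started. If a startup probe is provided, all other probes are disabled until it succeeds. If the startup probe fails, the kubelet kills the container, and the container is restarted according to its restart policy. If the container does not provide a startup probe, the default state is Success.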
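The effect of readiness on Service endpoints can be pictured with a small model. The sketch below is a deliberately simplified assumption of what the endpoints controller does (label matching plus a ready flag); the function `service_endpoints` and the dictionary layout are hypothetical, and the IPs and labels are borrowed from the demos later in this article.

```python
def service_endpoints(selector, pods):
    """Return the IPs of Pods that match `selector` and are currently ready."""
    return [
        pod["ip"]
        for pod in pods
        if pod["ready"]
        and all(pod["labels"].get(key) == value for key, value in selector.items())
    ]


pods = [
    {"ip": "10.244.2.25", "labels": {"test": "readiness-httpdget"}, "ready": False},
    {"ip": "10.244.2.27", "labels": {"test": "liveness"}, "ready": True},
]

# The matching Pod is not ready yet, so it receives no traffic.
print(service_endpoints({"test": "readiness-httpdget"}, pods))
```

Once the readiness probe passes and the ready flag flips to True, the same call returns the Pod's IP.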

Note: a Tomcat web service is a good example of an application that benefits from a startup probe.

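Container restart policy

PodSpec has a restartPolicy field whose possible values are Always, OnFailure, and Never. The default is Always.

Always means the kubelet restarts the container no matter how it terminated; OnFailure means the container is restarted only when it exits with a non-zero exit code; Never means the container is never restarted.

restartPolicy applies to all containers in the Pod. It refers only to restarts of containers by the kubelet on the same node. Failed containers are restarted by the kubelet with an exponential back-off delay (10s, 20s, 40s, ...) capped at five minutes, and the delay is reset after ten minutes of successful execution. As described in the Pod documentation, once a Pod is bound to a node, it is never rebound to another node.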
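The back-off sequence described above can be reproduced with a short generator. This is a sketch of the arithmetic only (doubling from 10 seconds with a 300-second cap); the name `restart_backoff` is hypothetical and the ten-minute reset is not modeled.

```python
from itertools import islice


def restart_backoff(base=10, cap=300):
    """Yield restart delays in seconds: doubling from `base`, capped at `cap`."""
    delay = base
    while True:
        yield delay
        delay = min(delay * 2, cap)


# First seven delays: 10, 20, 40, 80, 160, then the 300-second cap twice.
print(list(islice(restart_backoff(), 7)))
```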

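When to use liveness and readiness probes

If the process in your container can crash on its own when it hits a problem or becomes unhealthy, you do not necessarily need a liveness probe; the kubelet automatically takes the right action according to the Pod's restartPolicy.

If you want the container to be killed and restarted when a probe fails, specify a liveness probe and set restartPolicy to Always or OnFailure.

If you want traffic to be sent to the Pod only once a probe succeeds, specify a readiness probe. The readiness probe may be identical to the liveness probe, but its presence in the spec means that the Pod starts without receiving any traffic and only begins receiving traffic after the probe succeeds.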

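Pod phase

A Pod's status is defined in a PodStatus object, which has a phase field.

The phase of a Pod is a simple, high-level summary of where the Pod is in its lifecycle. It is not a comprehensive rollup of container or Pod state, nor is it meant to be a comprehensive state machine.

The number and meanings of Pod phase values are tightly guarded. Other than the values listed here, nothing else should be assumed about the phase of a Pod.

The possible values of phase are:

Pending: the Pod has been accepted by the Kubernetes system, but one or more of its containers have not been created yet. The waiting time includes the time spent scheduling the Pod and downloading images over the network, which can take a while.

Running: the Pod has been bound to a node and all of its containers have been created. At least one container is running, or is in the process of starting or restarting.

Succeeded: all containers in the Pod have terminated successfully and will not be restarted.

Failed: all containers in the Pod have terminated, and at least one container terminated in failure; that is, the container exited with a non-zero status or was terminated by the system.

Unknown: the state of the Pod could not be obtained for some reason, usually because of a communication failure with the Pod's host.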
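As a rough mental model, the phase descriptions above can be written as a small decision function. This is a simplified sketch and not the kubelet's logic: it assumes restartPolicy is Never (so a terminated container stays terminated), and the function `pod_phase` and its input layout are hypothetical.

```python
def pod_phase(bound_to_node, containers, reachable=True):
    """Derive a phase from a simplified view of a Pod (restartPolicy: Never assumed).

    `containers` is a list of dicts with two keys:
      created   - True once the container has been created
      exit_code - None while the container has not terminated, else its exit code
    """
    if not reachable:
        return "Unknown"
    if not bound_to_node or not all(c["created"] for c in containers):
        return "Pending"
    if all(c["exit_code"] is not None for c in containers):
        if all(c["exit_code"] == 0 for c in containers):
            return "Succeeded"
        return "Failed"
    return "Running"


print(pod_phase(True, [{"created": True, "exit_code": None}]))
```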

Probe demo: readiness check

Pod YAML manifest

[root@k8s-master lifecycle]# pwd

/root/k8s_practice/lifecycle

[root@k8s-master lifecycle]# cat readinessProbe-httpget.yaml

apiVersion: v1
kind: Pod
metadata:
  name: readiness-httpdget-pod
  namespace: default
  labels:
    test: readiness-httpdget
spec:
  containers:
  - name: readiness-httpget
    image: registry.cn-beijing.aliyuncs.com/google_registry/nginx:1.17
    imagePullPolicy: IfNotPresent
    readinessProbe:
      httpGet:
        path: /index1.html
        port: 80
      initialDelaySeconds: 5  # After the container starts, the kubelet waits 5 seconds before the first probe. Default 0, minimum 0.
      periodSeconds: 3  # The kubelet runs the readiness probe every 3 seconds. Default 10, minimum 1.

Create the Pod and check its status

[root@k8s-master lifecycle]# kubectl apply -f readinessProbe-httpget.yaml

pod/readiness-httpdget-pod created

[root@k8s-master lifecycle]# kubectl get pod -n default -o wide

NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES

readiness-httpdget-pod 0/1 Running 0 5s 10.244.2.25 k8s-node02

View the Pod details

[root@k8s-master lifecycle]# kubectl describe pod readiness-httpdget-pod

Name: readiness-httpdget-pod

Namespace: default

Priority: 0

Node: k8s-node02/172.16.1.112

Start Time: Sat, 23 May 2020 16:10:04 +0800

Labels: test=readiness-httpdget

Annotations: kubectl.kubernetes.io/last-applied-configuration:

{"apiVersion":"v1","kind":"Pod","metadata":{"annotations":{},"labels":{"test":"readiness-httpdget"},"name":"readiness-httpdget-pod","names...

Status: Running

IP: 10.244.2.25

IPs:

IP: 10.244.2.25

Containers:

readiness-httpget:

Container ID: docker://066d66aaef191b1db08e1b3efba6a9be75378d2fe70e99400fc513b91242089c

………………

Port:

Host Port:

State: Running

Started: Sat, 23 May 2020 16:10:05 +0800

Ready: False ##### status is False

Restart Count: 0

Readiness: http-get http://:80/index1.html delay=5s timeout=1s period=3s #success=1 #failure=3

Environment:

Mounts:

/var/run/secrets/kubernetes.io/serviceaccount from default-token-v48g4 (ro)

Conditions:

Type Status

Initialized True

Ready False ##### False

ContainersReady False ##### False

PodScheduled True

Volumes:

default-token-v48g4:

Type: Secret (a volume populated by a Secret)

SecretName: default-token-v48g4

Optional: false

QoS Class: BestEffort

Node-Selectors:

Tolerations: node.kubernetes.io/not-ready:NoExecute for 300s

node.kubernetes.io/unreachable:NoExecute for 300s

Events:

Type Reason Age From Message

---- ------ ---- ---- -------

Normal Scheduled default-scheduler Successfully assigned default/readiness-httpdget-pod to k8s-node02

Normal Pulled 49s kubelet, k8s-node02 Container image "registry.cn-beijing.aliyuncs.com/google_registry/nginx:1.17" already present on machine

Normal Created 49s kubelet, k8s-node02 Created container readiness-httpget

Normal Started 49s kubelet, k8s-node02 Started container readiness-httpget

Warning Unhealthy 2s (x15 over 44s) kubelet, k8s-node02 Readiness probe failed: HTTP probe failed with statuscode: 404

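As shown above, the container is not ready: the probe requests /index1.html, which does not exist yet, so the HTTP check returns 404.

Enter the Pod's first container and create the missing file: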

[root@k8s-master lifecycle]# kubectl exec -it readiness-httpdget-pod -c readiness-httpget bash

root@readiness-httpdget-pod:/# cd /usr/share/nginx/html

root@readiness-httpdget-pod:/usr/share/nginx/html# ls

50x.html index.html

root@readiness-httpdget-pod:/usr/share/nginx/html# echo "readiness-httpdget info" > index1.html

root@readiness-httpdget-pod:/usr/share/nginx/html# ls

50x.html index.html index1.html

Then check the Pod status and details again

[root@k8s-master lifecycle]# kubectl get pod -n default -o wide

NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES

readiness-httpdget-pod 1/1 Running 0 2m30s 10.244.2.25 k8s-node02

[root@k8s-master lifecycle]# kubectl describe pod readiness-httpdget-pod

Name: readiness-httpdget-pod

Namespace: default

Priority: 0

Node: k8s-node02/172.16.1.112

Start Time: Sat, 23 May 2020 16:10:04 +0800

Labels: test=readiness-httpdget

Annotations: kubectl.kubernetes.io/last-applied-configuration:

{"apiVersion":"v1","kind":"Pod","metadata":{"annotations":{},"labels":{"test":"readiness-httpdget"},"name":"readiness-httpdget-pod","names...

Status: Running

IP: 10.244.2.25

IPs:

IP: 10.244.2.25

Containers:

readiness-httpget:

Container ID: docker://066d66aaef191b1db08e1b3efba6a9be75378d2fe70e99400fc513b91242089c

………………

Port:

Host Port:

State: Running

Started: Sat, 23 May 2020 16:10:05 +0800

Ready: True ##### status is True

Restart Count: 0

Readiness: http-get http://:80/index1.html delay=5s timeout=1s period=3s #success=1 #failure=3

Environment:

Mounts:

/var/run/secrets/kubernetes.io/serviceaccount from default-token-v48g4 (ro)

Conditions:

Type Status

Initialized True

Ready True ##### True

ContainersReady True ##### True

PodScheduled True

Volumes:

default-token-v48g4:

Type: Secret (a volume populated by a Secret)

SecretName: default-token-v48g4

Optional: false

QoS Class: BestEffort

Node-Selectors:

Tolerations: node.kubernetes.io/not-ready:NoExecute for 300s

node.kubernetes.io/unreachable:NoExecute for 300s

Events:

Type Reason Age From Message

---- ------ ---- ---- -------

Normal Scheduled default-scheduler Successfully assigned default/readiness-httpdget-pod to k8s-node02

Normal Pulled 2m33s kubelet, k8s-node02 Container image "registry.cn-beijing.aliyuncs.com/google_registry/nginx:1.17" already present on machine

Normal Created 2m33s kubelet, k8s-node02 Created container readiness-httpget

Normal Started 2m33s kubelet, k8s-node02 Started container readiness-httpget

Warning Unhealthy 85s (x22 over 2m28s) kubelet, k8s-node02 Readiness probe failed: HTTP probe failed with statuscode: 404

As shown above, the container is now ready.

Probe demo: liveness check

Liveness check: exec command

Pod YAML manifest

[root@k8s-master lifecycle]# pwd

/root/k8s_practice/lifecycle

[root@k8s-master lifecycle]# cat livenessProbe-exec.yaml

apiVersion: v1
kind: Pod
metadata:
  name: liveness-exec-pod
  labels:
    test: liveness
spec:
  containers:
  - name: liveness-exec
    image: registry.cn-beijing.aliyuncs.com/google_registry/busybox:1.24
    imagePullPolicy: IfNotPresent
    args:
    - /bin/sh
    - -c
    - touch /tmp/healthy; sleep 30; rm -rf /tmp/healthy; sleep 600
    livenessProbe:
      exec:
        command:
        - cat
        - /tmp/healthy
      initialDelaySeconds: 5  # wait 5 seconds before the first probe
      periodSeconds: 3  # probe every 3 seconds

For the first 30 seconds of the container's life, the file /tmp/healthy exists, so during that time the command cat /tmp/healthy returns a success code. After 30 seconds, cat /tmp/healthy returns a failure code.
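To see roughly when the restart is triggered, the timeline can be simulated. The sketch below is an idealized model, assuming probes fire exactly at initialDelaySeconds and then every periodSeconds, and that failureThreshold keeps its default of 3; real kubelet timing includes jitter, so the actual moment will differ slightly. The function `seconds_until_restart` is hypothetical.

```python
def seconds_until_restart(file_removed_at=30, initial_delay=5, period=3,
                          failure_threshold=3):
    """Return the time (seconds after container start) of the probe that triggers a restart."""
    failures = 0
    t = initial_delay
    while True:
        # `cat /tmp/healthy` succeeds only while the file still exists.
        healthy = t < file_removed_at
        failures = 0 if healthy else failures + 1
        if failures >= failure_threshold:
            return t
        t += period


# Probes at 5, 8, ..., 29 succeed; probes at 32, 35, and 38 fail.
print(seconds_until_restart())
```

Under these assumptions the third consecutive failure lands about 38 seconds after the container starts, which is consistent with checking the Pod again "after 35 seconds" in the next step.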

Create the Pod

[root@k8s-master lifecycle]# kubectl apply -f livenessProbe-exec.yaml

pod/liveness-exec-pod created

Within the first 30 seconds, view the Pod description:

[root@k8s-master lifecycle]# kubectl get pod -o wide

NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES

liveness-exec-pod 1/1 Running 0 17s 10.244.2.21 k8s-node02

[root@k8s-master lifecycle]# kubectl describe pod liveness-exec-pod

Name: liveness-exec-pod

Namespace: default

Priority: 0

Node: k8s-node02/172.16.1.112

………………

Events:

Type Reason Age From Message

---- ------ ---- ---- -------

Normal Scheduled 25s default-scheduler Successfully assigned default/liveness-exec-pod to k8s-node02

Normal Pulled 24s kubelet, k8s-node02 Container image "registry.cn-beijing.aliyuncs.com/google_registry/busybox:1.24" already present on machine

Normal Created 24s kubelet, k8s-node02 Created container liveness-exec

Normal Started 24s kubelet, k8s-node02 Started container liveness-exec

The output shows that the liveness probe is succeeding.

After 35 seconds, view the Pod description again:

[root@k8s-master lifecycle]# kubectl get pod -o wide # RESTARTS has increased by 1

NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES

liveness-exec-pod 1/1 Running 1 89s 10.244.2.22 k8s-node02

[root@k8s-master lifecycle]# kubectl describe pod liveness-exec-pod

………………

Events:

Type Reason Age From Message

---- ------ ---- ---- -------

Normal Scheduled 42s default-scheduler Successfully assigned default/liveness-exec-pod to k8s-node02

Normal Pulled 41s kubelet, k8s-node02 Container image "registry.cn-beijing.aliyuncs.com/google_registry/busybox:1.24" already present on machine

Normal Created 41s kubelet, k8s-node02 Created container liveness-exec

Normal Started 41s kubelet, k8s-node02 Started container liveness-exec

Warning Unhealthy 2s (x3 over 8s) kubelet, k8s-node02 Liveness probe failed: cat: can't open '/tmp/healthy': No such file or directory

Normal Killing 2s kubelet, k8s-node02 Container liveness-exec failed liveness probe, will be restarted

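As shown above, the events at the bottom of the output report that the liveness probe failed, so the container was killed and recreated.

Liveness check: HTTP request

Pod YAML manifest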

[root@k8s-master lifecycle]# pwd

/root/k8s_practice/lifecycle

[root@k8s-master lifecycle]# cat livenessProbe-httpget.yaml

apiVersion: v1
kind: Pod
metadata:
  name: liveness-httpget-pod
  labels:
    test: liveness
spec:
  containers:
  - name: liveness-httpget
    image: registry.cn-beijing.aliyuncs.com/google_registry/nginx:1.17
    imagePullPolicy: IfNotPresent
    ports:
    - name: http
      containerPort: 80
    livenessProbe:
      httpGet:  # Any status code >= 200 and < 400 means success; any other code means failure.
        path: /index.html
        port: 80
        httpHeaders:  # Custom HTTP headers for the request. Repeated headers are allowed.
        - name: Custom-Header
          value: Awesome
      initialDelaySeconds: 5
      periodSeconds: 3

Create the Pod and check its status

[root@k8s-master lifecycle]# kubectl apply -f livenessProbe-httpget.yaml

pod/liveness-httpget-pod created

[root@k8s-master lifecycle]# kubectl get pod -n default -o wide

NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES

liveness-httpget-pod 1/1 Running 0 3s 10.244.2.27 k8s-node02

View the Pod details

[root@k8s-master lifecycle]# kubectl describe pod liveness-httpget-pod

Name: liveness-httpget-pod

Namespace: default

Priority: 0

Node: k8s-node02/172.16.1.112

Start Time: Sat, 23 May 2020 16:45:25 +0800

Labels: test=liveness

Annotations: kubectl.kubernetes.io/last-applied-configuration:

{"apiVersion":"v1","kind":"Pod","metadata":{"annotations":{},"labels":{"test":"liveness"},"name":"liveness-httpget-pod","namespace":"defau...

Status: Running

IP: 10.244.2.27

IPs:

IP: 10.244.2.27

Containers:

liveness-httpget:

Container ID: docker://4b42a351414667000fe94d4f3166d75e72a3401e549fed723126d2297124ea1a

………………

Port: 80/TCP

Host Port: 8080/TCP

State: Running

Started: Sat, 23 May 2020 16:45:26 +0800

Ready: True

Restart Count: 0

Liveness: http-get http://:80/index.html delay=5s timeout=1s period=3s #success=1 #failure=3

Environment:

Mounts:

/var/run/secrets/kubernetes.io/serviceaccount from default-token-v48g4 (ro)

Conditions:

Type Status

Initialized True

Ready True

ContainersReady True

PodScheduled True

………………

Events:

Type Reason Age From Message

---- ------ ---- ---- -------

Normal Scheduled default-scheduler Successfully assigned default/liveness-httpget-pod to k8s-node02

Normal Pulled 5m52s kubelet, k8s-node02 Container image "registry.cn-beijing.aliyuncs.com/google_registry/nginx:1.17" already present on machine

Normal Created 5m52s kubelet, k8s-node02 Created container liveness-httpget

Normal Started 5m52s kubelet, k8s-node02 Started container liveness-httpget

As shown above, the Pod's liveness check is passing.

Enter the Pod's first container and delete the file that the probe requests:

[root@k8s-master lifecycle]# kubectl exec -it liveness-httpget-pod -c liveness-httpget bash

root@liveness-httpget-pod:/# cd /usr/share/nginx/html/

root@liveness-httpget-pod:/usr/share/nginx/html# ls

50x.html index.html

root@liveness-httpget-pod:/usr/share/nginx/html# rm -f index.html

root@liveness-httpget-pod:/usr/share/nginx/html# ls

50x.html

Check the Pod status and details again; the Pod's RESTARTS count has gone from 0 to 1.

[root@k8s-master lifecycle]# kubectl get pod -n default -o wide # RESTARTS went from 0 to 1

NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES

liveness-httpget-pod 1/1 Running 1 8m16s 10.244.2.27 k8s-node02

[root@k8s-master lifecycle]# kubectl describe pod liveness-httpget-pod

Name: liveness-httpget-pod

Namespace: default

Priority: 0

Node: k8s-node02/172.16.1.112

Start Time: Sat, 23 May 2020 16:45:25 +0800

Labels: test=liveness

Annotations: kubectl.kubernetes.io/last-applied-configuration:

{"apiVersion":"v1","kind":"Pod","metadata":{"annotations":{},"labels":{"test":"liveness"},"name":"liveness-httpget-pod","namespace":"defau...

Status: Running

IP: 10.244.2.27

IPs:

IP: 10.244.2.27

Containers:

liveness-httpget:

Container ID: docker://5d0962d383b1df5e59cd3d1100b259ff0415ac37c8293b17944034f530fb51c8

………………

Port: 80/TCP

Host Port: 8080/TCP

State: Running

Started: Sat, 23 May 2020 16:53:38 +0800

Last State: Terminated

Reason: Completed

Exit Code: 0

Started: Sat, 23 May 2020 16:45:26 +0800

Finished: Sat, 23 May 2020 16:53:38 +0800

Ready: True

Restart Count: 1

Liveness: http-get http://:80/index.html delay=5s timeout=1s period=3s #success=1 #failure=3

Environment:

Mounts:

/var/run/secrets/kubernetes.io/serviceaccount from default-token-v48g4 (ro)

Conditions:

Type Status

Initialized True

Ready True

ContainersReady True

PodScheduled True

Volumes:

default-token-v48g4:

Type: Secret (a volume populated by a Secret)

SecretName: default-token-v48g4

Optional: false

QoS Class: BestEffort

Node-Selectors:

Tolerations: node.kubernetes.io/not-ready:NoExecute for 300s

node.kubernetes.io/unreachable:NoExecute for 300s

Events:

Type Reason Age From Message

---- ------ ---- ---- -------

Normal Scheduled default-scheduler Successfully assigned default/liveness-httpget-pod to k8s-node02

Normal Pulled 7s (x2 over 8m19s) kubelet, k8s-node02 Container image "registry.cn-beijing.aliyuncs.com/google_registry/nginx:1.17" already present on machine

Normal Created 7s (x2 over 8m19s) kubelet, k8s-node02 Created container liveness-httpget

Normal Started 7s (x2 over 8m19s) kubelet, k8s-node02 Started container liveness-httpget

Warning Unhealthy 7s (x3 over 13s) kubelet, k8s-node02 Liveness probe failed: HTTP probe failed with statuscode: 404

Normal Killing 7s kubelet, k8s-node02 Container liveness-httpget failed liveness probe, will be restarted

As shown above, when the liveness-httpget probe failed, the container was restarted.

Liveness check: TCP port

Pod YAML manifest

[root@k8s-master lifecycle]# pwd

/root/k8s_practice/lifecycle

[root@k8s-master lifecycle]# cat livenessProbe-tcp.yaml

apiVersion: v1
kind: Pod
metadata:
  name: liveness-tcp-pod
  labels:
    test: liveness
spec:
  containers:
  - name: liveness-tcp
    image: registry.cn-beijing.aliyuncs.com/google_registry/nginx:1.17
    imagePullPolicy: IfNotPresent
    ports:
    - name: http
      containerPort: 80
    livenessProbe:
      tcpSocket:
        port: 80
      initialDelaySeconds: 5
      periodSeconds: 3

TCP probe: normal case

Create the Pod and check its status

[root@k8s-master lifecycle]# kubectl apply -f livenessProbe-tcp.yaml

pod/liveness-tcp-pod created

[root@k8s-master lifecycle]# kubectl get pod -o wide

NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES

liveness-tcp-pod 1/1 Running 0 50s 10.244.4.23 k8s-node01

View the Pod details

[root@k8s-master lifecycle]# kubectl describe pod liveness-tcp-pod

Name: liveness-tcp-pod

Namespace: default

Priority: 0

Node: k8s-node01/172.16.1.111

Start Time: Sat, 23 May 2020 18:02:46 +0800

Labels: test=liveness

Annotations: kubectl.kubernetes.io/last-applied-configuration:

{"apiVersion":"v1","kind":"Pod","metadata":{"annotations":{},"labels":{"test":"liveness"},"name":"liveness-tcp-pod","namespace":"default"}...

Status: Running

IP: 10.244.4.23

IPs:

IP: 10.244.4.23

Containers:

liveness-tcp:

Container ID: docker://4de13e7c2e36c028b2094bf9dcf8e2824bfd15b8c45a0b963e301b91ee1a926d

………………

Port: 80/TCP

Host Port: 8080/TCP

State: Running

Started: Sat, 23 May 2020 18:03:04 +0800

Ready: True

Restart Count: 0

Liveness: tcp-socket :80 delay=5s timeout=1s period=3s #success=1 #failure=3

Environment:

Mounts:

/var/run/secrets/kubernetes.io/serviceaccount from default-token-v48g4 (ro)

Conditions:

Type Status

Initialized True

Ready True

ContainersReady True

PodScheduled True

Volumes:

default-token-v48g4:

Type: Secret (a volume populated by a Secret)

SecretName: default-token-v48g4

Optional: false

QoS Class: BestEffort

Node-Selectors:

Tolerations: node.kubernetes.io/not-ready:NoExecute for 300s

node.kubernetes.io/unreachable:NoExecute for 300s

Events:

Type Reason Age From Message

---- ------ ---- ---- -------

Normal Scheduled default-scheduler Successfully assigned default/liveness-tcp-pod to k8s-node01

Normal Pulling 74s kubelet, k8s-node01 Pulling image "registry.cn-beijing.aliyuncs.com/google_registry/nginx:1.17"

Normal Pulled 58s kubelet, k8s-node01 Successfully pulled image "registry.cn-beijing.aliyuncs.com/google_registry/nginx:1.17"

Normal Created 57s kubelet, k8s-node01 Created container liveness-tcp

Normal Started 57s kubelet, k8s-node01 Started container liveness-tcp

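This is the normal case: the liveness probe succeeds.

Simulating a TCP probe failure

Change the probed TCP port in the YAML file above as follows:

livenessProbe:
  tcpSocket:
    port: 8090  # previously 80

Delete the previous Pod, recreate it, and check its status after a moment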

[root@k8s-master lifecycle]# kubectl apply -f livenessProbe-tcp.yaml

pod/liveness-tcp-pod created

[root@k8s-master lifecycle]# kubectl get pod -o wide # RESTARTS is now 1; it becomes 2 a little later and keeps increasing

NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES

liveness-tcp-pod 1/1 Running 1 25s 10.244.2.28 k8s-node02

Pod details

[root@k8s-master lifecycle]# kubectl describe pod liveness-tcp-pod

Name: liveness-tcp-pod

Namespace: default

Priority: 0

Node: k8s-node02/172.16.1.112

Start Time: Sat, 23 May 2020 18:08:32 +0800

Labels: test=liveness

………………

Events:

Type Reason Age From Message

---- ------ ---- ---- -------

Normal Scheduled default-scheduler Successfully assigned default/liveness-tcp-pod to k8s-node02

Normal Pulled 12s (x2 over 29s) kubelet, k8s-node02 Container image "registry.cn-beijing.aliyuncs.com/google_registry/nginx:1.17" already present on machine

Normal Created 12s (x2 over 29s) kubelet, k8s-node02 Created container liveness-tcp

Normal Started 12s (x2 over 28s) kubelet, k8s-node02 Started container liveness-tcp

Normal Killing 12s kubelet, k8s-node02 Container liveness-tcp failed liveness probe, will be restarted

Warning Unhealthy 0s (x4 over 18s) kubelet, k8s-node02 Liveness probe failed: dial tcp 10.244.2.28:8090: connect: connection refused

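As shown above, the liveness-tcp probe failed and the container was restarted.

Probe demo: startup check

Some existing applications need a long initialization time at startup (Tomcat, for example). In such cases it is tricky to set liveness probe parameters without compromising the fast response to the deadlocks that motivated the probe in the first place.

The trick is to set up a startup probe that uses the same command, HTTP, or TCP check, with failureThreshold * periodSeconds long enough to cover the worst-case startup time.

An example:

Pod YAML file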

[root@k8s-master lifecycle]# pwd

/root/k8s_practice/lifecycle

[root@k8s-master lifecycle]# cat startupProbe-httpget.yaml

apiVersion: v1
kind: Pod
metadata:
  name: startup-pod
  labels:
    test: startup
spec:
  containers:
  - name: startup
    image: registry.cn-beijing.aliyuncs.com/google_registry/tomcat:7.0.94-jdk8-openjdk
    imagePullPolicy: IfNotPresent
    ports:
    - name: web-port
      containerPort: 8080
      hostPort: 8080
    livenessProbe:
      httpGet:
        path: /index.jsp
        port: web-port
      initialDelaySeconds: 5
      periodSeconds: 10
      failureThreshold: 1
    startupProbe:
      httpGet:
        path: /index.jsp
        port: web-port
      periodSeconds: 10  # The kubelet runs the startup probe every 10 seconds. Default 10, minimum 1.
      failureThreshold: 30  # maximum number of failed probes allowed

Start the Pod and check its status

[root@k8s-master lifecycle]# kubectl apply -f startupProbe-httpget.yaml

pod/startup-pod created

[root@k8s-master lifecycle]# kubectl get pod -o wide

NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES

startup-pod 1/1 Running 0 8m46s 10.244.4.26 k8s-node01

View the Pod details

[root@k8s-master ~]# kubectl describe pod startup-pod

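With the startup probe in place, the application has at most 5 minutes (30 * 10 = 300s) to finish starting. Once the startup probe has succeeded once, the liveness probe takes over and can respond quickly to a container deadlock. If the startup probe never succeeds, the container is killed after 300 seconds and is then subject to the Pod's restartPolicy.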
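The two time budgets in this manifest follow from the same product. A minimal sketch of the arithmetic, using the values from the YAML above (the helper name `failure_budget_seconds` is hypothetical):

```python
def failure_budget_seconds(failure_threshold, period_seconds):
    """Time covered by `failure_threshold` consecutive failed probes."""
    return failure_threshold * period_seconds


# startupProbe: failureThreshold=30, periodSeconds=10
startup_budget = failure_budget_seconds(30, 10)
# livenessProbe: failureThreshold=1, periodSeconds=10
liveness_budget = failure_budget_seconds(1, 10)

print(startup_budget, "seconds allowed for startup")
print(liveness_budget, "seconds of failed liveness probing before a restart")
```

This is why the pairing works: the startup probe tolerates a slow start, while the liveness probe can keep a strict threshold once the application is up.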

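Probe configuration in detail

The following fields give precise control over the behavior of liveness and readiness checks:

initialDelaySeconds: number of seconds to wait after the container starts before liveness and readiness probes are initiated. Default 0, minimum 0.

periodSeconds: how often (in seconds) to run the probe. Default 10, minimum 1.

timeoutSeconds: number of seconds after which the probe times out. Default 1, minimum 1.

successThreshold: minimum number of consecutive successes for the probe to be considered successful after it has failed. Default 1, minimum 1. Must be 1 for liveness probes.

failureThreshold: when a probe fails, the number of times Kubernetes retries before giving up. For a liveness probe, giving up means restarting the container. For a readiness probe, giving up means the Pod is marked Unready. Default 3, minimum 1.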
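How successThreshold and failureThreshold interact can be shown with a small state tracker. This is a sketch of the counting rule as described above, not kubelet code; the class `ProbeTracker` is hypothetical.

```python
class ProbeTracker:
    """Track consecutive probe results and flip state only at the thresholds."""

    def __init__(self, success_threshold=1, failure_threshold=3, healthy=True):
        self.success_threshold = success_threshold
        self.failure_threshold = failure_threshold
        self.healthy = healthy
        self._last = None  # result of the previous probe
        self._streak = 0   # length of the current run of identical results

    def observe(self, ok):
        """Record one probe result and return the resulting state."""
        self._streak = self._streak + 1 if ok == self._last else 1
        self._last = ok
        if ok and self._streak >= self.success_threshold:
            self.healthy = True
        elif not ok and self._streak >= self.failure_threshold:
            self.healthy = False
        return self.healthy


tracker = ProbeTracker(success_threshold=2, failure_threshold=3)
states = [tracker.observe(result) for result in (False, False, False, True, True)]
print(states)
```

With these settings the state only turns unhealthy on the third consecutive failure and only recovers on the second consecutive success.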

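HTTP probes accept additional fields under httpGet:

host: host name to connect to; defaults to the Pod IP. You can also set the "Host" header in httpHeaders instead.

scheme: scheme used to connect to the host (HTTP or HTTPS). Defaults to HTTP.

path: path to access on the HTTP server.

httpHeaders: custom headers to set in the request. HTTP allows repeated headers.

port: number or name of the port to access on the container. A number must be in the range 1 to 65535.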
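To make the role of each httpGet field concrete, the sketch below assembles the URL a probe would request from those fields. It is illustrative only: the function `probe_url` is hypothetical, it handles numeric ports only (named ports would first have to be resolved against the container's ports list), and the sample IP is the one from the liveness demo above.

```python
def probe_url(pod_ip, port, path="/", scheme="HTTP", host=None):
    """Build the URL for an httpGet probe from its fields.

    `host` falls back to the Pod IP and `scheme` defaults to HTTP,
    matching the defaults listed above.
    """
    if not 1 <= port <= 65535:
        raise ValueError("port must be between 1 and 65535")
    target = host or pod_ip
    if not path.startswith("/"):
        path = "/" + path
    return f"{scheme.lower()}://{target}:{port}{path}"


print(probe_url("10.244.2.27", 80, "/index.html"))
```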

That's all!