- Guava LoadingCache code example

IM 胡鹏飞

Java, utility class introduction

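`public class Test2 { public static void main(String[] args) throws Exception { LoadingCache cache = CacheBuilder.newBuilder()` // set the concurrency level to 8 (the concurrency level is the number of threads that can write to the cache at the same time) `.concurrencyLevel(8)` // set the initial capacity of the cache container to 10 `.initialCapacity(10)` // set the cache…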

- k8s: Installing the Helm private repository ChartMuseum and the helm-push plugin, uploading charts, and installing Zookeeper

云游

docker, helm, helm-push

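ChartMuseum is an open-source system in the Kubernetes ecosystem for storing, managing, and publishing Helm Charts; it mainly extends the functionality of the Helm package manager. Core features: Centralized storage: a central repository for Charts, with version management and access control. Cross-cluster deployment: Charts can be shared across multiple clusters, which simplifies deployment. Offline deployment: works in environments without internet access; Charts can be stored locally or on a LAN. HTTP interface: the service is exposed over HTTP, and users…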

- EMQX Community Edition: single-node and cluster deployment

pcj_888

MQTT, MQTT, EMQ

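EMQ can be deployed with Docker, directly on the host machine, or on k8s, either as a single node or as a cluster. The following covers single-node and cluster deployment of EMQX Community Edition. 1. Docker single-node deployment. Officially recommended minimum configuration: 2 CPU cores, 4 GB RAM. Pull the container image: `docker pull emqx/emqx:5.3.2`. Start the container: `docker run -d --name emqx -p 1883:1883 -p 8083:8083 -p 8883:8883 -p 8084:8084 -p 18083:18083 emqx…`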

- Fun with Docker | Deploying the gopeed download tool with Docker

心随_风动

Fun with Docker, docker, containers, operations

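Fun with Docker | Deploying the gopeed download tool with Docker. Contents: Preface; 1. Introduction to gopeed (overview, key features); 2. System requirements (environment requirements; environment checks: Docker version, OS version); 3. Deploying the gopeed service (pull the image, create the container, check container status, check service ports, security settings); 4. Accessing the gopeed application; 5. Testing and downloading; 6. Summary. Preface: In today's era of information overload, efficiently obtaining and managing online resources has become especially important. Whether downloading large files or transferring data day to day, a stable…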

- Docker: specifying a bridge network and a bridge IP

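`$ docker network ls` lists three default networks: `bridge` (NETWORK ID 7fca4eb8c647, DRIVER `bridge`), `none` (NETWORK ID 9f904ee27bf5, DRIVER `null`), and `host` (NETWORK ID cf03ee007fb4, DRIVER `host`). Bridge: the default bridge network. You can view its details with the `docker network inspect` command; `docker run` automatically attaches new containers to this network. Host: in host mode, when the container is started…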

- Docker container internals explained: understanding containerization from scratch

Debug Your Career

interviews, docker, containers, docker, java

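1. What a container really is: an "isolated process". Key insight: a Docker container is not a complete operating system but a strictly isolated process. This process has its own file system, network, process view, and other resources, yet it runs directly on the host kernel (whereas a virtual machine has to emulate hardware and a whole operating system). Analogy: imagine renting a private office (the container) in an office building. You have your own desk and chairs (file system), phone extension (network), and room number (hostname), but you share the building's water and electricity (the host kernel) and elevators (hardware resources). The office…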

- C++ STL: set

s15335

C++, STL, c++, programming languages

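1. Basic concepts: set is a container, like vector and string, but it is tree-based. Physically it is a binary search tree (in common standard-library implementations, a red-black tree); logically it still behaves like an ordered linear sequence. Elements in a set must be unique, while a multiset allows duplicates; in both containers, inserted elements are kept in sorted order. 2. Basic usage. 1. Creating set objects: 1. Default constructor: `set<int> s1;` 2. Initializer list: `set<int> s2_1 = {9,8,7,6,5}; // 5 6 7 8 9` `set<int> s2_2({9,8,7,7,6,5});` /…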
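The set/multiset behaviour described above (sorted order, duplicates dropped or kept) can be mirrored in Java for comparison. This is an analogy rather than C++: it assumes `TreeSet` (which is itself backed by a red-black tree) as the stand-in for `std::set`, and a `TreeMap` of counts as a stand-in for `std::multiset`. `SortedSetDemo`, `asSet`, and `asMultiset` are hypothetical names.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.Map;
import java.util.TreeMap;
import java.util.TreeSet;

class SortedSetDemo {
    // Analogue of std::set: elements kept in sorted order, duplicates silently dropped.
    static List<Integer> asSet(int... values) {
        TreeSet<Integer> set = new TreeSet<>();
        for (int v : values) {
            set.add(v); // add() returns false and changes nothing for a duplicate
        }
        return new ArrayList<>(set);
    }

    // Analogue of std::multiset: sorted order, duplicates kept (stored as value -> count).
    static List<Integer> asMultiset(int... values) {
        TreeMap<Integer, Integer> counts = new TreeMap<>();
        for (int v : values) {
            counts.merge(v, 1, Integer::sum);
        }
        List<Integer> out = new ArrayList<>();
        for (Map.Entry<Integer, Integer> entry : counts.entrySet()) {
            for (int i = 0; i < entry.getValue(); i++) {
                out.add(entry.getKey());
            }
        }
        return out;
    }

    public static void main(String[] args) {
        System.out.println(asSet(9, 8, 7, 7, 6, 5));      // [5, 6, 7, 8, 9]
        System.out.println(asMultiset(9, 8, 7, 7, 6, 5)); // [5, 6, 7, 7, 8, 9]
    }
}
```

Unlike `std::multiset`, the count map does not keep equal elements as distinct objects, which is fine for plain integers.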

- Kubernetes autoscaling: a comparison of approaches and a practical guide

浅沫云归

backend tech stack summary, kubernetes, autoscaling, devops

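Kubernetes autoscaling: a comparison of approaches and a practical guide. With the widespread adoption of microservice architectures and containerization, Kubernetes autoscaling has become a key technology for keeping production performance stable and using resources efficiently. To cover needs such as horizontal Pod scaling, vertical resource adjustment, cluster node scaling, and event-driven scaling, the community offers several solutions, including HPA, VPA, Cluster Autoscaler, and KEDA. This article covers the business background, a comparison of the approaches, their pros and cons, selection recommendations…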

- [Ops in practice] Fixing an API Server startup failure caused by a K8s node being unable to pull the pause:3.6 image

gs80140

troubleshooting, operations, kubernetes, containers

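Contents: [Ops in practice] Fixing an API Server startup failure caused by a K8s node being unable to pull the pause:3.6 image; Problem analysis; ✅ Solution: import the pause image via an alternative pull method; Step 1. Pull the pause image from a private registry; Step 2. Re-tag it with the Kubernetes default name; Step 3. Export the image as a tar archive; Step 4. Copy the image to the target node; Step 5. Import the image into containerd's k8s.io namespace on the target node; Step 6. Verify that the image was imported…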

- Differences between ZooKeeper and etcd

sun007700

zookeeper, etcd, distributed systems

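The core differences between ZooKeeper and etcd lie in their design philosophy, data model, consensus protocol, and suitable use cases. ZooKeeper implements distributed coordination on top of the ZAB protocol, using a tree-shaped data model and ephemeral nodes, which suits traditional distributed systems. etcd is based on the Raft protocol, centers on high-performance key-value storage, is optimized for cloud-native scenarios, and is the default storage component of container orchestration systems such as Kubernetes. Differences in architecture and design goals. ZooKeeper. Design positioning: focuses on distrib…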

- Interviewer: How does Spring control the loading order of beans?

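In most cases we don't need to control the bean loading order manually, because Spring's IoC container is smart enough. Core principle: dependency-driven loading. The Spring IoC container builds a dependency graph. If bean A depends on bean B (for example, A's constructor takes a parameter of type B), Spring guarantees that bean B has been fully created and initialized before bean A is created. `@Service public class ServiceA {`…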

- C++ standard library `<numeric>`

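The following is a systematic, in-depth review of the numeric algorithms and utilities provided by the C++ standard library header `<numeric>`, covering what each algorithm does, sample code, complexity analysis, and practical advice. 1. Overview: `<numeric>` defines a set of algorithms that operate on numeric sequences (accumulation, inner products, adjacent differences, scans, and so on), plus some helper utilities (such as `std::iota` and `std::gcd`/`std::lcm`). All of the algorithms operate on iterator ranges in STL style and work with any container or raw array. Starting with C++17 and C++20, parallel-friendly std::r…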

- Study diary - spring - day 45 - 7.10

永日45670

learning, spring, java

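Key points: 1. Initializing the bean singleton pool; completing getBean and createBean (1). Knowledge point / core content / emphasis: Singleton pool initialization: singleton objects are created eagerly during container initialization, so getBean does not have to create them on demand. The singleton pool must be populated during container initialization, otherwise an exception is thrown. getBean logic: 1. Look up the BeanDefinition in beanDefinitionMap; 2. Decide singleton vs. prototype from the scope; 3. Singleton: fetch it directly from the singleton pool; 4. Prototype: create a new object dynamically via reflection. Proto…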

- The nature of iterators in C++

三月微风

c++, programming languages

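The nature and implementation of C++ iterators. Iterators are one of the core components of the C++ Standard Template Library (STL): they act as the bridge between containers and algorithms and provide a uniform way to access container elements. Below we examine the essential characteristics of iterators from several angles. 1. Basic definition and classification of iterators. What an iterator is: an object that behaves like a pointer and is used to traverse and manipulate the elements of a container. It provides a uniform way to access elements in different containers without caring about each container's implementation details. Standard classification: the C++ standard defines 5 iterator categories, ordered by capability…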

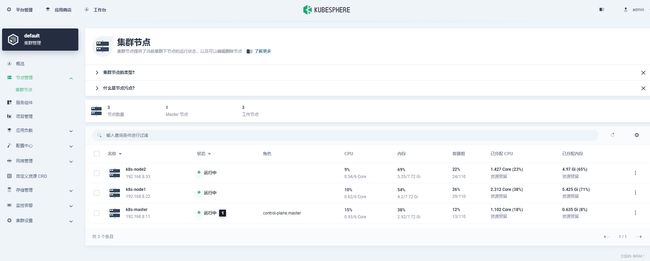

- Analysis and fix: kubelet connects to 127.0.0.1 by default when installing a KubeSphere + K8s cluster on openEuler 24.03 LTS-SP1

gs80140

troubleshooting, kubernetes, kubelet, containers

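Contents: Analysis and fix: kubelet connects to 127.0.0.1 by default when installing a KubeSphere + K8s cluster on openEuler 24.03 LTS-SP1; ❗ Symptoms; Root-cause analysis; ✅ Solutions: Option 1: modify the kubelet configuration on every node (recommended); Option 2: preventively modify the installation template (before installing the cluster); Summary.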

- A complete guide to opening firewall ports when installing Kubernetes + KubeSphere on Linux (openEuler 24.03 LTS-SP1)

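Contents: A complete guide to opening firewall ports when installing Kubernetes + KubeSphere on Linux (openEuler 24.03 LTS-SP1); 1. Why sort out the firewall first? 2. Target environment; 3. Ports and protocols to open; 4. Core tools: 1. The revised exec.sh script (supports pipes/redirection) 2. Batch-open script: open_firewall.sh; 5. Usage examples: 1. Open ports in batch 2. View the current firewall rules 3. Open a single port only (temporary need) 4. Check a specific…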

- How to build Docker images with a Dockerfile on Windows: a complete guide

996蹲坑

windows, docker, containers

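Preface: Docker, currently the most popular containerization technology, has become an essential tool for development, testing, and operations. This article walks through the complete process of building Docker images with a Dockerfile on Windows, including a comparison of two ways to build images, a detailed look at the core Dockerfile instructions, a hands-on example, and Windows-specific caveats. 1. Two ways to build a Docker image. 1. Converting a container into an image (not recommended): this approach suits temporarily saving a container's state but is not suitable for production: #…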

- Fun with Docker | Deploying the HomeBox home inventory management tool with Docker

心随_风动

Fun with Docker, docker, containers, operations

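Fun with Docker | Deploying the HomeBox home inventory management tool with Docker. Contents: Preface; 1. Introduction to HomeBox (overview, key features, main use cases); 2. System requirements (environment requirements; environment checks: Docker version, OS version); 3. Deploying the HomeBox service (pull the HomeBox image, edit the deployment file, create the container, check container status, check service ports, security settings); 4. Accessing the HomeBox service (open the HomeBox home page, register an account); 5. Using HomeBox; Summary. Preface: With smart homes and…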

- Solutions to common Docker problems

小王聊技术

docker

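Contents: Migrating to another server; Cleaning up disk space used by Docker; Common problems. Migrating to another server: 1. Export the Docker container: `docker export -o <save-path>/xxx.tar <container-id>` 2. Copy the container tar to the new server (run the copy on the new server, pulling from the old one): `scp root@<server-address>:/data/xxx.tar /root` 3. Turn the imported tar into an image: `docker import xxx.tar image_name:tag`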

- Fun with Docker | Deploying the NotepadMX note-taking application with Docker

心随_风动

Fun with Docker, docker, notes, eureka

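Fun with Docker | Deploying the NotepadMX note-taking application with Docker. Contents: Preface; 1. Introduction to NotepadMX (overview, key features); 2. System requirements (environment requirements; environment checks: Docker version, OS version); 3. Deploying the NotepadMX service (pull the NotepadMX image, edit the deployment file, create the container, check container status, check service ports, security settings); 4. Accessing the NotepadMX service (open the NotepadMX home page, set up access authentication, edit notes); Summary. Preface: In today's fast-paced work and study, a…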

- 3. [docker] Common docker and docker-compose commands

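Contents: 1. Common docker commands (1. Image management 2. Container management 3. Container monitoring and debugging 4. Network management 5. Volume management 6. System maintenance 7. Useful command combinations 8. Common tips); 2. Common docker-compose commands (1. Basic commands 2. Build-related 3. Running and maintenance 4. Common command combinations 5. Useful options). 1. Common docker commands. 1. Image management: # list local images: `docker images` # pull an image: `docker pull <image>:<tag>` # remove an image: `docker rmi <image>` # build an image: `docker…`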

- [C#] Summary of dependency injection concepts

Mike_Wuzy

c#

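Implementing dependency injection (DI) in C# helps you build software systems that are more decoupled, maintainable, and testable. Below are some key DI concepts with sample code. 1. Basic concepts. Container: the container manages object instances and the dependencies between them. An IoC container (Inversion of Control container) is the core tool for implementing dependency injection; common DI frameworks include Unity, Autofac, Castle…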

- K3s-io/kine: core architecture and data flow analysis

富珂祯

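K3s-io/kine: core architecture and data flow analysis. kine: Run Kubernetes on MySQL, Postgres, sqlite, dqlite, not etcd. Project address: https://gitcode.com/gh_mirrors/ki/kine. Project overview: K3s-io/kine is an innovative storage adapter that implements lightweight key-value storage on top of traditional SQL databases. Its most notable feature is a single-table design that, through a clever…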

- 20250707-3 - Kubernetes core concepts - Why use K8s when you already have Docker? (notes)

Andy杨

CKA column, kubernetes, docker, notes

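1. Kubernetes core concepts. 1. Why use Kubernetes when you already have Docker? 1) Enterprise needs. Standalone containers: Docker containers are essentially independent of one another, so when multiple containers serve traffic across hosts there is no unified management mechanism. Load balancing: to increase concurrency and availability, enterprises deploy multiple container instances across several servers, but Docker itself provides no load balancing. Management complexity: as the number of Docker hosts and containers grows, unified deployment, upgrades, and monitoring become hard. Operational efficiency: single-host upg…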

- 20250707-4 - Kubernetes cluster deployment, configuration and verification - An introduction to basic K8s resource concepts (notes)

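1. The kubeconfig file. Purpose: kubectl uses the kubeconfig authentication file to connect to a K8s cluster. How it is generated: with `kubectl config` commands. Core fields: clusters: cluster information, including certificates and the API server address; contexts: contexts that associate a cluster with a user; users: client authentication information; current-context: the context currently in use. 2. Kubernetes deprecates Docker. 1. Background. Reason:…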

- k8s: ConfigMap

西京刀客

Cloud Native, cloud computing, virtualization, #Kubernetes (k8s), kubernetes, containers, cloud native

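Contents: What is a ConfigMap? Why do you need ConfigMaps? Ways to create a ConfigMap; Ways to use a ConfigMap; Real-world use cases; ConfigMap best practices; References. What is a ConfigMap? A ConfigMap is a Kubernetes API object for storing non-confidential configuration data. It lets you decouple configuration from container images, making applications more flexible and portable. A ConfigMap stores data as…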

- Offline installation of Docker on Kylin V10

checkQQ

installation and deployment notes, DevOps tools, Linux ops tools, docker, containers, operations

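Scenario: an intranet with no access to the public internet, where a Docker environment must be set up on a Kylin system to run containers. 1. Prepare the Docker offline installation package: Index of linux/static/stable/x86_64/ https://download.docker.com/linux/static/stable/x86_64/. Pick a suitable version; I chose 20.10.14. 2. Upload the archive to the server and extract it: tar --strip-compon…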

- Important FFmpeg filter-related structs

melonbo

FFMPEG, ffmpeg

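Overview of the core structs: the FFmpeg filter system is made up of several key structs that together form a complete filter-processing framework. The most important structs and their relationships: AVFilterGraph contains AVFilterContext (which in turn is associated with AVFilter, AVFilterLink, and AVFilterPad) and AVFilterInOut. Detailed struct analysis: 1. AVFilterGraph (the filter graph container). Function: manages all components and state of the whole filter graph. Important members: t…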

- Instrumentation approach for microservice distributed tracing with SkyWalking

MenzilBiz

servers, operations, microservices, skywalking

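Instrumentation approach for microservice distributed tracing with SkyWalking. 1. Introduction to SkyWalking. SkyWalking is an open-source APM (application performance monitoring) system designed especially for microservices, cloud-native architectures, and containerized (Docker/Kubernetes) applications. Its main features include distributed tracing, service-mesh telemetry analysis, metrics aggregation, and visualization. SkyWalking supports multiple languages (Java, Go, Python, and others) and protocols (HTTP, gRPC, and others), and can provide end-to-end call…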

- Demystifying Huawei Euler: not just an operating system, but a skills certification system for the cloud era

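Demystifying Huawei Euler: not just an operating system, but a skills certification system for the cloud era. As a blogger who has long worked in IT training, today I'll give an objective introduction to "Huawei Euler", a term that keeps coming up in cloud computing. 1. What exactly is Huawei Euler? Strictly speaking, "Huawei Euler" has two core parts: 1. The openEuler operating system: an enterprise-grade open-source Linux distribution backed by Huawei, designed and optimized for scenarios such as cloud computing and cloud-native platforms. 2. The Huawei openEuler certification system (HCIA/HCIP/HCIE-…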

- LeetCode [Bit Manipulation] - #137 Single Number II

Cwind

java, Algorithm, LeetCode solutions, bit manipulation

Original problem: #137 Single Number II

Requirements:

Given an array of integers in which every element appears three times except for one, find that single element.

Note: the algorithm should run in O(n) time and should ideally use no extra memory.

Difficulty: Medium

Analysis:

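Like #136, this problem tests bit manipulation. When the other elements appear twice, you can rely on the XOR identities n XOR n = 0 and n XOR 0 = n; that is, a certain…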
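The analysis above is cut off right after the XOR identities used for #136. One common way to handle "three times" instead is to count the 1-bits at each of the 32 bit positions modulo 3; the sketch below shows that approach in Java and is not necessarily the original author's solution. `SingleNumberII` and `singleNumber` are hypothetical names. It runs in O(32·n) = O(n) time with O(1) extra space, which matches the stated requirements.

```java
class SingleNumberII {
    // Every value except one appears exactly three times.
    // At each bit position, the triples contribute a multiple of 3 to the count of 1-bits,
    // so (count % 3) is exactly that bit of the single element.
    static int singleNumber(int[] nums) {
        int result = 0;
        for (int bit = 0; bit < 32; bit++) {
            int count = 0;
            for (int n : nums) {
                count += (n >>> bit) & 1; // unsigned shift so negative numbers work too
            }
            if (count % 3 != 0) {
                result |= 1 << bit;       // setting bit 31 yields a negative result, as intended
            }
        }
        return result;
    }

    public static void main(String[] args) {
        System.out.println(singleNumber(new int[] {2, 2, 3, 2}));          // 3
        System.out.println(singleNumber(new int[] {0, 1, 0, 1, 0, 1, 99})); // 99
    }
}
```

A pure-XOR variant with two state variables (`ones`/`twos`) also exists; the bit-count form is shown because each step maps directly onto the "multiple of 3" argument.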

- Notes on JavaScript: The Good Parts

aijuans

JavaScript

0. JavaScript's simple types are numbers, strings, booleans (true/false), null, and undefined; all other values are objects.

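1. JavaScript has a single number type, represented internally as a 64-bit floating-point value. There is no separate integer type, so 1 and 1.0 are the same value.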

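2. NaN is a number value that represents the result of an operation that cannot produce a normal result. NaN is not equal to any value, including itself. You can detect NaN with the isNaN(number) function, but…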

- Java knowledge you should update: commonly used libraries

Kai_Ge

java

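In many people's eyes Java is an aging language, but that hasn't stopped the Java world from moving forward. If you once left Java to wander through other worlds, or spend every day struggling with legacy code, perhaps it's time to look up and see what's new in old Java.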

Guava

Guava [gwɑ:və]: in one sentence, if you work on a Java project, you should use Guava (GitHub).

Guava is a set of core Java libraries from Google; in my view it should even…

- HttpClient

120153216

httpclient

/**

* Request forwarding that can carry an object; the object is written into the HTTP request as a stream

*/

public static Object doPost(Map<String,Object> parmMap,String url)

{

Object object = null;

HttpClient hc = new HttpClient();

String fullURL

- Django model field types reference

2002wmj

django

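Django uses models to create, modify, and delete database tables. This article lists the commonly used model field types for easy reference: AutoField: an auto-incrementing integer field that increases automatically as records are added. You usually don't need to use it directly; if you don't specify a primary key, Django automatically adds a primary-key field to your model (see automatic primary key fields). BooleanField: a boolean field, rendered as a checkbox in the admin. Cha…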

- Finding the most CPU-intensive SQL in SQL Server

357029540

SQL Server

Return the 10 statements that consume the most CPU

SELECT TOP 10

total_worker_time/execution_count AS avg_cpu_cost, plan_handle,

execution_count,

(SELECT SUBSTRING(text, statement_start_of

- MyEclipse project cannot be deployed: Undefined exploded archive location

7454103

eclipse, MyEclipse

A note to self!

Error message:

Undefined exploded archive location

Cause:

While the project was being moved, its configuration files became corrupted.

Solution:

- Converting GMT time formats

adminjun

GMT, time conversion

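I won't go over ordinary time conversions here; I assume everyone can already handle those basic cases. Instead, here is a summary of how to convert the GMT time format. There are not many write-ups on this conversion online yet, so it seems worth summarizing, and I hope it helps anyone who needs it.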

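1. You can use `SimpleDateFormat` with these pattern letters:

EEE - three-letter day of the week

d - day of the month

MMM - month (three-letter abbreviation)

yyyy - four-digit year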
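The pattern letters above can be combined into a GMT formatter. The following is a minimal sketch, assuming the common HTTP-style layout `EEE, d MMM yyyy HH:mm:ss 'GMT'` (the original post's exact pattern is cut off); `GmtFormat`, `toGmt`, and `fromGmt` are hypothetical names. `Locale.US` keeps the day and month names in English, and the time zone is pinned to GMT so the output does not depend on the machine's local zone.

```java
import java.text.ParseException;
import java.text.SimpleDateFormat;
import java.util.Date;
import java.util.Locale;
import java.util.TimeZone;

class GmtFormat {
    // EEE = abbreviated weekday, d = day of month, MMM = abbreviated month, yyyy = 4-digit year;
    // 'GMT' in single quotes is literal text, not a pattern letter.
    static final String PATTERN = "EEE, d MMM yyyy HH:mm:ss 'GMT'";

    // SimpleDateFormat is not thread-safe, so a fresh instance is created for each call.
    private static SimpleDateFormat formatter() {
        SimpleDateFormat fmt = new SimpleDateFormat(PATTERN, Locale.US); // English day/month names
        fmt.setTimeZone(TimeZone.getTimeZone("GMT"));                      // format and parse in GMT
        return fmt;
    }

    static String toGmt(Date date) {
        return formatter().format(date);
    }

    static Date fromGmt(String text) {
        try {
            return formatter().parse(text);
        } catch (ParseException e) {
            throw new IllegalArgumentException("Not a GMT date: " + text, e);
        }
    }

    public static void main(String[] args) {
        System.out.println(toGmt(new Date(0L))); // Thu, 1 Jan 1970 00:00:00 GMT
    }
}
```

On Java 8 and later, `java.time.format.DateTimeFormatter.RFC_1123_DATE_TIME` is an immutable, thread-safe alternative for the same style of string.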

- Connection string problem with a newly installed Oracle database

aijuans

oracle, database

We installed a new database during a cutover. Client logins work fine, but the apache/cgi-bin programs fail, and sqlnet.log shows:

Fatal NI connect error 12170.

VERSION INFORMATION: TNS for Linux: Version 10.2.0.4.0 - Product

- Reviewing array copying in Java

ayaoxinchao

java, arrays

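Before writing this post I read what others had written, and it was all much the same. This post reviews the basics of copying arrays in Java, as study notes for my own future reference. First, a quick question: why copy an array at all? My understanding: when we use an array, we often want it to hold its original values every time we use it. In that case we copy the array so that the original values stay safe from modification. There are roughly three ways to copy an array in Java: ① a for loop ② `clone()` ③ `System.arraycopy`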
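The three approaches listed above can be compared side by side in a minimal Java sketch; `ArrayCopyDemo` and its method names are hypothetical. All three produce a new array, so changing the copy leaves the original untouched. For arrays of objects, all three are shallow copies: the new array shares references to the same element objects.

```java
import java.util.Arrays;

class ArrayCopyDemo {
    // ① for loop: allocate a new array and copy element by element
    static int[] copyWithLoop(int[] src) {
        int[] dst = new int[src.length];
        for (int i = 0; i < src.length; i++) {
            dst[i] = src[i];
        }
        return dst;
    }

    // ② clone(): every array supports clone(), returning a new array of the same type and length
    static int[] copyWithClone(int[] src) {
        return src.clone();
    }

    // ③ System.arraycopy(src, srcPos, dest, destPos, length): bulk copy provided by the JDK
    static int[] copyWithArraycopy(int[] src) {
        int[] dst = new int[src.length];
        System.arraycopy(src, 0, dst, 0, src.length);
        return dst;
    }

    public static void main(String[] args) {
        int[] original = {1, 2, 3};
        int[] copy = copyWithArraycopy(original);
        copy[0] = 100; // changing the copy does not affect the original
        System.out.println(Arrays.toString(original)); // [1, 2, 3]
        System.out.println(Arrays.toString(copy));     // [100, 2, 3]
    }
}
```

`Arrays.copyOf(src, newLength)` wraps the same idea and can also grow or truncate the result.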

- Java web session listeners with Spring injection

bewithme

Java Web

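In a Java web application, if you want the system to do something whenever a session is created or destroyed (for example, to count online users each time a user logs in or out), you can use the following listener.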

import java.util.ArrayList;

import java.ut

- NoSQL databases: Redis administration (common Redis commands and advanced usage)

bijian1013

redis, database, NoSQL

1. Common Redis commands

Redis provides a rich set of commands for operating on the database and its various data types; these commands can be run from a Linux terminal.

a. Key-value commands

b. Server commands

1. Key-value commands


- Java enum serialization issues

bingyingao

java, enums, serialization

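Sending objects over the network depends on serialization and deserialization. If a serialized object contains enum values, pay special attention to compatibility during releases: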

1. Adding an enum value

New code reading old objects from the distributed cache: no problem, no exception is thrown.

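Old code reading new objects from the distributed cache: deserialization fails. So until every machine has been upgraded, avoid producing new objects, or first ship the jar containing the new enum value to the old machines.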

2. Removing an enum value

New code reading old objects from the distributed cache: deserializ…
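Why adding a constant breaks old readers can be seen in a minimal sketch, assuming standard Java serialization (`ObjectOutputStream`/`ObjectInputStream`); the distributed cache in the article may well use a different serializer. Java writes an enum constant by its name only, and the reader resolves that name against its own copy of the enum class, so a name the old code does not know cannot be resolved (`ObjectInputStream` reports this as an `InvalidObjectException`). `Status`, `roundTrip`, and `canResolve` are hypothetical names.

```java
import java.io.ByteArrayInputStream;
import java.io.ByteArrayOutputStream;
import java.io.IOException;
import java.io.ObjectInputStream;
import java.io.ObjectOutputStream;

class EnumSerializationDemo {
    // Hypothetical enum standing in for an enum field inside a cached object.
    enum Status { ACTIVE, INACTIVE }

    // Serialize and immediately deserialize with standard Java serialization.
    // For an enum constant, only its name() is written to the stream.
    static Object roundTrip(Object value) {
        try {
            ByteArrayOutputStream bytes = new ByteArrayOutputStream();
            try (ObjectOutputStream out = new ObjectOutputStream(bytes)) {
                out.writeObject(value);
            }
            try (ObjectInputStream in = new ObjectInputStream(
                    new ByteArrayInputStream(bytes.toByteArray()))) {
                return in.readObject();
            }
        } catch (IOException | ClassNotFoundException e) {
            throw new IllegalStateException(e);
        }
    }

    // The reader looks the name up in its own copy of the enum, much like Enum.valueOf.
    // An old release that lacks a newly added constant cannot resolve that name.
    static boolean canResolve(String name) {
        try {
            Enum.valueOf(Status.class, name);
            return true;
        } catch (IllegalArgumentException e) {
            return false;
        }
    }

    public static void main(String[] args) {
        System.out.println(roundTrip(Status.ACTIVE) == Status.ACTIVE); // true: same constant
        System.out.println(canResolve("SUSPENDED"));                   // false: unknown name
    }
}
```

Because only the name travels, reordering constants is harmless under this serializer, while renaming or removing one breaks readers that still hold the old name.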

- [Spark 78] Spark Kryo serialization

bit1129

spark

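When you serialize objects to a file with the RDD's saveAsObjectFile method, and deserialize them from a file with SparkContext's objectFile method, Spark uses Java's serialization and deserialization by default, which is usually inefficient. Spark supports Kryo as an alternative serialization mechanism that is faster than Java's, but note that Kryo still has some bugs at the time of writing.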

Spark

- Hybridizing OO and Functional Design

bookjovi

erlang, haskell

Recommended post:

Tell Above, and Ask Below - Hybridizing OO and Functional Design

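The article explains OO and FP in depth, taking Smalltalk and Haskell as the representative languages of the two paradigms, which I fully agree with. Smalltalk is arguably the object-oriented language that best embodies OO design, and its creator Alan Kay was one of the earliest pioneers of OO,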

- Java Collections Framework: study notes and summary - HashMap

BrokenDreams

Collections

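In development we often need a data structure that finds the required information by a key. The process is a bit like looking up a dictionary: you take a key and look up the corresponding value in the dictionary table. Java 1.0 provided the abstract class java.util.Dictionary for this, which supports the basic dictionary operations. Later the Map interface was introduced to describe this data structure better.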


- Reading the book 《研磨设计模式》 - code notes - Chain of Responsibility pattern

bylijinnan

java, design patterns

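Disclaimer: this post is only for my own reference and understanding. For the detailed analysis and the source code, please see the original author's blog: http://chjavach.iteye.com/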

/**

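* Business logic: the project manager can only handle expense requests below 500, the department manager below 1000, and the general manager has no limit. For simplicity, only requests from "Tom" are approved.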

* bylijinnan

*/

abstract class Handler {

/*
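The business rules in the comment above can be sketched as a complete chain. This is a minimal Java sketch, not the book's code: the class names `ProjectManager`, `DepartmentManager`, `GeneralManager`, and `ChainDemo` and the methods `handleFeeRequest`, `linkTo`, `decide`, and `passOn` are hypothetical, and the limits are assumed to be strict (a fee of exactly 500 goes on to the department manager).

```java
abstract class Handler {
    // Next handler in the chain; null for the last one.
    protected Handler successor;

    // Links this handler to the next one and returns it, so links can be chained.
    Handler linkTo(Handler next) {
        this.successor = next;
        return next;
    }

    abstract String handleFeeRequest(String user, int fee);

    // Shared decision rule from the example: only "Tom" is approved.
    protected String decide(String role, String user) {
        return role + ("Tom".equals(user) ? " approved " : " rejected ") + user;
    }

    // Hand the request to the next handler, if there is one.
    protected String passOn(String user, int fee) {
        return successor == null ? "nobody handled " + user : successor.handleFeeRequest(user, fee);
    }
}

class ProjectManager extends Handler {
    String handleFeeRequest(String user, int fee) {
        return fee < 500 ? decide("ProjectManager", user) : passOn(user, fee);
    }
}

class DepartmentManager extends Handler {
    String handleFeeRequest(String user, int fee) {
        return fee < 1000 ? decide("DepartmentManager", user) : passOn(user, fee);
    }
}

class GeneralManager extends Handler {
    String handleFeeRequest(String user, int fee) {
        return decide("GeneralManager", user); // no upper limit
    }
}

class ChainDemo {
    static Handler buildChain() {
        Handler head = new ProjectManager();
        head.linkTo(new DepartmentManager()).linkTo(new GeneralManager());
        return head;
    }

    public static void main(String[] args) {
        Handler chain = buildChain();
        System.out.println(chain.handleFeeRequest("Tom", 300));   // ProjectManager approved Tom
        System.out.println(chain.handleFeeRequest("Jerry", 800)); // DepartmentManager rejected Jerry
        System.out.println(chain.handleFeeRequest("Tom", 5000));  // GeneralManager approved Tom
    }
}
```

The client only talks to the head of the chain; adding another approval level means inserting one more handler, without touching the callers.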

- Launching external apps on Android

cherishLC

android

1. Launching an external app

Source:

http://blog.csdn.net/linxcool/article/details/7692374

// Method 1

Intent intent=new Intent();

// package name, package name + class name (fully qualified)

intent.setClassName("com.linxcool", "com.linxcool.PlaneActi

- summary_keep_rate

coollyj

SUM

BEGIN

/*DECLARE minDate varchar(20) ;

DECLARE maxDate varchar(20) ;*/

DECLARE stkDate varchar(20) ;

DECLARE done int default -1;

/* In the cursor: registered server addresses */

DE

- Error when adding a data directory to Hadoop HDFS

daizj

hadoop, hdfs, capacity expansion

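The originally configured Hadoop data directory was almost full, so I planned to add a data directory in the configuration files to expand capacity. By oversight, I added the new directory to the dfs.datanode.data.dir setting in core-site.xml and hdfs-site.xml but never created the actual directory. When the datanode service was restarted, it reported the following error: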

2014-11-18 08:51:39,128 WARN org.apache.hadoop.h

- Recursive directory search with grep

dongwei_6688

grep

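When searching file contents with grep on macOS or Linux, if the target path is the current directory, grep by default searches only the files in the current directory and not the files in its subdirectories. To search subdirectories recursively, use the -r option: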

grep -n -r "GET" .

The command above finds matches in the current directory "." and in all of its subdirectories

- Yii: changing the layout file used by a module

dcj3sjt126com

yii, layouts

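Method 1: by default, a Yii module uses the layout files of the application's current theme. If a theme is configured in the main configuration file, e.g. 'theme'=>'mythm', the module uses the layout files under protected/themes/mythm/views/layouts; if no theme is configured, the module uses the layout files under protected/views/layouts. In short, by default it does not use its own directory pr…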

- Design patterns: the Singleton pattern

come_for_dream

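design patterns, singleton pattern, lazy initialization, eager initialization, double-checked locking failure, out-of-order writes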

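The interview I was expecting today never happened, and I suppose that company won't call now, so it's not bad to calm down and write a blog post. Browsing Xiao Yi's blog made me keenly aware of the gap between me and the experts: a building on weak foundations, however tall, is still unsafe. So I settled down to go back to basics and summarize what I have learned before.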

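The tags above name the usual variants. Below is a minimal Java sketch of two of them, lazy initialization with double-checked locking and eager initialization; the class names are hypothetical. The `volatile` field is what makes double-checked locking safe under the Java 5+ memory model: without it, the out-of-order write problem named in the tags can let another thread see a non-null reference to an object whose constructor has not finished.

```java
class LazySingleton {
    // volatile forbids publishing the reference before construction completes.
    private static volatile LazySingleton instance;

    private LazySingleton() { }

    static LazySingleton getInstance() {
        LazySingleton local = instance;       // first check, without locking
        if (local == null) {
            synchronized (LazySingleton.class) {
                local = instance;             // second check, under the lock
                if (local == null) {
                    local = new LazySingleton();
                    instance = local;
                }
            }
        }
        return local;
    }
}

class EagerSingleton {
    // Eager initialization: the instance is created when the class is initialized,
    // and the JVM makes class initialization thread-safe, so no locking is needed.
    private static final EagerSingleton INSTANCE = new EagerSingleton();

    private EagerSingleton() { }

    static EagerSingleton getInstance() {
        return INSTANCE;
    }
}
```

The local variable in `getInstance` is a common refinement that reads the volatile field only once on the fast path; it does not change correctness.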

- 8. Arrays

豆豆咖啡

two-dimensional arrays, arrays, one-dimensional arrays

1. Concept

An array is a collection of elements of the same type; in other words, an array is simply a container.

2. Benefits

The elements are automatically numbered starting from 0, which makes them easy to work with.

3. Syntax

// One-dimensional array

1. elementType[] variableName = new elementType[numberOfElements]

int[] arr =
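The declaration format above (the listing is cut off at `int[] arr =`) can be illustrated with a short Java sketch. `ArrayBasics` and `squares` are hypothetical names, and the array-initializer and two-dimensional forms in `main` are shown for comparison rather than taken from the original notes.

```java
import java.util.Arrays;

class ArrayBasics {
    // elementType[] name = new elementType[count]; int elements start out as 0.
    static int[] squares(int count) {
        int[] arr = new int[count];
        for (int i = 0; i < arr.length; i++) { // indices run from 0 to length - 1
            arr[i] = i * i;
        }
        return arr;
    }

    public static void main(String[] args) {
        int[] zeros = new int[3];      // three elements, all 0
        int[] primes = {2, 3, 5, 7};   // array initializer: the length comes from the values
        int[][] grid = new int[2][3];  // a two-dimensional array is an array of arrays
        grid[1][2] = 9;
        System.out.println(Arrays.toString(zeros));      // [0, 0, 0]
        System.out.println(primes.length);               // 4
        System.out.println(Arrays.toString(squares(4))); // [0, 1, 4, 9]
        System.out.println(Arrays.deepToString(grid));   // [[0, 0, 0], [0, 0, 9]]
    }
}
```

Accessing an index outside 0 to length - 1 throws `ArrayIndexOutOfBoundsException`, which is the practical consequence of the zero-based numbering described above.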

- Decode Ways

hcx2013

decode

A message containing letters from A-Z is being encoded to numbers using the following mapping:

'A' -> 1

'B' -> 2

...

'Z' -> 26

Given an encoded message containing digits, det
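This is LeetCode's Decode Ways problem, which asks for the number of ways to decode the digit string. A common dynamic-programming approach (not taken from the original post) is sketched below: the count for the first i digits is the count for i-1 digits when the last digit is 1 to 9, plus the count for i-2 digits when the last two digits form a number from 10 to 26. `DecodeWays` and `numDecodings` are hypothetical names; the input is assumed to contain only digits, and an empty string is assumed to yield 0.

```java
class DecodeWays {
    // Counts decodings of a digit string under 'A' -> 1 ... 'Z' -> 26.
    static int numDecodings(String s) {
        if (s.isEmpty()) {
            return 0;
        }
        int prev2 = 1;                          // ways to decode the empty prefix
        int prev1 = s.charAt(0) == '0' ? 0 : 1; // ways to decode the first digit
        for (int i = 2; i <= s.length(); i++) {
            char last = s.charAt(i - 1);
            int current = 0;
            if (last != '0') {
                current += prev1;               // decode the last digit on its own
            }
            int pair = (s.charAt(i - 2) - '0') * 10 + (last - '0');
            if (pair >= 10 && pair <= 26) {
                current += prev2;               // decode the last two digits together
            }
            prev2 = prev1;
            prev1 = current;
        }
        return prev1;
    }

    public static void main(String[] args) {
        System.out.println(numDecodings("12"));  // 2: "AB" or "L"
        System.out.println(numDecodings("226")); // 3: "BZ", "VF", "BBF"
    }
}
```

Only the last two counts are kept, so the sketch uses O(1) extra space instead of a full DP array.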

- New in Spring 4.1: exception handling for asynchronous scheduling and the event mechanism

jinnianshilongnian

spring 4.1

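Contents

New in Spring 4.1: Overview

New in Spring 4.1: Spring core and other areas

New in Spring 4.1: Spring cache framework enhancements

New in Spring 4.1: exception handling for asynchronous calls and the event mechanism

New in Spring 4.1: script-based initialization for database integration tests

New in Spring 4.1: Spring MVC enhancements

New in Spring 4.1: page automation testing framework Spring MVC T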

- Configuring a squid3 caching server (high hit rate)

liyonghui160com

System: CentOS 5.x

Required software: squid-3.0.STABLE25.tar.gz

1. Download squid

wget http://www.squid-cache.org/Versions/v3/3.0/squid-3.0.STABLE25.tar.gz

tar zxf squid-3.0.STABLE25.tar.gz &&

- Tips and best practices for avoiding NullPointerException in Java applications

pda158

java

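1) Call equals() and equalsIgnoreCase() on a known String object rather than on an unknown one. Always call equals() on a known, non-null String. Because equals() is symmetric, a.equals(b) and b.equals(a) return the same result when both are non-null, which is why programmers tend not to care about the order of a and b. But if the receiver is a null reference, the call throws a NullPointerException.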

Object unk
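Tip 1 can be shown with a small Java sketch; `NullSafeEquals`, `EXPECTED`, and the method names are hypothetical. `java.util.Objects.equals` (Java 7+) is included as an alternative for when neither operand is known to be non-null.

```java
import java.util.Objects;

class NullSafeEquals {
    static final String EXPECTED = "UNKNOWN";

    // Recommended: the known non-null constant is the receiver, so a null argument just yields false.
    static boolean isUnknownSafe(String value) {
        return EXPECTED.equals(value);
    }

    // Risky: value is the receiver, so a null value throws NullPointerException.
    static boolean isUnknownRisky(String value) {
        return value.equals(EXPECTED);
    }

    // Alternative when either side may be null: Objects.equals handles null on both sides.
    static boolean sameText(String a, String b) {
        return Objects.equals(a, b);
    }

    public static void main(String[] args) {
        System.out.println(isUnknownSafe(null)); // false
        System.out.println(sameText(null, null)); // true
    }
}
```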

- How to make HTTP requests in Swift

shoothao

http, swift

Summary: using examples, this article answers the question "How do I make an HTTP request in Swift?" in both synchronous and asynchronous styles.

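If you know Objective-C well, making HTTP requests is already second nature to you, and Swift differs from it only in syntax. Beginners who are new to this platform, however, still want to know how to make HTTP requests in Swift.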

Here I offer some suggestions to answer that question. Common…

- Spring transaction propagation behaviors

uule

spring, transactions

Propagation behaviors:

Create a new transaction

required

requires_new - suspends the current transaction

Run non-transactionally

supports
