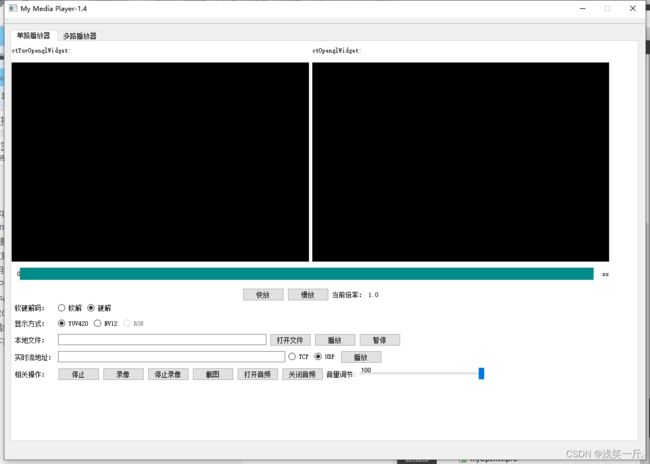

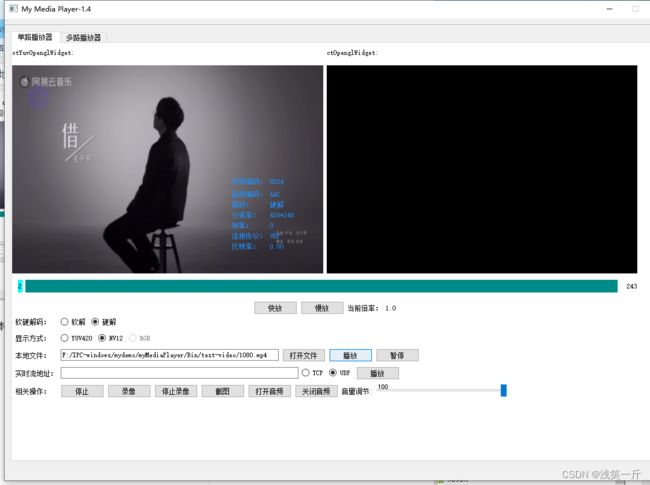

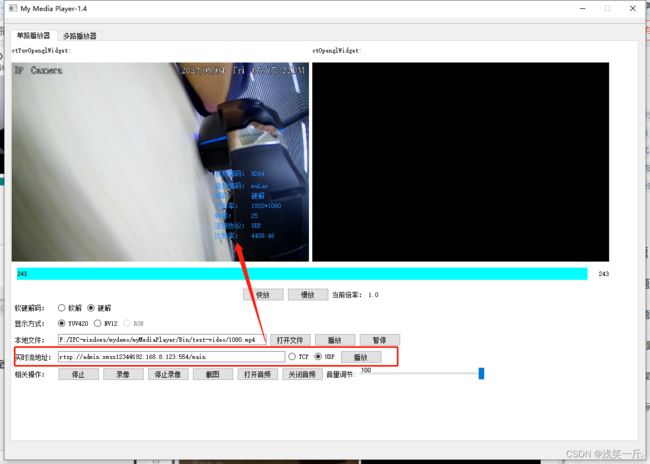

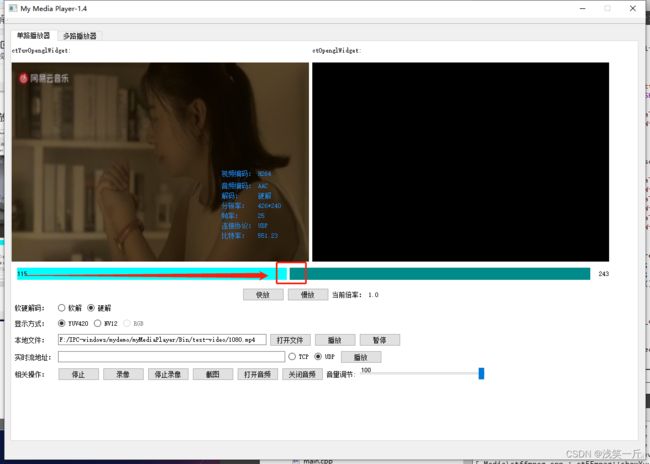

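An audio/video player developed with Qt: single- and multi-channel playback, software and hardware decoding, local files and live streams, recording and snapshots, YUV and RGB display, volume control, stream information display, seek-bar jumping, and more. Stable and practical, with source code available for download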

Preface

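This project was built with Qt 5.8 and compiles with Qt 5.8 or later. It uses FFmpeg 5.1; x86 and 64-bit builds of FFmpeg are provided under the project's WinLib directory. The local test videos are in the Bin directory, and live-stream testing was done by pulling the RTSP stream of a camera.

The player supports software and hardware decoding; hardware decoding uses DXVA2. After FFmpeg decodes a frame, a subclass of QOpenGLWidget performs the YUV-to-RGB conversion on the GPU, which reduces CPU usage. Audio is played through QAudioOutput, whose setVolume interface controls the volume. Audio encoded as PCM_MULAW, PCM_ALAW, or AAC can be played.

The player comes in a single-channel and a multi-channel version. In the single-channel player, ctYuvOpenglWidget is the class rewritten so that YUV-to-RGB conversion runs on the GPU, while ctOpenglWidget renders RGB images directly. The multi-channel player supports switching between split-screen layouts; the stream addresses to play are configured in mulvideo.ini under the config folder. The code is commented. Parts of it are shown in this post, and the whole player project can be downloaded from the links at the end.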
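As a rough reference for what the GPU conversion computes for every pixel, here is a minimal CPU sketch. It assumes full-range BT.601 coefficients (the post does not say which matrix the shader uses), and `Rgb`, `clampToByte` and `yuvToRgb` are illustrative names that are not part of the project.

```cpp
#include <algorithm>
#include <cstdint>

// Illustrative pixel type, not taken from the project.
struct Rgb {
    std::uint8_t r, g, b;
};

// Clamp a float to 0..255 and round to the nearest integer.
static std::uint8_t clampToByte(float v) {
    return static_cast<std::uint8_t>(std::min(255.0f, std::max(0.0f, v)) + 0.5f);
}

// Convert one YUV sample to RGB, assuming full-range BT.601 coefficients.
Rgb yuvToRgb(std::uint8_t y, std::uint8_t u, std::uint8_t v) {
    const float fy = static_cast<float>(y);
    const float fu = static_cast<float>(u) - 128.0f;  // chroma is centred on 128
    const float fv = static_cast<float>(v) - 128.0f;
    return Rgb{
        clampToByte(fy + 1.402f * fv),
        clampToByte(fy - 0.344136f * fu - 0.714136f * fv),
        clampToByte(fy + 1.772f * fu),
    };
}
```

A fragment shader performs the same arithmetic, but for all pixels in parallel, which is why moving it to the GPU frees the CPU for decoding.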

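I. Features

1. Single-channel player

1.1 Hardware decoding + YUV420P, local video playback

1.2 Hardware decoding + NV12, local video playback

1.3 Software decoding + YUV420P, local video playback

1.4 Software decoding + NV12, local video playback

1.5 Software decoding + RGB, local video playback

1.6 Live stream playback (decoding and display options are the same as for local playback)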

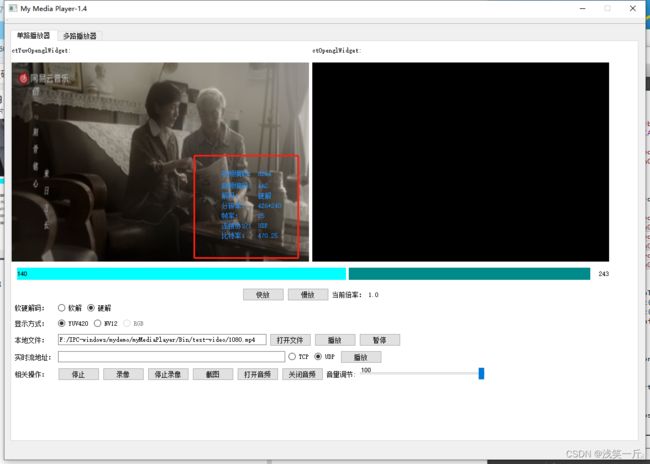

1.7 Local video playback + timeline seeking

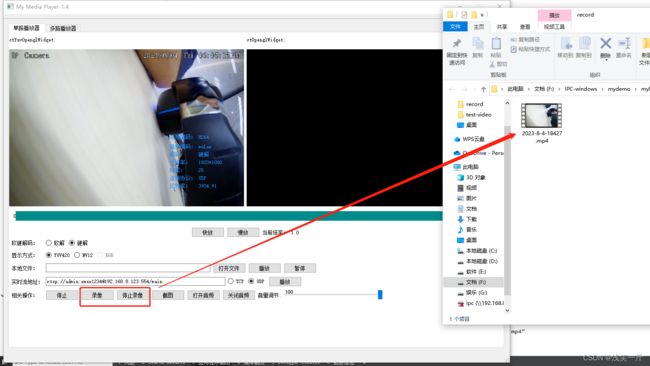

1.8 Recording

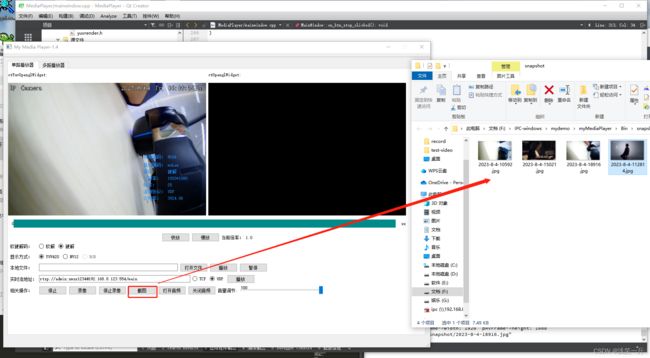

1.9 Snapshot

1.10 Audio control

1.11 Stream information display

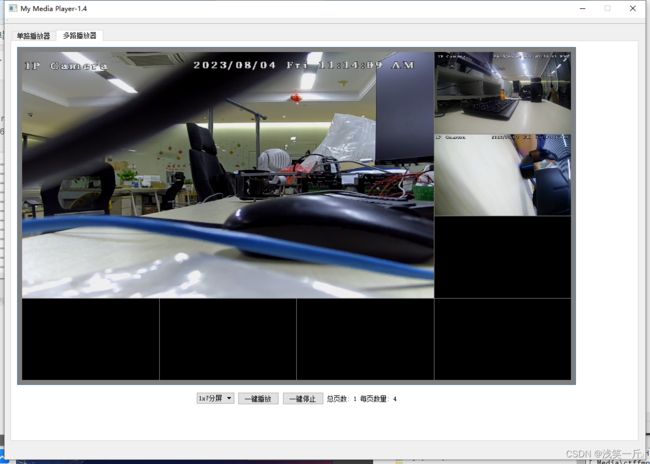

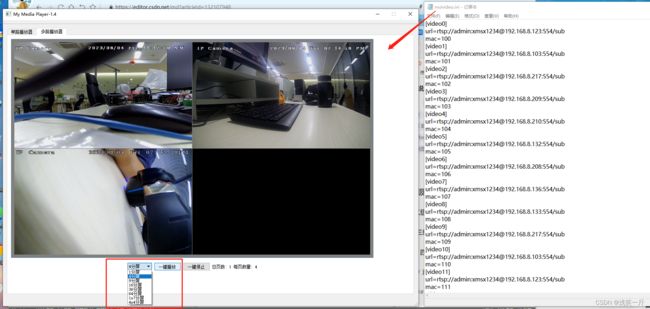
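Items 1.1 to 1.4 differ in the pixel layout handed to the renderer: YUV420P keeps Y, U and V in three separate planes, while NV12 keeps a Y plane followed by one plane of interleaved U/V byte pairs. The sketch below splits an NV12 chroma plane into separate U and V planes. It assumes tightly packed buffers with even width and height and no row padding (real FFmpeg frames carry a per-plane linesize), and `ChromaPlanes` and `splitNv12Chroma` are hypothetical names.

```cpp
#include <cstddef>
#include <cstdint>
#include <vector>

// Planar chroma of a YUV420P frame (illustrative type).
struct ChromaPlanes {
    std::vector<std::uint8_t> u;
    std::vector<std::uint8_t> v;
};

// Split the interleaved UV plane of an NV12 frame into U and V planes.
// Assumes even width/height and no row padding.
ChromaPlanes splitNv12Chroma(const std::vector<std::uint8_t>& uv, int width, int height) {
    const std::size_t count =
        static_cast<std::size_t>(width / 2) * static_cast<std::size_t>(height / 2);
    ChromaPlanes out;
    out.u.reserve(count);
    out.v.reserve(count);
    for (std::size_t i = 0; i < count && 2 * i + 1 < uv.size(); ++i) {
        out.u.push_back(uv[2 * i]);      // even bytes hold U
        out.v.push_back(uv[2 * i + 1]);  // odd bytes hold V
    }
    return out;
}
```

Either layout carries the same information, so the choice mostly decides how many textures the renderer uploads per frame.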

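2. Multi-channel player

In the configuration file, fill in the URL of each live stream or the path of each local file in the expected format; every mac must be unique. Then click the one-click play button. Several split-screen layouts can be switched between, with up to 64 channels supported.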
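One way to picture split-screen switching is as a square grid whose side grows with the number of channels. The following sketch is only an assumption about the layout arithmetic (the project's own layout code is not shown in this post), and `CellRect`, `gridSide` and `cellRect` are hypothetical helpers.

```cpp
// Position and size of one video cell (illustrative type).
struct CellRect {
    int x, y, w, h;
};

// Smallest square grid side that can hold `channels` cells.
int gridSide(int channels) {
    int side = 1;
    while (side * side < channels) {
        ++side;
    }
    return side;
}

// Rectangle of cell `index` (row-major order) inside a parentW x parentH area.
CellRect cellRect(int index, int channels, int parentW, int parentH) {
    const int side = gridSide(channels);
    const int w = parentW / side;
    const int h = parentH / side;
    return CellRect{(index % side) * w, (index / side) * h, w, h};
}
```

With 64 channels this gives an 8 x 8 grid; switching layouts then means recomputing each widget's rectangle for the new channel count.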

II. Selected code

- mediathread.h

#ifndef MEDIATHREAD_H

#define MEDIATHREAD_H

#include <QThread>

#include <QMutex>

#include <QImage>

#include "ctffmpeg.h"

#include "commondef.h"

#include "mp4recorder.h"

#include "ctyuvopenglwidget.h"

#include <QHash>

#define MAX_AUDIO_OUT_SIZE (8*1152)

QT_FORWARD_DECLARE_CLASS(MediaThread)

typedef QHash<QString, MediaThread*> QHashMediaThread;

typedef QHash<QString, MediaThread*>::iterator QHashMediaThreadIterator;

class MediaThread : public QThread

{

Q_OBJECT

public:

MediaThread(ctYuvOpenglWidget *pShowWidget);

~MediaThread();

bool Init(QString sUrl, int nProtocolType = MEDIA_PROTOCOL_UDP, int nDecodeType = MEDIA_DECODE_HARD,

int nShowType = MEDIA_SHOW_YUV420);

void DeInit();

void startThread();

void stopThread();

void setPause(bool bPause);

bool getPauseStatus();

void Snapshot();

void startRecord();

void stopRecord();

void disconnectFFmpeg();

public:

int m_nMinAudioPlayerSize = 640;

int m_nPlaySpeed = 1;

QMutex m_decoderLock;

public slots:

void slot_seekFrame(int nValue);

private:

void run() override;

signals:

//rgb

void sig_emitImage(const QImage&);

//yuv

void sig_setYuvBuffer(uchar *ptr, uint nWidth, uint nHeight);

void sig_clearYuvBuffer();

void sig_showYUV();

void sig_showTotalTime(int nTotalTime);

void sig_showCurTime(int nCurTime);

void sig_setStreamInfo(STREAM_INFO_T stStreamInfo);

void sig_setStreamInfoShow(bool bShow);

private:

bool m_bRun = false;

bool m_bPause = false;

bool m_bRecord = false;

ctFFmpeg* m_pFFmpeg = nullptr;

mp4Recorder m_pMp4Recorder;

ctYuvOpenglWidget *m_pShowWidget;

};

#endif // MEDIATHREAD_H
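The `m_bRun` and `m_bPause` flags declared in this header are written by the calling thread and read inside `run()`. As a side note, here is a minimal standard-C++ sketch of the same run/pause pattern using `std::atomic<bool>`, which makes those cross-thread reads well defined. It uses `std::thread` rather than `QThread` so that it stands alone, and `Worker` and its members are illustrative names, not the project's.

```cpp
#include <atomic>
#include <chrono>
#include <thread>

class Worker {
public:
    ~Worker() { stop(); }

    void start() {
        if (m_thread.joinable()) return;  // already running
        m_run = true;
        m_thread = std::thread([this] { loop(); });
    }

    // Ask the loop to exit, then wait for it to finish.
    void stop() {
        m_run = false;
        if (m_thread.joinable()) m_thread.join();
    }

    void setPause(bool pause) { m_pause = pause; }
    long iterations() const { return m_iterations.load(); }

private:
    void loop() {
        while (m_run) {
            if (m_pause) {
                std::this_thread::sleep_for(std::chrono::milliseconds(1));
                continue;
            }
            ++m_iterations;  // stand-in for "read packet, decode, render"
            std::this_thread::sleep_for(std::chrono::milliseconds(1));
        }
    }

    std::atomic<bool> m_run{false};
    std::atomic<bool> m_pause{false};
    std::atomic<long> m_iterations{0};
    std::thread m_thread;
};
```

Unlike the header above, `stop()` here also joins the thread, so the object cannot be destroyed while its loop is still running.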

- mediathread.cpp

#include "mediathread.h"

#include "ctaudioplayer.h"

#include <QDate>

#include <QTime>

#include <QDebug>

MediaThread::MediaThread(ctYuvOpenglWidget *pShowWidget) : m_pShowWidget(pShowWidget)

{

}

MediaThread::~MediaThread()

{

if(m_pFFmpeg)

{

m_pFFmpeg->DeInit();

delete m_pFFmpeg;

m_pFFmpeg = nullptr;

}

}

bool MediaThread::Init(QString sUrl, int nProtocolType, int nDecodeType, int nShowType)

{

qRegisterMetaType<STREAM_INFO_T>("STREAM_INFO_T");

if(nullptr == m_pFFmpeg)

{

MY_DEBUG << "new ctFFmpeg";

m_pFFmpeg = new ctFFmpeg;

//rgb

connect(m_pFFmpeg, SIGNAL(sig_getImage(const QImage&)),

this, SIGNAL(sig_emitImage(const QImage&)));

//yuv

connect(m_pFFmpeg, SIGNAL(sig_setYuvBuffer(uchar*, uint, uint)),

this, SIGNAL(sig_setYuvBuffer(uchar*, uint, uint)));

connect(m_pFFmpeg, SIGNAL(sig_clearYuvBuffer()),

this, SIGNAL(sig_clearYuvBuffer()));

connect(m_pFFmpeg, SIGNAL(sig_showYUV()),

this, SIGNAL(sig_showYUV()));

connect(m_pFFmpeg, SIGNAL(sig_showTotalTime(int)),

this, SIGNAL(sig_showTotalTime(int)));

connect(m_pFFmpeg, SIGNAL(sig_showCurTime(int)),

this, SIGNAL(sig_showCurTime(int)));

connect(m_pFFmpeg, SIGNAL(sig_setStreamInfo(STREAM_INFO_T)),

this, SIGNAL(sig_setStreamInfo(STREAM_INFO_T)));

}

if(m_pFFmpeg->Init(sUrl, nProtocolType, nDecodeType, nShowType) != 0)

{

MY_DEBUG << "FFmpeg Init error.";

return false;

}

return true;

}

void MediaThread::DeInit()

{

MY_DEBUG << "DeInit 000";

if(m_pFFmpeg)

{

m_pFFmpeg->DeInit();

MY_DEBUG << "DeInit 111";

delete m_pFFmpeg;

m_pFFmpeg = nullptr;

}

MY_DEBUG << "DeInit end";

}

void MediaThread::startThread()

{

m_bRun = true;

start();

}

void MediaThread::stopThread()

{

stopRecord();

m_bRun = false;

m_nPlaySpeed = 1;

}

void MediaThread::setPause(bool bPause)

{

m_bPause = bPause;

}

bool MediaThread::getPauseStatus()

{

return m_bPause;

}

void MediaThread::Snapshot()

{

if(m_pFFmpeg) // DeInit() may already have deleted the decoder

m_pFFmpeg->Snapshot();

}

void MediaThread::startRecord()

{

QString sPath = RECORD_DEFAULT_PATH;

QDate date = QDate::currentDate();

QTime time = QTime::currentTime();

QString sRecordPath = QString("%1%2-%3-%4-%5%6%7.mp4").arg(sPath).arg(date.year()). \

arg(date.month()).arg(date.day()).arg(time.hour()).arg(time.minute()). \

arg(time.second());

MY_DEBUG << "sRecordPath:" << sRecordPath;

if(m_pFFmpeg && nullptr != m_pFFmpeg->m_pAVFmtCxt && m_bRun)

{

m_bRecord = m_pMp4Recorder.Init(m_pFFmpeg->m_pAVFmtCxt, m_pFFmpeg->m_CodecId,

m_pFFmpeg->m_AudioCodecId, sRecordPath);

}

}

void MediaThread::stopRecord()

{

if(m_bRecord)

{

MY_DEBUG << "stopRecord...";

m_pMp4Recorder.DeInit();

m_bRecord = false;

}

}

void MediaThread::disconnectFFmpeg()

{

disconnect(m_pFFmpeg, SIGNAL(sig_setYuvBuffer(uchar*, uint, uint)),

this, SIGNAL(sig_setYuvBuffer(uchar*, uint, uint)));

disconnect(m_pFFmpeg, SIGNAL(sig_clearYuvBuffer()),

this, SIGNAL(sig_clearYuvBuffer()));

disconnect(m_pFFmpeg, SIGNAL(sig_showYUV()),

this, SIGNAL(sig_showYUV()));

emit sig_clearYuvBuffer();

emit sig_showYUV();

}

void MediaThread::slot_seekFrame(int nValue)

{

if(m_pFFmpeg)

m_pFFmpeg->seekFrame(nValue);

}

void MediaThread::run()

{

char audioOut[MAX_AUDIO_OUT_SIZE] = {0};

while(m_bRun)

{

if(m_bPause)

{

msleep(100);

continue;

}

// check how much free space the audio player's buffer has

if(m_pFFmpeg->m_bSupportAudioPlay)

{

int nFreeSize = ctAudioPlayer::getInstance().getFreeSize();

if(nFreeSize < m_nMinAudioPlayerSize)

{

msleep(1);

continue;

}

}

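// video syncs to the audio timestamps; speed changes are driven by audio (audio is the master clock and video follows it)

//msleep(40); // for the case where the first frame's pts is not 0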

AVPacket pkt = m_pFFmpeg->getPacket();

//MY_DEBUG << "pkt.size=" << pkt.size;

if (pkt.size <= 0)

{

msleep(10);

av_packet_unref(&pkt);

continue;

}

if(m_bRecord)

{

//MY_DEBUG << "record...";

AVPacket* pPkt = av_packet_clone(&pkt);

m_pMp4Recorder.saveOneFrame(*pPkt, m_pFFmpeg->m_CodecId, m_pFFmpeg->m_AudioCodecId);

av_packet_free(&pPkt);

}

// decode and play

if (pkt.stream_index == m_pFFmpeg->m_nAudioIndex &&

m_pFFmpeg->m_bSupportAudioPlay)

{

if(m_pFFmpeg->Decode(&pkt))

{

int nLen = m_pFFmpeg->getAudioFrame(audioOut); // fetch one frame of decoded PCM audio

if(nLen > 0)

ctAudioPlayer::getInstance().Write(audioOut, nLen);

while(m_pFFmpeg->RecvDecodedFrame() && m_pFFmpeg->m_bQuit)

{

int nLen = m_pFFmpeg->getAudioFrame(audioOut); // fetch one frame of decoded PCM audio

if(nLen > 0)

ctAudioPlayer::getInstance().Write(audioOut, nLen);

}

}

}

else if(pkt.stream_index == m_pFFmpeg->m_nVideoIndex)

{

if(m_pFFmpeg->m_nPlayWay == MEDIA_PLAY_FILE) // pace playback when playing a local file

{

AVRational time_base = m_pFFmpeg->m_pAVFmtCxt->streams[m_pFFmpeg->m_nVideoIndex]->time_base;

AVRational time_base_q = {1, AV_TIME_BASE}; // AV_TIME_BASE_Q;

int64_t pts_time = av_rescale_q(pkt.dts, time_base, time_base_q);

//MY_DEBUG << "pts_time:" << pts_time;

int64_t now_time = av_gettime() - m_pFFmpeg->m_nStartTime;

if (pts_time > now_time)

av_usleep((pts_time - now_time));

}

if(m_pFFmpeg->Decode(&pkt))

{

#if FILTER_ENABLE

if(m_pFFmpeg->m_nPlayWay == MEDIA_PLAY_FILE)

m_pFFmpeg->DealFilter();

#endif

if(m_pFFmpeg->m_nShowType == MEDIA_SHOW_RGB)

{

//cpu: yuv to rgb

m_pFFmpeg->showRgbFrame();

m_pFFmpeg->generateImage();

}

else

{

// run the YUV-to-RGB conversion on the GPU to reduce CPU usage

if(m_pShowWidget)

{

if(m_pFFmpeg->showYuvFrame(m_pShowWidget->geometry().width(),

m_pShowWidget->geometry().height()) == false)

{

MY_DEBUG << "showYuvFrame failed.";

av_packet_unref(&pkt); // this break leaves the main loop, so release the packet first

break;

}

}

while(m_pFFmpeg->RecvDecodedFrame() && m_pFFmpeg->m_bQuit)

{

if(m_pShowWidget)

{

if(m_pFFmpeg->showYuvFrame(m_pShowWidget->geometry().width(),

m_pShowWidget->geometry().height()) == false)

{

MY_DEBUG << "showYuvFrame failed.";

break;

}

}

}

}

}

}

av_packet_unref(&pkt);

}

emit sig_setStreamInfoShow(false);

MY_DEBUG << "run end";

}
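For local files, the loop above paces video by converting the packet timestamp to microseconds and sleeping until the wall clock catches up. The arithmetic can be sketched without FFmpeg as follows. `Rational`, `toMicros` and `sleepNeeded` are illustrative stand-ins for `AVRational`, the `av_rescale_q` call with a `{1, AV_TIME_BASE}` target, and the comparison made before `av_usleep`. Unlike `av_rescale_q`, this version truncates instead of rounding and does not guard against overflow.

```cpp
#include <cstdint>

// Stand-in for AVRational: a time base of num/den seconds per tick.
struct Rational {
    int num;
    int den;
};

// Convert a timestamp expressed in `tb` ticks to microseconds (truncating).
std::int64_t toMicros(std::int64_t ts, Rational tb) {
    return ts * 1000000 * tb.num / tb.den;
}

// Microseconds to sleep before showing a frame; 0 if the frame is already due.
std::int64_t sleepNeeded(std::int64_t frameMicros, std::int64_t elapsedMicros) {
    return frameMicros > elapsedMicros ? frameMicros - elapsedMicros : 0;
}
```

With a time base of 1/25, a timestamp of 3 is due 120 ms after playback starts; if 100 ms have elapsed, the loop would sleep for the remaining 20 ms.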

- ctmediamanage.h

#ifndef CTMEDIAMANAGE_H

#define CTMEDIAMANAGE_H

#include <QObject>

#include <QMutex>

#include <QString>

#include "commondef.h"

#include "mediathread.h"

#include "ctyuvopenglwidget.h"

#include <QHash>

class ctMediaManage : public QObject

{

Q_OBJECT

public:

ctMediaManage();

~ctMediaManage();

static ctMediaManage& getInstance();

void playOne(QString sMac, QString sUrl, ctYuvOpenglWidget* pGL);

void stopOne(QString sMac, ctYuvOpenglWidget* pGL);

void playAll(QHashMediaDev& hashDev, QHashWidget &hashWidget);

void stopAll(QHashWidget &hashWidget);

QHashMediaThread m_workMediaThread;

private:

QMutex m_lock;

QHashWidget m_workWidgets;

//MediaThread* m_pMediaThread[MAX_VIDEO_NUM] = {nullptr};

};

#endif // CTMEDIAMANAGE_H

- ctmediamanage.cpp

#include "ctmediamanage.h"

#include "ctmediatask.h"

#include <QThreadPool>

ctMediaManage::ctMediaManage()

{

}

ctMediaManage::~ctMediaManage()

{

m_workWidgets.clear();

}

ctMediaManage &ctMediaManage::getInstance()

{

static ctMediaManage s_obj;

return s_obj;

}

void ctMediaManage::playOne(QString sMac, QString sUrl, ctYuvOpenglWidget *pGL)

{

QMutexLocker guard(&m_lock);

if(m_workMediaThread.contains(sMac))

{

MY_DEBUG << "m_workWidgets exist mac";

return;

}

if(pGL->getVideoState() != VIDEO_STATE_CONNECTED)

{

MediaThread* pThread = new MediaThread(pGL);

if(pThread)

{

connect(pThread, SIGNAL(sig_setYuvBuffer(uchar*, uint, uint)),

pGL, SLOT(slot_setYuvBuffer(uchar*, uint, uint)));

connect(pThread, SIGNAL(sig_clearYuvBuffer()),

pGL, SLOT(slot_clearYuvBuffer()));

connect(pThread, SIGNAL(sig_showYUV()),

pGL, SLOT(slot_showYUV()));

m_workMediaThread.insert(sMac, pThread);

//m_workWidgets.insert(sMac, pGL);

ctMediaPlayTask* pTask = new ctMediaPlayTask(nullptr, sUrl, pThread);

pGL->setVideoMac(sMac);

pGL->setVideoState(VIDEO_STATE_CONNECTED);

QThreadPool::globalInstance()->start(pTask);

}

}

else

{

MY_DEBUG << "this dev is playing.";

}

}

void ctMediaManage::stopOne(QString sMac, ctYuvOpenglWidget *pGL)

{

QMutexLocker guard(&m_lock);

if(nullptr == pGL)

return;

if(!sMac.isEmpty() && m_workMediaThread.contains(sMac))

{

MediaThread* pThread = (MediaThread*)m_workMediaThread[sMac];

if(pThread)

{

pThread->disconnectFFmpeg();

disconnect(pThread, SIGNAL(sig_setYuvBuffer(uchar*, uint, uint)),

pGL, SLOT(slot_setYuvBuffer(uchar*, uint, uint)));

disconnect(pThread, SIGNAL(sig_clearYuvBuffer()),

pGL, SLOT(slot_clearYuvBuffer()));

disconnect(pThread, SIGNAL(sig_showYUV()),

pGL, SLOT(slot_showYUV()));

pGL->setVideoState(VIDEO_STATE_UNCONNECTED);

pGL->setVideoMac(QString(""));

ctMediaStopTask* pTask = new ctMediaStopTask(nullptr, pThread);

QThreadPool::globalInstance()->start(pTask);

m_workMediaThread.remove(sMac);

}

}

}

void ctMediaManage::playAll(QHashMediaDev &hashDev, QHashWidget &hashWidget)

{

QHashMediaDevIterator dev = hashDev.begin();

for(int i = 0; i < 3 && dev != hashDev.end(); i++)

{

QString& sMac = dev.value().sDevId;

QString sUrl = dev.value().sUrl;

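if(!m_workMediaThread.contains(sMac))

{

QString sWidgetName = QString("video%1").arg(i);

// value() returns nullptr for an unknown name instead of inserting an empty entry

ctYuvOpenglWidget* pGL = hashWidget.value(sWidgetName, nullptr);

if(pGL)

playOne(sMac, sUrl, pGL);

}

++dev; // always advance, even when this device is already playing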

}

}

void ctMediaManage::stopAll(QHashWidget &hashWidget)

{

QHashWidgetIterator iter = hashWidget.begin();

for(; iter != hashWidget.end(); ++iter)

{

stopOne(iter.value()->getVideoMac(), iter.value());

}

}
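`playOne` and `stopOne` above amount to a mutex-guarded registry keyed by the device's mac, which is why each mac in the configuration has to be unique. A stripped-down sketch of that idea in standard C++ follows. `StreamRegistry` is a hypothetical name, and it stores only a URL string where the project stores a `MediaThread*`.

```cpp
#include <cstddef>
#include <mutex>
#include <string>
#include <unordered_map>

class StreamRegistry {
public:
    // Register a stream; returns false if this mac is already playing.
    bool add(const std::string& mac, const std::string& url) {
        std::lock_guard<std::mutex> guard(m_lock);
        return m_streams.emplace(mac, url).second;
    }

    // Unregister a stream; returns false if the mac was not registered.
    bool remove(const std::string& mac) {
        std::lock_guard<std::mutex> guard(m_lock);
        return m_streams.erase(mac) > 0;
    }

    std::size_t size() const {
        std::lock_guard<std::mutex> guard(m_lock);
        return m_streams.size();
    }

private:
    mutable std::mutex m_lock;  // guards m_streams
    std::unordered_map<std::string, std::string> m_streams;
};
```

A second `add` with the same key is rejected, mirroring the early return in `playOne` when `m_workMediaThread` already contains the mac.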

III. Project source download

Executable download (free): https://download.csdn.net/download/linyibin_123/88205206

Project source download: https://download.csdn.net/download/linyibin_123/88171711