Linux vsock internals: the vsock framework

Reposted from: https://terenceli.github.io/%E6%8A%80%E6%9C%AF/2020/04/18/vsock-internals

Contents

- 1. vsock background

- 2. vsock framework

- send/recv data

- guest send

- host recv

- multi-transport

- Reference

1. vsock background

VM Sockets (vsock) is a fast and efficient communication mechanism between guest virtual machines and their host.

It was added by VMware in the commit ‘VSOCK: Introduce VM Sockets’, which introduced a new socket address family named vsock together with its vmci transport. VM Sockets is useful in many situations, for example for VMware Tools running inside the guest.

Because vsock is so useful, the community has extended it to support other hypervisors such as qemu/kvm and Hyper-V. Red Hat added the guest-side virtio transport in the commit ‘VSOCK: Introduce virtio_transport.ko’ and the host-side vhost transport in the commit ‘VSOCK: Introduce vhost_vsock.ko’. Microsoft added the Hyper-V transport in the commit ‘hv_sock: implements Hyper-V transport for Virtual Sockets (AF_VSOCK)’; the corresponding host transport lives in the Windows kernel and is not open source.

This post focuses on the virtio transport in the guest and the vhost transport in the host.


2. vsock framework

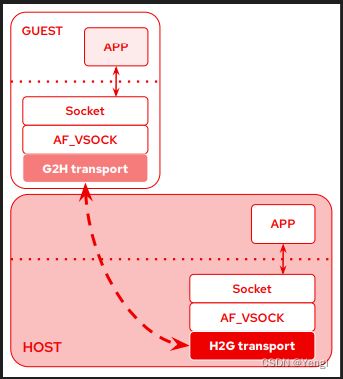

The figure above is from Stefano Garzarella’s slides.

There are several layers here.

- application: uses the socket API

- socket layer: provides support for the socket API

- AF_VSOCK address family: implements the vsock core

- transport: transports the data between guest and host

The transport layer is the one most worth discussing, since the other three only need to implement standard kernel interfaces.
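To make the top layer concrete, here is a minimal userspace sketch of what "using the socket API" looks like for AF_VSOCK. It relies only on `struct sockaddr_vm` and the `AF_VSOCK` family from the Linux UAPI headers; the helper names `vsock_addr_fill` and `vsock_connect_to` are made up for this illustration and are not part of any library.

```c
#include <assert.h>
#include <string.h>
#include <unistd.h>
#include <sys/socket.h>
#include <linux/vm_sockets.h>

/* Fill *addr for the given context ID (CID) and port.
 * Hypothetical helper, not a libc or kernel API. */
void vsock_addr_fill(struct sockaddr_vm *addr, unsigned int cid,
                     unsigned int port)
{
    assert(addr != NULL);
    memset(addr, 0, sizeof(*addr));   /* zero the reserved/padding fields */
    addr->svm_family = AF_VSOCK;
    addr->svm_cid = cid;
    addr->svm_port = port;
}

/* Open a stream socket and connect it to (cid, port).
 * Returns the connected fd, or -1 on failure. */
int vsock_connect_to(unsigned int cid, unsigned int port)
{
    struct sockaddr_vm addr;
    int fd;

    vsock_addr_fill(&addr, cid, port);
    fd = socket(AF_VSOCK, SOCK_STREAM, 0);
    if (fd < 0)
        return -1;
    if (connect(fd, (struct sockaddr *)&addr, sizeof(addr)) < 0) {
        close(fd);
        return -1;
    }
    return fd;
}
```

From inside a guest, `vsock_connect_to(VMADDR_CID_HOST, 1234)` would try to reach a listener on the host; the port number 1234 is an arbitrary example. Apart from the address structure, the code is the same as for any other stream socket, which is why the upper layers need little discussion.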

A transport, as its name indicates, moves data between guest and host, much as a network card moves data between a local and a remote socket.

There are two kinds of transports, distinguished by the direction of data flow.

- G2H: guest->host transports. They run in the guest, and the guest's vsock networking protocol uses them to communicate with the host.

- H2G: host->guest transports. They run in the host, and the host's vsock networking protocol uses them to communicate with the guest.

Usually the H2G transport is implemented as device emulation, and the G2H transport is implemented as the driver for that emulated device.

For example, in VMware the H2G transport is an emulated vmci PCI device and the G2H transport is the vmci device driver.

In qemu the H2G transport is an emulated vhost-vsock device and the G2H transport is the vsock device’s driver.

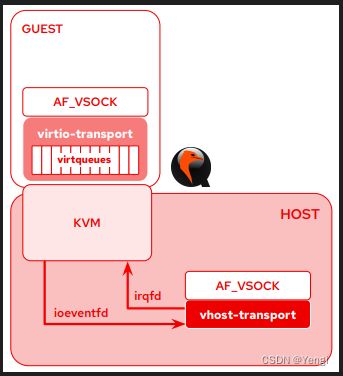

The figure above shows the virtio (in guest) and vhost (in host) transports. It is also from Stefano Garzarella’s slides.


The vsock socket address family and the G2H transports are implemented in the ‘net/vmw_vsock’ directory of the Linux tree.

The H2G transports are implemented under the ‘drivers’ directory: vhost vsock is in ‘drivers/vhost/vsock.c’ and vmci is in the ‘drivers/misc/vmw_vmci’ directory.

The following figure shows the virtio<->vhost transport in qemu in more detail.


The guest and host initialize their transport channel in the following steps.

- When starting qemu, add ‘-device vhost-vsock-pci,guest-cid=’ to the qemu command line.

- Load the vhost_vsock driver in the host.

- The guest kernel probes the vhost-vsock PCI device and loads its driver. This virtio driver is registered in the ‘virtio_vsock_init’ function.

- The virtio_vsock driver initializes the emulated vhost-vsock device, which involves communicating with the vhost_vsock driver.

The transport layer has a global variable named ‘transport’. Both the guest and the host side need to register their vsock transport by calling ‘vsock_core_init’, which sets ‘transport’ to a transport implementation.

For example, the guest kernel function ‘virtio_vsock_init’ calls ‘vsock_core_init’ to set ‘transport’ to ‘virtio_transport.transport’, and the host kernel function ‘vhost_vsock_init’ calls ‘vsock_core_init’ to set ‘transport’ to ‘vhost_transport.transport’.

After initialization, the guest and host can use vsock to talk to each other.
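The single global ‘transport’ slot can be modelled in a few lines of userspace C. This is only a toy sketch: `struct toy_transport`, `toy_core_init` and the other `toy_` names are invented here, and returning `-EBUSY` when the slot is already taken is my assumption about how a second registration is rejected, not a quote of the kernel code.

```c
#include <assert.h>
#include <errno.h>
#include <stddef.h>

/* Hypothetical, heavily simplified stand-in for struct vsock_transport. */
struct toy_transport {
    const char *name;
    int (*stream_enqueue)(const void *buf, size_t len);
};

/* The single global slot: at most one transport per kernel. */
static const struct toy_transport *transport;

/* Models vsock_core_init(): claim the slot, or fail if it is taken. */
int toy_core_init(const struct toy_transport *t)
{
    assert(t != NULL);
    if (transport)
        return -EBUSY;   /* assumed error code for "already registered" */
    transport = t;
    return 0;
}

/* Models unregistering the transport again. */
void toy_core_exit(void)
{
    transport = NULL;
}

/* Returns the currently registered transport, or NULL. */
const struct toy_transport *toy_get_transport(void)
{
    return transport;
}

/* Dummy enqueue callbacks: pretend every byte was queued. */
static int toy_virtio_enqueue(const void *buf, size_t len)
{
    (void)buf;
    return (int)len;
}

static int toy_vhost_enqueue(const void *buf, size_t len)
{
    (void)buf;
    return (int)len;
}

const struct toy_transport toy_virtio = { "virtio", toy_virtio_enqueue };
const struct toy_transport toy_vhost = { "vhost", toy_vhost_enqueue };
```

In this model, registering `toy_virtio` succeeds and a following attempt to register `toy_vhost` fails until `toy_core_exit()` is called. Everything above the transport layer only ever goes through the one registered callback table.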


send/recv data

vsock has two socket types, datagram and stream, analogous to UDP and TCP for IPv4. The following shows ‘vsock_stream_ops’:

    static const struct proto_ops vsock_stream_ops = {
        .family = PF_VSOCK,
        .owner = THIS_MODULE,
        .release = vsock_release,
        .bind = vsock_bind,
        .connect = vsock_stream_connect,
        .socketpair = sock_no_socketpair,
        .accept = vsock_accept,
        .getname = vsock_getname,
        .poll = vsock_poll,
        .ioctl = sock_no_ioctl,
        .listen = vsock_listen,
        .shutdown = vsock_shutdown,
        .setsockopt = vsock_stream_setsockopt,
        .getsockopt = vsock_stream_getsockopt,
        .sendmsg = vsock_stream_sendmsg,
        .recvmsg = vsock_stream_recvmsg,
        .mmap = sock_no_mmap,
        .sendpage = sock_no_sendpage,
    };

Most of the ‘proto_ops’ callbacks of vsock are easy to understand. Here I use the send/recv path to show how the transport layer moves data between guest and host.

guest send

‘vsock_stream_sendmsg’ is used to send data to the host. It calls the transport’s ‘stream_enqueue’ callback, which in the guest is ‘virtio_transport_stream_enqueue’. That function creates a ‘virtio_vsock_pkt_info’ and calls ‘virtio_transport_send_pkt_info’.

    ssize_t
    virtio_transport_stream_enqueue(struct vsock_sock *vsk,
                                    struct msghdr *msg,
                                    size_t len)
    {
        struct virtio_vsock_pkt_info info = {
            .op = VIRTIO_VSOCK_OP_RW,
            .type = VIRTIO_VSOCK_TYPE_STREAM,
            .msg = msg,
            .pkt_len = len,
            .vsk = vsk,
        };

        return virtio_transport_send_pkt_info(vsk, &info);
    }

    virtio_transport_send_pkt_info
        --> virtio_transport_alloc_pkt
        --> virtio_transport_get_ops()->send_pkt(pkt);    (virtio_transport_send_pkt)

‘virtio_transport_alloc_pkt’ allocates a buffer (‘pkt->buf’) to hold the data being sent. ‘virtio_transport_send_pkt’ inserts the ‘virtio_vsock_pkt’ into a list and schedules a work item with queue_work. The actual data transmission happens in the ‘virtio_transport_send_pkt_work’ function.

‘virtio_transport_send_pkt_work’ performs the standard virtio driver operations: it prepares a scatterlist from the message header and the message body, calls ‘virtqueue_add_sgs’, and then calls ‘virtqueue_kick’.


    static void
    virtio_transport_send_pkt_work(struct work_struct *work)
    {
        struct virtio_vsock *vsock =
            container_of(work, struct virtio_vsock, send_pkt_work);
        struct virtqueue *vq;
        bool added = false;
        bool restart_rx = false;

        mutex_lock(&vsock->tx_lock);
        ...
        vq = vsock->vqs[VSOCK_VQ_TX];

        for (;;) {
            struct virtio_vsock_pkt *pkt;
            struct scatterlist hdr, buf, *sgs[2];
            int ret, in_sg = 0, out_sg = 0;
            bool reply;
            ...
            pkt = list_first_entry(&vsock->send_pkt_list,
                                   struct virtio_vsock_pkt, list);
            list_del_init(&pkt->list);
            spin_unlock_bh(&vsock->send_pkt_list_lock);

            virtio_transport_deliver_tap_pkt(pkt);

            reply = pkt->reply;

            sg_init_one(&hdr, &pkt->hdr, sizeof(pkt->hdr));
            sgs[out_sg++] = &hdr;
            if (pkt->buf) {
                sg_init_one(&buf, pkt->buf, pkt->len);
                sgs[out_sg++] = &buf;
            }

            ret = virtqueue_add_sgs(vq, sgs, out_sg, in_sg, pkt, GFP_KERNEL);
            /* Usually this means that there is no more space available in
             * the vq
             */
            ...
            added = true;
        }

        if (added)
            virtqueue_kick(vq);
        ...
    }
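The "header first, payload second" scatterlist preparation can be sketched in userspace with `struct iovec` standing in for the kernel scatterlist. The `toy_` names are hypothetical. The header struct is meant to mirror the field order of the kernel's `struct virtio_vsock_hdr`, which uses little-endian field types; this sketch uses plain host-endian integers for brevity.

```c
#include <assert.h>
#include <stddef.h>
#include <stdint.h>
#include <sys/uio.h>

/* Userspace mirror of the vsock packet header layout (assumed to match
 * struct virtio_vsock_hdr; byte order is ignored in this sketch). */
struct toy_vsock_hdr {
    uint64_t src_cid;
    uint64_t dst_cid;
    uint32_t src_port;
    uint32_t dst_port;
    uint32_t len;
    uint16_t type;
    uint16_t op;
    uint32_t flags;
    uint32_t buf_alloc;
    uint32_t fwd_cnt;
} __attribute__((packed));

/* Simplified packet: a header plus an optional payload buffer. */
struct toy_pkt {
    struct toy_vsock_hdr hdr;
    void *buf;       /* payload, NULL for packets without data */
    uint32_t len;    /* payload length in bytes */
};

/* Build the device-readable ("out") segments for one packet:
 * the header always comes first, the payload follows if present.
 * Returns the number of segments written to sgs (1 or 2). */
int toy_build_out_sgs(struct toy_pkt *pkt, struct iovec sgs[2])
{
    int out_sg = 0;

    assert(pkt != NULL && sgs != NULL);

    sgs[out_sg].iov_base = &pkt->hdr;
    sgs[out_sg].iov_len = sizeof(pkt->hdr);
    out_sg++;

    if (pkt->buf) {
        sgs[out_sg].iov_base = pkt->buf;
        sgs[out_sg].iov_len = pkt->len;
        out_sg++;
    }
    return out_sg;
}
```

A data packet yields two segments and a packet without payload yields one, which matches the `out_sg` counting in the kernel excerpt above. There are no device-writable ("in") segments on the TX queue, hence `in_sg = 0` there.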

host recv

On the host side, the handler for a kick on the tx queue is ‘vhost_vsock_handle_tx_kick’, which is set up in the ‘vhost_vsock_dev_open’ function.

‘vhost_vsock_handle_tx_kick’ also performs the standard virtio backend operations: it pops a vring descriptor, calls ‘vhost_vsock_alloc_pkt’ to reconstruct a ‘virtio_vsock_pkt’, and then calls ‘virtio_transport_recv_pkt’ to deliver the packet to its destination.


    static void vhost_vsock_handle_tx_kick(struct vhost_work *work)
    {
        struct vhost_virtqueue *vq = container_of(work, struct vhost_virtqueue,
                                                  poll.work);
        struct vhost_vsock *vsock = container_of(vq->dev, struct vhost_vsock,
                                                 dev);
        struct virtio_vsock_pkt *pkt;
        int head, pkts = 0, total_len = 0;
        unsigned int out, in;
        bool added = false;

        mutex_lock(&vq->mutex);
        ...
        vhost_disable_notify(&vsock->dev, vq);
        do {
            u32 len;
            ...
            head = vhost_get_vq_desc(vq, vq->iov, ARRAY_SIZE(vq->iov),
                                     &out, &in, NULL, NULL);
            ...
            pkt = vhost_vsock_alloc_pkt(vq, out, in);
            ...
            len = pkt->len;

            /* Deliver to monitoring devices all received packets */
            virtio_transport_deliver_tap_pkt(pkt);

            /* Only accept correctly addressed packets */
            if (le64_to_cpu(pkt->hdr.src_cid) == vsock->guest_cid)
                virtio_transport_recv_pkt(pkt);
            else
                virtio_transport_free_pkt(pkt);

            len += sizeof(pkt->hdr);
            vhost_add_used(vq, head, len);
            total_len += len;
            added = true;
        } while(likely(!vhost_exceeds_weight(vq, ++pkts, total_len)));
        ...
    }
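The per-packet bookkeeping in this loop (accept only packets whose source CID matches the guest, account header plus payload bytes, stop after a packet budget) can be sketched as a small userspace function. All `toy_` names are hypothetical, `TOY_HDR_SIZE` assumes a 44-byte vsock header, and the single `pkt_weight` limit is a simplification of the check performed by ‘vhost_exceeds_weight’.

```c
#include <assert.h>
#include <stddef.h>
#include <stdint.h>

#define TOY_HDR_SIZE 44u   /* assumed size of the vsock packet header */

/* The two header fields this sketch cares about. */
struct toy_pkt_hdr {
    uint64_t src_cid;   /* who the packet claims to come from */
    uint32_t len;       /* payload length */
};

struct toy_stats {
    int delivered;        /* packets passed on to the receive path */
    int dropped;          /* packets with a wrong source CID */
    uint32_t total_len;   /* header + payload bytes accounted so far */
};

/* Process up to pkt_weight packets from pkts[0..n-1] on behalf of the
 * guest identified by guest_cid. */
struct toy_stats toy_handle_tx(const struct toy_pkt_hdr *pkts, int n,
                               uint64_t guest_cid, int pkt_weight)
{
    struct toy_stats st = { 0, 0, 0 };
    int handled = 0;

    assert(pkts != NULL || n == 0);

    while (handled < n) {
        const struct toy_pkt_hdr *p = &pkts[handled];

        /* Only accept correctly addressed packets. */
        if (p->src_cid == guest_cid)
            st.delivered++;
        else
            st.dropped++;

        /* The buffer is returned to the guest either way. */
        st.total_len += p->len + TOY_HDR_SIZE;

        /* Stop once the packet budget is used up. */
        if (++handled >= pkt_weight)
            break;
    }
    return st;
}
```

The source CID check matters because the guest fills in the header itself; without it a guest could send packets that appear to come from a different CID.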

‘virtio_transport_recv_pkt’ is the function that actually delivers the message data. It calls ‘vsock_find_connected_socket’ to find the destination socket and then, depending on that socket’s state, calls a state-specific handler. For ‘TCP_ESTABLISHED’ it calls ‘virtio_transport_recv_connected’.


    void virtio_transport_recv_pkt(struct virtio_vsock_pkt *pkt)
    {
        struct sockaddr_vm src, dst;
        struct vsock_sock *vsk;
        struct sock *sk;
        bool space_available;

        vsock_addr_init(&src, le64_to_cpu(pkt->hdr.src_cid),
                        le32_to_cpu(pkt->hdr.src_port));
        vsock_addr_init(&dst, le64_to_cpu(pkt->hdr.dst_cid),
                        le32_to_cpu(pkt->hdr.dst_port));
        ...
        /* The socket must be in connected or bound table
         * otherwise send reset back
         */
        sk = vsock_find_connected_socket(&src, &dst);
        ...
        vsk = vsock_sk(sk);
        ...
        switch (sk->sk_state) {
        case TCP_LISTEN:
            virtio_transport_recv_listen(sk, pkt);
            virtio_transport_free_pkt(pkt);
            break;
        case TCP_SYN_SENT:
            virtio_transport_recv_connecting(sk, pkt);
            virtio_transport_free_pkt(pkt);
            break;
        case TCP_ESTABLISHED:
            virtio_transport_recv_connected(sk, pkt);
            break;
        case TCP_CLOSING:
            virtio_transport_recv_disconnecting(sk, pkt);
            virtio_transport_free_pkt(pkt);
            break;
        default:
            virtio_transport_free_pkt(pkt);
            break;
        }
        release_sock(sk);

        /* Release refcnt obtained when we fetched this socket out of the
         * bound or connected list.
         */
        sock_put(sk);
        return;

    free_pkt:
        virtio_transport_free_pkt(pkt);
    }

For data packets, ‘pkt->hdr.op’ is ‘VIRTIO_VSOCK_OP_RW’, so ‘virtio_transport_recv_enqueue’ is called. It adds the packet to ‘rx_queue’, the receive queue of the destination socket.

So when the host or another VM calls recv, ‘vsock_stream_recvmsg’ is invoked, which in turn calls the transport layer’s ‘stream_dequeue’ callback (‘virtio_transport_stream_do_dequeue’). That function pops entries from ‘rx_queue’, copies their data into the msghdr, and returns to the userspace application.


    static ssize_t
    virtio_transport_stream_do_dequeue(struct vsock_sock *vsk,
                                       struct msghdr *msg,
                                       size_t len)
    {
        struct virtio_vsock_sock *vvs = vsk->trans;
        struct virtio_vsock_pkt *pkt;
        size_t bytes, total = 0;
        u32 free_space;
        int err = -EFAULT;

        spin_lock_bh(&vvs->rx_lock);
        while (total < len && !list_empty(&vvs->rx_queue)) {
            pkt = list_first_entry(&vvs->rx_queue,
                                   struct virtio_vsock_pkt, list);

            bytes = len - total;
            if (bytes > pkt->len - pkt->off)
                bytes = pkt->len - pkt->off;

            /* sk_lock is held by caller so no one else can dequeue.
             * Unlock rx_lock since memcpy_to_msg() may sleep.
             */
            spin_unlock_bh(&vvs->rx_lock);

            err = memcpy_to_msg(msg, pkt->buf + pkt->off, bytes);
            if (err)
                goto out;

            spin_lock_bh(&vvs->rx_lock);

            total += bytes;
            pkt->off += bytes;
            if (pkt->off == pkt->len) {
                virtio_transport_dec_rx_pkt(vvs, pkt);
                list_del(&pkt->list);
                virtio_transport_free_pkt(pkt);
            }
        }
        ...
        return total;
        ...
    }
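The interesting part of the dequeue loop is that a read may stop in the middle of a packet, with ‘pkt->off’ recording how far the packet has been consumed. A userspace toy version, with a fixed-size ring in place of the kernel list and no locking, could look like the following. All `toy_` names and the queue capacity are made up for this sketch.

```c
#include <assert.h>
#include <stddef.h>
#include <string.h>

#define TOY_MAX_PKTS 8   /* arbitrary capacity for this sketch */

struct toy_rx_pkt {
    const char *buf;   /* payload */
    size_t len;        /* payload length */
    size_t off;        /* bytes already handed to the reader */
};

struct toy_rx_queue {
    struct toy_rx_pkt pkts[TOY_MAX_PKTS];
    size_t head;       /* index of the oldest packet */
    size_t count;      /* number of queued packets */
};

/* Append a packet to the receive queue. Returns 0, or -1 if full. */
int toy_rx_enqueue(struct toy_rx_queue *q, const char *buf, size_t len)
{
    struct toy_rx_pkt *p;

    assert(q != NULL);
    if (q->count == TOY_MAX_PKTS)
        return -1;
    p = &q->pkts[(q->head + q->count) % TOY_MAX_PKTS];
    p->buf = buf;
    p->len = len;
    p->off = 0;
    q->count++;
    return 0;
}

/* Copy up to len bytes of stream data into dst, consuming packets in
 * order. A partially read packet stays at the head of the queue. */
size_t toy_stream_dequeue(struct toy_rx_queue *q, char *dst, size_t len)
{
    size_t total = 0;

    assert(q != NULL && dst != NULL);
    while (total < len && q->count > 0) {
        struct toy_rx_pkt *p = &q->pkts[q->head];
        size_t bytes = len - total;

        if (bytes > p->len - p->off)
            bytes = p->len - p->off;

        memcpy(dst + total, p->buf + p->off, bytes);
        total += bytes;
        p->off += bytes;

        /* Drop the packet only once it is fully consumed. */
        if (p->off == p->len) {
            q->head = (q->head + 1) % TOY_MAX_PKTS;
            q->count--;
        }
    }
    return total;
}
```

If two 5-byte packets are queued and the reader asks for 7 bytes, the first packet is consumed completely and the second is left at the head with `off == 2`. This is what gives the socket stream semantics: packet boundaries are invisible to the reader.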

multi-transport

As shown above, one kernel (host or guest) can register only one transport. This is a problem in nested virtualization environments. For example, suppose a VMware VM (L0) runs a qemu/kvm VM (L1) inside it. The L0 VM's kernel can register only one transport: if it registers the ‘vmci’ transport, it can talk only to the VMware vmci device; if it registers ‘vhost_vsock’, it can talk only to the L1 VM. Fortunately, Stefano Garzarella has addressed this issue in the commit ‘vsock: add multi-transports support’. Interested readers can learn more from that commit.
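As a rough illustration of the idea behind multi-transport support, a kernel that holds both a G2H and an H2G transport can pick one per socket based on the destination CID. The rule below (CIDs up to and including the host CID go through G2H, everything else through H2G) is my reading of the blog post listed in the references, simplified to stream sockets only; the `toy_` names are hypothetical and this is not the kernel implementation.

```c
#include <assert.h>
#include <stddef.h>

#define TOY_CID_HOST 2u   /* same value as VMADDR_CID_HOST */

/* The transports a kernel has registered; NULL means "not loaded". */
struct toy_transports {
    const char *g2h;   /* guest->host, e.g. the vmci or virtio driver */
    const char *h2g;   /* host->guest, e.g. vhost */
};

/* Choose a transport for a connection to remote_cid.
 * Returns NULL if no suitable transport is registered. */
const char *toy_assign_transport(const struct toy_transports *t,
                                 unsigned int remote_cid)
{
    assert(t != NULL);

    /* Destinations "above" us (hypervisor, host) go through G2H. */
    if (remote_cid <= TOY_CID_HOST)
        return t->g2h;

    /* Any other CID is one of our own guests: use H2G. */
    return t->h2g;
}
```

With this rule, the L0 VM from the example above could load both ‘vmci’ (as G2H) and ‘vhost_vsock’ (as H2G): a connection to CID 2 would reach the VMware host, while a connection to the CID assigned to the L1 VM would go through vhost.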

Reference

virtio-vsock: Zero-configuration host/guest communication, Stefan Hajnoczi, KVM Forum 2015. https://vmsplice.net/~stefan/stefanha-kvm-forum-2015.pdf

VSOCK: VM↔host socket with minimal configuration, Stefano Garzarella, DevConf.CZ 2020. https://static.sched.com/hosted_files/devconfcz2020a/b1/DevConf.CZ_2020_vsock_v1.1.pdf

AF_VSOCK: nested VMs and loopback support available. https://stefano-garzarella.github.io/posts/2020-02-20-vsock-nested-vms-loopback/