Autoscaling with metrics-server

目录

一、环境准备

1.1、主机初始化配置

1.2、部署docker环境

二、部署kubernetes集群

2.1、组件介绍

2.2、配置阿里云yum源

2.3、安装kubelet kubeadm kubectl

2.4、配置init-config.yaml

2.5、安装master节点

2.6、安装node节点

2.7、安装flannel、cni

2.8 节点管理命令

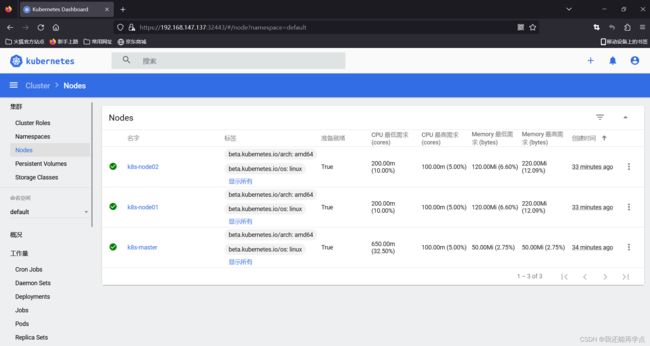

三、安装Dashboard UI

3.1、部署Dashboard

3.2、开放端口设置

3.3、权限配置

3.4、访问Token配置

四、metrics-server服务部署

4.1、在Node节点上下载镜像

4.2、修改 Kubernetes apiserver 启动参数

4.3、Master上进行部署

五、弹性伸缩

5.1、弹性伸缩介绍

5.2、弹性伸缩工作原理

5.3、弹性伸缩实战

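1. Environment Preparation

| Operating system | IP address | Hostname | Components |
| --- | --- | --- | --- |
| CentOS 7.5 | 192.168.147.137 | k8s-master | kubeadm, kubelet, kubectl, docker-ce |
| CentOS 7.5 | 192.168.147.139 | k8s-node01 | kubeadm, kubelet, kubectl, docker-ce |
| CentOS 7.5 | 192.168.147.140 | k8s-node02 | kubeadm, kubelet, kubectl, docker-ce |

Note: at least 2 CPU cores and 2 GB of memory are recommended for every host. kubeadm's preflight check rejects a master with fewer than 2 CPUs.

1.1 Host Initialization

Disable the firewall and SELinux on all hosts: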

[root@localhost ~]# setenforce 0

[root@localhost ~]# iptables -F

[root@localhost ~]# systemctl stop firewalld

[root@localhost ~]# systemctl disable firewalld

[root@localhost ~]# systemctl stop NetworkManager

[root@localhost ~]# systemctl disable NetworkManager

[root@localhost ~]# sed -i '/^SELINUX=/s/enforcing/disabled/' /etc/selinux/config

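Set the hostname and populate /etc/hosts. Each host gets its own hostname, and hostnamectl keeps it across reboots:

[root@client2 ~]# hostnamectl set-hostname k8s-master

[root@client2 ~]# bash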

[root@k8s-master ~]# cat << EOF >> /etc/hosts

> 192.168.147.137 k8s-master

> 192.168.147.139 k8s-node01

> 192.168.147.140 k8s-node02

> EOF

[root@k8s-master ~]# scp /etc/hosts 192.168.147.139:/etc/hosts

[root@k8s-master ~]# scp /etc/hosts 192.168.147.140:/etc/hosts

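Host initialization (all hosts): install basic tools, disable swap (the kubelet refuses to run with swap enabled by default) and make bridged traffic visible to iptables: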

[root@k8s-master ~]# yum -y install vim wget net-tools lrzsz

[root@k8s-master ~]# swapoff -a

[root@k8s-master ~]# sed -i '/swap/s/^/#/' /etc/fstab

[root@k8s-node01 ~]# cat << EOF >> /etc/sysctl.conf

net.bridge.bridge-nf-call-ip6tables = 1

net.bridge.bridge-nf-call-iptables = 1

EOF

[root@k8s-node01 ~]# modprobe br_netfilter

[root@k8s-node01 ~]# sysctl -p
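Before moving on, the preparation above can be double-checked on each host with a small script. This is a minimal sketch: the helper names `swap_active`, `sysctl_value` and `check_node_prereqs` are hypothetical. The script only reads `/proc/swaps` and `/proc/sys`, and calls `getenforce` only if it is installed.

```shell
#!/usr/bin/env bash
# Hypothetical pre-flight helpers for the host preparation in 1.1.

# Succeeds (exit 0) if swap is still active. /proc/swaps always starts with a
# header line, so any additional line is an active swap device.
swap_active() {
  local swaps_file="${1:-/proc/swaps}"
  [ "$(wc -l < "$swaps_file")" -gt 1 ]
}

# Prints a sysctl value by reading /proc/sys directly, e.g.
# net.bridge.bridge-nf-call-iptables -> /proc/sys/net/bridge/bridge-nf-call-iptables
sysctl_value() {
  local key="$1" root="${2:-/proc/sys}"
  cat "$root/${key//.//}" 2>/dev/null
}

# Reports every unmet requirement; returns non-zero if any check fails.
check_node_prereqs() {
  local rc=0 key
  if swap_active; then
    echo "FAIL: swap is still on (run swapoff -a)"; rc=1
  fi
  for key in net.bridge.bridge-nf-call-iptables net.bridge.bridge-nf-call-ip6tables; do
    if [ "$(sysctl_value "$key")" != "1" ]; then
      echo "FAIL: $key is not 1 (is br_netfilter loaded?)"; rc=1
    fi
  done
  if command -v getenforce >/dev/null 2>&1 && [ "$(getenforce)" = "Enforcing" ]; then
    echo "FAIL: SELinux is still Enforcing"; rc=1
  fi
  return "$rc"
}
```

Usage: `check_node_prereqs && echo "host ready"`. The script prints nothing but the final message when every check passes.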

1.2 Deploying Docker

Deploy Docker on all three hosts. With kubeadm 1.17, Kubernetes uses Docker as its container runtime.

[root@k8s-master ~]# wget -O /etc/yum.repos.d/CentOS-Base.repo http://mirrors.aliyun.com/repo/Centos-7.repo

[root@k8s-master ~]# yum install -y yum-utils device-mapper-persistent-data lvm2

When installing Docker with YUM, the Aliyun YUM repository is recommended. Aliyun's open-source mirror site is https://developer.aliyun.com/mirror/, where the Docker repository address can be found.

[root@k8s-master ~]# yum-config-manager --add-repo https://mirrors.aliyun.com/docker-ce/linux/centos/docker-ce.repo

[root@k8s-master ~]# yum clean all && yum makecache fast

[root@k8s-master ~]# yum -y install docker-ce

[root@k8s-master ~]# systemctl start docker

[root@k8s-master ~]# systemctl enable docker

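Registry mirrors (configure on all hosts)

Many images are hosted on servers outside China, and network problems often make pulls fail. It is therefore best to point Docker at a domestic registry mirror; many Chinese public cloud providers offer such a service. Example mirrors and the configuration:

https://dockerhub.azk8s.cn (Azure China mirror)

https://hub-mirror.c.163.com (NetEase mirror)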

[root@k8s-master ~]# cat << END > /etc/docker/daemon.json

{

"registry-mirrors":[ "https://nyakyfun.mirror.aliyuncs.com" ]

}

END

[root@k8s-master ~]# systemctl daemon-reload

[root@k8s-master ~]# systemctl restart docker

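Write the mirror address into /etc/docker/daemon.json; if the file does not exist, simply create it. As the extension indicates, daemon.json must be valid JSON, so write it carefully. A single mirror may become unavailable, so configuring two or more mirror addresses is recommended for redundancy and high availability.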
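Following that recommendation, here is a minimal sketch of a helper that writes several mirrors at once. `write_daemon_json` and the `DAEMON_JSON` override variable are hypothetical names. Like the heredoc above, it overwrites any existing daemon.json.

```shell
#!/usr/bin/env bash
# Hypothetical helper: writes a daemon.json listing several registry mirrors so
# Docker can fall back to another mirror when one is unavailable.
# The target path can be overridden with DAEMON_JSON (defaults to Docker's path).
write_daemon_json() {
  local target="${DAEMON_JSON:-/etc/docker/daemon.json}"
  local list="" mirror
  if [ "$#" -eq 0 ]; then
    echo "usage: write_daemon_json MIRROR_URL..." >&2
    return 1
  fi
  for mirror in "$@"; do
    # Join the URLs as a comma-separated list of JSON strings.
    list="${list:+$list, }\"$mirror\""
  done
  mkdir -p "$(dirname "$target")"
  printf '{\n  "registry-mirrors": [ %s ]\n}\n' "$list" > "$target"
}
```

Usage: run `write_daemon_json https://nyakyfun.mirror.aliyuncs.com https://hub-mirror.c.163.com`, then `systemctl daemon-reload && systemctl restart docker`.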

二、部署kubernetes集群

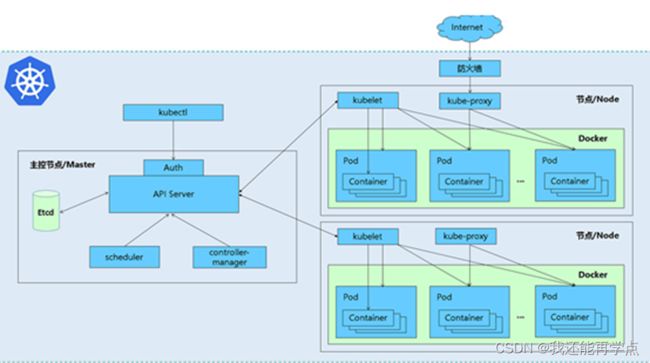

2.1、组件介绍

三个节点都需要安装下面三个组件

- kubeadm:安装工具,使所有的组件都会以容器的方式运行

- kubectl:客户端连接K8S API工具

- kubelet:运行在node节点,用来启动容器的工具

2.2、配置阿里云yum源

推荐使用阿里云的yum源安装:

[root@k8s-master ~]# cat << EOF > /etc/yum.repos.d/kubernetes.repo
[kubernetes]
name=Kubernetes
baseurl=https://mirrors.aliyun.com/kubernetes/yum/repos/kubernetes-el7-x86_64/
enabled=1
gpgcheck=1
repo_gpgcheck=1
gpgkey=https://mirrors.aliyun.com/kubernetes/yum/doc/yum-key.gpg https://mirrors.aliyun.com/kubernetes/yum/doc/rpm-package-key.gpg
EOF

[root@k8s-master ~]# ls /etc/yum.repos.d/

backup Centos-7.repo CentOS-Media.repo CentOS-x86_64-kernel.repo docker-ce.repo kubernetes.repo

2.3 Installing kubelet, kubeadm and kubectl

On all hosts:

Versions 1.17 and 1.19 both work; this guide uses 1.17.0.

[root@k8s-master ~]# yum install -y kubelet-1.17.0 kubeadm-1.17.0 kubectl-1.17.0

[root@k8s-master ~]# systemctl enable kubelet

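Right after installation, the kubelet cannot be started with systemctl start kubelet. It starts successfully only after the host joins a cluster or is initialized as the master.

If the GPG check of the repository index fails during installation, install with yum install -y --nogpgcheck kubelet-1.17.0 kubeadm-1.17.0 kubectl-1.17.0 instead.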

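2.4 Configuring init-config.yaml

Kubeadm offers many configuration options. Inside the cluster, the kubeadm configuration is stored in a ConfigMap. It can also be written to a file, which makes complex options easier to manage; the kubeadm config command writes the defaults to a file.

Perform the installation on the master node (192.168.147.137). Create the default init-config.yaml with:

[root@k8s-master ~]# kubeadm config print init-defaults > init-config.yaml

Besides writing the defaults to a file, kubeadm config provides several other common subcommands: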

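- kubeadm config view: show the configuration values stored in the current cluster.

- kubeadm config print join-defaults: print the default parameters for kubeadm join.

- kubeadm config images list: list the required images.

- kubeadm config images pull: pull the images to the local host.

- kubeadm config upload from-flags: generate the ConfigMap from command-line flags (deprecated; newer kubeadm releases use kubeadm init phase upload-config instead).

The edited init-config.yaml: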

[root@k8s-master ~]# cat init-config.yaml

apiVersion: kubeadm.k8s.io/v1beta2
bootstrapTokens:
- groups:
  - system:bootstrappers:kubeadm:default-node-token
  token: abcdef.0123456789abcdef
  ttl: 24h0m0s
  usages:
  - signing
  - authentication
kind: InitConfiguration
localAPIEndpoint:
  advertiseAddress: 192.168.147.137   # master node IP address
  bindPort: 6443
nodeRegistration:
  criSocket: /var/run/dockershim.sock
  name: k8s-master   # a hostname must be resolvable; an IP address also works
  taints:
  - effect: NoSchedule
    key: node-role.kubernetes.io/master
---
apiServer:
  timeoutForControlPlane: 4m0s
apiVersion: kubeadm.k8s.io/v1beta2
certificatesDir: /etc/kubernetes/pki
clusterName: kubernetes
controllerManager: {}
dns:
  type: CoreDNS
etcd:
  local:
    dataDir: /var/lib/etcd   # local directory mounted into the etcd container
imageRepository: registry.aliyuncs.com/google_containers   # changed to a mirror reachable from China
kind: ClusterConfiguration
kubernetesVersion: v1.17.0
networking:
  dnsDomain: cluster.local
  serviceSubnet: 10.96.0.0/12
  podSubnet: 10.244.0.0/16   # added: Pod network CIDR (flannel's default network)
scheduler: {}
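The three edits marked in the file above (advertiseAddress, imageRepository and the added podSubnet) can also be applied non-interactively. This is a sketch that assumes GNU sed (the CentOS default) and the layout printed by kubeadm config print init-defaults; `customize_init_config` is a hypothetical name.

```shell
#!/usr/bin/env bash
# Hypothetical helper that applies the edits from 2.4 to the default config.
#   $1 = config file, $2 = master IP,
#   $3 = image repository, $4 = Pod CIDR (defaults match this guide)
customize_init_config() {
  local file="$1" ip="$2"
  local repo="${3:-registry.aliyuncs.com/google_containers}"
  local pod_cidr="${4:-10.244.0.0/16}"
  # Replace the values of advertiseAddress and imageRepository, keeping indentation.
  sed -i \
    -e "s|^\([[:space:]]*advertiseAddress:\).*|\1 $ip|" \
    -e "s|^\([[:space:]]*imageRepository:\).*|\1 $repo|" \
    "$file"
  # Add podSubnet right after serviceSubnet with the same indentation, only once.
  if ! grep -q '^[[:space:]]*podSubnet:' "$file"; then
    sed -i "s|^\([[:space:]]*\)serviceSubnet:.*|&\n\1podSubnet: $pod_cidr|" "$file"
  fi
}
```

Usage: `customize_init_config init-config.yaml 192.168.147.137`. Running it twice leaves the file unchanged the second time.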

2.5 Installing the Master Node

Pull the required images:

[root@k8s-master ~]# kubeadm config images list --config init-config.yaml

W0817 09:27:02.869422 20103 validation.go:28] Cannot validate kube-proxy config - no validator is available

W0817 09:27:02.869465 20103 validation.go:28] Cannot validate kubelet config - no validator is available

registry.aliyuncs.com/google_containers/kube-apiserver:v1.17.0

registry.aliyuncs.com/google_containers/kube-controller-manager:v1.17.0

registry.aliyuncs.com/google_containers/kube-scheduler:v1.17.0

registry.aliyuncs.com/google_containers/kube-proxy:v1.17.0

registry.aliyuncs.com/google_containers/pause:3.1

registry.aliyuncs.com/google_containers/etcd:3.4.3-0

registry.aliyuncs.com/google_containers/coredns:1.6.5

[root@k8s-master ~]# kubeadm config images pull --config init-config.yaml

W0817 09:27:46.519233 20108 validation.go:28] Cannot validate kube-proxy config - no validator is available

W0817 09:27:46.519271 20108 validation.go:28] Cannot validate kubelet config - no validator is available

[config/images] Pulled registry.aliyuncs.com/google_containers/kube-apiserver:v1.17.0

[config/images] Pulled registry.aliyuncs.com/google_containers/kube-controller-manager:v1.17.0

[config/images] Pulled registry.aliyuncs.com/google_containers/kube-scheduler:v1.17.0

[config/images] Pulled registry.aliyuncs.com/google_containers/kube-proxy:v1.17.0

[config/images] Pulled registry.aliyuncs.com/google_containers/pause:3.1

[config/images] Pulled registry.aliyuncs.com/google_containers/etcd:3.4.3-0

[config/images] Pulled registry.aliyuncs.com/google_containers/coredns:1.6.5

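Initialize the master node:

[root@k8s-master ~]# kubeadm init --config=init-config.yaml   # initialize the cluster, then follow the instructions it prints

By default, kubectl looks for a config file in the .kube directory under the current user's home directory. The following commands copy the admin.conf generated during the [kubeconfig] phase of initialization to $HOME/.kube/config: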

[root@k8s-master ~]# mkdir -p $HOME/.kube

[root@k8s-master ~]# cp -i /etc/kubernetes/admin.conf $HOME/.kube/config

[root@k8s-master ~]# chown $(id -u):$(id -g) $HOME/.kube/config

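kubeadm init performs the following main steps:

- [init]: start initialization for the specified version.

- [preflight]: run pre-initialization checks and pull the required Docker images.

- [kubelet-start]: generate the kubelet configuration file /var/lib/kubelet/config.yaml. The kubelet cannot start without this file, which is why it fails to start before initialization.

- [certificates]: generate the certificates used by Kubernetes and store them in /etc/kubernetes/pki.

- [kubeconfig]: generate the kubeconfig files in /etc/kubernetes; the components use them to communicate with each other.

- [control-plane]: install the master components from the YAML files in /etc/kubernetes/manifests.

- [etcd]: install the etcd service from /etc/kubernetes/manifests/etcd.yaml.

- [wait-control-plane]: wait for the master components deployed by the control-plane phase to start.

- [apiclient]: check the status of the master components.

- [uploadconfig]: upload the configuration.

- [kubelet]: configure the kubelet through a ConfigMap.

- [patchnode]: record the CRI socket information on the Node object as an annotation.

- [mark-control-plane]: label the current node with the master role and add a NoSchedule taint, so that by default no Pods are scheduled on the master.

- [bootstrap-token]: generate a token. Note it down, because kubeadm join needs it later to add nodes to the cluster.

- [addons]: install the CoreDNS and kube-proxy add-ons.

A kubeadm installation does not include a network plugin, so the cluster has no Pod networking right after initialization. For example, kubectl get nodes on k8s-master shows every node as NotReady, and the CoreDNS Pods cannot serve requests.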

2.6 Installing the Worker Nodes

On each node, run the kubeadm join command printed at the end of the master initialization:

[root@k8s-node01 ~]# kubeadm join 192.168.147.137:6443 --token abcdef.0123456789abcdef --discovery-token-ca-cert-hash sha256:d3d51c71ca07191581fff8a91c70fbfb5ffe9c620eafa71e6e256b6fb0ea3488
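The bootstrap token in init-config.yaml has a ttl of 24h0m0s, so this join command stops working a day after kubeadm init. On the master, `kubeadm token create --print-join-command` prints a fresh join command. The `--discovery-token-ca-cert-hash` value can also be recomputed from the cluster CA with the openssl pipeline given in the kubeadm documentation. Below, that pipeline is wrapped in a hypothetical function `ca_cert_hash`; it assumes an RSA CA key, which is kubeadm's default.

```shell
#!/usr/bin/env bash
# Recomputes the hex value used after "sha256:" in --discovery-token-ca-cert-hash:
# the SHA-256 digest of the CA certificate's DER-encoded public key.
ca_cert_hash() {
  local crt="${1:-/etc/kubernetes/pki/ca.crt}"
  openssl x509 -pubkey -in "$crt" \
    | openssl rsa -pubin -outform der 2>/dev/null \
    | openssl dgst -sha256 -hex \
    | sed 's/^.* //'
}
```

Usage on the master: `echo "sha256:$(ca_cert_hash)"`.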

[root@k8s-master ~]# kubectl get nodes

NAME STATUS ROLES AGE VERSION

k8s-master NotReady master 100s v1.17.0

k8s-node01 NotReady <none> 30s v1.17.0

k8s-node02 NotReady <none> 25s v1.17.0

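As mentioned above, the master was initialized without any network configuration. It therefore cannot communicate properly with the nodes, and every node is NotReady. The nodes added with kubeadm join are nevertheless already visible on k8s-master.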
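The output of kubectl get nodes can be filtered to see which nodes are still NotReady, for example while waiting for flannel in 2.7. `not_ready_nodes` below is a hypothetical helper. Alternatively, `kubectl wait --for=condition=Ready node --all --timeout=300s` simply blocks until every node reports Ready.

```shell
#!/usr/bin/env bash
# Hypothetical helper: reads `kubectl get nodes` output on stdin and prints the
# names of nodes whose STATUS is not Ready. A cordoned node shows
# "Ready,SchedulingDisabled" and is still counted as Ready.
not_ready_nodes() {
  awk 'NR > 1 && $2 != "Ready" && $2 !~ /^Ready,/ { print $1 }'
}
```

Usage: `kubectl get nodes | not_ready_nodes`. An empty result means every node is Ready.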

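2.7 Installing flannel and CNI

The master node is NotReady because no network plugin is installed yet, so the connection between the nodes and the master is not fully working. The most popular Kubernetes network plugins are Flannel, Calico, Canal and Weave; this guide uses Flannel.

On all hosts:

Upload kube-flannel.yml to the master, and upload flannel_v0.12.0-amd64.tar and cni-plugins-linux-amd64-v0.8.6.tgz to every host. Then load the flannel image, extract the CNI plugins and copy the flannel binary into /opt/cni/bin: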

[root@k8s-master ~]# docker load < flannel_v0.12.0-amd64.tar

256a7af3acb1: Loading layer 5.844MB/5.844MB

d572e5d9d39b: Loading layer 10.37MB/10.37MB

57c10be5852f: Loading layer 2.249MB/2.249MB

7412f8eefb77: Loading layer 35.26MB/35.26MB

05116c9ff7bf: Loading layer 5.12kB/5.12kB

Loaded image: quay.io/coreos/flannel:v0.12.0-amd64

[root@k8s-master ~]# tar xf cni-plugins-linux-amd64-v0.8.6.tgz

[root@k8s-master ~]# cp flannel /opt/cni/bin/

On the master, create the flannel resources from kube-flannel.yml:

[root@k8s-master ~]# kubectl apply -f kube-flannel.yml

podsecuritypolicy.policy/psp.flannel.unprivileged created

clusterrole.rbac.authorization.k8s.io/flannel created

clusterrolebinding.rbac.authorization.k8s.io/flannel created

serviceaccount/flannel created

configmap/kube-flannel-cfg created

daemonset.apps/kube-flannel-ds-amd64 created

daemonset.apps/kube-flannel-ds-arm64 created

daemonset.apps/kube-flannel-ds-arm created

daemonset.apps/kube-flannel-ds-ppc64le created

daemonset.apps/kube-flannel-ds-s390x created

[root@k8s-master ~]# kubectl get nodes

NAME STATUS ROLES AGE VERSION

k8s-master Ready master 5m6s v1.17.0

k8s-node01 Ready <none> 3m56s v1.17.0

k8s-node02 Ready <none> 3m51s v1.17.0

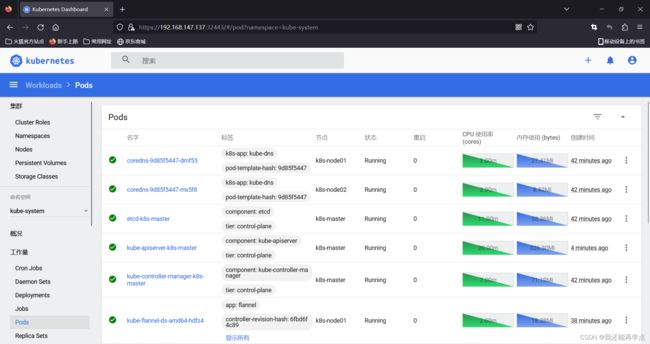

[root@k8s-master ~]# kubectl get pods -n kube-system

NAME READY STATUS RESTARTS AGE

coredns-9d85f5447-dmf55 1/1 Running 0 16m

coredns-9d85f5447-mx5f8 1/1 Running 0 16m

etcd-k8s-master 1/1 Running 0 16m

kube-apiserver-k8s-master 1/1 Running 0 16m

kube-controller-manager-k8s-master 1/1 Running 0 16m

kube-flannel-ds-amd64-hdfz4 1/1 Running 0 11m

kube-flannel-ds-amd64-td6jh 1/1 Running 0 11m

kube-flannel-ds-amd64-wggsc 1/1 Running 0 11m

kube-proxy-8tscv 1/1 Running 0 15m

kube-proxy-sf5qz 1/1 Running 0 16m

kube-proxy-z54n6 1/1 Running 0 15m

kube-scheduler-k8s-master 1/1 Running 0 16m

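2.8 Node Management Commands

The following commands are for reference only; there is no need to run them.

Reset the master and node configuration:

[root@k8s-master ~]# kubeadm reset

Remove a node from the cluster (nodes are registered under their hostnames, e.g. k8s-node01), then clean up the node itself:

[root@k8s-master ~]# kubectl delete node k8s-node01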

[root@k8s-node01 ~]# docker rm -f $(docker ps -aq)

[root@k8s-node01 ~]# systemctl stop kubelet

[root@k8s-node01 ~]# rm -rf /etc/kubernetes/*

[root@k8s-node01 ~]# rm -rf /var/lib/kubelet/*

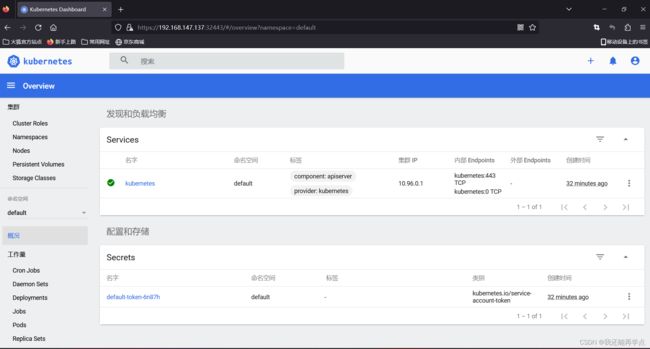

三、安装Dashboard UI

3.1、部署Dashboard

dashboard的github仓库地址:https://github.com/kubernetes/dashboard

代码仓库当中,有给出安装示例的相关部署文件,我们可以直接获取之后,直接部署即可

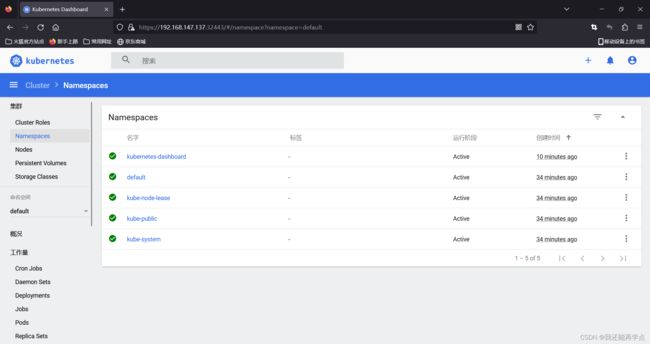

[root@k8s-master ~]# wget https://raw.githubusercontent.com/kubernetes/dashboard/master/aio/deploy/recommended.yaml默认这个部署文件当中,会单独创建一个名为kubernetes-dashboard的命名空间,并将kubernetes-dashboard部署在该命名空间下。dashboard的镜像来自docker hub官方,所以可不用修改镜像地址,直接从官方获取即可。

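3.2 Exposing the Port

By default the Dashboard is not reachable from outside the cluster. To keep things simple, expose it with a NodePort by editing the Service definition.

Pull the images on all hosts: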

[root@k8s-master ~]# docker pull kubernetesui/dashboard:v2.0.0

v2.0.0: Pulling from kubernetesui/dashboard

2a43ce254c7f: Pull complete

Digest: sha256:06868692fb9a7f2ede1a06de1b7b32afabc40ec739c1181d83b5ed3eb147ec6e

Status: Downloaded newer image for kubernetesui/dashboard:v2.0.0

docker.io/kubernetesui/dashboard:v2.0.0

[root@k8s-master ~]# docker pull kubernetesui/metrics-scraper:v1.0.4

v1.0.4: Pulling from kubernetesui/metrics-scraper

07008dc53a3e: Pull complete

1f8ea7f93b39: Pull complete

04d0e0aeff30: Pull complete

Digest: sha256:555981a24f184420f3be0c79d4efb6c948a85cfce84034f85a563f4151a81cbf

Status: Downloaded newer image for kubernetesui/metrics-scraper:v1.0.4

docker.io/kubernetesui/metrics-scraper:v1.0.4

[root@k8s-master ~]# vim recommended.yaml

# Service "kubernetes-dashboard" (around line 39 of the v2.0.0 file)
spec:
  type: NodePort        # added
  ports:
    - port: 443
      targetPort: 8443
      nodePort: 32443   # added
  selector:
    k8s-app: kubernetes-dashboard

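3.3 Permission Configuration

Give the Dashboard's service account superuser rights. In the ClusterRoleBinding named kubernetes-dashboard (around line 164), change the roleRef name from kubernetes-dashboard to cluster-admin:

[root@k8s-master ~]# vim recommended.yaml

  name: cluster-admin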

[root@k8s-master ~]# kubectl apply -f recommended.yaml

namespace/kubernetes-dashboard created

serviceaccount/kubernetes-dashboard created

service/kubernetes-dashboard created

secret/kubernetes-dashboard-certs created

secret/kubernetes-dashboard-csrf created

secret/kubernetes-dashboard-key-holder created

configmap/kubernetes-dashboard-settings created

role.rbac.authorization.k8s.io/kubernetes-dashboard created

clusterrole.rbac.authorization.k8s.io/kubernetes-dashboard created

rolebinding.rbac.authorization.k8s.io/kubernetes-dashboard created

clusterrolebinding.rbac.authorization.k8s.io/kubernetes-dashboard created

deployment.apps/kubernetes-dashboard created

service/dashboard-metrics-scraper created

deployment.apps/dashboard-metrics-scraper created

[root@k8s-master ~]# kubectl get pods -n kubernetes-dashboard

NAME READY STATUS RESTARTS AGE

dashboard-metrics-scraper-c79c65bb7-k9xv9 1/1 Running 0 5m40s

kubernetes-dashboard-56484d4c5-dkfgm 1/1 Running 0 5m40s

[root@k8s-master ~]# kubectl get pods -A -o wide

NAMESPACE NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES

kube-system coredns-9d85f5447-dmf55 1/1 Running 0 29m 10.244.1.2 k8s-node01 <none> <none>

kube-system coredns-9d85f5447-mx5f8 1/1 Running 0 29m 10.244.2.2 k8s-node02 <none> <none>

kube-system etcd-k8s-master 1/1 Running 0 30m 192.168.147.137 k8s-master <none> <none>

kube-system kube-apiserver-k8s-master 1/1 Running 0 30m 192.168.147.137 k8s-master <none> <none>

kube-system kube-controller-manager-k8s-master 1/1 Running 0 30m 192.168.147.137 k8s-master <none> <none>

kube-system kube-flannel-ds-amd64-hdfz4 1/1 Running 0 25m 192.168.147.139 k8s-node01 <none> <none>

kube-system kube-flannel-ds-amd64-td6jh 1/1 Running 0 25m 192.168.147.140 k8s-node02 <none> <none>

kube-system kube-flannel-ds-amd64-wggsc 1/1 Running 0 25m 192.168.147.137 k8s-master <none> <none>

kube-system kube-proxy-8tscv 1/1 Running 0 28m 192.168.147.140 k8s-node02 <none> <none>

kube-system kube-proxy-sf5qz 1/1 Running 0 29m 192.168.147.137 k8s-master <none> <none>

kube-system kube-proxy-z54n6 1/1 Running 0 29m 192.168.147.139 k8s-node01 <none> <none>

kube-system kube-scheduler-k8s-master 1/1 Running 0 30m 192.168.147.137 k8s-master <none> <none>

kubernetes-dashboard dashboard-metrics-scraper-c79c65bb7-k9xv9 1/1 Running 0 6m6s 10.244.2.3 k8s-node02 <none> <none>

kubernetes-dashboard kubernetes-dashboard-56484d4c5-dkfgm 1/1 Running 0 6m6s 10.244.1.3 k8s-node01 <none> <none>

3.4、访问Token配置

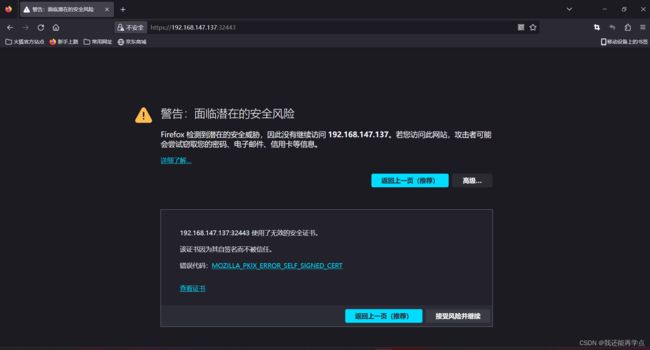

使用谷歌浏览器测试访问 https://192.168.147.137:32443

接受风险并继续

可以看到出现如上图画面,需要我们输入一个kubeconfig文件或者一个token。事实上在安装dashboard时,也为我们默认创建好了一个serviceaccount,为kubernetes-dashboard,并为其生成好了token,我们可以通过如下指令获取该sa的token:

[root@k8s-master ~]# kubectl describe secret -n kubernetes-dashboard $(kubectl get secret -n kubernetes-dashboard |grep kubernetes-dashboard-token | awk '{print $1}') |grep token | awk '{print $2}'

kubernetes-dashboard-token-kxcj9

kubernetes.io/service-account-token

eyJhbGciOiJSUzI1NiIsImtpZCI6IkxfVmx1aDJVblJzODJiZFFlcG8zX0ZhbFpqTXlZOEhLMlhPcDJFdDVkeGsifQ.eyJpc3MiOiJrdWJlcm5ldGVzL3NlcnZpY2VhY2NvdW50Iiwia3ViZXJuZXRlcy5pby9zZXJ2aWNlYWNjb3VudC9uYW1lc3BhY2UiOiJrdWJlcm5ldGVzLWRhc2hib2FyZCIsImt1YmVybmV0ZXMuaW8vc2VydmljZWFjY291bnQvc2VjcmV0Lm5hbWUiOiJrdWJlcm5ldGVzLWRhc2hib2FyZC10b2tlbi1reGNqOSIsImt1YmVybmV0ZXMuaW8vc2VydmljZWFjY291bnQvc2VydmljZS1hY2NvdW50Lm5hbWUiOiJrdWJlcm5ldGVzLWRhc2hib2FyZCIsImt1YmVybmV0ZXMuaW8vc2VydmljZWFjY291bnQvc2VydmljZS1hY2NvdW50LnVpZCI6ImYzMGMxMGMxLWM2MGQtNGZhMi04NzBkLTg4OTk1NjI3ZjU4ZCIsInN1YiI6InN5c3RlbTpzZXJ2aWNlYWNjb3VudDprdWJlcm5ldGVzLWRhc2hib2FyZDprdWJlcm5ldGVzLWRhc2hib2FyZCJ9.fqUzKZ0n4gWM2pZc2Eyl7AfVQ5NLEQm5GqYvbmsWl2BO5OYuNZr_9LOWZmjzstz4MwqdFktf4C8FYhqZ52DsqAxdXSu1Ob8dVFFgX4cUvI7okSUW1rZQXgheKDC_NZQKWtYRfBbtb86Nk1sfdy2xtKs4KPQA7h-XH1HO3MOvo7yd_yRpv8QkVnwGuJCjgtdgqXSpH20Sqg2FfL4wFi5fhwkskQaNPiwYRnQH24DS6BEZBWEE22BxuLyZm76dge8xEizYmzXwvvhY7EFv6P4U3LV7JENq-6oNFYzlT0o5XXBtHZyO_H78VfCYYnLUg-6nHzNR0oUwfG-i4VXNWLIPrQ
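An alternative sketch reads the token through jsonpath instead of parsing describe output. It assumes, as on this 1.17 cluster, that a token Secret is created automatically for every ServiceAccount. From Kubernetes 1.24 on this is no longer the case, and `kubectl -n kubernetes-dashboard create token kubernetes-dashboard` is the replacement. `dashboard_token` is a hypothetical name.

```shell
#!/usr/bin/env bash
# Prints the decoded JWT of a ServiceAccount's auto-created token Secret.
#   $1 = namespace, $2 = ServiceAccount (both default to kubernetes-dashboard)
dashboard_token() {
  local ns="${1:-kubernetes-dashboard}" sa="${2:-kubernetes-dashboard}" secret
  # The ServiceAccount lists its token Secret under .secrets.
  secret=$(kubectl -n "$ns" get serviceaccount "$sa" -o jsonpath='{.secrets[0].name}') || return 1
  # Secret data is base64-encoded; decode it to get the bearer token.
  kubectl -n "$ns" get secret "$secret" -o jsonpath='{.data.token}' | base64 -d
  echo
}
```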

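4. Deploying metrics-server

4.1 Pulling the Image on the Nodes

Heapster has been replaced by metrics-server, the component that collects resource metrics (CPU and memory) in Kubernetes.

On every node, pull a mirror copy of the image and retag it with the k8s.gcr.io name used in components.yaml (k8s.gcr.io is often unreachable from China):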

[root@k8s-node02 ~]# docker pull bluersw/metrics-server-amd64:v0.3.6

v0.3.6: Pulling from bluersw/metrics-server-amd64

e8d8785a314f: Pull complete

b2f4b24bed0d: Pull complete

Digest: sha256:c9c4e95068b51d6b33a9dccc61875df07dc650abbf4ac1a19d58b4628f89288b

Status: Downloaded newer image for bluersw/metrics-server-amd64:v0.3.6

docker.io/bluersw/metrics-server-amd64:v0.3.6

[root@k8s-node02 ~]# docker tag bluersw/metrics-server-amd64:v0.3.6 k8s.gcr.io/metrics-server-amd64:v0.3.6

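4.2 Modifying the Kubernetes apiserver Startup Parameters

Add the following option to the kube-apiserver command. kube-apiserver runs as a static Pod, so the kubelet restarts it automatically once the manifest is saved.

Add one line to the command list (around line 40):

[root@k8s-master ~]# vim /etc/kubernetes/manifests/kube-apiserver.yaml

    - --enable-aggregator-routing=true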
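For scripted setups, the same manifest edit can be made without an editor. The following minimal sketch assumes GNU sed; `insert_arg_after` is a hypothetical helper. The anchor is used inside a sed regex, so it must not contain `/` or other regex metacharacters. Because `sed -i` is called without a suffix, no backup file is left behind in /etc/kubernetes/manifests.

```shell
#!/usr/bin/env bash
# Hypothetical helper: adds a flag to a YAML list right after the line that
# matches an anchor, reusing that line's indentation. Does nothing if the
# flag is already present.
#   $1 = file, $2 = anchor text (e.g. "- kube-apiserver"), $3 = flag to add
insert_arg_after() {
  local file="$1" anchor="$2" flag="$3"
  if grep -qF -- "- $flag" "$file"; then
    return 0
  fi
  # \1 captures the anchor's leading whitespace; \n starts the new list item.
  sed -i "s/^\([[:space:]]*\)$anchor\$/&\n\1- $flag/" "$file"
}
```

Usage: `insert_arg_after /etc/kubernetes/manifests/kube-apiserver.yaml '- kube-apiserver' '--enable-aggregator-routing=true'`. The same helper should also cover the edit in 4.3, e.g. `insert_arg_after components.yaml '- --secure-port=4443' '--kubelet-insecure-tls'`. This assumes the v0.3.6 manifest contains that `--secure-port` line.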

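4.3 Deploying on the Master

[root@k8s-master ~]# wget https://github.com/kubernetes-sigs/metrics-server/releases/download/v0.3.6/components.yaml

Edit the manifest:

[root@k8s-master ~]# vim components.yaml

Add two lines to the args of the metrics-server container (around lines 91-92). The first makes metrics-server reach each kubelet through the node's InternalIP; the second skips verification of the kubelet's self-signed serving certificate.

          - --kubelet-preferred-address-types=InternalIP
          - --kubelet-insecure-tls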

[root@k8s-master ~]# kubectl create -f components.yaml

clusterrole.rbac.authorization.k8s.io/system:aggregated-metrics-reader created

clusterrolebinding.rbac.authorization.k8s.io/metrics-server:system:auth-delegator created

rolebinding.rbac.authorization.k8s.io/metrics-server-auth-reader created

apiservice.apiregistration.k8s.io/v1beta1.metrics.k8s.io created

serviceaccount/metrics-server created

deployment.apps/metrics-server created

service/metrics-server created

clusterrole.rbac.authorization.k8s.io/system:metrics-server created

clusterrolebinding.rbac.authorization.k8s.io/system:metrics-server created

Wait 1-2 minutes, then check the result:

[root@k8s-master ~]# kubectl top nodes

NAME CPU(cores) CPU% MEMORY(bytes) MEMORY%

k8s-master 67m 3% 1066Mi 62%

k8s-node01 26m 1% 846Mi 49%

k8s-node02 29m 1% 808Mi 47%

Back in the Dashboard, CPU and memory usage are now displayed.
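Instead of waiting a fixed 1-2 minutes, you can wait until the aggregated metrics API registered by components.yaml (v1beta1.metrics.k8s.io) reports Available. This is a sketch; `wait_for_metrics_api` is a hypothetical name. If the wait times out, `kubectl -n kube-system logs deploy/metrics-server` is a good first place to look.

```shell
#!/usr/bin/env bash
# Blocks until the metrics APIService is Available, then shows node usage.
#   $1 = timeout passed to kubectl wait (default 180s)
wait_for_metrics_api() {
  local timeout="${1:-180s}"
  kubectl wait --for=condition=Available apiservice/v1beta1.metrics.k8s.io --timeout="$timeout" \
    && kubectl top nodes
}
```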

五、弹性伸缩

5.1、弹性伸缩介绍

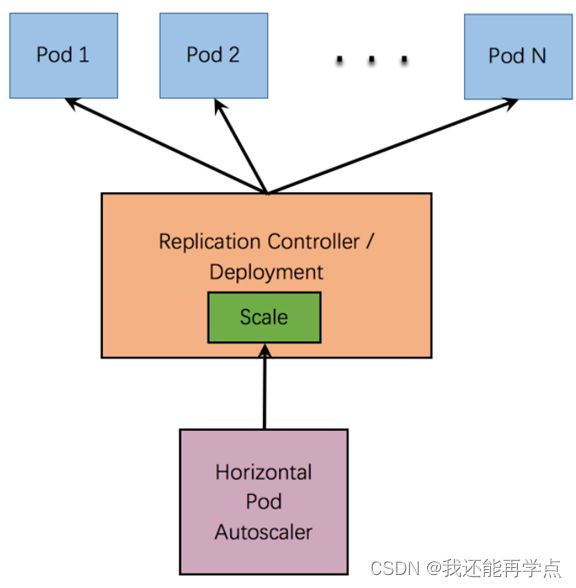

HPA(Horizontal Pod Autoscaler,Pod水平自动伸缩)的操作对象是replication controller, deployment, replica set, stateful set 中的pod数量。注意,Horizontal Pod Autoscaling不适用于无法伸缩的对象,例如DaemonSets。

HPA根据观察到的CPU使用量与用户的阈值进行比对,做出是否需要增减实例(Pods)数量的决策。控制器会定期调整副本控制器或部署中副本的数量,以使观察到的平均CPU利用率与用户指定的目标相匹配。

5.2、弹性伸缩工作原理

Horizontal Pod Autoscaler 会实现为一个控制循环,其周期由--horizontal-pod-autoscaler-sync-period选项指定(默认15秒)。

在每个周期内,controller manager都会根据每个HorizontalPodAutoscaler定义的指定的指标去查询资源利用率。 controller manager从资源指标API(针对每个pod资源指标)或自定义指标API(针对所有其他指标)获取指标。

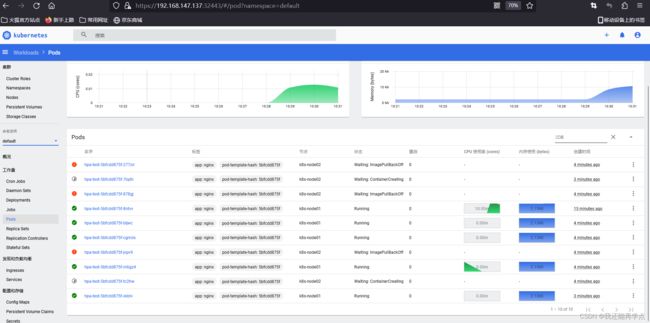
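The Kubernetes HPA documentation states the core scaling rule as desiredReplicas = ceil[currentReplicas × (currentMetricValue / desiredMetricValue)]. No change is made when the ratio is within a tolerance of 0.1 (the default). Below is a sketch of that arithmetic; `hpa_desired_replicas` is hypothetical, not the controller's actual code. It leaves out the controller's scale-up rate limits, the scale-down stabilization window, and the special handling of unready Pods or missing metrics.

```shell
#!/usr/bin/env bash
# desired = ceil(replicas * current / target), unchanged within the 10%
# tolerance, then clamped to [min, max]. Utilizations are whole percentages.
#   $1 = current replicas, $2 = current utilization %, $3 = target %,
#   $4 = minReplicas, $5 = maxReplicas
hpa_desired_replicas() {
  local replicas="$1" current="$2" target="$3" min="$4" max="$5"
  local diff=$(( current - target )) desired
  if (( diff < 0 )); then diff=$(( -diff )); fi
  if (( 10 * diff <= target )); then
    # |current/target - 1| <= 0.1: within tolerance, keep the replica count
    desired="$replicas"
  else
    # ceil(a / b) for non-negative a and positive b is (a + b - 1) / b
    desired=$(( (replicas * current + target - 1) / target ))
  fi
  if (( desired < min )); then desired="$min"; fi
  if (( desired > max )); then desired="$max"; fi
  echo "$desired"
}
```

For example, `hpa_desired_replicas 1 110 5 1 10` prints 10. The unclamped value is ceil(1 × 110 / 5) = 22, which is capped at maxReplicas; this matches the 10 replicas reached in the test below.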

5.3 Autoscaling in Practice

[root@k8s-master ~]# mkdir hpa

[root@k8s-master ~]# cd hpa

Create a Deployment for the HPA test application:

[root@k8s-master hpa]# vim nginx.yaml

apiVersion: apps/v1
kind: Deployment
metadata:
  name: hpa-test
  labels:
    app: hpa
spec:
  replicas: 1
  selector:
    matchLabels:
      app: nginx
  template:
    metadata:
      labels:
        app: nginx
    spec:
      containers:
      - name: nginx
        image: nginx:1.19.6
        ports:
        - containerPort: 80
        resources:
          requests:
            cpu: 0.010
            memory: 100Mi
          limits:
            cpu: 0.010
            memory: 100Mi

The container requests (and is limited to) 0.010 CPU cores (10m) and 100Mi of memory.
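HPA utilization is measured against the CPU request, not the node's capacity. With a 10m request, the 5% target used below corresponds to an average of 5% × 10m = 0.5m of CPU per Pod, which a curl loop exceeds easily. For example, 11m of usage is already 110%, the value seen later in this test. The helpers below, with hypothetical names, sketch this arithmetic; they handle whole millicores only.

```shell
#!/usr/bin/env bash
# Converts a CPU quantity ("0.010", "10m", "1") to whole millicores.
cpu_to_millicores() {
  case "$1" in
    *m) echo "${1%m}" ;;                                          # already millicores
    *)  awk -v c="$1" 'BEGIN { printf "%d\n", c * 1000 + 0.5 }' ;; # cores -> millicores, rounded
  esac
}

# Prints CPU utilization in percent (rounded down) as HPA defines it:
# usage divided by the container's CPU request.
cpu_utilization_percent() {
  local used req
  used=$(cpu_to_millicores "$1")
  req=$(cpu_to_millicores "$2")
  echo $(( used * 100 / req ))
}
```

Usage: `cpu_utilization_percent 11m 0.010` prints 110.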

[root@k8s-master hpa]# kubectl apply -f nginx.yaml

deployment.apps/hpa-test created

[root@k8s-master hpa]# kubectl get pod

NAME READY STATUS RESTARTS AGE

hpa-test-5bfcdd875f-8ntvv 1/1 Running 0 2m16s

Create the HPA policy (minimum 1 and maximum 10 replicas, target CPU utilization 5%):

[root@k8s-master hpa]# kubectl autoscale --max=10 --min=1 --cpu-percent=5 deployment hpa-test

horizontalpodautoscaler.autoscaling/hpa-test autoscaled

[root@k8s-master hpa]# kubectl get hpa

NAME REFERENCE TARGETS MINPODS MAXPODS REPLICAS AGE

hpa-test Deployment/hpa-test <unknown>/5% 1 10 0 7s
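The kubectl autoscale command above can also be expressed declaratively. This is a minimal sketch assuming the autoscaling/v1 API, which supports only a CPU utilization target; `hpa_manifest` is a hypothetical helper that prints the manifest.

```shell
#!/usr/bin/env bash
# Prints an autoscaling/v1 HorizontalPodAutoscaler for a Deployment.
#   $1 = Deployment name, $2 = minReplicas, $3 = maxReplicas, $4 = target CPU %
hpa_manifest() {
  cat <<EOF
apiVersion: autoscaling/v1
kind: HorizontalPodAutoscaler
metadata:
  name: $1
spec:
  scaleTargetRef:
    apiVersion: apps/v1
    kind: Deployment
    name: $1
  minReplicas: $2
  maxReplicas: $3
  targetCPUUtilizationPercentage: $4
EOF
}
```

Usage: `hpa_manifest hpa-test 1 10 5 | kubectl apply -f -` creates the same policy as the command above.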

Simulate load (stress test):

[root@k8s-master hpa]# kubectl get pod -o wide

NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES

hpa-test-5bfcdd875f-bh849 1/1 Running 0 7m57s 10.244.2.5 k8s-node02 <none> <none>

hpa-test-5bfcdd875f-hcnv8 1/1 Running 0 7m57s 10.244.1.4 k8s-node01 <none> <none>

hpa-test-5bfcdd875f-l4fj7 1/1 Running 0 7m57s 10.244.2.4 k8s-node02 <none> <none>
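The curl loops in this section target a single Pod IP, so only that one Pod receives load. The following sketch spreads requests across all replicas through a ClusterIP Service instead. It assumes the Service is named hpa-test (the default name kubectl expose gives it) and that the function runs on a cluster node; `load_via_service` is a hypothetical name.

```shell
#!/usr/bin/env bash
# Sends N requests to the hpa-test Deployment through a ClusterIP Service.
#   $1 = number of requests (default 100000)
load_via_service() {
  local requests="${1:-100000}" ip i
  # Create the Service once; ignore the error if it already exists.
  kubectl expose deployment hpa-test --port=80 --target-port=80 2>/dev/null || true
  ip=$(kubectl get service hpa-test -o jsonpath='{.spec.clusterIP}') || return 1
  for (( i = 0; i < requests; i++ )); do
    curl -s -o /dev/null "http://$ip/"
  done
}
```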

[root@k8s-master ~]# while true;do curl -I 10.244.2.5 ;done

Watch the resource usage and the scaling behavior:

[root@k8s-master ~]# kubectl get hpa

NAME REFERENCE TARGETS MINPODS MAXPODS REPLICAS AGE

hpa-test Deployment/hpa-test 0%/5% 1 10 3 5m15s

[root@k8s-master ~]# kubectl get pod -o wide

NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES

hpa-test-5bfcdd875f-8ntvv 1/1 Running 0 9m31s 10.244.1.4 k8s-node01 <none> <none>

[root@k8s-master ~]# while true;do curl -I 10.244.1.4 ;done

Watch the resource usage and the scaling behavior:

[root@k8s-master ~]# kubectl get hpa

NAME REFERENCE TARGETS MINPODS MAXPODS REPLICAS AGE

hpa-test Deployment/hpa-test 110%/5% 1 10 10 8m18s

[root@k8s-master ~]# kubectl get pod

NAME READY STATUS RESTARTS AGE

hpa-test-5bfcdd875f-277zn 0/1 ImagePullBackOff 0 4m23s

hpa-test-5bfcdd875f-7lqdh 0/1 ContainerCreating 0 4m7s

hpa-test-5bfcdd875f-878gj 0/1 ImagePullBackOff 0 4m38s

hpa-test-5bfcdd875f-8ntvv 1/1 Running 0 15m

hpa-test-5bfcdd875f-bljwc 1/1 Running 0 4m23s

hpa-test-5bfcdd875f-cgmdx 1/1 Running 0 4m38s

hpa-test-5bfcdd875f-jvpv9 0/1 ImagePullBackOff 0 4m38s

hpa-test-5bfcdd875f-m6gz4 1/1 Running 0 4m23s

hpa-test-5bfcdd875f-tc2hw 0/1 ContainerCreating 0 4m23s

hpa-test-5bfcdd875f-xkblv 1/1 Running 0 4m7s

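CPU and memory usage start rising immediately.

How long scaling takes depends on the machines. Pods in ImagePullBackOff or ContainerCreating are still pulling the nginx:1.19.6 image on their node, so give them some time.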

[root@k8s-master ~]# kubectl get pod

NAME READY STATUS RESTARTS AGE

hpa-test-5bfcdd875f-277zn 1/1 Running 0 22m

hpa-test-5bfcdd875f-7lqdh 1/1 Running 0 21m

hpa-test-5bfcdd875f-878gj 1/1 Running 0 22m

hpa-test-5bfcdd875f-8ntvv 1/1 Running 0 33m

hpa-test-5bfcdd875f-bljwc 1/1 Running 0 22m

hpa-test-5bfcdd875f-cgmdx 1/1 Running 0 22m

hpa-test-5bfcdd875f-jvpv9 1/1 Running 0 22m

hpa-test-5bfcdd875f-m6gz4 1/1 Running 0 22m

hpa-test-5bfcdd875f-tc2hw 1/1 Running 0 22m

hpa-test-5bfcdd875f-xkblv 1/1 Running 0 21m

Eventually all Pods are Running.
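To follow the transition from ImagePullBackOff or ContainerCreating to Running without reading the whole table, the Pod list can be summarized. `count_pods_with_status` below is a hypothetical helper that reads `kubectl get pod` output on stdin.

```shell
#!/usr/bin/env bash
# Counts Pods whose STATUS column equals $1 in `kubectl get pod` output on stdin.
count_pods_with_status() {
  awk -v want="$1" 'NR > 1 && $3 == want { n++ } END { print n + 0 }'
}
```

Usage: `kubectl get pod | count_pods_with_status Running`. Run it repeatedly until the count reaches the REPLICAS value shown by kubectl get hpa.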

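After the stress test is stopped, the Pod count automatically drops back to 1 after a while. By default the HPA waits about 5 minutes (the downscale stabilization window) before scaling down.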

[root@k8s-master ~]# kubectl get pod

NAME READY STATUS RESTARTS AGE

hpa-test-5bfcdd875f-277zn 1/1 Running 0 22m

Delete the HPA policy:

[root@k8s-master ~]# kubectl delete hpa hpa-test

horizontalpodautoscaler.autoscaling "hpa-test" deleted

[root@k8s-master ~]# kubectl get hpa

No resources found in default namespace.