Scraping Taobao product data with Python3

Foreword

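I won't walk through every step in detail here, because I already explained them very thoroughly last time when scraping Toutiao (今日头条) data. This time I'll focus only on how the key parts are implemented. If you want the detailed steps, please read my Toutiao article first; it explains in detail how to find the encryption JS code and the API endpoint.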

Scraping Toutiao (今日头条) article and video data with Python3: a complete solution to the as, cp and _signature encryption

QQ group chat: 855262907

Analyzing Taobao

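This time I chose Taobao Hot Sale (淘宝网热卖) rather than Taobao itself. Despite the different names, the data is the same. The difference is that the former is a shopping-guide site: it takes the huge volume of stores and products from the latter, evaluates them on sales volume, ratings and seller reputation, recalculates and re-sorts them, and presents the results to buyers.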

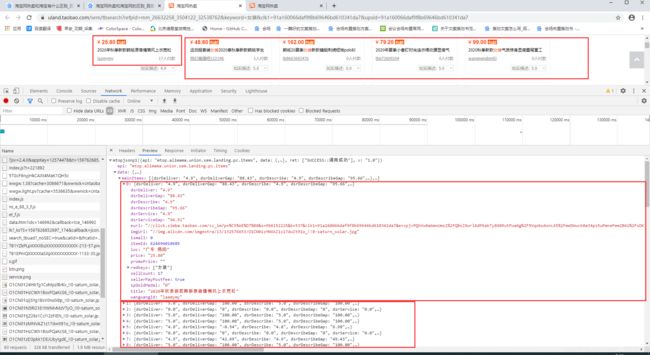

Locate the actual request data:

```text
jsv: 2.4.0
appKey: 12574478
t: 1597626852590
sign: 457c09a81147eb493d1f58ad6ab64d52
api: mtop.alimama.union.sem.landing.pc.items
v: 1.0
AntiCreep: true
dataType: jsonp
type: jsonp
ecode: 0
callback: mtopjsonp1
data: {"keyword":"女装","ppath":"","loc":"","minPrice":"","maxPrice":"","ismall":"","ship":"","itemAssurance":"","exchange7":"","custAssurance":"","b":"","clk1":"91a160066daf9f8b69646bd610341da7","pvoff":"","pageSize":"100","page":"","elemtid":"1","refpid":"mm_26632258_3504122_32538762","pid":"430673_1006","featureNames":"spGoldMedal,dsrDescribe,dsrDescribeGap,dsrService,dsrServiceGap,dsrDeliver, dsrDeliverGap","ac":"5TGcF6nyjHkCAXt4MaK1QH5c","wangwangid":"","catId":""}
```
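Because `dataType` and `type` are `jsonp` and `callback` is `mtopjsonp1`, the response body comes back wrapped in a JavaScript call, `mtopjsonp1({...})`, rather than as bare JSON. A minimal way to unwrap it, assuming the body is exactly one callback call, is to take the text between the first `(` and the last `)`; unlike deleting every `)`, this keeps parentheses that appear inside the data itself (product titles often contain them). The function name `unwrap_jsonp` and the sample body are made up for illustration.

```python
import json


def unwrap_jsonp(text: str) -> dict:
    """Strip a JSONP wrapper such as mtopjsonp1({...}) and parse the JSON inside.

    Only the first "(" and the last ")" are treated as the wrapper, so
    parentheses inside the payload survive.
    """
    text = text.strip()
    start = text.index("(") + 1  # the first "(" ends the callback name
    end = text.rindex(")")       # the last ")" closes the callback call
    return json.loads(text[start:end])


# Usage with a made-up response body:
sample = 'mtopjsonp1({"title": "T恤(纯棉)"})'
print(unwrap_jsonp(sample)["title"])  # T恤(纯棉)
```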

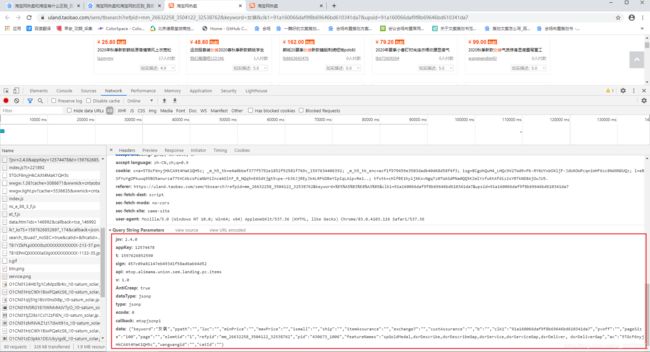

```text
jsv: 2.4.0
appKey: 12574478
t: 1597626856031
sign: 4a87f4308903ded469a1328275ded458
api: mtop.alimama.union.sem.landing.pc.items
v: 1.0
AntiCreep: true
dataType: jsonp
type: jsonp
ecode: 0
callback: mtopjsonp1
data: {"keyword":"女装","ppath":"","loc":"","minPrice":"","maxPrice":"","ismall":"","ship":"","itemAssurance":"","exchange7":"","custAssurance":"","b":"","clk1":"91a160066daf9f8b69646bd610341da7","pvoff":"","pageSize":"100","page":"","elemtid":"1","refpid":"mm_26632258_3504122_32538762","pid":"430673_1006","featureNames":"spGoldMedal,dsrDescribe,dsrDescribeGap,dsrService,dsrServiceGap,dsrDeliver, dsrDeliverGap","ac":"5TGcF6nyjHkCAXt4MaK1QH5c","wangwangid":"","catId":""}
```
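Both captured requests go to the same endpoint, and every parameter above travels in the query string. The sketch below assembles such a URL from a parameter dict. It assumes the path follows the `/h5/<api>/<v>/` pattern seen here (checked only against this one endpoint); the helper name `build_mtop_url` and the shortened `data` value are illustrative.

```python
from urllib.parse import parse_qs, urlencode, urlparse

H5API_HOST = "https://h5api.m.taobao.com"


def build_mtop_url(params: dict) -> str:
    """Assemble https://h5api.m.taobao.com/h5/<api>/<v>/?<query string>.

    Assumption: the path is derived from the api and v parameters, which
    matches the single endpoint captured in this article.
    """
    path = "/h5/{}/{}/".format(params["api"], params["v"])
    return H5API_HOST + path + "?" + urlencode(params)


params = {
    "jsv": "2.4.0",
    "appKey": "12574478",
    "t": "1597626856031",
    "sign": "4a87f4308903ded469a1328275ded458",
    "api": "mtop.alimama.union.sem.landing.pc.items",
    "v": "1.0",
    "data": '{"keyword":"女装","page":""}',  # shortened data, for illustration only
}
url = build_mtop_url(params)
print(url.split("?")[0])
# https://h5api.m.taobao.com/h5/mtop.alimama.union.sem.landing.pc.items/1.0/

# Round trip: the data string survives URL encoding unchanged.
assert parse_qs(urlparse(url).query)["data"][0] == params["data"]
```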

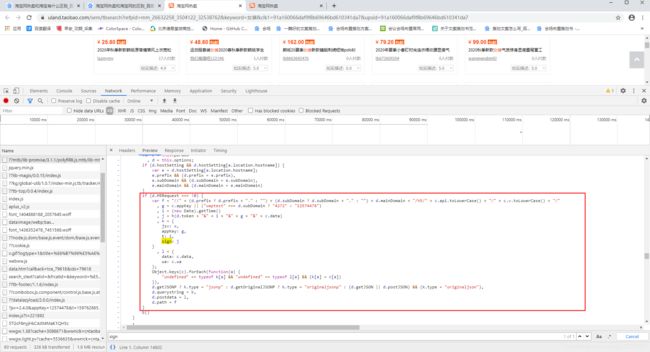

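Comparing the two requests, the only values that change are t and sign, plus page and ac inside data; everything else stays the same. t is obviously a timestamp (in milliseconds), while sign has to be reverse-engineered by debugging and reading the source code.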
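Since `t` is a millisecond Unix timestamp (13 digits, like `1597626852590`), the safest way to produce it in Python is integer arithmetic on `time.time()`. String tricks such as removing the decimal point from `str(time.time())` can yield fewer than 13 digits whenever the float happens to have fewer than three decimal places. A minimal sketch, with `ms_timestamp` as a hypothetical helper name:

```python
import time


def ms_timestamp() -> str:
    """Current Unix time in milliseconds as a string, e.g. for the t parameter."""
    return str(int(time.time() * 1000))


print(len(ms_timestamp()))  # 13
```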

Solving the t and sign parameters

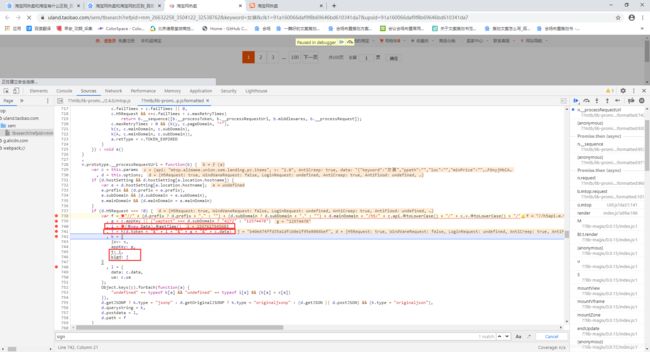

You can see that sign, t and the other parameters are all constructed here.

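So let's set breakpoints on these parameters and work out how sign is built. t is simply the current timestamp, and sign is computed as h(d.token + "&" + i + "&" + g + "&" + c.data), where d.token is this.options.token, i.e. the front part (before the underscore) of the _m_h5_tk cookie value; i is the timestamp; g is the appKey 12574478; and c.data is this.params.data.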

this.options.token = e4a0bbef377f5792e1852f92581f769c (sample value)
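Because the token is just the front part of the `_m_h5_tk` cookie, it can be read straight from the cookie jar. The sketch below splits on the first underscore. When the cookie has not been issued yet, it falls back to the literal string `"undefined"`: that is what JavaScript produces when an undefined token is concatenated into the sign string, and it is also what the full code later in this article signs its first request with. `token_from_cookies` is a hypothetical name, and the suffix in the sample cookie is made up.

```python
def token_from_cookies(cookies: dict) -> str:
    """Return the token part of the _m_h5_tk cookie (everything before the first "_").

    Falls back to "undefined" when the cookie is missing or empty, mirroring
    how the first, token-less request is signed.
    """
    value = cookies.get("_m_h5_tk")
    if not value:
        return "undefined"
    return value.split("_")[0]


# Hypothetical cookie value: the sample token plus a made-up suffix.
print(token_from_cookies({"_m_h5_tk": "e4a0bbef377f5792e1852f92581f769c_1597634052000"}))
# e4a0bbef377f5792e1852f92581f769c
print(token_from_cookies({}))  # undefined
```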

this.params.data = {"keyword":"女装","ppath":"","loc":"","minPrice":"","maxPrice":"","ismall":"","ship":"","itemAssurance":"","exchange7":"","custAssurance":"","b":"","clk1":"91a160066daf9f8b69646bd610341da7","pvoff":"","pageSize":"100","page":"","elemtid":"1","refpid":"mm_26632258_3504122_32538762","pid":"430673_1006","featureNames":"spGoldMedal,dsrDescribe,dsrDescribeGap,dsrService,dsrServiceGap,dsrDeliver, dsrDeliverGap","ac":"5TGcF6nyjHkCAXt4MaK1QH5c","wangwangid":"","catId":""}
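The sign is computed over `this.params.data` as a string, so the Python side has to sign exactly the string it sends. The captured value has no spaces after `,` or `:` between tokens and leaves Chinese characters unescaped; `json.dumps` gives the same shape with `separators=(",", ":")` and `ensure_ascii=False`, and on Python 3.7+ dict keys keep their insertion order. Using `separators` instead of deleting every space also keeps spaces inside values intact, such as the one in `"dsrDeliver, dsrDeliverGap"`. A sketch, with `compact_json` as an illustrative name:

```python
import json


def compact_json(data: dict) -> str:
    """Serialize like the captured data parameter: no spaces after separators,
    non-ASCII characters kept literally (ensure_ascii=False)."""
    return json.dumps(data, ensure_ascii=False, separators=(",", ":"))


print(compact_json({"keyword": "女装", "pageSize": "100", "page": ""}))
# {"keyword":"女装","pageSize":"100","page":""}
```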

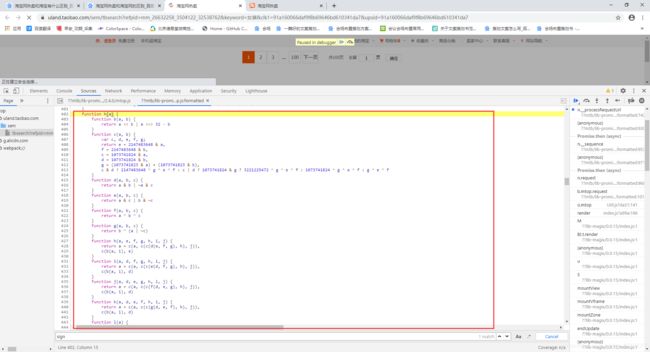

```javascript
function h(a) {
    // rotate the 32-bit value a left by b bits
    function b(a, b) {
        return a << b | a >>> 32 - b
    }
    // add two 32-bit unsigned integers without losing the top bits
    function c(a, b) {
        var c, d, e, f, g;
        return e = 2147483648 & a,
            f = 2147483648 & b,
            c = 1073741824 & a,
            d = 1073741824 & b,
            g = (1073741823 & a) + (1073741823 & b),
            c & d ? 2147483648 ^ g ^ e ^ f : c | d ? 1073741824 & g ? 3221225472 ^ g ^ e ^ f : 1073741824 ^ g ^ e ^ f : g ^ e ^ f
    }
    // MD5 auxiliary functions F, G, H and I
    function d(a, b, c) {
        return a & b | ~a & c
    }
    function e(a, b, c) {
        return a & c | b & ~c
    }
    function f(a, b, c) {
        return a ^ b ^ c
    }
    function g(a, b, c) {
        return b ^ (a | ~c)
    }
    // one step of rounds 1 to 4 (FF, GG, HH, II)
    function h(a, e, f, g, h, i, j) {
        return a = c(a, c(c(d(e, f, g), h), j)),
            c(b(a, i), e)
    }
    function i(a, d, f, g, h, i, j) {
        return a = c(a, c(c(e(d, f, g), h), j)),
            c(b(a, i), d)
    }
    function j(a, d, e, g, h, i, j) {
        return a = c(a, c(c(f(d, e, g), h), j)),
            c(b(a, i), d)
    }
    function k(a, d, e, f, h, i, j) {
        return a = c(a, c(c(g(d, e, f), h), j)),
            c(b(a, i), d)
    }
    // pack the string into little-endian 32-bit words, then append the 0x80 padding byte and the bit length
    function l(a) {
        for (var b, c = a.length, d = c + 8, e = (d - d % 64) / 64, f = 16 * (e + 1), g = new Array(f - 1), h = 0, i = 0; c > i; )
            b = (i - i % 4) / 4,
            h = i % 4 * 8,
            g[b] = g[b] | a.charCodeAt(i) << h,
            i++;
        return b = (i - i % 4) / 4,
            h = i % 4 * 8,
            g[b] = g[b] | 128 << h,
            g[f - 2] = c << 3,
            g[f - 1] = c >>> 29,
            g
    }
    // convert a 32-bit word to 8 hex characters (little-endian byte order)
    function m(a) {
        var b, c, d = "", e = "";
        for (c = 0; 3 >= c; c++)
            b = a >>> 8 * c & 255,
            e = "0" + b.toString(16),
            d += e.substr(e.length - 2, 2);
        return d
    }
    // normalize \r\n to \n, then UTF-8 encode the string
    function n(a) {
        a = a.replace(/\r\n/g, "\n");
        for (var b = "", c = 0; c < a.length; c++) {
            var d = a.charCodeAt(c);
            128 > d ? b += String.fromCharCode(d) : d > 127 && 2048 > d ? (b += String.fromCharCode(d >> 6 | 192),
            b += String.fromCharCode(63 & d | 128)) : (b += String.fromCharCode(d >> 12 | 224),
            b += String.fromCharCode(d >> 6 & 63 | 128),
            b += String.fromCharCode(63 & d | 128))
        }
        return b
    }
    // y..N are the per-round shift amounts
    var o, p, q, r, s, t, u, v, w, x = [], y = 7, z = 12, A = 17, B = 22, C = 5, D = 9, E = 14, F = 20, G = 4, H = 11, I = 16, J = 23, K = 6, L = 10, M = 15, N = 21;
    // t, u, v, w start at the standard MD5 initial state; each iteration processes one 16-word block
    for (a = n(a),
        x = l(a),
        t = 1732584193,
        u = 4023233417,
        v = 2562383102,
        w = 271733878,
        o = 0; o < x.length; o += 16)
        p = t,
        q = u,
        r = v,
        s = w,
        // round 1
        t = h(t, u, v, w, x[o + 0], y, 3614090360),
        w = h(w, t, u, v, x[o + 1], z, 3905402710),
        v = h(v, w, t, u, x[o + 2], A, 606105819),
        u = h(u, v, w, t, x[o + 3], B, 3250441966),
        t = h(t, u, v, w, x[o + 4], y, 4118548399),
        w = h(w, t, u, v, x[o + 5], z, 1200080426),
        v = h(v, w, t, u, x[o + 6], A, 2821735955),
        u = h(u, v, w, t, x[o + 7], B, 4249261313),
        t = h(t, u, v, w, x[o + 8], y, 1770035416),
        w = h(w, t, u, v, x[o + 9], z, 2336552879),
        v = h(v, w, t, u, x[o + 10], A, 4294925233),
        u = h(u, v, w, t, x[o + 11], B, 2304563134),
        t = h(t, u, v, w, x[o + 12], y, 1804603682),
        w = h(w, t, u, v, x[o + 13], z, 4254626195),
        v = h(v, w, t, u, x[o + 14], A, 2792965006),
        u = h(u, v, w, t, x[o + 15], B, 1236535329),
        // round 2
        t = i(t, u, v, w, x[o + 1], C, 4129170786),
        w = i(w, t, u, v, x[o + 6], D, 3225465664),
        v = i(v, w, t, u, x[o + 11], E, 643717713),
        u = i(u, v, w, t, x[o + 0], F, 3921069994),
        t = i(t, u, v, w, x[o + 5], C, 3593408605),
        w = i(w, t, u, v, x[o + 10], D, 38016083),
        v = i(v, w, t, u, x[o + 15], E, 3634488961),
        u = i(u, v, w, t, x[o + 4], F, 3889429448),
        t = i(t, u, v, w, x[o + 9], C, 568446438),
        w = i(w, t, u, v, x[o + 14], D, 3275163606),
        v = i(v, w, t, u, x[o + 3], E, 4107603335),
        u = i(u, v, w, t, x[o + 8], F, 1163531501),
        t = i(t, u, v, w, x[o + 13], C, 2850285829),
        w = i(w, t, u, v, x[o + 2], D, 4243563512),
        v = i(v, w, t, u, x[o + 7], E, 1735328473),
        u = i(u, v, w, t, x[o + 12], F, 2368359562),
        // round 3
        t = j(t, u, v, w, x[o + 5], G, 4294588738),
        w = j(w, t, u, v, x[o + 8], H, 2272392833),
        v = j(v, w, t, u, x[o + 11], I, 1839030562),
        u = j(u, v, w, t, x[o + 14], J, 4259657740),
        t = j(t, u, v, w, x[o + 1], G, 2763975236),
        w = j(w, t, u, v, x[o + 4], H, 1272893353),
        v = j(v, w, t, u, x[o + 7], I, 4139469664),
        u = j(u, v, w, t, x[o + 10], J, 3200236656),
        t = j(t, u, v, w, x[o + 13], G, 681279174),
        w = j(w, t, u, v, x[o + 0], H, 3936430074),
        v = j(v, w, t, u, x[o + 3], I, 3572445317),
        u = j(u, v, w, t, x[o + 6], J, 76029189),
        t = j(t, u, v, w, x[o + 9], G, 3654602809),
        w = j(w, t, u, v, x[o + 12], H, 3873151461),
        v = j(v, w, t, u, x[o + 15], I, 530742520),
        u = j(u, v, w, t, x[o + 2], J, 3299628645),
        // round 4
        t = k(t, u, v, w, x[o + 0], K, 4096336452),
        w = k(w, t, u, v, x[o + 7], L, 1126891415),
        v = k(v, w, t, u, x[o + 14], M, 2878612391),
        u = k(u, v, w, t, x[o + 5], N, 4237533241),
        t = k(t, u, v, w, x[o + 12], K, 1700485571),
        w = k(w, t, u, v, x[o + 3], L, 2399980690),
        v = k(v, w, t, u, x[o + 10], M, 4293915773),
        u = k(u, v, w, t, x[o + 1], N, 2240044497),
        t = k(t, u, v, w, x[o + 8], K, 1873313359),
        w = k(w, t, u, v, x[o + 15], L, 4264355552),
        v = k(v, w, t, u, x[o + 6], M, 2734768916),
        u = k(u, v, w, t, x[o + 13], N, 1309151649),
        t = k(t, u, v, w, x[o + 4], K, 4149444226),
        w = k(w, t, u, v, x[o + 11], L, 3174756917),
        v = k(v, w, t, u, x[o + 2], M, 718787259),
        u = k(u, v, w, t, x[o + 9], N, 3951481745),
        // add this block's result to the running state
        t = c(t, p),
        u = c(u, q),
        v = c(v, r),
        w = c(w, s);
    // concatenate the four state words as hex: the 32-character digest
    var O = m(t) + m(u) + m(v) + m(w);
    return O.toLowerCase()
}
```

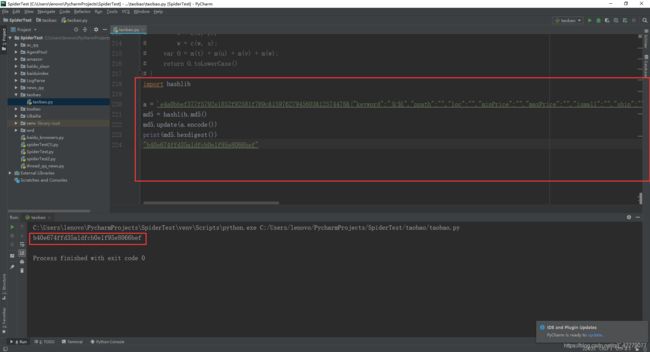

It turns out that this h function is just MD5, which makes this part easy.
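Since `h` is standard MD5 (the constants 1732584193, 4023233417, 2562383102 and 271733878 are MD5's initial state), Python's `hashlib` can stand in for the whole JavaScript function. The one detail worth mirroring is `n()`, which turns `\r\n` into `\n` and UTF-8 encodes the string. For characters outside the Basic Multilingual Plane, such as emoji, `n()` encodes each UTF-16 surrogate separately, so the two can disagree there; ordinary Chinese keywords are unaffected. The sketch below checks `h` against two RFC 1321 test vectors and then builds the sign from the formula found in the debugger; `mtop_sign` is an illustrative name and the inputs are sample values.

```python
import hashlib

APP_KEY = "12574478"  # the g value seen in the debugger


def h(a: str) -> str:
    """Python counterpart of the site's h(): CRLF to LF (as n() does), UTF-8, MD5 hex."""
    a = a.replace("\r\n", "\n")
    return hashlib.md5(a.encode("utf-8")).hexdigest()


def mtop_sign(token: str, t: str, data: str, app_key: str = APP_KEY) -> str:
    """sign = h(token + "&" + t + "&" + appKey + "&" + data)."""
    return h(token + "&" + t + "&" + app_key + "&" + data)


# RFC 1321 test vectors: plain MD5 must reproduce these.
assert h("") == "d41d8cd98f00b204e9800998ecf8427e"
assert h("abc") == "900150983cd24fb0d6963f7d28e17f72"

# Sample inputs: the reference token from this article and a shortened data string.
sign = mtop_sign("e4a0bbef377f5792e1852f92581f769c", "1597626852590",
                 '{"keyword":"女装","page":""}')
print(len(sign))  # 32
```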

Solving the page and ac parameters

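The page parameter is obviously the page number: it is empty for the first page and "2" for the second. That is, page:"" when visiting page 1, page:"2" when visiting page 2, and so on.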
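Mapping a page number to the `page` field is a one-liner. The sketch below (with the hypothetical name `page_value`) returns an empty string for page 1 and the number as a string otherwise, matching the captured values, and rejects page numbers below 1.

```python
def page_value(page: int) -> str:
    """Value of data["page"]: "" for page 1, otherwise the page number as a string."""
    if page < 1:
        raise ValueError("page numbers start at 1")
    return "" if page == 1 else str(page)


print([page_value(n) for n in (1, 2, 3)])  # ['', '2', '3']
```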

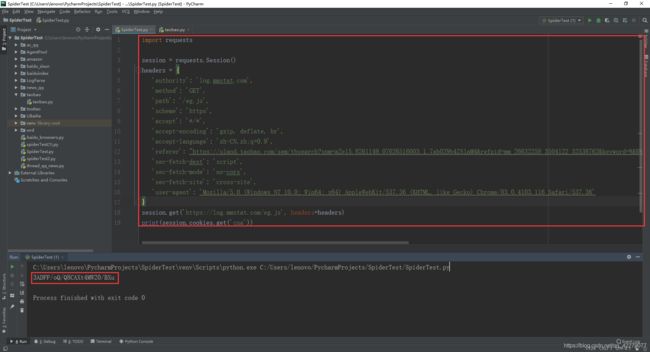

The ac parameter is the cna value in our cookies. The cna value is obtained by requesting https://log.mmstat.com/eg.js, whose response sets it as a cookie.

The code is as follows:

```python
import requests

session = requests.Session()
headers = {
    'authority': 'log.mmstat.com',
    'method': 'GET',
    'path': '/eg.js',
    'scheme': 'https',
    'accept': '*/*',
    'accept-encoding': 'gzip, deflate, br',
    'accept-language': 'zh-CN,zh;q=0.9',
    'referer': "https://uland.taobao.com/sem/tbsearch?spm=a2e15.8261149.07626516003.1.7ab029b4ZS1aM4&refpid=mm_26632258_3504122_32538762&keyword=%E6%B7%98%E5%AE%9D&clk1=f1a5efd94339f0e6e7cba63ed79b8554&upsid=f1a5efd94339f0e6e7cba63ed79b8554&page=2&_input_charset=utf-8",
    'sec-fetch-dest': 'script',
    'sec-fetch-mode': 'no-cors',
    'sec-fetch-site': 'cross-site',
    'user-agent': 'Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/83.0.4103.116 Safari/537.36'
}
session.get('https://log.mmstat.com/eg.js', headers=headers)
print(session.cookies.get('cna'))
```

Some may ask how I knew all this: I analyzed the whole site carefully, but I won't include that part of the analysis here.

Scraping with Python

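With the whole site analyzed, let's skip the chatter and go straight to the complete code, which you can copy and run directly. Taobao shows at most 100 pages for any search, so the code fetches only 100 pages; if you want to search for something different, just change the keyword.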

```python
import hashlib
import json
import time
from urllib import parse

import requests


class TaoBao():

    def __init__(self, keyword):
        self.keyword = keyword
        self.session = requests.Session()
        self.cookies = {}
        self.data = {}
        self.params = {}
        self.headers = {}
        # Taobao shows at most 100 result pages per search
        for page in range(1, 100 + 1):
            if page == 1:
                # One-time setup: uland URL and clk1, the _m_h5_tk token, and cna
                self.get_url()
                self.get_m_h5_tk()
                self.get_cna()
            self.get_data(page)

    # Build the Cookie header from _m_h5_tk, _m_h5_tk_enc and cna
    def to_cookie(self):
        cookie = ''
        for key, value in self.cookies.items():
            if '_m_h5' in key or 'cna' == key:
                cookie += key + '=' + value + '; '
        return cookie

    # The site's h() is plain MD5, returned as lowercase hex
    def h(self, a):
        md5 = hashlib.md5()
        md5.update(a.encode())
        return md5.hexdigest()

    # Get the uland URL and the clk1 value
    def get_url(self):
        headers = {
            "Host": "redirect.simba.taobao.com",
            "Upgrade-Insecure-Requests": "1",
            "User-Agent": "Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/83.0.4103.116 Safari/537.36",
            "Accept": "text/html,application/xhtml+xml,application/xml;q=0.9,image/webp,image/apng,*/*;q=0.8,application/signed-exchange;v=b3;q=0.9",
            "Accept-Encoding": "gzip, deflate",
            "Accept-Language": "zh-CN,zh;q=0.9",
            "Connection": "keep-alive"
        }
        url = 'http://redirect.simba.taobao.com/rd?c=un&w=unionsem&k=74175f13c74157df&p=mm_26632258_3504122_32538762&b=R9JQC0peugGMqgRlL5O&s={keyword}&f=https%3A%2F%2Fuland.taobao.com%2Fsem%2Ftbsearch%3Frefpid%3Dmm_26632258_3504122_32538762%26keyword{keywords}'.format(keyword=parse.quote(self.keyword), keywords=parse.quote(parse.quote('=' + self.keyword)))
        response = self.session.get(url, headers=headers, allow_redirects=False)
        self.uland_url = response.headers.get('Location')
        # Read clk1 by name rather than by position, without the "clk1=" prefix
        query = parse.parse_qs(parse.urlparse(self.uland_url).query)
        self.clk1 = query.get('clk1', [''])[0]

    # Get _m_h5_tk and _m_h5_tk_enc
    def get_m_h5_tk(self):
        self.data = {
            "keyword": self.keyword,
            "ppath": "", "loc": "",
            "minPrice": "",
            "maxPrice": "",
            "ismall": "",
            "ship": "",
            "itemAssurance": "",
            "exchange7": "",
            "custAssurance": "",
            "b": "",
            "clk1": self.clk1,
            "pvoff": "",
            "pageSize": "100",
            "page": "",
            "elemtid": "1",
            "refpid": "mm_26632258_3504122_32538762",
            "pid": "430673_1006",
            "featureNames": "spGoldMedal,dsrDescribe,dsrDescribeGap,dsrService,dsrServiceGap,dsrDeliver, dsrDeliverGap",
            "ac": "",
            "wangwangid": "",
            "catId": ""
        }
        # 13-digit millisecond timestamp
        self.timestamp = str(int(time.time() * 1000))
        # Compact JSON; this exact string is both signed and sent
        data = json.dumps(self.data, ensure_ascii=False, separators=(',', ':'))
        self.params = {
            'jsv': '2.4.0',
            'appKey': '12574478',
            't': self.timestamp,
            # No token exists yet, so the string 'undefined' takes its place
            'sign': self.h('undefined' + "&" + self.timestamp + "&" + '12574478' + "&" + data),
            'api': 'mtop.alimama.union.sem.landing.pc.items',
            'v': '1.0',
            'AntiCreep': 'true',
            'dataType': 'jsonp',
            'type': 'jsonp',
            'ecode': '0',
            'callback': 'mtopjsonp1',
            'data': data
        }
        self.headers = {
            'host': 'h5api.m.taobao.com',
            'accept': '*/*',
            # No 'br': requests only decodes Brotli if the brotli (or brotlicffi) package is installed
            'accept-encoding': 'gzip, deflate',
            'accept-language': 'zh-Hans-CN, zh-Hans; q=0.8, en-US; q=0.5, en; q=0.3',
            'referer': self.uland_url,
            'user-agent': 'Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/83.0.4103.116 Safari/537.36',
        }
        # This request is made to receive the _m_h5_tk / _m_h5_tk_enc cookies
        self.session.get('https://h5api.m.taobao.com' + '/h5/mtop.alimama.union.sem.landing.pc.items/1.0/?' + parse.urlencode(self.params), headers=self.headers)
        for key, value in self.session.cookies.items():
            self.cookies[key] = value
        # The token is the part of _m_h5_tk before the underscore
        self.token = self.cookies['_m_h5_tk'].split('_')[0]

    # Get cna
    def get_cna(self):
        headers = {
            'authority': 'log.mmstat.com',
            'method': 'GET',
            'path': '/eg.js',
            'scheme': 'https',
            'accept': '*/*',
            'accept-encoding': 'gzip, deflate, br',
            'accept-language': 'zh-CN,zh;q=0.9',
            'referer': self.uland_url,
            'sec-fetch-dest': 'script',
            'sec-fetch-mode': 'no-cors',
            'sec-fetch-site': 'cross-site',
            'user-agent': 'Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/83.0.4103.116 Safari/537.36'
        }
        self.session.get('https://log.mmstat.com/eg.js', headers=headers)
        for key, value in self.session.cookies.items():
            self.cookies[key] = value

    # Get the data for one page
    def get_data(self, page):
        # page is "" on page 1, then the page number as a string
        self.data['page'] = "" if page == 1 else str(page)
        # ac is the cna cookie value
        self.data['ac'] = "" if page == 1 else self.cookies.get('cna')
        self.timestamp = str(int(time.time() * 1000))
        data = json.dumps(self.data, ensure_ascii=False, separators=(',', ':'))
        self.params['t'] = self.timestamp
        # sign = md5(token & t & appKey & data)
        self.params['sign'] = self.h(self.token + "&" + self.timestamp + "&" + '12574478' + "&" + data)
        self.params['data'] = data
        self.headers['cookie'] = self.to_cookie()
        response = self.session.get('https://h5api.m.taobao.com' + '/h5/mtop.alimama.union.sem.landing.pc.items/1.0/?' + parse.urlencode(self.params), headers=self.headers)
        # Strip the mtopjsonp1(...) wrapper by its first "(" and last ")",
        # so parentheses inside product titles are preserved
        text = response.text.strip()
        json_data = json.loads(text[text.index('(') + 1:text.rindex(')')])
        print(json_data)


if __name__ == '__main__':
    TaoBao('男装')  # search keyword
```