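Learning Linux Systematically: keepalived

Contents

keepalived dual-node hot standby

keepalived+lvs(DR)

1. Lab environment

Configure the master director first

Web node configuration

keepalived dual-node hot standby

Install nginx and keepalived on the web servers

Once these are configured, the virtual IPs can float between the nodes.

Copy keepalived.conf as a backup, then edit it so that web1 is the master and web2 is the backup, with the priorities set accordingly.

#web1 server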

[root@localhost ~]# yum install -y keepalived nginx

[root@localhost ~]# cd /etc/keepalived/

[root@localhost keepalived]# cp keepalived.conf keepalived.conf.bak

[root@localhost keepalived]# vim keepalived.conf.bak

! Configuration File for keepalived

global_defs {

router_id NGINX1

}

vrrp_instance VI_1 {

state MASTER

interface ens33

virtual_router_id 51

priority 100

advert_int 1

authentication {

auth_type PASS

auth_pass 1111

}

virtual_ipaddress {

192.168.31.100

192.168.31.101

192.168.31.102

}

}

[root@localhost keepalived]# systemctl restart keepalived.service

#web2 server

[root@localhost ~]# yum install -y keepalived nginx

[root@localhost ~]# cd /etc/keepalived/

[root@localhost keepalived]# cp keepalived.conf keepalived.conf.bak

[root@localhost keepalived]# vim keepalived.conf.bak

! Configuration File for keepalived

global_defs {

router_id NGINX2

}

vrrp_instance VI_1 {

state BACKUP

interface ens33

virtual_router_id 51

priority 90

advert_int 1

authentication {

auth_type PASS

auth_pass 1111

}

virtual_ipaddress {

192.168.31.100

192.168.31.101

192.168.31.102

}

}

[root@localhost keepalived]# systemctl restart keepalived.service

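After starting the service, run ip a on the master and the backup to check their addresses.

The virtual IPs are now present on web1.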

#web1

[root@localhost keepalived]# ip a

1: lo: mtu 65536 qdisc noqueue state UNKNOWN group default qlen 1000

link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00

inet 127.0.0.1/8 scope host lo

valid_lft forever preferred_lft forever

inet 192.168.31.200/32 brd 192.168.31.200 scope global lo:0

valid_lft forever preferred_lft forever

inet6 ::1/128 scope host

valid_lft forever preferred_lft forever

2: ens33: mtu 1500 qdisc pfifo_fast state UP group default qlen 1000

link/ether 00:0c:29:12:94:c3 brd ff:ff:ff:ff:ff:ff

inet 192.168.31.5/24 brd 192.168.31.255 scope global noprefixroute ens33

valid_lft forever preferred_lft forever

inet 192.168.31.100/32 scope global ens33

valid_lft forever preferred_lft forever

inet 192.168.31.101/32 scope global ens33

valid_lft forever preferred_lft forever

inet 192.168.31.102/32 scope global ens33

valid_lft forever preferred_lft forever

inet6 fe80::20c:29ff:fe12:94c3/64 scope link

valid_lft forever preferred_lft forever

3: virbr0: mtu 1500 qdisc noqueue state DOWN group default qlen 1000

link/ether 52:54:00:45:34:d8 brd ff:ff:ff:ff:ff:ff

inet 192.168.122.1/24 brd 192.168.122.255 scope global virbr0

valid_lft forever preferred_lft forever

4: virbr0-nic: mtu 1500 qdisc pfifo_fast master virbr0 state DOWN group default qlen 1000

link/ether 52:54:00:45:34:d8 brd ff:ff:ff:ff:ff:ff

#web2

[root@localhost ~]# ip a

1: lo: mtu 65536 qdisc noqueue state UNKNOWN group default qlen 1000

link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00

inet 127.0.0.1/8 scope host lo

valid_lft forever preferred_lft forever

inet 192.168.31.200/32 brd 192.168.31.200 scope global lo:0

valid_lft forever preferred_lft forever

inet6 ::1/128 scope host

valid_lft forever preferred_lft forever

2: ens33: mtu 1500 qdisc pfifo_fast state UP group default qlen 1000

link/ether 00:0c:29:49:51:51 brd ff:ff:ff:ff:ff:ff

inet 192.168.31.6/24 brd 192.168.31.255 scope global noprefixroute ens33

valid_lft forever preferred_lft forever

inet6 fe80::20c:29ff:fe49:5151/64 scope link

valid_lft forever preferred_lft forever

3: virbr0: mtu 1500 qdisc noqueue state DOWN group default qlen 1000

link/ether 52:54:00:45:34:d8 brd ff:ff:ff:ff:ff:ff

inet 192.168.122.1/24 brd 192.168.122.255 scope global virbr0

valid_lft forever preferred_lft forever

4: virbr0-nic: mtu 1500 qdisc pfifo_fast master virbr0 state DOWN group default qlen 1000

link/ether 52:54:00:45:34:d8 brd ff:ff:ff:ff:ff:ff
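
The same check can be scripted instead of read by eye. The following is a minimal sketch, not part of the original setup: `holds_vip` is a hypothetical helper that reads the one-line-per-address output of `ip -o -4 addr show` on standard input and reports whether a given address is configured.

```shell
#!/bin/sh
# holds_vip is a hypothetical helper, not part of keepalived.
# It reads `ip -o -4 addr show` style output on stdin and succeeds
# (exit status 0) if the given IPv4 address appears in it.
holds_vip() {
    # -F: fixed string, so the dots in the address are not regex wildcards.
    # The trailing "/" keeps 192.168.31.10 from matching 192.168.31.100.
    grep -qF "inet $1/"
}

# Usage on a node (interface name ens33 taken from the configuration above):
#   ip -o -4 addr show dev ens33 | holds_vip 192.168.31.100 && echo "VIP is here"
```

Run on both nodes, this should succeed only on the node that currently holds the virtual IP.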

Write the page content for web1 and web2

#web1

[root@localhost keepalived]# cd /usr/share/nginx/html/

[root@localhost html]# ls

404.html 50x.html en-US icons img index.html nginx-logo.png poweredby.png

[root@localhost html]# echo nginx1 > index.html

[root@localhost html]# systemctl restart nginx

#web2

[root@localhost ~]# cd /usr/share/nginx/html/

[root@localhost html]# ls

404.html 50x.html en-US icons img index.html nginx-logo.png poweredby.png

[root@localhost html]# echo nginx2 > index.html

[root@localhost html]# systemctl restart nginx

Test on the master

Because the master is running normally, requests currently reach the web1 service.

[root@localhost keepalived]# curl 192.168.31.100

nginx1

[root@localhost keepalived]# curl 192.168.31.101

nginx1

[root@localhost keepalived]# curl 192.168.31.102

nginx1

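If the master is stopped, the virtual IPs fail over automatically to the backup (web2).

Note: there is a problem in the steps above. The file that was edited was the copy, keepalived.conf.bak, which is wrong: keepalived reads /etc/keepalived/keepalived.conf by default, so only changes to the original file take effect. I deleted the copied .bak file and edited the original file, and then the configuration was applied.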
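
One way to avoid this mix-up is to treat the .bak file purely as a safety copy and always edit the original. The following is a minimal sketch under that assumption; `backup_once` is a hypothetical helper name, not a keepalived tool.

```shell
#!/bin/sh
# backup_once is a hypothetical helper: it copies FILE to FILE.bak only if
# no backup exists yet, so a second run cannot overwrite the pristine copy.
backup_once() {
    [ -f "$1" ] || { echo "missing: $1" >&2; return 1; }
    [ -e "$1.bak" ] || cp -p "$1" "$1.bak"
}

# Intended use (paths as in the steps above):
#   backup_once /etc/keepalived/keepalived.conf
#   vim /etc/keepalived/keepalived.conf      # edit the original, not the .bak
#   systemctl restart keepalived.service
```

The backup is never read by keepalived; it only exists so the original can be restored.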

#web1

[root@localhost html]# systemctl stop keepalived.service

#web2

[root@localhost keepalived]# ip a

1: lo: mtu 65536 qdisc noqueue state UNKNOWN group default qlen 1000

link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00

inet 127.0.0.1/8 scope host lo

valid_lft forever preferred_lft forever

inet 192.168.31.200/32 brd 192.168.31.200 scope global lo:0

valid_lft forever preferred_lft forever

inet6 ::1/128 scope host

valid_lft forever preferred_lft forever

2: ens33: mtu 1500 qdisc pfifo_fast state UP group default qlen 1000

link/ether 00:0c:29:49:51:51 brd ff:ff:ff:ff:ff:ff

inet 192.168.31.6/24 brd 192.168.31.255 scope global noprefixroute ens33

valid_lft forever preferred_lft forever

inet 192.168.31.100/32 scope global ens33

valid_lft forever preferred_lft forever

inet 192.168.31.101/32 scope global ens33

valid_lft forever preferred_lft forever

inet 192.168.31.102/32 scope global ens33

valid_lft forever preferred_lft forever

inet6 fe80::20c:29ff:fe49:5151/64 scope link

valid_lft forever preferred_lft forever

3: virbr0: mtu 1500 qdisc noqueue state DOWN group default qlen 1000

link/ether 52:54:00:45:34:d8 brd ff:ff:ff:ff:ff:ff

inet 192.168.122.1/24 brd 192.168.122.255 scope global virbr0

valid_lft forever preferred_lft forever

4: virbr0-nic: mtu 1500 qdisc pfifo_fast master virbr0 state DOWN group default qlen 1000

link/ether 52:54:00:45:34:d8 brd ff:ff:ff:ff:ff:ff

Testing from the master shows that the automatic switch of the master role succeeded.

[root@localhost keepalived]# curl 192.168.31.100

nginx2

[root@localhost keepalived]# curl 192.168.31.101

nginx2

[root@localhost keepalived]# curl 192.168.31.102

nginx2

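Test: bring down web1's NIC and wait for the addresses to float to web2.

The NIC test failed: either there is a bug or the failover is too slow (bringing the NIC down normally works).

Bringing the NIC down produced a split-brain condition: the service was still running, the master stayed master, and the backup stayed backup.

Stopping the service fails over to web2 directly.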
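
The tests above stop keepalived itself; nothing in the configuration shown watches nginx. keepalived provides `vrrp_script` and `track_script` blocks for that purpose. The following is a rough sketch of a check script and of how it might be wired in. The script path, the name `chk_nginx`, the pid file location `/run/nginx.pid`, and the interval and weight values are all assumptions, not taken from the original setup.

```shell
#!/bin/sh
# Hypothetical check script, e.g. saved as /etc/keepalived/check_nginx.sh.
# Assumptions: nginx writes its master PID to /run/nginx.pid, and keepalived
# runs the script as root (kill -0 needs permission to signal the process).
pid_alive() {
    [ -r "$1" ] || return 1                      # no pid file: not running
    pid=$(cat "$1")
    [ -n "$pid" ] && kill -0 "$pid" 2>/dev/null  # signal 0 only tests existence
}

# The script file would end with:   pid_alive /run/nginx.pid
# and keepalived.conf would reference it roughly like this:
#
#   vrrp_script chk_nginx {
#       script "/etc/keepalived/check_nginx.sh"
#       interval 2        # run the check every 2 seconds
#       weight -20        # lower the priority by 20 while the check fails
#   }
#   vrrp_instance VI_1 {
#       ...
#       track_script {
#           chk_nginx
#       }
#   }
```

With the priorities used above (100 on web1, 90 on web2), a failing check would drop web1 to 80, which is below web2, so the virtual IPs should move even though keepalived on web1 is still running.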

Configure an NFS shared directory on the host

#Configure the NFS share on the host

[root@localhost keepalived]# mkdir /opt/pub

[root@localhost keepalived]# vim /etc/exports

/opt/pub 192.168.31.0/24(rw,sync,no_root_squash)

Make sure rpcbind and nfs-utils (NFS) are installed

[root@localhost keepalived]# systemctl start nfs

[root@localhost keepalived]# showmount -e

Export list for localhost.localdomain:

/opt/pub 192.168.31.0/24

#This command finds the path of the showmount command.

[root@localhost keepalived]# which showmount

/usr/sbin/showmount

#This command queries which installed package owns the specified file.

[root@localhost keepalived]# rpm -qf /usr/sbin/showmount

nfs-utils-1.3.0-0.68.el7.x86_64

#This command re-exports all shared directories defined in /etc/exports.

[root@localhost keepalived]# exportfs -arv

exporting 192.168.31.0/24:/opt/pub

web2 and 3

[root@localhost keepalived]# showmount -e 192.168.31.4

Export list for 192.168.31.4:

/opt/pub 192.168.31.0/24

[root@localhost keepalived]# mount 192.168.31.4:/opt/pub /usr/share/nginx/html/

[root@localhost keepalived]# showmount -e 192.168.31.4

Export list for 192.168.31.4:

/opt/pub 192.168.31.0/24

[root@localhost keepalived]# mount 192.168.31.4:/opt/pub /usr/share/nginx/html/
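
A mount made by hand like this does not survive a reboot. If persistence is wanted, an /etc/fstab entry is the usual route. The sketch below only prints such an entry for review; `nfs_fstab_line` is a hypothetical helper, and the `defaults,_netdev` options are an assumption rather than something the original setup specifies.

```shell
#!/bin/sh
# nfs_fstab_line is a hypothetical helper that prints an /etc/fstab entry
# for an NFS export. Arguments: server, exported path, local mount point.
nfs_fstab_line() {
    # _netdev marks the filesystem as needing the network before mounting.
    printf '%s:%s %s nfs defaults,_netdev 0 0\n' "$1" "$2" "$3"
}

# Values from the steps above; review the line before appending it, e.g.:
#   nfs_fstab_line 192.168.31.4 /opt/pub /usr/share/nginx/html >> /etc/fstab
#   mount -a                                   # mount everything in fstab
#   mountpoint -q /usr/share/nginx/html && echo mounted
nfs_fstab_line 192.168.31.4 /opt/pub /usr/share/nginx/html
```

Printing first and appending second keeps a typo from landing in /etc/fstab unnoticed.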

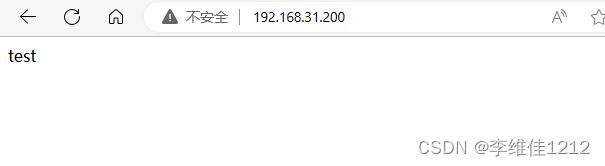

The 403 occurs because the shared directory does not contain any page content yet.

[root@localhost keepalived]# cd /opt/pub/

[root@localhost pub]# echo test > index.html

Stop the service on web1, then test the page again.

[root@localhost keepalived]# ip a

1: lo: mtu 65536 qdisc noqueue state UNKNOWN group default qlen 1000

link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00

inet 127.0.0.1/8 scope host lo

valid_lft forever preferred_lft forever

inet 192.168.31.200/32 brd 192.168.31.200 scope global lo:0

valid_lft forever preferred_lft forever

inet6 ::1/128 scope host

valid_lft forever preferred_lft forever

2: ens33: mtu 1500 qdisc pfifo_fast state UP group default qlen 1000

link/ether 00:0c:29:12:94:c3 brd ff:ff:ff:ff:ff:ff

inet 192.168.31.5/24 brd 192.168.31.255 scope global noprefixroute ens33

valid_lft forever preferred_lft forever

inet6 fe80::20c:29ff:fe12:94c3/64 scope link

valid_lft forever preferred_lft forever

3: virbr0: mtu 1500 qdisc noqueue state DOWN group default qlen 1000

link/ether 52:54:00:45:34:d8 brd ff:ff:ff:ff:ff:ff

inet 192.168.122.1/24 brd 192.168.122.255 scope global virbr0

valid_lft forever preferred_lft forever

4: virbr0-nic: mtu 1500 qdisc pfifo_fast master virbr0 state DOWN group default qlen 1000

link/ether 52:54:00:45:34:d8 brd ff:ff:ff:ff:ff:ff

The content is still reachable. The IP is the same, but requests now reach the virtual address held by web2.

#web2

[root@localhost keepalived]# ip a

1: lo: mtu 65536 qdisc noqueue state UNKNOWN group default qlen 1000

link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00

inet 127.0.0.1/8 scope host lo

valid_lft forever preferred_lft forever

inet 192.168.31.200/32 brd 192.168.31.200 scope global lo:0

valid_lft forever preferred_lft forever

inet6 ::1/128 scope host

valid_lft forever preferred_lft forever

2: ens33: mtu 1500 qdisc pfifo_fast state UP group default qlen 1000

link/ether 00:0c:29:49:51:51 brd ff:ff:ff:ff:ff:ff

inet 192.168.31.6/24 brd 192.168.31.255 scope global noprefixroute ens33

valid_lft forever preferred_lft forever

inet 192.168.31.100/32 scope global ens33

valid_lft forever preferred_lft forever

inet 192.168.31.101/32 scope global ens33

valid_lft forever preferred_lft forever

inet 192.168.31.102/32 scope global ens33

valid_lft forever preferred_lft forever

inet6 fe80::20c:29ff:fe49:5151/64 scope link

valid_lft forever preferred_lft forever

3: virbr0: mtu 1500 qdisc noqueue state DOWN group default qlen 1000

link/ether 52:54:00:45:34:d8 brd ff:ff:ff:ff:ff:ff

inet 192.168.122.1/24 brd 192.168.122.255 scope global virbr0

valid_lft forever preferred_lft forever

4: virbr0-nic: mtu 1500 qdisc pfifo_fast master virbr0 state DOWN group default qlen 1000

link/ether 52:54:00:45:34:d8 brd ff:ff:ff:ff:ff:ff

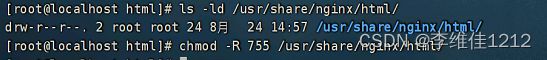

After mounting, if the directory's permissions have changed from 755 to 644, change them back.
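
The reason this matters is that a directory needs the execute (search) bit before nginx can open files inside it, and 644 lacks that bit. The check-and-fix can be sketched as follows; `ensure_mode` is a hypothetical helper and relies on GNU `stat`.

```shell
#!/bin/sh
# ensure_mode is a hypothetical helper: it sets DIR to MODE only when the
# current mode differs, and prints what it found. Uses GNU stat (-c %a).
ensure_mode() {
    current=$(stat -c %a "$1") || return 1
    if [ "$current" != "$2" ]; then
        chmod "$2" "$1" || return 1
        echo "$1: $current -> $2"
    else
        echo "$1: already $2"
    fi
}

# On each web node after mounting (path from the steps above):
#   ensure_mode /usr/share/nginx/html 755
```

Because the directory is an NFS mount, the change is applied to the exported directory on the server side as well.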

keepalived+lvs(DR)

Use keepalived to remove the LVS single point of failure.

High-availability cluster

1. Lab environment

Four hosts

VIP (virtual address): 192.168.31.200

| Role | Hostname | IP | Interface |
| --- | --- | --- | --- |
| Backup director | ds01 | 192.168.31.3 | ens33 |
| Master director | ds02 | 192.168.31.4 | ens33 |
| RS node 1 | rs01 | 192.168.31.5 | ens33 |
| RS node 2 | rs02 | 192.168.31.6 | ens33 |

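Adding LVS requires the following configuration.

Both the master and the backup need the packages installed and the kernel module loaded.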

yum install -y keepalived

yum install -y ipvsadm

modprobe ip_vs

Configure the master director first

#Master director ds02

[root@localhost keepalived]# vim keepalived.conf

! Configuration File for keepalived

global_defs {

notification_email {

[email protected]

[email protected]

[email protected]

}

notification_email_from [email protected]

smtp_server 192.168.200.1

smtp_connect_timeout 30

router_id LVS_DEVEL1

vrrp_skip_check_adv_addr

# vrrp_strict

vrrp_garp_interval 0

vrrp_gna_interval 0

}

vrrp_instance VI_1 {

state MASTER

interface ens33

virtual_router_id 51

priority 100

advert_int 1

authentication {

auth_type PASS

auth_pass 1111

}

virtual_ipaddress {

192.168.31.200

}

}

virtual_server 192.168.31.200 80 {

delay_loop 6

lb_algo rr

lb_kind DR

persistence_timeout 50

protocol TCP

real_server 192.168.31.5 80 {

weight 1

HTTP_GET {

url {

path /

}

connect_timeout 3

nb_get_retry 3

delay_before_retry 3

}

}

real_server 192.168.31.6 80 {

weight 1

HTTP_GET {

url {

path /

}

connect_timeout 3

nb_get_retry 3

delay_before_retry 3

}

}

}

Copy the configuration to the backup director ds01, or transfer it with scp.

#Backup director ds01

[root@localhost keepalived]# vim keepalived.conf

! Configuration File for keepalived

global_defs {

notification_email {

[email protected]

[email protected]

[email protected]

}

notification_email_from [email protected]

smtp_server 192.168.200.1

smtp_connect_timeout 30

router_id LVS_DEVEL2

vrrp_skip_check_adv_addr

# vrrp_strict

vrrp_garp_interval 0

vrrp_gna_interval 0

}

vrrp_instance VI_1 {

state BACKUP

interface ens33

virtual_router_id 51

priority 90

advert_int 1

authentication {

auth_type PASS

auth_pass 1111

}

virtual_ipaddress {

192.168.31.200

}

}

virtual_server 192.168.31.200 80 {

delay_loop 6

lb_algo rr

lb_kind DR

persistence_timeout 50

protocol TCP

real_server 192.168.31.5 80 {

weight 1

HTTP_GET {

url {

path /

}

connect_timeout 3

nb_get_retry 3

delay_before_retry 3

}

}

real_server 192.168.31.6 80 {

weight 1

HTTP_GET {

url {

path /

}

connect_timeout 3

nb_get_retry 3

delay_before_retry 3

}

}

}
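
When a configuration is copied between hosts like this, a stray or missing brace is easy to introduce. A rough sanity check is to count braces before restarting the service. The sketch below does only that; `brace_balance` is a hypothetical helper, and it does not validate keywords or nesting order.

```shell
#!/bin/sh
# brace_balance is a hypothetical helper: it prints (number of "{") minus
# (number of "}") for a keepalived-style file, ignoring comment text that
# starts with "#" or "!". A result of 0 means the counts match.
brace_balance() {
    awk '{
        sub(/[#!].*/, "")           # drop comment text
        opened += gsub(/[{]/, "")   # gsub returns the number of matches
        closed += gsub(/[}]/, "")
    } END { print opened - closed }' "$1"
}

# Usage:
#   brace_balance /etc/keepalived/keepalived.conf
```

A positive result suggests a block was never closed; a negative one suggests an extra closing brace was pasted in.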

Restart the service and check the LVS node status

#Master director ds02

[root@localhost pub]# systemctl restart keepalived.service

[root@localhost keepalived]# ipvsadm -ln

IP Virtual Server version 1.2.1 (size=4096)

Prot LocalAddress:Port Scheduler Flags

-> RemoteAddress:Port Forward Weight ActiveConn InActConn

TCP 192.168.31.200:80 rr persistent 50

-> 192.168.31.5:80 Route 1 0 0

-> 192.168.31.6:80 Route 1 0 0

#Backup director ds01

[root@localhost pub]# systemctl restart keepalived.service

[root@localhost keepalived]# ipvsadm -ln

IP Virtual Server version 1.2.1 (size=4096)

Prot LocalAddress:Port Scheduler Flags

-> RemoteAddress:Port Forward Weight ActiveConn InActConn

TCP 192.168.31.200:80 rr persistent 50

-> 192.168.31.5:80 Route 1 0 0

-> 192.168.31.6:80 Route 1 0 0

Web node configuration

Adjust the ARP parameters (required on both rs nodes 1 and 2)

net.ipv4.conf.all.arp_ignore=1

net.ipv4.conf.all.arp_announce=2

net.ipv4.conf.default.arp_ignore=1

net.ipv4.conf.default.arp_announce = 2

net.ipv4.conf.lo.arp_ignore = 1

net.ipv4.conf.lo.arp_announce=2

[root@localhost keepalived]# vim /etc/sysctl.conf

[root@localhost keepalived]# sysctl -p

net.ipv4.conf.all.arp_ignore = 1

net.ipv4.conf.all.arp_announce = 2

net.ipv4.conf.default.arp_ignore = 1

net.ipv4.conf.default.arp_announce = 2

net.ipv4.conf.lo.arp_ignore = 1

net.ipv4.conf.lo.arp_announce = 2
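
In DR mode the real servers hold the VIP on lo, so they must not answer ARP requests for it. With arp_ignore=1 a host replies only when the target IP is configured on the interface that received the request, and with arp_announce=2 it uses the best local address for the target as the ARP source instead of the VIP. As an alternative to editing /etc/sysctl.conf by hand, the six lines can be generated. This is a sketch only; `arp_sysctl_lines` and the drop-in file name are assumptions.

```shell
#!/bin/sh
# arp_sysctl_lines is a hypothetical helper that prints the six settings
# used above, one "key = value" line per entry, in sysctl.conf syntax.
arp_sysctl_lines() {
    for scope in all default lo; do
        # 1: answer ARP only if the target IP is on the receiving interface
        echo "net.ipv4.conf.$scope.arp_ignore = 1"
        # 2: use the best local address for the target as the ARP source
        echo "net.ipv4.conf.$scope.arp_announce = 2"
    done
}

# Possible use on each real server (the file name is an assumption):
#   arp_sysctl_lines > /etc/sysctl.d/90-lvs-dr.conf
#   sysctl --system
arp_sysctl_lines
```

Keeping the settings in their own drop-in file makes them easy to remove if a node stops being a real server.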

Configure the virtual IP address

#Same configuration on rs nodes 1 and 2

[root@localhost keepalived]# cd /etc/sysconfig/network-scripts/

[root@localhost network-scripts]# cp ifcfg-lo ifcfg-lo:0

[root@localhost network-scripts]# vim ifcfg-lo:0

DEVICE=lo:0

IPADDR=192.168.31.200

NETMASK=255.255.255.255

ONBOOT=yes

NAME=loopback:0

#Add a host route through the loopback interface

[root@localhost network-scripts]# route add -host 192.168.31.200/32 dev lo:0

Test

Stop the master director ds02

systemctl stop keepalived.service

The service is still reachable at this point, which shows that the master/backup setup works and the backup has taken over.