Compiling Hive 3.1.2 from source for Spark 3.0.0 compatibility: Hive on Spark with Hadoop 3.x, source and dependency changes, detailed steps

Compiling Hive

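After Cloudera announced that CDH would no longer be free, our company decided to replace the existing big data cluster with open-source components.

While configuring Hive on Spark with Hive 3.1.2 and Spark 3.0.0, I found that the official Hive 3.1.2 release is not compatible with Spark 3.0.0: Hive 3.1.2 is built against Spark 2.3.0, whose prebuilt packages target Hadoop 2.6 or Hadoop 2.7.

So to run recent versions of Hive and Hadoop together, Hive has to be recompiled for Spark 3.0.0.

There are few posts about compiling Hive 3.1.2, and the ones that exist are brief. The build described here changes quite a lot: besides making Hive compatible with Spark 3.0.0, it raises the guava version of Hive 3.1.2 to match Hadoop 3.x so that it works with Hadoop 3.1.4, and it fixes a bug in the official Hive 3.1.2 release.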

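1. Prepare a virtual machine

One virtual machine running CentOS 7 with a graphical desktop.

2. Install the JDK

See the JDK installation section of my post on compiling Hadoop 3.1.4 from source: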

https://blog.csdn.net/weixin_52918377/article/details/116456751

3. Install Maven

See the Maven installation section of the same Hadoop 3.1.4 post:

https://blog.csdn.net/weixin_52918377/article/details/116456751

4. Install IDEA

4.1 Download the IDEA installer

https://www.jetbrains.com/idea/download/#section=linux

4.2 Upload and extract the installer

Upload it to the **/opt/resource** directory.

Extract it into the **/opt/bigdata** directory.

[root@localhost resource]# ls

apache-hive-3.1.2-src.tar.gz ideaIU-2021.1.1.tar.gz

apache-maven-3.6.3-bin.tar.gz jdk-8u212-linux-x64.tar.gz

[root@localhost resource]# tar -zxvf ideaIU-2021.1.1.tar.gz -C /opt/bigdata/

4.3 Start IDEA

Open a terminal and run the following command:

[root@localhost along]# nohup /opt/bigdata/idea-IU-211.7142.45/bin/idea.sh >/dev/null 2>&1 &

4.4 Configure Maven

Once the IDEA window appears, configure Maven in its settings.

5. Download and open the source

5.1 Download the Hive 3.1.2 source

http://archive.apache.org/dist/hive/hive-3.1.2/

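Upload the source archive to the **/opt/resource** directory and extract it into the **/opt/hive-src** directory (create that directory first if it does not exist, because tar -C requires an existing directory).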

[root@localhost resource]# tar -zxvf apache-hive-3.1.2-src.tar.gz -C /opt/hive-src/

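5.2 Open the Hive source in IDEA

Open the source tree in IDEA and let it download the jars the project needs.

Note: after the dependencies are downloaded, IDEA flags many errors in the pom files. These markers alone do not tell you whether anything is actually wrong; only a build with the officially documented packaging command can confirm that.

6. Test the packaging

Official reference: https://cwiki.apache.org/confluence/display/Hive/GettingStarted#GettingStarted-BuildingHivefromSource

6.1 Run the build command

Open a terminal and run the following command to package Hive and check that the build environment works: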

mvn clean package -Pdist -DskipTests -Dmaven.javadoc.skip=true

You may run into the following problem:

Maven packaging error

Failure to find org.pentaho:pentaho-aggdesigner-algorithm:jar:5.1.5-jhyde

Could not find artifact org.pentaho:pentaho-aggdesigner-algorithm:jar in ...

http://maven.aliyun.com/nexus/content/group/public

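6.2 Fix the missing jar (skip if the build did not fail)

pentaho-aggdesigner-algorithm-5.1.5-jhyde.jar is missing.

Attempt 1

Add the following two mirrors (an Aliyun repository and the Spring plugins repository) to Maven's settings.xml: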

<mirror>
  <id>aliyunmaven</id>
  <mirrorOf>*</mirrorOf>
  <name>spring-plugin</name>
  <url>https://maven.aliyun.com/repository/spring-plugin</url>
</mirror>

<mirror>
  <id>repo2</id>
  <name>Mirror from Maven Repo2</name>
  <url>https://repo.spring.io/plugins-release/</url>
  <mirrorOf>central</mirrorOf>
</mirror>

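Restart IDEA and run the packaging command again.

Attempt 2

If that does not help, download the jar manually and place it in the matching directory of the local Maven repository.

Jar download URL: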

https://public.nexus.pentaho.org/repository/proxy-public-3rd-party-release/org/pentaho/pentaho-aggdesigner-algorithm/5.1.5-jhyde/pentaho-aggdesigner-algorithm-5.1.5-jhyde.jar

Restart IDEA and run the packaging command again.

It can take several tries with these two approaches before the jar resolves.
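For the manual route, the jar has to end up where Maven looks for it. The following is a minimal sketch, assuming the default local repository at ~/.m2/repository and a jar already downloaded to /opt/resource (both are assumptions, adjust them to your setup). The helper gav_to_repo_dir is a hypothetical name; it prints the directory Maven expects for a given groupId, artifactId and version. The commented command uses Maven's install:install-file goal to register the jar instead of copying it by hand.

```shell
#!/bin/sh
# Print the local-repository directory Maven uses for an artifact:
# <repo>/<groupId with dots turned into slashes>/<artifactId>/<version>
# gav_to_repo_dir is a hypothetical helper name, not a Maven command.
gav_to_repo_dir() {
  # $1 = groupId, $2 = artifactId, $3 = version, $4 = repository root (optional)
  repo_root="${4:-$HOME/.m2/repository}"
  group_path="$(printf '%s' "$1" | tr '.' '/')"
  printf '%s/%s/%s/%s\n' "$repo_root" "$group_path" "$2" "$3"
}

# Where the missing jar belongs (assumes the default repository location):
gav_to_repo_dir org.pentaho pentaho-aggdesigner-algorithm 5.1.5-jhyde

# Alternatively, let Maven place the downloaded jar itself
# (the /opt/resource path is an assumption):
# mvn install:install-file \
#   -Dfile=/opt/resource/pentaho-aggdesigner-algorithm-5.1.5-jhyde.jar \
#   -DgroupId=org.pentaho -DartifactId=pentaho-aggdesigner-algorithm \
#   -Dversion=5.1.5-jhyde -Dpackaging=jar
```

Letting Maven install the file also generates the repository metadata, which a plain copy does not.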

Output of a successful build:

[INFO] Hive Streaming ..................................... SUCCESS [ 13.823 s]

[INFO] Hive Llap External Client .......................... SUCCESS [ 13.599 s]

[INFO] Hive Shims Aggregator .............................. SUCCESS [ 0.353 s]

[INFO] Hive Kryo Registrator .............................. SUCCESS [ 9.883 s]

[INFO] Hive TestUtils ..................................... SUCCESS [ 0.587 s]

[INFO] Hive Packaging ..................................... SUCCESS [04:22 min]

[INFO] ------------------------------------------------------------------------

[INFO] BUILD SUCCESS

[INFO] ------------------------------------------------------------------------

[INFO] Total time: 33:10 min

[INFO] Finished at: 2021-05-19T01:55:24+08:00

[INFO] ------------------------------------------------------------------------

[root@localhost apache-hive-3.1.2-src]#

The finished packages are in the **/opt/hive-src/apache-hive-3.1.2-src/packaging/target** directory.

7. Raise Hive's guava version

Both Hadoop 3.1.4 and Hive 3.1.2 ship a guava dependency: Hadoop 3.1.4 uses guava-27.0-jre, while Hive 3.1.2 uses guava-19.0. Because Hive loads Hadoop's dependencies at runtime, the two versions conflict.
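To see the conflict on a concrete machine, it can help to list the guava jars that each installation ships. The following is a minimal sketch; guava_versions is a hypothetical helper name, and the two directories in the commented usage are assumptions based on the usual layout of the Hadoop and Hive binary packages.

```shell
#!/bin/sh
# Print the version part of every guava-<version>.jar found in a directory.
# guava_versions is a hypothetical helper name.
guava_versions() {
  for jar in "$1"/guava-*.jar; do
    # Skip the unexpanded pattern when the directory has no guava jar.
    [ -e "$jar" ] || continue
    name="$(basename "$jar" .jar)"
    printf '%s\n' "${name#guava-}"
  done
}

# Example usage (paths are assumptions, adjust to your installation):
# guava_versions /opt/bigdata/hadoop-3.1.4/share/hadoop/common/lib
# guava_versions /opt/bigdata/hive/lib
```

If the two commands print different versions, the installations disagree on guava.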

7.1 Change the pom dependency in the Hive source

On line 147 of pom.xml, change

<guava.version>19.0</guava.version>

to

<guava.version>27.0-jre</guava.version>
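The same edit can be scripted, which is handy when the build is repeated on another machine. The following is a minimal sketch, assuming the property sits on a single line as shown above. set_pom_property is a hypothetical helper, not part of Maven; it rewrites every matching element in the file, so review the result before building.

```shell
#!/bin/sh
# Replace the text of a <name>...</name> property element in a pom file.
# set_pom_property is a hypothetical helper, not part of Maven.
set_pom_property() {
  # $1 = pom file, $2 = property name, $3 = new value
  tmp="$(mktemp)" || return 1
  # Dots in the property name are escaped so they match literally.
  name_re="$(printf '%s' "$2" | sed 's/\./\\./g')"
  sed "s|<${name_re}>[^<]*</${name_re}>|<$2>$3</$2>|" "$1" > "$tmp" && cat "$tmp" > "$1"
  rm -f "$tmp"
}

# Example usage (path taken from the steps above):
# set_pom_property /opt/hive-src/apache-hive-3.1.2-src/pom.xml guava.version 27.0-jre
```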

7.2 Run the packaging command again

mvn clean package -Pdist -DskipTests -Dmaven.javadoc.skip=true

This time the build fails:

[INFO] ------------------------------------------------------------------------

[INFO] BUILD FAILURE

[INFO] ------------------------------------------------------------------------

[INFO] Total time: 09:53 min

[INFO] Finished at: 2021-05-19T02:22:04+08:00

[INFO] ------------------------------------------------------------------------

[ERROR] Failed to execute goal org.apache.maven.plugins:maven-compiler-plugin:3.6.1:compile (default-compile) on project hive-llap-common: Compilation failure: Compilation failure:

[ERROR] /opt/hive-src/apache-hive-3.1.2-src/llap-common/src/java/org/apache/hadoop/hive/llap/AsyncPbRpcProxy.java:[173,16] method addCallback in class com.google.common.util.concurrent.Futures cannot be applied to given types;

[ERROR] required: com.google.common.util.concurrent.ListenableFuture<V>,com.google.common.util.concurrent.FutureCallback<? super V>,java.util.concurrent.Executor

[ERROR] found: com.google.common.util.concurrent.ListenableFuture<U>,org.apache.hadoop.hive.llap.AsyncPbRpcProxy.ResponseCallback<U>

[ERROR] reason: cannot infer type-variable(s) V

[ERROR] (actual and formal argument lists differ in length)

[ERROR] /opt/hive-src/apache-hive-3.1.2-src/llap-common/src/java/org/apache/hadoop/hive/llap/AsyncPbRpcProxy.java:[274,12] method addCallback in class com.google.common.util.concurrent.Futures cannot be applied to given types;

[ERROR] required: com.google.common.util.concurrent.ListenableFuture<V>,com.google.common.util.concurrent.FutureCallback<? super V>,java.util.concurrent.Executor

[ERROR] found: com.google.common.util.concurrent.ListenableFuture<java.lang.Void>,<anonymous com.google.common.util.concurrent.FutureCallback<java.lang.Void>>

[ERROR] reason: cannot infer type-variable(s) V

[ERROR] (actual and formal argument lists differ in length)

[ERROR] -> [Help 1]

[ERROR]

[ERROR] To see the full stack trace of the errors, re-run Maven with the -e switch.

[ERROR] Re-run Maven using the -X switch to enable full debug logging.

[ERROR]

[ERROR] For more information about the errors and possible solutions, please read the following articles:

[ERROR] [Help 1] http://cwiki.apache.org/confluence/display/MAVEN/MojoFailureException

[ERROR]

[ERROR] After correcting the problems, you can resume the build with the command

[ERROR] mvn <args> -rf :hive-llap-common

7.3 Fix the Hive source according to the errors (8 classes)

For the changes, see:

https://github.com/gitlbo/hive/commits/3.1.2

1.druid-handler/src/java/org/apache/hadoop/hive/druid/serde/DruidScanQueryRecordReader.java

2.llap-server/src/java/org/apache/hadoop/hive/llap/daemon/impl/AMReporter.java

3.llap-server/src/java/org/apache/hadoop/hive/llap/daemon/impl/LlapTaskReporter.java

4.llap-server/src/java/org/apache/hadoop/hive/llap/daemon/impl/TaskExecutorService.java

5.ql/src/test/org/apache/hadoop/hive/ql/exec/tez/SampleTezSessionState.java

6.ql/src/java/org/apache/hadoop/hive/ql/exec/tez/WorkloadManager.java

7.llap-tez/src/java/org/apache/hadoop/hive/llap/tezplugins/LlapTaskSchedulerService.java

8.llap-common/src/java/org/apache/hadoop/hive/llap/AsyncPbRpcProxy.java
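The compile errors point at calls to Futures.addCallback that pass two arguments, while the "required" line in the error output shows that guava 27.0-jre expects a third Executor argument. A recursive grep is a quick way to locate the candidate call sites in the classes listed above. The following is a minimal sketch; find_add_callback is a hypothetical helper, and the note about MoreExecutors.directExecutor() describes a typical fix as an assumption, not the content of the referenced commits.

```shell
#!/bin/sh
# List every Java source line that calls Futures.addCallback under a directory.
# find_add_callback is a hypothetical helper name.
find_add_callback() {
  grep -rn --include='*.java' 'Futures\.addCallback' "$1"
}

# Example usage (source root taken from the steps above):
# find_add_callback /opt/hive-src/apache-hive-3.1.2-src
#
# Each two-argument call needs an Executor as a third argument. A commonly
# used choice is guava's MoreExecutors.directExecutor(), for example:
#   Futures.addCallback(future, callback, MoreExecutors.directExecutor());
# (assumption: verify against the referenced commits before applying).
```

Calls that span several lines are reported at the line where they start, and statically imported calls written without the Futures prefix are not matched by this pattern.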

After making all 15 code changes in the classes above, run the packaging command again:

[root@localhost apache-hive-3.1.2-src]# mvn clean package -Pdist -DskipTests -Dmaven.javadoc.skip=true

Wait for the build to succeed:

[INFO] Hive HCatalog Pig Adapter .......................... SUCCESS [ 5.753 s]

[INFO] Hive HCatalog Server Extensions .................... SUCCESS [ 7.925 s]

[INFO] Hive HCatalog Webhcat Java Client .................. SUCCESS [ 5.259 s]

[INFO] Hive HCatalog Webhcat .............................. SUCCESS [ 16.249 s]

[INFO] Hive HCatalog Streaming ............................ SUCCESS [ 6.908 s]

[INFO] Hive HPL/SQL ....................................... SUCCESS [ 10.499 s]

[INFO] Hive Streaming ..................................... SUCCESS [ 4.031 s]

[INFO] Hive Llap External Client .......................... SUCCESS [ 4.874 s]

[INFO] Hive Shims Aggregator .............................. SUCCESS [ 0.157 s]

[INFO] Hive Kryo Registrator .............................. SUCCESS [ 3.802 s]

[INFO] Hive TestUtils ..................................... SUCCESS [ 0.245 s]

[INFO] Hive Packaging ..................................... SUCCESS [01:30 min]

[INFO] ------------------------------------------------------------------------

[INFO] BUILD SUCCESS

[INFO] ------------------------------------------------------------------------

[INFO] Total time: 11:28 min

[INFO] Finished at: 2021-05-19T18:52:13+08:00

8. Integrate Spark 3.0.0

8.1 Edit pom.xml

Around line 201 of pom.xml, set the Spark and Scala versions as follows:

<spark.version>3.0.0</spark.version>

<scala.binary.version>2.12</scala.binary.version>

<scala.version>2.12.11</scala.version>

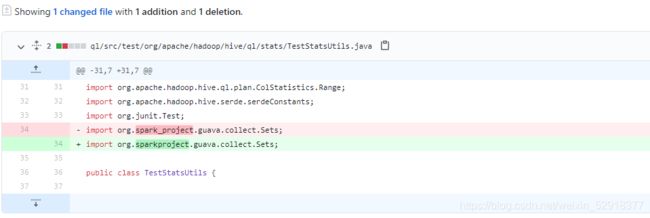
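Before starting another long build, a quick check that all three properties carry the intended values can save a failed run. The following is a minimal sketch; show_spark_props is a hypothetical helper and assumes each property sits on its own line in pom.xml.

```shell
#!/bin/sh
# Print the Spark and Scala version properties found in a pom file.
# show_spark_props is a hypothetical helper name.
show_spark_props() {
  grep -E '<(spark|scala\.binary|scala)\.version>' "$1" | sed 's/^[[:space:]]*//'
}

# Example usage (path taken from the steps above):
# show_spark_props /opt/hive-src/apache-hive-3.1.2-src/pom.xml
```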

8.2 Modify the Hive source (3 classes)

For the changes, see:

https://github.com/gitlbo/hive/commits/3.1.2

1.ql/src/test/org/apache/hadoop/hive/ql/stats/TestStatsUtils.java

2.spark-client/src/main/java/org/apache/hive/spark/client/metrics/ShuffleWriteMetrics.java

3.spark-client/src/main/java/org/apache/hive/spark/counter/SparkCounter.java

8.3 Run the packaging command again

[root@localhost apache-hive-3.1.2-src]# mvn clean package -Pdist -DskipTests -Dmaven.javadoc.skip=true

8.4 The build succeeds

[INFO] Hive HCatalog Core ................................. SUCCESS [ 7.934 s]

[INFO] Hive HCatalog Pig Adapter .......................... SUCCESS [ 5.008 s]

[INFO] Hive HCatalog Server Extensions .................... SUCCESS [ 4.758 s]

[INFO] Hive HCatalog Webhcat Java Client .................. SUCCESS [ 4.559 s]

[INFO] Hive HCatalog Webhcat .............................. SUCCESS [ 13.373 s]

[INFO] Hive HCatalog Streaming ............................ SUCCESS [ 6.729 s]

[INFO] Hive HPL/SQL ....................................... SUCCESS [ 7.727 s]

[INFO] Hive Streaming ..................................... SUCCESS [ 5.462 s]

[INFO] Hive Llap External Client .......................... SUCCESS [ 4.594 s]

[INFO] Hive Shims Aggregator .............................. SUCCESS [ 0.139 s]

[INFO] Hive Kryo Registrator .............................. SUCCESS [ 9.136 s]

[INFO] Hive TestUtils ..................................... SUCCESS [ 0.198 s]

[INFO] Hive Packaging ..................................... SUCCESS [01:18 min]

[INFO] ------------------------------------------------------------------------

[INFO] BUILD SUCCESS

[INFO] ------------------------------------------------------------------------

[INFO] Total time: 10:23 min

[INFO] Finished at: 2021-05-20T02:18:34+08:00

[INFO] ------------------------------------------------------------------------

[root@localhost apache-hive-3.1.2-src]#

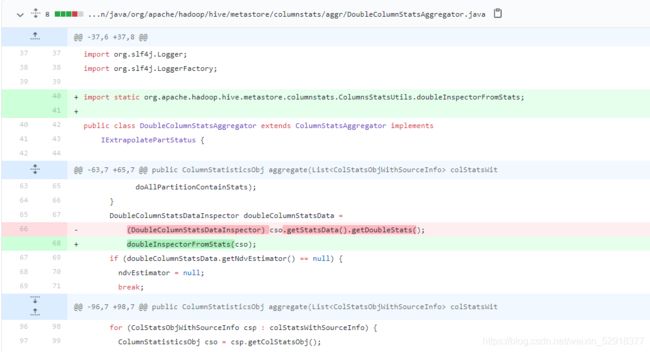

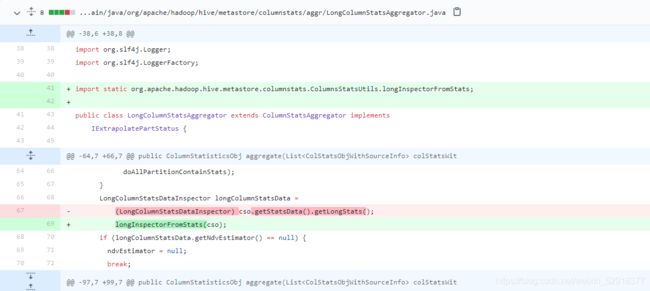

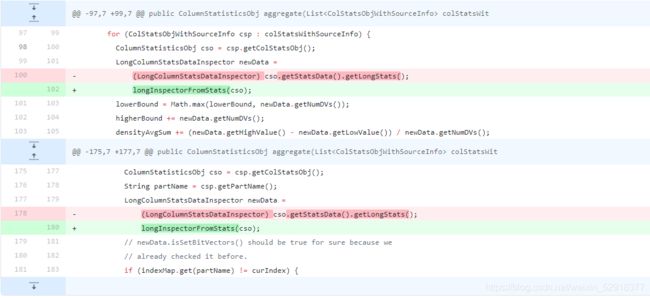

9. Fix the data-insert bug (16 classes changed)

HIVE-19316

For the changes, see:

https://github.com/gitlbo/hive/commits/3.1.2

9.1 Modify the source (16 classes)

1. Create the new class standalone-metastore/src/main/java/org/apache/hadoop/hive/metastore/columnstats/ColumnsStatsUtils.java

/*
 * Licensed to the Apache Software Foundation (ASF) under one
 * or more contributor license agreements. See the NOTICE file
 * distributed with this work for additional information
 * regarding copyright ownership. The ASF licenses this file
 * to you under the Apache License, Version 2.0 (the
 * "License"); you may not use this file except in compliance
 * with the License. You may obtain a copy of the License at
 *
 * http://www.apache.org/licenses/LICENSE-2.0
 *
 * Unless required by applicable law or agreed to in writing, software
 * distributed under the License is distributed on an "AS IS" BASIS,
 * WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.
 * See the License for the specific language governing permissions and
 * limitations under the License.
 */

package org.apache.hadoop.hive.metastore.columnstats;

import org.apache.hadoop.hive.metastore.api.ColumnStatisticsObj;
import org.apache.hadoop.hive.metastore.columnstats.cache.DateColumnStatsDataInspector;
import org.apache.hadoop.hive.metastore.columnstats.cache.DecimalColumnStatsDataInspector;
import org.apache.hadoop.hive.metastore.columnstats.cache.DoubleColumnStatsDataInspector;
import org.apache.hadoop.hive.metastore.columnstats.cache.LongColumnStatsDataInspector;
import org.apache.hadoop.hive.metastore.columnstats.cache.StringColumnStatsDataInspector;

/**
 * Utils class for columnstats package.
 */
public final class ColumnsStatsUtils {

  private ColumnsStatsUtils(){}

  /**
   * Converts to DateColumnStatsDataInspector if it's a DateColumnStatsData.
   * @param cso ColumnStatisticsObj
   * @return DateColumnStatsDataInspector
   */
  public static DateColumnStatsDataInspector dateInspectorFromStats(ColumnStatisticsObj cso) {
    DateColumnStatsDataInspector dateColumnStats;
    if (cso.getStatsData().getDateStats() instanceof DateColumnStatsDataInspector) {
      dateColumnStats =
          (DateColumnStatsDataInspector)(cso.getStatsData().getDateStats());
    } else {
      dateColumnStats = new DateColumnStatsDataInspector(cso.getStatsData().getDateStats());
    }
    return dateColumnStats;
  }

  /**
   * Converts to StringColumnStatsDataInspector
   * if it's a StringColumnStatsData.
   * @param cso ColumnStatisticsObj
   * @return StringColumnStatsDataInspector
   */
  public static StringColumnStatsDataInspector stringInspectorFromStats(ColumnStatisticsObj cso) {
    StringColumnStatsDataInspector columnStats;
    if (cso.getStatsData().getStringStats() instanceof StringColumnStatsDataInspector) {
      columnStats =
          (StringColumnStatsDataInspector)(cso.getStatsData().getStringStats());
    } else {
      columnStats = new StringColumnStatsDataInspector(cso.getStatsData().getStringStats());
    }
    return columnStats;
  }

  /**
   * Converts to LongColumnStatsDataInspector if it's a LongColumnStatsData.
   * @param cso ColumnStatisticsObj
   * @return LongColumnStatsDataInspector
   */
  public static LongColumnStatsDataInspector longInspectorFromStats(ColumnStatisticsObj cso) {
    LongColumnStatsDataInspector columnStats;
    if (cso.getStatsData().getLongStats() instanceof LongColumnStatsDataInspector) {
      columnStats =
          (LongColumnStatsDataInspector)(cso.getStatsData().getLongStats());
    } else {
      columnStats = new LongColumnStatsDataInspector(cso.getStatsData().getLongStats());
    }
    return columnStats;
  }

  /**
   * Converts to DoubleColumnStatsDataInspector
   * if it's a DoubleColumnStatsData.
   * @param cso ColumnStatisticsObj
   * @return DoubleColumnStatsDataInspector
   */
  public static DoubleColumnStatsDataInspector doubleInspectorFromStats(ColumnStatisticsObj cso) {
    DoubleColumnStatsDataInspector columnStats;
    if (cso.getStatsData().getDoubleStats() instanceof DoubleColumnStatsDataInspector) {
      columnStats =
          (DoubleColumnStatsDataInspector)(cso.getStatsData().getDoubleStats());
    } else {
      columnStats = new DoubleColumnStatsDataInspector(cso.getStatsData().getDoubleStats());
    }
    return columnStats;
  }

  /**
   * Converts to DecimalColumnStatsDataInspector
   * if it's a DecimalColumnStatsData.
   * @param cso ColumnStatisticsObj
   * @return DecimalColumnStatsDataInspector
   */
  public static DecimalColumnStatsDataInspector decimalInspectorFromStats(ColumnStatisticsObj cso) {
    DecimalColumnStatsDataInspector columnStats;
    if (cso.getStatsData().getDecimalStats() instanceof DecimalColumnStatsDataInspector) {
      columnStats =
          (DecimalColumnStatsDataInspector)(cso.getStatsData().getDecimalStats());
    } else {
      columnStats = new DecimalColumnStatsDataInspector(cso.getStatsData().getDecimalStats());
    }
    return columnStats;
  }
}

2.standalone-metastore/src/main/java/org/apache/hadoop/hive/metastore/columnstats/aggr/DateColumnStatsAggregator.java

3.standalone-metastore/src/main/java/org/apache/hadoop/hive/metastore/columnstats/aggr/DecimalColumnStatsAggregator.java

4.standalone-metastore/src/main/java/org/apache/hadoop/hive/metastore/columnstats/aggr/DoubleColumnStatsAggregator.java

5.standalone-metastore/src/main/java/org/apache/hadoop/hive/metastore/columnstats/aggr/LongColumnStatsAggregator.java

6.standalone-metastore/src/main/java/org/apache/hadoop/hive/metastore/columnstats/aggr/StringColumnStatsAggregator.java

7.standalone-metastore/src/main/java/org/apache/hadoop/hive/metastore/columnstats/cache/DateColumnStatsDataInspector.java

8.standalone-metastore/src/main/java/org/apache/hadoop/hive/metastore/columnstats/cache/DecimalColumnStatsDataInspector.java

9.standalone-metastore/src/main/java/org/apache/hadoop/hive/metastore/columnstats/cache/DoubleColumnStatsDataInspector.java

10.standalone-metastore/src/main/java/org/apache/hadoop/hive/metastore/columnstats/cache/LongColumnStatsDataInspector.java

11.standalone-metastore/src/main/java/org/apache/hadoop/hive/metastore/columnstats/cache/StringColumnStatsDataInspector.java

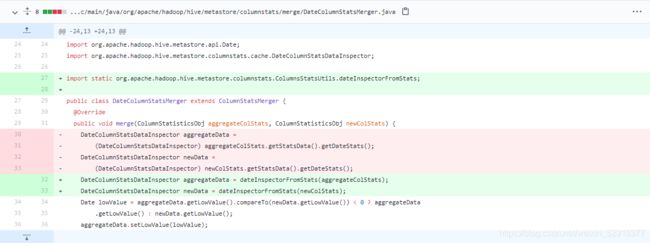

12.standalone-metastore/src/main/java/org/apache/hadoop/hive/metastore/columnstats/merge/DateColumnStatsMerger.java

13.standalone-metastore/src/main/java/org/apache/hadoop/hive/metastore/columnstats/merge/DecimalColumnStatsMerger.java

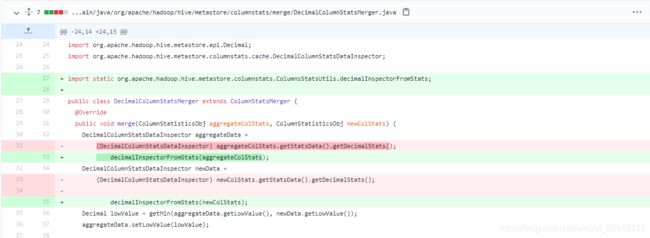

14.standalone-metastore/src/main/java/org/apache/hadoop/hive/metastore/columnstats/merge/DoubleColumnStatsMerger.java

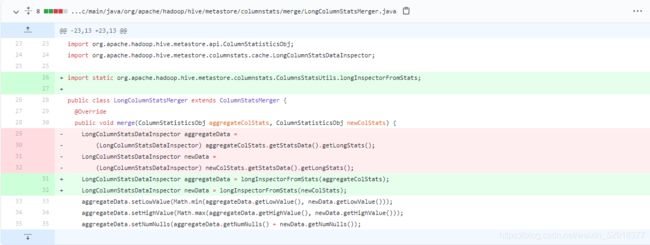

15.standalone-metastore/src/main/java/org/apache/hadoop/hive/metastore/columnstats/merge/LongColumnStatsMerger.java

16.standalone-metastore/src/main/java/org/apache/hadoop/hive/metastore/columnstats/merge/StringColumnStatsMerger.java

9.2 Recompile

[root@localhost apache-hive-3.1.2-src]# mvn clean package -Pdist -DskipTests -Dmaven.javadoc.skip=true

Here is the final build output:

[INFO] Reactor Summary for Hive 3.1.2:

[INFO]

[INFO] Hive Upgrade Acid .................................. SUCCESS [ 18.808 s]

[INFO] Hive ............................................... SUCCESS [ 0.864 s]

[INFO] Hive Classifications ............................... SUCCESS [ 1.717 s]

[INFO] Hive Shims Common .................................. SUCCESS [ 5.278 s]

[INFO] Hive Shims 0.23 .................................... SUCCESS [ 7.141 s]

[INFO] Hive Shims Scheduler ............................... SUCCESS [ 3.793 s]

[INFO] Hive Shims ......................................... SUCCESS [ 2.450 s]

[INFO] Hive Common ........................................ SUCCESS [ 16.272 s]

[INFO] Hive Service RPC ................................... SUCCESS [ 7.408 s]

[INFO] Hive Serde ......................................... SUCCESS [ 12.279 s]

[INFO] Hive Standalone Metastore .......................... SUCCESS [01:16 min]

[INFO] Hive Metastore ..................................... SUCCESS [ 8.464 s]

[INFO] Hive Vector-Code-Gen Utilities ..................... SUCCESS [ 0.887 s]

[INFO] Hive Llap Common ................................... SUCCESS [ 9.334 s]

[INFO] Hive Llap Client ................................... SUCCESS [ 6.968 s]

[INFO] Hive Llap Tez ...................................... SUCCESS [ 7.052 s]

[INFO] Hive Spark Remote Client ........................... SUCCESS [ 8.889 s]

[INFO] Hive Query Language ................................ SUCCESS [02:16 min]

[INFO] Hive Llap Server ................................... SUCCESS [ 16.585 s]

[INFO] Hive Service ....................................... SUCCESS [ 16.059 s]

[INFO] Hive Accumulo Handler .............................. SUCCESS [ 12.643 s]

[INFO] Hive JDBC .......................................... SUCCESS [ 32.533 s]

[INFO] Hive Beeline ....................................... SUCCESS [ 7.864 s]

[INFO] Hive CLI ........................................... SUCCESS [ 5.747 s]

[INFO] Hive Contrib ....................................... SUCCESS [ 3.981 s]

[INFO] Hive Druid Handler ................................. SUCCESS [ 25.850 s]

[INFO] Hive HBase Handler ................................. SUCCESS [ 8.885 s]

[INFO] Hive JDBC Handler .................................. SUCCESS [ 4.180 s]

[INFO] Hive HCatalog ...................................... SUCCESS [ 0.972 s]

[INFO] Hive HCatalog Core ................................. SUCCESS [ 8.249 s]

[INFO] Hive HCatalog Pig Adapter .......................... SUCCESS [ 5.723 s]

[INFO] Hive HCatalog Server Extensions .................... SUCCESS [ 6.205 s]

[INFO] Hive HCatalog Webhcat Java Client .................. SUCCESS [ 6.694 s]

[INFO] Hive HCatalog Webhcat .............................. SUCCESS [ 12.961 s]

[INFO] Hive HCatalog Streaming ............................ SUCCESS [ 8.863 s]

[INFO] Hive HPL/SQL ....................................... SUCCESS [ 8.735 s]

[INFO] Hive Streaming ..................................... SUCCESS [ 4.532 s]

[INFO] Hive Llap External Client .......................... SUCCESS [ 4.471 s]

[INFO] Hive Shims Aggregator .............................. SUCCESS [ 0.230 s]

[INFO] Hive Kryo Registrator .............................. SUCCESS [ 3.398 s]

[INFO] Hive TestUtils ..................................... SUCCESS [ 0.322 s]

[INFO] Hive Packaging ..................................... SUCCESS [01:33 min]

[INFO] ------------------------------------------------------------------------

[INFO] BUILD SUCCESS

[INFO] ------------------------------------------------------------------------

[INFO] Total time: 10:30 min

[INFO] Finished at: 2021-05-20T18:35:34+08:00

[INFO] ------------------------------------------------------------------------

[root@localhost apache-hive-3.1.2-src]#

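10. Upgrade an existing Hive installation (optional)

If you have not installed Hive from the official package, skip this section and install the compiled Hive directly. I had already installed Hive once from the official package, so here I upgrade that installation with the newly compiled one.

Copy the compiled Hive package to the target server, hdp14: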

[root@localhost target]# pwd

/opt/hive-src/apache-hive-3.1.2-src/packaging/target

[root@localhost target]# ls

antrun apache-hive-3.1.2-src.tar.gz tmp

apache-hive-3.1.2-bin archive-tmp warehouse

apache-hive-3.1.2-bin.tar.gz maven-shared-archive-resources

apache-hive-3.1.2-jdbc.jar testconf

[root@localhost target]# scp apache-hive-3.1.2-bin.tar.gz <user>@hdp14:/opt/resource

Connect to hdp14 and extract the package into the installation directory **/opt/bigdata**:

[along@hdp14 resource]$ tar -zxvf apache-hive-3.1.2-bin.tar.gz -C /opt/bigdata/

Rename the old hive directory:

[along@hdp14 bigdata]$ mv hive hivebak

Rename the new hive directory:

[along@hdp14 bigdata]$ mv apache-hive-3.1.2-bin/ hive

Copy the configuration files of the previous installation into the new hive:

[along@hdp14 hive]$ cp /opt/bigdata/hivebak/conf/hive-site.xml conf/

[along@hdp14 hive]$ cp /opt/bigdata/hivebak/conf/spark-defaults.conf conf/

[along@hdp14 hive]$ cp /opt/bigdata/hivebak/conf/hive-log4j2.properties conf/

Copy the MySQL driver:

[along@hdp14 hive]$ cp /opt/bigdata/hivebak/lib/mysql-connector-java-5.1.48.jar lib/

Copy the startup script:

[along@hdp14 hive]$ cp /opt/bigdata/hivebak/bin/hiveservices.sh bin/hiveservices.sh

11. Test Hive on Spark

11.1 Start the environment

Start ZooKeeper, the Hadoop cluster, and Hive:

[along@hdp14 hive]$ zk.sh start

[along@hdp14 hive]$ hdp.sh start

[along@hdp14 hive]$ bin/hiveservices.sh start

Start the client:

[along@hdp14 hive]$ bin/hive

11.2 Test inserting data

Create a table:

hive (default)> create table student(id bigint, name string);

Insert a row:

hive (default)> insert into table student values(1,'along');

Result:

hive (default)> insert into table student values(1,'along');

Query ID = along_20210520131520_268ee359-1a6d-4605-96d2-bcf39a5ea87a

Total jobs = 1

Launching Job 1 out of 1

In order to change the average load for a reducer (in bytes):

set hive.exec.reducers.bytes.per.reducer=<number>

In order to limit the maximum number of reducers:

set hive.exec.reducers.max=<number>

In order to set a constant number of reducers:

set mapreduce.job.reduces=<number>

Running with YARN Application = application_1621480971754_0006

Kill Command = /opt/bigdata/hadoop-3.1.4/bin/yarn application -kill application_1621480971754_0006

Hive on Spark Session Web UI URL: http://hdp17:42418

Query Hive on Spark job[0] stages: [0, 1]

Spark job[0] status = RUNNING

--------------------------------------------------------------------------------------

STAGES ATTEMPT STATUS TOTAL COMPLETED RUNNING PENDING FAILED

--------------------------------------------------------------------------------------

Stage-0 ........ 0 FINISHED 1 1 0 0 0

Stage-1 ........ 0 FINISHED 1 1 0 0 0

--------------------------------------------------------------------------------------

STAGES: 02/02 [==========================>>] 100% ELAPSED TIME: 44.13 s

--------------------------------------------------------------------------------------

Spark job[0] finished successfully in 44.13 second(s)

WARNING: Spark Job[0] Spent 12% (3400 ms / 28795 ms) of task time in GC

Loading data to table default.student

OK

col1 col2

Time taken: 136.278 seconds

hive (default)>
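When the test is run non-interactively, the success line in the console output can be checked by a script. The following is a minimal sketch, assuming the session output was captured to a file, for instance by running the statement through bin/hive -e and redirecting both output streams. spark_job_succeeded is a hypothetical helper that only looks for the "finished successfully" line shown above, and the log path is an assumption.

```shell
#!/bin/sh
# Succeed if a captured Hive console log reports a finished Spark job.
# spark_job_succeeded is a hypothetical helper name.
spark_job_succeeded() {
  grep -q 'Spark job\[[0-9][0-9]*\] finished successfully' "$1"
}

# Example usage (the log path is an assumption):
# bin/hive -e "insert into table student values(1,'along');" > /tmp/insert.log 2>&1
# spark_job_succeeded /tmp/insert.log && echo "Hive on Spark insert OK"
```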