Online Medical Consultation: Business Data Collection

Series Index

Online Medical Consultation: Business Data Collection

Online Medical Consultation: Data Warehouse Synchronization

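Table of Contents

- Series Index
- Preface
- I. Environment Setup
  - 1. DataX
- II. Full Synchronization
  - 1. Generating the DataX Configuration Files
  - 2. Start Hadoop and Run a Test
  - 3. Run the Full Synchronization
- III. Incremental Synchronization
  - 1. Configure Flume
  - 2. Write the Flume Interceptor
  - 3. Test the Channel
  - 4. Modify the Maxwell Parameters
  - 5. Write the Flume Start/Stop Script
  - 6. Create the Incremental Synchronization Script
- Summary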

Preface

The previous post covered data collection. This one covers synchronizing the data from MySQL to HDFS.

I. Environment Setup

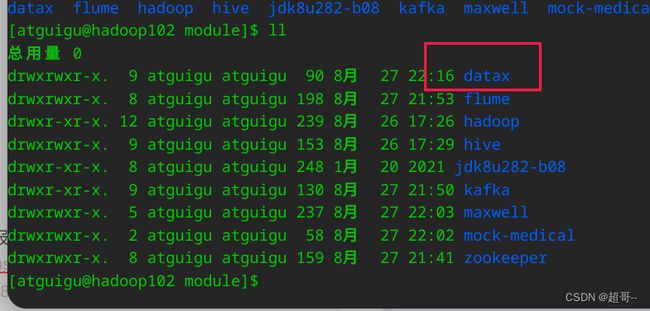

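1. DataX

Upload the archive and extract it.

The archive included in the Atguigu (尚硅谷) course materials throws an error during extraction, so I tracked down a working copy myself.

DataX download

Then run the self-check job that ships with DataX to verify the installation: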

python /opt/module/datax/bin/datax.py /opt/module/datax/job/job.json

二、全量同步

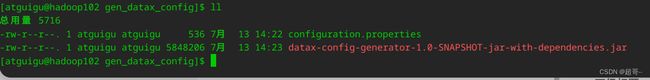

1.DataX配置文件生成

mkdir /opt/module/gen_datax_config

cd /opt/module/gen_datax_config

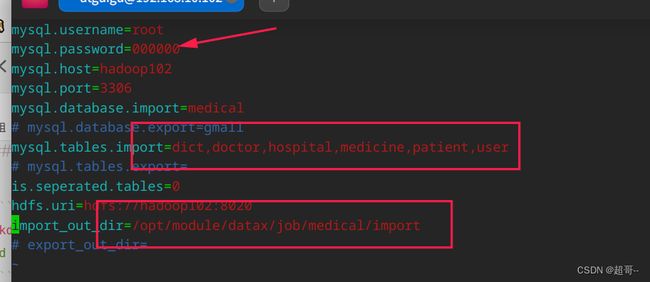

mysql.username=root

mysql.password=000000

mysql.host=hadoop102

mysql.port=3306

mysql.database.import=medical

# mysql.database.export=

mysql.tables.import=dict,doctor,hospital,medicine,patient,user

# mysql.tables.export=

is.seperated.tables=0

hdfs.uri=hdfs://hadoop102:8020

import_out_dir=/opt/module/datax/job/medical/import

# export_out_dir=

Run the generator:

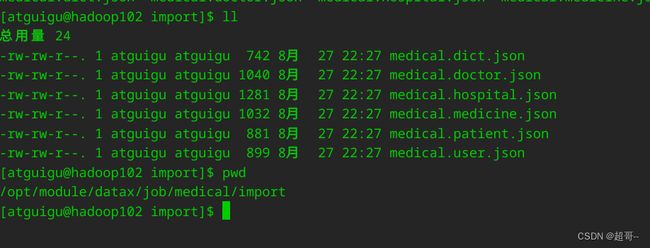

java -jar datax-config-generator-1.0-SNAPSHOT-jar-with-dependencies.jar
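With the settings above, the generator should write one DataX job file per table listed in `mysql.tables.import`. Judging by the file used in the test in the next step (`medical.user.json`), the files appear to be named `<database>.<table>.json` under `import_out_dir`. The sketch below only prints the paths one would expect to find, which makes a missing file easy to spot. The function name `expected_job_files` is hypothetical and not part of the generator.

```shell
#!/bin/bash
# Hypothetical helper: print the job files the generator is expected to create.
# Assumes the <database>.<table>.json naming seen in this article.
# $1 = output directory, $2 = database name, $3 = comma-separated table list
expected_job_files() {
    local out_dir="$1" database="$2" tables="$3"
    local IFS=','
    local tab
    # With IFS set to a comma, the unquoted expansion splits on commas.
    for tab in $tables; do
        echo "$out_dir/$database.$tab.json"
    done
}

# Example call (prints six paths, one per line):
# expected_job_files /opt/module/datax/job/medical/import medical dict,doctor,hospital,medicine,patient,user
```

Comparing this list against `ls /opt/module/datax/job/medical/import` is a quick way to confirm that every table got a job file.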

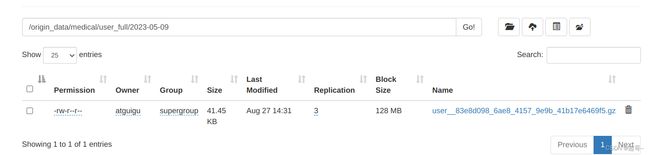

2. Start Hadoop and Run a Test

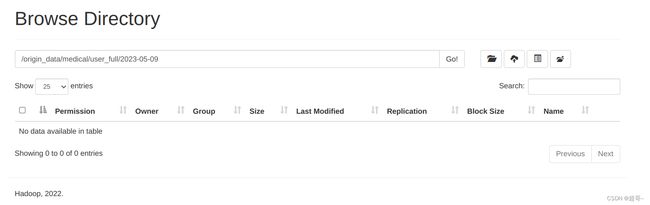

hadoop fs -mkdir -p /origin_data/medical/user_full/2023-05-09

python /opt/module/datax/bin/datax.py -p"-Dtargetdir=/origin_data/medical/user_full/2023-05-09" /opt/module/datax/job/medical/import/medical.user.json
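The `-p"-Dtargetdir=..."` option hands DataX a value for the `targetdir` variable, which the generated job file presumably references as `${targetdir}`. The directories in this article follow the pattern `/origin_data/medical/<table>_full/<date>`. A minimal sketch of that convention, using a hypothetical helper name `full_target_dir`:

```shell
#!/bin/bash
# Hypothetical helper: build the HDFS target directory for a full-sync table.
# $1 = table name, $2 = date in YYYY-MM-DD form
full_target_dir() {
    local table="$1" do_date="$2"
    echo "/origin_data/medical/${table}_full/${do_date}"
}

# Example call:
# full_target_dir user 2023-05-09
```

Building the path in one place keeps the `hadoop fs -mkdir` call and the DataX call from drifting apart.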

Once the test succeeds, write the full synchronization script.

vim ~/bin/medical_mysql_to_hdfs_full.sh

#!/bin/bash

DATAX_HOME=/opt/module/datax

DATAX_DATA=/opt/module/datax/job/medical

# Clear stale data from the target directory, then recreate it

handle_targetdir() {

hadoop fs -rm -r "$1" >/dev/null 2>&1

hadoop fs -mkdir -p "$1"

}

# Synchronize one table

import_data() {

local datax_config=$1

local target_dir=$2

handle_targetdir "$target_dir"

echo "Processing $1"

python $DATAX_HOME/bin/datax.py -p"-Dtargetdir=$target_dir" $datax_config >/tmp/datax_run.log 2>&1

if [ $? -ne 0 ]

then

echo "Processing failed; the log follows:"

cat /tmp/datax_run.log

fi

}

# Table name, passed as the first argument

tab=$1

# Use the date passed as the second argument if there is one; otherwise default to yesterday

if [ -n "$2" ] ;then

do_date=$2

else

do_date=$(date -d "-1 day" +%F)

fi

case ${tab} in

dict|doctor|hospital|medicine|patient|user)

import_data $DATAX_DATA/import/medical.${tab}.json /origin_data/medical/${tab}_full/$do_date

;;

"all")

for tmp in dict doctor hospital medicine patient user

do

import_data $DATAX_DATA/import/medical.${tmp}.json /origin_data/medical/${tmp}_full/$do_date

done

;;

esac

Make the script executable.

chmod +x ~/bin/medical_mysql_to_hdfs_full.sh
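The script accepts whatever string is passed as the date and uses it directly in the HDFS path, so a typo such as `20230509` would silently create an oddly named directory. The sketch below shows one way to keep the same default (yesterday) while rejecting malformed dates. The function name `resolve_do_date` is hypothetical, and the default branch assumes GNU `date`, as the script itself does.

```shell
#!/bin/bash
# Hypothetical helper: return the given date, or yesterday when none is given.
# Rejects anything that is not in YYYY-MM-DD form.
resolve_do_date() {
    local input="${1:-}"
    if [ -z "$input" ]; then
        # Same default as the script: the day before today (GNU date syntax).
        date -d "-1 day" +%F
        return 0
    fi
    case "$input" in
        [0-9][0-9][0-9][0-9]-[0-9][0-9]-[0-9][0-9])
            echo "$input"
            ;;
        *)
            echo "invalid date: $input (expected YYYY-MM-DD)" >&2
            return 1
            ;;
    esac
}

# Example use inside a script:
# do_date=$(resolve_do_date "$2") || exit 1
```

The pattern only checks the shape of the string, not whether the day exists in the calendar.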

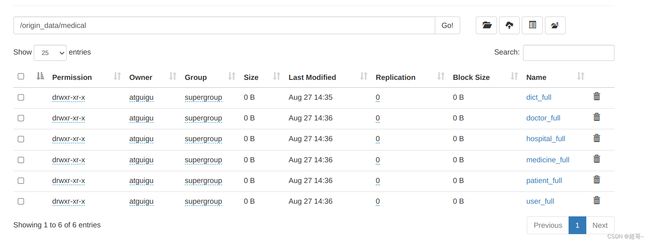

3. Run the Full Synchronization

medical_mysql_to_hdfs_full.sh all 2023-05-09

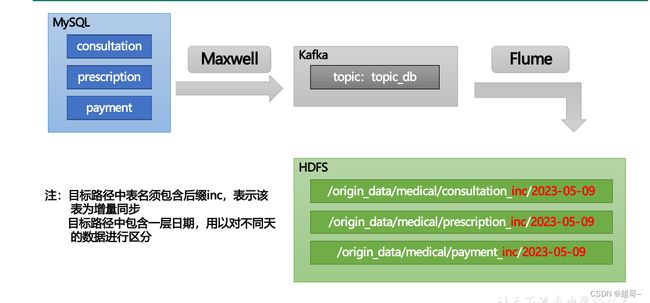

III. Incremental Synchronization

1. Configure Flume

Do this configuration on hadoop104.

vim medical_kafka_to_hdfs.conf

a1.sources = r1

a1.channels = c1

a1.sinks = k1

a1.sources.r1.type = org.apache.flume.source.kafka.KafkaSource

a1.sources.r1.batchSize = 5000

a1.sources.r1.batchDurationMillis = 2000

a1.sources.r1.kafka.bootstrap.servers = hadoop102:9092,hadoop103:9092,hadoop104:9092

a1.sources.r1.kafka.topics = topic_db

a1.sources.r1.kafka.consumer.group.id = medical-flume

a1.sources.r1.interceptors = i1

a1.sources.r1.interceptors.i1.type = com.atguigu.medical.flume.interceptors.TimestampAndTableNameInterceptor$Builder

a1.channels.c1.type = file

a1.channels.c1.checkpointDir = /opt/module/flume/checkpoint/medical

a1.channels.c1.dataDirs = /opt/module/flume/data/medical

a1.channels.c1.maxFileSize = 2146435071

a1.channels.c1.capacity = 1000000

a1.channels.c1.keep-alive = 6

## sink1

a1.sinks.k1.type = hdfs

a1.sinks.k1.hdfs.path = /origin_data/medical/%{tableName}_inc/%Y-%m-%d

a1.sinks.k1.hdfs.filePrefix = db

a1.sinks.k1.hdfs.round = false

a1.sinks.k1.hdfs.rollInterval = 10

a1.sinks.k1.hdfs.rollSize = 134217728

a1.sinks.k1.hdfs.rollCount = 0

a1.sinks.k1.hdfs.fileType = CompressedStream

a1.sinks.k1.hdfs.codeC = gzip

## Wiring: bind the source and the sink to the channel

a1.sources.r1.channels = c1

a1.sinks.k1.channel = c1
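In `hdfs.path`, the HDFS sink fills `%{tableName}` from the event header of that name and resolves `%Y-%m-%d` from the `timestamp` header (epoch milliseconds), using the local time zone unless `hdfs.timeZone` says otherwise. To make that concrete, here is a small sketch that computes the directory an event would land in. The helper name `inc_target_dir` is hypothetical, and it relies on GNU `date`.

```shell
#!/bin/bash
# Hypothetical helper: show which HDFS directory an event would be written to.
# $1 = value of the tableName header
# $2 = value of the timestamp header, in milliseconds since the epoch
inc_target_dir() {
    local table="$1" ts_ms="$2"
    local day
    # Convert milliseconds to seconds, then format in the current time zone.
    day=$(date -d "@$((ts_ms / 1000))" +%F)
    echo "/origin_data/medical/${table}_inc/${day}"
}

# Example call:
# inc_target_dir user 1683633600000
```

Because the day is derived from the event's own timestamp rather than the arrival time, records written shortly before midnight still go to the directory of the day they were produced.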

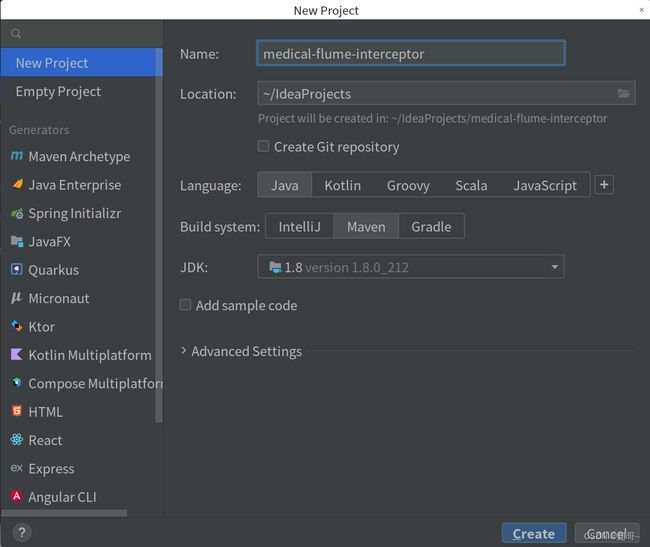

2. Write the Flume Interceptor

Create the project:

medical-flume-interceptor

Write pom.xml:

<dependencies>

<dependency>

<groupId>org.apache.flume</groupId>

<artifactId>flume-ng-core</artifactId>

<version>1.10.1</version>

<scope>provided</scope>

</dependency>

<dependency>

<groupId>com.alibaba</groupId>

<artifactId>fastjson</artifactId>

<version>1.2.68</version>

</dependency>

</dependencies>

<build>

<plugins>

<plugin>

<groupId>org.apache.maven.plugins</groupId>

<artifactId>maven-assembly-plugin</artifactId>

<version>3.0.0</version>

<configuration>

<descriptorRefs>

<descriptorRef>jar-with-dependencies</descriptorRef>

</descriptorRefs>

</configuration>

<executions>

<execution>

<id>make-assembly</id>

<phase>package</phase>

<goals>

<goal>single</goal>

</goals>

</execution>

</executions>

</plugin>

</plugins>

</build>

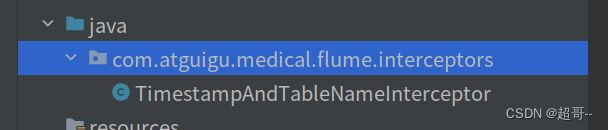

Create the package:

com.atguigu.medical.flume.interceptors

Create the class:

package com.atguigu.medical.flume.interceptors;

import com.alibaba.fastjson.JSONObject;

import org.apache.flume.Context;

import org.apache.flume.Event;

import org.apache.flume.interceptor.Interceptor;

import java.nio.charset.StandardCharsets;

import java.util.List;

import java.util.Map;

public class TimestampAndTableNameInterceptor implements Interceptor {

@Override

public void initialize() {

}

@Override

public Event intercept(Event event) {

Map<String, String> headers = event.getHeaders();

String log = new String(event.getBody(), StandardCharsets.UTF_8);

JSONObject jsonObject = JSONObject.parseObject(log);

Long ts = jsonObject.getLong("ts");

String tableName = jsonObject.getString("table");

// Maxwell reports ts in seconds; the Flume timestamp header expects milliseconds

headers.put("timestamp", String.valueOf(ts * 1000));

headers.put("tableName", tableName);

return event;

}

@Override

public List<Event> intercept(List<Event> events) {

for (Event event : events) {

intercept(event);

}

return events;

}

@Override

public void close() {

}

public static class Builder implements Interceptor.Builder {

@Override

public Interceptor build() {

return new TimestampAndTableNameInterceptor();

}

@Override

public void configure(Context context) {

}

}

}
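The interceptor reads two fields from each Maxwell record, `table` and `ts`, and copies them into the `tableName` and `timestamp` headers, multiplying `ts` by 1000 because Maxwell reports seconds. For checking a sample record by hand, the same extraction can be approximated in the shell. This is a rough sketch for inspection only: `maxwell_headers` is a hypothetical name, and the text matching assumes compact JSON with no spaces around the colons.

```shell
#!/bin/bash
# Hypothetical helper: print the two headers the interceptor would set
# for one Maxwell JSON record passed as $1.
# Assumes compact JSON ("key":value with no spaces around the colon).
maxwell_headers() {
    local record="$1"
    local table ts
    # Take the first "table" and "ts" keys, which sit at the top level
    # of the record before the nested "data" object.
    table=$(printf '%s\n' "$record" | grep -o '"table":"[^"]*"' | head -n 1 | cut -d'"' -f4)
    ts=$(printf '%s\n' "$record" | grep -o '"ts":[0-9]*' | head -n 1 | cut -d: -f2)
    if [ -z "$table" ] || [ -z "$ts" ]; then
        echo "record has no table or ts field" >&2
        return 1
    fi
    # Seconds to milliseconds, as in the Java code.
    echo "tableName=$table timestamp=$((ts * 1000))"
}

# Example call:
# maxwell_headers '{"database":"medical","table":"user","type":"insert","ts":1683633600,"data":{"id":1}}'
```

The guard for missing fields has no counterpart in the Java class above, which would throw if a record lacked `ts`.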

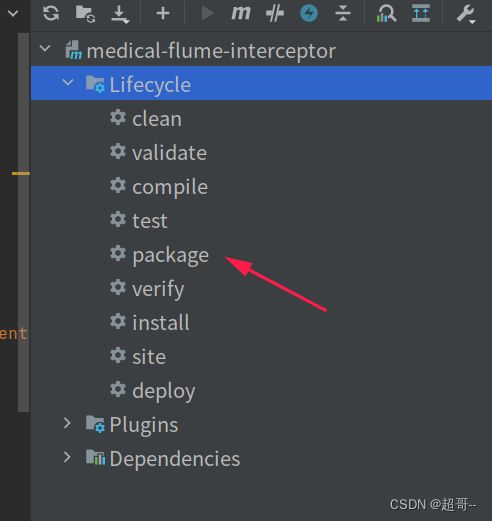

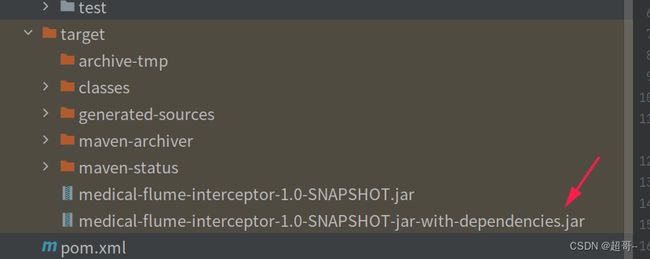

Build the jar in IDEA.

Upload the jar to hadoop104

and place it in /opt/module/flume/lib.

3. Test the Channel

Start Zookeeper, Kafka, and Maxwell.

Start Flume on hadoop104:

bin/flume-ng agent -n a1 -c conf/ -f job/medical_kafka_to_hdfs.conf -Dflume.root.logger=INFO,console

Then generate mock data again:

medical_mock.sh 1

4. Modify the Maxwell Parameters

vim /opt/module/maxwell/config.properties

5. Write the Flume Start/Stop Script

vim medical-f1.sh

#!/bin/bash

case $1 in

"start")

echo " --------Starting business-data Flume on hadoop104-------"

ssh hadoop104 "nohup /opt/module/flume/bin/flume-ng agent -n a1 -c /opt/module/flume/conf -f /opt/module/flume/job/medical_kafka_to_hdfs.conf > /opt/module/flume/medical-f1.log 2>&1 &"

;;

"stop")

echo " --------Stopping business-data Flume on hadoop104-------"

ssh hadoop104 "ps -ef | grep medical_kafka_to_hdfs | grep -v grep |awk '{print \$2}' | xargs -n1 kill"

;;

esac

chmod +x medical-f1.sh
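The stop branch finds the agent by searching the process list for the job file name, drops the `grep` process itself, and passes the second column (the PID) to `kill`. The filter can be tried on its own, which also gives a simple status check. The function name `flume_pids` is hypothetical.

```shell
#!/bin/bash
# Hypothetical helper: read `ps -ef` output on stdin and print the PIDs
# of processes whose command line mentions the Flume job file.
flume_pids() {
    # grep -v grep drops the searching grep process, which would otherwise
    # match because its own command line contains the pattern.
    grep medical_kafka_to_hdfs | grep -v grep | awk '{print $2}'
}

# Example call:
# ssh hadoop104 "ps -ef" | flume_pids
```

Empty output means no agent is running. In that case the `xargs -n1 kill` in the script still invokes `kill` once without arguments under GNU xargs; adding `-r` to `xargs` should avoid the resulting usage message.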

6. Create the Incremental Synchronization Script

vim medical_mysql_to_kafka_inc_init.sh

#!/bin/bash

# Initializes all incremental tables; it only needs to be run once

MAXWELL_HOME=/opt/module/maxwell

import_data() {

for tab in "$@"

do

$MAXWELL_HOME/bin/maxwell-bootstrap --database medical --table $tab --config $MAXWELL_HOME/config.properties

done

}

case $1 in

consultation | payment | prescription | prescription_detail | user | patient | doctor)

import_data $1

;;

"all")

import_data consultation payment prescription prescription_detail user patient doctor

;;

esac

chmod +x medical_mysql_to_kafka_inc_init.sh

Delete the earlier incremental data and test again.

hadoop fs -ls /origin_data/medical | grep _inc | awk '{print $8}' | xargs hadoop fs -rm -r -f

medical_mysql_to_kafka_inc_init.sh all
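The cleanup pipeline above takes the eighth column of each `hadoop fs -ls` line (the path) for every line containing `_inc` and feeds it to `hadoop fs -rm -r -f`. Since that deletes data, it can be worth previewing the list first. A minimal sketch of a slightly stricter filter, under the hypothetical name `list_inc_dirs`, which only keeps paths that end in `_inc`:

```shell
#!/bin/bash
# Hypothetical helper: read `hadoop fs -ls` output on stdin and print
# only the paths (8th column) that end in _inc.
list_inc_dirs() {
    awk '$8 ~ /_inc$/ {print $8}'
}

# Example call (preview only, nothing is deleted):
# hadoop fs -ls /origin_data/medical | list_inc_dirs
```

The leading `Found N items` line has fewer than eight columns, so it is skipped without a separate `grep`.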

If incremental data shows up after this run, the channel has been set up correctly.

Summary

That wraps up the data synchronization.