HCIP-OpenStack Components: Neutron

Neutron (OVS and OVN)

OVS

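OVS (Open vSwitch) is a virtual switch that is managed according to the SDN (Software Defined Network) architecture.

OVS introduction (reference): https://mp.weixin.qq.com/s?__biz=MzAwMDQyOTcwOA==&mid=2247485088&idx=1&sn=f7eb3126eaa7d8c2a5056332694aea8b&chksm=9ae85d43ad9fd455f5b293c7a7fa60847b14eea18030105f2f330ce5076e7ec44eca7d30abad&cur_album_id=2470011981178322946&scene=189#wechat_redirect

OVS consists of three components: the datapath, vswitchd, and ovsdb.

datapath (openvswitch.ko): openvswitch.ko is the OVS kernel module. When it is loaded into the kernel, it registers a hook function on the network interface, and that hook is called every time a packet arrives at the interface. To handle a packet, openvswitch.ko first looks for a matching policy in the kernel (the kernel flow table). If one matches, the packet is forwarded according to that policy entirely in kernel space; this is very fast and is known as the fast path. If nothing matches in the kernel, the packet is handed to the user-space vswitchd process, which is known as the slow path. The datapath module can be configured with the ovs-dpctl command.

vswitchd: vswitchd is the core OVS module. It runs in user space and communicates with OpenFlow controllers and third-party software. When vswitchd receives a packet, it matches it against the user-space flow table. On a match it forwards the packet according to the matching rule; otherwise it follows the OpenFlow specification and either reports the packet to the controller (if there is one) or drops it.

ovsdb: the OVS database. It stores the configuration of the whole OVS instance, including interfaces, switching content, VLANs, and virtual switch information.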
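The fast path / slow path split described for the datapath can be illustrated with a toy model. The sketch below is not OVS code: the two tables, the key format (in_port=N), and the function names lookup and forward are hypothetical, chosen only to show a kernel-style cache being filled after a user-space lookup.

```shell
# Toy model of the OVS lookup order (illustrative only, not real OVS code).
# Each table holds one "key action" entry per line.
KERNEL_CACHE=""                       # starts empty, like a fresh datapath
USER_TABLE="in_port=1 output:2
in_port=2 output:1"                   # hypothetical user-space flow table

# lookup TABLE KEY: print the action for KEY, return non-zero on a miss
lookup() {
    printf '%s\n' "$1" | awk -v k="$2" '
        $1 == k { print $2; found = 1; exit }
        END     { exit !found }'
}

# forward KEY: try the kernel cache first, then fall back to user space
forward() {
    if action=$(lookup "$KERNEL_CACHE" "$1"); then
        LAST_PATH=fast
    elif action=$(lookup "$USER_TABLE" "$1"); then
        LAST_PATH=slow
        # install the result so the next packet with this key stays in the "kernel"
        KERNEL_CACHE="$KERNEL_CACHE
$1 $action"
    else
        LAST_PATH=slow
        action="controller-or-drop"
    fi
    echo "$LAST_PATH path: $1 -> $action"
}

forward in_port=1    # first packet: slow path, entry gets cached
forward in_port=1    # second packet: fast path
forward in_port=9    # unknown key: slow path, no match in either table
```

Call forward directly rather than inside a command substitution, because the cache update would be lost in a subshell.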

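OVS terminology:

1. Bridge: a bridge, that is, a switch (a virtual one, the vSwitch). Multiple bridges can be created on one host. When a packet enters through one port of a bridge, the bridge forwards it to another port according to its rules; it can also modify or drop the packet.

2. Port: a port on the switch. There are several types:

Normal: when a physical NIC is added to a bridge it becomes a Port of type Normal. Configuring an IP address on that NIC is then pointless, because the NIC has "degraded into a network cable" that only carries packets in and out. A Normal port is typically the port that connects multiple physical hosts in VLAN mode, with the physical switch end configured in trunk mode.

Internal: for this type of Port, OVS automatically creates a virtual NIC (Interface). Data received on the port is forwarded to this NIC, and data sent from the NIC is handed to OVS through the Port. When OVS creates a new Bridge, it automatically creates an Internal Port with the same name as the bridge, together with an Interface of the same name. An Internal Port can also be given an IP address and brought up, which gives OVS layer 3 connectivity.

Patch: similar in function to a veth pair (two virtual network devices connected back to back); commonly used to connect two Bridges.

Tunnel: implements overlay networks and supports tunnel protocols such as GRE, VXLAN, STT, Geneve, and IPsec. The tunnel itself is carried over layer 3.

3. Interface: a NIC, either virtual (TUN/TAP) or physical. A TAP device is a single virtual network device that works at layer 2; a TUN device works at layer 3.

4. Controller: OVS can be managed by one or more OpenFlow controllers, whose main job is to push flow tables that control the forwarding rules.

5. FlowTable: the flow table is the core of OVS forwarding and defines the forwarding rules between ports. Each flow entry has two parts, a match and an action: the match decides which packets are handled, and the action decides how they are handled.

In the OpenStack environment, for example, ens160 no longer carries an IP address; outbound traffic uses the IP address of br-ex.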

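Installing OVS

1. Start a fresh Linux machine

2. Configure the online yum repositories (the same online repositories used for OpenStack)

Configure the yum repositories (back up the existing files first, then clear the directory)

# cd /etc/yum.repos.d/

# mkdir bak && mv *.repo bak/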

# cat cloud.repo

[highavailability]

name=CentOS Stream 8 - HighAvailability

baseurl=https://mirrors.aliyun.com/centos/8-stream/HighAvailability/x86_64/os/

gpgkey=file:///etc/pki/rpm-gpg/RPM-GPG-KEY-centosofficial

gpgcheck=1

repo_gpgcheck=0

metadata_expire=6h

countme=1

enabled=1

[nfv]

name=CentOS Stream 8 - NFV

baseurl=https://mirrors.aliyun.com/centos/8-stream/NFV/x86_64/os/

gpgkey=file:///etc/pki/rpm-gpg/RPM-GPG-KEY-centosofficial

gpgcheck=1

repo_gpgcheck=0

metadata_expire=6h

countme=1

enabled=1

[rt]

name=CentOS Stream 8 - RT

baseurl=https://mirrors.aliyun.com/centos/8-stream/RT/x86_64/os/

gpgkey=file:///etc/pki/rpm-gpg/RPM-GPG-KEY-centosofficial

gpgcheck=1

repo_gpgcheck=0

metadata_expire=6h

countme=1

enabled=1

[resilientstorage]

name=CentOS Stream 8 - ResilientStorage

baseurl=https://mirrors.aliyun.com/centos/8-stream/ResilientStorage/x86_64/os/

gpgkey=file:///etc/pki/rpm-gpg/RPM-GPG-KEY-centosofficial

gpgcheck=1

repo_gpgcheck=0

metadata_expire=6h

countme=1

enabled=1

[extras-common]

name=CentOS Stream 8 - Extras packages

baseurl=https://mirrors.aliyun.com/centos/8-stream/extras/x86_64/extras-common/

gpgkey=file:///etc/pki/rpm-gpg/RPM-GPG-KEY-CentOS-SIG-Extras-SHA512

gpgcheck=1

repo_gpgcheck=0

metadata_expire=6h

countme=1

enabled=1

[extras]

name=CentOS Stream $releasever - Extras

mirrorlist=http://mirrorlist.centos.org/?release=$stream&arch=$basearch&repo=extras&infra=$infra

#baseurl=http://mirror.centos.org/$contentdir/$stream/extras/$basearch/os/

baseurl=https://mirrors.aliyun.com/centos/8-stream/extras/x86_64/os/

gpgcheck=1

enabled=1

gpgkey=file:///etc/pki/rpm-gpg/RPM-GPG-KEY-centosofficial

[centos-ceph-pacific]

name=CentOS - Ceph Pacific

baseurl=https://mirrors.aliyun.com/centos/8-stream/storage/x86_64/ceph-pacific/

gpgcheck=0

enabled=1

gpgkey=file:///etc/pki/rpm-gpg/RPM-GPG-KEY-CentOS-SIG-Storage

[centos-rabbitmq-38]

name=CentOS-8 - RabbitMQ 38

baseurl=https://mirrors.aliyun.com/centos/8-stream/messaging/x86_64/rabbitmq-38/

gpgcheck=1

enabled=1

gpgkey=file:///etc/pki/rpm-gpg/RPM-GPG-KEY-CentOS-SIG-Messaging

[centos-nfv-openvswitch]

name=CentOS Stream 8 - NFV OpenvSwitch

baseurl=https://mirrors.aliyun.com/centos/8-stream/nfv/x86_64/openvswitch-2/

gpgcheck=1

enabled=1

gpgkey=file:///etc/pki/rpm-gpg/RPM-GPG-KEY-CentOS-SIG-NFV

module_hotfixes=1

[baseos]

name=CentOS Stream 8 - BaseOS

baseurl=https://mirrors.aliyun.com/centos/8-stream/BaseOS/x86_64/os/

gpgkey=file:///etc/pki/rpm-gpg/RPM-GPG-KEY-centosofficial

gpgcheck=1

repo_gpgcheck=0

metadata_expire=6h

countme=1

enabled=1

[appstream]

name=CentOS Stream 8 - AppStream

baseurl=https://mirrors.aliyun.com/centos/8-stream/AppStream/x86_64/os/

gpgkey=file:///etc/pki/rpm-gpg/RPM-GPG-KEY-centosofficial

gpgcheck=1

repo_gpgcheck=0

metadata_expire=6h

countme=1

enabled=1

[centos-openstack-victoria]

name=CentOS 8 - OpenStack victoria

baseurl=https://mirrors.aliyun.com/centos/8-stream/cloud/x86_64/openstack-victoria/

#baseurl=https://repo.huaweicloud.com/centos/8-stream/cloud/x86_64/openstack-yoga/

gpgcheck=1

enabled=1

gpgkey=file:///etc/pki/rpm-gpg/RPM-GPG-KEY-CentOS-SIG-Cloud

module_hotfixes=1

[powertools]

name=CentOS Stream 8 - PowerTools

#mirrorlist=http://mirrorlist.centos.org/?release=$stream&arch=$basearch&repo=PowerTools&infra=$infra

baseurl=https://mirrors.aliyun.com/centos/8-stream/PowerTools/x86_64/os/

gpgcheck=1

enabled=1

gpgkey=file:///etc/pki/rpm-gpg/RPM-GPG-KEY-centosofficial

# yum clean all    # clear the cache

# yum makecache    # rebuild the cache

# yum repolist all    # list the yum repositories (13 in total)

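3. Install the base packages and OVS (for Tab completion, install the bash-completion package and then run bash)

If installing openvswitch3.1 fails because the gpgkey file cannot be found, set gpgcheck=0 for that repository and install again.

yum install -y vim net-tools bash-completion centos-release-openstack-victoria.noarch tcpdump openvswitch3.1

Alternatively, install it separately: yum install -y openvswitch3.1*

Check the installed version: [root@ovs ~]# ovs-vsctl --version

4. Start the OVS service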

[root@ovs ~]# systemctl start openvswitch

[root@ovs ~]# systemctl enable openvswitch

[root@ovs ~]# ps -ef | grep openvswitch

[root@ovs ~]# ovs-vsctl show    # show the OVS virtual switch configuration

[root@ovs ~]# ovs-vsctl --help    # help; or: man ovs-vsctl

5. Create OVS virtual switches

Creating a virtual switch also creates a Port and an Interface with the same name as the switch, of type internal.

[root@ovs ~]# ovs-vsctl add-br br-int

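[root@ovs ~]# ovs-vsctl add-br br-memeda    # add a bridge

[root@ovs ~]# ovs-vsctl del-br br-memeda    # delete a bridge (shown for reference; br-memeda is kept for the steps below)

[root@ovs ~]# ovs-vsctl list-br    # list bridges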

br-int

br-memeda

[root@ovs ~]# ovs-vsctl show    # show the OVS configuration; each Bridge is a virtual switch

54c67146-9a9f-40be-8cb7-e8792879aafa

Bridge br-memeda

Port br-memeda

Interface br-memeda

type: internal

Bridge br-int

Port br-int

Interface br-int

type: internal

ovs_version: "3.1.3"

[root@ovs ~]# ip netns    # list network namespaces

[root@ovs ~]# ip netns add ns1    # add a network namespace

[root@ovs ~]# ip netns add ns2

[root@ovs ~]# ip netns

ns2

ns1

Create two veth pairs and move one end of each (veth1 and veth2) into the two network namespaces. A veth pair consists of two connected virtual network interfaces; each veth can be thought of as a NIC port.

# ip link help    # or: man ip-link

Configure the first network namespace

[root@ovs ~]# ip link add veth11 type veth peer name veth1

[root@ovs ~]# ip link set veth1 netns ns1

[root@ovs ~]# ip netns exec ns1 ip link set veth1 up

Configure the second network namespace

[root@ovs ~]# ip link add veth22 type veth peer name veth2

[root@ovs ~]# ip link set veth2 netns ns2

[root@ovs ~]# ip netns exec ns2 ip link set veth2 up

Attach the other ends (veth11 and veth22) to the OVS virtual switch

[root@ovs ~]# ip link set veth11 up

[root@ovs ~]# ip link set veth22 up

[root@ovs ~]# ovs-vsctl add-port br-memeda veth11

[root@ovs ~]# ovs-vsctl add-port br-memeda veth22

[root@ovs ~]# ovs-vsctl show    # br-memeda now has two more Ports (veth22 and veth11)

3b79f2e1-f433-4015-905e-8945dcada530

Bridge br-memeda

Port br-memeda

Interface br-memeda

type: internal

Port veth22

Interface veth22

Port veth11

Interface veth11

Bridge br-int

Port br-int

Interface br-int

type: internal

ovs_version: "3.1.3"

[root@ovs ~]# ip netns exec ns1 ip addr add 1.1.1.1/24 dev veth1

[root@ovs ~]# ip netns exec ns1 ip a

1: lo: <LOOPBACK> mtu 65536 qdisc noop state DOWN group default qlen 1000

link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00

7: veth1@if8: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc noqueue state UP group default qlen 1000

link/ether fe:f9:3b:cb:9b:c5 brd ff:ff:ff:ff:ff:ff link-netnsid 0

inet 1.1.1.1/24 scope global veth1

valid_lft forever preferred_lft forever

inet6 fe80::fcf9:3bff:fecb:9bc5/64 scope link

valid_lft forever preferred_lft forever

[root@ovs ~]# ip netns exec ns2 ip addr add 1.1.1.2/24 dev veth2

[root@ovs ~]# ip netns exec ns2 ip a

1: lo: <LOOPBACK> mtu 65536 qdisc noop state DOWN group default qlen 1000

link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00

9: veth2@if10: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc noqueue state UP group default qlen 1000

link/ether 0a:e3:ac:a8:f3:bc brd ff:ff:ff:ff:ff:ff link-netnsid 0

inet 1.1.1.2/24 scope global veth2

valid_lft forever preferred_lft forever

inet6 fe80::8e3:acff:fea8:f3bc/64 scope link

valid_lft forever preferred_lft forever

Test connectivity between the two network namespaces

[root@ovs ~]# ip netns exec ns1 ping -c 3 1.1.1.2

PING 1.1.1.2 (1.1.1.2) 56(84) bytes of data.

64 bytes from 1.1.1.2: icmp_seq=1 ttl=64 time=2.98 ms

64 bytes from 1.1.1.2: icmp_seq=2 ttl=64 time=0.167 ms

64 bytes from 1.1.1.2: icmp_seq=3 ttl=64 time=0.081 ms

--- 1.1.1.2 ping statistics ---

3 packets transmitted, 3 received, 0% packet loss, time 2065ms

rtt min/avg/max/mdev = 0.081/1.075/2.979/1.346 ms

[root@ovs ~]# ip netns exec ns2 ping -c 3 1.1.1.1

PING 1.1.1.1 (1.1.1.1) 56(84) bytes of data.

64 bytes from 1.1.1.1: icmp_seq=1 ttl=64 time=0.923 ms

64 bytes from 1.1.1.1: icmp_seq=2 ttl=64 time=0.084 ms

64 bytes from 1.1.1.1: icmp_seq=3 ttl=64 time=0.091 ms

--- 1.1.1.1 ping statistics ---

3 packets transmitted, 3 received, 0% packet loss, time 2007ms

rtt min/avg/max/mdev = 0.084/0.366/0.923/0.393 ms
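The namespace and veth steps above repeat the same pattern for each endpoint, so they can be wrapped in one helper. This is a minimal sketch: the names run, attach_ns, and DRY_RUN are hypothetical, and by default the helper only prints the commands, because actually running them needs root and a running OVS.

```shell
# Print commands by default; set DRY_RUN=0 to execute them for real.
run() {
    if [ "${DRY_RUN:-1}" = "0" ]; then
        "$@"
    else
        echo "$*"
    fi
}

# attach_ns BRIDGE NS HOST_IF NS_IF CIDR
# Creates namespace NS and the veth pair HOST_IF/NS_IF, moves NS_IF into NS
# with address CIDR, and plugs HOST_IF into the OVS bridge BRIDGE.
attach_ns() {
    bridge=$1; ns=$2; host_if=$3; ns_if=$4; cidr=$5
    run ip netns add "$ns"
    run ip link add "$host_if" type veth peer name "$ns_if"
    run ip link set "$ns_if" netns "$ns"
    run ip netns exec "$ns" ip link set "$ns_if" up
    run ip netns exec "$ns" ip addr add "$cidr" dev "$ns_if"
    run ip link set "$host_if" up
    run ovs-vsctl add-port "$bridge" "$host_if"
}
```

For the two endpoints used here, the calls would be attach_ns br-memeda ns1 veth11 veth1 1.1.1.1/24 and attach_ns br-memeda ns2 veth22 veth2 1.1.1.2/24.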

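A VLAN is a virtual local area network. VLAN isolation reduces network congestion and improves packet security.

An OVS virtual switch can define VLANs just like a physical switch. Here we use VLAN 10 (tag 10) and VLAN 20 (tag 20): tag the two switch-side veth ports with different tags (a port has no tag by default), placing them in different VLANs, then verify connectivity.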

[root@ovs ~]# ovs-vsctl set port veth11 tag=10

[root@ovs ~]# ovs-vsctl set port veth22 tag=20

[root@ovs ~]# ovs-vsctl show    # Port veth22 and Port veth11 on br-memeda now show a tag

3b79f2e1-f433-4015-905e-8945dcada530

Bridge br-memeda

Port br-memeda

Interface br-memeda

type: internal

Port veth22

tag: 20

Interface veth22

Port veth11

tag: 10

Interface veth11

Bridge br-int

Port br-int

Interface br-int

type: internal

ovs_version: "3.1.3"

With different VLANs (tags) the ping fails; communication between them would require a router or a physical layer 3 switch

[root@ovs ~]# ip netns exec ns1 ping -c 3 1.1.1.2

PING 1.1.1.2 (1.1.1.2) 56(84) bytes of data.

--- 1.1.1.2 ping statistics ---

3 packets transmitted, 0 received, 100% packet loss, time 2064ms

[root@ovs ~]# ovs-vsctl set port veth22 tag=10    # with veth22 also on tag=10, both ports are in the same VLAN and reachable at layer 2

[root@ovs ~]# ovs-vsctl show

3b79f2e1-f433-4015-905e-8945dcada530

Bridge br-memeda

Port br-memeda

Interface br-memeda

type: internal

Port veth22

tag: 10

Interface veth22

Port veth11

tag: 10

Interface veth11

Bridge br-int

Port br-int

Interface br-int

type: internal

ovs_version: "3.1.3"

[root@ovs ~]# ip netns exec ns1 ping -c 3 1.1.1.2    # same VLAN (tag): the ping succeeds over layer 2

PING 1.1.1.2 (1.1.1.2) 56(84) bytes of data.

64 bytes from 1.1.1.2: icmp_seq=1 ttl=64 time=1.43 ms

64 bytes from 1.1.1.2: icmp_seq=2 ttl=64 time=0.093 ms

64 bytes from 1.1.1.2: icmp_seq=3 ttl=64 time=0.086 ms

--- 1.1.1.2 ping statistics ---

3 packets transmitted, 3 received, 0% packet loss, time 2051ms

rtt min/avg/max/mdev = 0.086/0.535/1.426/0.630 ms
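Tagging and untagging ports as in the VLAN test above can be sketched as two small helpers. The names run, set_access_vlan, clear_vlan, and DRY_RUN are hypothetical, and the 1-4094 range check is an assumption based on the usable 802.1Q VLAN IDs. By default the helpers only print the ovs-vsctl command.

```shell
# Print commands by default; set DRY_RUN=0 to execute them (needs root and OVS).
run() {
    if [ "${DRY_RUN:-1}" = "0" ]; then
        "$@"
    else
        echo "$*"
    fi
}

# set_access_vlan PORT TAG: place PORT in VLAN TAG
set_access_vlan() {
    case $2 in
        ''|*[!0-9]*)
            echo "invalid VLAN id: $2" >&2
            return 1
            ;;
    esac
    if [ "$2" -lt 1 ] || [ "$2" -gt 4094 ]; then
        echo "VLAN id out of range (1-4094): $2" >&2
        return 1
    fi
    run ovs-vsctl set port "$1" "tag=$2"
}

# clear_vlan PORT: remove the tag so the port is untagged again
clear_vlan() {
    run ovs-vsctl clear port "$1" tag
}
```

For example, set_access_vlan veth11 10 followed by set_access_vlan veth22 10 reproduces the same-VLAN case, and clear_vlan veth11 returns a port to its untagged state.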

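FlowTable: as described in the terminology above, each flow entry consists of a match, which decides which packets are handled, and an action, which decides how they are handled.

To steer traffic, add flow entries; the example below adds a rule that matches on the ingress port.

View the default OVS flow table

[root@ovs ~]# ovs-ofctl dump-flows br-memeda    # show the flow rules of the virtual switch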

cookie=0x0, duration=2161.884s, table=0, n_packets=49, n_bytes=3682, priority=0 actions=NORMAL

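At this point OVS behaves like a traditional switch. Now add a flow entry with priority 2 (a larger number means higher priority, so it outranks the default entry with priority 0) that drops every packet arriving on veth11; ns1 can then no longer ping ns2.

[root@ovs ~]# ovs-ofctl add-flow br-memeda "priority=2,in_port=veth11,actions=drop"    # add the flow rule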

[root@ovs ~]# ovs-ofctl dump-flows br-memeda

cookie=0x0, duration=2.578s, table=0, n_packets=0, n_bytes=0, priority=2,in_port=veth11 actions=drop

cookie=0x0, duration=2217.329s, table=0, n_packets=49, n_bytes=3682, priority=0 actions=NORMAL

[root@ovs ~]# ip netns exec ns1 ping -c 3 1.1.1.2

PING 1.1.1.2 (1.1.1.2) 56(84) bytes of data.

--- 1.1.1.2 ping statistics ---

3 packets transmitted, 0 received, 100% packet loss, time 2076ms

Delete the entry that was just added, and ns1 and ns2 can communicate again

[root@ovs ~]# ovs-ofctl del-flows br-memeda "in_port=veth11"    # delete the flow rule to restore connectivity

[root@ovs ~]# ip netns exec ns1 ping -c 3 1.1.1.2

PING 1.1.1.2 (1.1.1.2) 56(84) bytes of data.

64 bytes from 1.1.1.2: icmp_seq=1 ttl=64 time=0.766 ms

64 bytes from 1.1.1.2: icmp_seq=2 ttl=64 time=0.096 ms

64 bytes from 1.1.1.2: icmp_seq=3 ttl=64 time=0.088 ms

--- 1.1.1.2 ping statistics ---

3 packets transmitted, 3 received, 0% packet loss, time 2043ms

rtt min/avg/max/mdev = 0.088/0.316/0.766/0.318 ms

[root@ovs ~]# ovs-ofctl dump-flows br-memeda

cookie=0x0, duration=2315.744s, table=0, n_packets=59, n_bytes=4438, priority=0 actions=NORMAL
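When comparing flow dumps like the ones above, it can help to reduce each entry to its priority, packet counter, and action, listed in the order OVS would consider them. The following sketch assumes the dump-flows text format shown in this section; summarize_flows is a hypothetical helper name. Entries without an explicit priority are reported as 32768, the OpenFlow default that ovs-ofctl omits from its output.

```shell
# summarize_flows: read `ovs-ofctl dump-flows` output on stdin and print
# "<priority> <n_packets> <actions>" per entry, highest priority first.
summarize_flows() {
    awk '
    /actions=/ {
        prio = 32768; pkts = "-"
        # "priority=" is 9 characters long, "n_packets=" is 10
        if (match($0, /priority=[0-9]+/))  prio = substr($0, RSTART + 9,  RLENGTH - 9)
        if (match($0, /n_packets=[0-9]+/)) pkts = substr($0, RSTART + 10, RLENGTH - 10)
        act = $0
        sub(/.*actions=/, "", act)
        print prio, pkts, act
    }' | sort -k1,1nr
}
```

On a live system this would be used as ovs-ofctl dump-flows br-memeda | summarize_flows; with the drop rule installed, the priority 2 entry is listed before the priority 0 NORMAL entry.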

OVN

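OVN is built on top of OVS and is likewise managed according to the SDN (Software Defined Network) architecture, in which software separates the control plane from the forwarding plane: OVN is the control plane and OVS is the forwarding plane.

OVN requires OVS underneath it. As a rough analogy, OVS can be thought of as a layer 2 switch and OVN as a layer 3 switch.

OVN introduction (reference): https://mp.weixin.qq.com/s?__biz=MzAwMDQyOTcwOA==&mid=2247485357&idx=1&sn=1e80c02232e2bdafec8dcf71dd4fa265&chksm=9ae85c4ead9fd5584e488f7f7f5b7d2ad4bd86c18cb780d057653b23eac4001dabe8f9b6d98a&cur_album_id=2470011981178322946&scene=189#wechat_redirect

On its own, OVS has some shortcomings in cloud computing, for example:

1. OVS on its own acts as a layer 2 switch and offers no built-in way to configure routing.

2. OVS has no high-availability configuration.

3. Migrating a VM from one physical host to another, or a container from one node to another, is very common in virtualization and container environments, but plain OVS configuration applies only to the local node. After such a migration, the OVS on the new node lacks the relevant configuration, so the migrated VM or container cannot work properly.

To address these problems, OVN (Open Virtual Network) was created. Its features include:

1. Distributed virtual routers

2. Distributed logical switches

3. Access control lists (ACLs)

4. DHCP

5. DNS server

In OpenStack, creating a network is equivalent to creating a logical switch, and that logical switch (network) is stored in the northbound database.

The OVN documentation shows the logical architecture as follows: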

                                  CMS
                                   |
                                   |
                       +-----------|-----------+
                       |           |           |
                       |     OVN/CMS Plugin    |
                       |           |           |
                       |           |           |
                       |   OVN Northbound DB   |
                       |           |           |
                       |           |           |
                       |       ovn-northd      |
                       |           |           |
                       +-----------|-----------+
                                   |
                                   |
                         +-------------------+
                         | OVN Southbound DB |
                         +-------------------+
                                   |
                                   |
                +------------------+------------------+
                |                  |                  |
  HV 1          |                  |    HV n          |
+---------------|---------------+  .  +---------------|---------------+
|               |               |  .  |               |               |
|        ovn-controller         |  .  |        ovn-controller         |
|         |          |          |  .  |         |          |          |
|         |          |          |     |         |          |          |
|  ovs-vswitchd   ovsdb-server  |     |  ovs-vswitchd   ovsdb-server  |
|                               |     |                               |
+-------------------------------+     +-------------------------------+

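By function, OVN nodes fall into two classes:

central: the central node. Its components are the OVN/CMS plugin, OVN Northbound DB, ovn-northd, and OVN Southbound DB.

hypervisor (hv): a worker node. Its components are ovn-controller, ovs-vswitchd, and ovsdb-server.

The central components and the hypervisor components can run on the same physical node.

The components do the following:

1. CMS: cloud management software (Cloud Management Software), for example OpenStack (OVN was originally designed for OpenStack).

2. OVN/CMS plugin: the CMS plugin, for example OpenStack's Neutron plugin. It translates the logical network configuration into data that OVN understands and writes it to the northbound database (OVN Northbound DB).

3. OVN Northbound DB: the OVN northbound database. It stores the configuration pushed down by the CMS plugin and has two clients: the CMS plugin and ovn-northd. It can be manipulated directly with the ovn-nbctl command. The northbound database holds logical network information (switches, routers, and so on).

4. ovn-northd: the northbound daemon. It translates the data in the OVN Northbound DB and stores the result in the OVN Southbound DB. All information flows between the northbound and southbound databases through ovn-northd.

5. OVN Southbound DB: the OVN southbound database. It also has two clients: ovn-northd above it and the ovn-controller running on each hypervisor below it. It can be manipulated directly with the ovn-sbctl command. The southbound database holds the physical network information of each node.

6. ovn-controller: the OVN agent on each hypervisor. Northbound, it connects to the OVN Southbound Database, learns the latest configuration, and converts it into OpenFlow flows. Southbound, it connects to ovs-vswitchd to install the converted flows, and to ovsdb-server to fetch the configuration it needs.

7. ovs-vswitchd and ovsdb-server: the two user-space OVS processes.

Every node runs an ovn-controller, which manages the local OVS (ovs-vswitchd and ovsdb-server). ovn-controller connects to the southbound database, which is linked to the northbound database through ovn-northd and from there to OpenStack.

The southbound database holds the physical network state; the northbound database holds the logical network state.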

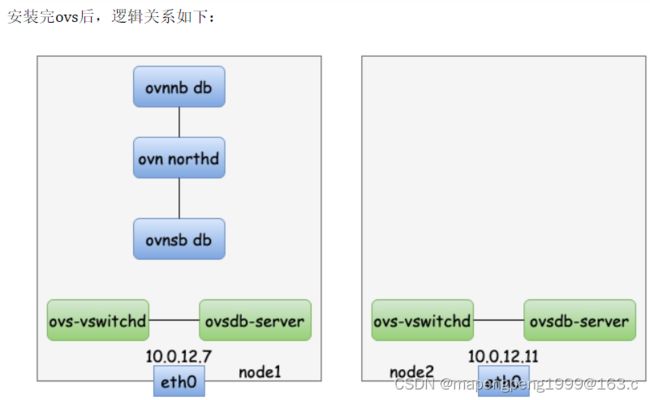

Clone two virtual machines and install OVS and OVN on them

CentOS Stream 8

systemctl stop firewalld.service

systemctl disable firewalld.service

setenforce 0

sed -i 's/SELINUX=enforcing/SELINUX=disabled/g' /etc/selinux/config

mkdir /etc/yum.repos.d/bak

mv /etc/yum.repos.d/*.repo /etc/yum.repos.d/bak/

cat <<'EOF' > /etc/yum.repos.d/cloudcs.repo

[ceph]

name=ceph

baseurl=https://mirrors.aliyun.com/ceph/rpm-18.1.1/el8/x86_64/

gpgkey=https://mirrors.aliyun.com/ceph/keys/release.asc

gpgcheck=1

enabled=1

[ceph-noarch]

name=ceph-noarch

baseurl=https://mirrors.aliyun.com/ceph/rpm-18.1.1/el8/noarch/

gpgcheck=1

gpgkey=https://mirrors.aliyun.com/ceph/keys/release.asc

enabled=1

[ceph-SRPMS]

name=SRPMS

baseurl=https://mirrors.aliyun.com/ceph/rpm-18.1.1/el8/SRPMS/

gpgcheck=1

gpgkey=https://mirrors.aliyun.com/ceph/keys/release.asc

enabled=1

[highavailability]

name=CentOS Stream 8 - HighAvailability

baseurl=https://mirrors.aliyun.com/centos/8-stream/HighAvailability/x86_64/os/

gpgkey=file:///etc/pki/rpm-gpg/RPM-GPG-KEY-centosofficial

gpgcheck=1

repo_gpgcheck=0

metadata_expire=6h

countme=1

enabled=1

[nfv]

name=CentOS Stream 8 - NFV

baseurl=https://mirrors.aliyun.com/centos/8-stream/NFV/x86_64/os/

gpgkey=file:///etc/pki/rpm-gpg/RPM-GPG-KEY-centosofficial

gpgcheck=1

repo_gpgcheck=0

metadata_expire=6h

countme=1

enabled=1

[rt]

name=CentOS Stream 8 - RT

baseurl=https://mirrors.aliyun.com/centos/8-stream/RT/x86_64/os/

gpgkey=file:///etc/pki/rpm-gpg/RPM-GPG-KEY-centosofficial

gpgcheck=1

repo_gpgcheck=0

metadata_expire=6h

countme=1

enabled=1

[resilientstorage]

name=CentOS Stream 8 - ResilientStorage

baseurl=https://mirrors.aliyun.com/centos/8-stream/ResilientStorage/x86_64/os/

gpgkey=file:///etc/pki/rpm-gpg/RPM-GPG-KEY-centosofficial

gpgcheck=1

repo_gpgcheck=0

metadata_expire=6h

countme=1

enabled=1

[extras-common]

name=CentOS Stream 8 - Extras packages

baseurl=https://mirrors.aliyun.com/centos/8-stream/extras/x86_64/extras-common/

gpgkey=file:///etc/pki/rpm-gpg/RPM-GPG-KEY-CentOS-SIG-Extras-SHA512

gpgcheck=1

repo_gpgcheck=0

metadata_expire=6h

countme=1

enabled=1

[extras]

name=CentOS Stream $releasever - Extras

mirrorlist=http://mirrorlist.centos.org/?release=$stream&arch=$basearch&repo=extras&infra=$infra

#baseurl=http://mirror.centos.org/$contentdir/$stream/extras/$basearch/os/

baseurl=https://mirrors.aliyun.com/centos/8-stream/extras/x86_64/os/

gpgcheck=1

enabled=1

gpgkey=file:///etc/pki/rpm-gpg/RPM-GPG-KEY-centosofficial

[centos-ceph-pacific]

name=CentOS - Ceph Pacific

baseurl=https://mirrors.aliyun.com/centos/8-stream/storage/x86_64/ceph-pacific/

gpgcheck=0

enabled=1

gpgkey=file:///etc/pki/rpm-gpg/RPM-GPG-KEY-CentOS-SIG-Storage

[centos-rabbitmq-38]

name=CentOS-8 - RabbitMQ 38

baseurl=https://mirrors.aliyun.com/centos/8-stream/messaging/x86_64/rabbitmq-38/

gpgcheck=1

enabled=1

gpgkey=file:///etc/pki/rpm-gpg/RPM-GPG-KEY-CentOS-SIG-Messaging

[centos-nfv-openvswitch]

name=CentOS Stream 8 - NFV OpenvSwitch

baseurl=https://mirrors.aliyun.com/centos/8-stream/nfv/x86_64/openvswitch-2/

gpgcheck=1

enabled=1

gpgkey=file:///etc/pki/rpm-gpg/RPM-GPG-KEY-CentOS-SIG-NFV

module_hotfixes=1

[baseos]

name=CentOS Stream 8 - BaseOS

baseurl=https://mirrors.aliyun.com/centos/8-stream/BaseOS/x86_64/os/

gpgkey=file:///etc/pki/rpm-gpg/RPM-GPG-KEY-centosofficial

gpgcheck=1

repo_gpgcheck=0

metadata_expire=6h

countme=1

enabled=1

[appstream]

name=CentOS Stream 8 - AppStream

baseurl=https://mirrors.aliyun.com/centos/8-stream/AppStream/x86_64/os/

gpgkey=file:///etc/pki/rpm-gpg/RPM-GPG-KEY-centosofficial

gpgcheck=1

repo_gpgcheck=0

metadata_expire=6h

countme=1

enabled=1

[centos-openstack-victoria]

name=CentOS 8 - OpenStack victoria

baseurl=https://mirrors.aliyun.com/centos/8-stream/cloud/x86_64/openstack-victoria/

#baseurl=https://repo.huaweicloud.com/centos/8-stream/cloud/x86_64/openstack-yoga/

gpgcheck=1

enabled=1

gpgkey=file:///etc/pki/rpm-gpg/RPM-GPG-KEY-CentOS-SIG-Cloud

module_hotfixes=1

[powertools]

name=CentOS Stream 8 - PowerTools

#mirrorlist=http://mirrorlist.centos.org/?release=$stream&arch=$basearch&repo=PowerTools&infra=$infra

baseurl=https://mirrors.aliyun.com/centos/8-stream/PowerTools/x86_64/os/

gpgcheck=1

enabled=1

gpgkey=file:///etc/pki/rpm-gpg/RPM-GPG-KEY-centosofficial

EOF

yum install -y vim net-tools bash-completion git tcpdump autoconf automake libtool make python3 centos-release-openstack-victoria.noarch

yum install -y openvswitch3.1*

yum install -y ovn22.12*

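Check the installed version to confirm that OVN installed correctly: # ovn-appctl --version

echo 'export PATH=$PATH:/usr/share/ovn/scripts:/usr/share/openvswitch/scripts' >> /etc/profile

source /etc/profile    # re-read the profile so the change takes effect immediately

Starting the central components: node1 is the central node and runs the three required central components: the OVN Northbound DB, ovn-northd, and the OVN Southbound DB.

Start central on a control node. Only one node needs to run it (either node1 or node2 would do; node1 is used here), because a single set of central components is enough.

The ovn-ctl start_northd command starts all three services: the northbound database, ovn-northd, and the southbound database.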

[root@node1 ~]# ovn-ctl start_northd

/etc/ovn/ovnnb_db.db does not exist ... (warning).

Creating empty database /etc/ovn/ovnnb_db.db [ OK ]

Starting ovsdb-nb [ OK ]

/etc/ovn/ovnsb_db.db does not exist ... (warning).

Creating empty database /etc/ovn/ovnsb_db.db [ OK ]

Starting ovsdb-sb [ OK ]

Starting ovn-northd [ OK ]

[root@node1 ~]# ps -ef | grep ovn

root 34102 34101 0 21:02 ? 00:00:00 ovsdb-server -vconsole:off -vfile:info --log-file=/var/log/ovn/ovsdb-server-nb.log --remote=punix:/var/run/ovn/ovnnb_db.sock --pidfile=/var/run/ovn/ovnnb_db.pid --unixctl=/var/run/ovn/ovnnb_db.ctl --detach --monitor --remote=db:OVN_Northbound,NB_Global,connections --private-key=db:OVN_Northbound,SSL,private_key --certificate=db:OVN_Northbound,SSL,certificate --ca-cert=db:OVN_Northbound,SSL,ca_cert --ssl-protocols=db:OVN_Northbound,SSL,ssl_protocols --ssl-ciphers=db:OVN_Northbound,SSL,ssl_ciphers /etc/ovn/ovnnb_db.db

root 34118 34117 0 21:02 ? 00:00:00 ovsdb-server -vconsole:off -vfile:info --log-file=/var/log/ovn/ovsdb-server-sb.log --remote=punix:/var/run/ovn/ovnsb_db.sock --pidfile=/var/run/ovn/ovnsb_db.pid --unixctl=/var/run/ovn/ovnsb_db.ctl --detach --monitor --remote=db:OVN_Southbound,SB_Global,connections --private-key=db:OVN_Southbound,SSL,private_key --certificate=db:OVN_Southbound,SSL,certificate --ca-cert=db:OVN_Southbound,SSL,ca_cert --ssl-protocols=db:OVN_Southbound,SSL,ssl_protocols --ssl-ciphers=db:OVN_Southbound,SSL,ssl_ciphers /etc/ovn/ovnsb_db.db

root 34128 1 0 21:02 ? 00:00:00 ovn-northd: monitoring pid 34129 (healthy)

root 34129 34128 0 21:02 ? 00:00:00 ovn-northd -vconsole:emer -vsyslog:err -vfile:info --ovnnb-db=unix:/var/run/ovn/ovnnb_db.sock --ovnsb-db=unix:/var/run/ovn/ovnsb_db.sock --no-chdir --log-file=/var/log/ovn/ovn-northd.log --pidfile=/var/run/ovn/ovn-northd.pid --detach --monitor

root 34302 34259 0 21:07 pts/0 00:00:00 grep --color=auto ovn

Starting the hypervisor components: a hypervisor node has three components: ovn-controller, ovs-vswitchd, and ovsdb-server.

Start the hypervisor (hv) components on both node1 and node2, beginning with ovs-vswitchd and ovsdb-server on each node

[root@node1 ~]# ovs-ctl start --system-id=random

/etc/openvswitch/conf.db does not exist ... (warning).

Creating empty database /etc/openvswitch/conf.db [ OK ]

Starting ovsdb-server [ OK ]

Configuring Open vSwitch system IDs [ OK ]

Inserting openvswitch module [ OK ]

Starting ovs-vswitchd [ OK ]

Enabling remote OVSDB managers [ OK ]

[root@node2 ~]# ovs-ctl start --system-id=random

/etc/openvswitch/conf.db does not exist ... (warning).

Creating empty database /etc/openvswitch/conf.db [ OK ]

Starting ovsdb-server [ OK ]

Configuring Open vSwitch system IDs [ OK ]

Inserting openvswitch module [ OK ]

Starting ovs-vswitchd [ OK ]

Enabling remote OVSDB managers [ OK ]

[root@node1 ~]# ovn-ctl start_controller

Starting ovn-controller [ OK ]

[root@node1 ~]# ovs-vsctl show    # after ovn-controller starts, the br-int bridge is created automatically

ed157e0c-cac3-46b9-830c-f2d710b475d5

Bridge br-int

fail_mode: secure

datapath_type: system

Port br-int

Interface br-int

type: internal

ovs_version: "3.1.3"

[root@node2 ~]# ovn-ctl start_controller

Starting ovn-controller [ OK ]

[root@node2 ~]# ovs-vsctl show    # after ovn-controller starts, the br-int bridge is created automatically

f6669675-b42d-47de-be95-b26bf6d1e069

Bridge br-int

fail_mode: secure

datapath_type: system

Port br-int

Interface br-int

type: internal

ovs_version: "3.1.3"

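At this point the hypervisors are not yet associated with central (that is, ovn-controller is not connected to the southbound database). To verify on node1, query the southbound database, which lists no chassis yet: [root@node1 ~]# ovn-sbctl show

Connecting the hypervisors to central by opening the northbound and southbound database ports:

ovn-northd can already reach both the southbound and northbound databases because they are deployed on the same machine and connect over Unix sockets

On the central node, open northbound database port 6641, which is mainly used by CMS plugins

On the central node, open southbound database port 6642, which ovn-controller connects to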

[root@node1 ~]# ovn-nbctl set-connection ptcp:6641:10.1.1.41

[root@node1 ~]# ovn-sbctl set-connection ptcp:6642:10.1.1.41

[root@node1 ~]# netstat -tulnp |grep 664

tcp 0 0 10.1.1.41:6641 0.0.0.0:* LISTEN 34102/ovsdb-server

tcp 0 0 10.1.1.41:6642 0.0.0.0:* LISTEN 34118/ovsdb-server

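Connect ovn-controller on node1 to the southbound database

ovn-remote: the connection address of the southbound database

ovn-encap-ip: the local IP address this node uses as its tunnel endpoint

ovn-encap-type: the tunnel protocol; geneve is used here

system-id: the node identifier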

[root@node1 ~]# ovs-vsctl set Open_vSwitch . external-ids:ovn-remote="tcp:10.1.1.41:6642" external-ids:ovn-encap-ip="10.1.1.41" external-ids:ovn-encap-type=geneve external-ids:system-id=node1

Connect ovn-controller on node2 to the southbound database

[root@node2 ~]# ovs-vsctl set Open_vSwitch . external-ids:ovn-remote="tcp:10.1.1.41:6642" external-ids:ovn-encap-ip="10.1.1.42" external-ids:ovn-encap-type=geneve external-ids:system-id=node2

View the southbound database on node1

[root@node1 ~]# ovn-sbctl show

Chassis node2

hostname: node2

Encap geneve

ip: "10.1.1.42"

options: {csum="true"}

Chassis node1

hostname: node1

Encap geneve

ip: "10.1.1.41"

options: {csum="true"}
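The registration commands for node1 and node2 differ only in the encapsulation IP and the system-id, so they can be generated from one helper. This is a sketch under stated assumptions: run, register_chassis, and DRY_RUN are hypothetical names, the southbound port 6642 and geneve are taken from the steps above, and by default the command is only printed.

```shell
# Print commands by default; set DRY_RUN=0 to execute them (needs root and OVS).
run() {
    if [ "${DRY_RUN:-1}" = "0" ]; then
        "$@"
    else
        echo "$*"
    fi
}

# register_chassis SB_IP ENCAP_IP SYSTEM_ID
# Points the local ovn-controller at the southbound DB on SB_IP:6642 and
# declares this node's geneve tunnel endpoint and identifier.
register_chassis() {
    run ovs-vsctl set Open_vSwitch . \
        "external-ids:ovn-remote=tcp:$1:6642" \
        "external-ids:ovn-encap-ip=$2" \
        "external-ids:ovn-encap-type=geneve" \
        "external-ids:system-id=$3"
}
```

On node1 the call would be register_chassis 10.1.1.41 10.1.1.41 node1, and on node2 register_chassis 10.1.1.41 10.1.1.42 node2.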

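The logical architecture above is described from the point of view of the underlying components and services.

Next, look at it from the point of view of the logical network.

Geneve tunnels: when ovn-controller connected to the southbound database, external-ids:ovn-encap-type=geneve was specified. The OVS information on the two nodes now shows that each node has an OVS switch br-int created by OVN, and that the port/interface added to br-int for the peer node is of type geneve

[root@node1 ~]# ovs-vsctl show    # OVS information on node1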

ed157e0c-cac3-46b9-830c-f2d710b475d5

Bridge br-int

fail_mode: secure

datapath_type: system

Port br-int

Interface br-int

type: internal

Port ovn-node2-0

Interface ovn-node2-0

type: geneve

options: {csum="true", key=flow, remote_ip="10.1.1.42"}

ovs_version: "3.1.3"

[root@node2 ~]# ovs-vsctl show    # OVS information on node2

f6669675-b42d-47de-be95-b26bf6d1e069

Bridge br-int

fail_mode: secure

datapath_type: system

Port ovn-node1-0

Interface ovn-node1-0

type: geneve

options: {csum="true", key=flow, remote_ip="10.1.1.41"}

Port br-int

Interface br-int

type: internal

ovs_version: "3.1.3"

[root@node1 ~]# ip link | grep gene    # show the geneve tunnel link

5: genev_sys_6081: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 65000 qdisc noqueue master ovs-system state UNKNOWN mode DEFAULT group default qlen 1000

Show the details of the geneve tunnel link; dstport 6081 shows that the geneve tunnel uses UDP port 6081

[root@node1 ~]# ip -d link show genev_sys_6081

5: genev_sys_6081: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 65000 qdisc noqueue master ovs-system state UNKNOWN mode DEFAULT group default qlen 1000

link/ether 6a:e3:ff:a5:cc:d6 brd ff:ff:ff:ff:ff:ff promiscuity 1 minmtu 68 maxmtu 65465

geneve external id 0 ttl auto dstport 6081 udp6zerocsumrx

openvswitch_slave addrgenmode eui64 numtxqueues 1 numrxqueues 1 gso_max_size 65536 gso_max_segs 65535

Check the geneve UDP port; a "-" in the last column means the port is listened on by the kernel rather than by a user-space process

[root@node1 ~]# netstat -nulp|grep 6081

udp 0 0 0.0.0.0:6081 0.0.0.0:* -

udp6 0 0 :::6081 :::* -

[root@node2 ~]# ip link | grep gene

5: genev_sys_6081: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 65000 qdisc noqueue master ovs-system state UNKNOWN mode DEFAULT group default qlen 1000

[root@node2 ~]# ip -d link show genev_sys_6081

5: genev_sys_6081: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 65000 qdisc noqueue master ovs-system state UNKNOWN mode DEFAULT group default qlen 1000

link/ether 4e:db:f1:e4:43:94 brd ff:ff:ff:ff:ff:ff promiscuity 1 minmtu 68 maxmtu 65465

geneve external id 0 ttl auto dstport 6081 udp6zerocsumrx

openvswitch_slave addrgenmode eui64 numtxqueues 1 numrxqueues 1 gso_max_size 65536 gso_max_segs 65535

[root@node2 ~]# netstat -nulp|grep 6081

udp 0 0 0.0.0.0:6081 0.0.0.0:* -

udp6 0 0 :::6081 :::* -

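When running the experiments below, make sure the MAC addresses are valid and not misconfigured. MAC addresses fall into three classes:

Broadcast address (all F)

FF:FF:FF:FF:FF:FF

Multicast addresses (first octet is odd)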

X1:XX:XX:XX:XX:XX

X3:XX:XX:XX:XX:XX

X5:XX:XX:XX:XX:XX

X7:XX:XX:XX:XX:XX

X9:XX:XX:XX:XX:XX

XB:XX:XX:XX:XX:XX

XD:XX:XX:XX:XX:XX

XF:XX:XX:XX:XX:XX

Usable (unicast) MAC addresses (first octet is even)

X0:XX:XX:XX:XX:XX

X2:XX:XX:XX:XX:XX

X4:XX:XX:XX:XX:XX

X6:XX:XX:XX:XX:XX

X8:XX:XX:XX:XX:XX

XA:XX:XX:XX:XX:XX

XC:XX:XX:XX:XX:XX

XE:XX:XX:XX:XX:XX
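The three classes above can be checked mechanically before assigning an address to an interface: the lowest bit of the first octet separates multicast (1) from unicast (0). The helper below is a small sketch; classify_mac is a hypothetical name, and it only accepts the colon-separated xx:xx:xx:xx:xx:xx form.

```shell
# classify_mac MAC: print broadcast, multicast, unicast, or invalid
classify_mac() {
    mac=$(printf '%s' "$1" | tr 'A-F' 'a-f')
    # accept only six colon-separated two-digit hex octets
    if ! printf '%s\n' "$mac" | grep -Eq '^([0-9a-f]{2}:){5}[0-9a-f]{2}$'; then
        echo invalid
        return 1
    fi
    if [ "$mac" = "ff:ff:ff:ff:ff:ff" ]; then
        echo broadcast
        return 0
    fi
    first=${mac%%:*}                  # first octet, e.g. "00"
    if [ $(( 0x$first & 1 )) -eq 1 ]; then
        echo multicast                # odd first octet
    else
        echo unicast                  # even first octet: safe to assign
    fi
}

classify_mac 00:00:00:00:00:01    # prints unicast
classify_mac 01:00:5e:00:00:01    # prints multicast
```

The addresses 00:00:00:00:00:01 and 00:00:00:00:00:02 used in the following steps both classify as unicast.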

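On each node, create a network namespace ns1 (the same name does not conflict because the namespaces live on different nodes); a network namespace stands in for a virtual machine here. Create a port and interface on the OVS switch, then move the other end into the namespace. A veth pair is two connected virtual network devices: one end sits in the "virtual machine" and the other on the br-int virtual switch.

Run on node1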

[root@node1 ~]# ip netns add ns1

[root@node1 ~]# ip link add veth11 type veth peer name veth12

[root@node1 ~]# ip link set veth12 netns ns1

[root@node1 ~]# ip link set veth11 up

[root@node1 ~]# ip netns exec ns1 ip link set veth12 address 00:00:00:00:00:01

[root@node1 ~]# ip netns exec ns1 ip link set veth12 up

[root@node1 ~]# ovs-vsctl add-port br-int veth11

[root@node1 ~]# ip netns exec ns1 ip addr add 192.168.1.10/24 dev veth12

Run on node2; note that the IP address of veth12 is in the same subnet as veth12 on node1

[root@node2 ~]# ip netns add ns1

[root@node2 ~]# ip link add veth11 type veth peer name veth12

[root@node2 ~]# ip link set veth12 netns ns1

[root@node2 ~]# ip link set veth11 up

[root@node2 ~]# ip netns exec ns1 ip link set veth12 address 00:00:00:00:00:02

[root@node2 ~]# ip netns exec ns1 ip link set veth12 up

[root@node2 ~]# ovs-vsctl add-port br-int veth11

[root@node2 ~]# ip netns exec ns1 ip addr add 192.168.1.20/24 dev veth12

View the br-int switch on node1

[root@node1 ~]# ovs-vsctl show

ed157e0c-cac3-46b9-830c-f2d710b475d5

Bridge br-int

fail_mode: secure

datapath_type: system

Port br-int

Interface br-int

type: internal

Port veth11

Interface veth11

Port ovn-node2-0

Interface ovn-node2-0

type: geneve

options: {csum="true", key=flow, remote_ip="10.1.1.42"}

ovs_version: "3.1.3"

View the br-int switch on node2

[root@node2 ~]# ovs-vsctl show

f6669675-b42d-47de-be95-b26bf6d1e069

Bridge br-int

fail_mode: secure

datapath_type: system

Port veth11

Interface veth11

Port ovn-node1-0

Interface ovn-node1-0

type: geneve

options: {csum="true", key=flow, remote_ip="10.1.1.41"}

Port br-int

Interface br-int

type: internal

ovs_version: "3.1.3"

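At this point a ping from ns1 on node1 to ns1 on node2 fails: the two namespaces sit on different hosts, and nothing yet joins them into a single layer 2 broadcast domain.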

[root@node1 ~]# ip netns exec ns1 ping -c 3 192.168.1.20

PING 192.168.1.20 (192.168.1.20) 56(84) bytes of data.

--- 192.168.1.20 ping statistics ---

3 packets transmitted, 0 received, 100% packet loss, time 2047ms

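On the OpenStack controller node, the OVN northbound database contains logical switch information.

In OpenStack, creating a network is equivalent to creating a logical switch, and that logical switch (network) is stored in the northbound database. One network is one logical switch.

On node1, by contrast, the OVN northbound database contains no logical switch yet.

In OpenStack, VMs on different nodes can reach each other because their IP addresses belong to the same network: different addresses in the same subnet on the same logical switch.

The IP addresses of ns1 on these two nodes were configured by hand and are independent; they are not attached to any logical switch, so they cannot reach each other. Adding a logical switch connects them.

Logical Switch: for the two namespaces attached to OVS on node1 and node2 to communicate, they need an OVN logical switch. Note that a logical switch is a northbound database concept.

Create the logical switch on node1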

[root@node1 ~]# ovn-nbctl ls-add ls1

[root@node1 ~]# ovn-nbctl show

switch 86349e35-cdb4-42f7-a702-4b4a9d5653ef (ls1)

Add ports to the logical switch

Add and configure the port for node1; the MAC address must match the namespace end of the veth pair

[root@node1 ~]# ovn-nbctl lsp-add ls1 ls1-node1-ns1

[root@node1 ~]# ovn-nbctl lsp-set-addresses ls1-node1-ns1 00:00:00:00:00:01

[root@node1 ~]# ovn-nbctl lsp-set-port-security ls1-node1-ns1 00:00:00:00:00:01

Add and configure the port for node2; the MAC address must match as well

[root@node1 ~]# ovn-nbctl lsp-add ls1 ls1-node2-ns1

[root@node1 ~]# ovn-nbctl lsp-set-addresses ls1-node2-ns1 00:00:00:00:00:02

[root@node1 ~]# ovn-nbctl lsp-set-port-security ls1-node2-ns1 00:00:00:00:00:02

View the logical switch

[root@node1 ~]# ovn-nbctl show

switch 86349e35-cdb4-42f7-a702-4b4a9d5653ef (ls1)

port ls1-node1-ns1

addresses: ["00:00:00:00:00:01"]

port ls1-node2-ns1

addresses: ["00:00:00:00:00:02"]

Run on node1 to bind the veth11 port to its logical switch port

[root@node1 ~]# ovs-vsctl set interface veth11 external-ids:iface-id=ls1-node1-ns1

Run on node2 to bind the veth11 port to its logical switch port

[root@node2 ~]# ovs-vsctl set interface veth11 external-ids:iface-id=ls1-node2-ns1

View the southbound database again; the ports are now bound

[root@node1 ~]# ovn-sbctl show

Chassis node2

hostname: node2

Encap geneve

ip: "10.1.1.42"

options: {csum="true"}

Port_Binding ls1-node2-ns1

Chassis node1

hostname: node1

Encap geneve

ip: "10.1.1.41"

options: {csum="true"}

Port_Binding ls1-node1-ns1

Verify connectivity from node1

[root@node1 ~]# ip netns exec ns1 ping -c 3 192.168.1.20

PING 192.168.1.20 (192.168.1.20) 56(84) bytes of data.

64 bytes from 192.168.1.20: icmp_seq=1 ttl=64 time=4.68 ms

64 bytes from 192.168.1.20: icmp_seq=2 ttl=64 time=0.908 ms

64 bytes from 192.168.1.20: icmp_seq=3 ttl=64 time=0.756 ms

--- 192.168.1.20 ping statistics ---

3 packets transmitted, 3 received, 0% packet loss, time 2004ms

rtt min/avg/max/mdev = 0.756/2.115/4.682/1.816 ms

Verify connectivity from node2

[root@node2 ~]# ip netns exec ns1 ping -c 3 192.168.1.10

PING 192.168.1.10 (192.168.1.10) 56(84) bytes of data.

64 bytes from 192.168.1.10: icmp_seq=1 ttl=64 time=3.34 ms

64 bytes from 192.168.1.10: icmp_seq=2 ttl=64 time=0.863 ms

64 bytes from 192.168.1.10: icmp_seq=3 ttl=64 time=0.372 ms

--- 192.168.1.10 ping statistics ---

3 packets transmitted, 3 received, 0% packet loss, time 2003ms

rtt min/avg/max/mdev = 0.372/1.525/3.342/1.300 ms

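Now ns1 on node1 and ns1 on node2 can reach each other. This is equivalent to having created two instances whose IPs are in the same subnet on the same logical switch, which is why they can communicate.

Geneve tunnel verification: using the ping from ns1 on node1 to ns1 on node2 as the example, capture packets at each component along the path to verify Geneve encapsulation and decapsulation. The captures show the essential role the Geneve tunnel plays in OVN/OVS cross-host communication, and that an OVN logical switch connects the layer-2 networks of different hosts; in other words, it extends the OVS layer-2 broadcast domain across hosts. (In the captures below the physical interface is eth0, and the two hosts' tunnel endpoint addresses appear as 10.0.12.7 and 10.0.12.11.)

// On node1, ping from ns1 to ns1 on node2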

# ip netns exec ns1 ping -c 1 192.168.1.20

PING 192.168.1.20 (192.168.1.20) 56(84) bytes of data.

64 bytes from 192.168.1.20: icmp_seq=1 ttl=64 time=1.00 ms

--- 192.168.1.20 ping statistics ---

1 packets transmitted, 1 received, 0% packet loss, time 0ms

rtt min/avg/max/mdev = 1.009/1.009/1.009/0.000 ms

// Capture on veth12 inside ns1 on node1

# ip netns exec ns1 tcpdump -i veth12 -n

tcpdump: verbose output suppressed, use -v or -vv for full protocol decode

listening on veth12, link-type EN10MB (Ethernet), capture size 262144 bytes

22:23:11.364011 IP 192.168.1.10 > 192.168.1.20: ICMP echo request, id 24275, seq 1, length 64

22:23:11.365000 IP 192.168.1.20 > 192.168.1.10: ICMP echo reply, id 24275, seq 1, length 64

22:23:16.364932 ARP, Request who-has 192.168.1.20 tell 192.168.1.10, length 28

22:23:16.365826 ARP, Reply 192.168.1.20 is-at 00:00:00:00:00:02, length 28

// Capture on veth11, the peer of veth12, on node1

# tcpdump -i veth11 -n

tcpdump: verbose output suppressed, use -v or -vv for full protocol decode

listening on veth11, link-type EN10MB (Ethernet), capture size 262144 bytes

22:25:11.225987 IP 192.168.1.10 > 192.168.1.20: ICMP echo request, id 25166, seq 1, length 64

22:25:11.226914 IP 192.168.1.20 > 192.168.1.10: ICMP echo reply, id 25166, seq 1, length 64

22:25:16.236933 ARP, Request who-has 192.168.1.20 tell 192.168.1.10, length 28

22:25:16.237563 ARP, Request who-has 192.168.1.10 tell 192.168.1.20, length 28

22:25:16.237627 ARP, Reply 192.168.1.10 is-at 00:00:00:00:00:01, length 28

22:25:16.237649 ARP, Reply 192.168.1.20 is-at 00:00:00:00:00:02, length 28

// Capture on the genev_sys_6081 interface on node1

# tcpdump -i genev_sys_6081 -n

tcpdump: verbose output suppressed, use -v or -vv for full protocol decode

listening on genev_sys_6081, link-type EN10MB (Ethernet), capture size 262144 bytes

22:28:15.872064 IP 192.168.1.10 > 192.168.1.20: ICMP echo request, id 26492, seq 1, length 64

22:28:15.872717 IP 192.168.1.20 > 192.168.1.10: ICMP echo reply, id 26492, seq 1, length 64

22:28:20.877100 ARP, Request who-has 192.168.1.20 tell 192.168.1.10, length 28

22:28:20.877640 ARP, Request who-has 192.168.1.10 tell 192.168.1.20, length 28

22:28:20.877654 ARP, Reply 192.168.1.20 is-at 00:00:00:00:00:02, length 28

22:28:20.877737 ARP, Reply 192.168.1.10 is-at 00:00:00:00:00:01, length 28

// Capture on eth0 on node1: after passing through genev_sys_6081 the packets are Geneve-encapsulated

# tcpdump -i eth0 port 6081 -n

tcpdump: verbose output suppressed, use -v or -vv for full protocol decode

listening on eth0, link-type EN10MB (Ethernet), capture size 262144 bytes

22:30:23.446147 IP 10.0.12.7.51123 > 10.0.12.11.6081: Geneve, Flags [C], vni 0x1, options [8 bytes]: IP 192.168.1.10 > 192.168.1.20: ICMP echo request, id 27458, seq 1, length 64

22:30:23.446659 IP 10.0.12.11.50319 > 10.0.12.7.6081: Geneve, Flags [C], vni 0x1, options [8 bytes]: IP 192.168.1.20 > 192.168.1.10: ICMP echo reply, id 27458, seq 1, length 64

22:30:28.461137 IP 10.0.12.7.49958 > 10.0.12.11.6081: Geneve, Flags [C], vni 0x1, options [8 bytes]: ARP, Request who-has 192.168.1.20 tell 192.168.1.10, length 28

22:30:28.461554 IP 10.0.12.11.61016 > 10.0.12.7.6081: Geneve, Flags [C], vni 0x1, options [8 bytes]: ARP, Request who-has 192.168.1.10 tell 192.168.1.20, length 28

22:30:28.461571 IP 10.0.12.11.61016 > 10.0.12.7.6081: Geneve, Flags [C], vni 0x1, options [8 bytes]: ARP, Reply 192.168.1.20 is-at 00:00:00:00:00:02, length 28

22:30:28.461669 IP 10.0.12.7.49958 > 10.0.12.11.6081: Geneve, Flags [C], vni 0x1, options [8 bytes]: ARP, Reply 192.168.1.10 is-at 00:00:00:00:00:01, length 28

===================Across hosts===================

// Capture on eth0 on node2

# tcpdump -i eth0 port 6081 -n

tcpdump: verbose output suppressed, use -v or -vv for full protocol decode

listening on eth0, link-type EN10MB (Ethernet), capture size 262144 bytes

22:23:11.364189 IP 10.0.12.7.51123 > 10.0.12.11.6081: Geneve, Flags [C], vni 0x1, options [8 bytes]: IP 192.168.1.10 > 192.168.1.20: ICMP echo request, id 24275, seq 1, length 64

22:23:11.364662 IP 10.0.12.11.50319 > 10.0.12.7.6081: Geneve, Flags [C], vni 0x1, options [8 bytes]: IP 192.168.1.20 > 192.168.1.10: ICMP echo reply, id 24275, seq 1, length 64

22:23:16.365086 IP 10.0.12.7.49958 > 10.0.12.11.6081: Geneve, Flags [C], vni 0x1, options [8 bytes]: ARP, Request who-has 192.168.1.20 tell 192.168.1.10, length 28

22:23:16.365487 IP 10.0.12.11.61016 > 10.0.12.7.6081: Geneve, Flags [C], vni 0x1, options [8 bytes]: ARP, Reply 192.168.1.20 is-at 00:00:00:00:00:02, length 28

// Capture on genev_sys_6081 on node2: packets coming out of genev_sys_6081 have been Geneve-decapsulated

# tcpdump -i genev_sys_6081 -n

tcpdump: verbose output suppressed, use -v or -vv for full protocol decode

listening on genev_sys_6081, link-type EN10MB (Ethernet), capture size 262144 bytes

22:25:11.226186 IP 192.168.1.10 > 192.168.1.20: ICMP echo request, id 25166, seq 1, length 64

22:25:11.226553 IP 192.168.1.20 > 192.168.1.10: ICMP echo reply, id 25166, seq 1, length 64

22:25:16.237070 ARP, Request who-has 192.168.1.20 tell 192.168.1.10, length 28

22:25:16.237162 ARP, Request who-has 192.168.1.10 tell 192.168.1.20, length 28

22:25:16.237203 ARP, Reply 192.168.1.20 is-at 00:00:00:00:00:02, length 28

22:25:16.237523 ARP, Reply 192.168.1.10 is-at 00:00:00:00:00:01, length 28

// Capture on veth11 on node2

# tcpdump -i veth11 -n

tcpdump: verbose output suppressed, use -v or -vv for full protocol decode

listening on veth11, link-type EN10MB (Ethernet), capture size 262144 bytes

22:28:15.872198 IP 192.168.1.10 > 192.168.1.20: ICMP echo request, id 26492, seq 1, length 64

22:28:15.872235 IP 192.168.1.20 > 192.168.1.10: ICMP echo reply, id 26492, seq 1, length 64

22:28:20.876913 ARP, Request who-has 192.168.1.10 tell 192.168.1.20, length 28

22:28:20.877274 ARP, Request who-has 192.168.1.20 tell 192.168.1.10, length 28

22:28:20.877287 ARP, Reply 192.168.1.20 is-at 00:00:00:00:00:02, length 28

22:28:20.877613 ARP, Reply 192.168.1.10 is-at 00:00:00:00:00:01, length 28

// Capture on veth12 inside ns1 on node2

# ip netns exec ns1 tcpdump -i veth12 -n

tcpdump: verbose output suppressed, use -v or -vv for full protocol decode

listening on veth12, link-type EN10MB (Ethernet), capture size 262144 bytes

22:30:23.446212 IP 192.168.1.10 > 192.168.1.20: ICMP echo request, id 27458, seq 1, length 64

22:30:23.446242 IP 192.168.1.20 > 192.168.1.10: ICMP echo reply, id 27458, seq 1, length 64

22:30:28.460912 ARP, Request who-has 192.168.1.10 tell 192.168.1.20, length 28

22:30:28.461260 ARP, Request who-has 192.168.1.20 tell 192.168.1.10, length 28

22:30:28.461272 ARP, Reply 192.168.1.20 is-at 00:00:00:00:00:02, length 28

22:30:28.461530 ARP, Reply 192.168.1.10 is-at 00:00:00:00:00:01, length 28

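Logical Router:

The previous section verified same-subnet communication across hosts through an OVN logical switch. How do different subnets communicate? That requires an OVN logical router.

First create another network namespace, ns2, on node2 with an IP in a different subnet, 192.168.2.30/24, and add a second logical switch.

Run on node2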

[root@node2 ~]# ip netns  # list network namespaces

ns1 (id: 0)

[root@node2 ~]# ip netns add ns2

[root@node2 ~]# ip link add veth21 type veth peer name veth22

[root@node2 ~]# ip link set veth22 netns ns2

[root@node2 ~]# ip link set veth21 up

[root@node2 ~]# ip netns exec ns2 ip link set veth22 address 00:00:00:00:00:03

[root@node2 ~]# ip netns exec ns2 ip link set veth22 up

[root@node2 ~]# ovs-vsctl add-port br-int veth21

[root@node2 ~]# ip netns exec ns2 ip addr add 192.168.2.30/24 dev veth22

[root@node2 ~]# ip netns

ns2 (id: 1)

ns1 (id: 0)

On node1, add a second logical switch with the OVN commands and configure its port

[root@node1 ~]# ovn-nbctl ls-add ls2

[root@node1 ~]# ovn-nbctl lsp-add ls2 ls2-node2-ns2

[root@node1 ~]# ovn-nbctl lsp-set-addresses ls2-node2-ns2 00:00:00:00:00:03

[root@node1 ~]# ovn-nbctl lsp-set-port-security ls2-node2-ns2 00:00:00:00:00:03

On node2, bind the OVS port to the OVN logical switch port

[root@node2 ~]# ovs-vsctl set interface veth21 external-ids:iface-id=ls2-node2-ns2

View the northbound and southbound databases

[root@node1 ~]# ovn-nbctl show

switch 484606e0-944d-4c6b-9807-502f05bebb18 (ls2)

port ls2-node2-ns2

addresses: ["00:00:00:00:00:03"]

switch 86349e35-cdb4-42f7-a702-4b4a9d5653ef (ls1)

port ls1-node1-ns1

addresses: ["00:00:00:00:00:01"]

port ls1-node2-ns1

addresses: ["00:00:00:00:00:02"]

[root@node1 ~]# ovn-sbctl show

Chassis node2

hostname: node2

Encap geneve

ip: "10.1.1.42"

options: {csum="true"}

Port_Binding ls2-node2-ns2

Port_Binding ls1-node2-ns1

Chassis node1

hostname: node1

Encap geneve

ip: "10.1.1.41"

options: {csum="true"}

Port_Binding ls1-node1-ns1

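Create an OVN logical router to connect the two logical switches

Add the logical router; the router information is stored in the northbound database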

[root@node1 ~]# ovn-nbctl lr-add lr1

Add a port on the logical router for switch ls1

[root@node1 ~]# ovn-nbctl lrp-add lr1 lr1-ls1 00:00:00:00:11:00 192.168.1.1/24

Add a port on the logical router for switch ls2

[root@node1 ~]# ovn-nbctl lrp-add lr1 lr1-ls2 00:00:00:00:12:00 192.168.2.1/24

Connect the logical router to logical switch ls1

[root@node1 ~]# ovn-nbctl lsp-add ls1 ls1-lr1

[root@node1 ~]# ovn-nbctl lsp-set-type ls1-lr1 router

[root@node1 ~]# ovn-nbctl lsp-set-addresses ls1-lr1 00:00:00:00:11:00

[root@node1 ~]# ovn-nbctl lsp-set-options ls1-lr1 router-port=lr1-ls1

Connect the logical router to logical switch ls2

[root@node1 ~]# ovn-nbctl lsp-add ls2 ls2-lr1

[root@node1 ~]# ovn-nbctl lsp-set-type ls2-lr1 router

[root@node1 ~]# ovn-nbctl lsp-set-addresses ls2-lr1 00:00:00:00:12:00

[root@node1 ~]# ovn-nbctl lsp-set-options ls2-lr1 router-port=lr1-ls2
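Attaching a router to a switch always takes the same five steps: one router port, then a switch port of type router that points back at it and carries the same MAC. The sketch below bundles them; the function name connect_lr_ls, the OVN_NBCTL override variable and the ROUTER-SWITCH / SWITCH-ROUTER port naming are assumptions that mirror the names used above, not an OVN convention.

```shell
# Hypothetical override: set OVN_NBCTL="echo ovn-nbctl" for a dry run.
OVN_NBCTL="${OVN_NBCTL:-ovn-nbctl}"

# connect_lr_ls ROUTER SWITCH MAC CIDR
# Creates router port ROUTER-SWITCH and the peer switch port SWITCH-ROUTER.
connect_lr_ls() {
    local lr="$1" ls="$2" mac="$3" cidr="$4"
    local lrp="${lr}-${ls}" lsp="${ls}-${lr}"
    $OVN_NBCTL lrp-add "$lr" "$lrp" "$mac" "$cidr" &&
    $OVN_NBCTL lsp-add "$ls" "$lsp" &&
    $OVN_NBCTL lsp-set-type "$lsp" router &&
    $OVN_NBCTL lsp-set-addresses "$lsp" "$mac" &&
    $OVN_NBCTL lsp-set-options "$lsp" router-port="$lrp"
}
```

For example, connect_lr_ls lr1 ls2 00:00:00:00:12:00 192.168.2.1/24 issues the same commands as the ls2 steps above.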

View the northbound and southbound databases

[root@node1 ~]# ovn-nbctl show

switch 484606e0-944d-4c6b-9807-502f05bebb18 (ls2)

port ls2-node2-ns2

addresses: ["00:00:00:00:00:03"]

port ls2-lr1

type: router

addresses: ["00:00:00:00:12:00"]

router-port: lr1-ls2

switch 86349e35-cdb4-42f7-a702-4b4a9d5653ef (ls1)

port ls1-node1-ns1

addresses: ["00:00:00:00:00:01"]

port ls1-node2-ns1

addresses: ["00:00:00:00:00:02"]

port ls1-lr1

type: router

addresses: ["00:00:00:00:11:00"]

router-port: lr1-ls1

router e9c151a0-5db7-4af6-91bd-89049c4bbf9f (lr1)

port lr1-ls2

mac: "00:00:00:00:12:00"

networks: ["192.168.2.1/24"]

port lr1-ls1

mac: "00:00:00:00:11:00"

networks: ["192.168.1.1/24"]

[root@node1 ~]# ovn-sbctl show

Chassis node2

hostname: node2

Encap geneve

ip: "10.1.1.42"

options: {csum="true"}

Port_Binding ls2-node2-ns2

Port_Binding ls1-node2-ns1

Chassis node1

hostname: node1

Encap geneve

ip: "10.1.1.41"

options: {csum="true"}

Port_Binding ls1-node1-ns1

Ping from ns1 on node1 (192.168.1.10/24) to ns2 on node2 (192.168.2.30) to verify connectivity across nodes and across subnets.

[root@node1 ~]# ip netns exec ns1 ping -c 1 192.168.2.30

connect: Network is unreachable

Check the routing table in ns1: there is no route to the 192.168.2.0/24 network yet

[root@node1 ~]# ip netns exec ns1 ip route show

192.168.1.0/24 dev veth12 proto kernel scope link src 192.168.1.10

[root@node1 ~]# ip netns exec ns1 route -n

Kernel IP routing table

Destination Gateway Genmask Flags Metric Ref Use Iface

192.168.1.0 0.0.0.0 255.255.255.0 U 0 0 0 veth12
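The "Network is unreachable" error follows directly from this table: the only entry covers 192.168.1.0/24, the destination 192.168.2.30 falls outside it, and there is no default route. The subnet test the kernel applies can be sketched in plain shell arithmetic; the function names ip_to_int and in_subnet are hypothetical and the code handles dotted-decimal IPv4 only.

```shell
# ip_to_int A.B.C.D -> prints the address as a 32-bit integer
ip_to_int() {
    local IFS=.
    set -- $1                       # split the address on dots
    echo $(( ($1 << 24) | ($2 << 16) | ($3 << 8) | $4 ))
}

# in_subnet IP NETWORK PREFIXLEN -> exit status 0 if IP is inside the network
in_subnet() {
    local ip net mask
    ip=$(ip_to_int "$1")
    net=$(ip_to_int "$2")
    mask=$(( (0xFFFFFFFF << (32 - $3)) & 0xFFFFFFFF ))
    [ $(( ip & mask )) -eq $(( net & mask )) ]
}

in_subnet 192.168.1.20 192.168.1.0 24 && echo "on-link"       # prints on-link
in_subnet 192.168.2.30 192.168.1.0 24 || echo "needs a route" # prints needs a route
```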

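Because a router is a layer-3 concept, the OVN logical switch ports should carry address information for routing. Note that the commands below, as recorded, set only the MAC address again; to register the IP as well, the address argument takes the form "MAC IP" (for example "00:00:00:00:00:01 192.168.1.10").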

[root@node1 ~]# ovn-nbctl lsp-set-addresses ls1-node1-ns1 00:00:00:00:00:01

[root@node1 ~]# ovn-nbctl lsp-set-addresses ls1-node2-ns1 00:00:00:00:00:02

[root@node1 ~]# ovn-nbctl lsp-set-addresses ls2-node2-ns2 00:00:00:00:00:03
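The three commands above pass only a MAC address. ovn-nbctl lsp-set-addresses also accepts an entry of the form "MAC IP", given as a single quoted argument, which records the IP on the logical switch port as well. The following is a minimal sketch, assuming the namespace IPs used in this walkthrough; the helper name set_lsp_mac_ip and the OVN_NBCTL override variable are hypothetical.

```shell
# Hypothetical override: set OVN_NBCTL="echo ovn-nbctl" for a dry run.
OVN_NBCTL="${OVN_NBCTL:-ovn-nbctl}"

# set_lsp_mac_ip PORT MAC IP
set_lsp_mac_ip() {
    local port="$1" mac="$2" ip="$3"
    # MAC and IP are passed as ONE argument so that they form one address entry.
    $OVN_NBCTL lsp-set-addresses "$port" "$mac $ip"
}

# Usage against a live northbound DB (IPs taken from this walkthrough):
# set_lsp_mac_ip ls1-node1-ns1 00:00:00:00:00:01 192.168.1.10
# set_lsp_mac_ip ls1-node2-ns1 00:00:00:00:00:02 192.168.1.20
# set_lsp_mac_ip ls2-node2-ns2 00:00:00:00:00:03 192.168.2.30
```

If this form is used, the addresses shown by ovn-nbctl show would include the IP next to the MAC, unlike the MAC-only listings recorded in this section.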

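Then add a default route in each of the three network namespaces, with the gateway set to the IP of the corresponding OVN logical router port

ns1 on node1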

[root@node1 ~]# ip netns exec ns1 ip route add default via 192.168.1.1 dev veth12

ns1 on node2

[root@node2 ~]# ip netns exec ns1 ip route add default via 192.168.1.1 dev veth12

ns2 on node2

[root@node2 ~]# ip netns exec ns2 ip route add default via 192.168.2.1 dev veth22

View the northbound and southbound databases once more

[root@node1 ~]# ovn-nbctl show

switch 484606e0-944d-4c6b-9807-502f05bebb18 (ls2)

port ls2-node2-ns2

addresses: ["00:00:00:00:00:03"]

port ls2-lr1

type: router

addresses: ["00:00:00:00:12:00"]

router-port: lr1-ls2

switch 86349e35-cdb4-42f7-a702-4b4a9d5653ef (ls1)

port ls1-node1-ns1

addresses: ["00:00:00:00:00:01"]

port ls1-node2-ns1

addresses: ["00:00:00:00:00:02"]

port ls1-lr1

type: router

addresses: ["00:00:00:00:11:00"]

router-port: lr1-ls1

router e9c151a0-5db7-4af6-91bd-89049c4bbf9f (lr1)

port lr1-ls2

mac: "00:00:00:00:12:00"

networks: ["192.168.2.1/24"]

port lr1-ls1

mac: "00:00:00:00:11:00"

networks: ["192.168.1.1/24"]

[root@node1 ~]# ovn-sbctl show

Chassis node2

hostname: node2

Encap geneve

ip: "10.1.1.42"

options: {csum="true"}

Port_Binding ls2-node2-ns2

Port_Binding ls1-node2-ns1

Chassis node1

hostname: node1

Encap geneve

ip: "10.1.1.41"

options: {csum="true"}

Port_Binding ls1-node1-ns1

ns1 on node1 reaches its gateway

[root@node1 ~]# ip netns exec ns1 ping -c 1 192.168.1.1

PING 192.168.1.1 (192.168.1.1) 56(84) bytes of data.

64 bytes from 192.168.1.1: icmp_seq=1 ttl=254 time=20.10 ms

--- 192.168.1.1 ping statistics ---

1 packets transmitted, 1 received, 0% packet loss, time 0ms

rtt min/avg/max/mdev = 20.950/20.950/20.950/0.000 ms

ns2 on node2 reaches its gateway

[root@node2 ~]# ip netns exec ns2 ping -c 1 192.168.2.1

PING 192.168.2.1 (192.168.2.1) 56(84) bytes of data.

64 bytes from 192.168.2.1: icmp_seq=1 ttl=254 time=38.5 ms

--- 192.168.2.1 ping statistics ---

1 packets transmitted, 1 received, 0% packet loss, time 0ms

rtt min/avg/max/mdev = 38.477/38.477/38.477/0.000 ms

ns1 on node1 pings ns2 on node2

[root@node1 ~]# ip netns exec ns1 ping -c 1 192.168.2.30

PING 192.168.2.30 (192.168.2.30) 56(84) bytes of data.

64 bytes from 192.168.2.30: icmp_seq=1 ttl=63 time=1.23 ms

--- 192.168.2.30 ping statistics ---

1 packets transmitted, 1 received, 0% packet loss, time 0ms

rtt min/avg/max/mdev = 1.225/1.225/1.225/0.000 ms

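Note: the OVN logical switch and logical router are northbound database concepts. ovn-northd "translates" them into the southbound database, and the ovn-controller on each hypervisor then syncs them to the local OVS/ovsdb-server, where they finally take the form of OVS ports, flow tables, and so on.

Through the Geneve tunnel, an OVN logical switch extends the layer-2 broadcast domain to OVS on different hosts, while an OVN logical router extends layer-3 forwarding (routing between subnets) to OVS on different hosts. Together they enable cross-host network communication.

Both logical switches and logical routers produce corresponding flow table entries on every hypervisor. This is the principle behind OVN network high availability and behind how it handles issues such as instance migration.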