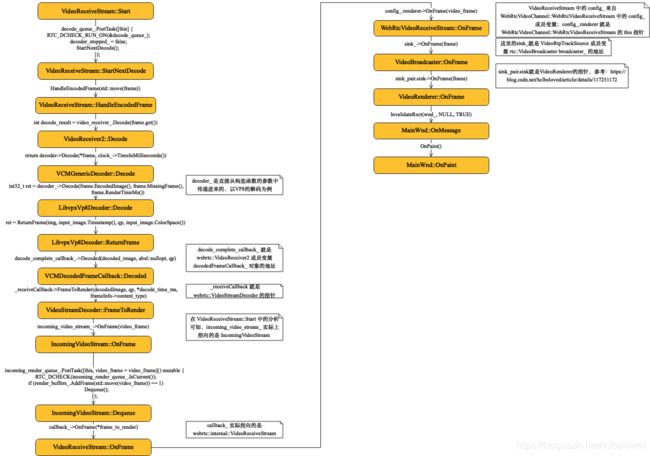

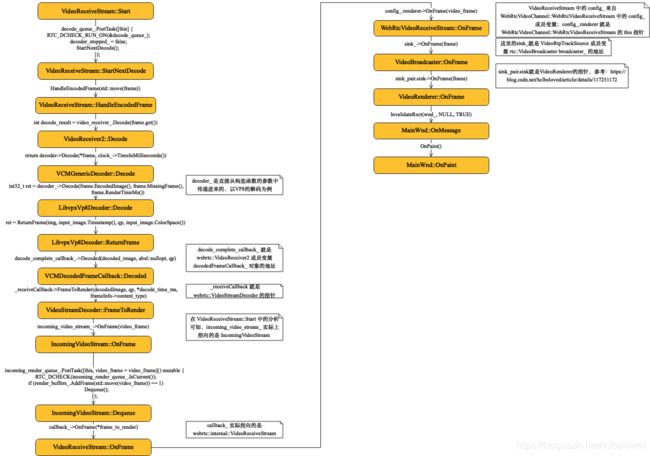

1 Function call graph

2 Code

void VideoReceiveStream::Start() {

RTC_DCHECK_RUN_ON(&worker_sequence_checker_);

if (decoder_running_) {

return;

}

const bool protected_by_fec = config_.rtp.protected_by_flexfec ||

rtp_video_stream_receiver_.IsUlpfecEnabled();

frame_buffer_->Start();

if (rtp_video_stream_receiver_.IsRetransmissionsEnabled() &&

protected_by_fec) {

frame_buffer_->SetProtectionMode(kProtectionNackFEC);

}

transport_adapter_.Enable();

rtc::VideoSinkInterface<VideoFrame>* renderer = nullptr;

if (config_.enable_prerenderer_smoothing) {

incoming_video_stream_.reset(new IncomingVideoStream(

task_queue_factory_, config_.render_delay_ms, this));

renderer = incoming_video_stream_.get();

} else {

renderer = this;

}

for (const Decoder& decoder : config_.decoders) {

std::unique_ptr<VideoDecoder> video_decoder =

decoder.decoder_factory->LegacyCreateVideoDecoder(decoder.video_format, // VideoDecoderFactory::LegacyCreateVideoDecoder

config_.stream_id);

// If we still have no valid decoder, we have to create a "Null" decoder

// that ignores all calls. The reason we can get into this state is that the

// old decoder factory interface doesn't have a way to query supported

// codecs.

if (!video_decoder) {

video_decoder = std::make_unique<NullVideoDecoder>();

}

std::string decoded_output_file =

field_trial::FindFullName("WebRTC-DecoderDataDumpDirectory");

// Because '/' can't be used inside a field trial parameter, we use ';'

// instead.

// This is only relevant to WebRTC-DecoderDataDumpDirectory

// field trial. ';' is chosen arbitrary. Even though it's a legal character

// in some file systems, we can sacrifice ability to use it in the path to

// dumped video, since it's developers-only feature for debugging.

absl::c_replace(decoded_output_file, ';', '/');

if (!decoded_output_file.empty()) {

char filename_buffer[256];

rtc::SimpleStringBuilder ssb(filename_buffer);

ssb << decoded_output_file << "/webrtc_receive_stream_"

<< this->config_.rtp.remote_ssrc << "-" << rtc::TimeMicros()

<< ".ivf";

video_decoder = CreateFrameDumpingDecoderWrapper(

std::move(video_decoder), FileWrapper::OpenWriteOnly(ssb.str()));

}

video_decoders_.push_back(std::move(video_decoder));

video_receiver_.RegisterExternalDecoder(video_decoders_.back().get(), // register the decoder

decoder.payload_type);

VideoCodec codec = CreateDecoderVideoCodec(decoder); // note this call

const bool raw_payload =

config_.rtp.raw_payload_types.count(codec.plType) > 0;

rtp_video_stream_receiver_.AddReceiveCodec(

codec, decoder.video_format.parameters, raw_payload);

RTC_CHECK_EQ(VCM_OK, video_receiver_.RegisterReceiveCodec(

&codec, num_cpu_cores_, false));

}

RTC_DCHECK(renderer != nullptr);

video_stream_decoder_.reset(

new VideoStreamDecoder(&video_receiver_, &stats_proxy_, renderer)); // Create the VideoStreamDecoder, passing in a pointer to VideoReceiveStream's VideoReceiver2 member video_receiver_

// Make sure we register as a stats observer *after* we've prepared the

// |video_stream_decoder_|.

call_stats_->RegisterStatsObserver(this);

// Start decoding on task queue.

video_receiver_.DecoderThreadStarting(); // VideoReceiver2 video_receiver_;

stats_proxy_.DecoderThreadStarting();

decode_queue_.PostTask([this] {

RTC_DCHECK_RUN_ON(&decode_queue_);

decoder_stopped_ = false;

StartNextDecode(); // runs over and over in the task queue's event loop

});

decoder_running_ = true;

rtp_video_stream_receiver_.StartReceive(); // note this call

}

std::unique_ptr<VideoDecoder> VideoDecoderFactory::LegacyCreateVideoDecoder(

const SdpVideoFormat& format,

const std::string& receive_stream_id) {

return CreateVideoDecoder(format); // polymorphic call

}

std::unique_ptr<VideoDecoder> InternalDecoderFactory::CreateVideoDecoder(

const SdpVideoFormat& format) {

if (!IsFormatSupported(GetSupportedFormats(), format)) {

RTC_LOG(LS_ERROR) << "Trying to create decoder for unsupported format";

return nullptr;

}

if (absl::EqualsIgnoreCase(format.name, cricket::kVp8CodecName))

return VP8Decoder::Create(); // note this call

if (absl::EqualsIgnoreCase(format.name, cricket::kVp9CodecName))

return VP9Decoder::Create(); // note this call

if (absl::EqualsIgnoreCase(format.name, cricket::kH264CodecName))

return H264Decoder::Create(); // note this call

RTC_NOTREACHED();

return nullptr;

}
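The virtual dispatch in `LegacyCreateVideoDecoder` above can be illustrated with a stripped-down sketch. Every name below (`Decoder`, `DecoderFactory`, `BuiltinFactory`, `Vp8Like`, `H264Like`) is a hypothetical stand-in rather than a WebRTC type; only the shape of the call is mirrored: the legacy entry point forwards to a virtual `Create`, so the concrete factory decides which decoder comes back, and an unsupported format yields `nullptr`.

```cpp
#include <cassert>
#include <memory>
#include <string>

// Hypothetical stand-ins for VideoDecoder and two implementations.
struct Decoder {
  virtual ~Decoder() = default;
  virtual std::string Name() const = 0;
};
struct Vp8Like : Decoder {
  std::string Name() const override { return "vp8"; }
};
struct H264Like : Decoder {
  std::string Name() const override { return "h264"; }
};

// Base factory: the legacy entry point forwards to the virtual Create().
class DecoderFactory {
 public:
  virtual ~DecoderFactory() = default;
  virtual std::unique_ptr<Decoder> Create(const std::string& format) = 0;

  std::unique_ptr<Decoder> LegacyCreate(const std::string& format,
                                        const std::string& /*stream_id*/) {
    assert(!format.empty());
    return Create(format);  // Dispatches to the derived factory.
  }
};

// Concrete factory: picks an implementation by format name.
class BuiltinFactory : public DecoderFactory {
 public:
  std::unique_ptr<Decoder> Create(const std::string& format) override {
    if (format == "VP8") return std::make_unique<Vp8Like>();
    if (format == "H264") return std::make_unique<H264Like>();
    return nullptr;  // Unsupported: the caller must supply a fallback.
  }
};
```

The `nullptr` branch is why `VideoReceiveStream::Start` checks `!video_decoder` and substitutes a null decoder.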

VideoCodec CreateDecoderVideoCodec(const VideoReceiveStream::Decoder& decoder) {

VideoCodec codec;

memset(&codec, 0, sizeof(codec));

codec.plType = decoder.payload_type;

codec.codecType = PayloadStringToCodecType(decoder.video_format.name);

if (codec.codecType == kVideoCodecVP8) {

*(codec.VP8()) = VideoEncoder::GetDefaultVp8Settings();

} else if (codec.codecType == kVideoCodecVP9) {

*(codec.VP9()) = VideoEncoder::GetDefaultVp9Settings();

} else if (codec.codecType == kVideoCodecH264) {

*(codec.H264()) = VideoEncoder::GetDefaultH264Settings(); // note this call

} else if (codec.codecType == kVideoCodecMultiplex) {

VideoReceiveStream::Decoder associated_decoder = decoder;

associated_decoder.video_format =

SdpVideoFormat(CodecTypeToPayloadString(kVideoCodecVP9));

VideoCodec associated_codec = CreateDecoderVideoCodec(associated_decoder);

associated_codec.codecType = kVideoCodecMultiplex;

return associated_codec;

}

codec.width = 320;

codec.height = 180;

const int kDefaultStartBitrate = 300;

codec.startBitrate = codec.minBitrate = codec.maxBitrate =

kDefaultStartBitrate;

return codec;

}

// Some setup

VideoStreamDecoder::VideoStreamDecoder(

VideoReceiver2* video_receiver,

ReceiveStatisticsProxy* receive_statistics_proxy,

rtc::VideoSinkInterface<VideoFrame>* incoming_video_stream)

: video_receiver_(video_receiver),

receive_stats_callback_(receive_statistics_proxy),

incoming_video_stream_(incoming_video_stream) {

RTC_DCHECK(video_receiver_);

video_receiver_->RegisterReceiveCallback(this); // note this call

}

int32_t VideoReceiver2::RegisterReceiveCallback(

VCMReceiveCallback* receiveCallback) {

RTC_DCHECK_RUN_ON(&construction_thread_checker_);

RTC_DCHECK(!IsDecoderThreadRunning());

// This value is set before the decoder thread starts and unset after

// the decoder thread has been stopped.

decodedFrameCallback_.SetUserReceiveCallback(receiveCallback); // note this call

return VCM_OK;

}

void VCMDecodedFrameCallback::SetUserReceiveCallback(

VCMReceiveCallback* receiveCallback) {

RTC_DCHECK(construction_thread_.IsCurrent());

RTC_DCHECK((!_receiveCallback && receiveCallback) ||

(_receiveCallback && !receiveCallback));

_receiveCallback = receiveCallback; // in practice this points to the webrtc::VideoStreamDecoder

}

void VideoReceiveStream::StartNextDecode() {

TRACE_EVENT0("webrtc", "VideoReceiveStream::StartNextDecode");

frame_buffer_->NextFrame( // keeps fetching frames

GetWaitMs(), keyframe_required_, &decode_queue_,

/* encoded frame handler */

[this](std::unique_ptr<EncodedFrame> frame, ReturnReason res) {

RTC_DCHECK_EQ(frame == nullptr, res == ReturnReason::kTimeout);

RTC_DCHECK_EQ(frame != nullptr, res == ReturnReason::kFrameFound);

decode_queue_.PostTask([this, frame = std::move(frame)]() mutable { // post the fetched frame back onto the queue for handling

RTC_DCHECK_RUN_ON(&decode_queue_);

if (decoder_stopped_)

return;

if (frame) {

HandleEncodedFrame(std::move(frame)); // note this call

} else {

HandleFrameBufferTimeout();

}

StartNextDecode();

});

});

}
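The loop in `StartNextDecode` never blocks: each iteration fetches one frame, posts a task that handles it, and that task schedules the next iteration. The following is a minimal single-threaded sketch of that pattern. `FakeTaskQueue` and `DecodeLoop` are hypothetical names, frames are plain `int`s, and, unlike the real code (which keeps looping after a timeout), the sketch stops at the first timeout so that `RunAll()` terminates.

```cpp
#include <cassert>
#include <deque>
#include <functional>
#include <optional>
#include <utility>
#include <vector>

// Hypothetical stand-in for decode_queue_: tasks run FIFO inside RunAll().
class FakeTaskQueue {
 public:
  void PostTask(std::function<void()> task) {
    tasks_.push_back(std::move(task));
  }
  void RunAll() {
    while (!tasks_.empty()) {
      std::function<void()> task = std::move(tasks_.front());
      tasks_.pop_front();
      task();
    }
  }

 private:
  std::deque<std::function<void()>> tasks_;
};

class DecodeLoop {
 public:
  DecodeLoop(FakeTaskQueue* queue, std::deque<int> frames)
      : queue_(queue), pending_(std::move(frames)) {
    assert(queue_ != nullptr);
  }

  void Start() {
    queue_->PostTask([this] {
      stopped_ = false;
      StartNextDecode();
    });
  }

  const std::vector<int>& decoded() const { return decoded_; }
  int timeouts() const { return timeouts_; }

 private:
  // Stand-in for FrameBuffer::NextFrame: a frame, or nothing on timeout.
  std::optional<int> NextFrame() {
    if (pending_.empty()) return std::nullopt;
    int frame = pending_.front();
    pending_.pop_front();
    return frame;
  }

  void StartNextDecode() {
    std::optional<int> frame = NextFrame();
    queue_->PostTask([this, frame] {
      if (stopped_) return;
      if (frame) {
        decoded_.push_back(*frame);  // Stand-in for HandleEncodedFrame.
        StartNextDecode();           // Schedule the next iteration.
      } else {
        ++timeouts_;      // Stand-in for HandleFrameBufferTimeout.
        stopped_ = true;  // Sketch only: end the loop on the first timeout.
      }
    });
  }

  FakeTaskQueue* queue_;
  std::deque<int> pending_;
  std::vector<int> decoded_;
  int timeouts_ = 0;
  bool stopped_ = true;
};
```

Because every iteration is a separate task, other work posted to the same queue (such as a stop request that sets the stopped flag) can interleave between frames.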

void VideoReceiveStream::HandleEncodedFrame(

std::unique_ptr<EncodedFrame> frame) {

int64_t now_ms = clock_->TimeInMilliseconds();

// Current OnPreDecode only cares about QP for VP8.

int qp = -1;

if (frame->CodecSpecific()->codecType == kVideoCodecVP8) {

if (!vp8::GetQp(frame->data(), frame->size(), &qp)) {

RTC_LOG(LS_WARNING) << "Failed to extract QP from VP8 video frame";

}

}

stats_proxy_.OnPreDecode(frame->CodecSpecific()->codecType, qp);

int decode_result = video_receiver_.Decode(frame.get()); // note this call: VideoReceiver2::Decode

if (decode_result == WEBRTC_VIDEO_CODEC_OK ||

decode_result == WEBRTC_VIDEO_CODEC_OK_REQUEST_KEYFRAME) {

keyframe_required_ = false;

frame_decoded_ = true;

rtp_video_stream_receiver_.FrameDecoded(frame->id.picture_id);

if (decode_result == WEBRTC_VIDEO_CODEC_OK_REQUEST_KEYFRAME)

RequestKeyFrame();

} else if (!frame_decoded_ || !keyframe_required_ ||

(last_keyframe_request_ms_ + max_wait_for_keyframe_ms_ < now_ms)) {

keyframe_required_ = true;

// TODO(philipel): Remove this keyframe request when downstream project

// has been fixed.

RequestKeyFrame();

last_keyframe_request_ms_ = now_ms;

}

}
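The keyframe-request branch at the end of `HandleEncodedFrame` is easier to follow as a small state machine. The sketch below reproduces only that decision; `KeyframeState`, `DecodeResult` and `OnDecodeResult` are hypothetical names, and the 200 ms wait is an assumed value chosen for illustration.

```cpp
#include <cassert>
#include <cstdint>

enum class DecodeResult { kOk, kOkRequestKeyframe, kError };

struct KeyframeState {
  bool keyframe_required = false;
  bool frame_decoded = false;
  int64_t last_request_ms = 0;
  int64_t max_wait_ms = 200;  // Assumed value for this sketch.
};

// Updates `s` for one decode outcome and returns true when a keyframe
// request should be sent.
bool OnDecodeResult(KeyframeState& s, DecodeResult result, int64_t now_ms) {
  assert(now_ms >= 0);
  if (result == DecodeResult::kOk ||
      result == DecodeResult::kOkRequestKeyframe) {
    s.keyframe_required = false;
    s.frame_decoded = true;
    return result == DecodeResult::kOkRequestKeyframe;
  }
  // Decode failed. Request a keyframe if nothing has been decoded yet, if
  // no request is outstanding, or if the outstanding request has timed out.
  if (!s.frame_decoded || !s.keyframe_required ||
      s.last_request_ms + s.max_wait_ms < now_ms) {
    s.keyframe_required = true;
    s.last_request_ms = now_ms;
    return true;
  }
  return false;
}
```

Once a frame has been decoded, repeated failures inside the wait window produce a single request; before the first successful decode, every failure produces one.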

int32_t VideoReceiver2::Decode(const VCMEncodedFrame* frame) {

RTC_DCHECK_RUN_ON(&decoder_thread_checker_);

TRACE_EVENT0("webrtc", "VideoReceiver2::Decode");

// Change decoder if payload type has changed

VCMGenericDecoder* decoder =

codecDataBase_.GetDecoder(*frame, &decodedFrameCallback_); // note this call

if (decoder == nullptr) {

return VCM_NO_CODEC_REGISTERED;

}

return decoder->Decode(*frame, clock_->TimeInMilliseconds()); // note this call: VCMGenericDecoder::Decode

}

VCMGenericDecoder* VCMDecoderDataBase::GetDecoder(

const VCMEncodedFrame& frame,

VCMDecodedFrameCallback* decoded_frame_callback) {

RTC_DCHECK(decoded_frame_callback->UserReceiveCallback());

uint8_t payload_type = frame.PayloadType();

if (payload_type == receive_codec_.plType || payload_type == 0) {

return ptr_decoder_.get();

}

// If decoder exists - delete.

if (ptr_decoder_) {

ptr_decoder_.reset();

memset(&receive_codec_, 0, sizeof(VideoCodec));

}

ptr_decoder_ = CreateAndInitDecoder(frame, &receive_codec_); // note this call

if (!ptr_decoder_) {

return nullptr;

}

VCMReceiveCallback* callback = decoded_frame_callback->UserReceiveCallback();

callback->OnIncomingPayloadType(receive_codec_.plType); // note this call

if (ptr_decoder_->RegisterDecodeCompleteCallback(decoded_frame_callback) < // note this call

0) {

ptr_decoder_.reset();

memset(&receive_codec_, 0, sizeof(VideoCodec));

return nullptr;

}

return ptr_decoder_.get();

}
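`GetDecoder` is a one-slot cache keyed by payload type: the current decoder is reused while the payload type stays the same and is torn down and recreated when it changes. The sketch below shows only that behaviour. `FakeDecoder` and `DecoderCache` are hypothetical names, and the special case for payload type 0 and the callback registration are left out.

```cpp
#include <cassert>
#include <cstdint>
#include <memory>
#include <set>

struct FakeDecoder {
  uint8_t payload_type;
};

class DecoderCache {
 public:
  // Stand-in for registering a decoder for a payload type.
  void Register(uint8_t payload_type) { registered_.insert(payload_type); }

  // Returns the decoder for `payload_type`, recreating it only on change.
  FakeDecoder* GetDecoder(uint8_t payload_type) {
    if (current_ && current_->payload_type == payload_type)
      return current_.get();  // Cache hit: same payload type as last time.
    current_.reset();         // Payload type changed: drop the old decoder.
    if (registered_.count(payload_type) == 0)
      return nullptr;         // Nothing registered for this payload type.
    current_ = std::make_unique<FakeDecoder>();
    current_->payload_type = payload_type;
    ++creations_;
    assert(current_->payload_type == payload_type);
    return current_.get();
  }

  int creations() const { return creations_; }

 private:
  std::set<uint8_t> registered_;
  std::unique_ptr<FakeDecoder> current_;
  int creations_ = 0;
};
```

A stream that alternates between two payload types would recreate the decoder on every switch, which is why the cache is keyed on the most recent type only.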

std::unique_ptr<VCMGenericDecoder> VCMDecoderDataBase::CreateAndInitDecoder(

const VCMEncodedFrame& frame,

VideoCodec* new_codec) const {

uint8_t payload_type = frame.PayloadType();

RTC_LOG(LS_INFO) << "Initializing decoder with payload type '"

<< static_cast<int>(payload_type) << "'.";

RTC_DCHECK(new_codec);

const VCMDecoderMapItem* decoder_item = FindDecoderItem(payload_type);

if (!decoder_item) {

RTC_LOG(LS_ERROR) << "Can't find a decoder associated with payload type: "

<< static_cast<int>(payload_type);

return nullptr;

}

std::unique_ptr<VCMGenericDecoder> ptr_decoder;

const VCMExtDecoderMapItem* external_dec_item =

FindExternalDecoderItem(payload_type);

if (external_dec_item) {

// External codec.

ptr_decoder.reset(new VCMGenericDecoder(

external_dec_item->external_decoder_instance, true)); // external_dec_item->external_decoder_instance is stored in VCMGenericDecoder's std::unique_ptr<VideoDecoder> decoder_ member; it may be a webrtc::LibvpxVp8Decoder, webrtc::VP9DecoderImpl, or webrtc::H264DecoderImpl

} else {

RTC_LOG(LS_ERROR) << "No decoder of this type exists.";

}

if (!ptr_decoder)

return nullptr;

// Copy over input resolutions to prevent codec reinitialization due to

// the first frame being of a different resolution than the database values.

// This is best effort, since there's no guarantee that width/height have been

// parsed yet (and may be zero).

if (frame.EncodedImage()._encodedWidth > 0 &&

frame.EncodedImage()._encodedHeight > 0) {

decoder_item->settings->width = frame.EncodedImage()._encodedWidth;

decoder_item->settings->height = frame.EncodedImage()._encodedHeight;

}

if (ptr_decoder->InitDecode(decoder_item->settings.get(), // VCMGenericDecoder::InitDecode makes a further polymorphic call: decoder_->InitDecode(settings, numberOfCores)

decoder_item->number_of_cores) < 0) {

return nullptr;

}

memcpy(new_codec, decoder_item->settings.get(), sizeof(VideoCodec));

return ptr_decoder;

}

int32_t VCMGenericDecoder::Decode(const VCMEncodedFrame& frame, int64_t nowMs) {

TRACE_EVENT1("webrtc", "VCMGenericDecoder::Decode", "timestamp",

frame.Timestamp());

_frameInfos[_nextFrameInfoIdx].decodeStartTimeMs = nowMs;

_frameInfos[_nextFrameInfoIdx].renderTimeMs = frame.RenderTimeMs();

_frameInfos[_nextFrameInfoIdx].rotation = frame.rotation();

_frameInfos[_nextFrameInfoIdx].timing = frame.video_timing();

_frameInfos[_nextFrameInfoIdx].ntp_time_ms =

frame.EncodedImage().ntp_time_ms_;

_frameInfos[_nextFrameInfoIdx].packet_infos = frame.PacketInfos();

// Set correctly only for key frames. Thus, use latest key frame

// content type. If the corresponding key frame was lost, decode will fail

// and content type will be ignored.

if (frame.FrameType() == VideoFrameType::kVideoFrameKey) {

_frameInfos[_nextFrameInfoIdx].content_type = frame.contentType();

_last_keyframe_content_type = frame.contentType();

} else {

_frameInfos[_nextFrameInfoIdx].content_type = _last_keyframe_content_type;

}

_callback->Map(frame.Timestamp(), &_frameInfos[_nextFrameInfoIdx]);

_nextFrameInfoIdx = (_nextFrameInfoIdx + 1) % kDecoderFrameMemoryLength;

int32_t ret = decoder_->Decode(frame.EncodedImage(), frame.MissingFrame(), // decoder_ is passed in directly through the constructor argument

frame.RenderTimeMs());

_callback->OnDecoderImplementationName(decoder_->ImplementationName()); // VCMDecodedFrameCallback::OnDecoderImplementationName

if (ret < WEBRTC_VIDEO_CODEC_OK) {

RTC_LOG(LS_WARNING) << "Failed to decode frame with timestamp "

<< frame.Timestamp() << ", error code: " << ret;

_callback->Pop(frame.Timestamp());

return ret;

} else if (ret == WEBRTC_VIDEO_CODEC_NO_OUTPUT) {

// No output

_callback->Pop(frame.Timestamp());

}

return ret;

}
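`VCMGenericDecoder::Decode` stores per-frame metadata in a fixed ring (`_frameInfos`) and maps each RTP timestamp to its slot before calling the decoder; the entry is popped again on failure or when the decoded frame comes back. A simplified sketch of such a bounded timestamp map follows. `TimestampMap` here is a hypothetical reimplementation: it stores an `int` payload instead of a pointer to frame information and ignores RTP timestamp wrap-around.

```cpp
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <optional>
#include <vector>

class TimestampMap {
 public:
  explicit TimestampMap(size_t capacity) : ring_(capacity) {
    assert(capacity > 1);
  }

  // Records `info` for `timestamp`. When the ring is full, the oldest
  // entry is forgotten.
  void Add(uint32_t timestamp, int info) {
    ring_[next_add_] = Entry{timestamp, info};
    next_add_ = (next_add_ + 1) % ring_.size();
    if (next_add_ == next_pop_)
      next_pop_ = (next_pop_ + 1) % ring_.size();
  }

  // Returns the info for `timestamp`, discarding any older entries on the
  // way. Returns nullopt if the timestamp is not (or no longer) stored.
  std::optional<int> Pop(uint32_t timestamp) {
    while (next_pop_ != next_add_) {
      const Entry& front = ring_[next_pop_];
      if (front.timestamp > timestamp) return std::nullopt;
      next_pop_ = (next_pop_ + 1) % ring_.size();
      if (front.timestamp == timestamp) return front.info;
    }
    return std::nullopt;
  }

 private:
  struct Entry {
    uint32_t timestamp;
    int info;
  };
  std::vector<Entry> ring_;
  size_t next_add_ = 0;
  size_t next_pop_ = 0;
};
```

A lookup that comes back empty corresponds to the "Too many frames backed up in the decoder" warning in `VCMDecodedFrameCallback::Decoded`: the entry was overwritten before the decoder returned the frame.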

// Taking VP8 decoding as an example

int LibvpxVp8Decoder::Decode(const EncodedImage& input_image,

bool missing_frames,

int64_t /*render_time_ms*/) {

if (!inited_) {

return WEBRTC_VIDEO_CODEC_UNINITIALIZED;

}

if (decode_complete_callback_ == NULL) {

return WEBRTC_VIDEO_CODEC_UNINITIALIZED;

}

if (input_image.data() == NULL && input_image.size() > 0) {

// Reset to avoid requesting key frames too often.

if (propagation_cnt_ > 0)

propagation_cnt_ = 0;

return WEBRTC_VIDEO_CODEC_ERR_PARAMETER;

}

// Post process configurations.

#if defined(WEBRTC_ARCH_ARM) || defined(WEBRTC_ARCH_ARM64) || \

defined(WEBRTC_ANDROID)

if (use_postproc_arm_) {

vp8_postproc_cfg_t ppcfg;

ppcfg.post_proc_flag = VP8_MFQE;

// For low resolutions, use stronger deblocking filter.

int last_width_x_height = last_frame_width_ * last_frame_height_;

if (last_width_x_height > 0 && last_width_x_height <= 320 * 240) {

// Enable the deblock and demacroblocker based on qp thresholds.

RTC_DCHECK(qp_smoother_);

int qp = qp_smoother_->GetAvg();

if (qp > deblock_.min_qp) {

int level = deblock_.max_level;

if (qp < deblock_.degrade_qp) {

// Use lower level.

level = deblock_.max_level * (qp - deblock_.min_qp) /

(deblock_.degrade_qp - deblock_.min_qp);

}

// Deblocking level only affects VP8_DEMACROBLOCK.

ppcfg.deblocking_level = std::max(level, 1);

ppcfg.post_proc_flag |= VP8_DEBLOCK | VP8_DEMACROBLOCK;

}

}

vpx_codec_control(decoder_, VP8_SET_POSTPROC, &ppcfg);

}

#else

vp8_postproc_cfg_t ppcfg;

// MFQE enabled to reduce key frame popping.

ppcfg.post_proc_flag = VP8_MFQE | VP8_DEBLOCK;

// For VGA resolutions and lower, enable the demacroblocker postproc.

if (last_frame_width_ * last_frame_height_ <= 640 * 360) {

ppcfg.post_proc_flag |= VP8_DEMACROBLOCK;

}

// Strength of deblocking filter. Valid range:[0,16]

ppcfg.deblocking_level = 3;

vpx_codec_control(decoder_, VP8_SET_POSTPROC, &ppcfg);

#endif

// Always start with a complete key frame.

if (key_frame_required_) {

if (input_image._frameType != VideoFrameType::kVideoFrameKey)

return WEBRTC_VIDEO_CODEC_ERROR;

// We have a key frame - is it complete?

if (input_image._completeFrame) {

key_frame_required_ = false;

} else {

return WEBRTC_VIDEO_CODEC_ERROR;

}

}

// Restrict error propagation using key frame requests.

// Reset on a key frame refresh.

if (input_image._frameType == VideoFrameType::kVideoFrameKey &&

input_image._completeFrame) {

propagation_cnt_ = -1;

// Start count on first loss.

} else if ((!input_image._completeFrame || missing_frames) &&

propagation_cnt_ == -1) {

propagation_cnt_ = 0;

}

if (propagation_cnt_ >= 0) {

propagation_cnt_++;

}

vpx_codec_iter_t iter = NULL;

vpx_image_t* img;

int ret;

// Check for missing frames.

if (missing_frames) {

// Call decoder with zero data length to signal missing frames.

if (vpx_codec_decode(decoder_, NULL, 0, 0, kDecodeDeadlineRealtime)) {

// Reset to avoid requesting key frames too often.

if (propagation_cnt_ > 0)

propagation_cnt_ = 0;

return WEBRTC_VIDEO_CODEC_ERROR;

}

img = vpx_codec_get_frame(decoder_, &iter);

iter = NULL;

}

const uint8_t* buffer = input_image.data();

if (input_image.size() == 0) {

buffer = NULL; // Triggers full frame concealment.

}

if (vpx_codec_decode(decoder_, buffer, input_image.size(), 0,

kDecodeDeadlineRealtime)) {

// Reset to avoid requesting key frames too often.

if (propagation_cnt_ > 0) {

propagation_cnt_ = 0;

}

return WEBRTC_VIDEO_CODEC_ERROR;

}

img = vpx_codec_get_frame(decoder_, &iter);

int qp;

vpx_codec_err_t vpx_ret =

vpx_codec_control(decoder_, VPXD_GET_LAST_QUANTIZER, &qp);

RTC_DCHECK_EQ(vpx_ret, VPX_CODEC_OK);

ret = ReturnFrame(img, input_image.Timestamp(), qp, input_image.ColorSpace()); // note this call

if (ret != 0) {

// Reset to avoid requesting key frames too often.

if (ret < 0 && propagation_cnt_ > 0)

propagation_cnt_ = 0;

return ret;

}

// Check Vs. threshold

if (propagation_cnt_ > kVp8ErrorPropagationTh) {

// Reset to avoid requesting key frames too often.

propagation_cnt_ = 0;

return WEBRTC_VIDEO_CODEC_ERROR;

}

return WEBRTC_VIDEO_CODEC_OK;

}
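The ARM/Android post-processing branch in `LibvpxVp8Decoder::Decode` scales the deblocking level linearly with the smoothed QP. The arithmetic can be worked through in isolation as below. `DeblockingLevel` is a hypothetical helper, and the parameter values used in the usage note are assumptions for illustration, not the decoder's defaults.

```cpp
#include <algorithm>
#include <cassert>
#include <optional>

struct DeblockParams {
  int max_level;   // Level used once qp reaches degrade_qp.
  int degrade_qp;  // Below this QP the level is scaled down linearly.
  int min_qp;      // At or below this QP no deblocking is enabled.
};

// Returns the deblocking level for `qp`, or nullopt when deblocking
// stays off (qp <= min_qp).
std::optional<int> DeblockingLevel(int qp, const DeblockParams& p) {
  assert(p.degrade_qp > p.min_qp);
  if (qp <= p.min_qp) return std::nullopt;
  int level = p.max_level;
  if (qp < p.degrade_qp) {
    // Integer interpolation between min_qp (level 0) and degrade_qp
    // (max_level), same formula as in the decoder.
    level = p.max_level * (qp - p.min_qp) / (p.degrade_qp - p.min_qp);
  }
  return std::max(level, 1);
}
```

With assumed parameters `{max_level = 8, degrade_qp = 60, min_qp = 20}`, a QP of 40 gives `8 * 20 / 40 = 4`, a QP of 21 rounds down to 0 and is clamped to 1, and any QP of 60 or more gives 8. In the decoder this path applies only to frames of at most 320x240 pixels.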

int LibvpxVp8Decoder::ReturnFrame(

const vpx_image_t* img,

uint32_t timestamp,

int qp,

const webrtc::ColorSpace* explicit_color_space) {

if (img == NULL) {

// Decoder OK and NULL image => No show frame

return WEBRTC_VIDEO_CODEC_NO_OUTPUT;

}

if (qp_smoother_) {

if (last_frame_width_ != static_cast<int>(img->d_w) ||

last_frame_height_ != static_cast<int>(img->d_h)) {

qp_smoother_->Reset();

}

qp_smoother_->Add(qp);

}

last_frame_width_ = img->d_w;

last_frame_height_ = img->d_h;

// Allocate memory for decoded image.

rtc::scoped_refptr<I420Buffer> buffer =

buffer_pool_.CreateBuffer(img->d_w, img->d_h);

if (!buffer.get()) {

// Pool has too many pending frames.

RTC_HISTOGRAM_BOOLEAN("WebRTC.Video.LibvpxVp8Decoder.TooManyPendingFrames",

1);

return WEBRTC_VIDEO_CODEC_NO_OUTPUT;

}

libyuv::I420Copy(img->planes[VPX_PLANE_Y], img->stride[VPX_PLANE_Y],

img->planes[VPX_PLANE_U], img->stride[VPX_PLANE_U],

img->planes[VPX_PLANE_V], img->stride[VPX_PLANE_V],

buffer->MutableDataY(), buffer->StrideY(),

buffer->MutableDataU(), buffer->StrideU(),

buffer->MutableDataV(), buffer->StrideV(), img->d_w,

img->d_h);

VideoFrame decoded_image = VideoFrame::Builder()

.set_video_frame_buffer(buffer)

.set_timestamp_rtp(timestamp)

.set_color_space(explicit_color_space)

.build();

decode_complete_callback_->Decoded(decoded_image, absl::nullopt, qp); // decode_complete_callback_ is the address of webrtc::VideoReceiver2's decodedFrameCallback_ member

return WEBRTC_VIDEO_CODEC_OK;

}

void VCMDecodedFrameCallback::Decoded(VideoFrame& decodedImage,

absl::optional<int32_t> decode_time_ms,

absl::optional<uint8_t> qp) {

// Wait some extra time to simulate a slow decoder.

if (_extra_decode_time) {

rtc::Thread::SleepMs(_extra_decode_time->ms());

}

RTC_DCHECK(_receiveCallback) << "Callback must not be null at this point";

TRACE_EVENT_INSTANT1("webrtc", "VCMDecodedFrameCallback::Decoded",

"timestamp", decodedImage.timestamp());

// TODO(holmer): We should improve this so that we can handle multiple

// callbacks from one call to Decode().

VCMFrameInformation* frameInfo;

{

rtc::CritScope cs(&lock_);

frameInfo = _timestampMap.Pop(decodedImage.timestamp());

}

if (frameInfo == NULL) {

RTC_LOG(LS_WARNING) << "Too many frames backed up in the decoder, dropping "

"this one.";

_receiveCallback->OnDroppedFrames(1);

return;

}

decodedImage.set_ntp_time_ms(frameInfo->ntp_time_ms);

decodedImage.set_packet_infos(frameInfo->packet_infos);

decodedImage.set_rotation(frameInfo->rotation);

const int64_t now_ms = _clock->TimeInMilliseconds();

if (!decode_time_ms) {

decode_time_ms = now_ms - frameInfo->decodeStartTimeMs;

}

_timing->StopDecodeTimer(*decode_time_ms, now_ms);

// Report timing information.

TimingFrameInfo timing_frame_info;

if (frameInfo->timing.flags != VideoSendTiming::kInvalid) {

int64_t capture_time_ms = decodedImage.ntp_time_ms() - ntp_offset_;

// Convert remote timestamps to local time from ntp timestamps.

frameInfo->timing.encode_start_ms -= ntp_offset_;

frameInfo->timing.encode_finish_ms -= ntp_offset_;

frameInfo->timing.packetization_finish_ms -= ntp_offset_;

frameInfo->timing.pacer_exit_ms -= ntp_offset_;

frameInfo->timing.network_timestamp_ms -= ntp_offset_;

frameInfo->timing.network2_timestamp_ms -= ntp_offset_;

int64_t sender_delta_ms = 0;

if (decodedImage.ntp_time_ms() < 0) {

// Sender clock is not estimated yet. Make sure that sender times are all

// negative to indicate that. Yet they still should be relatively correct.

sender_delta_ms =

std::max({capture_time_ms, frameInfo->timing.encode_start_ms,

frameInfo->timing.encode_finish_ms,

frameInfo->timing.packetization_finish_ms,

frameInfo->timing.pacer_exit_ms,

frameInfo->timing.network_timestamp_ms,

frameInfo->timing.network2_timestamp_ms}) +

1;

}

timing_frame_info.capture_time_ms = capture_time_ms - sender_delta_ms;

timing_frame_info.encode_start_ms =

frameInfo->timing.encode_start_ms - sender_delta_ms;

timing_frame_info.encode_finish_ms =

frameInfo->timing.encode_finish_ms - sender_delta_ms;

timing_frame_info.packetization_finish_ms =

frameInfo->timing.packetization_finish_ms - sender_delta_ms;

timing_frame_info.pacer_exit_ms =

frameInfo->timing.pacer_exit_ms - sender_delta_ms;

timing_frame_info.network_timestamp_ms =

frameInfo->timing.network_timestamp_ms - sender_delta_ms;

timing_frame_info.network2_timestamp_ms =

frameInfo->timing.network2_timestamp_ms - sender_delta_ms;

}

timing_frame_info.flags = frameInfo->timing.flags;

timing_frame_info.decode_start_ms = frameInfo->decodeStartTimeMs;

timing_frame_info.decode_finish_ms = now_ms;

timing_frame_info.render_time_ms = frameInfo->renderTimeMs;

timing_frame_info.rtp_timestamp = decodedImage.timestamp();

timing_frame_info.receive_start_ms = frameInfo->timing.receive_start_ms;

timing_frame_info.receive_finish_ms = frameInfo->timing.receive_finish_ms;

_timing->SetTimingFrameInfo(timing_frame_info);

decodedImage.set_timestamp_us(frameInfo->renderTimeMs *

rtc::kNumMicrosecsPerMillisec);

_receiveCallback->FrameToRender(decodedImage, qp, *decode_time_ms, // _receiveCallback is the pointer to the webrtc::VideoStreamDecoder

frameInfo->content_type);

}
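The `sender_delta_ms` step in `VCMDecodedFrameCallback::Decoded` deserves a worked example: when the sender clock has not been estimated yet, all sender-side timestamps are shifted by the largest of them plus one, so every value becomes negative while the differences between them stay intact. A minimal sketch, with the hypothetical helper `NormalizeSenderTimes` operating on a plain vector instead of the individual timing fields:

```cpp
#include <algorithm>
#include <cassert>
#include <cstdint>
#include <vector>

// Shifts sender-side times so that they are all negative when the sender
// clock is not yet estimated; leaves them untouched otherwise.
std::vector<int64_t> NormalizeSenderTimes(std::vector<int64_t> times_ms,
                                          bool sender_clock_estimated) {
  assert(!times_ms.empty());
  int64_t delta_ms = 0;
  if (!sender_clock_estimated) {
    // max + 1 guarantees that even the largest value ends up at -1.
    delta_ms = *std::max_element(times_ms.begin(), times_ms.end()) + 1;
  }
  for (int64_t& t : times_ms) t -= delta_ms;
  return times_ms;
}
```

For the input `{5, 10, 7}` with no clock estimate, the shift is 11 and the result is `{-6, -1, -4}`: still ordered the same way and spaced identically, but flagged as unreliable by the sign.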

int32_t VideoStreamDecoder::FrameToRender(VideoFrame& video_frame,

absl::optional<uint8_t> qp,

int32_t decode_time_ms,

VideoContentType content_type) {

receive_stats_callback_->OnDecodedFrame(video_frame, qp, decode_time_ms,

content_type);

incoming_video_stream_->OnFrame(video_frame); // As seen in VideoReceiveStream::Start, incoming_video_stream_ points to an IncomingVideoStream when enable_prerenderer_smoothing is set (otherwise to the VideoReceiveStream itself)

return 0;

}

void IncomingVideoStream::OnFrame(const VideoFrame& video_frame) {

TRACE_EVENT0("webrtc", "IncomingVideoStream::OnFrame");

RTC_CHECK_RUNS_SERIALIZED(&decoder_race_checker_);

RTC_DCHECK(!incoming_render_queue_.IsCurrent());

// TODO(srte): Using video_frame = std::move(video_frame) would move the frame

// into the lambda instead of copying it, but it doesn't work unless we change

// OnFrame to take its frame argument by value instead of const reference.

incoming_render_queue_.PostTask([this, video_frame = video_frame]() mutable { // handled on a separate thread

RTC_DCHECK(incoming_render_queue_.IsCurrent());

if (render_buffers_.AddFrame(std::move(video_frame)) == 1)

Dequeue();

});

}

void IncomingVideoStream::Dequeue() {

TRACE_EVENT0("webrtc", "IncomingVideoStream::Dequeue");

RTC_DCHECK(incoming_render_queue_.IsCurrent());

absl::optional<VideoFrame> frame_to_render = render_buffers_.FrameToRender();

if (frame_to_render)

callback_->OnFrame(*frame_to_render); // callback_ actually points to the webrtc::internal::VideoReceiveStream

if (render_buffers_.HasPendingFrames()) {

uint32_t wait_time = render_buffers_.TimeToNextFrameRelease();

incoming_render_queue_.PostDelayedTask([this]() { Dequeue(); }, wait_time);

}

}

void VideoReceiveStream::OnFrame(const VideoFrame& video_frame) {

int64_t sync_offset_ms;

double estimated_freq_khz;

// TODO(tommi): GetStreamSyncOffsetInMs grabs three locks. One inside the

// function itself, another in GetChannel() and a third in

// GetPlayoutTimestamp. Seems excessive. Anyhow, I'm assuming the function

// succeeds most of the time, which leads to grabbing a fourth lock.

if (rtp_stream_sync_.GetStreamSyncOffsetInMs(

video_frame.timestamp(), video_frame.render_time_ms(),

&sync_offset_ms, &estimated_freq_khz)) {

// TODO(tommi): OnSyncOffsetUpdated grabs a lock.

stats_proxy_.OnSyncOffsetUpdated(sync_offset_ms, estimated_freq_khz);

}

source_tracker_.OnFrameDelivered(video_frame.packet_infos());

config_.renderer->OnFrame(video_frame); // VideoReceiveStream's config_ comes from the config_ member of WebRtcVideoChannel::WebRtcVideoReceiveStream

// config_.renderer is the this pointer of WebRtcVideoChannel::WebRtcVideoReceiveStream

// TODO(tommi): OnRenderFrame grabs a lock too.

stats_proxy_.OnRenderedFrame(video_frame);

}

void WebRtcVideoChannel::WebRtcVideoReceiveStream::OnFrame(

const webrtc::VideoFrame& frame) {

rtc::CritScope crit(&sink_lock_);

int64_t time_now_ms = rtc::TimeMillis();

if (first_frame_timestamp_ < 0)

first_frame_timestamp_ = time_now_ms;

int64_t elapsed_time_ms = time_now_ms - first_frame_timestamp_;

if (frame.ntp_time_ms() > 0)

estimated_remote_start_ntp_time_ms_ = frame.ntp_time_ms() - elapsed_time_ms;

if (sink_ == NULL) {

RTC_LOG(LS_WARNING) << "VideoReceiveStream not connected to a VideoSink.";

return;

}

sink_->OnFrame(frame); // note this call

}

PeerConnection::ApplyRemoteDescription

===>

transceiver->internal()->receiver_internal()->SetupMediaChannel(ssrc); // transceiver->internal() returns a pointer to the RtpTransceiver

// RtpTransceiver::receiver_internal() actually returns the webrtc::VideoRtpReceiver

void VideoRtpReceiver::SetupMediaChannel(uint32_t ssrc) {

if (!media_channel_) {

RTC_LOG(LS_ERROR)

<< "VideoRtpReceiver::SetupMediaChannel: No video channel exists.";

}

RestartMediaChannel(ssrc); // note this call

}

void VideoRtpReceiver::RestartMediaChannel(absl::optional<uint32_t> ssrc) {

RTC_DCHECK(media_channel_);

if (!stopped_ && ssrc_ == ssrc) {

return;

}

if (!stopped_) {

SetSink(nullptr);

}

stopped_ = false;

ssrc_ = ssrc;

SetSink(source_->sink()); // source_->sink() is VideoRtpTrackSource::sink(), i.e. the VideoBroadcaster pointer that was set

// Attach any existing frame decryptor to the media channel.

MaybeAttachFrameDecryptorToMediaChannel(

ssrc, worker_thread_, frame_decryptor_, media_channel_, stopped_);

// TODO(bugs.webrtc.org/8694): Stop using 0 to mean unsignalled SSRC

// value.

delay_->OnStart(media_channel_, ssrc.value_or(0));

}

bool VideoRtpReceiver::SetSink(rtc::VideoSinkInterface<VideoFrame>* sink) {

RTC_DCHECK(media_channel_);

RTC_DCHECK(!stopped_);

return worker_thread_->Invoke<bool>(RTC_FROM_HERE, [&] {

// TODO(bugs.webrtc.org/8694): Stop using 0 to mean unsignalled SSRC

return media_channel_->SetSink(ssrc_.value_or(0), sink); // note this call

});

}

bool WebRtcVideoChannel::SetSink(

uint32_t ssrc,

rtc::VideoSinkInterface<webrtc::VideoFrame>* sink) {

RTC_DCHECK_RUN_ON(&thread_checker_);

RTC_LOG(LS_INFO) << "SetSink: ssrc:" << ssrc << " "

<< (sink ? "(ptr)" : "nullptr");

if (ssrc == 0) {

default_unsignalled_ssrc_handler_.SetDefaultSink(this, sink);

return true;

}

std::map<uint32_t, WebRtcVideoReceiveStream*>::iterator it =

receive_streams_.find(ssrc); // note this call

if (it == receive_streams_.end()) {

return false;

}

it->second->SetSink(sink); // note this call

return true;

}

void WebRtcVideoChannel::WebRtcVideoReceiveStream::SetSink(

rtc::VideoSinkInterface<webrtc::VideoFrame>* sink) {

rtc::CritScope crit(&sink_lock_);

sink_ = sink; // note this assignment

}

void VideoBroadcaster::OnFrame(const webrtc::VideoFrame& frame) {

rtc::CritScope cs(&sinks_and_wants_lock_);

bool current_frame_was_discarded = false;

for (auto& sink_pair : sink_pairs()) {

if (sink_pair.wants.rotation_applied &&

frame.rotation() != webrtc::kVideoRotation_0) {

// Calls to OnFrame are not synchronized with changes to the sink wants.

// When rotation_applied is set to true, one or a few frames may get here

// with rotation still pending. Protect sinks that don't expect any

// pending rotation.

RTC_LOG(LS_VERBOSE) << "Discarding frame with unexpected rotation.";

sink_pair.sink->OnDiscardedFrame();

current_frame_was_discarded = true;

continue;

}

if (sink_pair.wants.black_frames) {

webrtc::VideoFrame black_frame =

webrtc::VideoFrame::Builder()

.set_video_frame_buffer(

GetBlackFrameBuffer(frame.width(), frame.height()))

.set_rotation(frame.rotation())

.set_timestamp_us(frame.timestamp_us())

.set_id(frame.id())

.build();

sink_pair.sink->OnFrame(black_frame);

} else if (!previous_frame_sent_to_all_sinks_) {

// Since last frame was not sent to some sinks, full update is needed.

webrtc::VideoFrame copy = frame;

copy.set_update_rect(

webrtc::VideoFrame::UpdateRect{0, 0, frame.width(), frame.height()});

sink_pair.sink->OnFrame(copy);

} else {

sink_pair.sink->OnFrame(frame); //VideoRenderer::OnFrame

}

}

previous_frame_sent_to_all_sinks_ = !current_frame_was_discarded;

}
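Putting the last few functions together: `SetSink` stores a sink pointer in the receive stream, that sink is a broadcaster, and the broadcaster fans every frame out to the sinks registered with it. The wiring can be sketched as follows. `Frame`, `FrameSink`, `Broadcaster`, `ReceiveStream` and `RecordingSink` are hypothetical simplifications that leave out locking, sink wants (rotation, black frames) and the update-rect handling shown above.

```cpp
#include <cassert>
#include <vector>

struct Frame {
  int id;
};

// Stand-in for rtc::VideoSinkInterface<VideoFrame>.
class FrameSink {
 public:
  virtual ~FrameSink() = default;
  virtual void OnFrame(const Frame& frame) = 0;
};

// Stand-in for VideoBroadcaster: forwards each frame to every sink.
class Broadcaster : public FrameSink {
 public:
  void AddSink(FrameSink* sink) {
    assert(sink != nullptr);
    sinks_.push_back(sink);
  }
  void OnFrame(const Frame& frame) override {
    for (FrameSink* sink : sinks_) sink->OnFrame(frame);
  }

 private:
  std::vector<FrameSink*> sinks_;
};

// Stand-in for WebRtcVideoReceiveStream: forwards to whatever SetSink stored.
class ReceiveStream : public FrameSink {
 public:
  void SetSink(FrameSink* sink) { sink_ = sink; }
  void OnFrame(const Frame& frame) override {
    if (sink_ == nullptr) {
      ++dropped_;  // Not connected to a sink yet.
      return;
    }
    sink_->OnFrame(frame);
  }
  int dropped() const { return dropped_; }

 private:
  FrameSink* sink_ = nullptr;
  int dropped_ = 0;
};

// Stand-in for a renderer: records the ids of the frames it receives.
class RecordingSink : public FrameSink {
 public:
  void OnFrame(const Frame& frame) override { ids_.push_back(frame.id); }
  const std::vector<int>& ids() const { return ids_; }

 private:
  std::vector<int> ids_;
};
```

A frame delivered before `SetSink` is called is dropped, which matches the "VideoReceiveStream not connected to a VideoSink" warning path in `WebRtcVideoReceiveStream::OnFrame`.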