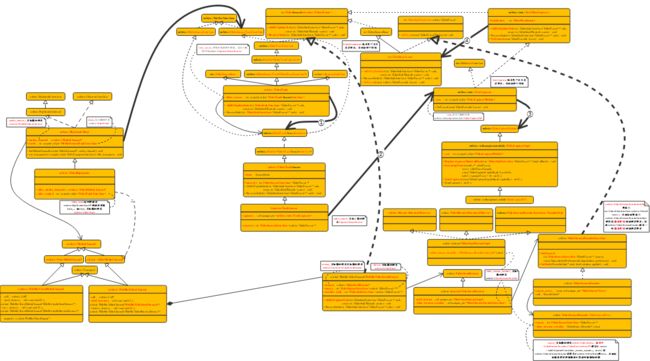

webrtc-m79: Building the pipeline from the video capturer to the video encoder

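1 The problem

The pixel-format VideoFrames produced by the video capturer must be encoded before they can be delivered to the remote peer through the P2PTransportChannel. This involves two main stages:

Stage 1: building the pipeline between the capturer and the encoder;

Stage 2: pushing the captured pixel-format VideoFrames along that pipeline to the encoder, then sending them to the remote peer.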

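2 Building the pipeline between the capturer and the encoder

3 Related code

3.1 Building the pipeline between the capturer and the encoder

Building the pipeline from the VideoTrack to the encoder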

void Conductor::AddTracks()

===>

rtc::scoped_refptr<CapturerTrackSource> video_device = CapturerTrackSource::Create();

if (video_device) {

rtc::scoped_refptr<webrtc::VideoTrackInterface> video_track_( // video_track_ actually points to a webrtc::VideoTrack

peer_connection_factory_->CreateVideoTrack(kVideoLabel, video_device));

main_wnd_->StartLocalRenderer(video_track_); // builds the pipeline from the VideoTrack to the local renderer

result_or_error = peer_connection_->AddTrack(video_track_, {kStreamId}); // builds the pipeline from the VideoTrack to the encoder

if (!result_or_error.ok()) {

RTC_LOG(LS_ERROR) << "Failed to add video track to PeerConnection: "

<< result_or_error.error().message();

}

} else {

RTC_LOG(LS_ERROR) << "OpenVideoCaptureDevice failed";

}

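/// Adds an audio or video track to the PeerConnection

RTCErrorOr<rtc::scoped_refptr<RtpSenderInterface>> PeerConnection::AddTrack(

rtc::scoped_refptr<MediaStreamTrackInterface> track,

const std::vector<std::string>& stream_ids) {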

RTC_DCHECK_RUN_ON(signaling_thread());

TRACE_EVENT0("webrtc", "PeerConnection::AddTrack");

if (!track) {

LOG_AND_RETURN_ERROR(RTCErrorType::INVALID_PARAMETER, "Track is null.");

}

if (!(track->kind() == MediaStreamTrackInterface::kAudioKind ||

track->kind() == MediaStreamTrackInterface::kVideoKind)) {

LOG_AND_RETURN_ERROR(RTCErrorType::INVALID_PARAMETER,

"Track has invalid kind: " + track->kind());

}

if (IsClosed()) {

LOG_AND_RETURN_ERROR(RTCErrorType::INVALID_STATE,

"PeerConnection is closed.");

}

if (FindSenderForTrack(track)) {

LOG_AND_RETURN_ERROR(

RTCErrorType::INVALID_PARAMETER,

"Sender already exists for track " + track->id() + ".");

}

auto sender_or_error =

(IsUnifiedPlan() ? AddTrackUnifiedPlan(track, stream_ids) // for video, AddTrackUnifiedPlan returns (a proxy around) a VideoRtpSender

: AddTrackPlanB(track, stream_ids));

if (sender_or_error.ok()) {

UpdateNegotiationNeeded();

stats_->AddTrack(track);

}

return sender_or_error;

}

RTCErrorOr<rtc::scoped_refptr<RtpSenderInterface>>

PeerConnection::AddTrackUnifiedPlan(

rtc::scoped_refptr<MediaStreamTrackInterface> track,

const std::vector<std::string>& stream_ids) {

auto transceiver = FindFirstTransceiverForAddedTrack(track);

if (transceiver) {

RTC_LOG(LS_INFO) << "Reusing an existing "

<< cricket::MediaTypeToString(transceiver->media_type())

<< " transceiver for AddTrack.";

if (transceiver->direction() == RtpTransceiverDirection::kRecvOnly) {

transceiver->internal()->set_direction(

RtpTransceiverDirection::kSendRecv);

} else if (transceiver->direction() == RtpTransceiverDirection::kInactive) {

transceiver->internal()->set_direction(

RtpTransceiverDirection::kSendOnly);

}

transceiver->sender()->SetTrack(track);

transceiver->internal()->sender_internal()->set_stream_ids(stream_ids);

} else { // for a newly added track, execution takes this branch

cricket::MediaType media_type =

(track->kind() == MediaStreamTrackInterface::kAudioKind

? cricket::MEDIA_TYPE_AUDIO

: cricket::MEDIA_TYPE_VIDEO);

RTC_LOG(LS_INFO) << "Adding " << cricket::MediaTypeToString(media_type)

<< " transceiver in response to a call to AddTrack.";

std::string sender_id = track->id();

// Avoid creating a sender with an existing ID by generating a random ID.

// This can happen if this is the second time AddTrack has created a sender

// for this track.

if (FindSenderById(sender_id)) {

sender_id = rtc::CreateRandomUuid();

}

auto sender = CreateSender(media_type, sender_id, track, stream_ids, {}); ///

auto receiver = CreateReceiver(media_type, rtc::CreateRandomUuid());

transceiver = CreateAndAddTransceiver(sender, receiver); ///

transceiver->internal()->set_created_by_addtrack(true);

transceiver->internal()->set_direction(RtpTransceiverDirection::kSendRecv);

}

return transceiver->sender(); // for video, this is (a proxy around) the VideoRtpSender

}
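When AddTrack reuses an existing transceiver, the branch above only widens the transceiver's direction so that it includes sending. The sketch below isolates that rule; it assumes a local `Direction` enum standing in for `RtpTransceiverDirection` and is not WebRTC code.

```cpp
#include <cassert>

// Hypothetical stand-in for webrtc::RtpTransceiverDirection.
enum class Direction { kSendRecv, kSendOnly, kRecvOnly, kInactive };

// Mirrors the reuse branch of PeerConnection::AddTrackUnifiedPlan:
// attaching a track to a reused transceiver adds "send" to its direction.
Direction DirectionAfterAddTrack(Direction current) {
  switch (current) {
    case Direction::kRecvOnly:
      return Direction::kSendRecv;
    case Direction::kInactive:
      return Direction::kSendOnly;
    default:
      // kSendRecv and kSendOnly already include sending; left unchanged.
      return current;
  }
}
```

A newly created transceiver (the else branch) skips this mapping and is set to kSendRecv directly.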

PeerConnection::CreateSender

rtc::scoped_refptr<RtpSenderProxyWithInternal<RtpSenderInternal>>

PeerConnection::CreateSender(

cricket::MediaType media_type,

const std::string& id,

rtc::scoped_refptr<MediaStreamTrackInterface> track, // for video, track actually points to a webrtc::VideoTrack

const std::vector<std::string>& stream_ids, // (webrtc::VideoTrack indirectly derives from MediaStreamTrackInterface)

const std::vector<RtpEncodingParameters>& send_encodings) {

RTC_DCHECK_RUN_ON(signaling_thread());

rtc::scoped_refptr<RtpSenderProxyWithInternal<RtpSenderInternal>> sender;

if (media_type == cricket::MEDIA_TYPE_AUDIO) { // audio

RTC_DCHECK(!track ||

(track->kind() == MediaStreamTrackInterface::kAudioKind));

sender = RtpSenderProxyWithInternal<RtpSenderInternal>::Create(

signaling_thread(),

AudioRtpSender::Create(worker_thread(), id, stats_.get(), this));

NoteUsageEvent(UsageEvent::AUDIO_ADDED);

} else {

RTC_DCHECK_EQ(media_type, cricket::MEDIA_TYPE_VIDEO); // video

RTC_DCHECK(!track ||

(track->kind() == MediaStreamTrackInterface::kVideoKind));

sender = RtpSenderProxyWithInternal<RtpSenderInternal>::Create(

signaling_thread(), VideoRtpSender::Create(worker_thread(), id, this));

NoteUsageEvent(UsageEvent::VIDEO_ADDED);

}

bool set_track_succeeded = sender->SetTrack(track); // through the proxy this reaches VideoRtpSender::SetTrack, which is inherited from the base class RtpSenderBase::SetTrack

RTC_DCHECK(set_track_succeeded);

sender->internal()->set_stream_ids(stream_ids);

sender->internal()->set_init_send_encodings(send_encodings);

return sender;

}

rtc::scoped_refptr<VideoRtpSender> VideoRtpSender::Create(

rtc::Thread* worker_thread,

const std::string& id,

SetStreamsObserver* set_streams_observer) {

return rtc::scoped_refptr<VideoRtpSender>(

new rtc::RefCountedObject<VideoRtpSender>(worker_thread, id,

set_streams_observer));

}
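Create() wraps the sender in `rtc::RefCountedObject`, a template that derives from the wrapped class and adds intrusive reference counting. A minimal sketch of that idea follows; `RefCounted` and `Sender` are hypothetical names, Release() returns a plain bool here for simplicity, and the real `rtc::RefCountedObject` / `rtc::scoped_refptr` pair differs in detail.

```cpp
#include <atomic>
#include <cassert>
#include <utility>

// Hypothetical, simplified analogue of rtc::RefCountedObject<T>:
// derives from T and adds an intrusive reference count.
template <typename T>
class RefCounted : public T {
 public:
  template <typename... Args>
  explicit RefCounted(Args&&... args) : T(std::forward<Args>(args)...) {}

  void AddRef() const { ref_count_.fetch_add(1, std::memory_order_relaxed); }

  // Returns true when the last reference was dropped and the object deleted.
  bool Release() const {
    if (ref_count_.fetch_sub(1, std::memory_order_acq_rel) == 1) {
      delete this;
      return true;
    }
    return false;
  }

 private:
  mutable std::atomic<int> ref_count_{0};
};

// Hypothetical payload class standing in for VideoRtpSender.
struct Sender {
  explicit Sender(int id) : id(id) {}
  virtual ~Sender() = default;
  int id;
};
```

In WebRTC, `rtc::scoped_refptr` calls AddRef/Release automatically, so application code rarely calls them by hand.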

/// For video, track actually points to a webrtc::VideoTrack

bool RtpSenderBase::SetTrack(MediaStreamTrackInterface* track) {

TRACE_EVENT0("webrtc", "RtpSenderBase::SetTrack");

if (stopped_) {

RTC_LOG(LS_ERROR) << "SetTrack can't be called on a stopped RtpSender.";

return false;

}

if (track && track->kind() != track_kind()) {

RTC_LOG(LS_ERROR) << "SetTrack with " << track->kind()

<< " called on RtpSender with " << track_kind()

<< " track.";

return false;

}

// Detach from old track.

if (track_) {

DetachTrack();

track_->UnregisterObserver(this);

RemoveTrackFromStats();

}

// Attach to new track.

bool prev_can_send_track = can_send_track();

// Keep a reference to the old track to keep it alive until we call SetSend.

rtc::scoped_refptr<MediaStreamTrackInterface> old_track = track_;

track_ = track; // member: rtc::scoped_refptr<MediaStreamTrackInterface> track_;

if (track_) {

track_->RegisterObserver(this); // Notifier::RegisterObserver

AttachTrack(); // virtual call: dispatches to the subclass override VideoRtpSender::AttachTrack

}

// Update channel.

if (can_send_track()) {

SetSend(); // virtual call: dispatches to the subclass override VideoRtpSender::SetSend

AddTrackToStats();

} else if (prev_can_send_track) {

ClearSend();

}

attachment_id_ = (track_ ? GenerateUniqueId() : 0);

return true;

}

void VideoRtpSender::SetSend() {

RTC_DCHECK(!stopped_);

RTC_DCHECK(can_send_track());

if (!media_channel_) {

RTC_LOG(LS_ERROR) << "SetVideoSend: No video channel exists.";

return;

}

cricket::VideoOptions options; // video_track() returns a pointer that actually points to a webrtc::VideoTrack

VideoTrackSourceInterface* source = video_track()->GetSource(); // VideoTrack::GetSource is defined inline in the header

if (source) {

options.is_screencast = source->is_screencast();

options.video_noise_reduction = source->needs_denoising();

}

switch (cached_track_content_hint_) {

case VideoTrackInterface::ContentHint::kNone:

break;

case VideoTrackInterface::ContentHint::kFluid:

options.is_screencast = false;

break;

case VideoTrackInterface::ContentHint::kDetailed:

case VideoTrackInterface::ContentHint::kText:

options.is_screencast = true;

break;

}

bool success = worker_thread_->Invoke<bool>(RTC_FROM_HERE, [&] {

return video_media_channel()->SetVideoSend(ssrc_, &options, video_track()); // video_track() actually points to a webrtc::VideoTrack

}); // -> WebRtcVideoChannel::SetVideoSend

RTC_DCHECK(success);

}
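The switch in SetSend lets an explicit content hint override the source's own screencast flag. A sketch of that precedence rule, assuming a local `ContentHint` enum mirroring `VideoTrackInterface::ContentHint` and using `std::optional` in place of the `absl::optional<bool>` field in `cricket::VideoOptions` (unset when there is no source):

```cpp
#include <cassert>
#include <optional>

// Hypothetical mirror of VideoTrackInterface::ContentHint.
enum class ContentHint { kNone, kFluid, kDetailed, kText };

// Mirrors VideoRtpSender::SetSend: the source's is_screencast() is the
// default, and any hint other than kNone overrides it.
std::optional<bool> EffectiveIsScreencast(std::optional<bool> source_is_screencast,
                                          ContentHint hint) {
  switch (hint) {
    case ContentHint::kNone:
      return source_is_screencast;
    case ContentHint::kFluid:
      return false;  // motion matters more than detail: treat as camera video
    case ContentHint::kDetailed:
    case ContentHint::kText:
      return true;  // detail matters more than motion: treat as screencast
  }
  return source_is_screencast;  // not reached for valid enum values
}
```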

bool WebRtcVideoChannel::AddSendStream(const StreamParams& sp)

===>

webrtc::VideoSendStream::Config config(this, media_transport());

WebRtcVideoSendStream* stream = new WebRtcVideoSendStream(

call_, sp, std::move(config), default_send_options_, // note this config, in particular its member config.send_transport:

video_config_.enable_cpu_adaptation, bitrate_config_.max_bitrate_bps, // it was constructed with this, so send_transport points to this WebRtcVideoChannel

send_codec_, send_rtp_extensions_, send_params_);

uint32_t ssrc = sp.first_ssrc();

send_streams_[ssrc] = stream;

VideoSendStream::Config::Config(Transport* send_transport, // send_transport actually points to the WebRtcVideoChannel

MediaTransportInterface* media_transport)

: rtp(),

encoder_settings(VideoEncoder::Capabilities(rtp.lntf.enabled)),

send_transport(send_transport), // send_transport actually points to the WebRtcVideoChannel

media_transport(media_transport) {}

/// VideoTrack::GetSource

class VideoTrack : public MediaStreamTrack,

public rtc::VideoSourceBase,

public ObserverInterface {

public: // video_source_ actually points to a subclass of webrtc::VideoTrackSource; here, a webrtc::CapturerTrackSource

VideoTrackSourceInterface* GetSource() const override {

return video_source_.get();

}

bool WebRtcVideoChannel::SetVideoSend(

uint32_t ssrc,

const VideoOptions* options,

rtc::VideoSourceInterface<webrtc::VideoFrame>* source) { // source points to the webrtc::VideoTrack

RTC_DCHECK_RUN_ON(&thread_checker_);

TRACE_EVENT0("webrtc", "SetVideoSend");

RTC_DCHECK(ssrc != 0);

RTC_LOG(LS_INFO) << "SetVideoSend (ssrc= " << ssrc << ", options: "

<< (options ? options->ToString() : "nullptr")

<< ", source = " << (source ? "(source)" : "nullptr") << ")";

// Entries are added to send_streams_ in WebRtcVideoChannel::AddSendStream

const auto& kv = send_streams_.find(ssrc); // member of WebRtcVideoChannel: std::map<uint32_t, WebRtcVideoSendStream*> send_streams_ RTC_GUARDED_BY(thread_checker_);

if (kv == send_streams_.end()) {

// Allow unknown ssrc only if source is null.

RTC_CHECK(source == nullptr);

RTC_LOG(LS_ERROR) << "No sending stream on ssrc " << ssrc;

return false;

}

return kv->second->SetVideoSend(options, source); // WebRtcVideoSendStream::SetVideoSend

}

bool WebRtcVideoChannel::WebRtcVideoSendStream::SetVideoSend(

const VideoOptions* options,

rtc::VideoSourceInterface<webrtc::VideoFrame>* source) { // source points to the webrtc::VideoTrack

TRACE_EVENT0("webrtc", "WebRtcVideoSendStream::SetVideoSend");

RTC_DCHECK_RUN_ON(&thread_checker_);

if (options) {

VideoOptions old_options = parameters_.options;

parameters_.options.SetAll(*options);

if (parameters_.options.is_screencast.value_or(false) !=

old_options.is_screencast.value_or(false) &&

parameters_.codec_settings) {

// If screen content settings change, we may need to recreate the codec

// instance so that the correct type is used.

SetCodec(*parameters_.codec_settings);

// Mark screenshare parameter as being updated, then test for any other

// changes that may require codec reconfiguration.

old_options.is_screencast = options->is_screencast;

}

if (parameters_.options != old_options) {

ReconfigureEncoder();

}

}

if (source_ && stream_) { // webrtc::VideoSendStream* stream_ RTC_GUARDED_BY(&thread_checker_); actually points to a webrtc::internal::VideoSendStream

stream_->SetSource(nullptr, webrtc::DegradationPreference::DISABLED);

} // Note: class webrtc::internal::VideoSendStream : public webrtc::VideoSendStream

// Switch to the new source.

source_ = source; // rtc::VideoSourceInterface<webrtc::VideoFrame>* source_ RTC_GUARDED_BY(&thread_checker_);

if (source && stream_) {

stream_->SetSource(this, GetDegradationPreference()); // passes this (the WebRtcVideoSendStream, itself a VideoSourceInterface that forwards sinks to source_), not the track; virtual call to webrtc::internal::VideoSendStream::SetSource

}

return true;

}

WebRtcVideoChannel::WebRtcVideoSendStream::WebRtcVideoSendStream(

webrtc::Call* call,

const StreamParams& sp,

webrtc::VideoSendStream::Config config, ///

const VideoOptions& options,

bool enable_cpu_overuse_detection,

int max_bitrate_bps,

const absl::optional<VideoCodecSettings>& codec_settings,

const absl::optional<std::vector<webrtc::RtpExtension>>& rtp_extensions,

// TODO(deadbeef): Don't duplicate information between send_params,

// rtp_extensions, options, etc.

const VideoSendParameters& send_params)

: worker_thread_(rtc::Thread::Current()),

ssrcs_(sp.ssrcs),

ssrc_groups_(sp.ssrc_groups),

call_(call),

enable_cpu_overuse_detection_(enable_cpu_overuse_detection),

source_(nullptr),

stream_(nullptr),

encoder_sink_(nullptr),

parameters_(std::move(config), options, max_bitrate_bps, codec_settings), //

// Creation of the WebRtcVideoSendStream member webrtc::VideoSendStream* stream_ RTC_GUARDED_BY(&thread_checker_);

void WebRtcVideoChannel::WebRtcVideoSendStream::RecreateWebRtcStream() {

RTC_DCHECK_RUN_ON(&thread_checker_);

if (stream_ != NULL) {

call_->DestroyVideoSendStream(stream_);

}

RTC_CHECK(parameters_.codec_settings);

RTC_DCHECK_EQ((parameters_.encoder_config.content_type ==

webrtc::VideoEncoderConfig::ContentType::kScreen),

parameters_.options.is_screencast.value_or(false))

<< "encoder content type inconsistent with screencast option";

parameters_.encoder_config.encoder_specific_settings =

ConfigureVideoEncoderSettings(parameters_.codec_settings->codec);

webrtc::VideoSendStream::Config config = parameters_.config.Copy(); //

if (!config.rtp.rtx.ssrcs.empty() && config.rtp.rtx.payload_type == -1) {

RTC_LOG(LS_WARNING) << "RTX SSRCs configured but there's no configured RTX "

"payload type the set codec. Ignoring RTX.";

config.rtp.rtx.ssrcs.clear();

}

if (parameters_.encoder_config.number_of_streams == 1) {

// SVC is used instead of simulcast. Remove unnecessary SSRCs.

if (config.rtp.ssrcs.size() > 1) {

config.rtp.ssrcs.resize(1);

if (config.rtp.rtx.ssrcs.size() > 1) {

config.rtp.rtx.ssrcs.resize(1);

}

}

}

stream_ = call_->CreateVideoSendStream(std::move(config), //

parameters_.encoder_config.Copy());

parameters_.encoder_config.encoder_specific_settings = NULL;

if (source_) {

stream_->SetSource(this, GetDegradationPreference());

}

// Call stream_->Start() if necessary conditions are met.

UpdateSendState();

}
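Before calling call_->CreateVideoStreamEncoder's caller, call_->CreateVideoSendStream, RecreateWebRtcStream cleans up the SSRC lists in the copied config. The sketch below reproduces just that clean-up on a hypothetical `RtpSsrcConfig` struct (a small subset of `VideoSendStream::Config::Rtp`, not the real type):

```cpp
#include <cassert>
#include <cstdint>
#include <vector>

// Hypothetical subset of VideoSendStream::Config::Rtp, for illustration only.
struct RtpSsrcConfig {
  std::vector<uint32_t> ssrcs;
  std::vector<uint32_t> rtx_ssrcs;
  int rtx_payload_type = -1;  // -1 means no RTX payload type is configured
};

// Mirrors the SSRC clean-up in WebRtcVideoSendStream::RecreateWebRtcStream.
void TrimSsrcs(RtpSsrcConfig& rtp, int number_of_streams) {
  // RTX SSRCs without an RTX payload type cannot be used: drop them.
  if (!rtp.rtx_ssrcs.empty() && rtp.rtx_payload_type == -1) {
    rtp.rtx_ssrcs.clear();
  }
  // A single encoder stream means SVC rather than simulcast: keep one SSRC.
  if (number_of_streams == 1 && rtp.ssrcs.size() > 1) {
    rtp.ssrcs.resize(1);
    if (rtp.rtx_ssrcs.size() > 1) {
      rtp.rtx_ssrcs.resize(1);
    }
  }
}
```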

/// webrtc::internal::VideoSendStream::SetSource

void VideoSendStream::SetSource(

rtc::VideoSourceInterface<webrtc::VideoFrame>* source, // source is the WebRtcVideoSendStream, which forwards sinks to the webrtc::VideoTrack

const DegradationPreference& degradation_preference) {

RTC_DCHECK_RUN_ON(&thread_checker_); // video_stream_encoder_ is a member of webrtc::internal::VideoSendStream

video_stream_encoder_->SetSource(source, degradation_preference); // std::unique_ptr<VideoStreamEncoderInterface> video_stream_encoder_

} // -> VideoStreamEncoder::SetSource

webrtc::internal::VideoSendStream

namespace internal {

VideoSendStream::VideoSendStream(

Clock* clock,

int num_cpu_cores,

ProcessThread* module_process_thread,

TaskQueueFactory* task_queue_factory,

CallStats* call_stats,

RtpTransportControllerSendInterface* transport,

BitrateAllocatorInterface* bitrate_allocator,

SendDelayStats* send_delay_stats,

RtcEventLog* event_log,

VideoSendStream::Config config,

VideoEncoderConfig encoder_config,

const std::map<uint32_t, RtpState>& suspended_ssrcs,

const std::map<uint32_t, RtpPayloadState>& suspended_payload_states,

std::unique_ptr<FecController> fec_controller)

: worker_queue_(transport->GetWorkerQueue()),

stats_proxy_(clock, config, encoder_config.content_type),

config_(std::move(config)), //

content_type_(encoder_config.content_type) {

RTC_DCHECK(config_.encoder_settings.encoder_factory);

RTC_DCHECK(config_.encoder_settings.bitrate_allocator_factory);

video_stream_encoder_ =

CreateVideoStreamEncoder(clock, task_queue_factory, num_cpu_cores,

&stats_proxy_, config_.encoder_settings);

worker_queue_->PostTask(ToQueuedTask(

[this, clock, call_stats, transport, bitrate_allocator, send_delay_stats,

event_log, &suspended_ssrcs, &encoder_config, &suspended_payload_states,

&fec_controller]() {

send_stream_.reset(new VideoSendStreamImpl( // std::unique_ptr<VideoSendStreamImpl> send_stream_;

clock, &stats_proxy_, worker_queue_, call_stats, transport,

bitrate_allocator, send_delay_stats, video_stream_encoder_.get(),

event_log, &config_, encoder_config.max_bitrate_bps, // note &config_: its type is const VideoSendStream::Config*, and in particular its member config->send_transport

encoder_config.bitrate_priority, suspended_ssrcs,

suspended_payload_states, encoder_config.content_type,

std::move(fec_controller), config_.media_transport));

},

[this]() { thread_sync_event_.Set(); }));

Inheritance of the VideoStreamEncoder class

class VideoStreamEncoder : public VideoStreamEncoderInterface,

private EncodedImageCallback,

// Protected only to provide access to tests.

protected AdaptationObserverInterface

namespace webrtc {

std::unique_ptr<VideoStreamEncoderInterface> CreateVideoStreamEncoder(

Clock* clock,

TaskQueueFactory* task_queue_factory,

uint32_t number_of_cores,

VideoStreamEncoderObserver* encoder_stats_observer,

const VideoStreamEncoderSettings& settings) {

return std::make_unique<VideoStreamEncoder>(

clock, number_of_cores, encoder_stats_observer, settings,

std::make_unique<OveruseFrameDetector>(encoder_stats_observer),

task_queue_factory);

}

} // namespace webrtc

void VideoStreamEncoder::SetSource(

rtc::VideoSourceInterface<VideoFrame>* source, // the WebRtcVideoSendStream passed down from VideoSendStream::SetSource

const DegradationPreference& degradation_preference) {

RTC_DCHECK_RUN_ON(&thread_checker_); // the VideoStreamEncoder constructor initializes source_proxy_(new VideoSourceProxy(this))

source_proxy_->SetSource(source, degradation_preference); // const std::unique_ptr<VideoSourceProxy> source_proxy_; this calls VideoSourceProxy::SetSource

encoder_queue_.PostTask([this, degradation_preference] {

RTC_DCHECK_RUN_ON(&encoder_queue_);

if (degradation_preference_ != degradation_preference) {

// Reset adaptation state, so that we're not tricked into thinking there's

// an already pending request of the same type.

last_adaptation_request_.reset();

if (degradation_preference == DegradationPreference::BALANCED ||

degradation_preference_ == DegradationPreference::BALANCED) {

// TODO(asapersson): Consider removing |adapt_counters_| map and use one

// AdaptCounter for all modes.

source_proxy_->ResetPixelFpsCount();

adapt_counters_.clear();

}

}

degradation_preference_ = degradation_preference;

if (encoder_)

ConfigureQualityScaler(encoder_->GetEncoderInfo());

if (!IsFramerateScalingEnabled(degradation_preference) &&

max_framerate_ != -1) {

// If frame rate scaling is no longer allowed, remove any potential

// allowance for longer frame intervals.

overuse_detector_->OnTargetFramerateUpdated(max_framerate_);

}

});

}

// VideoSourceProxy is a nested class of VideoStreamEncoder

class VideoStreamEncoder::VideoSourceProxy {

public:

explicit VideoSourceProxy(VideoStreamEncoder* video_stream_encoder)

: video_stream_encoder_(video_stream_encoder),

degradation_preference_(DegradationPreference::DISABLED),

source_(nullptr),

max_framerate_(std::numeric_limits<int>::max()) {}

void SetSource(rtc::VideoSourceInterface<VideoFrame>* source, // the WebRtcVideoSendStream, which forwards sinks to the webrtc::VideoTrack

const DegradationPreference& degradation_preference) {

// Called on libjingle's worker thread.

RTC_DCHECK_RUN_ON(&main_checker_);

rtc::VideoSourceInterface<VideoFrame>* old_source = nullptr;

rtc::VideoSinkWants wants;

{

rtc::CritScope lock(&crit_);

degradation_preference_ = degradation_preference;

old_source = source_;

source_ = source; // rtc::VideoSourceInterface<VideoFrame>* source_ RTC_GUARDED_BY(&crit_);

wants = GetActiveSinkWantsInternal();

}

if (old_source != source && old_source != nullptr) {

old_source->RemoveSink(video_stream_encoder_);

}

if (!source) {

return;

}

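source->AddOrUpdateSink(video_stream_encoder_, wants); // The key step: VideoStreamEncoder's this pointer is registered as a sink; WebRtcVideoSendStream::AddOrUpdateSink forwards it to the webrtc::VideoTrack,

} // so the encoder becomes a sink of the VideoTrack, and the registration is then passed step by step down to the internal VideoBroadcaster.

So later, when broadcaster_.OnFrame(frame) runs, one of the sinks it calls is VideoStreamEncoder::OnFrame. This path is analyzed in section 3.2 below.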
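The source/sink wiring described above can be modelled in a few lines. The sketch below is a simplified, hypothetical model: `Source`, `Sink`, `Encoder` and `SourceProxy` stand in for `rtc::VideoSourceInterface`, `rtc::VideoSinkInterface`, `VideoStreamEncoder` and `VideoSourceProxy`, sink wants are omitted, and the VideoTrack → track source → capturer forwarding chain is collapsed into a single source.

```cpp
#include <algorithm>
#include <cassert>
#include <vector>

// Hypothetical frame type; a real VideoFrame carries a pixel buffer.
struct Frame { int id; };

class Sink {
 public:
  virtual ~Sink() = default;
  virtual void OnFrame(const Frame& frame) = 0;
};

class Source {
 public:
  void AddOrUpdateSink(Sink* sink) {
    if (std::find(sinks_.begin(), sinks_.end(), sink) == sinks_.end())
      sinks_.push_back(sink);
  }
  void RemoveSink(Sink* sink) {
    sinks_.erase(std::remove(sinks_.begin(), sinks_.end(), sink), sinks_.end());
  }
  // Plays the role of VideoBroadcaster::OnFrame: fan out to every sink.
  void Deliver(const Frame& frame) {
    for (Sink* sink : sinks_) sink->OnFrame(frame);
  }

 private:
  std::vector<Sink*> sinks_;
};

// Stands in for VideoStreamEncoder, which is itself a sink.
class Encoder : public Sink {
 public:
  void OnFrame(const Frame& frame) override { received.push_back(frame.id); }
  std::vector<int> received;
};

// Mirrors VideoSourceProxy::SetSource: detach the encoder from the old
// source, then attach it as a sink of the new one.
class SourceProxy {
 public:
  explicit SourceProxy(Encoder* encoder) : encoder_(encoder) {}
  void SetSource(Source* source) {
    Source* old_source = source_;
    source_ = source;
    if (old_source != source && old_source != nullptr)
      old_source->RemoveSink(encoder_);
    if (source != nullptr) source->AddOrUpdateSink(encoder_);
  }

 private:
  Encoder* encoder_;
  Source* source_ = nullptr;
};
```

After a source switch only the new source reaches the encoder, which is why SetVideoSend first calls SetSource(nullptr, ...) before attaching the new source.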

PeerConnection::CreateAndAddTransceiver

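rtc::scoped_refptr<RtpTransceiverProxyWithInternal<RtpTransceiver>>

PeerConnection::CreateAndAddTransceiver(

rtc::scoped_refptr<RtpSenderProxyWithInternal<RtpSenderInternal>> sender,

rtc::scoped_refptr<RtpReceiverProxyWithInternal<RtpReceiverInternal>>

receiver) {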

// Ensure that the new sender does not have an ID that is already in use by

// another sender.

// Allow receiver IDs to conflict since those come from remote SDP (which

// could be invalid, but should not cause a crash).

RTC_DCHECK(!FindSenderById(sender->id()));

auto transceiver = RtpTransceiverProxyWithInternal<RtpTransceiver>::Create(

signaling_thread(),

new RtpTransceiver(sender, receiver, channel_manager()));

transceivers_.push_back(transceiver);

transceiver->internal()->SignalNegotiationNeeded.connect(

this, &PeerConnection::OnNegotiationNeeded);

return transceiver;

}

3.2 Pushing captured pixel-format VideoFrames along the pipeline to the encoder and on to the remote peer

Delivering pixel-format (RGB/YUV) VideoFrames to the encoder

Camera capture on Windows

void CaptureSinkFilter::ProcessCapturedFrame(

unsigned char* buffer,

size_t length,

const VideoCaptureCapability& frame_info) {

// Called on the capture thread.

capture_observer_->IncomingFrame(buffer, length, frame_info); // Windows

}

Camera capture on Linux

bool VideoCaptureModuleV4L2::CaptureProcess() {

int retVal = 0;

fd_set rSet;

struct timeval timeout;

FD_ZERO(&rSet);

FD_SET(_deviceFd, &rSet);

timeout.tv_sec = 1;

timeout.tv_usec = 0;

// _deviceFd written only in StartCapture, when this thread isn't running.

retVal = select(_deviceFd + 1, &rSet, NULL, NULL, &timeout);

if (retVal < 0 && errno != EINTR) // continue if interrupted

{

// select failed

return false;

} else if (retVal == 0) {

// select timed out

return true;

} else if (!FD_ISSET(_deviceFd, &rSet)) {

// not event on camera handle

return true;

}

{

MutexLock lock(&capture_lock_);

if (quit_) {

return false;

}

if (_captureStarted) {

struct v4l2_buffer buf;

memset(&buf, 0, sizeof(struct v4l2_buffer));

buf.type = V4L2_BUF_TYPE_VIDEO_CAPTURE;

buf.memory = V4L2_MEMORY_MMAP;

// dequeue a buffer - repeat until dequeued properly!

while (ioctl(_deviceFd, VIDIOC_DQBUF, &buf) < 0) {

if (errno != EINTR) {

RTC_LOG(LS_INFO) << "could not sync on a buffer on device "

<< strerror(errno);

return true;

}

}

VideoCaptureCapability frameInfo;

frameInfo.width = _currentWidth;

frameInfo.height = _currentHeight;

frameInfo.videoType = _captureVideoType;

// convert to to I420 if needed

IncomingFrame((unsigned char*)_pool[buf.index].start, buf.bytesused,

frameInfo); // Linux

// enqueue the buffer again

if (ioctl(_deviceFd, VIDIOC_QBUF, &buf) == -1) {

RTC_LOG(LS_INFO) << "Failed to enqueue capture buffer";

}

}

}

usleep(0);

return true;

}

int32_t VideoCaptureImpl::IncomingFrame(uint8_t* videoFrame,

size_t videoFrameLength,

const VideoCaptureCapability& frameInfo,

int64_t captureTime /*=0*/) {

MutexLock lock(&api_lock_);

const int32_t width = frameInfo.width;

const int32_t height = frameInfo.height;

TRACE_EVENT1("webrtc", "VC::IncomingFrame", "capture_time", captureTime);

// Not encoded, convert to I420.

if (frameInfo.videoType != VideoType::kMJPEG &&

CalcBufferSize(frameInfo.videoType, width, abs(height)) !=

videoFrameLength) {

RTC_LOG(LS_ERROR) << "Wrong incoming frame length.";

return -1;

}

int stride_y = width;

int stride_uv = (width + 1) / 2;

int target_width = width;

int target_height = abs(height);

// SetApplyRotation doesn't take any lock. Make a local copy here.

bool apply_rotation = apply_rotation_;

if (apply_rotation) {

// Rotating resolution when for 90/270 degree rotations.

if (_rotateFrame == kVideoRotation_90 ||

_rotateFrame == kVideoRotation_270) {

target_width = abs(height);

target_height = width;

}

}

// Setting absolute height (in case it was negative).

// In Windows, the image starts bottom left, instead of top left.

// Setting a negative source height, inverts the image (within LibYuv).

// TODO(nisse): Use a pool?

rtc::scoped_refptr<I420Buffer> buffer = I420Buffer::Create(

target_width, target_height, stride_y, stride_uv, stride_uv);

libyuv::RotationMode rotation_mode = libyuv::kRotate0;

if (apply_rotation) {

switch (_rotateFrame) {

case kVideoRotation_0:

rotation_mode = libyuv::kRotate0;

break;

case kVideoRotation_90:

rotation_mode = libyuv::kRotate90;

break;

case kVideoRotation_180:

rotation_mode = libyuv::kRotate180;

break;

case kVideoRotation_270:

rotation_mode = libyuv::kRotate270;

break;

}

}

const int conversionResult = libyuv::ConvertToI420(

videoFrame, videoFrameLength, buffer.get()->MutableDataY(),

buffer.get()->StrideY(), buffer.get()->MutableDataU(),

buffer.get()->StrideU(), buffer.get()->MutableDataV(),

buffer.get()->StrideV(), 0, 0, // No Cropping

width, height, target_width, target_height, rotation_mode,

ConvertVideoType(frameInfo.videoType));

if (conversionResult < 0) {

RTC_LOG(LS_ERROR) << "Failed to convert capture frame from type "

<< static_cast<int>(frameInfo.videoType) << "to I420.";

return -1;

}

VideoFrame captureFrame =

VideoFrame::Builder()

.set_video_frame_buffer(buffer)

.set_timestamp_rtp(0)

.set_timestamp_ms(rtc::TimeMillis())

.set_rotation(!apply_rotation ? _rotateFrame : kVideoRotation_0)

.build();

captureFrame.set_ntp_time_ms(captureTime);

DeliverCapturedFrame(captureFrame);

return 0;

}
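The size bookkeeping at the start of IncomingFrame (strides, target size, the width/height swap for 90/270 degree rotation) can be checked in isolation. The helper below is hypothetical; it assumes the standard I420 layout (a full-resolution Y plane followed by U and V planes subsampled 2x in both directions) and does not call libyuv.

```cpp
#include <cassert>
#include <cstdlib>

// Hypothetical mirror of webrtc::VideoRotation.
enum class Rotation { k0 = 0, k90 = 90, k180 = 180, k270 = 270 };

struct I420Geometry {
  int target_width;
  int target_height;
  int stride_y;
  int stride_uv;
  int total_bytes;  // Y plane + U plane + V plane
};

// Mirrors the size computations at the top of VideoCaptureImpl::IncomingFrame.
I420Geometry ComputeI420Geometry(int width, int height, bool apply_rotation,
                                 Rotation rotation) {
  I420Geometry g;
  g.stride_y = width;
  g.stride_uv = (width + 1) / 2;       // chroma is subsampled 2x horizontally
  g.target_width = width;
  g.target_height = std::abs(height);  // negative height = bottom-up image
  if (apply_rotation &&
      (rotation == Rotation::k90 || rotation == Rotation::k270)) {
    g.target_width = std::abs(height);
    g.target_height = width;
  }
  const int chroma_rows = (g.target_height + 1) / 2;  // and 2x vertically
  g.total_bytes = g.stride_y * g.target_height + 2 * g.stride_uv * chroma_rows;
  return g;
}
```

For a 640x480 frame this gives 640*480*3/2 = 460800 bytes, the familiar 1.5 bytes per pixel of I420.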

int32_t VideoCaptureImpl::DeliverCapturedFrame(VideoFrame& captureFrame) {

UpdateFrameCount(); // frame count used for local frame rate callback.

if (_dataCallBack) {

_dataCallBack->OnFrame(captureFrame); /// VcmCapturer::OnFrame

}

return 0;

}

void VcmCapturer::OnFrame(const VideoFrame& frame) {

TestVideoCapturer::OnFrame(frame);

}

void TestVideoCapturer::OnFrame(const VideoFrame& original_frame) {

int cropped_width = 0;

int cropped_height = 0;

int out_width = 0;

int out_height = 0;

VideoFrame frame = MaybePreprocess(original_frame);

if (!video_adapter_.AdaptFrameResolution(

frame.width(), frame.height(), frame.timestamp_us() * 1000,

&cropped_width, &cropped_height, &out_width, &out_height)) {

// Drop frame in order to respect frame rate constraint.

return;

}

if (out_height != frame.height() || out_width != frame.width()) {

// Video adapter has requested a down-scale. Allocate a new buffer and

// return scaled version.

rtc::scoped_refptr<I420Buffer> scaled_buffer =

I420Buffer::Create(out_width, out_height);

scaled_buffer->ScaleFrom(*frame.video_frame_buffer()->ToI420());

broadcaster_.OnFrame(VideoFrame::Builder()

.set_video_frame_buffer(scaled_buffer)

.set_rotation(kVideoRotation_0)

.set_timestamp_us(frame.timestamp_us())

.set_id(frame.id())

.build());

} else {

// No adaptations needed, just return the frame as is.

broadcaster_.OnFrame(frame);

}

}

void VideoBroadcaster::OnFrame(const webrtc::VideoFrame& frame) {

rtc::CritScope cs(&sinks_and_wants_lock_);

bool current_frame_was_discarded = false;

for (auto& sink_pair : sink_pairs()) { // iterate over every sink attached to this VideoBroadcaster source and hand the frame to each one

if (sink_pair.wants.rotation_applied &&

frame.rotation() != webrtc::kVideoRotation_0) {

// Calls to OnFrame are not synchronized with changes to the sink wants.

// When rotation_applied is set to true, one or a few frames may get here

// with rotation still pending. Protect sinks that don't expect any

// pending rotation.

RTC_LOG(LS_VERBOSE) << "Discarding frame with unexpected rotation.";

sink_pair.sink->OnDiscardedFrame();

current_frame_was_discarded = true;

continue;

}

if (sink_pair.wants.black_frames) {

webrtc::VideoFrame black_frame =

webrtc::VideoFrame::Builder()

.set_video_frame_buffer(

GetBlackFrameBuffer(frame.width(), frame.height()))

.set_rotation(frame.rotation())

.set_timestamp_us(frame.timestamp_us())

.set_id(frame.id())

.build();

sink_pair.sink->OnFrame(black_frame);

} else if (!previous_frame_sent_to_all_sinks_) {

// Since last frame was not sent to some sinks, full update is needed.

webrtc::VideoFrame copy = frame;

copy.set_update_rect(

webrtc::VideoFrame::UpdateRect{0, 0, frame.width(), frame.height()});

sink_pair.sink->OnFrame(copy);

} else {

sink_pair.sink->OnFrame(frame); // one of these sinks is the encoder: VideoStreamEncoder::OnFrame

}

}

previous_frame_sent_to_all_sinks_ = !current_frame_was_discarded;

}
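The per-sink decision in VideoBroadcaster::OnFrame, including the "previous frame sent to all sinks" state carried between frames, can be sketched as a pure function. `SinkWants` and `Delivery` here are hypothetical simplifications, not the `rtc::` types, and `frame_is_rotated` stands for `frame.rotation() != kVideoRotation_0`.

```cpp
#include <cassert>
#include <vector>

// Hypothetical subset of rtc::VideoSinkWants.
struct SinkWants {
  bool rotation_applied = false;
  bool black_frames = false;
};

enum class Delivery { kDiscarded, kBlackFrame, kFullUpdateCopy, kAsIs };

// Mirrors the loop in VideoBroadcaster::OnFrame and reports what each sink
// would receive. |previous_sent_to_all| is carried across frames.
std::vector<Delivery> Broadcast(const std::vector<SinkWants>& sinks,
                                bool frame_is_rotated,
                                bool& previous_sent_to_all) {
  std::vector<Delivery> out;
  bool discarded = false;
  for (const SinkWants& wants : sinks) {
    if (wants.rotation_applied && frame_is_rotated) {
      out.push_back(Delivery::kDiscarded);  // sink->OnDiscardedFrame()
      discarded = true;
    } else if (wants.black_frames) {
      out.push_back(Delivery::kBlackFrame);
    } else if (!previous_sent_to_all) {
      out.push_back(Delivery::kFullUpdateCopy);  // update_rect = whole frame
    } else {
      out.push_back(Delivery::kAsIs);  // e.g. VideoStreamEncoder::OnFrame
    }
  }
  previous_sent_to_all = !discarded;
  return out;
}
```

The full-frame update rect after a discard matters because sinks such as the encoder otherwise assume only the changed region differs from the previous frame they saw.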

void VideoStreamEncoder::OnFrame(const VideoFrame& video_frame) {

RTC_DCHECK_RUNS_SERIALIZED(&incoming_frame_race_checker_);

VideoFrame incoming_frame = video_frame;

// Local time in webrtc time base.

int64_t current_time_us = clock_->TimeInMicroseconds();

int64_t current_time_ms = current_time_us / rtc::kNumMicrosecsPerMillisec;

// In some cases, e.g., when the frame from decoder is fed to encoder,

// the timestamp may be set to the future. As the encoding pipeline assumes

// capture time to be less than present time, we should reset the capture

// timestamps here. Otherwise there may be issues with RTP send stream.

if (incoming_frame.timestamp_us() > current_time_us)

incoming_frame.set_timestamp_us(current_time_us);

// Capture time may come from clock with an offset and drift from clock_.

int64_t capture_ntp_time_ms;

if (video_frame.ntp_time_ms() > 0) {

capture_ntp_time_ms = video_frame.ntp_time_ms();

} else if (video_frame.render_time_ms() != 0) {

capture_ntp_time_ms = video_frame.render_time_ms() + delta_ntp_internal_ms_;

} else {

capture_ntp_time_ms = current_time_ms + delta_ntp_internal_ms_;

}

incoming_frame.set_ntp_time_ms(capture_ntp_time_ms);

// Convert NTP time, in ms, to RTP timestamp.

const int kMsToRtpTimestamp = 90;

incoming_frame.set_timestamp(

kMsToRtpTimestamp * static_cast<uint32_t>(incoming_frame.ntp_time_ms()));

if (incoming_frame.ntp_time_ms() <= last_captured_timestamp_) {

// We don't allow the same capture time for two frames, drop this one.

RTC_LOG(LS_WARNING) << "Same/old NTP timestamp ("

<< incoming_frame.ntp_time_ms()

<< " <= " << last_captured_timestamp_

<< ") for incoming frame. Dropping.";

encoder_queue_.PostTask([this, incoming_frame]() {

RTC_DCHECK_RUN_ON(&encoder_queue_);

accumulated_update_rect_.Union(incoming_frame.update_rect());

});

return;

}

bool log_stats = false;

if (current_time_ms - last_frame_log_ms_ > kFrameLogIntervalMs) {

last_frame_log_ms_ = current_time_ms;

log_stats = true;

}

last_captured_timestamp_ = incoming_frame.ntp_time_ms();

int64_t post_time_us = rtc::TimeMicros();

++posted_frames_waiting_for_encode_;

encoder_queue_.PostTask(

[this, incoming_frame, post_time_us, log_stats]() {

RTC_DCHECK_RUN_ON(&encoder_queue_);

encoder_stats_observer_->OnIncomingFrame(incoming_frame.width(),

incoming_frame.height());

++captured_frame_count_;

const int posted_frames_waiting_for_encode =

posted_frames_waiting_for_encode_.fetch_sub(1);

RTC_DCHECK_GT(posted_frames_waiting_for_encode, 0);

if (posted_frames_waiting_for_encode == 1) {

MaybeEncodeVideoFrame(incoming_frame, post_time_us); /// VideoStreamEncoder::MaybeEncodeVideoFrame

} else {

// There is a newer frame in flight. Do not encode this frame.

RTC_LOG(LS_VERBOSE)

<< "Incoming frame dropped due to that the encoder is blocked.";

++dropped_frame_count_;

encoder_stats_observer_->OnFrameDropped(

VideoStreamEncoderObserver::DropReason::kEncoderQueue);

accumulated_update_rect_.Union(incoming_frame.update_rect());

}

if (log_stats) {

RTC_LOG(LS_INFO) << "Number of frames: captured "

<< captured_frame_count_

<< ", dropped (due to encoder blocked) "

<< dropped_frame_count_ << ", interval_ms "

<< kFrameLogIntervalMs;

captured_frame_count_ = 0;

dropped_frame_count_ = 0;

}

});

}
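VideoStreamEncoder::OnFrame does three pieces of bookkeeping before encoding: it converts the NTP capture time in milliseconds to a 90 kHz RTP timestamp, drops frames whose capture time is not strictly newer than the last one, and, on the encoder queue, encodes a frame only if no newer frame was posted while it waited. `FrameGate` below is a hypothetical class bundling those rules for illustration; it is not part of WebRTC.

```cpp
#include <atomic>
#include <cassert>
#include <cstdint>

// Hypothetical reduction of the bookkeeping in VideoStreamEncoder::OnFrame.
class FrameGate {
 public:
  // The video RTP clock runs at 90 kHz, i.e. 90 ticks per millisecond.
  // Unsigned 32-bit arithmetic makes the timestamp wrap modulo 2^32.
  static uint32_t NtpMsToRtpTimestamp(int64_t ntp_ms) {
    return 90u * static_cast<uint32_t>(ntp_ms);
  }

  // Rejects a capture time that is not strictly newer than the last one.
  bool AcceptCaptureTime(int64_t ntp_ms) {
    if (ntp_ms <= last_captured_ms_) return false;
    last_captured_ms_ = ntp_ms;
    return true;
  }

  // Capture thread: count a frame before posting it to the encoder queue.
  void OnPosted() { ++posted_waiting_; }

  // Encoder queue: a frame is encoded only if no newer frame was posted
  // while it waited; otherwise it is dropped.
  bool ShouldEncodeOnDequeue() { return posted_waiting_.fetch_sub(1) == 1; }

 private:
  int64_t last_captured_ms_ = 0;
  std::atomic<int> posted_waiting_{0};
};
```

If the encoder queue falls behind, older queued frames are skipped in favour of the newest one, trading smoothness for latency.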

void VideoStreamEncoder::MaybeEncodeVideoFrame(const VideoFrame& video_frame,

int64_t time_when_posted_us) {

RTC_DCHECK_RUN_ON(&encoder_queue_);

if (!last_frame_info_ || video_frame.width() != last_frame_info_->width ||

video_frame.height() != last_frame_info_->height ||

video_frame.is_texture() != last_frame_info_->is_texture) {

pending_encoder_reconfiguration_ = true;

last_frame_info_ = VideoFrameInfo(video_frame.width(), video_frame.height(),

video_frame.is_texture());

RTC_LOG(LS_INFO) << "Video frame parameters changed: dimensions="

<< last_frame_info_->width << "x"

<< last_frame_info_->height

<< ", texture=" << last_frame_info_->is_texture << ".";

// Force full frame update, since resolution has changed.

accumulated_update_rect_ =

VideoFrame::UpdateRect{0, 0, video_frame.width(), video_frame.height()};

}

// We have to create then encoder before the frame drop logic,

// because the latter depends on encoder_->GetScalingSettings.

// According to the testcase

// InitialFrameDropOffWhenEncoderDisabledScaling, the return value

// from GetScalingSettings should enable or disable the frame drop.

// Update input frame rate before we start using it. If we update it after

// any potential frame drop we are going to artificially increase frame sizes.

// Poll the rate before updating, otherwise we risk the rate being estimated

// a little too high at the start of the call when then window is small.

uint32_t framerate_fps = GetInputFramerateFps();

input_framerate_.Update(1u, clock_->TimeInMilliseconds());

int64_t now_ms = clock_->TimeInMilliseconds();

if (pending_encoder_reconfiguration_) {

ReconfigureEncoder();

last_parameters_update_ms_.emplace(now_ms);

} else if (!last_parameters_update_ms_ ||

now_ms - *last_parameters_update_ms_ >=

kParameterUpdateIntervalMs) {

if (last_encoder_rate_settings_) {

// Clone rate settings before update, so that SetEncoderRates() will

// actually detect the change between the input and

// |last_encoder_rate_setings_|, triggering the call to SetRate() on the

// encoder.

EncoderRateSettings new_rate_settings = *last_encoder_rate_settings_;

new_rate_settings.rate_control.framerate_fps =

static_cast<double>(framerate_fps);

SetEncoderRates(

UpdateBitrateAllocationAndNotifyObserver(new_rate_settings));

}

last_parameters_update_ms_.emplace(now_ms);

}

// Because pending frame will be dropped in any case, we need to

// remember its updated region.

if (pending_frame_) {

encoder_stats_observer_->OnFrameDropped(

VideoStreamEncoderObserver::DropReason::kEncoderQueue);

accumulated_update_rect_.Union(pending_frame_->update_rect());

}

if (DropDueToSize(video_frame.size())) {

RTC_LOG(LS_INFO) << "Dropping frame. Too large for target bitrate.";

int fps_count = GetConstAdaptCounter().FramerateCount(kQuality);

int res_count = GetConstAdaptCounter().ResolutionCount(kQuality);

AdaptDown(kQuality);

if (degradation_preference_ == DegradationPreference::BALANCED &&

GetConstAdaptCounter().FramerateCount(kQuality) > fps_count) {

// Adapt framerate in same step as resolution.

AdaptDown(kQuality);

}

if (GetConstAdaptCounter().ResolutionCount(kQuality) > res_count) {

encoder_stats_observer_->OnInitialQualityResolutionAdaptDown();

}

++initial_framedrop_;

// Storing references to a native buffer risks blocking frame capture.

if (video_frame.video_frame_buffer()->type() !=

VideoFrameBuffer::Type::kNative) {

pending_frame_ = video_frame;

pending_frame_post_time_us_ = time_when_posted_us;

} else {

// Ensure that any previously stored frame is dropped.

pending_frame_.reset();

accumulated_update_rect_.Union(video_frame.update_rect());

}

return;

}

initial_framedrop_ = kMaxInitialFramedrop;

if (EncoderPaused()) {

// Storing references to a native buffer risks blocking frame capture.

if (video_frame.video_frame_buffer()->type() !=

VideoFrameBuffer::Type::kNative) {

if (pending_frame_)

TraceFrameDropStart();

pending_frame_ = video_frame;

pending_frame_post_time_us_ = time_when_posted_us;

} else {

// Ensure that any previously stored frame is dropped.

pending_frame_.reset();

TraceFrameDropStart();

accumulated_update_rect_.Union(video_frame.update_rect());

}

return;

}

pending_frame_.reset();

frame_dropper_.Leak(framerate_fps);

// Frame dropping is enabled iff frame dropping is not force-disabled, and

// rate controller is not trusted.

const bool frame_dropping_enabled =

!force_disable_frame_dropper_ &&

!encoder_info_.has_trusted_rate_controller;

frame_dropper_.Enable(frame_dropping_enabled);

if (frame_dropping_enabled && frame_dropper_.DropFrame()) {

RTC_LOG(LS_VERBOSE)

<< "Drop Frame: "

<< "target bitrate "

<< (last_encoder_rate_settings_

? last_encoder_rate_settings_->encoder_target.bps()

: 0)

<< ", input frame rate " << framerate_fps;

OnDroppedFrame(

EncodedImageCallback::DropReason::kDroppedByMediaOptimizations);

accumulated_update_rect_.Union(video_frame.update_rect());

return;

}

EncodeVideoFrame(video_frame, time_when_posted_us);

}
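The order of the checks in MaybeEncodeVideoFrame can be condensed into a small decision function. This is a simplified sketch, not WebRTC code: `FrameDecision`, `FrameChecks` and `DecideFrame` are hypothetical names, the booleans stand in for DropDueToSize(), EncoderPaused() and frame_dropper_.DropFrame(), and the reconfiguration, rate update and AdaptDown() side effects that run before or alongside these checks are left out.

```cpp
#include <cassert>

// Hypothetical outcome of one call to a simplified MaybeEncodeVideoFrame.
enum class FrameDecision {
  kStoreAsPending,  // kept in pending_frame_, may be encoded later
  kDrop,            // dropped; its update rect gets accumulated
  kEncode,          // handed to EncodeVideoFrame()
};

// Inputs mirror the checks of the original function, in the same order.
struct FrameChecks {
  bool too_large_for_target_bitrate;  // DropDueToSize(...)
  bool encoder_paused;                // EncoderPaused()
  bool frame_dropping_enabled;        // !force_disable && !trusted rate controller
  bool dropper_says_drop;             // frame_dropper_.DropFrame()
  bool is_native_buffer;              // buffer type == kNative
};

FrameDecision DecideFrame(const FrameChecks& c) {
  // Initial frame drop and a paused encoder follow the same rule: non-native
  // frames are parked as pending, native ones are dropped so that holding a
  // reference to a native buffer cannot block capture.
  if (c.too_large_for_target_bitrate || c.encoder_paused) {
    return c.is_native_buffer ? FrameDecision::kDrop
                              : FrameDecision::kStoreAsPending;
  }
  // The frame dropper is only consulted when it is enabled.
  if (c.frame_dropping_enabled && c.dropper_says_drop) {
    return FrameDecision::kDrop;
  }
  return FrameDecision::kEncode;
}
```

Note that only the last branch reaches the encoder; every earlier return path either parks the frame or folds its update rect into accumulated_update_rect_.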

void VideoStreamEncoder::EncodeVideoFrame(const VideoFrame& video_frame,

int64_t time_when_posted_us) {

RTC_DCHECK_RUN_ON(&encoder_queue_);

// If the encoder fail we can't continue to encode frames. When this happens

// the WebrtcVideoSender is notified and the whole VideoSendStream is

// recreated.

if (encoder_failed_)

return;

TraceFrameDropEnd();

// Encoder metadata needs to be updated before encode complete callback.

VideoEncoder::EncoderInfo info = encoder_->GetEncoderInfo();

if (info.implementation_name != encoder_info_.implementation_name) {

encoder_stats_observer_->OnEncoderImplementationChanged(

info.implementation_name);

if (bitrate_adjuster_) {

// Encoder implementation changed, reset overshoot detector states.

bitrate_adjuster_->Reset();

}

}

if (bitrate_adjuster_) {

for (size_t si = 0; si < kMaxSpatialLayers; ++si) {

if (info.fps_allocation[si] != encoder_info_.fps_allocation[si]) {

bitrate_adjuster_->OnEncoderInfo(info);

break;

}

}

}

encoder_info_ = info;

last_encode_info_ms_ = clock_->TimeInMilliseconds();

VideoFrame out_frame(video_frame);

const VideoFrameBuffer::Type buffer_type =

out_frame.video_frame_buffer()->type();

const bool is_buffer_type_supported =

buffer_type == VideoFrameBuffer::Type::kI420 ||

(buffer_type == VideoFrameBuffer::Type::kNative &&

info.supports_native_handle);

if (!is_buffer_type_supported) {

// This module only supports software encoding.

rtc::scoped_refptr<I420BufferInterface> converted_buffer(

out_frame.video_frame_buffer()->ToI420());

if (!converted_buffer) {

RTC_LOG(LS_ERROR) << "Frame conversion failed, dropping frame.";

return;

}

VideoFrame::UpdateRect update_rect = out_frame.update_rect();

if (!update_rect.IsEmpty() &&

out_frame.video_frame_buffer()->GetI420() == nullptr) {

// UpdatedRect is reset to full update if it's not empty, and buffer was

// converted, therefore we can't guarantee that pixels outside of

// UpdateRect didn't change comparing to the previous frame.

update_rect =

VideoFrame::UpdateRect{0, 0, out_frame.width(), out_frame.height()};

}

out_frame.set_video_frame_buffer(converted_buffer);

out_frame.set_update_rect(update_rect);

}

// Crop frame if needed.

if ((crop_width_ > 0 || crop_height_ > 0) &&

out_frame.video_frame_buffer()->type() !=

VideoFrameBuffer::Type::kNative) {

// If the frame can't be converted to I420, drop it.

auto i420_buffer = video_frame.video_frame_buffer()->ToI420();

if (!i420_buffer) {

RTC_LOG(LS_ERROR) << "Frame conversion for crop failed, dropping frame.";

return;

}

int cropped_width = video_frame.width() - crop_width_;

int cropped_height = video_frame.height() - crop_height_;

rtc::scoped_refptr<I420Buffer> cropped_buffer =

I420Buffer::Create(cropped_width, cropped_height);

// TODO(ilnik): Remove scaling if cropping is too big, as it should never

// happen after SinkWants signaled correctly from ReconfigureEncoder.

VideoFrame::UpdateRect update_rect = video_frame.update_rect();

if (crop_width_ < 4 && crop_height_ < 4) {

cropped_buffer->CropAndScaleFrom(*i420_buffer, crop_width_ / 2,

crop_height_ / 2, cropped_width,

cropped_height);

update_rect.offset_x -= crop_width_ / 2;

update_rect.offset_y -= crop_height_ / 2;

update_rect.Intersect(

VideoFrame::UpdateRect{0, 0, cropped_width, cropped_height});

} else {

cropped_buffer->ScaleFrom(*i420_buffer);

if (!update_rect.IsEmpty()) {

// Since we can't reason about pixels after scaling, we invalidate whole

// picture, if anything changed.

update_rect =

VideoFrame::UpdateRect{0, 0, cropped_width, cropped_height};

}

}

out_frame.set_video_frame_buffer(cropped_buffer);

out_frame.set_update_rect(update_rect);

out_frame.set_ntp_time_ms(video_frame.ntp_time_ms());

// Since accumulated_update_rect_ is constructed before cropping,

// we can't trust it. If any changes were pending, we invalidate whole

// frame here.

if (!accumulated_update_rect_.IsEmpty()) {

accumulated_update_rect_ =

VideoFrame::UpdateRect{0, 0, out_frame.width(), out_frame.height()};

}

}

if (!accumulated_update_rect_.IsEmpty()) {

accumulated_update_rect_.Union(out_frame.update_rect());

accumulated_update_rect_.Intersect(

VideoFrame::UpdateRect{0, 0, out_frame.width(), out_frame.height()});

out_frame.set_update_rect(accumulated_update_rect_);

accumulated_update_rect_.MakeEmptyUpdate();

}

TRACE_EVENT_ASYNC_STEP0("webrtc", "Video", video_frame.render_time_ms(),

"Encode");

overuse_detector_->FrameCaptured(out_frame, time_when_posted_us);

RTC_DCHECK_LE(send_codec_.width, out_frame.width());

RTC_DCHECK_LE(send_codec_.height, out_frame.height());

// Native frames should be scaled by the client.

// For internal encoders we scale everything in one place here.

RTC_DCHECK((out_frame.video_frame_buffer()->type() ==

VideoFrameBuffer::Type::kNative) ||

(send_codec_.width == out_frame.width() &&

send_codec_.height == out_frame.height()));

TRACE_EVENT1("webrtc", "VCMGenericEncoder::Encode", "timestamp",

out_frame.timestamp());

frame_encode_metadata_writer_.OnEncodeStarted(out_frame);

const int32_t encode_status = encoder_->Encode(out_frame, &next_frame_types_); // The actual encoding, e.g. H264EncoderImpl::Encode, LibvpxVp8Encoder::Encode

was_encode_called_since_last_initialization_ = true;

if (encode_status < 0) {

if (encode_status == WEBRTC_VIDEO_CODEC_ENCODER_FAILURE) {

RTC_LOG(LS_ERROR) << "Encoder failed, failing encoder format: "

<< encoder_config_.video_format.ToString();

if (settings_.encoder_switch_request_callback) {

encoder_failed_ = true;

settings_.encoder_switch_request_callback->RequestEncoderFallback();

} else {

RTC_LOG(LS_ERROR)

<< "Encoder failed but no encoder fallback callback is registered";

}

} else {

RTC_LOG(LS_ERROR) << "Failed to encode frame. Error code: "

<< encode_status;

}

return;

}

for (auto& it : next_frame_types_) {

it = VideoFrameType::kVideoFrameDelta;

}

}
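EncodeVideoFrame relies on the update-rect bookkeeping: rects of dropped frames are merged with Union(), and the result is clipped to the frame with Intersect() before being attached to the outgoing frame. The sketch below is a hypothetical stand-in for VideoFrame::UpdateRect (the struct name matches, but this is a reimplementation written for illustration), assuming Union() yields the bounding box of both rects.

```cpp
#include <algorithm>
#include <cassert>

// Hypothetical stand-in for VideoFrame::UpdateRect: the region of a frame
// that changed since the previous frame.
struct UpdateRect {
  int offset_x = 0, offset_y = 0, width = 0, height = 0;

  bool IsEmpty() const { return width == 0 && height == 0; }
  void MakeEmptyUpdate() { *this = UpdateRect{}; }

  // Bounding box of both rects, an over-approximation of the changed area.
  void Union(const UpdateRect& o) {
    if (o.IsEmpty()) return;
    if (IsEmpty()) { *this = o; return; }
    int left = std::min(offset_x, o.offset_x);
    int top = std::min(offset_y, o.offset_y);
    int right = std::max(offset_x + width, o.offset_x + o.width);
    int bottom = std::max(offset_y + height, o.offset_y + o.height);
    *this = UpdateRect{left, top, right - left, bottom - top};
  }

  // Clip to another rect, e.g. the frame bounds {0, 0, w, h}.
  void Intersect(const UpdateRect& o) {
    if (IsEmpty() || o.IsEmpty()) { MakeEmptyUpdate(); return; }
    int left = std::max(offset_x, o.offset_x);
    int top = std::max(offset_y, o.offset_y);
    int right = std::min(offset_x + width, o.offset_x + o.width);
    int bottom = std::min(offset_y + height, o.offset_y + o.height);
    if (right <= left || bottom <= top) { MakeEmptyUpdate(); return; }
    *this = UpdateRect{left, top, right - left, bottom - top};
  }
};
```

Usage follows the same steps as the function above: each dropped frame contributes its rect via Union(), the accumulated rect is clipped to the output frame size, attached to out_frame, and then reset with MakeEmptyUpdate().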

int32_t H264EncoderImpl::Encode(

const VideoFrame& input_frame,

const std::vector<VideoFrameType>* frame_types) {

if (encoders_.empty()) {

ReportError();

return WEBRTC_VIDEO_CODEC_UNINITIALIZED;

}

if (!encoded_image_callback_) {

RTC_LOG(LS_WARNING)

<< "InitEncode() has been called, but a callback function "

<< "has not been set with RegisterEncodeCompleteCallback()";

ReportError();

return WEBRTC_VIDEO_CODEC_UNINITIALIZED;

}

rtc::scoped_refptr<const I420BufferInterface> frame_buffer =

input_frame.video_frame_buffer()->ToI420();

bool send_key_frame = false;

for (size_t i = 0; i < configurations_.size(); ++i) {

if (configurations_[i].key_frame_request && configurations_[i].sending) {

send_key_frame = true;

break;

}

}

if (!send_key_frame && frame_types) {

for (size_t i = 0; i < configurations_.size(); ++i) {

const size_t simulcast_idx =

static_cast<size_t>(configurations_[i].simulcast_idx);

if (configurations_[i].sending && simulcast_idx < frame_types->size() &&

(*frame_types)[simulcast_idx] == VideoFrameType::kVideoFrameKey) {

send_key_frame = true;

break;

}

}

}

RTC_DCHECK_EQ(configurations_[0].width, frame_buffer->width());

RTC_DCHECK_EQ(configurations_[0].height, frame_buffer->height());

// Encode image for each layer.

for (size_t i = 0; i < encoders_.size(); ++i) {

// EncodeFrame input.

pictures_[i] = {0};

pictures_[i].iPicWidth = configurations_[i].width;

pictures_[i].iPicHeight = configurations_[i].height;

pictures_[i].iColorFormat = EVideoFormatType::videoFormatI420;

pictures_[i].uiTimeStamp = input_frame.ntp_time_ms();

// Downscale images on second and ongoing layers.

if (i == 0) {

pictures_[i].iStride[0] = frame_buffer->StrideY();

pictures_[i].iStride[1] = frame_buffer->StrideU();

pictures_[i].iStride[2] = frame_buffer->StrideV();

pictures_[i].pData[0] = const_cast<uint8_t*>(frame_buffer->DataY());

pictures_[i].pData[1] = const_cast<uint8_t*>(frame_buffer->DataU());

pictures_[i].pData[2] = const_cast<uint8_t*>(frame_buffer->DataV());

} else {

pictures_[i].iStride[0] = downscaled_buffers_[i - 1]->StrideY();

pictures_[i].iStride[1] = downscaled_buffers_[i - 1]->StrideU();

pictures_[i].iStride[2] = downscaled_buffers_[i - 1]->StrideV();

pictures_[i].pData[0] =

const_cast<uint8_t*>(downscaled_buffers_[i - 1]->DataY());

pictures_[i].pData[1] =

const_cast<uint8_t*>(downscaled_buffers_[i - 1]->DataU());

pictures_[i].pData[2] =

const_cast<uint8_t*>(downscaled_buffers_[i - 1]->DataV());

// Scale the image down a number of times by downsampling factor.

libyuv::I420Scale(pictures_[i - 1].pData[0], pictures_[i - 1].iStride[0],

pictures_[i - 1].pData[1], pictures_[i - 1].iStride[1],

pictures_[i - 1].pData[2], pictures_[i - 1].iStride[2],

configurations_[i - 1].width,

configurations_[i - 1].height, pictures_[i].pData[0],

pictures_[i].iStride[0], pictures_[i].pData[1],

pictures_[i].iStride[1], pictures_[i].pData[2],

pictures_[i].iStride[2], configurations_[i].width,

configurations_[i].height, libyuv::kFilterBilinear);

}

if (!configurations_[i].sending) {

continue;

}

if (frame_types != nullptr) {

// Skip frame?

if ((*frame_types)[i] == VideoFrameType::kEmptyFrame) {

continue;

}

}

if (send_key_frame) {

// API doc says ForceIntraFrame(false) does nothing, but calling this

// function forces a key frame regardless of the |bIDR| argument's value.

// (If every frame is a key frame we get lag/delays.)

encoders_[i]->ForceIntraFrame(true);

configurations_[i].key_frame_request = false;

}

// EncodeFrame output.

SFrameBSInfo info;

memset(&info, 0, sizeof(SFrameBSInfo));

// Encode!

int enc_ret = encoders_[i]->EncodeFrame(&pictures_[i], &info);

if (enc_ret != 0) {

RTC_LOG(LS_ERROR)

<< "OpenH264 frame encoding failed, EncodeFrame returned " << enc_ret

<< ".";

ReportError();

return WEBRTC_VIDEO_CODEC_ERROR;

}

encoded_images_[i]._encodedWidth = configurations_[i].width;

encoded_images_[i]._encodedHeight = configurations_[i].height;

encoded_images_[i].SetTimestamp(input_frame.timestamp());

encoded_images_[i]._frameType = ConvertToVideoFrameType(info.eFrameType);

encoded_images_[i].SetSpatialIndex(configurations_[i].simulcast_idx);

// Split encoded image up into fragments. This also updates

// |encoded_image_|.

// After encoding, the bitstream is held in info; RtpFragmentize copies it into encoded_images_[i] and records the NALU layout in frag_header

RTPFragmentationHeader frag_header;

RtpFragmentize(&encoded_images_[i], *frame_buffer, &info, &frag_header);

// Encoder can skip frames to save bandwidth in which case

// |encoded_images_[i]._length| == 0.

if (encoded_images_[i].size() > 0) {

// Parse QP.

h264_bitstream_parser_.ParseBitstream(encoded_images_[i].data(),

encoded_images_[i].size());

h264_bitstream_parser_.GetLastSliceQp(&encoded_images_[i].qp_);

// Deliver encoded image.

CodecSpecificInfo codec_specific;

codec_specific.codecType = kVideoCodecH264;

codec_specific.codecSpecific.H264.packetization_mode =

packetization_mode_;

codec_specific.codecSpecific.H264.temporal_idx = kNoTemporalIdx;

codec_specific.codecSpecific.H264.idr_frame =

info.eFrameType == videoFrameTypeIDR;

codec_specific.codecSpecific.H264.base_layer_sync = false;

if (configurations_[i].num_temporal_layers > 1) {

const uint8_t tid = info.sLayerInfo[0].uiTemporalId;

codec_specific.codecSpecific.H264.temporal_idx = tid;

codec_specific.codecSpecific.H264.base_layer_sync =

tid > 0 && tid < tl0sync_limit_[i];

if (codec_specific.codecSpecific.H264.base_layer_sync) {

tl0sync_limit_[i] = tid;

}

if (tid == 0) {

tl0sync_limit_[i] = configurations_[i].num_temporal_layers;

}

}

// On success, the encoded data is delivered through the callback; the receiver is VideoStreamEncoder

encoded_image_callback_->OnEncodedImage(encoded_images_[i],

&codec_specific, &frag_header);

}

}

return WEBRTC_VIDEO_CODEC_OK;

}
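Two decisions in H264EncoderImpl::Encode are easy to miss in the listing: whether to force a key frame, and when a temporal-layer frame is flagged as base_layer_sync. The sketch below restates both under simplifying assumptions; `FrameType`, `LayerConfig`, `ShouldSendKeyFrame` and `TemporalSyncTracker` are hypothetical names, and only the fields the decisions read are kept. A frame with tid > 0 is flagged when its tid is below the current limit, the limit then drops to that tid, and a TL0 frame resets the limit to the number of temporal layers.

```cpp
#include <cassert>
#include <cstddef>
#include <vector>

// Hypothetical, trimmed-down types; not the real WebRTC definitions.
enum class FrameType { kEmptyFrame, kKey, kDelta };

struct LayerConfig {
  bool sending;
  bool key_frame_request;  // a pending request recorded on this layer
  int simulcast_idx;
};

// Mirrors the two loops at the top of Encode(): force a key frame if any
// sending layer has a pending request, or if the caller asked for a key
// frame on a sending layer's simulcast index.
bool ShouldSendKeyFrame(const std::vector<LayerConfig>& configs,
                        const std::vector<FrameType>* frame_types) {
  for (const LayerConfig& c : configs) {
    if (c.key_frame_request && c.sending) return true;
  }
  if (frame_types == nullptr) return false;
  for (const LayerConfig& c : configs) {
    const size_t idx = static_cast<size_t>(c.simulcast_idx);
    if (c.sending && idx < frame_types->size() &&
        (*frame_types)[idx] == FrameType::kKey) {
      return true;
    }
  }
  return false;
}

// Mirrors the tl0sync_limit_ handling for one simulcast layer.
struct TemporalSyncTracker {
  int num_temporal_layers;
  int tl0sync_limit;

  bool OnFrame(int tid) {
    const bool base_layer_sync = tid > 0 && tid < tl0sync_limit;
    if (base_layer_sync) tl0sync_limit = tid;
    if (tid == 0) tl0sync_limit = num_temporal_layers;
    return base_layer_sync;
  }
};
```

With three temporal layers and the tid pattern 0, 2, 1, 2, 0, only the first TL2 and the first TL1 frame after the TL0 frame are flagged.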

/// Where encoded_image_callback_ is set

void VideoStreamEncoder::ReconfigureEncoder()

===>

encoder_initialized_ = true;

encoder_->RegisterEncodeCompleteCallback(this); /// VideoStreamEncoder registers itself as the encoder's callback

frame_encode_metadata_writer_.OnEncoderInit(send_codec_,

HasInternalSource());

int32_t H264EncoderImpl::RegisterEncodeCompleteCallback(

EncodedImageCallback* callback) {

encoded_image_callback_ = callback;

return WEBRTC_VIDEO_CODEC_OK;

}
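The registration above sets up a plain callback chain: the encoder stores a non-owning EncodedImageCallback pointer, VideoStreamEncoder registers itself, and it in turn forwards to its own sink. The sketch below models that chain with hypothetical classes (`FakeEncoder`, `StreamEncoder`, `CountingSink` and a stripped-down `EncodedImage`); it is not the WebRTC interface, only the same shape.

```cpp
#include <cassert>
#include <cstddef>
#include <vector>

// Hypothetical, heavily simplified encoded frame.
struct EncodedImage { std::vector<unsigned char> data; };

class EncodedImageCallback {
 public:
  virtual ~EncodedImageCallback() = default;
  virtual void OnEncodedImage(const EncodedImage& image) = 0;
};

class FakeEncoder {
 public:
  // Like RegisterEncodeCompleteCallback: store the pointer, do not own it.
  void RegisterEncodeCompleteCallback(EncodedImageCallback* cb) { cb_ = cb; }

  // Returns false without a callback (the real encoder returns
  // WEBRTC_VIDEO_CODEC_UNINITIALIZED in that case).
  bool Encode(size_t payload_size) {
    if (cb_ == nullptr) return false;
    cb_->OnEncodedImage(EncodedImage{std::vector<unsigned char>(payload_size)});
    return true;
  }

 private:
  EncodedImageCallback* cb_ = nullptr;
};

// Plays the role of VideoStreamEncoder: the encoder's callback and a
// forwarder to the next stage (VideoSendStreamImpl in the real pipeline).
class StreamEncoder : public EncodedImageCallback {
 public:
  StreamEncoder(FakeEncoder* encoder, EncodedImageCallback* sink)
      : encoder_(encoder), sink_(sink) {}
  void Reconfigure() { encoder_->RegisterEncodeCompleteCallback(this); }
  void OnEncodedImage(const EncodedImage& image) override {
    sink_->OnEncodedImage(image);
  }

 private:
  FakeEncoder* encoder_;
  EncodedImageCallback* sink_;
};

struct CountingSink : EncodedImageCallback {
  size_t frames = 0, bytes = 0;
  void OnEncodedImage(const EncodedImage& image) override {
    ++frames;
    bytes += image.data.size();
  }
};
```

Until Reconfigure() runs, Encode() has nowhere to deliver its output, which matches the "callback has not been set" error path in H264EncoderImpl::Encode.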

EncodedImageCallback::Result VideoStreamEncoder::OnEncodedImage(

const EncodedImage& encoded_image,

const CodecSpecificInfo* codec_specific_info,

const RTPFragmentationHeader* fragmentation) {

TRACE_EVENT_INSTANT1("webrtc", "VCMEncodedFrameCallback::Encoded",

"timestamp", encoded_image.Timestamp());

const size_t spatial_idx = encoded_image.SpatialIndex().value_or(0);

EncodedImage image_copy(encoded_image);

frame_encode_metadata_writer_.FillTimingInfo(spatial_idx, &image_copy);

std::unique_ptr<RTPFragmentationHeader> fragmentation_copy =

frame_encode_metadata_writer_.UpdateBitstream(codec_specific_info,

fragmentation, &image_copy);

// Piggyback ALR experiment group id and simulcast id into the content type.

const uint8_t experiment_id =

experiment_groups_[videocontenttypehelpers::IsScreenshare(

image_copy.content_type_)];

// TODO(ilnik): This will force content type extension to be present even

// for realtime video. At the expense of miniscule overhead we will get

// sliced receive statistics.

RTC_CHECK(videocontenttypehelpers::SetExperimentId(&image_copy.content_type_,

experiment_id));

// We count simulcast streams from 1 on the wire. That's why we set simulcast

// id in content type to +1 of that is actual simulcast index. This is because

// value 0 on the wire is reserved for 'no simulcast stream specified'.

RTC_CHECK(videocontenttypehelpers::SetSimulcastId(

&image_copy.content_type_, static_cast<uint8_t>(spatial_idx + 1)));

// Encoded is called on whatever thread the real encoder implementation run

// on. In the case of hardware encoders, there might be several encoders

// running in parallel on different threads.

encoder_stats_observer_->OnSendEncodedImage(image_copy, codec_specific_info);

// The simulcast id is signaled in the SpatialIndex. This makes it impossible

// to do simulcast for codecs that actually support spatial layers since we

// can't distinguish between an actual spatial layer and a simulcast stream.

// TODO(bugs.webrtc.org/10520): Signal the simulcast id explicitly.

int simulcast_id = 0;

if (codec_specific_info &&

(codec_specific_info->codecType == kVideoCodecVP8 ||

codec_specific_info->codecType == kVideoCodecH264 ||

codec_specific_info->codecType == kVideoCodecGeneric)) {

simulcast_id = encoded_image.SpatialIndex().value_or(0);

}

std::unique_ptr<CodecSpecificInfo> codec_info_copy;

{

rtc::CritScope cs(&encoded_image_lock_);

if (codec_specific_info && codec_specific_info->generic_frame_info) {

codec_info_copy =

std::make_unique<CodecSpecificInfo>(*codec_specific_info);

GenericFrameInfo& generic_info = *codec_info_copy->generic_frame_info;

generic_info.frame_id = next_frame_id_++;

if (encoder_buffer_state_.size() <= static_cast<size_t>(simulcast_id)) {

RTC_LOG(LS_ERROR) << "At most " << encoder_buffer_state_.size()

<< " simulcast streams supported.";

} else {

std::array<int64_t, kMaxEncoderBuffers>& state =

encoder_buffer_state_[simulcast_id];

for (const CodecBufferUsage& buffer : generic_info.encoder_buffers) {

if (state.size() <= static_cast<size_t>(buffer.id)) {

RTC_LOG(LS_ERROR)

<< "At most " << state.size() << " encoder buffers supported.";

break;

}

if (buffer.referenced) {

int64_t diff = generic_info.frame_id - state[buffer.id];

if (diff <= 0) {

RTC_LOG(LS_ERROR) << "Invalid frame diff: " << diff << ".";

} else if (absl::c_find(generic_info.frame_diffs, diff) ==

generic_info.frame_diffs.end()) {

generic_info.frame_diffs.push_back(diff);

}

}

if (buffer.updated)

state[buffer.id] = generic_info.frame_id;

}

}

}

}

// EncoderSink* sink_; see SetSink() below for where it is set

EncodedImageCallback::Result result = sink_->OnEncodedImage( // -> VideoSendStreamImpl::OnEncodedImage

image_copy, codec_info_copy ? codec_info_copy.get() : codec_specific_info,

fragmentation_copy ? fragmentation_copy.get() : fragmentation);

// We are only interested in propagating the meta-data about the image, not

// encoded data itself, to the post encode function. Since we cannot be sure

// the pointer will still be valid when run on the task queue, set it to null.

image_copy.set_buffer(nullptr, 0);

int temporal_index = 0;

if (codec_specific_info) {

if (codec_specific_info->codecType == kVideoCodecVP9) {

temporal_index = codec_specific_info->codecSpecific.VP9.temporal_idx;

} else if (codec_specific_info->codecType == kVideoCodecVP8) {

temporal_index = codec_specific_info->codecSpecific.VP8.temporalIdx;

}

}

if (temporal_index == kNoTemporalIdx) {

temporal_index = 0;

}

RunPostEncode(image_copy, rtc::TimeMicros(), temporal_index);

if (result.error == Result::OK) {

// In case of an internal encoder running on a separate thread, the

// decision to drop a frame might be a frame late and signaled via

// atomic flag. This is because we can't easily wait for the worker thread

// without risking deadlocks, eg during shutdown when the worker thread

// might be waiting for the internal encoder threads to stop.

if (pending_frame_drops_.load() > 0) {

int pending_drops = pending_frame_drops_.fetch_sub(1);

RTC_DCHECK_GT(pending_drops, 0);

result.drop_next_frame = true;

}

}

return result;

}
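The encoder_buffer_state_ loop in OnEncodedImage turns "which reference buffers does this frame read and write" into frame_diffs, the distances to the frames it depends on. The sketch below isolates that computation for one simulcast stream. `BufferUsage`, `ComputeFrameDiffs` and the buffer count of 8 are assumptions made for illustration; unlike the original, it starts from an empty diff list and silently skips non-positive diffs instead of logging them.

```cpp
#include <algorithm>
#include <array>
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <vector>

// Hypothetical reduced form of CodecBufferUsage.
struct BufferUsage {
  int id;           // index of the encoder reference buffer
  bool referenced;  // the frame reads from this buffer
  bool updated;     // the frame overwrites this buffer
};

constexpr size_t kMaxBuffers = 8;  // assumed buffer count for this sketch

// state[b] holds the id of the frame that last updated buffer b.
std::vector<int64_t> ComputeFrameDiffs(int64_t frame_id,
                                       const std::vector<BufferUsage>& usages,
                                       std::array<int64_t, kMaxBuffers>& state) {
  std::vector<int64_t> diffs;
  for (const BufferUsage& b : usages) {
    if (static_cast<size_t>(b.id) >= state.size()) break;  // unsupported id
    if (b.referenced) {
      const int64_t diff = frame_id - state[b.id];
      // Keep positive, not-yet-seen distances only.
      if (diff > 0 && std::find(diffs.begin(), diffs.end(), diff) == diffs.end())
        diffs.push_back(diff);
    }
    if (b.updated) state[b.id] = frame_id;
  }
  return diffs;
}
```

For example, if frame 2 wrote buffer 0 and frame 3 wrote buffer 1, a frame 4 that reads both buffers gets the diffs {2, 1}.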

// VideoSendStreamImpl is the member send_stream_ of webrtc::internal::VideoSendStream and is

// initialized in the VideoSendStream constructor, which was already covered in the code above

VideoSendStreamImpl::VideoSendStreamImpl

====>

// video_stream_encoder_ is created in webrtc::internal::VideoSendStream and then

// passed into the VideoSendStreamImpl constructor

video_stream_encoder_->SetSink(this, rotation_applied);

void VideoStreamEncoder::SetSink(EncoderSink* sink, bool rotation_applied) {

source_proxy_->SetWantsRotationApplied(rotation_applied);

encoder_queue_.PostTask([this, sink] {

RTC_DCHECK_RUN_ON(&encoder_queue_);

sink_ = sink;

});

}

EncodedImageCallback::Result VideoSendStreamImpl::OnEncodedImage(

const EncodedImage& encoded_image,

const CodecSpecificInfo* codec_specific_info,

const RTPFragmentationHeader* fragmentation) {

// Encoded is called on whatever thread the real encoder implementation run

// on. In the case of hardware encoders, there might be several encoders

// running in parallel on different threads.

// Indicate that there still is activity going on.

activity_ = true;

auto enable_padding_task = [this]() {

if (disable_padding_) {

RTC_DCHECK_RUN_ON(worker_queue_);

disable_padding_ = false;

// To ensure that padding bitrate is propagated to the bitrate allocator.

SignalEncoderActive();

}

};

if (!worker_queue_->IsCurrent()) {

worker_queue_->PostTask(enable_padding_task);

} else {

enable_padding_task();

}

EncodedImageCallback::Result result(EncodedImageCallback::Result::OK);

if (media_transport_) {

int64_t frame_id;

{

// TODO(nisse): Responsibility for allocation of frame ids should move to

// VideoStreamEncoder.

rtc::CritScope cs(&media_transport_id_lock_);

frame_id = media_transport_frame_id_++;

}

// TODO(nisse): Responsibility for reference meta data should be moved

// upstream, ideally close to the encoders, but probably VideoStreamEncoder

// will need to do some translation to produce reference info using frame

// ids.

std::vector<int64_t> referenced_frame_ids;

if (encoded_image._frameType != VideoFrameType::kVideoFrameKey) {

RTC_DCHECK_GT(frame_id, 0);

referenced_frame_ids.push_back(frame_id - 1);

}

media_transport_->SendVideoFrame(

config_->rtp.ssrcs[0], webrtc::MediaTransportEncodedVideoFrame(

frame_id, referenced_frame_ids,

config_->rtp.payload_type, encoded_image));

} else {

result = rtp_video_sender_->OnEncodedImage( // The actual send: RtpVideoSender::OnEncodedImage

encoded_image, codec_specific_info, fragmentation); /// rtp_video_sender_ is created in the VideoSendStreamImpl constructor

}

// Check if there's a throttled VideoBitrateAllocation that we should try

// sending.

rtc::WeakPtr<VideoSendStreamImpl> send_stream = weak_ptr_;

auto update_task = [send_stream]() {

if (send_stream) {

RTC_DCHECK_RUN_ON(send_stream->worker_queue_);

auto& context = send_stream->video_bitrate_allocation_context_;

if (context && context->throttled_allocation) {

send_stream->OnBitrateAllocationUpdated(*context->throttled_allocation);

}

}

};

if (!worker_queue_->IsCurrent()) {

worker_queue_->PostTask(update_task);

} else {

update_task();

}

return result;

}
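VideoSendStreamImpl::OnEncodedImage is called on whatever thread the encoder runs on, so both enable_padding_task and update_task use the same "run inline if already on worker_queue_, otherwise post" pattern. The sketch below shows that pattern with a hypothetical single-thread `SimpleTaskQueue` built on the standard library; it is not rtc::TaskQueue, and `RunOrPost` is an illustrative helper name.

```cpp
#include <cassert>
#include <condition_variable>
#include <deque>
#include <functional>
#include <mutex>
#include <thread>
#include <utility>

// Hypothetical task queue with one worker thread (stands in for worker_queue_).
class SimpleTaskQueue {
 public:
  SimpleTaskQueue() : thread_([this] { Run(); }) {}

  ~SimpleTaskQueue() {
    {
      std::lock_guard<std::mutex> lock(mutex_);
      stop_ = true;
    }
    cv_.notify_one();
    thread_.join();  // pending tasks are drained before Run() returns
  }

  bool IsCurrent() {
    std::lock_guard<std::mutex> lock(mutex_);
    return worker_id_ == std::this_thread::get_id();
  }

  void PostTask(std::function<void()> task) {
    {
      std::lock_guard<std::mutex> lock(mutex_);
      tasks_.push_back(std::move(task));
    }
    cv_.notify_one();
  }

  // Run inline when already on the queue, otherwise post.
  void RunOrPost(std::function<void()> task) {
    if (IsCurrent()) {
      task();
    } else {
      PostTask(std::move(task));
    }
  }

 private:
  void Run() {
    std::unique_lock<std::mutex> lock(mutex_);
    worker_id_ = std::this_thread::get_id();
    while (true) {
      cv_.wait(lock, [this] { return stop_ || !tasks_.empty(); });
      if (tasks_.empty()) return;  // stop requested and nothing left
      std::function<void()> task = std::move(tasks_.front());
      tasks_.pop_front();
      lock.unlock();  // never run a task while holding the lock
      task();
      lock.lock();
    }
  }

  std::mutex mutex_;
  std::condition_variable cv_;
  std::deque<std::function<void()>> tasks_;
  bool stop_ = false;
  std::thread::id worker_id_;
  std::thread thread_;  // declared last so the members above exist before Run()
};
```

Running inline when already on the queue avoids an extra hop, while posting from an encoder thread keeps every access to state such as disable_padding_ on the worker queue.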

/// Construction of VideoSendStreamImpl's member rtp_video_sender_

VideoSendStreamImpl::VideoSendStreamImpl

====>

rtp_video_sender_(transport_->CreateRtpVideoSender( /// RtpTransportControllerSend::CreateRtpVideoSender

suspended_ssrcs,

suspended_payload_states,

config_->rtp,

config_->rtcp_report_interval_ms,

config_->send_transport, // from the VideoSendStreamImpl constructor argument const VideoSendStream::Config* config

CreateObservers(call_stats,

&encoder_feedback_,

stats_proxy_,

send_delay_stats),

event_log,

std::move(fec_controller),

CreateFrameEncryptionConfig(config_)))

RtpVideoSenderInterface* RtpTransportControllerSend::CreateRtpVideoSender(

std::map<uint32_t, RtpState> suspended_ssrcs,

const std::map<uint32_t, RtpPayloadState>& states,

const RtpConfig& rtp_config,

int rtcp_report_interval_ms,

Transport* send_transport, //

const RtpSenderObservers& observers,

RtcEventLog* event_log,

std::unique_ptr<FecController> fec_controller,

const RtpSenderFrameEncryptionConfig& frame_encryption_config) {

video_rtp_senders_.push_back(std::make_unique<RtpVideoSender>(

clock_, suspended_ssrcs, states, rtp_config, rtcp_report_interval_ms,

send_transport, observers, // note the send_transport passed here

// TODO(holmer): Remove this circular dependency by injecting

// the parts of RtpTransportControllerSendInterface that are really used.

this, event_log, &retransmission_rate_limiter_, std::move(fec_controller),

frame_encryption_config.frame_encryptor,

frame_encryption_config.crypto_options));

return video_rtp_senders_.back().get();

}

EncodedImageCallback::Result RtpVideoSender::OnEncodedImage(

const EncodedImage& encoded_image,

const CodecSpecificInfo* codec_specific_info,

const RTPFragmentationHeader* fragmentation) {

fec_controller_->UpdateWithEncodedData(encoded_image.size(),

encoded_image._frameType);

rtc::CritScope lock(&crit_);

RTC_DCHECK(!rtp_streams_.empty());

if (!active_)

return Result(Result::ERROR_SEND_FAILED);

shared_frame_id_++;

size_t stream_index = 0;

if (codec_specific_info &&

(codec_specific_info->codecType == kVideoCodecVP8 ||

codec_specific_info->codecType == kVideoCodecH264 ||

codec_specific_info->codecType == kVideoCodecGeneric)) {

// Map spatial index to simulcast.

stream_index = encoded_image.SpatialIndex().value_or(0);

}

RTC_DCHECK_LT(stream_index, rtp_streams_.size());

uint32_t rtp_timestamp =

encoded_image.Timestamp() +

rtp_streams_[stream_index].rtp_rtcp->StartTimestamp();

// RTCPSender has it's own copy of the timestamp offset, added in

// RTCPSender::BuildSR, hence we must not add the in the offset for this call.

// TODO(nisse): Delete RTCPSender:timestamp_offset_, and see if we can confine

// knowledge of the offset to a single place.

if (!rtp_streams_[stream_index].rtp_rtcp->OnSendingRtpFrame(

encoded_image.Timestamp(), encoded_image.capture_time_ms_,

rtp_config_.payload_type,

encoded_image._frameType == VideoFrameType::kVideoFrameKey)) {

// The payload router could be active but this module isn't sending.

return Result(Result::ERROR_SEND_FAILED);

}

absl::optional<int64_t> expected_retransmission_time_ms;

if (encoded_image.RetransmissionAllowed()) {

expected_retransmission_time_ms =

rtp_streams_[stream_index].rtp_rtcp->ExpectedRetransmissionTimeMs();

}

///

bool send_result = rtp_streams_[stream_index].sender_video->SendVideo( // RTPSenderVideo::SendVideo

rtp_config_.payload_type, codec_type_, rtp_timestamp,

encoded_image.capture_time_ms_, encoded_image, fragmentation,

params_[stream_index].GetRtpVideoHeader(

encoded_image, codec_specific_info, shared_frame_id_),

expected_retransmission_time_ms);

if (frame_count_observer_) {

FrameCounts& counts = frame_counts_[stream_index];

if (encoded_image._frameType == VideoFrameType::kVideoFrameKey) {

++counts.key_frames;

} else if (encoded_image._frameType == VideoFrameType::kVideoFrameDelta) {

++counts.delta_frames;

} else {

RTC_DCHECK(encoded_image._frameType == VideoFrameType::kEmptyFrame);

}

frame_count_observer_->FrameCountUpdated(counts,

rtp_config_.ssrcs[stream_index]);

}

if (!send_result)

return Result(Result::ERROR_SEND_FAILED);

return Result(Result::OK, rtp_timestamp);

}
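RtpVideoSender::OnEncodedImage does two small computations before calling SendVideo: it maps the spatial index to a simulcast stream (only for VP8, H264 and generic codecs), and it adds the stream's start timestamp to the encoder timestamp. The sketch below restates both with hypothetical names (`Codec`, `StreamIndexFor`, `RtpTimestamp`); the point worth noting is that the addition is done in uint32_t, so it wraps modulo 2^32 as RTP timestamps are expected to.

```cpp
#include <cassert>
#include <cstddef>
#include <cstdint>

// Hypothetical codec enum; only the cases relevant to the mapping are listed.
enum class Codec { kVP8, kVP9, kH264, kGeneric };

// For VP8/H264/generic the spatial index carries the simulcast stream index;
// other codecs (e.g. VP9 with real spatial layers) always use stream 0.
size_t StreamIndexFor(Codec codec, int spatial_index) {
  if (codec == Codec::kVP8 || codec == Codec::kH264 || codec == Codec::kGeneric)
    return static_cast<size_t>(spatial_index);
  return 0;
}

// Encoder timestamp plus the per-stream start offset; unsigned 32-bit
// arithmetic wraps around instead of overflowing.
uint32_t RtpTimestamp(uint32_t encoded_timestamp, uint32_t start_timestamp) {
  return encoded_timestamp + start_timestamp;
}
```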

/// Creation of RtpVideoSender's member const std::vector<RtpStreamSender> rtp_streams_;

/// Note: RtpVideoSender and RTPSenderVideo are two different classes

RtpVideoSender::RtpVideoSender

====>

rtp_streams_(CreateRtpStreamSenders(clock,

rtp_config,

observers,

rtcp_report_interval_ms,

send_transport,

transport->GetBandwidthObserver(),

transport,

flexfec_sender_.get(),

event_log,

retransmission_limiter,

this,

frame_encryptor,

crypto_options)),

// Iterate over the rtp_streams_ created above and add each stream's RTP module to the PacketRouter

for (const RtpStreamSender& stream : rtp_streams_) {

constexpr bool remb_candidate = true;

transport->packet_router()->AddSendRtpModule(stream.rtp_rtcp.get(), // PacketRouter::AddSendRtpModule

remb_candidate);

}

void PacketRouter::AddSendRtpModule(RtpRtcp* rtp_module, bool remb_candidate) {

rtc::CritScope cs(&modules_crit_);

RTC_DCHECK(std::find(rtp_send_modules_.begin(), rtp_send_modules_.end(),

rtp_module) == rtp_send_modules_.end());

// Put modules which can use regular payload packets (over rtx) instead of

// padding first as it's less of a waste

if (rtp_module->SupportsRtxPayloadPadding()) {

rtp_send_modules_.push_front(rtp_module);

} else {

rtp_send_modules_.push_back(rtp_module); // iterated over later in PacketRouter::SendPacket

}

if (remb_candidate) {

AddRembModuleCandidate(rtp_module, /* media_sender = */ true);

}

}
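The ordering rule in AddSendRtpModule is simple but matters later: modules that can send real payload over RTX instead of padding are put at the front, because, per the source comment, that is less wasteful than pure padding. The sketch below reproduces only that rule; `FakeRtpModule` and `AddSendModule` are hypothetical, and a std::deque stands in for the real rtp_send_modules_ container.

```cpp
#include <algorithm>
#include <cassert>
#include <deque>
#include <string>

// Hypothetical stand-in for an RTP module; only the property that decides
// its position in the list is modeled.
struct FakeRtpModule {
  std::string name;
  bool supports_rtx_payload_padding;
};

// Same rule as PacketRouter::AddSendRtpModule: a module may be added only
// once; RTX-payload-padding capable modules go first, the rest are appended.
void AddSendModule(std::deque<FakeRtpModule*>& modules, FakeRtpModule* m) {
  assert(std::find(modules.begin(), modules.end(), m) == modules.end());
  if (m->supports_rtx_payload_padding)
    modules.push_front(m);
  else
    modules.push_back(m);
}
```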

std::vector<RtpStreamSender> CreateRtpStreamSenders(

Clock* clock,

const RtpConfig& rtp_config,

const RtpSenderObservers& observers,

int rtcp_report_interval_ms,

Transport* send_transport, //

RtcpBandwidthObserver* bandwidth_callback,

RtpTransportControllerSendInterface* transport,

FlexfecSender* flexfec_sender,

RtcEventLog* event_log,

RateLimiter* retransmission_rate_limiter,

OverheadObserver* overhead_observer,

FrameEncryptorInterface* frame_encryptor,

const CryptoOptions& crypto_options) {

RTC_DCHECK_GT(rtp_config.ssrcs.size(), 0);

RtpRtcp::Configuration configuration;

configuration.clock = clock;

configuration.audio = false;

configuration.receiver_only = false;

configuration.outgoing_transport = send_transport; //

configuration.intra_frame_callback = observers.intra_frame_callback;

configuration.rtcp_loss_notification_observer =

observers.rtcp_loss_notification_observer;

configuration.bandwidth_callback = bandwidth_callback;

configuration.network_state_estimate_observer =

transport->network_state_estimate_observer();

configuration.transport_feedback_callback =

transport->transport_feedback_observer();

configuration.rtt_stats = observers.rtcp_rtt_stats;

configuration.rtcp_packet_type_counter_observer =

observers.rtcp_type_observer;

configuration.paced_sender = transport->packet_sender();

configuration.send_bitrate_observer = observers.bitrate_observer;

configuration.send_side_delay_observer = observers.send_delay_observer;

configuration.send_packet_observer = observers.send_packet_observer;

configuration.event_log = event_log;

configuration.retransmission_rate_limiter = retransmission_rate_limiter;

configuration.overhead_observer = overhead_observer;

configuration.rtp_stats_callback = observers.rtp_stats;

configuration.frame_encryptor = frame_encryptor;

configuration.require_frame_encryption =

crypto_options.sframe.require_frame_encryption;

configuration.extmap_allow_mixed = rtp_config.extmap_allow_mixed;

configuration.rtcp_report_interval_ms = rtcp_report_interval_ms;

std::vector<RtpStreamSender> rtp_streams;

const std::vector<uint32_t>& flexfec_protected_ssrcs =

rtp_config.flexfec.protected_media_ssrcs;

RTC_DCHECK(rtp_config.rtx.ssrcs.empty() ||

rtp_config.rtx.ssrcs.size() == rtp_config.rtx.ssrcs.size());

for (size_t i = 0; i < rtp_config.ssrcs.size(); ++i) {

configuration.local_media_ssrc = rtp_config.ssrcs[i];

bool enable_flexfec = flexfec_sender != nullptr &&

std::find(flexfec_protected_ssrcs.begin(),

flexfec_protected_ssrcs.end(),

configuration.local_media_ssrc) !=

flexfec_protected_ssrcs.end();

configuration.flexfec_sender = enable_flexfec ? flexfec_sender : nullptr;

auto playout_delay_oracle = std::make_unique<PlayoutDelayOracle>();

configuration.ack_observer = playout_delay_oracle.get();

if (rtp_config.rtx.ssrcs.size() > i) {

configuration.rtx_send_ssrc = rtp_config.rtx.ssrcs[i];

}

auto rtp_rtcp = RtpRtcp::Create(configuration); // i.e. return std::make_unique<ModuleRtpRtcpImpl>(configuration)

rtp_rtcp->SetSendingStatus(false);

rtp_rtcp->SetSendingMediaStatus(false);

rtp_rtcp->SetRTCPStatus(RtcpMode::kCompound);

// Set NACK.

rtp_rtcp->SetStorePacketsStatus(true, kMinSendSidePacketHistorySize);

FieldTrialBasedConfig field_trial_config;

RTPSenderVideo::Config video_config;

video_config.clock = configuration.clock;

video_config.rtp_sender = rtp_rtcp->RtpSender();

video_config.flexfec_sender = configuration.flexfec_sender;

video_config.playout_delay_oracle = playout_delay_oracle.get();

video_config.frame_encryptor = frame_encryptor;

video_config.require_frame_encryption =

crypto_options.sframe.require_frame_encryption;

video_config.need_rtp_packet_infos = rtp_config.lntf.enabled;

video_config.enable_retransmit_all_layers = false;

video_config.field_trials = &field_trial_config;

const bool should_disable_red_and_ulpfec =

ShouldDisableRedAndUlpfec(enable_flexfec, rtp_config);

if (rtp_config.ulpfec.red_payload_type != -1 &&

!should_disable_red_and_ulpfec) {

video_config.red_payload_type = rtp_config.ulpfec.red_payload_type;

}

if (rtp_config.ulpfec.ulpfec_payload_type != -1 &&

!should_disable_red_and_ulpfec) {

video_config.ulpfec_payload_type = rtp_config.ulpfec.ulpfec_payload_type;

}

auto sender_video = std::make_unique<RTPSenderVideo>(video_config); // Note: RtpVideoSender and RTPSenderVideo are two different classes

rtp_streams.emplace_back(std::move(playout_delay_oracle),

std::move(rtp_rtcp), std::move(sender_video));

}

return rtp_streams;

}
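The per-SSRC loop above makes two decisions for every media SSRC: FlexFEC is enabled only if a FlexFEC sender exists and the SSRC appears in `flexfec.protected_media_ssrcs`, and an RTX SSRC is attached only if one exists at the same index in `rtx.ssrcs`. The following is a minimal standalone sketch of just that decision logic. `StreamPlan` and `PlanStreams` are hypothetical names, not WebRTC APIs, and the rest of the loop (creating `RtpRtcp`, filling `RTPSenderVideo::Config`) is deliberately left out.

```cpp
#include <algorithm>
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <optional>
#include <vector>

// Hypothetical summary of what the loop configures for one media SSRC.
struct StreamPlan {
  uint32_t media_ssrc = 0;
  std::optional<uint32_t> rtx_ssrc;  // set only if an RTX SSRC exists at the same index
  bool flexfec_enabled = false;
};

// Hypothetical helper mirroring the per-SSRC decisions in the loop above.
std::vector<StreamPlan> PlanStreams(
    const std::vector<uint32_t>& ssrcs,
    const std::vector<uint32_t>& rtx_ssrcs,
    const std::vector<uint32_t>& flexfec_protected_ssrcs,
    bool has_flexfec_sender) {
  // Either no RTX at all, or exactly one RTX SSRC per media SSRC.
  assert(rtx_ssrcs.empty() || rtx_ssrcs.size() == ssrcs.size());
  std::vector<StreamPlan> plans;
  for (size_t i = 0; i < ssrcs.size(); ++i) {
    StreamPlan plan;
    plan.media_ssrc = ssrcs[i];
    // FlexFEC only if a FlexFEC sender exists AND this SSRC is protected by it.
    plan.flexfec_enabled =
        has_flexfec_sender &&
        std::find(flexfec_protected_ssrcs.begin(),
                  flexfec_protected_ssrcs.end(),
                  ssrcs[i]) != flexfec_protected_ssrcs.end();
    // RTX SSRCs are paired with media SSRCs by index.
    if (rtx_ssrcs.size() > i) {
      plan.rtx_ssrc = rtx_ssrcs[i];
    }
    plans.push_back(plan);
  }
  return plans;
}
```

With simulcast, for example, three media SSRCs and three RTX SSRCs yield three stream senders, each with its own RTX SSRC, while FlexFEC is switched on only for the SSRCs it actually protects.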

bool RTPSenderVideo::SendVideo(

int payload_type,

absl::optional<VideoCodecType> codec_type,

uint32_t rtp_timestamp,

int64_t capture_time_ms,

rtc::ArrayView<const uint8_t> payload,

const RTPFragmentationHeader* fragmentation,

RTPVideoHeader video_header,

absl::optional<int64_t> expected_retransmission_time_ms)

====>

// ... (FEC, RED, etc. elided)

LogAndSendToNetwork(std::move(rtp_packets), unpacketized_payload_size);

void RTPSenderVideo::LogAndSendToNetwork(

std::vector<std::unique_ptr<RtpPacketToSend>> packets,

size_t unpacketized_payload_size) {

int64_t now_ms = clock_->TimeInMilliseconds();

#if BWE_TEST_LOGGING_COMPILE_TIME_ENABLE

for (const auto& packet : packets) {

const uint32_t ssrc = packet->Ssrc();

BWE_TEST_LOGGING_PLOT_WITH_SSRC(1, "VideoTotBitrate_kbps", now_ms,

rtp_sender_->ActualSendBitrateKbit(), ssrc);

BWE_TEST_LOGGING_PLOT_WITH_SSRC(1, "VideoFecBitrate_kbps", now_ms,

FecOverheadRate() / 1000, ssrc);

BWE_TEST_LOGGING_PLOT_WITH_SSRC(1, "VideoNackBitrate_kbps", now_ms,

rtp_sender_->NackOverheadRate() / 1000,

ssrc);

}

#endif

{

rtc::CritScope cs(&stats_crit_);

size_t packetized_payload_size = 0;

for (const auto& packet : packets) {

switch (*packet->packet_type()) {

case RtpPacketToSend::Type::kVideo:

video_bitrate_.Update(packet->size(), now_ms);

packetized_payload_size += packet->payload_size();

break;

case RtpPacketToSend::Type::kForwardErrorCorrection:

fec_bitrate_.Update(packet->size(), clock_->TimeInMilliseconds());

break;

default:

continue;

}

}

RTC_DCHECK_GE(packetized_payload_size, unpacketized_payload_size);

packetization_overhead_bitrate_.Update(

packetized_payload_size - unpacketized_payload_size,

clock_->TimeInMilliseconds());

}

// TODO(sprang): Replace with bulk send method.

for (auto& packet : packets) { // RTPSender* const rtp_sender_; is passed in through the Config argument of the RTPSenderVideo constructor

rtp_sender_->SendToNetwork(std::move(packet)); /// RTPSender::SendToNetwork

}

}
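Before handing packets to `RTPSender`, `LogAndSendToNetwork` updates three statistics: video bitrate (whole-packet size of `kVideo` packets), FEC bitrate (`kForwardErrorCorrection` packets), and packetization overhead, i.e. the sum of the video packets' payload sizes minus the size of the encoded frame before packetization. The sketch below reproduces only that accounting. `SimplePacket`, `SendStats` and `AccountPackets` are hypothetical, and plain byte totals stand in for the rate trackers `video_bitrate_`, `fec_bitrate_` and `packetization_overhead_bitrate_`.

```cpp
#include <cassert>
#include <cstddef>
#include <vector>

// Hypothetical, simplified packet types (upstream: RtpPacketToSend::Type).
enum class PacketType { kVideo, kForwardErrorCorrection, kRetransmission };

struct SimplePacket {
  PacketType type;
  size_t size;          // whole RTP packet: header + payload
  size_t payload_size;  // payload bytes only
};

// Plain byte totals standing in for the upstream rate trackers.
struct SendStats {
  size_t video_bytes = 0;
  size_t fec_bytes = 0;
  size_t packetization_overhead_bytes = 0;
};

SendStats AccountPackets(const std::vector<SimplePacket>& packets,
                         size_t unpacketized_payload_size) {
  SendStats stats;
  size_t packetized_payload_size = 0;
  for (const auto& packet : packets) {
    switch (packet.type) {
      case PacketType::kVideo:
        stats.video_bytes += packet.size;
        packetized_payload_size += packet.payload_size;
        break;
      case PacketType::kForwardErrorCorrection:
        stats.fec_bytes += packet.size;
        break;
      default:
        continue;  // other packet types are not counted here
    }
  }
  // Packetization only adds bytes (e.g. codec-specific payload headers).
  assert(packetized_payload_size >= unpacketized_payload_size);
  stats.packetization_overhead_bytes =
      packetized_payload_size - unpacketized_payload_size;
  return stats;
}
```

For instance, a 1790-byte encoded frame split into two video packets with 1200 and 600 payload bytes gives 10 bytes of packetization overhead.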

bool RTPSender::SendToNetwork(std::unique_ptr<RtpPacketToSend> packet) {

RTC_DCHECK(packet);

int64_t now_ms = clock_->TimeInMilliseconds();

auto packet_type = packet->packet_type();

RTC_CHECK(packet_type) << "Packet type must be set before sending.";

if (packet->capture_time_ms() <= 0) {

packet->set_capture_time_ms(now_ms);

}

std::vector<std::unique_ptr<RtpPacketToSend>> packets;

packets.emplace_back(std::move(packet));

paced_sender_->EnqueuePackets(std::move(packets)); // PacedSender::EnqueuePackets: the packets are queued here and sent later on a separate thread

return true;

}
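Despite its name, `RTPSender::SendToNetwork` performs no network I/O: it stamps a capture time if none was set, wraps the single packet in a vector, and transfers ownership to the pacer through `EnqueuePackets`. A minimal sketch of that hand-off follows; `Packet` and `FakePacer` are hypothetical stand-ins for `RtpPacketToSend` and `PacedSender`, reduced to the fields this step touches.

```cpp
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <deque>
#include <memory>
#include <utility>
#include <vector>

// Hypothetical packet with only the field SendToNetwork touches.
struct Packet {
  int64_t capture_time_ms = 0;
};

// Hypothetical stand-in for PacedSender: it only stores packets.
class FakePacer {
 public:
  void EnqueuePackets(std::vector<std::unique_ptr<Packet>> packets) {
    for (auto& packet : packets) queue_.push_back(std::move(packet));
  }
  size_t size() const { return queue_.size(); }
  const Packet& at(size_t i) const { return *queue_[i]; }

 private:
  std::deque<std::unique_ptr<Packet>> queue_;
};

// Mirrors the shape of RTPSender::SendToNetwork: no I/O, just a hand-off.
bool SendToNetwork(std::unique_ptr<Packet> packet, int64_t now_ms,
                   FakePacer* pacer) {
  assert(packet);
  if (packet->capture_time_ms <= 0) {
    packet->capture_time_ms = now_ms;  // stamp packets that lack a capture time
  }
  std::vector<std::unique_ptr<Packet>> packets;
  packets.emplace_back(std::move(packet));
  pacer->EnqueuePackets(std::move(packets));  // ownership moves to the pacer
  return true;  // "sent" here only means "queued"
}
```

Passing `std::unique_ptr` by value makes the ownership transfer explicit: once the call returns, the caller can no longer touch the packet, which is what allows the pacer thread to use it later without further synchronization on the packet itself.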

// The actual sending happens on a separate thread

void PacedSender::Process() {

rtc::CritScope cs(&critsect_);

pacing_controller_.ProcessPackets();

}
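The enqueue side (the encoder path ending in `EnqueuePackets`) and the send side (`PacedSender::Process()` on the pacer thread) share one queue, and both run under the pacer's lock `critsect_`. The sketch below reproduces that producer/consumer shape with `std::mutex`. `MiniPacer` is hypothetical, and unlike the real `PacingController::ProcessPackets()` it ignores budgets, probing and padding and simply drains the queue.

```cpp
#include <cassert>
#include <deque>
#include <mutex>
#include <vector>

// Hypothetical miniature pacer: one lock guards the queue, the way
// critsect_ guards PacedSender's state on both the enqueue and send side.
class MiniPacer {
 public:
  // Producer side (encoder / RTPSender path).
  void Enqueue(int packet_id) {
    std::lock_guard<std::mutex> lock(mutex_);
    queue_.push_back(packet_id);
  }

  // Consumer side: meant to be called periodically from the pacer thread.
  // It drains everything; the real pacer sends only what the budget allows.
  void Process() {
    std::lock_guard<std::mutex> lock(mutex_);
    while (!queue_.empty()) {
      sent_.push_back(queue_.front());
      queue_.pop_front();
    }
  }

  std::vector<int> Sent() {
    std::lock_guard<std::mutex> lock(mutex_);
    return sent_;
  }

 private:
  std::mutex mutex_;
  std::deque<int> queue_;
  std::vector<int> sent_;
};
```

In a real program `Enqueue` would be called from the encoding thread and `Process` from a dedicated thread that wakes up every few milliseconds; the lock is what makes those two threads safe to interleave.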

void PacingController::ProcessPackets()

===>

while (!paused_) {

if (small_first_probe_packet_ && first_packet_in_probe) {

// If first packet in probe, insert a small padding packet so we have a

// more reliable start window for the rate estimation.

auto padding = packet_sender_->GeneratePadding(DataSize::bytes(1));

// If no RTP modules sending media are registered, we may not get a

// padding packet back.

if (!padding.empty()) {

// Insert with high priority so larger media packets don't preempt it.

EnqueuePacketInternal(std::move(padding[0]), kFirstPriority);

// We should never get more than one padding packet with a requested

// size of 1 byte.

RTC_DCHECK_EQ(padding.size(), 1u);

}

first_packet_in_probe = false;

}

auto* packet = GetPendingPacket(pacing_info); /// Take a packet from the queue

if (packet == nullptr) {

// No packet available to send, check if we should send padding.

DataSize padding_to_add = PaddingToAdd(recommended_probe_size, data_sent);

if (padding_to_add > DataSize::Zero()) {

std::vector<std::unique_ptr<RtpPacketToSend>> padding_packets =

packet_sender_->GeneratePadding(padding_to_add);

if (padding_packets.empty()) {

// No padding packets were generated, quit send loop.

break;

}

for (auto& packet : padding_packets) {

EnqueuePacket(std::move(packet));

}

// Continue loop to send the padding that was just added.

continue;

}

// Can't fetch new packet and no padding to send, exit send loop.

break;

}

std::unique_ptr<RtpPacketToSend> rtp_packet = packet->ReleasePacket();

RTC_DCHECK(rtp_packet);

packet_sender_->SendRtpPacket(std::move(rtp_packet), pacing_info); // This is where the data is actually sent: PacedSender::SendRtpPacket

data_sent += packet->size();

// Send succeeded, remove it from the queue.

OnPacketSent(packet);

if (recommended_probe_size && data_sent > *recommended_probe_size)

break;

}
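Stripped of probe clusters, priorities and the budget checks inside `GetPendingPacket`, the loop above follows a simple pattern: send queued packets; when the queue runs dry, ask whether padding is still needed, and if so enqueue it and loop again to send it, otherwise exit; and stop early once a probe has sent more than `recommended_probe_size`. The sketch below models only that control flow. `QueuedPacket`, `LoopResult` and `ProcessPacketsSketch` are hypothetical, and the rule that padding is requested only while a probe is short of its target is a simplifying assumption (upstream `PaddingToAdd()` also takes the padding budget into account).

```cpp
#include <cassert>
#include <cstddef>
#include <deque>
#include <optional>

// Hypothetical queued packet: only its size and whether it is padding.
struct QueuedPacket {
  size_t size;
  bool is_padding;
};

struct LoopResult {
  size_t media_bytes = 0;
  size_t padding_bytes = 0;
};

// Simplified model of the PacingController::ProcessPackets() control flow.
LoopResult ProcessPacketsSketch(std::deque<QueuedPacket>& queue,
                                std::optional<size_t> recommended_probe_size,
                                size_t padding_packet_size) {
  LoopResult result;
  size_t data_sent = 0;
  while (true) {
    if (queue.empty()) {
      // Assumed PaddingToAdd(): pad only while a probe is below its target.
      size_t padding_to_add = 0;
      if (recommended_probe_size && data_sent < *recommended_probe_size) {
        padding_to_add = *recommended_probe_size - data_sent;
      }
      if (padding_to_add == 0 || padding_packet_size == 0) {
        break;  // nothing queued and no padding needed: exit send loop
      }
      queue.push_back({padding_packet_size, true});
      continue;  // loop again to send the padding just added
    }
    QueuedPacket packet = queue.front();
    queue.pop_front();
    // "Send" the packet by accounting for its bytes.
    if (packet.is_padding) {
      result.padding_bytes += packet.size;
    } else {
      result.media_bytes += packet.size;
    }
    data_sent += packet.size;
    // Probe satisfied: stop even if more packets are still queued.
    if (recommended_probe_size && data_sent > *recommended_probe_size) {
      break;
    }
  }
  return result;
}
```

For example, with one 1000-byte media packet queued, a 1500-byte probe target and 200-byte padding packets, the loop sends the media packet and then three padding packets, stopping at 1600 bytes once the target is exceeded.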

void PacedSender::SendRtpPacket(std::unique_ptr<RtpPacketToSend> packet,

const PacedPacketInfo& cluster_info) {

critsect_.Leave();

packet_router_->SendPacket(std::move(packet), cluster_info); // PacketRouter::SendPacket

critsect_.Enter();

}
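`PacedSender::SendRtpPacket` is called from inside `Process()` while `critsect_` is held, yet it releases the lock around `packet_router_->SendPacket(...)` and re-acquires it afterwards. Presumably this keeps the pacer lock from being held while the router and transport do their work, so other threads can keep enqueuing and the router's own lock (`modules_crit_`) is never taken underneath the pacer's. The sketch below shows only the unlock / call out / relock pattern. `LockReleasingPacer` is hypothetical and uses a non-recursive `std::mutex`, so the test's router callback, which re-enters the pacer's lock, would deadlock if the lock were still held.

```cpp
#include <cassert>
#include <functional>
#include <mutex>
#include <utility>

// Hypothetical pacer showing the unlock / call out / relock pattern of
// PacedSender::SendRtpPacket (critsect_.Leave(); ...; critsect_.Enter();).
class LockReleasingPacer {
 public:
  explicit LockReleasingPacer(std::function<void(int)> router)
      : router_(std::move(router)) {}

  // Entry point, analogous to Process(): takes the lock, then sends.
  void Process(int packet) {
    std::unique_lock<std::mutex> lock(mutex_);
    ++packets_sent_;
    SendRtpPacket(lock, packet);
    // The lock is held again here, as it is after critsect_.Enter().
  }

  // May be called back by the "router"; needs the same lock.
  int PacketsSent() {
    std::lock_guard<std::mutex> guard(mutex_);
    return packets_sent_;
  }

 private:
  void SendRtpPacket(std::unique_lock<std::mutex>& lock, int packet) {
    lock.unlock();    // like critsect_.Leave()
    router_(packet);  // like packet_router_->SendPacket(...)
    lock.lock();      // like critsect_.Enter()
  }

  std::mutex mutex_;
  int packets_sent_ = 0;
  std::function<void(int)> router_;
};
```

Passing the `std::unique_lock` into the helper makes it explicit that the caller owns the lock and that the helper temporarily gives it up, which is the same contract the raw `Leave()`/`Enter()` pair expresses implicitly.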

void PacketRouter::SendPacket(std::unique_ptr<RtpPacketToSend> packet,

const PacedPacketInfo& cluster_info) {

rtc::CritScope cs(&modules_crit_);

// With the new pacer code path, transport sequence numbers are only set here,

// on the pacer thread. Therefore we don't need atomics/synchronization.

if (packet->IsExtensionReserved()) {

packet->SetExtension(AllocateSequenceNumber());

}

auto it = rtp_module_cache_map_.find(packet->Ssrc());

if (it != rtp_module_cache_map_.end()) {

if (TrySendPacket(packet.get(), cluster_info, it->second)) {

return;

}

// Entry is stale, remove it.

rtp_module_cache_map_.erase(it);

}

// Slow path, find the correct send module.

for (auto* rtp_module : rtp_send_modules_) {