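- Large Language Models and Augmented Reality: AI-Native Applications in the Era of Spatial Computing

Agentic AI: Artificial Intelligence and Big Data

CS, language models, AR, spatial computing, AI

Large Language Models and Augmented Reality: AI-Native Applications in the Era of Spatial Computing. Keywords: large language models (LLM), augmented reality (AR), spatial computing, AI-native applications, multimodal interaction, embodied intelligence, virtual-physical fusion. Abstract: When an "AI brain that can hold a conversation" (a large language model) meets "magic glasses that overlay a virtual world" (augmented reality), a revolution of the spatial computing era is under way. This article breaks down the "powerful alliance" of LLMs and AR step by step, from basic concepts to technical principles and from real cases to future trends, in the style of "telling a story to a primary-school student", to explain clearly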

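- Embodied Large Language Models: LLM-based Agents in Practice

apollowin123

artificial intelligence, language models, deep learning

1. Overview. 1.1 What is an agent? For a long time, researchers have pursued artificial general intelligence (AGI) that matches or even surpasses human-level ability. As early as the 1950s, Alan Turing extended the concept of "intelligence" to artificial entities and proposed the famous Turing test. Such artificial intelligence entities are usually called agents. The concept of an "agent" originated in philosophy, where it describes an entity that has desires, beliefs, intentions, and the ability to take actions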

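- A Survey of Large Language Models

Paper: A Survey of Large Language Models. Contents: Paper: A Survey of Large Language Models; Overview of the survey; Key techniques of LLMs: scaling laws, pre-training and fine-tuning, alignment tuning, external tool integration; Technical evolution of the GPT series; Model checkpoints and APIs; Pre-training: data preparation and processing (data preparation, data preprocessing, data scheduling); Architecture: Emergent Archit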

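- Curriculum Learning and Data Curricula for Large Language Models (LLMs): A Guide from Principles to Practice

At the crest of the AI wave, we keep marveling at the capabilities of top large language models (LLMs) such as GPT-4, Llama 3.1, and Qwen2.5. They seem to know everything: they write poetry, write code, and carry out complex logical reasoning. A natural question follows: how did they "learn" all this? Most people would answer: "by being fed massive amounts of data." That answer is only half right. If you think you can simply take all the data you can find on the internet (say, 15 trillion tokens), shuffle it randomly, and feed it to the model all mixed together,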

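- Shadow of the Giant: The Challenges and Ethical Abyss of Large Language Models

田园Coder

artificial intelligence, popular science

When a behemoth like GPT-4 can converse fluently, write poetry, write code, analyze images, and even rival human experts on some tests, large language models (LLMs) seem to have become all-powerful "intelligent oracles". Yet beneath these dazzling achievements lie complex and serious challenges and ethical dilemmas, like a deep shadow at the feet of a brilliantly shining technological giant. These challenges are not accidental side effects of technical progress; they are rooted in how LLMs fundamentally work, where their training data comes from, and how complexly they interact with society. They warn us that, in the pursuit of capability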

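- AI LLM Architecture and Principles: An In-Depth Look at Pre-trained Models

陈乔布斯

AI, artificial intelligence, large models, AI architecture, machine learning, deep learning, large models, Python, AI

1. Introduction. Large language models (LLMs) are evolving rapidly, and pre-trained models, the core technology behind LLMs, lay the foundation for their strong performance. By learning from large-scale unlabeled data, pre-trained models capture general patterns and semantic information in language, which lets them perform well across a wide range of natural language processing tasks. This article examines the methodology and techniques of pre-trained models within AI LLM architecture and principles, combining diagrams, code walkthroughs, and real cases to give readers a comprehensive and accessible picture of pre-trained models. 2. The basics of pre-trained models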

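- The Ultimate Guide to Mainstream AI Large Models in 2025: Side-by-Side Comparison + Hands-On Evaluation + Official Registration Tutorial

AI新视界

A complete guide to AI tools: from beginner to expert, unlocking productivity; artificial intelligence

"The Ultimate Guide to Mainstream AI Large Models in 2025: Side-by-Side Comparison + Hands-On Evaluation + Official Registration Tutorial". With AI technology advancing rapidly, large language models (LLMs) have become the core engine of digital transformation. As a senior AI technology expert on CSDN, in this article I analyze the technical characteristics, application scenarios, and performance differences of the mainstream large models of 2025, and provide detailed guides to official registration and usage to help you quickly master these powerful AI tools. 1. Overview of mainstream large models in 2025. 1.1 The current state of large-model technology. In 2024-2025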

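- [Paper Reading] Artificial Intelligence | Understanding Meta-Fair: A New Automated Testing Method to Rid LLMs of Bias

张较瘦_

cutting-edge technology, paper reading, artificial intelligence

Understanding Meta-Fair: A New Automated Testing Method to Rid LLMs of Bias. Paper title: Meta-Fair: AI-Assisted Fairness Testing of Large Language Models (arXiv:2507.02533). Authors: Miguel Romero-Arjona, José A. Parejo, Jua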

- FastAPI WebSocket: Why Is Your Bidirectional Communication Channel So Smooth?

url: /posts/0faebb0f6c2b1bde4ba75869f4f67b76/
title: How to Master WebSocket in FastAPI and Make Real-Time Communication Hassle-Free?
date: 2025-07-06T20:11:20+08:00
lastmod: 2025-07-06T20:11:20+08:00
author: cmdragon
summary: FastAPI's WebSocket routes, via @app.webso

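- Putting Open-Source Models into Production: OpenAI Agents SDK, Integrating Qwen3-8B, Exploring Creative Uses of output_guardrail (Part 6)

开源技术探险家

open-source models: real-world deployment, open source, python, ai, artificial intelligence

1. Preface. As AI technology develops rapidly, large language models (LLMs) are used ever more widely across industries. However, whether model-generated content is safe, compliant, and in line with user expectations has become an issue that developers and enterprises cannot ignore. Output Guardrail was created to address this. It is a key safety mechanism that reviews and filters content after the model has generated its result, ensuring that the output stays within ethical, legal, and business norms. Detecting inappropriate content not only improves the trustworthiness of AI systems but also helps in building more robust and responsible AI applications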

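- What Problems Does LangGraph Solve? What Methods Does It Use to Solve Them? Which Scenarios Suit LangGraph? What Are Its Limitations?

杰瑞学AI

AI/AGI, NLP/LLMs, langchain, artificial intelligence, natural language processing, deep learning, neural networks

Problems LangGraph aims to solve. LangGraph is an advanced library in the LangChain ecosystem that focuses on the challenges of building complex, stateful, multi-step LLM applications. It extends LangChain's chain and agent concepts and targets the following problems in particular. Multi-step decisions and cyclic workflows: traditional chains are usually linear or only simply branched, which makes it hard to handle complex decision paths, conditional jumps, and tasks that must loop iteratively to reach a final result. State management: in complex, multi-turn LLM applications, the application's state must be maintained and managed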

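- Using systemd to Start a Web Application Deployed on a Server

不是吧这都有重名

problems encountered, server, frontend, operations

0. Background
System environment: Ubuntu 22.04
Web application: the frontend and backend are separated; the frontend uses React and the backend uses FastAPI.
1. Configuration
1.1 Frontend configuration
Run it in development mode (start command: npm run dev). Create the systemd service file with sudo nano /etc/systemd/system/frontend.service and give it the following content:
[Unit]
Description=React Frontend Dev Server
After=ne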

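- 31.6k Stars, Aider: A Terminal Tool That Supercharges Your Coding

Aider is an AI pair-programming tool that runs in the terminal. It works seamlessly with large language models (LLMs) and edits code directly in your local Git repository. Whether you are starting a new project or improving an existing codebase, Aider can be your most capable assistant. It supports top models such as Claude 3.5 Sonnet, DeepSeek V3, and GPT-4o, can connect to almost any LLM, and makes programming much more productive. Stars: 35,188. Forks: 3,230. Main features: Git operations: Aider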

- vLLM Inference in Practice

try2find

java, frontend, server

1. vLLM inference demo

from vllm import LLM, SamplingParams

# Define the generation parameters
sampling_params = SamplingParams(
    temperature=0.7,
    top_p=0.9,
    max_tokens=100,
)

# Load the DeepSeek model (using deepseek-llm-7b as an example)
# model_name="deepseek-ai/deepseek-llm-7b"

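- The Hottest Agent Direction Right Now: Building A2A Quickly (Part 2): AutoGen Model Integration Guide, Full-Scenario LLM Solutions from OpenAI to Local Deployment

Introduction: breaking down model barriers to build flexible AI applications. In AI application development, the choice of large language model (LLM) often sets the limits of what a system can do. Through a standardized model-client protocol, AutoGen provides unified access to LLMs from many sources, such as OpenAI, Azure OpenAI, and local models, so developers can switch model services freely as their needs change. This article analyzes AutoGen's model integration framework in depth, from cloud services to local deployment, to help you build elastic, scalable AI agent systems. 1. Core architecture of the model client: a unified interface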

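- Integrating Context Engineering Techniques into the Dify Platform

由数入道

artificial intelligence, databases, big data, artificial intelligence, software engineering, dify

1. Context construction and prompting strategies to improve LLM question-answering accuracy. In open-domain question answering, large language models often suffer from hallucination and outdated knowledge. To improve answer accuracy, the key to context engineering is to inject relevant background knowledge and guidance into the prompt. Specific strategies include the following. Retrieval-augmented generation (RAG): retrieving relevant content from a knowledge base and including it in the prompt can significantly improve the accuracy and credibility of answers. Dify provides a knowledge retrieval node that supports storing external knowledge in a vector database and injects the retrieval results into the LLM prompt through context variables. For example, in a knowledge-base Q&A application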

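- Building a Local LLM Chatbot in Go: The Full Pipeline from Inference to Web UI

雷羿 LexChien

Go, golang, bots, frontend

Continuing the Go-LLM-CPP project, we keep extending the frontend chat room. 1. Project directory structure:
go-llm-cpp/
├── bin/                  # third-party dependencies
│   ├── go-llama.cpp/     # wraps GGUF model inference (CGo)
│   └── llm-go/           # prompt construction + turn management (Go)
│
├── cmd/                  # executable applications
│   └── main.go           # CLI/HTTP server entry point
│
├── config/
│   └── persona.yaml      # persona template (system p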

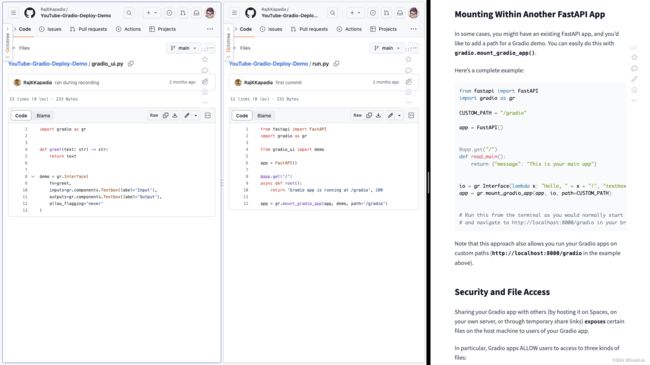

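- Gradio Complete Guide 13: MCP in Detail (2): MCP Capability Negotiation and Communication Mechanisms

Gradio Complete Guide 13: MCP in Detail (2): MCP Capability Negotiation and Communication Mechanisms. Chapter 13: MCP in Detail. 13.2 MCP capability negotiation and communication mechanisms. 13.2.1 Capability negotiation and message specification: 1. Capability negotiation mechanism; 2. Message specification and error codes. 13.2.2 MCP communication mechanisms: 1. The four methods of the protocol layer; 2. Transport-layer mechanisms: Stdio and Streamable HTTP; 3. Stdio and Streamable HTTP in practice. References. The chapter contents are as follows: "Gradio Complete Guide 13: MCP in Detail (1)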

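- Gradio Complete Guide 5: Interface, the High-Level Abstraction Class (Part 1)

龙焰智能

Gradio complete guide, tutorial, Interface, API parameters, member functions, launch, load, from_pipeline, integrate

Gradio Complete Guide 5: Interface, the High-Level Abstraction Class (Part 1). Preface. 5. Interface: the high-level abstraction class. 5.1 The Interface class in detail. 5.1.1 Interface example: 1. Code and execution; 2. Code walkthrough. 5.1.2 Interface API parameters. 5.1.3 Interface member functions: 1. launch() 2. load() 3. from_pipeline() 4. integrate() 5. queue(). References. Preface: This series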

- Chat Memory

虾条_花吹雪

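Spring AI, ai, artificial intelligence

Large language models (LLMs) are stateless, which means they retain no information about previous interactions. That is a limitation when you want to maintain context or state across several interactions. To address this, Spring AI provides chat memory, which lets you store and retrieve information across multiple interactions with an LLM. The ChatMemory abstraction lets you implement different kinds of memory for different use cases. The underlying storage of messages is handled by ChatMemoryRepository, whose sole responsibility is storing and retrieving messages. The ChatMemo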
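Because the model keeps no state, a chat-memory layer stores past messages per conversation and replays the most recent ones with each request. Below is a minimal sketch of a message-window memory in plain Java (16+, for records). It assumes a fixed window size. The class `WindowChatMemory` and its methods are hypothetical and are not Spring AI's API.

```java
import java.util.ArrayDeque;
import java.util.ArrayList;
import java.util.Deque;
import java.util.HashMap;
import java.util.List;
import java.util.Map;

// Hypothetical sliding-window chat memory (illustration only, not the Spring AI API).
class WindowChatMemory {
    // One chat message; role is e.g. "user" or "assistant".
    record Message(String role, String text) {}

    private final int maxMessages;
    // Conversation id -> most recent messages, oldest first.
    private final Map<String, Deque<Message>> store = new HashMap<>();

    WindowChatMemory(int maxMessages) {
        if (maxMessages <= 0) throw new IllegalArgumentException("maxMessages must be positive");
        this.maxMessages = maxMessages;
    }

    // Append a message, then evict the oldest ones that fall outside the window.
    void add(String conversationId, Message message) {
        Deque<Message> window = store.computeIfAbsent(conversationId, id -> new ArrayDeque<>());
        window.addLast(message);
        while (window.size() > maxMessages) {
            window.removeFirst();
        }
    }

    // The messages to send along with the next LLM request for this conversation.
    List<Message> get(String conversationId) {
        return new ArrayList<>(store.getOrDefault(conversationId, new ArrayDeque<>()));
    }

    void clear(String conversationId) {
        store.remove(conversationId);
    }

    public static void main(String[] args) {
        WindowChatMemory memory = new WindowChatMemory(2);
        memory.add("c1", new Message("user", "Hi, I'm Alice."));
        memory.add("c1", new Message("assistant", "Hello Alice!"));
        memory.add("c1", new Message("user", "What's my name?"));
        // Only the two most recent messages survive; the first one was evicted.
        System.out.println(memory.get("c1"));
    }
}
```

A window bounds prompt size. The trade-off is that facts mentioned early, like the user's name here, are forgotten once they slide out of the window.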

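- Hands-On with OpenHands, Study Notes 3: LLM Integration Basics

JeffWoodNo.1

notes, artificial intelligence

Note 3: LLM integration basics. 1. Introduction. Large language models (LLMs) are the core driving force of the OpenHands agent system. This note explores the basic principles of LLM API calls and how to implement, in practice, a basic connection module for advanced models such as Claude, laying the groundwork for building AI agent systems. 2. LLM API call fundamentals. 2.1 Basic concepts of LLM APIs. API key authentication: the credential used to access an LLM service. Prompt engineering: constructing effective requests to obtain the expected responses. Inference parameters: the parameters that control model output. Streaming responses: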

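- [Deep Learning] A Taxonomy of Neural Network Pruning Methods

烟锁池塘柳0

machine learning and deep learning, deep learning, neural networks, pruning

A Taxonomy of Neural Network Pruning Methods. Abstract: As the parameter counts of deep learning models, especially large language models (LLMs), have exploded, deploying and running them has become extremely expensive. Reducing their compute and storage requirements while preserving performance is now a central problem for both industry and academia. Neural network pruning emerged as one of the key model-compression techniques. This article analyzes the different ways pruning techniques are categorized and examines their principles, strengths, and weaknesses. Contents: A Taxonomy of Neural Network Pruning Methods; Abstract; 1 Why do we need pruning? 2 Taxonomy 1: what to prune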
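Unstructured magnitude pruning is the simplest member of these families. It zeroes the weights with the smallest absolute values until a target sparsity is reached. The sketch below illustrates this on a flat weight array. The class name and the ratio-based interface are assumptions, not code from the article.

```java
import java.util.Arrays;

// Illustrative unstructured magnitude pruning on a flat weight vector.
class MagnitudePruning {
    // Returns a copy of `weights` in which exactly floor(sparsity * n) entries
    // with the smallest |w| are set to zero.
    static double[] prune(double[] weights, double sparsity) {
        if (sparsity < 0.0 || sparsity > 1.0) {
            throw new IllegalArgumentException("sparsity must be in [0, 1]");
        }
        int k = (int) Math.floor(sparsity * weights.length); // number of weights to prune
        double[] result = weights.clone();
        if (k == 0) return result;

        // The k-th smallest magnitude is the pruning threshold.
        double[] magnitudes = Arrays.stream(weights).map(Math::abs).sorted().toArray();
        double threshold = magnitudes[k - 1];

        int pruned = 0;
        // Pass 1: everything strictly below the threshold is always pruned (fewer than k entries).
        for (int i = 0; i < result.length; i++) {
            if (Math.abs(result[i]) < threshold) {
                result[i] = 0.0;
                pruned++;
            }
        }
        // Pass 2: prune entries equal to the threshold until exactly k are gone.
        for (int i = 0; i < result.length && pruned < k; i++) {
            if (Math.abs(result[i]) == threshold) {
                result[i] = 0.0;
                pruned++;
            }
        }
        return result;
    }

    public static void main(String[] args) {
        double[] w = {0.8, -0.05, 0.3, -0.6, 0.01, 0.2};
        System.out.println(Arrays.toString(prune(w, 0.5))); // prints [0.8, 0.0, 0.3, -0.6, 0.0, 0.0]
    }
}
```

Real frameworks apply the same idea per layer or globally across layers, usually with a binary mask so the zeroed weights stay zero during fine-tuning. Structured pruning instead removes whole neurons, channels, or heads.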

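- How Does FastAPI Handle Security So Well That Hackers Back Off?

url: /posts/c1314c623211c9269f36053179a53d5c/
title: How Does FastAPI Handle Security So Well That Hackers Back Off?
date: 2025-07-04T18:28:43+08:00
lastmod: 2025-07-04T18:28:43+08:00
author: cmdragon
summary: Through built-in OAuth2 and JWT support, FastAPI provides out-of-the-box security solutions,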

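- The Mystery of Language Models: A Symphony of Prompt Content and Format

步子哥

AGI, artificial general intelligence, language models, artificial intelligence, natural language processing

In today's AI landscape, language models (LLMs) are spreading into every industry at unprecedented scale and depth. From code generation to mathematical reasoning, and from question-answering systems to multiple-choice questions, every technical leap depends on one seemingly simple yet subtle step: prompt design. In the search for better prompts, the interplay of content and format is gradually being understood, composing a grand symphony. This article takes you into the world of "Content-Format Integrated Prompt Optimization (CFPO)", showing how careful refinement of content and skillful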

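- When My Code Review Starts "Working as an AI": The Tool That Lets Me Slack Off with a Clear Conscience

Honesty861024

ci/cd, ai, git

As someone who works with code every day, my biggest headache is the long wait after submitting an MR. The reviewer might be in a meeting, fixing bugs, or slacking off, while my code gathers dust in the "in progress" state. Worse, something occasionally slips past human review, strange bugs show up after release, and all I can do is apologize frantically at my screen: "My fault, I'll check more carefully next time!" That changed when I discovered this gem hidden in Yunxiao: yunxiao-LLM-reviewer. Now my MRs finally have a 24-hour online "A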

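- XTuner: Getting Started Quickly with LLM Fine-Tuning

潘达斯奈基~

AIGC

1. What is XTuner? Simply put, XTuner is a lightweight, easy-to-use fine-tuning toolkit designed for large language models (LLMs). It was developed by Shanghai AI Laboratory (OpenMMLab) and is part of its AI tool ecosystem (MMCV, MMEngine, etc.). Its core design idea is "one config file handles everything", which greatly simplifies the fine-tuning workflow for developers and researchers. 2. Why choose XTuner? (Core advantages) Lightweight and user-friendly. Command-line driven: you don't need to write complex training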

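- [V18.0: Ascension] After I Put a "Large Model" on My Computer, My AI Learned to Edit Drafts! The Ultimate Step-by-Step Tutorial for Deploying an LLM Locally

爱分享的飘哥

artificial intelligence, language models, python, LLM, ai

Over the past dozen or so articles, we have turned our AI into a top-tier "analyst". It can see, hear, and read, predict multi-dimensional value metrics, and even explain its own decisions with SHAP. It is powerful, but its abilities have always stayed at the level of "analysis" and "diagnosis". It can tell me "your opening doesn't work", but it cannot tell me "how a good opening should be written". It's like having a top F1 data analyst as my co-driver: he can tell me the best speed and braking point for every corner, but he can't drive himself. I needed an ultimate upgrade; I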

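- happy-llm Chapter 1: Basic Concepts of NLP

weixin_38374194

natural language processing, artificial intelligence, learning

Contents: 1. What is NLP? 2. The three stages of NLP development 3. Essentials of core NLP tasks 4. The evolution of text representation: 1. Traditional methods: statistical representations 2. Neural networks: semantic vectors. Course: happy-llm. Basic concepts of NLP. 1. What is NLP? Core goal: enable computers to understand, generate, and process human language, achieving natural human-computer interaction. Status and challenges. Achievements: deep learning has pushed tasks such as text classification and translation close to human level. Bottlenecks: ambiguity, understanding metaphor, cross-cultural differences, etc. 2. The three stages of NLP development. Period | Representative techniques | Core idea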

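- Happy-LLM Chapter 2: Transformer

HalukiSan

transformer, deep learning, artificial intelligence

Transformer architecture. Figures are taken from [Happy-llm](happy-llm/docs/chapter2/第二章Transformer架构.mdatmain·datawhalechina/happy-llm); if they do not load, use a proxy. Attention mechanism. Feedforward neural network: every neuron in a layer is fully connected to every neuron in the layers above and below, and data flows only forward. It is used for static data, for example image recognition or classification, but the network has no memory: data in it does not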

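- happy-llm Chapter 2: The Transformer Architecture

weixin_38374194

transformer, deep learning, artificial intelligence, learning

Contents: 1. Core analysis of the attention mechanism: 1.1 The essence of attention and its core variables; 1.2 Mathematical derivation of attention; 1.3 Attention variants: 1.3.1 Self-attention; 1.3.2 Masked self-attention; 1.3.3 Multi-head attention. 2. The Encoder-Decoder architecture in detail: 2.1 Seq2Seq tasks and architecture design; 2.2 Core components: 2.2.1 Feedforward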
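The core formula behind these variants is scaled dot-product attention, softmax(QKᵀ/√d_k)V. The plain-Java sketch below computes it for small matrices. An optional causal mask illustrates masked self-attention, where position i may only attend to positions j ≤ i. This is an illustration under those assumptions, not code from the happy-llm course; multi-head attention would run this computation in parallel on several projected copies of Q, K, and V.

```java
import java.util.Arrays;

// Illustrative scaled dot-product attention: softmax(Q K^T / sqrt(d_k)) V.
class ScaledDotProductAttention {
    // q: [n][d_k], k: [m][d_k], v: [m][d_v]; causal = true masks key positions j > i.
    static double[][] attention(double[][] q, double[][] k, double[][] v, boolean causal) {
        int n = q.length, m = k.length, dk = q[0].length, dv = v[0].length;
        double scale = 1.0 / Math.sqrt(dk);
        double[][] out = new double[n][dv];
        for (int i = 0; i < n; i++) {
            // 1. Scaled similarity of query i with every key.
            double[] scores = new double[m];
            double max = Double.NEGATIVE_INFINITY;
            for (int j = 0; j < m; j++) {
                if (causal && j > i) {
                    scores[j] = Double.NEGATIVE_INFINITY; // masked: a "future" position
                    continue;
                }
                double dot = 0.0;
                for (int t = 0; t < dk; t++) dot += q[i][t] * k[j][t];
                scores[j] = dot * scale;
                max = Math.max(max, scores[j]);
            }
            // 2. Numerically stable softmax: subtract the row maximum before exp.
            double sum = 0.0;
            for (int j = 0; j < m; j++) {
                scores[j] = Math.exp(scores[j] - max); // exp(-inf) = 0 for masked entries
                sum += scores[j];
            }
            // 3. Output row i is the attention-weighted sum of the value vectors.
            for (int j = 0; j < m; j++) {
                double w = scores[j] / sum;
                for (int t = 0; t < dv; t++) out[i][t] += w * v[j][t];
            }
        }
        return out;
    }

    public static void main(String[] args) {
        double[][] x = {{1, 0}, {0, 1}};   // used as both queries and keys (self-attention)
        double[][] v = {{1, 2}, {3, 4}};
        System.out.println(Arrays.deepToString(attention(x, x, v, false)));
        System.out.println(Arrays.deepToString(attention(x, x, v, true)));
    }
}
```

With the causal mask, the first row can only see itself, so its output is exactly the first value vector.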

- Java Class Loading Order

3213213333332132

java

package com.demo;

/**

* @Description Class loading order

* @author FuJianyong

* 2015-2-6上午11:21:37

*/

public class ClassLoaderSequence {

String s1 = "instance field";

static String s2 = "
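The listing above is cut off before it shows any output. The self-contained sketch below records the order in which Java runs static initializers, instance initializers, and constructors. Parent runs before child, static runs before instance, and within each group the textual order is kept. The class names are hypothetical.

```java
import java.util.ArrayList;
import java.util.List;

// Hypothetical demo of Java class initialization and instance creation order.
class InitOrderDemo {
    static final List<String> LOG = new ArrayList<>();

    static class Parent {
        static String ps = log("Parent static field");
        static { log("Parent static block"); }
        String pf = log("Parent instance field");
        { log("Parent instance block"); }
        Parent() { log("Parent constructor"); }
    }

    static class Child extends Parent {
        static String cs = log("Child static field");
        static { log("Child static block"); }
        String cf = log("Child instance field");
        { log("Child instance block"); }
        Child() { log("Child constructor"); } // implicit super() runs Parent's initializers first
    }

    static String log(String event) {
        LOG.add(event);
        return event;
    }

    public static void main(String[] args) {
        new Child();
        new Child(); // static initializers run only once, when each class is first initialized
        LOG.forEach(System.out::println);
    }
}
```

Creating the first `Child` prints the ten events in the order listed in the test below. The second `new Child()` adds only the five instance-level events.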

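- Comparing Hibernate and MyBatis

BlueSkator

sql, Hibernate, frameworks, ibatis, orm

Chapter 1: Hibernate and MyBatis

Hibernate is currently the most popular O/R mapping framework. It originated on sf.net and is now part of JBoss. MyBatis is another excellent O/R mapping framework; it grew out of iBATIS, which was formerly an Apache project.

MyBatis references: official site: http: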

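- Sorting Multidimensional Arrays in PHP and Its Practical Uses at Work

dcj3sjt126com

PHP, usort, uasort

A custom comparison function should return a negative number if the first argument should be placed before the second, a positive number if it should be placed after, and 0 if the two are equal (returning booleans is unreliable and is deprecated as of PHP 8). usort does not preserve keys; uasort preserves them; uksort sorts by key.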

<!doctype html>

<html lang="en">

<head>

<meta charset="utf-8"
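Java's `Comparator` follows the same contract as a `usort` callback: negative, zero, or positive. The sketch below is a hedged Java analogue of sorting a PHP multidimensional array with `usort`. It sorts a list of row maps by one column in descending order and breaks ties by another column in ascending order. The column names `score` and `name` are made up for illustration.

```java
import java.util.ArrayList;
import java.util.Comparator;
import java.util.List;
import java.util.Map;

// Java analogue of PHP usort over an array of associative arrays (rows).
class MultiKeySort {
    static List<Map<String, Object>> sortRows(List<Map<String, Object>> rows) {
        List<Map<String, Object>> sorted = new ArrayList<>(rows);
        // Like a usort callback: negative -> a first, positive -> b first, 0 -> equal.
        Comparator<Map<String, Object>> byScoreDesc =
                (a, b) -> Integer.compare((Integer) b.get("score"), (Integer) a.get("score"));
        Comparator<Map<String, Object>> byNameAsc =
                Comparator.comparing(r -> (String) r.get("name"));
        // Secondary key only decides when the primary comparison returns 0.
        sorted.sort(byScoreDesc.thenComparing(byNameAsc));
        return sorted;
    }

    public static void main(String[] args) {
        List<Map<String, Object>> rows = List.of(
                Map.of("name", "bob", "score", 80),
                Map.of("name", "alice", "score", 95),
                Map.of("name", "carol", "score", 80));
        // Prints: alice 95, bob 80, carol 80 (one per line)
        sortRows(rows).forEach(r -> System.out.println(r.get("name") + " " + r.get("score")));
    }
}
```

Like `usort`, this sorts a list of values and does not keep any key association; the `uasort` behavior would correspond to sorting map entries instead.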

- Changing Font Size with the DOM

周华华

frontend

<!DOCTYPE html PUBLIC "-//W3C//DTD XHTML 1.0 Transitional//EN" "http://www.w3.org/TR/xhtml1/DTD/xhtml1-transitional.dtd">

<html xmlns="http://www.w3.org/1999/xhtml"

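- c3p0 Configuration

g21121

c3p0

c3p0 is an open-source JDBC connection pool. It implements DataSource and JNDI binding and supports the JDBC3 specification and the standard extensions of JDBC2. The latest version of c3p0 can be downloaded from http://sourceforge.net/projects/c3p0/.

Taking a dataSource configured in Spring as an example:

<!-- Spring loads the resource file -->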

<bean name="prope

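- Several Ways to Get the Project Path in Java

510888780

java

Method 1:

File f = new File(this.getClass().getResource("/").getPath());

System.out.println(f);

Result:

C:\Documents%20and%20Settings\Administrator\workspace\projectName\bin

This returns the classpath root (here the project's bin directory) of the project that contains the current class;

If you omit the “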
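The result above still contains `%20` because `URL.getPath()` returns the URL-encoded path. A common fix is to convert the URL to a URI before building the `File`, because `new File(URI)` decodes the escapes. The sketch below assumes the classes are loaded from a directory on the file system, not from inside a JAR. The class name is hypothetical.

```java
import java.io.File;
import java.net.URISyntaxException;
import java.net.URL;

class ProjectPath {
    // Converts a file: URL to a File. new File(URI) decodes escapes such as %20,
    // whereas new File(url.getPath()) keeps them literally.
    static File toFile(URL url) {
        try {
            return new File(url.toURI());
        } catch (URISyntaxException e) {
            throw new IllegalArgumentException("not a valid URI: " + url, e);
        }
    }

    // Classpath root that contains this class (e.g. the project's bin directory),
    // assuming classes are loaded from a directory rather than a JAR.
    static File classpathRoot() {
        URL url = ProjectPath.class.getResource("/");
        if (url == null) {
            throw new IllegalStateException("classpath root is not a directory");
        }
        return toFile(url);
    }

    public static void main(String[] args) {
        System.out.println(classpathRoot());
        // The JVM's working directory, another common meaning of "project path".
        System.out.println(System.getProperty("user.dir"));
    }
}
```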

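- Passwordless SSH Login to a Server on Unix-like Systems

Harry642

passwordless, ssh

1. Client

(1) Run ssh-keygen -t rsa -C "your_email@example.com" to generate the key pair, replacing your_email@example.com with your own email address

(2) Run scp ~/.ssh/id_rsa.pub root@xxxxxxxxx:/tmp to copy the public key to the server, where xxxxxxxxx is the server address

(3) Run cat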

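- 30 Basic Concepts for Java Beginners (Part 1)

aijuans

java, java beginners, newbies

When learning Java, mastering its basic concepts matters whether you work with J2SE, J2EE, or J2ME. J2SE is the foundation of Java, so it is worth summarizing its basic concepts to help readers better grasp the essence of Java in later study. Here I summarize 30 basic concepts. Java overview: today Java is mainly used for middleware development, that is, technology that handles communication between clients and servers. Early practice showed that Java was not suitable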

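- A Brief Introduction to Memcached for Windows

antlove

java, Web, windows, cache, memcached

1. Install the memcached server

a. Download memcached-1.2.6-win32-bin.zip

b. Unzip it, switch the DOS window to the directory containing memcached.exe, and run memcached.exe -d install

c. Start the memcached server by typing the following directly in the DOS window: net start "memcached Server"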

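- Views and Indexes as Database Objects

百合不是茶

indexes, oracle, databases, views

Views

A view is a table derived from one table or view, or from several tables or views. A view is a virtual table: the database does not physically store the data behind a view, only the view's definition. A view is therefore defined in terms of columns (a query), not in terms of concrete data values.

Why does Oracle need views?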


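- Mockito (1): Getting Started

bijian1013

continuous integration, mockito, unit testing

Mockito is a mocking framework for Java. It is similar to EasyMock and jMock, but it removes the need for expectations by verifying, after execution, what has been called. Other mocking libraries require you to record expectations before execution, which leads to ugly setup code.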


- Mastering Oracle 10g SQL Programming (5): SQL Functions

bijian1013

oracle, databases, plsql

/*

* SQL functions

*/

--Numeric functions

--ABS(n): returns the absolute value of n

declare

v_abs number(6,2);

begin

v_abs:=abs(&no);

dbms_output.put_line('Absolute value: '||v_abs);

end;

--ACOS(n): returns the arc cosine of n; the input must be in the range -1 to 1 and the result is in radians

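- [Log4j Part 1] An Overview of Log4j

bit1129

log4j

Log4j components: Logger, Appender, Layout

The Log4j core consists of three components: logger, appender, and layout. Together they control:

where log output goes

the format of log output

the level of log output (whether output is suppressed)

Logger inheritance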

A logger is said to be an ancestor of anothe
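The inheritance rule quoted above says a logger is an ancestor of another if its name followed by a dot is a prefix of the descendant's name. This rule decides which level a logger without its own level uses: it inherits from the nearest ancestor that has one, ending at the root logger. The sketch below models only that lookup in plain Java. The class and its methods are hypothetical and are not the Log4j API.

```java
import java.util.HashMap;
import java.util.Map;

// Models Log4j-style level inheritance by dotted logger name (illustration only, not Log4j).
class LoggerHierarchy {
    private final Map<String, String> assignedLevels = new HashMap<>();
    private final String rootLevel;

    LoggerHierarchy(String rootLevel) { this.rootLevel = rootLevel; }

    void setLevel(String loggerName, String level) { assignedLevels.put(loggerName, level); }

    // "com.foo" is an ancestor of "com.foo.Bar" because "com.foo." is a prefix of it.
    static boolean isAncestor(String ancestor, String descendant) {
        return descendant.startsWith(ancestor + ".");
    }

    // Walk up the name ("a.b.c" -> "a.b" -> "a") until an explicitly assigned level is found.
    String effectiveLevel(String loggerName) {
        String name = loggerName;
        while (true) {
            String level = assignedLevels.get(name);
            if (level != null) return level;
            int dot = name.lastIndexOf('.');
            if (dot < 0) return rootLevel; // no more ancestors: fall back to the root logger
            name = name.substring(0, dot);
        }
    }

    public static void main(String[] args) {
        LoggerHierarchy h = new LoggerHierarchy("ERROR");
        h.setLevel("com.foo", "DEBUG");
        System.out.println(h.effectiveLevel("com.foo.Bar"));       // prints DEBUG (inherited from com.foo)
        System.out.println(h.effectiveLevel("org.other"));         // prints ERROR (root level)
        System.out.println(isAncestor("com.foo", "com.foobar.X")); // prints false
    }
}
```

The dot in the prefix test matters: without it, `com.foo` would wrongly count as an ancestor of `com.foobar`.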

- Java IO Notes

白糖_

java

public static void main(String[] args) throws IOException {

// input stream

InputStream in = Test.class.getResourceAsStream("/test");

InputStreamReader isr = new InputStreamReader(in);

Bu
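The fragment stops at the `BufferedReader`. The sketch below is a hedged, complete version of the same stream chain: byte stream, then `InputStreamReader`, then `BufferedReader`. It reads line by line with an explicit UTF-8 charset (the original relies on the platform default) and closes everything with try-with-resources. The resource name `/test` comes from the original; the helper method is hypothetical. The demo also feeds it an in-memory stream so it runs without that resource.

```java
import java.io.BufferedReader;
import java.io.ByteArrayInputStream;
import java.io.IOException;
import java.io.InputStream;
import java.io.InputStreamReader;
import java.nio.charset.StandardCharsets;
import java.util.ArrayList;
import java.util.List;

class ReadLinesDemo {
    // byte stream -> character stream (InputStreamReader) -> buffered line reader
    static List<String> readLines(InputStream in) throws IOException {
        if (in == null) throw new IOException("resource not found");
        List<String> lines = new ArrayList<>();
        // Closing the BufferedReader also closes the wrapped reader and stream.
        try (BufferedReader reader =
                     new BufferedReader(new InputStreamReader(in, StandardCharsets.UTF_8))) {
            String line;
            while ((line = reader.readLine()) != null) {
                lines.add(line);
            }
        }
        return lines;
    }

    public static void main(String[] args) throws IOException {
        // Same source as the original: a classpath resource named "/test" (may be absent).
        InputStream resource = ReadLinesDemo.class.getResourceAsStream("/test");
        if (resource != null) {
            System.out.println(readLines(resource));
        }
        // Self-contained demo with an in-memory stream.
        InputStream demo = new ByteArrayInputStream("first\nsecond".getBytes(StandardCharsets.UTF_8));
        System.out.println(readLines(demo)); // prints [first, second]
    }
}
```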

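- Docker Monitoring

ronin47

docker, monitoring

Our project now runs on Docker, which raises the question of monitoring. Drawing on some classic examples and my own ideas, here is a summary of my approach.

1. What to monitor

Monitoring the host itself

Monitoring the host itself is fairly simple and works like monitoring any other server: generic checks on CPU, network, IO, disk, and so on, which I won't detail here.

In addition, because it is Docker's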

- Java: Printing a Clockwise Spiral Pattern

bylijinnan

java

A drawing program must print the following:

int i=5;

1 2 3 4 5

16 17 18 19 6

15 24 25 20 7

14 23 22 21 8

13 12 11 10 9

int i=6

1 2 3 4 5 6

20 21 22 23 24 7

19
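One way to produce this pattern is to walk the n×n grid layer by layer: right, down, left, up. The boundaries shrink after each side. The sketch below is an assumed solution, not the original author's code.

```java
// Fills an n x n matrix with 1..n*n in clockwise spiral order.
class SpiralMatrix {
    static int[][] spiral(int n) {
        int[][] m = new int[n][n];
        int top = 0, bottom = n - 1, left = 0, right = n - 1;
        int value = 1;
        while (top <= bottom && left <= right) {
            for (int c = left; c <= right; c++) m[top][c] = value++;        // top row, left -> right
            top++;
            for (int r = top; r <= bottom; r++) m[r][right] = value++;     // right column, downward
            right--;
            if (top <= bottom) {
                for (int c = right; c >= left; c--) m[bottom][c] = value++; // bottom row, right -> left
                bottom--;
            }
            if (left <= right) {
                for (int r = bottom; r >= top; r--) m[r][left] = value++;   // left column, upward
                left++;
            }
        }
        return m;
    }

    public static void main(String[] args) {
        for (int[] row : spiral(5)) {
            StringBuilder sb = new StringBuilder();
            for (int x : row) sb.append(String.format("%3d", x));
            System.out.println(sb);
        }
    }
}
```

For n = 5 this prints exactly the five rows shown above. The two `if` checks stop the last lap from revisiting cells when the remaining area is a single row or column.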

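- Forcing the Chinese-Localized iReport to Use English

Kai_Ge

iReport, Chinese localization, English version

For engineers with a touch of obsessive-compulsiveness, localized software is convenient, but incomplete localization is a real headache. This method handles iReport's incomplete Chinese localization by forcing the English version, as follows:

Add the startup parameters marked in red to etc/ireport.conf in the iReport installation directory, and iReport switches to English.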

# ${HOME} will be replaced by user home directory accordin

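- [Parallel Computing] On the Computability of the Universe

comsci

parallel computing

We now know that a vortex system has parallel computing capability. According to the theory of natural motion, such a system also has storage capability, and a system that can both compute and store will, under certain conditions, generally give rise to consciousness......

This idea leads us to a conclusion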


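- Implementing an Infinitely Looping Coverflow with OpenGL

dai_lm

android, coverflow

After searching online for a long time, I only found implementations based on Gallery, and I wasn't satisfied with their effect. Then I found this OpenGL-based implementation and modified the source slightly to add infinite looping.

Source code: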

https://github.com/jackfengji/glcoverflow

public class CoverFlowOpenGL extends GLSurfaceView implements

GLSurfaceV

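- Several Solutions for Data Computation in Java (1)

datamachine

java, Hibernate, computation

The software my boss handed over has been running for 10 days and I have started to get the hang of it. It happens to relate to some notes I had collected earlier, so I am organizing and archiving them here.

----------------------------- divider -------------------------------------

The data computation layer sits between data storage and the application: it computes over the data in the storage layer and returns the results to the application. J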


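- A Simple User Authorization System: Marking Administrators with an Extra Field in the User Table

dcj3sjt126com

yii

How to create a simple (non-RBAC) user authorization system

Browsing the forum, I found this is a common question, so I decided to write this article.

This article covers only authorization and assumes you already know how to build an authentication (login) system.

Database

First, add a new integer field named 'accessLevel' to the user table; it defines the user's access level.

Extending the CWebUser class

In the configuration file (usually protecte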

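- The Road Not Taken

dcj3sjt126com

poem

By Robert Frost

Two roads diverged in a yellow wood,

And sorry I could not travel both

And be one traveler, long I stood

And looked down one as far as I could

To where it bent in the undergrowth;

Then took the other, as just as fair,

And having perhaps the better claim,

Because it was grassy and wanted wear;

Though as for that the passing there

Had worn them really about the same,

And both that morning equally lay

In leaves no step had trodden black.

Oh, I kept the first for another day!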

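- Converting 15-Digit ID Card Numbers to 18 Digits in Java

蕃薯耀

18-digit ID to 15-digit, 15-digit ID to 18-digit, ID card conversion

Converting 15-digit ID numbers to 18 digits, and 18-digit numbers to 15 digits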

>>>>>>>>>>>>>>>>>>>>>>>>>>>>>>>>>>>>>>>>

蕃薯耀 201
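Under the GB 11643-1999 rules, a 15-digit number is upgraded in two steps. First, the century digits "19" are inserted before the two-digit birth year, right after the 6-digit area code. Second, a check character is appended: a weighted digit sum modulo 11, mapped through a fixed table. Going back to 15 digits simply drops those additions. The sketch below assumes every 15-digit number belongs to someone born in the 1900s. The class and method names are hypothetical.

```java
// Hypothetical helper for converting between 15- and 18-digit Chinese resident ID numbers.
class IdCardConverter {
    // Weights for the first 17 digits and the check-character table (index = sum % 11).
    private static final int[] WEIGHTS = {7, 9, 10, 5, 8, 4, 2, 1, 6, 3, 7, 9, 10, 5, 8, 4, 2};
    private static final char[] CHECK = {'1', '0', 'X', '9', '8', '7', '6', '5', '4', '3', '2'};

    static String to18(String id15) {
        if (id15 == null || !id15.matches("\\d{15}")) {
            throw new IllegalArgumentException("expected 15 digits");
        }
        // Insert "19" before the 2-digit birth year, which starts at index 6.
        String body = id15.substring(0, 6) + "19" + id15.substring(6);
        return body + checkChar(body);
    }

    static String to15(String id18) {
        if (id18 == null || !id18.matches("\\d{17}[\\dXx]")) {
            throw new IllegalArgumentException("expected 17 digits plus a check character");
        }
        // Drop the century digits (indices 6-7) and the trailing check character.
        return id18.substring(0, 6) + id18.substring(8, 17);
    }

    // Weighted sum of the first 17 digits, mapped through the check table.
    static char checkChar(String first17) {
        int sum = 0;
        for (int i = 0; i < 17; i++) {
            sum += (first17.charAt(i) - '0') * WEIGHTS[i];
        }
        return CHECK[sum % 11];
    }

    public static void main(String[] args) {
        System.out.println(to18("110105491231002"));    // prints 11010519491231002X
        System.out.println(to15("11010519491231002X")); // prints 110105491231002
    }
}
```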

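- SpringMVC4 Zero Configuration: Application Context Configuration [AppConfig]

hanqunfeng

springmvc4

Since Spring 3.0, Spring has integrated JavaConfig into its core module: an ordinary POJO becomes a Spring configuration class simply by adding the @Configuration annotation, and beans are registered by annotating methods with @Bean.

A comparison of XML configuration and Java class configuration:

applicationContext-AppConfig.xml

<!-- Enable auto-proxying, see: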

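- Interaction Between WebView and JavaScript in Android

jackyrong

JavaScript, html, android, scripts

In an Android application, you can call JavaScript code in a WebView directly, and JavaScript code in the WebView can in turn call into the Android application (that is, the Java code). Examples follow:

1 JavaScript calling the Android program

To do this, call addJavascriptInterface(OBJ,int on the WebView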

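- 8 Best Web Development Resources

lampcy

programming, Web, programmers

Web development is fairly complex work, and programmers have to meet user requirements quickly. Many online resources can help, such as guides, online courses, and reference material, and most of them are free and beginner-friendly. Whether you need to choose a new programming language, learn the latest standards, or find inspiration elsewhere, we have collected some excellent web development resources to help you succeed.

Here are the 10 best web development resources, all of which are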

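- Architect Interviews: The JDK's HashMap Implementation

nannan408

HashMap

1. Preface.

As the title says.

2. Details.

(1) A HashMap is essentially an array of linked lists: each array slot holds a chain of key-value entries. The JDK spreads the key's hashCode with the supplemental hash function below, which uses bit shifts and XORs, and then passes the result to indexFor to obtain the array index.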

static int hash(int h)

{

h ^= (h >>> 20) ^ (h >>>
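For reference, the sketch below reproduces the supplemental hash and `indexFor` from JDK 6's `HashMap` (JDK 8 later simplified the hash to `h ^ (h >>> 16)`). The wrapper class is hypothetical. `indexFor` relies on the table length being a power of two: `h & (length - 1)` keeps the low bits, which equals `h` mod `length` and is a cheap substitute for a modulo.

```java
// JDK 6-style HashMap bucket-index computation (illustrative copy, not the live JDK code).
class HashIndexDemo {
    // Supplemental hash: folds high-order bits into the low bits with shifts and XORs,
    // so hash codes that differ only in high bits do not all land in the same bucket.
    static int hash(int h) {
        h ^= (h >>> 20) ^ (h >>> 12);
        return h ^ (h >>> 7) ^ (h >>> 4);
    }

    // The table length must be a power of two, so masking keeps only the low bits.
    static int indexFor(int h, int length) {
        return h & (length - 1);
    }

    public static void main(String[] args) {
        int tableLength = 16; // default HashMap capacity
        for (String key : new String[] {"apple", "banana", "cherry"}) {
            int index = indexFor(hash(key.hashCode()), tableLength);
            System.out.println(key + " -> bucket " + index);
        }
    }
}
```

Masking alone would use only the lowest 4 bits of the hash code for a 16-slot table. The extra mixing step lets the higher bits influence the bucket too.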

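- Disabling Browser Caching of HTML Input Values

Rainbow702

html, cache, input, input box, change

Most browsers cache input values by default, and only a forced refresh with Ctrl+F5 clears the cached values.

If you don't want the browser to cache input values, there are two methods:

Method 1: add autocomplete="off" to each input that should not be cached;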

<input type="text" autocomplete="off" n

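- Differences and Relationship Between POJO and JavaBean

tjmljw

POJO, java beans

POJO and JavaBean are two common terms that are easily confused. POJO stands for Plain Ordinary Java Object / Pure Old Java Object (most commonly expanded as Plain Old Java Object), that is, an ordinary Java class; a class with some getter/setter methods can be called a POJO. A JavaBean is considerably more involved than a POJO: Java Beans are reusable components, and there are no strict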
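To make the distinction concrete: under the JavaBeans conventions, a bean has a public no-argument constructor and private properties exposed through getX/setX accessors (isX for booleans). It is usually `Serializable`. A POJO need not follow any of these conventions. A hypothetical minimal bean follows. It is declared package-private only so the sketch fits in a single file; real beans are public classes.

```java
import java.io.Serializable;

// Minimal JavaBean: no-arg constructor, private fields, getter/setter pairs, Serializable.
class UserBean implements Serializable {
    private static final long serialVersionUID = 1L;

    private String name;
    private boolean active;

    public UserBean() { } // required public no-argument constructor

    public String getName() { return name; }
    public void setName(String name) { this.name = name; }

    // Boolean properties may use the "is" prefix for the getter.
    public boolean isActive() { return active; }
    public void setActive(boolean active) { this.active = active; }

    public static void main(String[] args) {
        UserBean bean = new UserBean();
        bean.setName("alice");
        bean.setActive(true);
        System.out.println(bean.getName() + " active=" + bean.isActive()); // prints alice active=true
    }
}
```

These naming conventions are what let tools such as frameworks, IDEs, and expression languages discover the `name` and `active` properties by reflection without any extra configuration.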

- Five Ways to Write a Singleton in Java

liuxiaoling

java, singleton

/**

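* Five ways to write the singleton pattern:

* 1. Lazy initialization

* 2. Eager initialization

* 3. Static nested (holder) class

* 4. Enum

* 5. Double-checked locking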

*/

/**

* 5. Double-checked locking: correct under the JDK 5+ memory model only because the field is volatile

*/

class LockSingleton

{

private volatile static LockSingleton singleton;

pri
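The class above is truncated at its constructor. A complete double-checked-locking singleton follows, as a hedged sketch that keeps the original class name. `volatile` is what makes the pattern correct under the JDK 5+ memory model. It prevents another thread from seeing a reference to an instance that has not finished construction.

```java
class LockSingleton {
    // volatile: safe publication of the fully constructed object (JDK 5+ memory model).
    private static volatile LockSingleton singleton;

    private LockSingleton() { }

    static LockSingleton getInstance() {
        LockSingleton local = singleton;       // single volatile read on the fast path
        if (local == null) {                   // first check, without locking
            synchronized (LockSingleton.class) {
                local = singleton;
                if (local == null) {           // second check, under the lock
                    local = new LockSingleton();
                    singleton = local;
                }
            }
        }
        return local;
    }

    public static void main(String[] args) throws InterruptedException {
        LockSingleton[] seen = new LockSingleton[8];
        Thread[] threads = new Thread[seen.length];
        for (int i = 0; i < threads.length; i++) {
            final int slot = i;
            threads[i] = new Thread(() -> seen[slot] = getInstance());
            threads[i].start();
        }
        for (Thread t : threads) t.join(); // join also makes the array writes visible here
        boolean allSame = true;
        for (LockSingleton s : seen) allSame &= (s == seen[0]);
        System.out.println("same instance in all threads: " + allSame);
    }
}
```

The local variable is an optional optimization: it avoids reading the volatile field twice once the instance exists. When lazy creation is not essential, the static holder class or the enum variants from the list above are simpler.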