Deploying a Highly Available K8S Cluster with keepalived + nginx

1. Preparation

1.1 Cluster deployment plan

| K8S cluster role | Node IP | Node name | OS |
| --- | --- | --- | --- |
| Control-plane node | 192.168.0.180 | k8smaster1 | CentOS 7.9 |
| Control-plane node | 192.168.0.181 | k8smaster2 | CentOS 7.9 |
| Worker node | 192.168.0.182 | k8swork1 | CentOS 7.9 |
| Worker node | 192.168.0.183 | k8swork2 | CentOS 7.9 |
| Control-plane VIP | 192.168.0.199 | | |

1.2 NIC configuration

The configuration of k8smaster1 is shown below. Configure the other nodes the same way, each with its own IP address.

```
[root@k8smaster1 yum.repos.d]# less /etc/sysconfig/network-scripts/ifcfg-ens33
TYPE=Ethernet
PROXY_METHOD=none
BROWSER_ONLY=no
BOOTPROTO=static
DEFROUTE=yes
IPV4_FAILURE_FATAL=no
IPV6INIT=yes
IPV6_AUTOCONF=no
IPV6_DEFROUTE=no
IPV6_FAILURE_FATAL=no
IPV6_ADDR_GEN_MODE=stable-privacy
NAME=ens33
UUID=a1c41e41-6217-43a3-b3c8-76db36aa5885
DEVICE=ens33
ONBOOT=yes
IPADDR=192.168.0.180
PREFIX=24
GATEWAY=192.168.0.2
DNS1=192.168.0.2
```
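For the other nodes, only the IPADDR line has to change (192.168.0.181 to 192.168.0.183 in the planning table). A minimal sketch of deriving such a file from k8smaster1's copy; the helper name `render_ifcfg` is hypothetical and the sketch assumes GNU sed:

```bash
#!/usr/bin/env bash
# render_ifcfg TEMPLATE IP
# Hypothetical helper: prints TEMPLATE with its IPADDR= line replaced by IP.
# All other settings (PREFIX, GATEWAY, DNS1, ...) are copied unchanged.
render_ifcfg() {
    local template="$1" ip="$2"
    if [ -z "$template" ] || [ -z "$ip" ]; then
        echo "usage: render_ifcfg TEMPLATE IP" >&2
        return 1
    fi
    sed "s/^IPADDR=.*/IPADDR=${ip}/" "$template"
}
```

For example, `render_ifcfg ifcfg-ens33 192.168.0.181 > ifcfg-ens33.k8smaster2` produces the file for k8smaster2, which can then be placed at /etc/sysconfig/network-scripts/ifcfg-ens33 on that node before restarting networking (`systemctl restart network` on CentOS 7).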

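1.3 hosts file configuration

Configure the hosts file so that the machines can reach each other by hostname.

Add the following four lines to /etc/hosts on every machine:

```
192.168.0.180 k8smaster1
192.168.0.181 k8smaster2
192.168.0.182 k8swork1
192.168.0.183 k8swork2
```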
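Appending these lines by hand is easy to get wrong when the step is repeated on several machines. A minimal sketch of an idempotent version; `add_cluster_hosts` is a hypothetical helper name, and the entries are the four from the list above:

```bash
#!/usr/bin/env bash
# add_cluster_hosts [HOSTS_FILE]
# Hypothetical helper: appends the four cluster entries to HOSTS_FILE
# (default /etc/hosts), skipping any entry already present as an exact line,
# so it can be re-run safely on every node.
add_cluster_hosts() {
    local hosts_file="${1:-/etc/hosts}" entry
    local entries=(
        "192.168.0.180 k8smaster1"
        "192.168.0.181 k8smaster2"
        "192.168.0.182 k8swork1"
        "192.168.0.183 k8swork2"
    )
    for entry in "${entries[@]}"; do
        grep -qxF "$entry" "$hosts_file" 2>/dev/null || echo "$entry" >> "$hosts_file"
    done
}
```

Running `add_cluster_hosts` twice leaves exactly one copy of each line in /etc/hosts.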

1.4 Disable SELinux

Make sure SELinux is disabled, and configure the other machines the same way:

```
[root@k8smaster1 ~]# less /etc/selinux/config |grep SELINUX=
# SELINUX= can take one of these three values:
SELINUX=disabled
```
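If a machine still shows `SELINUX=enforcing`, the line can be rewritten with sed. The file is only read at boot, so running `setenforce 0` as well switches the running system to permissive mode right away (setenforce reports an error if SELinux is already disabled). A minimal sketch; `disable_selinux_config` is a hypothetical helper name and GNU sed is assumed:

```bash
#!/usr/bin/env bash
# disable_selinux_config [CONFIG_FILE]
# Hypothetical helper: rewrites the active SELINUX= line of CONFIG_FILE
# (default /etc/selinux/config) to SELINUX=disabled. The pattern is anchored
# at the start of the line, so comment lines and SELINUXTYPE= are untouched.
disable_selinux_config() {
    local config="${1:-/etc/selinux/config}"
    sed -i 's/^SELINUX=.*/SELINUX=disabled/' "$config"
}
```

Typical use on a node: `disable_selinux_config && setenforce 0`.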

1.5 Passwordless SSH login

Configure passwordless SSH login between the hosts:

```
ssh-keygen
ssh-copy-id k8smaster2
ssh-copy-id k8swork1
```
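The key has to reach every other node in the planning table, including k8swork2, which later steps also copy files to and which section 5.4 joins to the cluster. A minimal sketch that loops over the nodes instead of repeating the command; `for_each_node` and the `NODES` array are hypothetical names:

```bash
#!/usr/bin/env bash
# The other three nodes from the planning table in section 1.1.
NODES=(k8smaster2 k8swork1 k8swork2)

# for_each_node CMD [ARGS...]
# Hypothetical helper: runs "CMD ARGS... NODE" once per node and stops at
# the first node for which the command fails.
for_each_node() {
    local node
    for node in "${NODES[@]}"; do
        "$@" "$node" || return 1
    done
}
```

Run `ssh-keygen` once, then `for_each_node ssh-copy-id`; each call asks for that node's root password one time.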

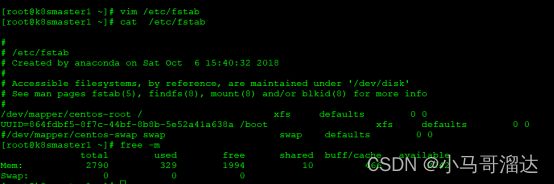

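1.6 Disable swap

Disable the swap partition. Besides improving performance, this is required: by default, kubelet refuses to start while swap is enabled.

Perform the same steps on all 4 machines:

```
# Disable swap temporarily (until the next reboot)
swapoff -a
# Disable it permanently: comment out the swap mount line in /etc/fstab by adding # at the start of that line
```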
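The permanent step can be scripted instead of editing /etc/fstab by hand. A minimal sketch, assuming GNU sed and a standard fstab layout; `comment_out_swap` is a hypothetical helper name:

```bash
#!/usr/bin/env bash
# comment_out_swap [FSTAB]
# Hypothetical helper: prefixes "#" to every line of FSTAB (default
# /etc/fstab) that does not already start with "#" and contains the word
# "swap" with whitespace on both sides, e.g.
#   /dev/mapper/centos-swap swap    swap    defaults        0 0
# Already-commented lines are skipped, so running it twice is harmless.
comment_out_swap() {
    local fstab="${1:-/etc/fstab}"
    sed -i '/^[^#].*[[:space:]]swap[[:space:]]/ s/^/#/' "$fstab"
}
```

After `swapoff -a && comment_out_swap`, `free -m` should report 0 total swap, and it stays off after a reboot.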

1.7 Modify kernel parameters

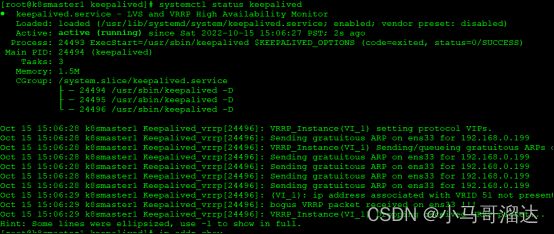

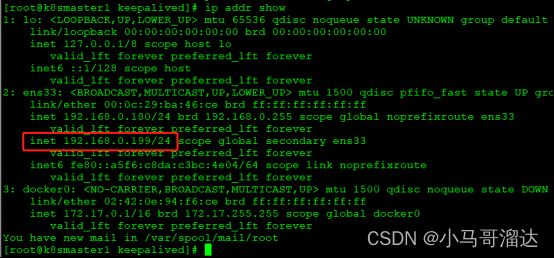

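Make sure br_netfilter is loaded again after a reboot. Here the modprobe command is appended to /etc/profile, so it runs whenever a login shell starts:

```
[root@k8smaster1 ~]# echo "modprobe br_netfilter" >> /etc/profile
```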
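Because /etc/profile is only read by login shells, the module is not loaded at boot if nobody logs in. On systemd-based CentOS 7, a common alternative (not the author's method) is a file under /etc/modules-load.d/, which systemd-modules-load reads at boot. A minimal sketch; the file name k8s.conf and the helper name `ensure_module_autoload` are assumptions:

```bash
#!/usr/bin/env bash
# ensure_module_autoload MODULE [DIR]
# Hypothetical helper: makes sure DIR/k8s.conf (DIR defaults to
# /etc/modules-load.d) lists MODULE on its own line. systemd-modules-load
# loads every module named in *.conf files there during boot.
ensure_module_autoload() {
    local module="$1" dir="${2:-/etc/modules-load.d}"
    local conf="${dir}/k8s.conf"
    mkdir -p "$dir"
    touch "$conf"
    grep -qxF "$module" "$conf" || echo "$module" >> "$conf"
}
```

For example, `modprobe br_netfilter && ensure_module_autoload br_netfilter` loads the module now and on every boot; `lsmod | grep br_netfilter` confirms it.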

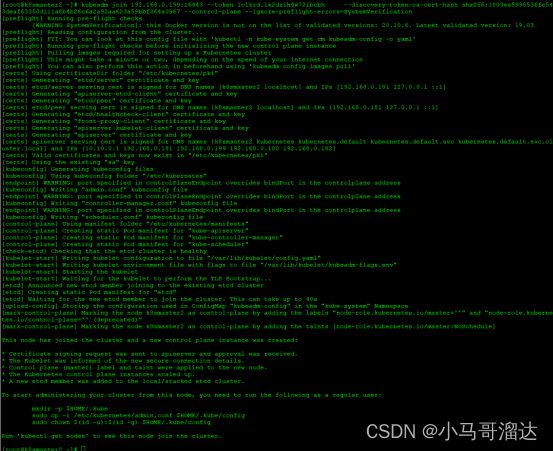

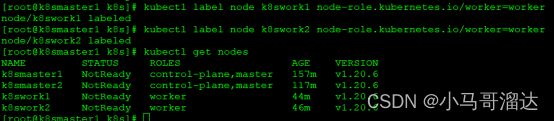

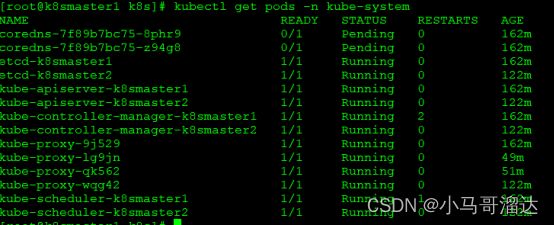

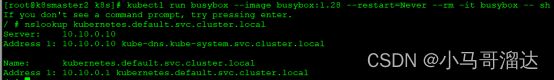

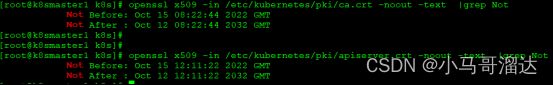

[root@k8smaster1~]# cat > /etc/sysctl.d/k8s.conf < > net.bridge.bridge-nf-call-ip6tables = 1 > net.bridge.bridge-nf-call-iptables = 1 > net.ipv4.ip_forward = 1 > EOF [root@k8smaster1 ~]# sysctl -p /etc/sysctl.d/k8s.conf net.bridge.bridge-nf-call-ip6tables = 1 net.bridge.bridge-nf-call-iptables = 1 net.ipv4.ip_forward = 1 [root@k8smaster1 ~]# systemctl stop firewalld ; systemctl disable firewalld [root@k8smaster1 ~]# mkdir /root/repo.bak&&mv /etc/yum.repos.d/* /root/repo.bak/ #配置国内阿里云docker的repo源 [root@k8smaster1 yum.repos.d]# yum-config-manager --add-repo http://mirrors.aliyun.com/docker-ce/linux/centos/docker-ce.repo Loaded plugins: fastestmirror adding repo from: http://mirrors.aliyun.com/docker-ce/linux/centos/docker-ce.repo grabbing file http://mirrors.aliyun.com/docker-ce/linux/centos/docker-ce.repo to /etc/yum.repos.d/docker-ce.repo repo saved to /etc/yum.repos.d/docker-ce.repo #配置安装k8s组件需要的阿里云的repo源 [root@k8smaster1 yum.repos.d]# cat kubernetes.repo [kubernetes] name=Kubernetes baseurl=https://mirrors.aliyun.com/kubernetes/yum/repos/kubernetes-el7-x86_64/ enabled=1 gpgcheck=0 #将Kubernetes的repo源复制给其它节点 [root@k8smaster1 yum.repos.d]# scp /etc/yum.repos.d/kubernetes.repo k8smaster2:/etc/yum.repos.d/ kubernetes.repo 100% 129 114.3KB/s 00:00 [root@k8smaster1 yum.repos.d]# scp /etc/yum.repos.d/kubernetes.repo k8swork1:/etc/yum.repos.d/ kubernetes.repo 100% 129 84.8KB/s 00:00 [root@k8smaster1 yum.repos.d]# scp /etc/yum.repos.d/kubernetes.repo k8swork2:/etc/yum.repos.d/ kubernetes.repo 时间同步,每台机器执行以下操作 [root@k8smaster1 yum.repos.d]# yum install ntpdate -y [root@k8smaster1 yum.repos.d]# ntpdate cn.pool.ntp.org [root@k8smaster1 yum.repos.d]# crontab -e * */1 * * * /usr/sbin/ntpdate cn.pool.ntp.org [root@k8smaster1 yum.repos.d]# service crond restart 按照以下步骤执行: 创建配置文件 [root@k8smaster1 modules]#vim /etc/sysconfig/modules/ipvs.modules/ipvs.modules #!/bin/bash ipvs_modules="ip_vs ip_vs_lc ip_vs_wlc ip_vs_rr ip_vs_wrr ip_vs_lblc ip_vs_lblcr ip_vs_dh ip_vs_sh ip_vs_nq 
ip_vs_sed ip_vs_ftp nf_conntrack" for kernel_module in ${ipvs_modules}; do /sbin/modinfo -F filename ${kernel_module} > /dev/null 2>&1 if [ 0 -eq 0 ]; then /sbin/modprobe ${kernel_module} fi done 让配置文件生效 [root@k8smaster1 modules]# chmod 755 /etc/sysconfig/modules/ipvs.modules && bash /etc/sysconfig/modules/ipvs.modules && lsmod | grep ip_vs [root@k8smaster1 yum.repos.d]# yum install -y yum-utils device-mapper-persistent-data lvm2 wget net-tools nfs-utils lrzsz gcc gcc-c++ make cmake libxml2-devel openssl-devel curl curl-devel unzip sudo ntp libaio-devel wget vim ncurses-devel autoconf automake zlib-devel python-devel epel-release openssh-server socat ipvsadm conntrack ntpdate telnet ipvsadm [root@k8smaster1 yum.repos.d]# yum install docker-ce-20.10.6 docker-ce-cli-20.10.6 containerd.io -y [root@k8smaster1 yum.repos.d]# systemctl start docker && systemctl enable docker && systemctl status docker 备注:如果docker版本过高,可以降到指定版本 yum downgrade --setopt=obsoletes=0 -y docker-ce-20.10.6 docker-ce-cli-20.10.6 containerd.io [root@k8smaster1 yum.repos.d]# vim /etc/docker/daemon.json { "registry-mirrors":["https://rsbud4vc.mirror.aliyuncs.com","https://registry.docker-cn.com","https://docker.mirrors.ustc.edu.cn","https://dockerhub.azk8s.cn","http://hub-mirror.c.163.com", "https://rncxm540.mirror.aliyuncs.com"], "exec-opts": ["native.cgroupdriver=systemd"] } 同步给其它机器 [root@k8smaster1 yum.repos.d]# scp /etc/docker/daemon.json k8smaster2:/etc/docker/ [root@k8smaster1 yum.repos.d]# scp /etc/docker/daemon.json k8swork1:/etc/docker/ [root@k8smaster1 yum.repos.d]# scp /etc/docker/daemon.json k8swork2:/etc/docker/ [root@k8smaster1 yum.repos.d]#yum install -y kubelet-1.20.6 kubeadm-1.20.6 kubectl-1.20.6 [root@k8smaster1 yum.repos.d]# systemctl enable kubelet 安装软件 [root@k8smaster1 yum.repos.d]# yum install nginx keepalived -y 更新nginx配置文件 [root@k8smaster1 yum.repos.d]# vim /etc/nginx/nginx.conf user nginx; worker_processes auto; error_log /var/log/nginx/error.log; pid /run/nginx.pid; include 
/usr/share/nginx/modules/*.conf; events { worker_connections 1024; } # 四层负载均衡,为两台Master apiserver组件提供负载均衡 stream { log_format main '$remote_addr $upstream_addr - [$time_local] $status $upstream_bytes_sent'; access_log /var/log/nginx/k8s-access.log main; upstream k8s-apiserver { server 192.168.0.180:6443; # Master1 APISERVER IP:PORT server 192.168.0.181:6443; # Master2 APISERVER IP:PORT } server { listen 16443; # 由于nginx与master节点复用,这个监听端口不能是6443,否则会冲突 proxy_pass k8s-apiserver; } } http { log_format main '$remote_addr - $remote_user [$time_local] "$request" ' '$status $body_bytes_sent "$http_referer" ' '"$http_user_agent" "$http_x_forwarded_for"'; access_log /var/log/nginx/access.log main; sendfile on; tcp_nopush on; tcp_nodelay on; keepalive_timeout 65; types_hash_max_size 2048; include /etc/nginx/mime.types; default_type application/octet-stream; server { listen 80 default_server; server_name _; location / { } } } 启动nginx 报错 安装modules插件 [root@k8smaster1 nginx]# yum -y install nginx-all-modules.noarch keepalive配置,主从节点只有优先级的差异 [root@k8smaster1 keepalived]# vim /etc/keepalived/keepalived.conf notification_email { } notification_email_from [email protected] smtp_server 127.0.0.1 smtp_connect_timeout 30 router_id NGINX_MASTER } vrrp_script check_nginx { script "/etc/keepalived/check_nginx.sh" } vrrp_instance VI_1 { state MASTER interface ens33 # 修改为实际网卡名 virtual_router_id 51 # VRRP 路由 ID实例,每个实例是唯一的 priority 100 # 优先级,备服务器设置 90 advert_int 1 # 指定VRRP 心跳包通告间隔时间,默认1秒 authentication { auth_type PASS auth_pass 1111 } # 虚拟IP virtual_ipaddress { 192.168.0.199/24 } track_script { check_nginx } } 创建判断Nginx是否存活的脚本,主从节点一样 [root@k8smaster1 keepalived]# vim /etc/keepalived/keepalived.conf global_defs { notification_email { } notification_email_from [email protected] smtp_server 127.0.0.1 smtp_connect_timeout 30 router_id NGINX_MASTER } vrrp_script check_nginx { script "/etc/keepalived/check_nginx.sh" } vrrp_instance VI_1 { state MASTER interface ens33 # 修改为实际网卡名 virtual_router_id 51 
# VRRP 路由 ID实例,每个实例是唯一的 priority 100 # 优先级,备服务器设置 90 advert_int 1 # 指定VRRP 心跳包通告间隔时间,默认1秒 authentication { auth_type PASS auth_pass 1111 } # 虚拟IP virtual_ipaddress { 192.168.0.199/24 } track_script { check_nginx } } 在两个控制节点执行以下操作 [root@k8smaster1 ]# chmod +x /etc/keepalived/check_nginx.sh [root@k8smaster1 ]# systemctl daemon-reload [root@k8smaster1 ]# systemctl start nginx [root@k8smaster1 ]# systemctl start keepalived [root@k8smaster1 ]# systemctl enable nginx keepalived [root@k8smaster1 ]# systemctl status keepalived 检查VIP是否绑定成功,通过在主节点执行ip addr 可以看到VIP192.168.0.199绑定成功 停掉k8smaster1上的keepalived,VIP会漂移到k8smaster2 ] [root@k8smaster1 keepalived]# service keepalived stop Redirecting to /bin/systemctl stop keepalived.service [root@k8smaster2 ]#ip addr #启动k8smaster1 上的keepalived,VIP又漂移回来了 [root@k8smaster1 ]# systemctl daemon-reload [root@k8smaster1 ]# systemctl start keepalived [root@k8smaster1 ]# ip addr [root@k8smaster1 ]# mkdir -p /opt/k8s [root@k8smaster1 ]# vim /opt/k8s/kubeadm-config.yaml apiVersion: kubeadm.k8s.io/v1beta2 kind: ClusterConfiguration kubernetesVersion: v1.20.6 controlPlaneEndpoint: 192.168.0.199:16443 imageRepository: registry.aliyuncs.com/google_containers apiServer: certSANs: - 192.168.0.180 - 192.168.0.181 - 192.168.0.182 - 192.168.0.199 networking: podSubnet: 10.244.0.0/16 serviceSubnet: 10.10.0.0/16 --- apiVersion: kubeproxy.config.k8s.io/v1alpha1 kind: KubeProxyConfiguration mode: ipvs 把初始化k8s集群需要的离线镜像包k8simage-1-20-6.tar.gz 上传到k8smaster1 、k8smaster2 、k8swork1机器上,手动解压: [root@k8smaster1 k8s]# docker load -i k8simage-1-20-6.tar.gz 使用kubeadm初始化k8s集群 [root@k8smaster1 k8s]# kubeadm init --config kubeadm-config.yaml --ignore-preflight-errors=SystemVerification 初始化成功了 #配置kubectl的配置文件config,相当于对kubectl进行授权,这样kubectl命令可以使用这个证书对k8s集群进行管理 root@k8smaster1 k8s]# mkdir -p $HOME/.kube [root@k8smaster1 k8s]# sudo cp -i /etc/kubernetes/admin.conf $HOME/.kube/config [root@k8smaster1 k8s]# sudo chown $(id -u):$(id -g) $HOME/.kube/config [root@k8smaster1 k8s]# 
kubectl get nodes NAME STATUS ROLES AGE VERSION k8smaster1 NotReady control-plane,master 15m v1.20.6 备注:此时集群状态还是NotReady状态,因为没有安装网络插件。 在k8smaster2创建证书存放目录 [root@k8smaster2 k8s]# cd /root && mkdir -p /etc/kubernetes/pki/etcd &&mkdir -p ~/.kube/ 把k8smaster1节点的证书拷贝到k8smaster2上 [root@k8smaster1 k8s]# scp /etc/kubernetes/pki/ca.crt k8smaster2:/etc/kubernetes/pki/ca.crt [root@k8smaster1 k8s]# scp /etc/kubernetes/pki/ca.key k8smaster2:/etc/kubernetes/pki/ca.key [root@k8smaster1 k8s]# scp /etc/kubernetes/pki/sa.key k8smaster2:/etc/kubernetes/pki/sa.key [root@k8smaster1 k8s]# scp /etc/kubernetes/pki/sa.pub k8smaster2:/etc/kubernetes/pki/sa.pub [root@k8smaster1 k8s]# scp /etc/kubernetes/pki/front-proxy-ca.crt k8smaster2:/etc/kubernetes/pki/front-proxy-ca.crt [root@k8smaster1 k8s]# scp /etc/kubernetes/pki/front-proxy-ca.key k8smaster2:/etc/kubernetes/pki/front-proxy-ca.key [root@k8smaster1 k8s]# scp /etc/kubernetes/pki/etcd/ca.crt k8smaster2:/etc/kubernetes/pki/etcd/ca.crt [root@k8smaster1 k8s]# scp /etc/kubernetes/pki/etcd/ca.key k8smaster2:/etc/kubernetes/pki/etcd/ca.key 在k8smaster1 上查看加入节点的命令 [root@k8smaster1 k8s]# kubeadm token create --print-join-command kubeadm join 192.168.0.199:16443 --token iblwwk.9pry17r07m5a2ao8 --discovery-token-ca-cert-hash sha256:1803ea5998536fc543deaf63350d111a8b4b26c6a2a92aa623a59bbf864a0967 在k8smaster2上执行加入集群的命令, 备注:控制节点需要--control-plane 参数,工作节点不需要 [root@k8smaster2 ~]# kubeadm join 192.168.0.199:16443 --token lolkrd.lk2dzih4w72ibcbh --discovery-token-ca-cert-hash sha256:1803ea5998536fc543deaf63350d111a8b4b26c6a2a92aa623a59bbf864a0967 --control-plane --ignore-preflight-errors=SystemVerification [root@k8smaster1 k8s]# kubectl get nodes NAME STATUS ROLES AGE VERSION k8smaster1 NotReady control-plane,master 44m v1.20.6 k8smaster2 NotReady control-plane,master 3m55s v1.20.6 可以看到k8smaster2已经加入到集群了. 
在k8smaster1 上查看加入节点的命令: [root@k8smaster1 k8s]# kubeadm token create --print-join-command kubeadm join 192.168.0.199:16443 --token hcxe47.mz9htyoya2rowwh0 --discovery-token-ca-cert-hash sha256:1803ea5998536fc543deaf63350d111a8b4b26c6a2a92aa623a59bbf864a0967 在k8swork1上执行 [root@k8swork1 ~]#kubeadm join 192.168.0.199:16443 --token hcxe47.mz9htyoya2rowwh0 --discovery-token-ca-cert-hash sha256:1803ea5998536fc543deaf63350d111a8b4b26c6a2a92aa623a59bbf864a0967 查看集群,现在是2个控制节点和1个工作节点 [root@k8smaster1 k8s]# kubectl get nodes NAME STATUS ROLES AGE VERSION k8smaster1 NotReady control-plane,master 111m v1.20.6 k8smaster2 NotReady control-plane,master 71m v1.20.6 k8swork1 NotReady 参照1.准备工作 2.安装软件中涉及工作节点的安装步骤,对k8swork2的基础环境进行部署准备工作,最后在k8swork2上执行以下加入命令 备注:需在控制节点提前通过kubeadm token create --print-join-command获取最新的加入命令 [root@k8swork2 ~]# kubeadm join 192.168.0.199:16443 --token 5vhx42.faf58lb9v1vkusrj --discovery-token-ca-cert-hash sha256:1803ea5998536fc543deaf63350d111a8b4b26c6a2a92aa623a59bbf864a0967 显示输出: [preflight] Running pre-flight checks [WARNING SystemVerification]: this Docker version is not on the list of validated versions: 20.10.6. Latest validated version: 19.03 [preflight] Reading configuration from the cluster... [preflight] FYI: You can look at this config file with 'kubectl -n kube-system get cm kubeadm-config -o yaml' [kubelet-start] Writing kubelet configuration to file "/var/lib/kubelet/config.yaml" [kubelet-start] Writing kubelet environment file with flags to file "/var/lib/kubelet/kubeadm-flags.env" [kubelet-start] Starting the kubelet [kubelet-start] Waiting for the kubelet to perform the TLS Bootstrap... This node has joined the cluster: * Certificate signing request was sent to apiserver and a response was received. * The Kubelet was informed of the new secure connection details. Run 'kubectl get nodes' on the control-plane to see this node join the cluster. 
通过下图可以查看当前集群为2个控制节点和2个工作节点 可以看到工作节点的ROLES角色为空, 把工作节点的ROLES变成work,按照如下方法: [root@k8smaster1 k8s]# kubectl label node k8swork1 node-role.kubernetes.io/worker=worker [root@k8smaster1 k8s]# kubectl label node k8swork2 node-role.kubernetes.io/worker=worker 没有安装网络组件集群,集群各节点都是NoReady状态,还有coredns也是pengding状态,这个时候集群是不能使用的。这是因为还没有安装网络插件,等到下面安装好网络插件之后这个cordns就会变成running了 如下图,没有安装网络组件的pod状态: [root@k8smaster1 k8s]# kubectl get pods -n kube-system 从官网下载配置文件,上传到服务器 /opt/k8s/calico.yaml 注:在线下载配置文件地址是: https://docs.projectcalico.org/manifests/calico.yaml [root@k8smaster1 k8s]# cd /opt/k8s/ [root@k8smaster1 k8s]# kubectl apply -f calico.yaml 查看集群状态,各节点已经是Ready状态 [root@k8smaster1 k8s]# kubectl get nodes 查看pod状态,coredns也变成running了 [root@k8smaster1 k8s]# kubectl get pods -n kube-system 上传并导入busybox [root@k8smaster1 k8s]# docker load -i busybox-1-28.tar.gz #注意: busybox要用指定的1.28版本,不能用最新版本,最新版本,nslookup会解析不到dns和ip [root@k8smaster1 k8s]# kubectl run busybox --image busybox:1.28 --restart=Never --rm -it busybox -- sh If you don't see a command prompt, try pressing enter. / # ping www.baidu.com PING www.baidu.com (112.80.248.75): 56 data bytes 64 bytes from 112.80.248.75: seq=0 ttl=127 time=10.481 ms 64 bytes from 112.80.248.75: seq=1 ttl=127 time=10.153 ms 64 bytes from 112.80.248.75: seq=2 ttl=127 time=10.433 ms 64 bytes from 112.80.248.75: seq=3 ttl=127 time=10.164 ms --- www.baidu.com ping statistics --- 4 packets transmitted, 4 packets received, 0% packet loss round-trip min/avg/max = 10.153/10.307/10.481 ms / # [root@k8smaster2 k8s]# kubectl run busybox --image busybox:1.28 --restart=Never --rm -it busybox -- sh If you don't see a command prompt, try pressing enter. 
/ # nslookup kubernetes.default.svc.cluster.local Server: 10.10.0.10 Address 1: 10.10.0.10 kube-dns.kube-system.svc.cluster.local Name: kubernetes.default.svc.cluster.local Address 1: 10.10.0.1 kubernetes.default.svc.cluster.local / # openssl x509 -in /etc/kubernetes/pki/ca.crt -noout -text |grep Not 显示如下,通过下面可看到ca证书有效期是10年,从2022到2032年: [root@k8smaster1 k8s]# openssl x509 -in /etc/kubernetes/pki/ca.crt -noout -text |grep Not Not Before: Oct 15 08:22:44 2022 GMT Not After : Oct 12 08:22:44 2032 GMT [root@k8smaster1 k8s]# openssl x509 -in /etc/kubernetes/pki/apiserver.crt -noout -text |grep Not 显示如下,通过下面可看到apiserver证书有效期是1年,从2022到2023年: Not Before: Oct 15 08:22:44 2022 GMT Not After : Oct 15 08:22:44 2023 GMT 把update-kubeadm-cert.sh文件上传到控制节点,分别执行如下: 1)给update-kubeadm-cert.sh证书授权可执行权限 [root@k8smaster1 k8s]#chmod +x update-kubeadm-cert.sh 2)执行下面命令,修改证书过期时间,把时间延长到10年 [root@k8smaster1 k8s]#./update-kubeadm-cert.sh all 3)给update-kubeadm-cert.sh证书授权可执行权限 [root@k8smaster2 k8s]#cchmod +x update-kubeadm-cert.sh 4)执行下面命令,修改证书过期时间,把时间延长到10年 [root@k8smaster2 k8s]#c./update-kubeadm-cert.sh all 其中一控制节点,截图如下: 在k8smaster1节点查询Pod是否正常,能查询出数据说明证书签发完成 kubectl get pods -n kube-system 显示如下,能够看到pod信息,说明证书签发正常: 验证证书有效时间是否延长到10年 [root@k8smaster1 k8s]# openssl x509 -in /etc/kubernetes/pki/ca.crt -noout -text |grep Not [root@k8smaster1 k8s]# openssl x509 -in /etc/kubernetes/pki/apiserver.crt -noout -text |grep Not 1.8关闭防火墙

1.9 准备安装源

1.9.1备份repo

1.9.2 配置国内源

1.10 时间同步

1.11 开启IPVS

2.安装软件

2.1安装基础软件包

2.2安装docker-ce

2.3 配置docker镜像加速器和驱动

2.4 安装k8s

2.5 安装nginx+keepalive

2.5.1 nginx

![]()

2.5.2 keepalive

global_defs {2.5.3启动服务

2.5.4测试keepalived

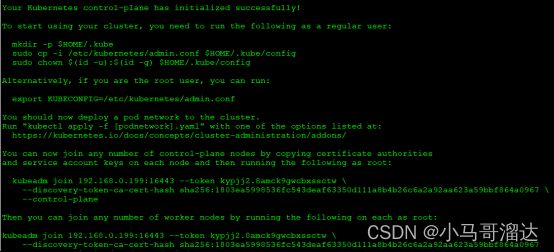

3.3集群初始化

3.4 kubectl授权

4.1 同步证书

4.2 加入集群

4.3 查看集群

5.1 获取加入集群的命令

5.2 加入集群

5.3 查看集群状态

5.4 扩容工作节点

5.5 扩容验证

5.6 更改工作节点标识

6.2应用生效

6.3查看集群状态

7.2在控制节点启动Pod

7.3 验证网络连通性

7.4 测试coredns是否正常

7.5 延长k8s证书

7.5.1查看证书有效时间:

7.5.2延长证书过期时间

7.5.3 证书验证
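The openssl checks above have to be read by eye. A minimal sketch that turns a certificate's notAfter date into the number of days left; the helper names `days_until` and `cert_days_left` are hypothetical, and the sketch assumes GNU date (as on CentOS 7) to parse dates such as `Oct 15 08:22:44 2023 GMT`:

```bash
#!/usr/bin/env bash
# days_until DATE
# Prints the whole number of days from now until DATE (any format that
# GNU "date -d" accepts). Bash integer division truncates toward zero, so the
# result turns negative once DATE is more than a day in the past.
days_until() {
    local end now
    end=$(date -d "$1" +%s) || return 1
    now=$(date +%s)
    echo $(( (end - now) / 86400 ))
}

# cert_days_left CERT
# Reads the notAfter date of a PEM certificate with openssl
# ("notAfter=Oct 15 08:22:44 2023 GMT") and prints the days remaining.
cert_days_left() {
    local not_after
    not_after=$(openssl x509 -in "$1" -noout -enddate) || return 1
    days_until "${not_after#notAfter=}"
}
```

For example, `for crt in /etc/kubernetes/pki/*.crt; do echo "$crt: $(cert_days_left "$crt") days"; done` lists every certificate at once; after update-kubeadm-cert.sh, apiserver.crt should show close to ten years (about 3650 days) instead of one. On kubeadm 1.20, `kubeadm certs check-expiration` gives a similar summary for the certificates kubeadm manages.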