Deep Learning for Remote Sensing Data

Deep Learning for Remote Sensing Data_A technical tutorial on the state of the art

- 一,Abstract

- 二,ADVANTAGES OF REMOTE SENSING METHODS遥感方法的优点

-

- 注释2:Handcrafted features

- 注释1:discriminability and robustness

- 注释3:unitary transformation

- 三,THE GENERAL FRAMEWORK 总体框架

-

- 注释1:pan sharpening,全色锐化/全色波段融合panchromatic (PAN) images全色(PAN)图像

- 注释2:object proposals

- 注释3:shallow module such as an autoencoder (AE) or a sparse coding algorithm 浅模块,例如:自动编码器(AE)或稀疏编码算法

- 四,BASIC ALGORITHMS IN DEEP LEARNING深度学习的基本算法

-

- 注释1:restricted Boltzmann machines (RBMs)

- 注释2:机器学习中DL算法的更多详细信息可以在[14]和[44]中找到

- 1,Convolutional neural networks

-

- 1.1CONVOLUTIONAL LAYER卷积层

- 1.2NONLINEARITY LAYER非线性层

- 1.3POOLING LAYER池化层/采样层

- 1.4 auto encoders(AE)自动编码器

- 1.5 restricted boltzmann machines受限玻尔兹曼机

- 1.6 sparse coding稀疏编码

- 2,DEEP LEARNING FOR REMOTE SENSING DATA

-

- 2.1 REMOTE SENSING IMAGE PREPROCESSING

-

- 2.1.1 RESTORATION AND DENOISING 修复和去噪

- 2.1.2 PAN SHARPENING 全色波段融合/全色锐化

-

- 解释 PAN SHARPENING 全色锐化

- 2.2 PIXEL-BASED CLASSIFICATION

-

- 2.2.1 SPECTRAL FEATURE CLASSIFICATION光谱特征分类

- 2.2.2 CLASSIFICATION WITH SPATIAL INFORMATION空间信息分类

- 2.3 TARGET RECOGNITION

-

- 2.3.1 GENERAL DEEP-LEARNING FRAMEWORK OF REMOTE SENSING TARGET RECOGNITION 遥感目标识别的一般深度学习框架

- 2.3.2 SAMPLE SELECTION PROPOSALS样本选择的建议

- 2.3.3 LOW-TO MIDDLE-LEVEL FEATURE LEARNING低级到中级的特征学习

- 2.3.4 TRAINING THE DEEP-LEARNING NETWORKS

- 2.3.5 SUPERVISED METHODS

- 2.3.6 UNSUPERVISED METHODS

- 2.4 SCENE UNDERSTANDING

- 2.4.1 UNSUPERVISED HIERARCHICAL FEATURE-LEARNING-BASED METHODS

-

- 2.4.2 PATCH EXTRACTION

- 2.4.3 FEATURE EXTRACTION

- 2.4.4 FEATURE REPRESENTATION

- 2.4.5 CLASSIFICATION

- 2.4.6 SUPERVISED HIERARCHICAL FEARTURE-LEARNING-BASED METHODS

- 五,EXPERIMENTS AND ANALYSIS

- 六,CONCLUSIONS AND FUTURE WORK

文章来源:https://ieeexplore.ieee.org/abstract/document/7486259

Advances in Machine Learning for Remote Sensing and Geosciences

一,Abstract

Deep-learning (DL) algorithms, which learn the representative and discriminative features in a hierarchical manner from the data, have recently become a hotspot in the machine-learning area and have been introduced into the geoscience and remote sensing (RS) community for RS big data analysis.深度学习(deep learning, DL)算法从数据中分层学习代表性特征和区分性特征,是近年来机器学习领域的一个研究热点,已被引入地球科学和遥感领域,用于遥感大数据分析。Considering the low-level features (e.g., spectral and texture) as the bottom level, the output feature representation from the top level of the network can be directly fed into a subsequent classifier for pixel-based classification。将底层特征(如光谱和纹理)作为底层,网络顶层的输出特征表示可以直接被送入后续的基于像素的分类器中进行分类。As a matter of fact, by carefully addressing the practical demands in RS applications and designing the input–output levels of the whole network, we have found that DL is actually everywhere in RS data analysis: from the traditional topics of image preprocessing, pixel-based classification, and target recognition, to the recent challenging tasks of high-level semantic feature extraction and RS scene understanding. 事实上,通过仔细处理RS应用的实际需求和设计整个网络的输入—输出水平,我们发现DL实际上在RS数据分析中无处不在:从图像预处理的传统主题,基于像素的分类、目标识别,到最近的有挑战性的任务:高水平语义特征提取和RS场景理解。

In this technical tutorial, a general framework of DL for RS data is provided, and the state-of-the-art DL methods in RS are regarded as special cases of input–output data combined with various deep networks and tuning tricks. Although extensive experimental results confirm the excellent performance of the DL-based algorithms in RS big data analysis, even more exciting prospects can be expected for DL in RS. Key bottlenecks and potential directions are also indicated in this article, guiding further research into DL for RS data.在本技术教程中,给出了用于RS数据的DL通用框架,并且RS中最先进的DL方法被认为是输入—输出数据与各种深度网络和调优技巧相结合的特殊情况。尽管大量的实验结果证实了基于dl的算法在RS大数据分析中的优异性能,但是还可以期待DL在RS领域会有更令人兴奋的前景。本文还指出了关键瓶颈和潜在发展方向,为进一步开展DL在RS数据中的研究提供指导。

二,ADVANTAGES OF REMOTE SENSING METHODS遥感方法的优点

RS techniques have opened a door to helping people widen their ability to understand the earth. In fact, RS techniques are becoming more and more important in data-collection tasks. RS技术在数据采集中发挥着越来越重要的作用. Information technology companies depend on RS to update their location-based services. 信息技术公司依靠RS来更新他们的基于位置的服务. Google Earth employs high-resolution (HR) RS images to provide vivid pictures of the earth’s surface. 谷歌地球采用高分辨率(HR) RS图像,提供生动的地球表面图片。 Governments have also utilized RS for a variety of public services, from weather reporting to traffic monitoring. 政府还将RS用于各种公共服务,从天气报告到交通监控。 Nowadays, one cannot imagine a life without RS. Recent years have even witnessed a boom in RS satellites,

providing for the first time an extremely large number of geographical images of nearly every corner of the earth’s surface.近年来,RS卫星发展迅猛,

首次提供了大量地球表面几乎每个角落的地理图像. Data warehouses of RS images are increasing daily, including images with different spectral and spatial resolutions. 遥感影像数据仓库每天都在增加,包括不同光谱分辨率和空间分辨率的影像。

How can we extract valuable information from the various kinds of RS data? How should we deal with the ever-increasing data types and volume? 如何从各种遥感数据中提取有价值的信息?我们应该如何处理不断增长的数据类型和容量? The traditional approaches exploit features from RS images with which information-extraction models can be constructed. 传统的方法利用遥感图像的特征,利用这些特征可以构建信息提取模型. Handcrafted features have proved effective and can represent a variety of spectral, textural, and geometrical attributes of the images. However, since these features cannot easily consider the details of real data, it is impossible for them to achieve an optimal balance between discriminability and robustness.手工制作的特征已被证明是有效的,可以代表图像的各种光谱、纹理和几何属性. 然而,由于这些特征不能很容易地考虑真实数据的细节,它们无法在可区分性和鲁棒性之间达到最优平衡。When facing the big data of RS images, the situation is even worse, since the imaging circumstances vary so greatly that images can change a lot in a short interval. Thanks to DL theory , which provides an alternative way to automatically learn fruitful features from the training set, unsupervised feature learning from very large raw-image data sets has become possible. Actually, DL has proven to be a new and exciting tool that could be the next trend in the development of RS image processing. 当面对遥感图像大数据时,情况更是如此,因为成像环境变化很大,图像在短时间内可以发生很大的变化。DL理论提供了一种从训练集自动学习丰富特性的替代方法,DL理论使得从非常大的原始图像数据集进行无监督特征学习成为可能。Actually, DL has proven to be a new and exciting tool that could be the next trend in the development of RS image processing. 事实上,DL已经被证明是一种新的和令人兴奋的工具,它可能是RS图像处理的下一个发展趋势。

注释2:Handcrafted features

注释1:discriminability and robustness

可区分性和鲁棒性

robustness——鲁棒性;稳健性;健壮性

RS images, despite the spectral and spatial resolution, are reflections of the land surface , with an important property being their ability to record multiple-scale information within an area. 尽管遥感图像具有光谱和空间分辨率,但它是地表的反射,其重要的特性是能够记录一个区域内的多尺度信息. According to the type of information that is desired, pixel-based, object-based, or structure-based features can be extracted. 根据所需的信息类型,可以提取基于像素的、基于对象的或基于结构的特征. However, an effective and universal approach has not yet been reported to optimally fuse these features, due to the subtle relationships between the data. In contrast, DL can represent and organize multiple levels of information to express complex relationships between data. 然而,由于数据之间的微妙关系,目前还没有一种有效的、通用的方法来最佳地融合这些特征. 相反,DL可以表示和组织多层次的信息来表达数据之间的复杂关系. In fact, DL techniques can map different levels of abstractions from the images and combine them from low level to high level. 事实上,DL技术可以从图像中映射不同层次的抽象,并将它们从低层次组合到高层次。 Consider scene recognition as an example, where, with the help of DL, the scenes can be represented as a unitary transformation by exploiting the variations in the local spatial arrangements and structural patterns captured by the low-level features, where no segmentation stage or individual object extraction stage is needed. 以场景识别为例,在DL的帮助下,利用底层特征捕捉到的局部空间布局和结构模式的变化,将场景表示为一个酉变换,在这里不需要分割阶段或单独的对象提取阶段。

注释3:unitary transformation

Despite its great potential, DL cannot be directly used in many RS tasks, with one obstacle being the large numbers of bands. 尽管DL具有巨大的潜力,但它不能直接用于许多RS任务,一个障碍是波段的数量太多。Some RS images, especially hyperspectral ones, contain hundreds of bands that can cause a small patch to be a really large data cube, which corresponds to a large number of neurons in a pretrained network .一些遥感图像,尤其是高光谱图像,包含数百个波段,可以使一个小斑块变成一个非常大的数据立方体,这对应着一个预先训练好的网络中的大量神经元。 In addition to the visual geometrical patterns within each band, the spectral curve vectors across bands are also important information. 除了每个波段内的视觉几何模式外,跨波段的光谱曲线向量也是重要的信息。 However, how to utilize this information still requires

further research. Problems still exist in the high-spatial-resolution RS images, which have only green, red, and blue channels, the same as the benchmark data sets for DL. In practice, very few labeled samples are available, which may make a pretrained network difficult to construct. Furthermore, images acquired by different sensors present large differences. How to transfer the pretrained network to other images is still unknown. 然而,如何利用这些信息仍然需要进一步的研究。与DL的基准数据集一样,高空间分辨率遥感图像只有绿、红、蓝三通道,但是高空间分辨率遥感图像中仍然存在问题。在实践中,可用的标签样本很少,这可能使预先训练好的网络难以构建。另外,不同传感器获取的图像差异较大。如何将预先训练好的网络迁移到其他图像上仍然是未知的。

In this article, we survey the recent developments in DL for the RS field and provide a technique tutorial on the design of DL-based methods for optical RS data. Although there are also several advanced techniques for DL for synthetic aperture radar images and light detection and ranging (LiDAR) point clouds data , they share the

similar basic DL ideas of the data analysis model. 在这篇文章中,我们调查了遥感领域DL的最新发展,并提供了一个关于设计处理光学遥感数据的DL方法的技术教程。尽管也有一些用于合成孔径雷达图像和光探测和测距(LiDAR)点云数据的DL先进技术,但它们的数据分析模型的基本DL思想类似。

三,THE GENERAL FRAMEWORK 总体框架

Despite the complex hierarchical structures, all of the DL-based methods can be fused into a general framework. 尽管层次结构复杂,但所有基于DL的方法都可以融合为一个通用框架.Figure 1 illustrates a general framework of DL for RS data analysis. 图1展示了用于RS数据分析的DL的一般框架. The flowchart includes three main components, the prepared input data, the core deep networks, and the expected output data. 流程图包括三个主要部分,准备好的输入数据、核心深度网络和预期的输出数据. In practice, the input output data pairs are dependent on the particular application. 在实践中,输入输出数据对 依赖于特定的应用.For example, for RS image pan sharpening, they are the HR and low-resolution (LR) image patches from the panchromatic (PAN) images; for pixel-based classification, they are the spectral–spatial features and their feature representations (unsupervised version) or label information (supervised version) ; while, for tasks of target recognition and scene understanding , the inputs are the features extracted from the object proposals, as well as the raw pixel digital numbers from the HR images and RS image databases respectively, and the output data are always the same as in the application of pixel-based classification, as described previously. 例如,对于RS图像的pan锐化,它们是来自全色(pan)图像的高分辨率(HR)和低分辨率(LR)图像斑块;对于基于像素的分类,它们是光谱—空间特征及其特征表示(无监督版本)或标签信息(监督版本);而对于目标识别和场景理解,输入分别为从目标建议中提取的特征,以及从HR图像和RS图像数据库中提取的原始像素数字,并且如前所述,输出数据始终是与在应用基于像素的分类时是相同的。

注释1:pan sharpening,全色锐化/全色波段融合panchromatic (PAN) images全色(PAN)图像

注释2:object proposals

When the input–output data pairs have been properly defined, the intrinsic and natural relationship between the input and output data is then constructed by a deep architecture composed of multiple levels of nonlinear operations, where each level is modeled by a shallow module such as an autoencoder (AE) or a sparse coding algorithm. 当输入—输出数据对已被正确定义,输入和输出数据之间的内在和自然关系由多层非线性操作组成的深层体系结构构成,其中每一层都是由一个浅层模块(如自动编码器(AE)或稀疏编码算法)建模的。由深架构组成的多级非线性操作,在每个级别由浅建模模块如autoencoder (AE)或稀疏编码算法。It should be noted that, if a sufficient training sample set is available, such a deep network turns out to be a supervised approach. 需要指出的是,如果有足够的训练样本集,那么这种深度网络是一种有监督的方法。 It can be further fine-tuned by the use of the label information, and the top-layer output of the network is the label information rather than the abstract feature representation learned by an unsupervised deep network. 它可以利用标签信息进行进一步的微调,网络的顶层输出是标签信息,而不是无监督深度网络学习的抽象特征表示。When the core deep network has been well trained, it can be employed to predict the expected output data of a given test sample. 当核心深度网络经过良好的训练后,就可以用来预测给定测试样本的预期输出数据。Along with the general framework in Figure 1, we describe a few basic algorithms in the deep networkconstruction tutorial in the following section, and we then review the representative techniques in DL for RS data analysis from four perspectives: 1) RS image preprocessing, 2) pixel-based classification, 3) target recognition, and 4) scene understanding. 除了图1中的一般框架外,我们将在下一节的深度网络构建教程中描述一些基本算法,然后从四个角度回顾RS数据分析中的DL代表性技术:1)RS图像预处理,2)基于像素分类,3)目标识别,4)场景理解

注释3:shallow module such as an autoencoder (AE) or a sparse coding algorithm 浅模块,例如:自动编码器(AE)或稀疏编码算法

四,BASIC ALGORITHMS IN DEEP LEARNING深度学习的基本算法

In recent years, the various DL architectures have thrived and have been applied in fields such as audio recognition, natural language processing, and many classification tasks, where they have usually outperformed the traditional methods. M近年来,各种DL体系结构蓬勃发展,并已应用于音频识别、自然语言处理和许多分类任务等领域,在这些领域它们的性能通常优于传统的方法. The motivation for such an idea is inspired by the fact that the mammal brain is organized in a deep architecture, with a given input percept represented at multiple levels of abstraction, for the primate visual system in particular. 产生这种想法(DL体系)的动机是由于哺乳动物的大脑是在一个深层结构中组织起来的,给定的输入知觉在多个抽象层次上表示,尤其是灵长类动物的视觉系统。Inspired by the architectural depth of the human brain, DL researchers have developed novel deep architectures as an alternative to shallow architectures. 受人类大脑架构深度的启发,DL研究人员开发了新颖的深层架构,作为浅层架构的替代方案。Deep belief networks (DBNs) are a major breakthrough in DL research and train one layer at a time in an unsupervised manner by restricted Boltzmann machines (RBMs) . 深度信念网络(DBNs)是DL研究的一个重大突破,它采用限制玻尔兹曼机器(RBMs)进行无监督的逐层训练。A short while later, a number of AE-based algorithms

were proposed that also train the intermediate levels of representation locally at each level (i.e., the AE and its variants, such as the sparse AE and the denoising AE). 不久之后,提出了一些基于自动编码器(AE)的算法,可以在每一层(即声发射及其变体,如稀疏声发射和去噪声发射)局部地训练中间层(自动编码器及其变体,如稀疏自动编码器和去噪自动编码器)。Unlike AEs, the sparse coding algorithms generate sparse representations from the data themselves from a different perspective by learning an overcomplete dictionary via self-decomposition. 与自动编码器不同的是,稀疏编码算法通过自分解来学习一个超完整的字典,借此从不同的角度从数据本身生成稀疏表示。In addition, as the most representative supervised DL model, convolutional neural networks (CNNs) have outperformed most algorithms in visual recognition. 并且,作为最具代表性的监督DL模型,卷积神经网络(CNNs)在视觉识别中的表现优于大多数算法。The deep structure of CNNs allows the model to learn highly abstract feature detectors and to map the input features into representations that can clearly boost the performance of the subsequent classifiers. CNNs的深层结构使得模型可以学习高度抽象的特征检测器,并将输入的特征映射成表征,可以明显提升后续分类器的性能。Furthermore, there are many optional techniques that can be used to train the DL architecture shown in Figure 1.此外,还有许多可选的技术可以用来训练图1所示的DL架构。In this review, we only provide a brief introduction to the following four typical models that have already been used in the RS community and can be embedded into the general framework to achieve the particular application. 在这篇综述中,我们只对以下四种典型模型进行简单的介绍,这些模型已经在RS界使用并且可以嵌入到通用框架中实现特定的应用。More detailed information regarding the DL algorithms in the machine-learning community can be found in [14] and [44]. 关于机器学习社区中DL算法的更多详细信息可以在[14]和[44]中找到。

注释1:restricted Boltzmann machines (RBMs)

注释2:机器学习中DL算法的更多详细信息可以在[14]和[44]中找到

论文: [14] Y. Bengio, A. Courville, and P. Vincent, “Representation learn-

ing: A review and new perspectives,” IEEE Trans. Pattern Anal.

Mach. Intell., vol. 35, no. 8, pp. 1798–1828, 2013.

[44] Y. Bengio, “Learning deep architectures for AI,” Found. Trends

Mach. Learning, vol. 2, no. 1, pp. 1–127, 2009.

1,Convolutional neural networks

The CNN is a trainable multilayer architecture composed of multiple feature-extraction stages. Each stage consists of three layers: 1) a convolutional layer, 2) a nonlinearity layer, and 3) a pooling layer. CNN是一个可训练的多层架构,由多个特征提取阶段组成。The architecture of a CNN is designed to take advantage of the two-dimensional structure of the input image.CNN的架构被设计可以利用输入图像的二维结构。A typical CNN is composed of one, two, or three such feature-extraction stages, followed by one or more traditional, fully connected layers and a final classifier layer. Each layer type is described in the following sections.一个典型的CNN是由一个、两个或三个这样的特征提取阶段组成,然后是一个或多个传统的、全连接层和一个最后的分类层。每种层类型在下面的章节中进行描述。

1.1CONVOLUTIONAL LAYER卷积层

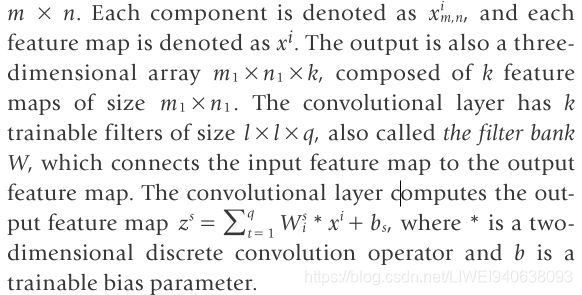

The input to the convolutional layer is a three-dimensional array with r two-dimensional feature maps of size m # n. 卷积层的输入是一个三维数组(r个尺寸为m#n的二维特征图)。

每个分量表示为![]() 每个特征图表示为xi。输出也是一个三维数组mi×ni×k,由k个尺寸为mi×ni的特征图组成。卷积层有k个尺寸为L×L×q的可训练的滤波器,也被称为滤波器组

每个特征图表示为xi。输出也是一个三维数组mi×ni×k,由k个尺寸为mi×ni的特征图组成。卷积层有k个尺寸为L×L×q的可训练的滤波器,也被称为滤波器组

W(滤波器组将输入特征图连接到输出特征图)。卷积层计算输出特征图![]() ,在这里 * 是一种二维离散卷积算子并且b是可训练的偏差参数。

,在这里 * 是一种二维离散卷积算子并且b是可训练的偏差参数。

1.2NONLINEARITY LAYER非线性层

In the traditional CNN, this layer simply consists of a pointwise nonlinearity function applied to each component in a feature map. The nonlinearity layer computes the output feature map ![]() , as

, as ![]() is commonly chosen to be a rectified linear unit (ReLU)

is commonly chosen to be a rectified linear unit (ReLU) ![]() .

.

在传统的CNN中,这一层仅仅由一个点态(逐点的)非线性函数组成,点态(逐点的)非线性函数应用于特征图中的每个组件。非线性层计算输出特征图![]() ,通常

,通常![]() 被选择为修正线性单元(ReLU)

被选择为修正线性单元(ReLU)![]() 。

。

1.3POOLING LAYER池化层/采样层

The pooling layer involves executing a max operation over the activations within a small spatial region G of each feature map:![]() 池化层涉及在每个特征图的一个小空间区域G内对激活执行一个最大化操作:

池化层涉及在每个特征图的一个小空间区域G内对激活执行一个最大化操作:![]() 。To be more precise, the pooling layer can be thought of as consisting of a grid of pooling units spaced s pixels apart, each summarizing a small spatial region of size p * p centered at the location of the pooling unit. 更准确地说,池化层可以被认为是由间隔s个像素的池化单元网格组成,每个网格概括了以池化单元位置为中心的尺寸为p*p的小空间区域。After the multiple feature-extraction stages, the entire network is trained with back propagation of a supervised loss function such as the classic least-squares output, and the target output y is represented as a 1–of–K vector, where K is the number of output and L is the number of layers:

。To be more precise, the pooling layer can be thought of as consisting of a grid of pooling units spaced s pixels apart, each summarizing a small spatial region of size p * p centered at the location of the pooling unit. 更准确地说,池化层可以被认为是由间隔s个像素的池化单元网格组成,每个网格概括了以池化单元位置为中心的尺寸为p*p的小空间区域。After the multiple feature-extraction stages, the entire network is trained with back propagation of a supervised loss function such as the classic least-squares output, and the target output y is represented as a 1–of–K vector, where K is the number of output and L is the number of layers: 。在经过多个特征提取阶段后,对整个网络进行监督损失函数的反向传播训练(如经典的最小二乘输出),并且目标输出y表示为1-of-K向量,其中K为输出数,L为层数:

。在经过多个特征提取阶段后,对整个网络进行监督损失函数的反向传播训练(如经典的最小二乘输出),并且目标输出y表示为1-of-K向量,其中K为输出数,L为层数: 在这里,I指层数。Our goal is to minimize

在这里,I指层数。Our goal is to minimize ![]()

as a function of ![]() .To train the CNN, we can apply stochastic gradient descent with backpropagation to optimize the function.我们的目标是最小化

.To train the CNN, we can apply stochastic gradient descent with backpropagation to optimize the function.我们的目标是最小化![]() 。为了训练CNN,我们可以使用带有反向传播的随机梯度下降来优化函数。CNNs have recently become a popular DL method and have achieved great success in large-scale visual recognition, which has become possible due to the large public

。为了训练CNN,我们可以使用带有反向传播的随机梯度下降来优化函数。CNNs have recently become a popular DL method and have achieved great success in large-scale visual recognition, which has become possible due to the large public

image repositories, such as ImageNet. CNN最近成为一种流行的DL方法,并在大规模的视觉识别中取得了巨大的成功,(这得益于大量的公共图像存储库,如ImageNet)。In the RS community, there are also some recent works on CNN-based RS image pixel classification, target recognition, and scene understanding.在RS界,最近也有一些基于CNN的RS图像像素分类、目标识别和场景理解的工作。

1.4 auto encoders(AE)自动编码器

An AE is a symmetrical neural network that is used to learn the features from a data set in an unsupervised manner by minimizing the reconstruction error between the input data at the encoding layer and its reconstruction at the decoding layer. AE是一种对称的神经网络,它通过最小化编码层输入数据和其在解码层重构之间的重构误差,以无监督的方式从数据集中学习特征。During the encoding step, an input vector ![]() is processed by applying a linear mapping and a nonlinear activation function to the network:

is processed by applying a linear mapping and a nonlinear activation function to the network:![]() where

where ![]() is a weight matrix with K features,

is a weight matrix with K features,![]() is the encoding bias, and g(x) is the logistic sigmoid function

is the encoding bias, and g(x) is the logistic sigmoid function ![]() We decode a vector using a separate linear decoding matrix:

We decode a vector using a separate linear decoding matrix:![]() where

where ![]() is a weight matrix and

is a weight matrix and ![]() is the decoding bias. Feature extractors in the data set are learned by minimizing the cost function, and the first term in the reconstruction is the error term. The second term is a regularization term (also called a weight decay term in a neural network):

is the decoding bias. Feature extractors in the data set are learned by minimizing the cost function, and the first term in the reconstruction is the error term. The second term is a regularization term (also called a weight decay term in a neural network):![]() where X and Z are the training and reconstructed data, respectively. 在编码步骤中,通过对网络

where X and Z are the training and reconstructed data, respectively. 在编码步骤中,通过对网络![]()

应用线性映射和非线性激活函数来处理输入向量![]() 。在这里,

。在这里,![]() 是一个具有K个特征的权值矩阵,

是一个具有K个特征的权值矩阵,![]() 是解码偏差。数据集中的特征提取器是通过最小化代价函数来学习的,重构中的第一项是误差项。第二项是正则化项(在神经网络中也称为权重衰减项):

是解码偏差。数据集中的特征提取器是通过最小化代价函数来学习的,重构中的第一项是误差项。第二项是正则化项(在神经网络中也称为权重衰减项):![]() 。其中,X和Z分别为训练数据和重构数据。

。其中,X和Z分别为训练数据和重构数据。

We recall that a denotes the activation of hidden units in the AE. 我们回顾一下,a表示AE中隐藏单元的激活。Thus, when the network is provided with a specific input ![]() be the average activation of

be the average activation of ![]() averaged over the training set. We want to approximately enforce the constraint

averaged over the training set. We want to approximately enforce the constraint ![]() where

where ![]() is the

is the

sparsity parameter, which is typically a small value close to zero. In other words,we want the average activation of each hidden neuron ![]() to be close to zero. To satisfy this constraint, the hidden units activations must be mostly inactive and close to zero so that most of the neurons are inactive.因此,当网络被提供给一个特定的输入

to be close to zero. To satisfy this constraint, the hidden units activations must be mostly inactive and close to zero so that most of the neurons are inactive.因此,当网络被提供给一个特定的输入![]() 时,假设

时,假设![]() 为 在训练集上的

为 在训练集上的![]() 平均的平均激活量。我们想要近似地执行约束条件

平均的平均激活量。我们想要近似地执行约束条件![]() ,在这里

,在这里![]() 是稀疏性参数,通常是一个接近于零的小值。换句话说,我们要每个隐藏神经元

是稀疏性参数,通常是一个接近于零的小值。换句话说,我们要每个隐藏神经元![]() 的平均激活量近似为0。为了满足这个约束条件,隐藏单元的激活必须大部分是不活跃的,并且接近于零,所以大部分神经元是不活跃的. To achieve this, the objective in the sparse AE learning is to minimize the reconstruction error with a sparsity constraint, i.e., a sparse AE:

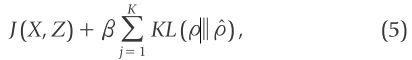

的平均激活量近似为0。为了满足这个约束条件,隐藏单元的激活必须大部分是不活跃的,并且接近于零,所以大部分神经元是不活跃的. To achieve this, the objective in the sparse AE learning is to minimize the reconstruction error with a sparsity constraint, i.e., a sparse AE:  where β is the weight of the sparsity penalty, K is the number of features in the weight matrix, and KL(.) is the Kull-back-Leibler divergence given by

where β is the weight of the sparsity penalty, K is the number of features in the weight matrix, and KL(.) is the Kull-back-Leibler divergence given by  .为了实现这一目标,稀疏AE学习中的目标是在稀疏性约束下最小化重构误差,即稀疏AE

.为了实现这一目标,稀疏AE学习中的目标是在稀疏性约束下最小化重构误差,即稀疏AE 。在这里,β 是稀疏性惩罚的权重,K是权重矩阵中的特征数。KL(.) 是Kull-back-Leibler分叉点

。在这里,β 是稀疏性惩罚的权重,K是权重矩阵中的特征数。KL(.) 是Kull-back-Leibler分叉点 。

。

This penalty function has the property that ![]() .Otherwise, it increases monotonically as

.Otherwise, it increases monotonically as ![]() diverges from ρ,which acts as the sparsity constraint. An AE can be directly employed as a feature extractor for RS data analysis [51], and it has been more frequently stacked into the AEs for DL from RS data [52]–[54]. 这个惩罚函数具有以下性质

diverges from ρ,which acts as the sparsity constraint. An AE can be directly employed as a feature extractor for RS data analysis [51], and it has been more frequently stacked into the AEs for DL from RS data [52]–[54]. 这个惩罚函数具有以下性质 ![]() 。否则,它单调地递增,因为

。否则,它单调地递增,因为![]() 偏离ρ(其作为稀疏性约束)。AE可以直接作为RS数据分析的特征提取器,并且它更多地被堆积到了RS数据的DL的AEs中。

偏离ρ(其作为稀疏性约束)。AE可以直接作为RS数据分析的特征提取器,并且它更多地被堆积到了RS数据的DL的AEs中。

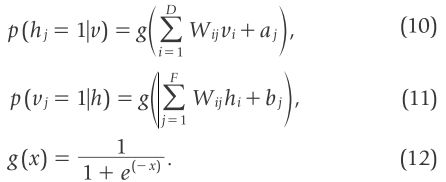

1.5 restricted boltzmann machines受限玻尔兹曼机

An RBM is commonly used as a layer-wise training model in the construction of a DBN.在构建DBN的过程中,通常使用RBM作为层间训练模型。It is a two-layer network, presenting a particular type of Markov random field with visible units ![]() and hidden units

and hidden units ![]() .它是一个两层网络,呈现的是一种特殊类型的马尔科夫随机场(可见单元v和隐藏单元h)。A joint configuration of the units has an energy given by

.它是一个两层网络,呈现的是一种特殊类型的马尔科夫随机场(可见单元v和隐藏单元h)。A joint configuration of the units has an energy given by  where

where ![]() is the weight between visible unit i and hidden unit j, and

is the weight between visible unit i and hidden unit j, and ![]() are bias terms of the visible and hidden unit, respectively. 单元的联合配置具有以下能量

are bias terms of the visible and hidden unit, respectively. 单元的联合配置具有以下能量  ,在这里

,在这里![]() 是可见单元i和隐藏单元j之间的权重,而

是可见单元i和隐藏单元j之间的权重,而![]() 分别为可见单元和隐藏单元的偏置项。

分别为可见单元和隐藏单元的偏置项。

The joint distribution over the units is defined by  where Z(θ) is the normalizing constant. 这里,Z(θ)是归一化常数。The network assigns a probability to every input vector via the energy function. 网络通过能量函数给每个输入向量分配一个概率。The probability of the training vector can be raised by adjustment to lower the energy, as given in (7).通过调整降低能量,可以提高训练向量的概率,如(7)所示。 The conditional distributions of hidden unit h and input vector v are given by the logistic function(如下). 隐藏单元h和输入向量v的条件分布由对数函数给出。

where Z(θ) is the normalizing constant. 这里,Z(θ)是归一化常数。The network assigns a probability to every input vector via the energy function. 网络通过能量函数给每个输入向量分配一个概率。The probability of the training vector can be raised by adjustment to lower the energy, as given in (7).通过调整降低能量,可以提高训练向量的概率,如(7)所示。 The conditional distributions of hidden unit h and input vector v are given by the logistic function(如下). 隐藏单元h和输入向量v的条件分布由对数函数给出。 .

.

Once the states of hidden units are chosen, the input data can be reconstructed by setting each vi to 1 with the probability in (11). 一旦选择了隐藏单元的状态,就可以按(11)中的概率将每个vi设为1来重构输入数据。The hidden units’ states are then updated to represent the features of the reconstruction. 然后更新隐藏单元的状态来代表重构的特征。The learning of W is done through a method called contrastive divergence (CD). W的学习是通过一种叫做对比散度(CD)的方法来完成的。The DBN has been applied to the RS image spatial–spectral classification and shows superior performance compared to the conventional feature dimensionality-reduction methods, such as principal component analysis (PCA), and classifiers, such as support vector machines (SVMs) [55], [29]. DBN已应用于RS图像空间光谱分类,与传统的特征维度还原方法相比,表现出优越的性能。In recent years, it has also been successfully proposed for object recognition [56] and scene classification [57].近年来,还成功提出目标识别和场景分类 。

1.6 sparse coding稀疏编码

Sparse coding is a type of unsupervised method for learning sets of overcomplete bases to represent data efficiently to find a set of basis vectors ![]() such that we can represent an input vector x as a linear combination of these basis vectors:

such that we can represent an input vector x as a linear combination of these basis vectors:  稀疏编码是一种无监督的方法,用于学习过完整的基数集来有效地表示数据,以找到一组基向量

稀疏编码是一种无监督的方法,用于学习过完整的基数集来有效地表示数据,以找到一组基向量![]() 以致于我们可以表示一个输入向量x是这些基向量的线性组合

以致于我们可以表示一个输入向量x是这些基向量的线性组合 。While techniques such as PCA allow us to learn a complete set of basis vectors efficiently, we wish to learn an overcomplete set of basis vectors to represent the input vectors x. 虽然PCA等技术允许我们高效地学习一组完整的基向量,但我们希望学习一组超完整的基向量来表示输入向量x。 The advantage of having an overcomplete basis set is that our basis vectors are better able to capture structures and patterns inherent in the input data. 拥有一个过度完整的基础集的好处是,我们的基础向量能够更好地捕捉输入数据中固有的结构和模式。However, with an overcomplete basis set, the coefficients

。While techniques such as PCA allow us to learn a complete set of basis vectors efficiently, we wish to learn an overcomplete set of basis vectors to represent the input vectors x. 虽然PCA等技术允许我们高效地学习一组完整的基向量,但我们希望学习一组超完整的基向量来表示输入向量x。 The advantage of having an overcomplete basis set is that our basis vectors are better able to capture structures and patterns inherent in the input data. 拥有一个过度完整的基础集的好处是,我们的基础向量能够更好地捕捉输入数据中固有的结构和模式。However, with an overcomplete basis set, the coefficients ![]() are no longer uniquely determined by the input vector x. 然而,在一个过完整的基础集上,系数

are no longer uniquely determined by the input vector x. 然而,在一个过完整的基础集上,系数![]() 不再由输入向量x唯一决定。 Therefore, in sparse coding, we introduce the additional criterion of sparsity to resolve the degeneracy introduced by the overcompleteness. 因此,在稀疏编码中,我们引入了额外的稀疏性标准,以解决过度完整所带来的退化问题。

不再由输入向量x唯一决定。 Therefore, in sparse coding, we introduce the additional criterion of sparsity to resolve the degeneracy introduced by the overcompleteness. 因此,在稀疏编码中,我们引入了额外的稀疏性标准,以解决过度完整所带来的退化问题。

We define the sparse coding cost function on a set of m input vectors as 我们将一组m个输入向量的稀疏编码代价函数定义为  where S(.) is a sparsity cost function that penalizes

where S(.) is a sparsity cost function that penalizes ![]()

for being far from zero. 其中S(.)是一个稀疏性代价函数,对![]() 远离零进行惩罚。 We can interpret the first term of the sparse coding objective as a reconstruction term that tries to force the algorithm to provide a good representation of x, and the second term can be defined as a sparsity penalty that forces our representation of x to be sparse. 我们可以将稀疏编码目标的第一项解释为重构项,其试图迫使算法提供x的良好表示,第二项可以定义为稀疏性惩罚,迫使我们对x的表示是稀疏的。

远离零进行惩罚。 We can interpret the first term of the sparse coding objective as a reconstruction term that tries to force the algorithm to provide a good representation of x, and the second term can be defined as a sparsity penalty that forces our representation of x to be sparse. 我们可以将稀疏编码目标的第一项解释为重构项,其试图迫使算法提供x的良好表示,第二项可以定义为稀疏性惩罚,迫使我们对x的表示是稀疏的。

A large number of sparse coding methods have been proposed. 已有大量的稀疏编码方法被提出。Notably, for RS scene classification, Cheriyadat [58] introduces a variant of sparse coding that combines local scale-invariant feature transform (SIFT)-based feature descriptors to generate a new sparse representation, while, in [59], the sparse coding is used to reduce the potential redundant information in the feature representation. 值得注意的是,对于RS场景分类,Cheriyadat引入了一种稀疏编码的变体,其结合基于局部尺度不变特征变换(SIFT)的特征描述符来生成新的稀疏表示,而在[59]中,稀疏编码是用来减少特征表示中潜在的冗余信息。In addition, as a computationally efficient unsupervised feature-learning technique, k-means clustering has also been played as a single-layer feature extractor for RS scene classification [60]–[62] and achieves state-of-the-art performance. 此外,作为一种计算效率很高的无监督特征学习技术,k-means聚类作为RS场景分类的单层特征提取器也得到了利用[60]-[62],并达到了最先进的性能。

2,DEEP LEARNING FOR REMOTE SENSING DATA

The “Basic Algorithms in Deep Learning” section discussed some of the basic elements used in constructing a DL architecture as well as the general framework. "深度学习中的基本算法 "部分讨论了构建DL架构时使用的一些基本元素以及总体框架。 In practice, the mathematical problems of the various RS data analysis techniques can be regarded as special cases of input–output data combined with a particular DL network based on the aforementioned algorithms. 在实践中,各种RS数据分析技术的数学问题可以看作是输入输出数据与基于上述算法的特定DL网络相结合的特殊情况。In this section, we provide a tutorial on DL for RS data from four perspectives: 1) image preprocessing, 2) pixel-based classification, 3) target recognition, and 4) scene understanding. 在本节中,我们从四个方面对RS数据的DL提供了指导。1)图像预处理,2)基于像素的分类,3)目标识别,4)场景理解。

2.1 REMOTE SENSING IMAGE PREPROCESSING

In practice, the observed RS images are not always as satisfactory as we demand due to many factors, including the limitations of the sensors and the influence of the atmosphere. 在实际工作中,由于传感器的局限性和大气层的影响等诸多因素,观测到的RS图像并不总是像我们要求的那样令人满意。 Therefore, there is a need for RS image preprocessing to enhance the image quality before the subsequent classification and recognition tasks. 因此,在后续的分类和识别任务之前,需要对RS图像进行预处理,以提高图像质量。 According to the related RS literature, most of the existing methods in RS image denoising, deblurring, superresolution, and pan sharpening are based on the standard image-processing techniques in the signal processing society, while there are very few machine-learning-based techniques. 根据相关的RS文献,现有的RS图像去噪、去模糊、超解像、平移锐化等方法大多是基于信号处理领域的标准图像处理技术,而基于机器学习的技术非常少。 In fact, if we can effectively model the intrinsic correlation between the input (observed data) and output (ideal data) by a set of training samples, then the observed RS image could be enhanced by the same model. 事实上,如果我们能够通过一组训练样本有效地模拟输入(观察数据)和输出(理想数据)之间的内在相关性,那么观察到的RS图像就可以通过相同的模型来增强。 According to the basic techniques in the previous section, such an intrinsic correlation can be effectively explored by DL. 根据上一节的基本技术,这样的内在关联性可以通过DL进行有效的探索。In this tutorial, we consider two typical applications as the case studies, i.e., RS image restoration and pan sharpening, to show the state-of-the-art DL achievements in RS image preprocessing. 在本教程中,我们以两个典型的应用为案例,即RS图像修复和平移锐化,来展示DL在RS图像预处理方面的最新成果。

Followed by the general framework of DL-based RS data preprocessing that we introduced in the “General Framework” section, the input data of the framework are usually the whole original image or the local image patches. 按照我们在 "总体框架 "部分介绍的基于DL的RS数据预处理的总体框架,该框架的输入数据通常是整个原始图像或局部图像补丁。A specific deep network is then constructed, such as a deconvolution network [63] or a sparse denoising AE [28]. After that, the observed RS image is recovered by the learned DL model per spectral channel or per patch. 然后构建一个特定的深度网络,如解卷积网络[63]或稀疏去噪AE[28]。之后,观察到的RS图像由学习到的DL模型每一个频谱通道或每一个补丁进行恢复。

2.1.1 RESTORATION AND DENOISING 修复和去噪

For RS image restoration and denoising, the original image is the input to a certain network that is trained with the clean image to obtain the restored and denoised image. 对于RS图像的恢复和去噪,原始图像是输入(到一定的网络中),用干净的图像进行训练,得到恢复和去噪后的图像。For instance, Zhang et al. utilized the ![]() deconvolution network for the restoration and denoising of RS images [63], which is an improved version of the L1-regularized deconvolution network. 例如,Zhang等利用

deconvolution network for the restoration and denoising of RS images [63], which is an improved version of the L1-regularized deconvolution network. 例如,Zhang等利用![]() 解卷网络用于RS图像的恢复和去噪[63],它是L1-正则化解卷网络的改进版。The classical deconvolution network model is based on the convolutional decomposition of images under an L1 regularization, which is a sparse constraint term. 经典的解卷积网络模型是基于L1正则化下图像的卷积分解,这是一个稀疏的约束项。In the experiments undertaken in this study, adopting the L1∕2 regularization in the deep network gave sparser solutions than their L1 counterpart and has achieved satisfactory results. 在本研究进行的实验中,在深度网络中采用L1∕2正则化,得到的解比其对应的L1更稀疏,已经取得了令人满意的结果。

解卷网络用于RS图像的恢复和去噪[63],它是L1-正则化解卷网络的改进版。The classical deconvolution network model is based on the convolutional decomposition of images under an L1 regularization, which is a sparse constraint term. 经典的解卷积网络模型是基于L1正则化下图像的卷积分解,这是一个稀疏的约束项。In the experiments undertaken in this study, adopting the L1∕2 regularization in the deep network gave sparser solutions than their L1 counterpart and has achieved satisfactory results. 在本研究进行的实验中,在深度网络中采用L1∕2正则化,得到的解比其对应的L1更稀疏,已经取得了令人满意的结果。

2.1.2 PAN SHARPENING 全色波段融合/全色锐化

解释 PAN SHARPENING 全色锐化

全色锐化使用分辨率较高的全色图像(或栅格波段)与分辨率较低的多波段栅格数据集进行融合。最终生成一个具有全色栅格的高分辨率的多波段栅格数据集,该数据集中的两个栅格完全重叠。

一些图像公司可提供相同场景下的低分辨率多波段图像和较高分辨率全色图像, 此过程用于提高空间分辨率,并使用高分辨率单波段图像提供视觉效果更佳的多波段图像。

panchromatic image 全色影像,全色图像

以上为全色锐化的解释

By introducing deep neural networks, Huang et al. proposed a new pan-sharpening method for RS image preprocessing [28] that used a stacked modified sparse denoising AE (S-MSDA) to train the relationship between HR and LR image patches. 通过引入深度神经网络,Huang等人提出了一种新的用于RS图像预处理的全色锐化方法[28],该方法采用堆叠修改的稀疏去噪自动编码器(S-MSDA)来训练HR(hign-resolution)和LR(low-resolution)图像斑块之间的关系。Similar to the structure of the sparse AE, S-MSDA is constructed by stacking a series of MSDAs. 与稀疏自动编码器的结构类似,S-MSDA是通过堆叠一系列MSDAs(修正的稀疏去噪自动编码器)来构建的。The MSDA is a modified version of the sparse denoising AE (SDA), which is obtained by combining sparsity and a denoising AE together. MSDA是稀疏去噪自动编码器(SDA)的改进版,它是将稀疏性和去噪自动编码器结合在一起得到的。 The SDA is trained to reconstruct a clean, repaired input from the corresponding corrupted version [64]. SDA被训练为从相应的损坏版本中重建一个干净的、修复的输入。 Meanwhile, the modified version (i.e., the MSDA) takes the HR image patches and the corresponding LR image patches as clean data and corrupted data, respectively, and represents the relationship between them. 同时,修改版(即MSDA)将HR图像斑块和对应的LR图像斑块分别作为干净数据和损坏数据,并表示它们之间的关系。 There is a key hypothesis that the HR and LR multispectral (MS) image patches have the same relationship as that between the HR and LR PAN image patches; thus, it is a learning-based method that requires a set of HR–LR image pairs for training. 有一个关键的假设,即HR和LR多光谱(MS)图像斑块与HR和LR PAN(全色)图像斑块之间具有相同的关系;因此,它是一种基于学习的方法,需要一组HR-LR图像对进行训练。 Since the HR PAN is already available, we have designed an approach to obtain its corresponding LR PAN. 由于HR PAN(全色)已经存在,因而我们设计了一种方法来获取其对应的LR PAN(全色)。Therefore, we can use the fully trained DL network to reconstruct the HR MS image from the observed LR MS image.因此,我们可以使用完全训练的DL网络来从观察到的LR MS图像重建HR MS图像。The experimental results demonstrated that the DL-based pan sharpening method outperforms the other traditional and state-of-the-art methods.实验结果表明,基于DL的全色锐化方法优于其他传统和最先进的方法。 The aforementioned methods are just two aspects of DL-based RS image preprocessing. 上述方法只是基于DL的RS图像预处理的两个方面。In fact, we can use the general framework to generate more DL algorithms for RS image-quality improvement for different applications. 事实上,我们可以利用该通用框架生成更多的DL算法,用于不同应用的RS图像质量改进。

2.2 PIXEL-BASED CLASSIFICATION

Pixel-based classification is one of the most popular topics in the geoscience and RS community. 基于像素的分类是地球科学和RS界最热门的话题之一。 Significant progress has been achieved in recent years, e.g., in the aspects of handcrafted feature description [65]–[68], discriminative feature learning [13], [69], [70], and powerful classifier designing [71], [72]. 近年来,在手工制作的特性描述[65]-[68]、判别性特征学习[13]、[69]、[70]和强大的分类器设计[71]、[72]等方面都取得了重大进展。 However, from the DL point of view, most of the existing methods can extract only shallow features of the original data (the classification step can also be treated as the top level of the network), which is not robust enough for the classification task. DL-based pixel classification for RS images involves constructing a DL architecture for the pixel-wise data representation and classification. 但从DL的角度来看,现有的方法大多只能提取原始数据的浅层特征(分类步骤也可视为网络的顶层),对分类任务的鲁棒性不够。基于DL的RS图像像素分类涉及到构建一个DL架构来实现像素级数据的表示和分类。 By adopting DL techniques, it is possible to extract more robust and abstract feature representations and thus improve the classification accuracy. 通过采用DL技术,它可以提取出更健壮、更抽象的特征表示,从而提高分类精度。

The scheme of DL for RS image pixel-based classification consists of three main steps: 1) data input, 2) hierarchical DL model training, and 3) classification. 用于RS图像像素分类的DL的方案主要包括三个步骤。1)数据输入,2)层次化DL模型训练,3)分类。 A general flow chart of this scheme is shown in Figure 2. 本方案的总体流程图如图2所示。In the first steps, the input vector could be the spectral feature, the spatial feature, or the spectral–spatial feature, as we will discuss

later. 在第一步中,输入向量可以是光谱特征、空间特征或光谱-空间特征,我们将讨论的是后者。 Then, for the hidden layers, a deep network structure is designed to learn the expected feature representation of the input data. 然后,针对隐藏层,设计深度网络结构,学习输入数据的预期特征表示。 In the related literature, both the supervised DL structures (e.g., the CNN [45]) and the unsupervised DL structures (e.g., the AEs [73]–[75], DBNs [29], [76], and other self-defined neurons in each layer [77]) are employed. 在相关文献中,既采用了有监督的DL结构(如CNN[45]),也采用了无监督的DL结构(如AEs[73]-[75]、DBNs[29]、[76]和其他各层自定义神经元[77])。 The third step is the classification, which involves classification by utilizing the learned feature in the second step (the top layer of the DL network). 第三步是分类,即利用第二步(DL网络的顶层)学习的特征进行分类。In general, there are two main styles of classifiers: 1) the hard classifiers, such as SVMs, which directly output an integer number as the class label of each sample [76], and 2) the soft classifiers, such as logistic regression, which can simultaneously fine-tune the whole pretrained network and predict the class label in a probability distribution manner [29], [73], [74], [78]. 一般来说,分类器主要有两种风格:1)硬分类器,如SVM,直接输出一个整数作为每个样本的类标签[76];2)软分类器,如逻辑回归,可以同时对整个预训练网络进行微调,并以概率分布的方式预测类标签[29],[73],[74],[78]。

figure 2. 利用DL方法对RS图像进行像素分类的一般框架。DL网络的输入可以分为

三类:光谱特征、空间特征、光谱空间特征。

2.2.1 SPECTRAL FEATURE CLASSIFICATION光谱特征分类

The spectral information usually contains abundant discriminative information.光谱信息通常包含丰富的判别信息。A frequently used and direct approach for RS image classification is spectral feature-based classification, i.e., image classification with only the spectral feature. RS图像分类中经常使用的、直接的方法是基于光谱特征的分类,即只有光谱特征的图像分类。Most of the existing common approaches for RS image classification are shallow in their architecture, such as SVMs and k-nearest neighbor (KNN). Instead, DL adopts a deep architecture to deal with the complicated relationships between the original data and the specific class label.现有常见的RS图像分类方法大多是浅层架构,如SVMs和k-最近邻(KNN)。相反,DL采用深层架构来处理原始数据和特定类标签之间的复杂关系。

For spectral feature classification, the spectral feature of the original image data is directly deployed as the input vector. 对于光谱特征分类,直接部署原始图像数据的光谱特征作为输入向量。The input pixel vector is trained in the network part to obtain the robust deep feature representation, which is used as the input for the subsequent classification step. 在网络部分对输入像素向量进行训练,得到鲁棒的深度特征表示,作为后续分类步骤的输入。The selected deep networks could be the deep CNN [45] and a stack of the AE [73], [75], [79], [80]. 选用的深度网络可以是深度CNN[45]和自动编码器 [73], [75], [79], [80]的叠加。In particular, Lin et al. adopted an AE plus SVMs and a stacked AE plus logistic regression as the network structure and classification layer to perform the classification task with shallow and deep representation, respectively. 其中,Lin等采用了自动编码器加SVMs和叠加自动编码器加逻辑回归作为网络结构和分类层,分别完成浅层和深层表示的分类任务。It is worth noting that, due to the deeper network structure and the fine-tuning step, the deep spectral representation achieved a better performance than the shallow spectral representation [73].值得注意的是,由于网络结构较深、微调步骤较多,深光谱表示法取得了比浅光谱表示法更好的性能。

2.2.2 CLASSIFICATION WITH SPATIAL INFORMATION空间信息分类

Land covers are known to be continuous in the spatial domain, and adjacent pixels in an RS image are likely to belong to the same class.众所周知,土地覆盖物在空间域中是连续的,RS图像中相邻的像素可能属于同一类别。 As indicated in many spectral–spatial classification studies, the use of the spatial feature can significantly improve the classification accuracy [81]–[83]. 在许多光谱-空间分类研究中表明,使用空间特征可以显著提高分类精度[81]-[83]。However, traditional methods cannot extract robust deep feature representations due to their shallow properties. 然而,传统方法由于其浅层特性,无法提取出稳健的深层特征表征。To address this problem, a number of DL-based feature-learning methods have been proposed to find a new way of extracting the deep spectral–spatial representation for classification [84].为了解决这个问题,人们提出了许多基于DL的特征学习方法,找到了一种用于分类的提取深度光谱—空间表示的新方法。

For a certain pixel in the original RS image, it is natural to consider its neighboring pixels to extract the spatial feature representation.对于原始RS图像中的某一像素,自然要考虑它的相邻像素,以提取空间特征表征。 However, due to the hundreds of channels along the spectral dimension of a hyperspectral image, the region-stacked feature vector will result in too large an input dimension. 然而,由于高光谱图像的光谱维度有数百个通道,区域叠加的特征向量将导致输入维度过大。As a result, it is necessary to reduce the spectral feature dimensionality before the spatial feature representation. 因此,在空间特征表示之前,有必要降低光谱特征维度。 PCA is commonly executed in the first step to map the data to an acceptable scale with a low information loss. 第一步通常执行PCA,在信息损失小的情况下,将数据映射到一个可接受的尺度。Then, in the second step, the spatial information

is collected by the use of a w#w (w is the size of window) neighboring region of every certain pixel in the original image [85]. 然后,在第二步中,空间信息是通过使用原始图像中每一个像素的 w#w(w是窗口尺寸)邻近区域(邻域)来收集的。 After that, the spatial data is straightened into a one-dimensional vector to be fed into a DL network. Lin et al.[73] and Chen at al. [74] adopted the stacked AE as the deep network structure. 之后,将空间数据拉直为一维向量,送入DL网络。Lin等[73]和Chen等[74]采用堆叠式自编码器作为深度网络结构。 When the abstract feature has been learned, the final classification step is carried out, which is similar to the spectral classification scheme. 当抽象特征学习完毕后,进行最后的分类步骤,这与光谱分类方案类似。

When considering a joint spectral and spatial feature-extraction and classification scheme, there are two mainstrategies to achieve this goal under the framework summarized in Figure 2. 当考虑光谱和空间特征结合的提取和分类方案时,在图2总结的框架下,有两个主要策略来实现这一目标。Straightforwardly, differing from the spectral–spatial classification scheme, the spectral and initial spatial features are combined together into a vector as the input of the DL network in a joint framework, as presented in the works [29], [53]–[55], [73], and [74].直观地说,与光谱-空间分类方案不同的是,在联合框架中,将光谱和初始空间特征一起组合成一个向量作为DL网络的输入,如[29]、[53]-[55]、[73]和[74]等著作中提出的.The preferred deep networks in these papers are SAEs and DBNs, respectively. Then, by the learned deep network, the joint spectral–spatial feature representation of each test sample is obtained for the subsequent classification task, which is the same as the spectral–spatial classification scheme described previously. 这些论文中首选的深度网络分别是SAEs和DBNs。然后,通过学习的深度网络,得到每个测试样本的联合光谱-空间特征表示,用于后续的分类任务,这与前面介绍的光谱-空间分类方案相同。The other approach is to address the spectral and spatial information of a certain pixel by a convolutional deep network, such as the CNNs [46], [47], [76], the convolutional AEs [78], and a particular defined deep network [78]. 另一种方法是通过卷积深层网络来解决某个像素的光谱和空间信息,如CNNs[46]、[47]、[76]、卷积AEs[78]和特定定义的深层网络[78]等。Moreover, there are a few hierarchical learning frameworks that take each step of operation (e.g., feature extraction, classification, and postprocessing) as a single layer of the deep network [86] ,[90]. 此外,有一些分层学习框架将每一步操作(如特征提取、分类和后处理)都作为深度网络的单层[86] ,[90] 。We also regard them as the spectral–spatial DL techniques in this tutorial article.我们也把它们看作是本教程文章中的光谱-空间DL技术。

2.3 TARGET RECOGNITION

Target recognition in large HR RS images, such as ship, aircraft, and vehicle detection, is a challenging task due to the small size and large numbers of targets and the complex neighboring environments, which can cause the recognition algorithms to mistake irrelevant ground objects for target objects. 在大型HR RS(高分遥感)图像中的目标识别,如舰船、飞机、车辆检测等,由于目标体积小、数量多,且相邻环境复杂,会导致识别算法将不相关的地面物体误认为目标物体,是一项具有挑战性的任务。However, objects in natural images are relatively large, and the environments in the local fields are not that complex compared to RS images, making the targets easier to recognize. 但是,自然图像中的物体相对较大,与RS图像相比,局部场中的环境没有那么复杂,因此目标更容易识别。This is one of the main differences between detecting RS targets and natural targets. Although many studies have been undertaken, we are still lacking an efficient location method and robust classifier for target recognition in complex environments.这也是检测RS目标与自然目标的主要区别之一。虽然我们已经进行了很多研究,但是对于复杂环境下的目标识别,我们仍然缺乏一种高效的定位方法和鲁棒分类器。In the literature, Cai et al. [91] showed how difficult it is to segment aircraft from the background, and Chen et al. [30], [92] made great efforts in vehicle detection in HR RS images.在文献中,Cai等[91]表明了从背景中分割飞机的难度,Chen等[30]、[92]在HR RS图像中的车辆检测方面做了很大努力。

The performance of target recognition in such a complex context relies on the features extracted from the objects. 在如此复杂的背景下,目标识别的性能依赖于从对象中提取的特征。DL methods are well suited for this task, as this type of algorithm can extract low-level features with a high frequency, such as edges, contours, and outlines of objects, whatever the shape, size, color, or rotation angle of the targets. DL方法很适合这项任务,因为这种类型的算法可以提取出频率很高的低级特征,如物体的边缘、轮廓和轮廓,无论目标的形状、大小、颜色或旋转角度如何。This type of algorithm can also learn hierarchical representations from the input images or patches, such as the parts of the objects that are compounded by the lower-level features, making recognition of RS targets discriminative and robust. 这类算法还可以从输入的图像或斑块中学习分层表示,如物体的部分是由低层特征复合而成的,使得对RS目标的识别具有分辨性和鲁棒性。 A number of these approaches achieved state-of-the-art performance in target recognition by use of a DL method [30], [48], [49], [52], [56], [93]–[96]. 其中一些方法通过使用DL方法,在目标识别方面取得了最先进的性能。

2.3.1 GENERAL DEEP-LEARNING FRAMEWORK OF REMOTE SENSING TARGET RECOGNITION 遥感目标识别的一般深度学习框架

The DL methods used in target recognition can be divided into two maincategories: unsupervised methods and supervised methods. 目标识别中使用的DL方法可以分为两大类:无监督方法和有监督方法。 The unsupervised methods learn features from the input data without knowing the correlated labels or other supervisory information, while the supervised methods use the input data as well as the supervisory information attached to the input to discriminatively learn the feature representations. 无监督方法在不知道相关标签或其他监督信息的情况下,从输入数据中学习特征,而监督方法则利用输入数据以及附加在输入上的监督信息来辨别学习特征表征。However, both of these DL methods are utilized to learn features from the object images, and the learning processes can be unified into the same framework, as depicted in Figure 3.然而,这两种DL方法都是利用从对象图像中学习特征,学习过程可以统一到同一个框架中,如图3所示。

The RS images are first preprocessed to subtract the mean and divide the variance, or to simply convert the images to gray images with only one channel. Other preprocessing techniques compute the gradient images [97] of the original image with a certain threshold [30]. 首先对RS图像进行预处理,减去均值并划分方差,或者干脆将图像转换为只有一个通道的灰色图像。其他的预处理技术是计算原始图像的梯度图像[97],有一定的阈值[30]。The second term of this general pipeline is extracting the object proposals, which is a bounding box locating the probable targets. 这个一般流程的第二项是提取对象建议,这是一个定位可能目标的边界框。Following the process of selecting the proposals from the whole image, a simple feature extraction is conducted for each proposal or the whole image to extract the low-level descriptors that are invariant to shift, rotation, and scaling,

to some extent, such as SIFT [98], Gabor [99], and the histogram of oriented gradients (HOG) [97]. 接下来,从整幅图像中选取提案后,对每幅提案或整幅图像进行简单的特征提取,提取出一定程度上对移位、旋转和缩放不变的低级描述符,如SIFT[98]、Gabor[99]、定向梯度直方图(HOG)[97]。Next, the middle-level feature representations can be generated by performing codebook learning on the learned descriptors. 接下来,可以通过对学习到的描述符进行代码本学习来生成中间层次的特征表示。 This step is not essential, but using these low- or middle-level features usually outperforms merely using the raw pixels when learning hierarchical feature representations by the following deep neural networks. 这一步并不是必不可少的,但在通过以下深度神经网络学习分层特征表示时,使用这些低级或中级特征的表现通常优于仅仅使用原始像素。

The deep neural networks such as the CNNs, sparse AEs, and DBNs are hierarchical models that can learn high-level feature representations in the deep layers automatically generated by the features learned in the shallow layers. CNNs、稀疏AEs和DBNs等深度神经网络是分层模型,可以在深层学习由浅层中学习到的特征自动生成的高级特征表示。Having learned the discriminative and robust representations of the proposals, a classifier such as an SVM is trained with training samples composed of the representations of some data and the corresponding supervisory information. 在学习了提案的判别性和鲁棒性表示后,用一些数据的表示和相应的监督信息组成的训练样本来训练一个分类器,如SVM。When a new proposal is generated from a new image, this framework can automatically learn the high-level features from the raw image, and then classification is undertaken by the well-trained classifier to tell whether the proposal is the target or not. 当从一张新的图像中生成一个新的提案时,这个框架可以从原始图像中自动学习高级特征,然后由训练好的分类器进行分类,判断提案是否是目标。

FFIGURE 3. 利用DL方法进行目标识别的一般框架。深度网络学习到的高级特征被发送到要分类的分类器(或直接由深度网络对监督网络进行分类)。

2.3.2 SAMPLE SELECTION PROPOSALS样本选择的建议

To choose the most accurate area that exactly contains the target, a number of proposals should be extracted from the input image. 为了选择准确包含目标的最精确区域,应从输入图像中提取一些建议。Each proposal is usually a bounding box covering an object that probably contains the target. 每个提案通常是一个边界框,覆盖一个可能包含目标的对象。 The most satisfactory case is that the target is in the center of the bounding box, and the bounding box can just cover the edge of the object. 最满意的情况是,目标在边界框的中心,边界框可以刚好覆盖物体的边缘。

There are different ways of selecting the proposals. The baseline technique is the sliding window method [100], which slides the bounding box over the whole image with a small stride to generate a number of proposals. 有不同的选择提案的方法。基线技术是滑动窗口法[100],它将边界框在整个图像上以很小的步幅滑动,以产生若干提案。The sliding window technique is accurate and will not miss any possible proposals that may exactly contain the target, yet it is slow and burdens the subsequent feature-learning algorithms and classifiers, especially when there are quite a lot of objects in an image (e.g., in RS images). 滑动窗口技术是准确的,不会漏掉任何可能完全包含目标的提案,然而它的速度很慢,给后续的特征学习算法和分类器带来了负担,特别是当图像中存在相当多的物体时(如RS图像中)。 Other methods have been proposed to solve this problem, e.g., Chen et al. [30] proposed an object-location technique that can discover the coarse locations of the targets, and hence can greatly reduce the number of proposals. 还有一些方法被提出来解决这个问题,例如,Chen等[30]提出了一种对象定位技术,可以发现目标的粗位置,因此可以大大减少提案的数量。 The search efficiency of this method is more than 20 times the baseline sliding window method. Tang et al. [101] proposed a coarse ship location technique that can extract the candidate locations of the targets with little decrease in accuracy. 该方法的搜索效率是基线滑动窗口法的20倍以上。 Tang等人[101]提出了一种粗船定位技术,可以在精度下降不大的情况下提取目标的候选位置。

2.3.3 LOW-TO MIDDLE-LEVEL FEATURE LEARNING低级到中级的特征学习

Low-level features, which have the ability to handle variations in terms of intensity, rotation, scale, and affine projection, are utilized to characterize the local region of the key points in each image or patch. 低级特征,具有处理强度、旋转、尺度和仿射投影等方面变化的能力,被用来描述每个图像或斑块中关键点的局部区域。Han et al. [94] utilized SIFT descriptors to represent the image patches, which made the subsequent training process of the DBMs easier. Dalal and Triggs [97] proposed a method for object detection using the HOG.Han等人[94]利用SIFT描述符来表示图像斑块,这使得DBMs的后续训练过程更加容易。Dalal和Triggs[97]提出了一种利用HOG进行目标检测的方法。

The low-level descriptors can boost the feature-learning performance of the deep networks. 低级描述符可以提升深度网络的特征学习性能。However, they catch only limited local spatial geometric characteristics, which can lead to poor classification or detection performance when they are directly used to describe the structural contents of image patches. 然而,它们只能捕捉到有限的局部空间几何特征,当它们直接用于描述图像斑块的结构内容时,会导致分类或检测性能不佳。 To tackle this problem, some work has been done to learn codebooks that are used to encode the local descriptors and generate middle-level feature representations and to alleviate the unrecoverable loss of discriminative information. 为了解决这个问题,已经做了一些工作,学习代码本,用于编码局部描述符和生成中间层特征表示,并缓解不可恢复的判别信息的损失。For instance, Han et al. [94] applied the locality-constrained linear coding model [102] to encode the SIFT descriptors into the image patch representation. 例如,Han等[94]应用局域性约束的线性编码模型[102]将SIFT描述符编码到图像斑块表示中。

2.3.4 TRAINING THE DEEP-LEARNING NETWORKS

Although the middle-level features are extracted based on the low-level local descriptors to obtain the structural information and preserve the local relevance of elements in the local region, they cannot provide enough strong description and generalization abilities for object detection when confronted with objects and backgrounds with a large variance.虽然基于低级局部描述符提取中级特征,获取结构信息,保留局部区域内元素的局部相关性,但在面对差异较大的物体和背景时,不能为物体检测提供足够强的描述和泛化能力。To better understand the complexity of the environments in an image, better descriptors should be utilized. The DL methods can handle complex ground objects with large variance, as the features learned by the deep neural networks can be highly abstract, which makes them invariant to relatively large deformations, including different shapes, sizes, and rotations, and discriminative to some objects that belong to different categories but resemble each other in some other aspect, such as white targets on a white background. 为了更好地理解图像中环境的复杂性,应该利用更好的描述器。由于深度神经网络学习的特征可以是高度抽象的,这使得DL方法可以处理复杂的、具有较大差异的地面物体,这就使得DL方法对比较大的变形,包括不同的形状、大小和旋转都是不变的,对一些属于不同类别的物体,但在其他方面又很相似的物体,如白色背景上的白色目标,也有一定的分辨能力。Generally speaking, the DL methods used in target recognition in RS images can be divided into two categories: 1) the supervised DL algorithms and 2) the unsupervised DL algorithms.一般来说,RS图像中目标识别中使用的DL方法可以分为两类。1)有监督的DL算法;2)无监督的DL算法。

2.3.5 SUPERVISED METHODS

There are two typical supervised DL methods for target recognition: the CNN and the multilayer perceptron (MLP) [103]. 目标识别有两种典型的监督DL方法:CNN和多层感知器(MLP)[103]。 The CNNs are hierarchical models that transform the input image or image patch into layers of feature maps, which are high-level discriminative features representing the original input data. CNN是一种分层模型,它将输入图像或图像斑块转化为层层特征图,这些特征图是代表原始输入数据的高级判别特征。For the MLP model, the input image or patch should be reshaped into a vector. Then, after the transformation of each fully connected layer, the final feature representation can be generated. 对于MLP模型,应将输入的图像或斑块重塑为一个矢量。然后,经过每个全连接层的变换,就可以生成最终的特征表示。The final features are then sent to the classification layer to generate the label of the input image. Both types of supervised networks transform the input image into a two-dimensional vector for a one-class object detection. 最后的特征被送到分类层,生成输入图像的标签。 这两种类型的监督网络都是将输入图像转化为二维向量,进行一类对象检测。This vector indicates the predicted label (whether the input candidate is the target or not, or the probability of the proposal being the target). 这个向量表示预测的标签(投入的候选人是否是目标,或者说提案是目标的概率)。In the training stage, to learn the weights or kernels, the supervised networks are trained with the training samples composed of positive samples that contain the target and negative samples that do not contain the target. In the testing stage, the proposals extracted from a new RS image are processed by the models and attached with a probability y. 在训练阶段,为了学习权重或内核,监督网络的训练样本由包含目标的正样本和不包含目标的负样本组成。在测试阶段,从新的RS图像中提取的建议被模型处理并以概率y附加。The candidates then considered to contain the target are selected by a given empirical threshold or other criteria.然后,通过给定的经验阈值或其他标准选择被认为包含目标的候选者。

Although the CNN has shown robustness to distortion, it only extracts features of the same scale and, therefore, cannot tolerate a large-scale variance of objects. 虽然CNN表现出了对失真的鲁棒性,但它只能提取相同尺度的特征,因此,不能容忍对象的大规模差异。 When it comes to RS images that have a large variance in the backgrounds and objects, training a CNN that extracts multiscale feature representations is necessary for a better detection accuracy. 当涉及到背景和物体差异较大的RS图像时,为了提高检测精度,训练一个能提取多尺度特征表示的CNN是必要的。Chen et al.[30] proposed a hybrid deep neural network (HDNN) by dividing the maps of the final convolutional layer and the max-pooling layer of the deep neural network into multiple blocks of variable receptive field sizes or max-pooling field sizes to enable the HDNN to extract variable-scale features for detecting the RS objects. Chen等[30]提出了一种混合深度神经网络(HDNN),将深度神经网络的最后卷积层和最大池化层的地图划分为多个可变接收场大小或最大池化场大小的区块,使HDNN能够提取可变尺度的特征来检测RS对象。The input of the HDNN with L convolutional layers is a gray image. 具有L个卷积层的HDNN的输入是一幅灰色图像。The image is filtered by the filters in the first convolutional layers ![]()

to get the feature maps ![]()

, which are then subsampled by the first max-pooling layer to select the representative features as well as reduce the number of parameters to be processed. 图像经过第一卷积层中![]() 的滤波器过滤后,得到特征图

的滤波器过滤后,得到特征图 ![]() 然后由第一个最大池化层对其进行子采样,以选择具有代表性的特征,并减少需要处理的参数数量。After transferring the L layers’ activations or feature maps,the final convolutional feature maps of the Lth layer

然后由第一个最大池化层对其进行子采样,以选择具有代表性的特征,并减少需要处理的参数数量。After transferring the L layers’ activations or feature maps,the final convolutional feature maps of the Lth layer ![]()

are generated. 转移L层的激活或特征图后,最终得到第L层![]() 的卷积特征图。 In the architecture of the conventional CNNs, the final layer is followed by some fully connected layers and finally the classification layer. 在传统CNN的架构中,最后一层是一些完全连接的层,最后是分类层。

的卷积特征图。 In the architecture of the conventional CNNs, the final layer is followed by some fully connected layers and finally the classification layer. 在传统CNN的架构中,最后一层是一些完全连接的层,最后是分类层。

However, this kind of feature-processing method does not make full use of the features and the filters. 但是,这种特征处理方法并没有充分利用特征和过滤器。 The receptive field size of each convolutional layer is fixed, and thus it cannot extract multiscale features. 每个卷积层的接受场尺寸是固定的,因此它无法提取多尺度特征。 However, there are still rich features in the final convolutional layer that can be learned and transformed into more discriminative representations. 然而,在最后的卷积层中仍有丰富的特征,可以学习并转化为更有辨别力的表征。One way to better utilize the rich features is to increase the depth of the convolutional layers, which may, however, introduce a huge amount of computational burden when training the model. 为了更好地利用丰富的特征,一种方法是增加卷积层的深度,然而,这可能会在训练模型时带来巨大的计算负担。Another way is to use a multiscale receptive field size that can train filters with different sizes and generate multiscale feature maps.另一种方法是使用多尺度的接受场尺寸,可以训练不同尺寸的滤波器,并生成多尺度的特征图。

In the HDNN, the last layer’s feature maps are divided into T blocks ![]() with filter sizes of

with filter sizes of ![]() , respectively. 在HDNN中,最后一层的特征图被划分为T块

, respectively. 在HDNN中,最后一层的特征图被划分为T块![]() ,滤波器大小分别为

,滤波器大小分别为![]() 。The ith block covers

。The ith block covers ![]()

feature maps of the final convolutional layer. Then the activation propagation between the last two convolutional layers can be formulated as ![]() where

where ![]()

denotes the ![]() block of the last feature maps,

block of the last feature maps, ![]() denotes the filters of the corresponding block, and

denotes the filters of the corresponding block, and ![]()

denotes the activation function. 第i个块覆盖了最后卷积层的ni个特征图。那么最后两个卷积层之间的激活传播可以表述为![]() ,在这里Bt表示最后特征图的第t个块,ft表示对应块的滤波器,

,在这里Bt表示最后特征图的第t个块,ft表示对应块的滤波器,![]() 表示激活函数。

表示激活函数。

Having learned the multiscale feature representations to form the final convolutional layer, an MLP network is used to classify the features. 在学习了多尺度特征表示以形成最后的卷积层后,使用MLP网络对特征进行分类。The output of the HDNN is a two-node layer, which indicates the probability of whether the input image patch contains the target.HDNN的输出是一个双节点层,它表示输入图像斑块是否包含目标的概率。 Some of the vehicle-detection results are referred to in [30], from which it can be concluded that, although there are a number of vehicles in the scene, the modified CNN model can successfully recognize the precise location of most of the targets, indicating that the HDNN has learned fairly discriminative feature representations to recognize the objects.在[30]中提到了一些车辆检测的结果,从中可以得出结论,虽然场景中存在一些车辆,但修改后的CNN模型可以成功识别大部分目标的精确位置,说明HDNN已经学会了相当有分辨力的特征表示来识别物体。

2.3.6 UNSUPERVISED METHODS

Although the supervised DL methods like the CNN and its modified models can achieve acceptable performances in target recognition tasks, there are limitations to such methods since their performance relies on large amounts of labeled data, while, in RS image data sets, high-quality images with labels are limited.虽然像CNN及其修改后的模型这样的监督DL方法可以在目标识别任务中获得可接受的性能,但由于其性能依赖于大量的标签数据,而在RS图像数据集中,带有标签的高质量图像是有限的,因此这类方法存在局限性。It is therefore necessary to recognize the targets with a few labeled image patches while learning the features with the unlabeled images. 因此,在用未标记的图像学习特征的同时,有必要用一些标记的图像斑块来识别目标。

Unsupervised feature-learning methods are models that can learn feature representations from the patches with no supervision. 无监督的特征学习方法是指可以在没有监督的情况下从斑块中学习特征表示的模型。 Typical unsupervised feature-learning methods are RBMs, sparse coding, AEs, k-means clustering, and the Gaussian Mixture Model [104]. 典型的无监督特征学习方法有RBMs、稀疏编码、AEs、k-means聚类和高斯混合物模型[104]。 All of these shallow feature-learning models can be stacked to form deep unsupervised models, some of which have been successfully applied to recognizing RS scenes and targets. 所有这些浅层的特征学习模型都可以叠加起来形成深层的无监督模型,其中一些模型已经成功应用于识别RS场景和目标。For instance, the DBN generated by stacking RBMs has shown its superiority over conventional models in the task of recognizing aircraft in RS scenes [105].例如,在RS场景中识别飞机的任务中,通过堆叠RBMs生成的DBN已经显示出比传统模型的优越性[105]。

The DBN is a deep probabilistic generative model that can learn the joint distribution of the input data and its ground truth. DBN是一个深度概率生成模型,它可以学习输入数据及其真值的联合分布。 The general framework of the DBN model is illustrated in Figure 4. DBN模型的总体框架如图4所示。The weights of each layer are updated through layer-wise training using the CD algorithm, i.e., training each layer separately. 通过使用CD算法进行逐层训练,更新各层的权重,即分别对各层进行训练。 The joint distribution between the observed vector x and the L hidden layers is ![]() , where

, where ![]() is a conditional distribution for the visible units conditioned on the hidden units of the RBM at level k, and

is a conditional distribution for the visible units conditioned on the hidden units of the RBM at level k, and ![]() is the visible–hidden joint distribution in the top-level RBM. 观察到的向量x与L个隐藏层之间的联合分布为

is the visible–hidden joint distribution in the top-level RBM. 观察到的向量x与L个隐藏层之间的联合分布为![]() , 这里

, 这里![]() 是可见单元的条件分布,条件是RBM的隐藏单元在水平k上的条件分布,并且

是可见单元的条件分布,条件是RBM的隐藏单元在水平k上的条件分布,并且![]() 是顶层RBM中可见-隐藏的联合分布。Some aircraft detection results from large airport scenes can be seen in [105], from which we can see that most aircrafts with different shapes and rotation angles have been detected. 在[105]中可以看到一些大型机场场景下的飞机检测结果,从中我们可以看到大部分形状和旋转角度不同的飞机都被检测到。

是顶层RBM中可见-隐藏的联合分布。Some aircraft detection results from large airport scenes can be seen in [105], from which we can see that most aircrafts with different shapes and rotation angles have been detected. 在[105]中可以看到一些大型机场场景下的飞机检测结果,从中我们可以看到大部分形状和旋转角度不同的飞机都被检测到。

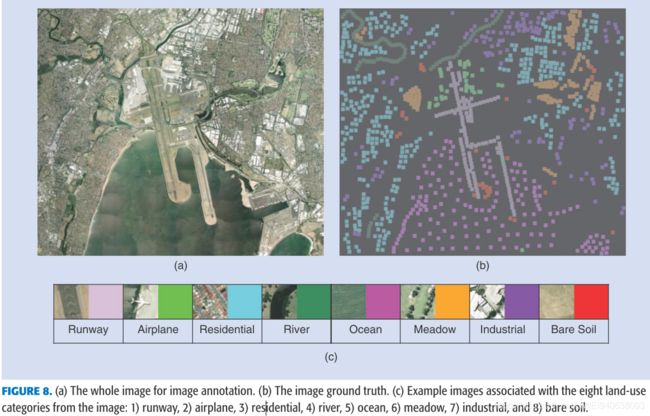

2.4 SCENE UNDERSTANDING

Satellite imaging sensors can now acquire images with a spatial resolution of up to 0.41 m. 卫星成像传感器现在可以获得空间分辨率高达0.41米的图像。These images, which are usually called very high-resolution (VHR) images, have abundant spatial and structural patterns. 这些图像通常被称为甚高分辨率(VHR)图像,具有丰富的空间和结构模式。 However, due to the huge volume of the image data, it is difficult to directly access the VHR data containing the scenes of interest. 但由于图像数据量巨大,很难直接获取包含感兴趣场景的VHR数据。 Due to the complex composition and large number of land-cover types, efficient representation and understanding of the scenes from VHR data have become a challenging problem, which has drawn great interest in the RS field. 由于土地覆盖类型构成复杂、数量众多,从VHR数据中高效地表示和理解场景成为一个具有挑战性的问题,这引起了RS领域的极大兴趣。

Recently, a lot of work in RS scene understanding has been proposed that focuses on learning hierarchical internal feature representations from image data sets [50], [106]. 最近,在RS场景理解方面提出了很多工作,主要是从图像数据集中学习层次化的内部特征表征。Good internal feature representations are hierarchical. In an image, pixels are assembled into edgelets, edgelets into motifs, motifs into parts, and parts into objects.好的内部特征表示是分层的。在图像中,像素被组合成边缘小点,边缘小点被组合成图案,图案被组合成部件,部件被组合成对象。 Finally, objects are assembled into scenes [107], [108]. This suggests that recognizing and analyzing scenes from VHR images should have multiple trainable feature-extraction stages stacked on top of each other, and we should learn the hierarchical internal feature representations from the image. 最后,将对象组装成场景[107],[108]。这说明从VHR图像中识别和分析场景应该有多个可训练的特征提取阶段叠加在一起,我们应该从图像中学习层次化的内部特征表示。

2.4.1 UNSUPERVISED HIERARCHICAL FEATURE-LEARNING-BASED METHODS

As indicated in the “General Framework” section, there is some work that focuses on unsupervised feature-learning techniques for RS images scene classification, such as sparse coding [58], k-means clustering [60], [109], and topic model [110], [111]. 如“一般框架”部分所示,有一些工作是针对RS图像场景分类的非监督特征学习技术,如稀疏编码[58],k-means聚类[60],[109],主题模型[110],[111]。These shallow models could be considered to stack into deep versions in a hierarchical manner [31], [106]. Here, we summarize an overall architecture of the unsupervised feature-learning framework for RS scene classification.这些浅层模型可以考虑以分层的方式堆叠成深层版本[31],[106]。在这里,我们总结了RS场景分类的无监督特征学习框架的整体架构。 As depicted in Figure 5, the framework consists of four parts: 1) patch extraction, 2) feature extraction, 3) feature representation, and 4) classification. 如图5所示,该框架由四个部分组成:1)斑块提取,2)特征提取,3)特征表示,4)分类。

2.4.2 PATCH EXTRACTION

In this step, the patches are extracted from the image by using random sampling or another method. Each patch has a dimension of w×w and has three bands (R, G, and B), with w referred to as the receptive field size.在这一步骤中,利用随机采样或其他方法从图像中提取出斑块。每个斑块的维度为w×w,有三个波段(R、G、B),w称为接受场尺寸。Each w×w patch can be represented as a vector in ![]() of the pixel intensity values, with N=w×w×3. 每个w×w斑块可以用

of the pixel intensity values, with N=w×w×3. 每个w×w斑块可以用![]() 表示像素强度值的向量,N=w×w×3。A data set of m sampled patches can thus be constructed. Then various features can be extracted from the image patch to construct the training and test data, such as raw pixel or other low-level features (e.g., color histogram, local binary pattern, and SIFT). 因此,可以构建一个由m个采样斑块组成的数据集。然后可以从图像斑块中提取各种特征来构建训练和测试数据,如原始像素或其他低级特征(如颜色直方图、局部二元模式和SIFT)。The patch feature composed of the training feature and test feature is then fed into an unsupervised feature-learning method that is used for the unsupervised learning of the feature extractor W. 然后将训练特征和测试特征组成的斑块特征输入到无监督特征学习方法中,用于特征提取器W的无监督学习。

表示像素强度值的向量,N=w×w×3。A data set of m sampled patches can thus be constructed. Then various features can be extracted from the image patch to construct the training and test data, such as raw pixel or other low-level features (e.g., color histogram, local binary pattern, and SIFT). 因此,可以构建一个由m个采样斑块组成的数据集。然后可以从图像斑块中提取各种特征来构建训练和测试数据,如原始像素或其他低级特征(如颜色直方图、局部二元模式和SIFT)。The patch feature composed of the training feature and test feature is then fed into an unsupervised feature-learning method that is used for the unsupervised learning of the feature extractor W. 然后将训练特征和测试特征组成的斑块特征输入到无监督特征学习方法中,用于特征提取器W的无监督学习。

2.4.3 FEATURE EXTRACTION

After the unsupervised feature learning, the features can be extracted from the training and test images using the learned feature extractor W, as illustrated in Figure 6.在无监督的特征学习之后,可以使用学习到的特征提取器W从训练和测试图像中提取特征,如图6所示。 Given a w-×-w image patch, we can now extract a representative ![]() for that patch by using the learned feature extractor

for that patch by using the learned feature extractor ![]() 。给定一个w-×-w的图像斑块,我们现在可以通过使用学习到的特征提取器

。给定一个w-×-w的图像斑块,我们现在可以通过使用学习到的特征提取器![]() 来提取该斑块的代表

来提取该斑块的代表![]() 。We then define a new representation of the entire image using the feature extractor function

。We then define a new representation of the entire image using the feature extractor function ![]() with each image. 然后,我们使用每个图像的特征提取函数

with each image. 然后,我们使用每个图像的特征提取函数![]() 对整个图像定义一个新的表示。Specifically, given an image of n-×-n pixels (with three channels: R, G, and B), we can define an ( n-w+1)-×-(n-w+1) representation (with K channels) by computing the representative

对整个图像定义一个新的表示。Specifically, given an image of n-×-n pixels (with three channels: R, G, and B), we can define an ( n-w+1)-×-(n-w+1) representation (with K channels) by computing the representative ![]() for each w-×-w subpatch of the input image. 具体来说,给定一幅n-×-n像素的图像(有三个通道:R,G,B),我们可以通过计算输入图像的每个w-×-w子块的代表

for each w-×-w subpatch of the input image. 具体来说,给定一幅n-×-n像素的图像(有三个通道:R,G,B),我们可以通过计算输入图像的每个w-×-w子块的代表![]() 来定义一个( n-w+1)-×-(n-w+1)的代表(有K个通道)。 More formally, we denote

来定义一个( n-w+1)-×-(n-w+1)的代表(有K个通道)。 More formally, we denote ![]() as the K-dimensional feature extracted from location i, j of the input image. 更正式地,我们表示

as the K-dimensional feature extracted from location i, j of the input image. 更正式地,我们表示![]() 是从输入图像的位置i,j中提取的K维特征。For computational efficiency, we can also convolute our n-×-n image with a step size (or stride) greater than 1 across the image. 为了提高计算效率,我们也可以对n-×-n图像进行卷积,整个图像的步长(或步幅)大于1。

是从输入图像的位置i,j中提取的K维特征。For computational efficiency, we can also convolute our n-×-n image with a step size (or stride) greater than 1 across the image. 为了提高计算效率,我们也可以对n-×-n图像进行卷积,整个图像的步长(或步幅)大于1。

FIGURE 6.特征提取采用w-×-w特征提取器,步幅为s,我们首先提取w-×-w的斑块,每个斑块之间用s个像素隔开。然后将它们映射到K维的特征向量上,形成新的图像表示。然后将这些向量汇集到图像的16个象限,形成分类的特征向量。

2.4.4 FEATURE REPRESENTATION

After the feature extraction, the new feature representation for an image scene will usually have a very high dimensionality. For computational efficiency and storage volume, it is standard practice to use max-pooling or another strategy to reduce the dimensionality of the image representation [112], [36]. 在特征提取后,图像场景的新特征表示通常会有很高的维度。为了计算效率和存储量,标准的做法是使用最大池化或其他策略来降低图像表示的维度[112],[36]。

For a stride of s = 1, the feature mapping produces an ( n-w+1)-×-( n-w+1)-×-K representation. 对于s=1的步长,特征映射产生( n-w+1)-×-( n-w+1)-×-K表示。We can reduce this by finding the maximum over local regions of ![]() ,as done previously. This procedure is commonly used in computer vision,

,as done previously. This procedure is commonly used in computer vision,

with many variations, as well as in deep feature learning. 我们可以通过寻找![]() 的局部区域的最大值来减少这个问题,就像之前做的那样。这个过程在计算机视觉中常用,有很多变化,在深度特征学习中也是如此。

的局部区域的最大值来减少这个问题,就像之前做的那样。这个过程在计算机视觉中常用,有很多变化,在深度特征学习中也是如此。

2.4.5 CLASSIFICATION

Finally, the extracted feature is combined with the SVM or another classifier to predict the scene label. 最后,将提取的特征与SVM或其他分类器相结合,预测场景标签。 However, most methods for unsupervised feature learning produce filters that operate either on intensity or color information.然而,大多数无监督特征学习的方法都会产生对强度或颜色信息进行操作的滤波器。Vladimir [113] proposed a quaternion PCA and k-means combined approach for unsupervised feature learning that makes joint encoding of the intensity and color information possible. Vladimir[113]提出了一种四元PCA和k-means相结合的无监督特征学习方法,使得强度和颜色信息的联合编码成为可能。In addition, Cheriyadat [58] introduced a variant of sparse coding that combines local SIFT-based feature descriptors to generate a new sparse representation, producing an excellent classification accuracy. 此外,Cheriyadat[58]引入了一种稀疏编码的变体,将基于SIFT的局部特征描述符结合起来,生成新的稀疏表示,产生了优秀的分类精度。The sparse AE-based method also produces excellent performance.基于稀疏AE的方法也会产生优异的性能。 In [31], Zhang et al. proposed a saliency-guided sparse AE method to learn a set of feature extractors that are robust and efficient, proposing a saliency-guided sampling strategy to extract a representative set of patches from a VHR image so that the salient parts of the image that contain the representative information in the VHR image can be explored, which differs from the traditional random sampling strategy. 在[31]中,Zhang等人提出了一种显著性引导的稀疏AE方法,以学习一组稳健高效的特征提取器,提出了一种显著性引导的采样策略,从VHR图像中提取一组有代表性的斑块,从而可以探索出VHR图像中包含代表性信息的突出部分,这与传统的随机采样策略不同。They also explored the new dropout technique in the feature-learning procedure to reduce data overfitting [114]. The extracted feature generated from the learned feature extractors can characterize a complex scene very well and can produce an excellent classification accuracy.他们还在特征学习过程中探索了新的dropout技术,以减少数据过拟合[114]。通过学习的特征提取器产生的提取特征可以很好地描述复杂场景的特征,可以产生很好的分类精度。

2.4.6 SUPERVISED HIERARCHICAL FEARTURE-LEARNING-BASED METHODS

Before 2006, it was believed that training deep supervised neural networks was too difficult to perform (and indeed did not work). The first breakthrough in training happened in Geoff Hinton’s lab with an unsupervised pretraining by RBMs, as discussed in the previous subsection. 在2006年之前,人们认为训练深度监督神经网络太难了,无法进行(事实上也没有用)。训练方面的第一次突破发生在Geoff Hinton的实验室,由RBMs进行无监督的预训练,如前一小节所述。 However, more recently, it was discovered that one could train deep supervised networks by proper initialization, just large enough for the gradients to flow well and the activations to convey useful information.然而,最近发现,人们可以通过适当的初始化来训练深度监督网络,只要足够大,梯度就能很好地流动,激活就能传递有用的信息。 These good results with the pure supervised training of deep networks seem to be especially apparent when large quantities of labeled data are available.当有大量的标签数据时,深度网络的纯监督训练的这些良好效果似乎特别明显。

In the early years after 2010, based on the latent Dirichlet allocation (LDA) model [115], various supervised hierarchical feature-learning methods have been proposed in the RS community [116]–[120]. 2010年以后的初期,基于潜伏的Dirichlet分配(LDA)模型[115],RS界提出了各种监督的分层特征学习方法[116]-[120]。LDA is a generative probabilistic graphical model for independent collections of discrete data and is a three-level hierarchical model, in which the documents inside a corpus are represented as random mixtures over a set of latent variables called topics. LDA是一种针对离散数据独立集合的生成性概率图形模型,是一种三层层次模型,其中,语料库内的文档被表示为一组称为主题的潜变量上的随机混合物。Each topic is in turn characterized by a distribution over words. The LDA model captures all of the important information contained in a corpus by considering only the statistics of the words. 每一个主题又由一个词的分布来描述。LDA模型只考虑词的统计量,就能捕捉到语料库中的所有重要信息。The contextual relationships are neglected due to the Bayesian assumption. For this reason, LDA iscategorized as a bag of words model. Its main characteristic is based on the words’ exchangeability. 由于贝叶斯假设,上下文关系被忽略了。为此,LDA被归类为词袋模型。其主要特点是基于词的可交换性。The LDA-based supervised hierarchical feature-learning approaches have been shown to generate excellent hierarchical feature representations for RS scene classification. 基于LDA的监督分层特征学习方法已经被证明可以为RS场景分类生成优秀的分层特征表示。