Installing torch 2.0.0 + tensorflow 2.12.0 + NVIDIA 530.30.02 + CUDA 12.1.1 + cuDNN 8.9.0 + TensorRT 8.6.0 on Linux (CentOS)

NVIDIA

wget https://us.download.nvidia.cn/XFree86/Linux-x86_64/530.30.02/NVIDIA-Linux-x86_64-530.30.02.run

If wget cannot connect, open the URL in a browser, download the file, and then upload it to the server.

chmod +x NVIDIA-Linux-x86_64-530.30.02.run

Once the file is executable, run the installer (-s runs it silently):

sh ./NVIDIA-Linux-x86_64-530.30.02.run -s

After the driver is installed, verify it:

# nvidia-smi
Wed Apr 26 16:12:43 2023
+---------------------------------------------------------------------------------------+
| NVIDIA-SMI 530.30.02              Driver Version: 530.30.02    CUDA Version: 12.1     |
|-----------------------------------------+----------------------+----------------------+
| GPU  Name                  Persistence-M| Bus-Id        Disp.A | Volatile Uncorr. ECC |
| Fan  Temp  Perf            Pwr:Usage/Cap|         Memory-Usage | GPU-Util  Compute M. |
|                                         |                      |               MIG M. |
|=========================================+======================+======================|
|   0  NVIDIA GeForce GTX 1080 Ti      Off| 00000000:01:00.0 Off |                  N/A |
| 20%   39C    P0               56W / 250W|      0MiB / 11264MiB |      2%      Default |
|                                         |                      |                  N/A |
+-----------------------------------------+----------------------+----------------------+

+---------------------------------------------------------------------------------------+
| Processes:                                                                            |
|  GPU   GI   CI        PID   Type   Process name                            GPU Memory |
|        ID   ID                                                             Usage      |
|=======================================================================================|
|  No running processes found                                                           |
+---------------------------------------------------------------------------------------+
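For a scripted check, nvidia-smi can also print selected fields as CSV. The sketch below is only an illustration: the helper names `parse_gpu_csv` and `query_gpus` are made up for this post, and it assumes GPU names contain no commas.

```python
import shutil
import subprocess

# Fields requested from nvidia-smi via --query-gpu
QUERY_FIELDS = ["name", "driver_version", "memory.total"]


def parse_gpu_csv(text):
    """Turn `--format=csv,noheader` output into one dict per GPU."""
    gpus = []
    for line in text.strip().splitlines():
        values = [v.strip() for v in line.split(",")]
        if len(values) == len(QUERY_FIELDS):
            gpus.append(dict(zip(QUERY_FIELDS, values)))
    return gpus


def query_gpus():
    """Run nvidia-smi if it is on PATH; return [] when it is missing or fails."""
    if shutil.which("nvidia-smi") is None:
        return []
    result = subprocess.run(
        ["nvidia-smi", "--query-gpu=" + ",".join(QUERY_FIELDS), "--format=csv,noheader"],
        capture_output=True,
        text=True,
        check=False,
    )
    return parse_gpu_csv(result.stdout) if result.returncode == 0 else []


for gpu in query_gpus():
    print(gpu)
```

An empty result means either the driver is not installed or nvidia-smi is not on PATH.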

CUDA

wget https://developer.download.nvidia.com/compute/cuda/12.1.1/local_installers/cuda_12.1.1_530.30.02_linux.run

If wget cannot connect, open the URL in a browser, download the file, and then upload it to the server.

sudo sh cuda_12.1.1_530.30.02_linux.run

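Two of the installer screens need attention:

1. Type accept to accept the license agreement.

2. Deselect the Driver entry (clear the [X] next to Driver), because the driver was already installed in the previous step.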

Verify the installation. If nvcc is not found, add /usr/local/cuda/bin to PATH first:

# nvcc -V

nvcc: NVIDIA (R) Cuda compiler driver

Copyright (c) 2005-2023 NVIDIA Corporation

Built on Mon_Apr__3_17:16:06_PDT_2023

Cuda compilation tools, release 12.1, V12.1.105

Build cuda_12.1.r12.1/compiler.32688072_0
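If the toolkit version is needed in a script, the release line above can be parsed with a regular expression. This is a minimal sketch; `parse_nvcc_release` is a hypothetical helper name, and the pattern only assumes the "release X.Y, VX.Y.Z" wording shown in the output above.

```python
import re
import shutil
import subprocess


def parse_nvcc_release(output):
    """Return (release, full_version) from `nvcc -V` text, or None if absent."""
    match = re.search(r"release (\d+\.\d+), V(\d+\.\d+\.\d+)", output)
    return match.groups() if match else None


# Parse the line shown in this post
sample = "Cuda compilation tools, release 12.1, V12.1.105"
print(parse_nvcc_release(sample))

# Parse the local toolkit, if nvcc is on PATH
if shutil.which("nvcc"):
    local = subprocess.run(["nvcc", "-V"], capture_output=True, text=True, check=False)
    print(parse_nvcc_release(local.stdout))
```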

cuDNN

https://developer.nvidia.com/rdp/cudnn-download

After the download finishes, extract the archive on the server:

tar -xJvf cudnn-linux-x86_64-8.9.0.131_cuda12-archive.tar.xz

Run the following commands one by one to install cuDNN:

sudo cp cudnn-linux-x86_64-8.9.0.131_cuda12-archive/include/cudnn*.h /usr/local/cuda/include/

sudo cp -P cudnn-linux-x86_64-8.9.0.131_cuda12-archive/lib/libcudnn* /usr/local/cuda/lib64/

sudo chmod a+r /usr/local/cuda/include/cudnn*.h

sudo chmod a+r /usr/local/cuda/lib64/libcudnn*

Because the CUDA files are large, you can use symbolic links where appropriate.
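One way to confirm which cuDNN version is in place is to read the version macros from the headers. cuDNN 8 defines CUDNN_MAJOR, CUDNN_MINOR and CUDNN_PATCHLEVEL in cudnn_version.h. The sketch below assumes that header sits in /usr/local/cuda/include; if it does not, pass the include directory of the extracted archive instead. Both function names are made up for illustration.

```python
import re
from pathlib import Path


def parse_cudnn_version(header_text):
    """Extract (major, minor, patch) from the text of cudnn_version.h."""
    parts = []
    for macro in ("CUDNN_MAJOR", "CUDNN_MINOR", "CUDNN_PATCHLEVEL"):
        match = re.search(rf"^\s*#define\s+{macro}\s+(\d+)", header_text, re.MULTILINE)
        if match is None:
            return None
        parts.append(int(match.group(1)))
    return tuple(parts)


def installed_cudnn_version(include_dir="/usr/local/cuda/include"):
    """Return the version tuple, or None when cudnn_version.h is not there."""
    header = Path(include_dir) / "cudnn_version.h"
    if not header.is_file():
        return None
    return parse_cudnn_version(header.read_text())


print(installed_cudnn_version())
```

A result of None means the header was not found in that directory, not necessarily that the libraries are missing.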

torch

conda install pytorch==2.0.0 torchvision==0.15.0 torchaudio==2.0.0 pytorch-cuda=11.8 -c pytorch -c nvidia

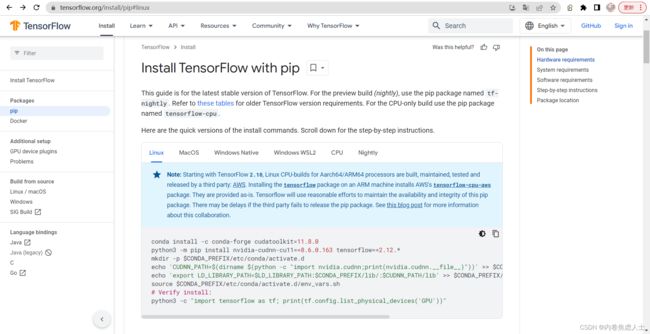
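Note that the conda command installs its own CUDA 11.8 runtime, while nvidia-smi above reports CUDA 12.1. That is fine: the "CUDA Version" shown by nvidia-smi is the newest runtime the driver supports, and older runtimes run on newer drivers. The check below is a simplified sketch of that rule (it ignores NVIDIA's minor-version compatibility details); the function names are hypothetical.

```python
def parse_version(text):
    """Convert '12.1' or '12.1.105' to a (major, minor) tuple."""
    major, minor = text.split(".")[:2]
    return int(major), int(minor)


def driver_supports_runtime(driver_cuda, runtime_cuda):
    """True when the driver's maximum CUDA version is at least the runtime's."""
    return parse_version(driver_cuda) >= parse_version(runtime_cuda)


# Driver 530.30.02 reports CUDA 12.1; the conda packages bundle CUDA 11.8
print(driver_supports_runtime("12.1", "11.8"))  # True
```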

tensorflow

The official website gives the following installation steps:

conda install -c conda-forge cudatoolkit=11.8.0

python3 -m pip install nvidia-cudnn-cu11==8.6.0.163 tensorflow==2.12.*

mkdir -p $CONDA_PREFIX/etc/conda/activate.d

echo 'CUDNN_PATH=$(dirname $(python -c "import nvidia.cudnn;print(nvidia.cudnn.__file__)"))' >> $CONDA_PREFIX/etc/conda/activate.d/env_vars.sh

echo 'export LD_LIBRARY_PATH=$LD_LIBRARY_PATH:$CONDA_PREFIX/lib/:$CUDNN_PATH/lib' >> $CONDA_PREFIX/etc/conda/activate.d/env_vars.sh

source $CONDA_PREFIX/etc/conda/activate.d/env_vars.sh

# Verify install:

python3 -c "import tensorflow as tf; print(tf.config.list_physical_devices('GPU'))"

When the last command finishes, it prints:

[PhysicalDevice(name='/physical_device:GPU:0', device_type='GPU')]
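If the list comes back empty, a common cause is that LD_LIBRARY_PATH does not point at the cuDNN libraries. The following sketch lists which LD_LIBRARY_PATH entries actually contain a libcudnn file; `dirs_containing` is a made-up helper, and the glob pattern is an assumption about how the shared libraries are named.

```python
import os
from pathlib import Path


def dirs_containing(pattern, search_path):
    """Return the entries of a colon-separated path that hold a file matching pattern."""
    hits = []
    for entry in search_path.split(os.pathsep):
        if entry and Path(entry).is_dir() and any(Path(entry).glob(pattern)):
            hits.append(entry)
    return hits


print(dirs_containing("libcudnn*.so*", os.environ.get("LD_LIBRARY_PATH", "")))
```

Run it inside the activated conda environment so that env_vars.sh has already been sourced.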

TensorRT

Prerequisites

pip install cuda-python

python3 -m pip install --upgrade tensorrt

https://developer.nvidia.com/nvidia-tensorrt-8x-download

After downloading, extract the archive:

tar -xzvf TensorRT-8.6.0.12.Linux.x86_64-gnu.cuda-12.0.tar.gz

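Add the absolute path of the TensorRT lib directory to the LD_LIBRARY_PATH environment variable (run this from the directory where the archive was extracted):

export LD_LIBRARY_PATH=$LD_LIBRARY_PATH:$(pwd)/TensorRT-8.6.0.12/lib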

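Install the Python TensorRT wheel file that matches your Python version (cp310 means Python 3.10). The wheels are in the python directory of the extracted archive:

python3 -m pip install TensorRT-8.6.0.12/python/tensorrt-8.6.0-cp310-none-linux_x86_64.whl

(Optional) Install the TensorRT lean and dispatch runtime wheel files:

python3 -m pip install TensorRT-8.6.0.12/python/tensorrt_lean-8.6.0-cp310-none-linux_x86_64.whl

python3 -m pip install TensorRT-8.6.0.12/python/tensorrt_dispatch-8.6.0-cp310-none-linux_x86_64.whl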

(Optional) Install the graphsurgeon wheel file:

cd TensorRT-8.6.0.12/graphsurgeon

python3 -m pip install graphsurgeon-0.4.6-py2.py3-none-any.whl

(Optional) Install the onnx-graphsurgeon wheel file (the path is relative to the graphsurgeon directory entered above):

cd ../onnx_graphsurgeon

python3 -m pip install onnx_graphsurgeon-0.3.12-py2.py3-none-any.whl
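To confirm that the wheels are importable, something like the following can be run in the same Python environment. It is only a sketch: `module_version` is a hypothetical helper, and it catches every exception because a package may fail to import for reasons other than being absent (for example, a shared library missing from LD_LIBRARY_PATH).

```python
import importlib


def module_version(name):
    """Return a module's __version__, or None if it cannot be imported."""
    try:
        module = importlib.import_module(name)
    except Exception:  # missing package or missing shared libraries
        return None
    return getattr(module, "__version__", "unknown")


for name in ("tensorrt", "graphsurgeon", "onnx_graphsurgeon"):
    print(name, module_version(name))
```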

Final test

See the code I wrote earlier; when it runs successfully, it prints:

torch.__version__ 2.0.0+cu118

torch.version.cuda 11.8

torch.cuda.is_available True

torch.cuda.get_device_name NVIDIA GeForce GTX 1080 Ti

torch.cuda.device_count 1

-------------------------------------------------------------

tf.__version__ 2.12.0

tf.config.list_physical_devices True

tf.test.is_built_with_cuda True
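The original script is not reproduced in this post. A minimal sketch that prints a similar report could look like this; it is not the author's code, the two function names are made up, and each half is skipped when the corresponding framework is not installed.

```python
def torch_report():
    """Collect the PyTorch fields shown above; {} if torch is not installed."""
    try:
        import torch
    except ImportError:
        return {}
    info = {
        "torch.__version__": torch.__version__,
        "torch.version.cuda": torch.version.cuda,
        "torch.cuda.is_available": torch.cuda.is_available(),
        "torch.cuda.device_count": torch.cuda.device_count(),
    }
    if info["torch.cuda.is_available"]:
        info["torch.cuda.get_device_name"] = torch.cuda.get_device_name(0)
    return info


def tensorflow_report():
    """Collect the TensorFlow fields shown above; {} if tensorflow is not installed."""
    try:
        import tensorflow as tf
    except ImportError:
        return {}
    return {
        "tf.__version__": tf.__version__,
        # True when at least one GPU is visible to TensorFlow
        "tf.config.list_physical_devices": bool(tf.config.list_physical_devices("GPU")),
        "tf.test.is_built_with_cuda": tf.test.is_built_with_cuda(),
    }


for report in (torch_report(), tensorflow_report()):
    for key, value in report.items():
        print(key, value)
    print("-" * 61)
```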

- If the following message appears:

I tensorflow/compiler/xla/stream_executor/cuda/cuda_gpu_executor.cc:996] successful NUMA node read from SysFS had negative value (-1), but there must be at least one NUMA node, so returning NUMA node zero. See more at https://github.com/torvalds/linux/blob/v6.0/Documentation/ABI/testing/sysfs-bus-pci#L344-L355

run the following command to resolve it (the change is lost on reboot, so rerun it if the message returns):

for a in /sys/bus/pci/devices/*; do echo 0 | sudo tee -a $a/numa_node; done
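To see which devices report -1 before or after running the loop, the numa_node files can be read directly. The sketch below only reads from sysfs and changes nothing; `devices_without_numa_node` is a hypothetical helper name.

```python
from pathlib import Path


def devices_without_numa_node(root="/sys/bus/pci/devices"):
    """List PCI device IDs whose numa_node file reads -1."""
    missing = []
    for node_file in sorted(Path(root).glob("*/numa_node")):
        try:
            value = node_file.read_text().strip()
        except OSError:
            continue  # unreadable entry, skip it
        if value == "-1":
            missing.append(node_file.parent.name)
    return missing


print(devices_without_numa_node())
```

An empty list after running the loop means every device now reports a NUMA node.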