live555 Audio/Video Synchronization

This is implemented on top of testOnDemandRTSPServer.cpp; only a small part of the code needs to be changed.

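Approach

Streaming audio plus video is simple: call "addSubsession()" twice, once for the video source and once for the audio source. (Your "ServerMediaSession" object then contains two "ServerMediaSubsession" objects, one for video and one for audio.)

However, for audio/video synchronization to work properly, each source (video and audio) must generate a correct "fPresentationTime" value for every frame, and these values must be aligned with wall-clock time, i.e., the time you would get by calling "gettimeofday()". The receiver can only line the two streams up against each other if both are stamped from the same clock.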
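As an illustration of what "aligned with wall-clock time" can mean in practice, here is a minimal sketch that stamps the first frame with gettimeofday() and then advances the presentation time by one frame duration per frame. It is not live555 code: `advanceBy`, `videoFrameDurationUs`, `mp3FrameDurationUs` and `PresentationClock` are hypothetical names introduced for this example, and the MP3 duration assumes MPEG-1 Layer III (1152 samples per frame).

```cpp
#include <cassert>
#include <cstdint>
#include <sys/time.h>

// Hypothetical helper: returns t advanced by durationUs microseconds,
// carrying any overflow from tv_usec into tv_sec.
timeval advanceBy(timeval t, uint64_t durationUs) {
  uint64_t usec = static_cast<uint64_t>(t.tv_usec) + durationUs;
  t.tv_sec += static_cast<time_t>(usec / 1000000);
  t.tv_usec = static_cast<suseconds_t>(usec % 1000000);
  return t;
}

// Duration of one video frame in microseconds (fraction truncated).
uint64_t videoFrameDurationUs(unsigned fps) {
  assert(fps > 0);
  return 1000000ULL / fps;
}

// Duration of one MP3 frame in microseconds, assuming MPEG-1 Layer III
// (1152 samples per frame); the fraction is truncated.
uint64_t mp3FrameDurationUs(unsigned sampleRateHz) {
  assert(sampleRateHz > 0);
  return 1152ULL * 1000000ULL / sampleRateHz;
}

// Hypothetical per-stream clock: the first frame is stamped with the
// wall-clock time, and each later frame follows one frame duration after
// the previous one.
class PresentationClock {
public:
  timeval nextFrameTime(uint64_t frameDurationUs) {
    if (!started_) {
      gettimeofday(&next_, nullptr);  // align the first frame with wall-clock time
      started_ = true;
    }
    timeval current = next_;
    next_ = advanceBy(next_, frameDurationUs);
    return current;
  }

private:
  timeval next_{};
  bool started_ = false;
};
```

In a custom source, the value returned by `nextFrameTime()` would be what you assign to fPresentationTime before delivering the frame. Because both durations are truncated to whole microseconds, a long-running stream built this way would drift slowly; a real implementation would carry the remainder forward.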

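Notes

For testing, extract the H.264 and MP3 files from the same MP4 file whenever possible, so that you can actually verify synchronization.

How to extract them:

Extracting H.264 and MP3 files from an MP4 file in detail (泷fyk's blog, CSDN)

Files I have already extracted:

Audio/video sync test H.264 file for live555 (水龙吟), CSDN resource library

Audio/video sync test MP3 file for live555 (水龙吟), CSDN resource library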

Code (modifications to testOnDemandRTSPServer.cpp)

    #include "liveMedia.hh"
    #include "BasicUsageEnvironment.hh"
    #include "announceURL.hh"

    UsageEnvironment* env;

    // To make the second and subsequent client for each stream reuse the same
    // input stream as the first client (rather than playing the file from the
    // start for each client), change the following "False" to "True".
    // Note: this is not a real unicast/multicast switch. With False, every
    // client that connects gets its own playback from the start of the file;
    // with True, later clients join at the position the first client has
    // already reached.
    // In my tests, with True all connections stayed in sync and played
    // smoothly, with very little delay.
    Boolean reuseFirstSource = False;

    // To stream *only* MPEG-1 or 2 video "I" frames
    // (e.g., to reduce network bandwidth),
    // change the following "False" to "True":
    Boolean iFramesOnly = False;

    static void announceStream(RTSPServer* rtspServer, ServerMediaSession* sms,
                               char const* streamName, char const* inputFileName); // forward

    int main(int argc, char** argv) {
      // Begin by setting up our usage environment:
      TaskScheduler* scheduler = BasicTaskScheduler::createNew();
      env = BasicUsageEnvironment::createNew(*scheduler);

      UserAuthenticationDatabase* authDB = NULL;
    #ifdef ACCESS_CONTROL
      // To implement client access control to the RTSP server, do the following:
      authDB = new UserAuthenticationDatabase;
      authDB->addUserRecord("username1", "password1"); // replace these with real strings
      // Repeat the above with each <username>, <password> that you wish to allow
      // access to the server.
    #endif

      // Create the RTSP server:
    #ifdef SERVER_USE_TLS
      // Serve RTSPS: RTSP over a TLS connection:
      RTSPServer* rtspServer = RTSPServer::createNew(*env, 322, authDB);
    #else
      // Serve regular RTSP (over a TCP connection):
      RTSPServer* rtspServer = RTSPServer::createNew(*env, 8554, authDB);
    #endif
      if (rtspServer == NULL) {
        *env << "Failed to create RTSP server: " << env->getResultMsg() << "\n";
        exit(1);
      }
    #ifdef SERVER_USE_TLS
    #ifndef STREAM_USING_SRTP
    #define STREAM_USING_SRTP True
    #endif
      rtspServer->setTLSState(PATHNAME_TO_CERTIFICATE_FILE, PATHNAME_TO_PRIVATE_KEY_FILE,
                              STREAM_USING_SRTP);
    #endif

      char const* descriptionString
        = "Session streamed by \"testOnDemandRTSPServer\"";

      // Set up each of the possible streams that can be served by the
      // RTSP server. Each such stream is implemented using a
      // "ServerMediaSession" object, plus one or more
      // "ServerMediaSubsession" objects for each audio/video substream.

      // A H.264 video elementary stream, with an MP3 audio stream added.
      // This block is the part that was modified:
      {
        // Stream name (the last component of the RTSP URL); change as needed
        char const* streamName = "h264ESVideoTest";
        // Path to the H.264 input file; change to match your setup
        char const* inputFileName = "./test/output_video.264";
        // Path to the MP3 input file; change to match your setup
        char const* inputFileNameAdus = "./test/output_audio.mp3";

        // Create the ServerMediaSession
        ServerMediaSession* sms
          = ServerMediaSession::createNew(*env, streamName, streamName,
                                          descriptionString);

        // "useADUs" and "interleaving" configure how the MP3 audio is sent.
        // useADUs = False means the MP3 frames are not repackaged as ADUs
        // ("Application Data Units"); interleaving = NULL means the data is
        // not interleaved.
        Boolean useADUs = False;
        Interleaving* interleaving = NULL;
    #ifdef STREAM_USING_ADUS
        useADUs = True;
    #ifdef INTERLEAVE_ADUS
        unsigned char interleaveCycle[] = {0,2,1,3}; // or choose your own...
        unsigned const interleaveCycleSize
          = (sizeof interleaveCycle)/(sizeof (unsigned char));
        interleaving = new Interleaving(interleaveCycleSize, interleaveCycle);
    #endif
    #endif

        // Add the audio subsession
        sms->addSubsession(MP3AudioFileServerMediaSubsession
                           ::createNew(*env, inputFileNameAdus, reuseFirstSource,
                                       useADUs, interleaving));
        // Add the video subsession
        sms->addSubsession(H264VideoFileServerMediaSubsession
                           ::createNew(*env, inputFileName, reuseFirstSource));

        // Register the ServerMediaSession with the RTSP server
        rtspServer->addServerMediaSession(sms);

        // Print the stream name, input file and URL
        announceStream(rtspServer, sms, streamName, inputFileName);
      }

      env->taskScheduler().doEventLoop(); // does not return

      return 0; // only to prevent compiler warning
    }

    static void announceStream(RTSPServer* rtspServer, ServerMediaSession* sms,
                               char const* streamName, char const* inputFileName) {
      UsageEnvironment& env = rtspServer->envir();

      env << "\n\"" << streamName << "\" stream, from the file \""
          << inputFileName << "\"\n";
      announceURL(rtspServer, sms);
    }

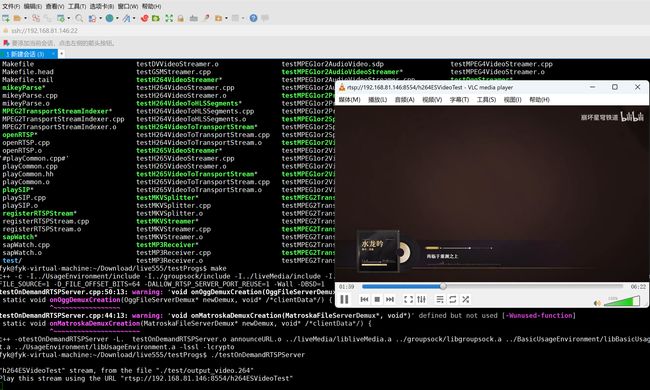

After making the changes, run the following commands one at a time from the directory that contains the modified file:

make

./testOnDemandRTSPServer

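VLC on Windows can then receive the RTSP stream. Open the URL that the server prints at startup; with the settings above (port 8554, stream name "h264ESVideoTest") it has the form rtsp://<server-ip>:8554/h264ESVideoTest.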