GoogLeNet 08

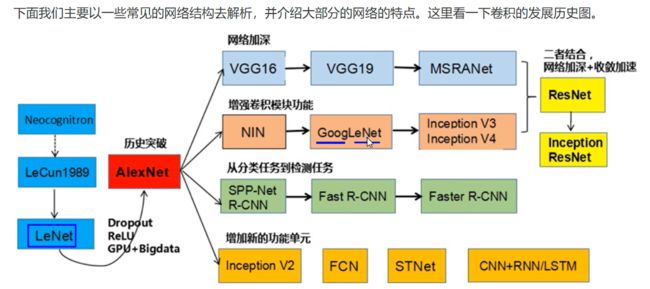

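1. Development

In 1989, Yann LeCun proposed a convolutional neural network trained with backpropagation, called LeNet.

In 1998, Yann LeCun proposed LeNet-5, a convolutional neural network that is also trained with backpropagation.

AlexNet won ILSVRC 2012 (ImageNet Large Scale Visual Recognition Challenge), raising classification accuracy from the 70%+ of traditional methods to 80%+. It was designed by Hinton and his student Alex Krizhevsky. Deep learning began to develop rapidly after that year.

VGG was proposed in 2014 by the well-known Visual Geometry Group (VGG) at the University of Oxford. It took first place in the Localization Task and second place in the Classification Task of that year's ImageNet competition. [about 138 million parameters]

GoogLeNet was proposed in 2014 by a team at Google and took first place in the Classification Task of that year's ImageNet competition. [Paper: Going deeper with convolutions] [6 million+ parameters]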

ResNet

2. GoogLeNet

2.1 Features

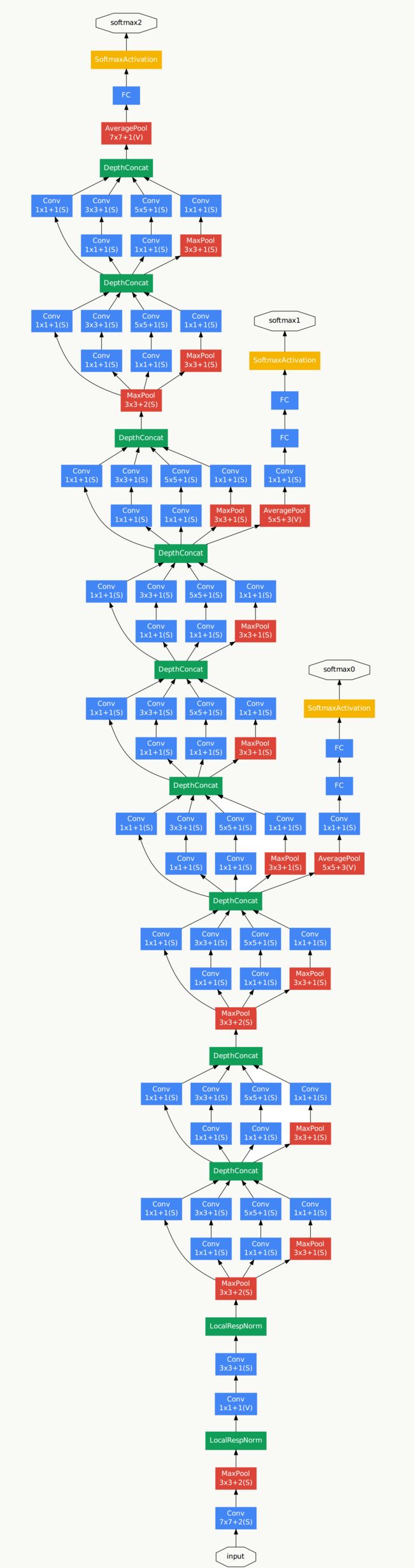

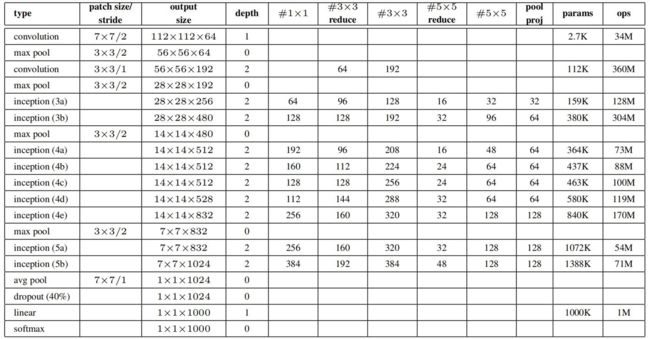

- Introduces the Inception module, which fuses feature information from several scales

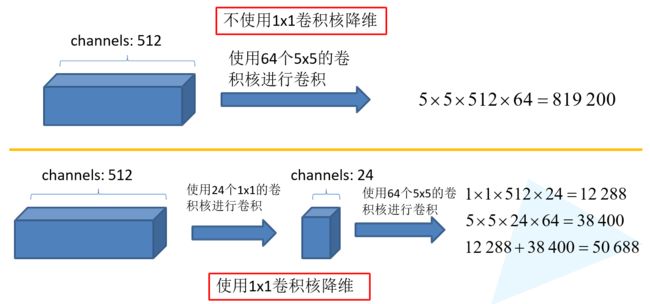

- Uses 1x1 convolutions for dimensionality reduction and channel mapping

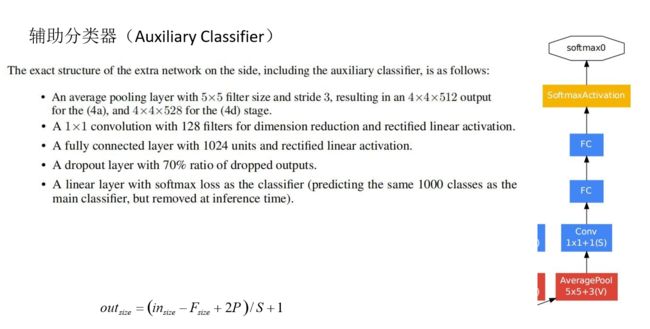

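- Adds two auxiliary classifiers to help training

- Replaces the large fully connected layers with average pooling followed by a single classification layer, which greatly reduces the number of model parameters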

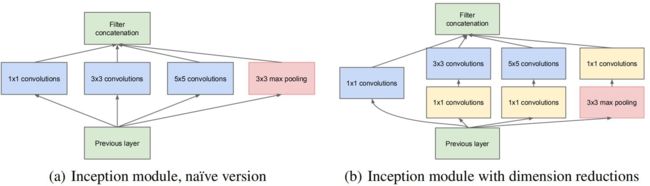
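The 1x1 convolution and average-pooling points above can be checked with a little NumPy. The sketch below is only an illustration under simplifying assumptions (channels-last arrays, no batch dimension, random weights), not how Keras implements these layers. A 1x1 convolution is one (C_in → C_out) matrix multiply applied at every pixel, and global average pooling averages each channel over the spatial grid. The weight counts compare flattening GoogLeNet's final 7x7x1024 feature map into a 1000-way fully connected layer with pooling it first. The helper names are made up for this example.

```python
import numpy as np


def conv1x1(x, weights, bias):
    """1x1 convolution on a channels-last (H, W, C_in) feature map.

    Each output pixel depends only on the input pixel at the same location,
    so the whole layer is one (C_in, C_out) matrix multiply per pixel.
    """
    return x @ weights + bias  # (H, W, C_in) @ (C_in, C_out) -> (H, W, C_out)


def global_average_pool(x):
    """Average each channel over all spatial positions: (H, W, C) -> (C,)."""
    return x.mean(axis=(0, 1))


def dense_weight_count(n_in, n_out):
    """Number of weights (biases excluded) in a fully connected layer."""
    return n_in * n_out


rng = np.random.default_rng(0)

# Channel reduction: the 28x28x192 input of inception_3a squeezed to 16 channels
x = rng.standard_normal((28, 28, 192))
w = rng.standard_normal((192, 16))
b = np.zeros(16)
y = conv1x1(x, w, b)
print(y.shape)  # (28, 28, 16)

# Classifier head on a 7x7x1024 feature map with 1000 classes
flatten_then_fc = dense_weight_count(7 * 7 * 1024, 1000)
pool_then_fc = dense_weight_count(global_average_pool(np.ones((7, 7, 1024))).size, 1000)
print(flatten_then_fc, pool_then_fc)  # 50176000 1024000
```

Pooling first shrinks the classifier's input by the 7 × 7 = 49 spatial positions, so the final layer needs 49 times fewer weights.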

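2.2 Inception Module

(1) Fewer parameters: 1x1 convolutions cut the number of channels before the expensive 3x3 and 5x5 convolutions.

(2) Multiple scales: small kernels pick up fine details, while large kernels capture larger structures. The module runs them in parallel and combines both.

On the left is the original (naive) Inception structure: several kernels scan the input separately, and their outputs are concatenated.

On the right is the version with dimensionality reduction: 1x1 convolutions are added before the 3x3 and 5x5 convolutions and after the max pooling, so fewer channels are stacked into the concatenated output.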
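To make point (1) concrete, the sketch below counts convolution weights (biases ignored) for the naive module and the dimension-reduced module. It uses the inception_3a configuration from the implementation in section 3, which has a 192-channel input. The helper names are hypothetical and exist only for this illustration. The naive pooling branch has no 1x1 projection, so it passes all 192 input channels through, and the concatenated output also grows.

```python
def conv_weights(k, c_in, c_out):
    """Weights of a k x k convolution (biases ignored)."""
    return k * k * c_in * c_out


def naive_inception(c_in, ch1x1, ch3x3, ch5x5):
    """Naive module: 1x1, 3x3 and 5x5 convs plus 3x3 max pooling, all applied to the input."""
    params = (conv_weights(1, c_in, ch1x1)
              + conv_weights(3, c_in, ch3x3)
              + conv_weights(5, c_in, ch5x5))      # max pooling has no weights
    out_channels = ch1x1 + ch3x3 + ch5x5 + c_in     # pooling keeps all c_in channels
    return params, out_channels


def reduced_inception(c_in, ch1x1, ch3x3red, ch3x3, ch5x5red, ch5x5, pool_proj):
    """Module with 1x1 reductions before the 3x3/5x5 convs and a 1x1 projection after pooling."""
    params = (conv_weights(1, c_in, ch1x1)
              + conv_weights(1, c_in, ch3x3red) + conv_weights(3, ch3x3red, ch3x3)
              + conv_weights(1, c_in, ch5x5red) + conv_weights(5, ch5x5red, ch5x5)
              + conv_weights(1, c_in, pool_proj))
    out_channels = ch1x1 + ch3x3 + ch5x5 + pool_proj
    return params, out_channels


# inception_3a: 192 input channels, Inception(64, 96, 128, 16, 32, 32)
print(naive_inception(192, 64, 128, 32))                # (387072, 416)
print(reduced_inception(192, 64, 96, 128, 16, 32, 32))  # (163328, 256)
```

In this module the reduced version needs less than half the weights of the naive version. It also emits 256 channels instead of 416, which keeps the next module's input small.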

2.3 Auxiliary Classifiers

3. GoogLeNet Implementation

3.1 Inception Implementation

```python
from tensorflow import keras
import tensorflow as tf
import numpy as np
import pandas as pd
import matplotlib.pyplot as plt


class Inception(keras.layers.Layer):
    """Inception module: four parallel branches concatenated along the channel axis."""

    def __init__(self, ch1x1, ch3x3red, ch3x3, ch5x5red, ch5x5, pool_proj, **kwargs):
        super().__init__(**kwargs)
        # Branch 1: 1x1 convolution
        self.branch1 = keras.layers.Conv2D(ch1x1, kernel_size=1, activation='relu')
        # Branch 2: 1x1 reduction, then 3x3 convolution
        self.branch2 = keras.Sequential([
            keras.layers.Conv2D(ch3x3red, kernel_size=1, activation='relu'),
            keras.layers.Conv2D(ch3x3, kernel_size=3, padding='SAME', activation='relu')
        ])
        # Branch 3: 1x1 reduction, then 5x5 convolution
        self.branch3 = keras.Sequential([
            keras.layers.Conv2D(ch5x5red, kernel_size=1, activation='relu'),
            keras.layers.Conv2D(ch5x5, kernel_size=5, padding='SAME', activation='relu')
        ])
        # Branch 4: 3x3 max pooling, then 1x1 projection
        self.branch4 = keras.Sequential([
            keras.layers.MaxPool2D(pool_size=3, strides=1, padding='SAME'),
            keras.layers.Conv2D(pool_proj, kernel_size=1, activation='relu')
        ])

    def call(self, inputs, **kwargs):
        # Every branch keeps height and width, so the outputs can be stacked on the channel axis
        branch1 = self.branch1(inputs)
        branch2 = self.branch2(inputs)
        branch3 = self.branch3(inputs)
        branch4 = self.branch4(inputs)
        outputs = keras.layers.concatenate([branch1, branch2, branch3, branch4])
        return outputs
```

3.2 Auxiliary Output Structure
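The auxiliary classifiers are attached to the outputs of inception_4a and inception_4d. For a 224x224 input, those are 14x14 maps with 512 and 528 channels. The arithmetic sketch below traces what the auxiliary head does to those shapes. It assumes the AvgPool2D layer keeps Keras's default padding='valid', because the layer code does not set padding. The helper names are made up, and the sketch builds no Keras layers.

```python
def valid_pool_size(n, pool, stride):
    """Output length of a 'valid' pooling window along one spatial axis."""
    return (n - pool) // stride + 1


def aux_head_shapes(h, w, c_in, conv_filters=128, fc_units=1024):
    """Return (pooled H, pooled W, 1x1 conv weights, flattened length, fc1 weights)."""
    ph = valid_pool_size(h, 5, 3)       # AvgPool2D(pool_size=5, strides=3)
    pw = valid_pool_size(w, 5, 3)
    conv_w = c_in * conv_filters        # 1x1 conv to 128 channels, biases ignored
    flat = ph * pw * conv_filters       # length after Flatten
    fc1_w = flat * fc_units             # Dense(1024) weights, biases ignored
    return ph, pw, conv_w, flat, fc1_w


print(aux_head_shapes(14, 14, 512))  # after inception_4a: (4, 4, 65536, 2048, 2097152)
print(aux_head_shapes(14, 14, 528))  # after inception_4d: (4, 4, 67584, 2048, 2097152)
```

Most of the head's weights sit in its first Dense layer: about 2.1 million per auxiliary classifier.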

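```python
# Auxiliary classifier attached to intermediate Inception outputs
class InceptionAux(keras.layers.Layer):
    def __init__(self, num_classes, **kwargs):
        super().__init__(**kwargs)
        self.average_pool = keras.layers.AvgPool2D(pool_size=5, strides=3)
        self.conv = keras.layers.Conv2D(128, kernel_size=1, activation='relu')
        # Create Flatten and Dropout once here instead of on every call
        self.flatten = keras.layers.Flatten()
        self.dropout1 = keras.layers.Dropout(rate=0.5)
        self.fc1 = keras.layers.Dense(1024, activation='relu')
        self.dropout2 = keras.layers.Dropout(rate=0.5)
        self.fc2 = keras.layers.Dense(num_classes)
        self.softmax = keras.layers.Softmax()

    def call(self, inputs, training=None):
        x = self.average_pool(inputs)   # e.g. 14x14 -> 4x4 for a 224x224 model input
        x = self.conv(x)                # 1x1 conv to 128 channels
        x = self.flatten(x)
        x = self.dropout1(x, training=training)
        x = self.fc1(x)
        x = self.dropout2(x, training=training)
        x = self.fc2(x)
        return self.softmax(x)
```

3.3 GoogLeNet Implementation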
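The MaxPool2D padding comment inside GoogLeNet() is easiest to follow with a full spatial trace. This sketch applies TensorFlow's output-size rules, SAME: ceil(n / stride) and VALID: ceil((n - k + 1) / stride), to each layer that changes or could change the spatial size, for a 224x224 input. It models the Inception modules as stride-1 SAME layers because every branch preserves height and width. The names `out_size`, `LAYERS` and `trace` are made up for this illustration.

```python
import math


def out_size(n, kernel, stride, padding):
    """Output length along one spatial axis, following TensorFlow's padding rules."""
    if padding == "same":
        return math.ceil(n / stride)
    if padding == "valid":
        return math.ceil((n - kernel + 1) / stride)
    raise ValueError(f"unknown padding: {padding}")


# (description, kernel, stride, padding), in the order used by GoogLeNet()
LAYERS = [
    ("conv 7x7, stride 2", 7, 2, "same"),
    ("maxpool 3x3, stride 2", 3, 2, "same"),
    ("conv 1x1", 1, 1, "same"),
    ("conv 3x3", 3, 1, "same"),
    ("maxpool 3x3, stride 2", 3, 2, "same"),
    ("inception 3a-3b", 1, 1, "same"),
    ("maxpool 3x3, stride 2", 3, 2, "same"),
    ("inception 4a-4e", 1, 1, "same"),
    ("maxpool 3x3, stride 2", 3, 2, "same"),
    ("inception 5a-5b", 1, 1, "same"),
    ("avgpool 7x7, stride 1", 7, 1, "valid"),
]


def trace(n=224):
    """Print and return the feature-map side length after each entry in LAYERS."""
    sizes = []
    for name, kernel, stride, padding in LAYERS:
        n = out_size(n, kernel, stride, padding)
        sizes.append(n)
        print(f"{name:24s} -> {n}x{n}")
    return sizes


sizes = trace()
```

Both auxiliary classifiers attach during the 14x14 stage. The final 7x7 average pool reduces the 1024-channel inception_5b output (384 + 384 + 128 + 128 channels) to a single 1024-dimensional vector.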

```python
def GoogLeNet(im_height=224, im_width=224, class_num=1000, aux_logits=False):
    input_image = keras.layers.Input(shape=(im_height, im_width, 3), dtype='float32')
    x = keras.layers.Conv2D(64, kernel_size=7, strides=2, padding='SAME', activation='relu')(input_image)
    # MaxPool2D output size: padding='SAME' gives ceil(n / stride), e.g. 224 / 2 = 112;
    # padding='VALID' gives ceil((n - (3 - 1)) / stride), e.g. (224 - (3 - 1)) / 2 = 111.
    x = keras.layers.MaxPool2D(pool_size=3, strides=2, padding='SAME')(x)
    x = keras.layers.Conv2D(64, kernel_size=1, strides=1, padding='SAME', activation='relu')(x)
    x = keras.layers.Conv2D(192, kernel_size=3, strides=1, padding='SAME', activation='relu')(x)
    x = keras.layers.MaxPool2D(pool_size=3, strides=2, padding='SAME')(x)

    x = Inception(64, 96, 128, 16, 32, 32, name='inception_3a')(x)
    x = Inception(128, 128, 192, 32, 96, 64, name='inception_3b')(x)
    x = keras.layers.MaxPool2D(pool_size=3, strides=2, padding='SAME')(x)

    x = Inception(192, 96, 208, 16, 48, 64, name='inception_4a')(x)
    if aux_logits:
        aux1 = InceptionAux(class_num, name='aux_1')(x)
    x = Inception(160, 112, 224, 24, 64, 64, name='inception_4b')(x)
    x = Inception(128, 128, 256, 24, 64, 64, name='inception_4c')(x)
    x = Inception(112, 144, 288, 32, 64, 64, name='inception_4d')(x)
    if aux_logits:
        aux2 = InceptionAux(class_num, name='aux_2')(x)
    x = Inception(256, 160, 320, 32, 128, 128, name='inception_4e')(x)
    x = keras.layers.MaxPool2D(pool_size=3, strides=2, padding='SAME')(x)

    x = Inception(256, 160, 320, 32, 128, 128, name='inception_5a')(x)
    x = Inception(384, 192, 384, 48, 128, 128, name='inception_5b')(x)

    # On the 7x7 map (224x224 input) this average pool acts as global average pooling
    x = keras.layers.AvgPool2D(pool_size=7, strides=1)(x)
    x = keras.layers.Flatten()(x)
    x = keras.layers.Dropout(rate=0.4)(x)
    x = keras.layers.Dense(class_num)(x)
    aux3 = keras.layers.Softmax(name='aux_3')(x)  # main classifier output

    if aux_logits:
        # Blend the three softmax outputs into a single prediction
        aux = aux1 * 0.2 + aux2 * 0.3 + aux3 * 0.5
        model = keras.models.Model(inputs=input_image, outputs=aux)
    else:
        model = keras.models.Model(inputs=input_image, outputs=aux3)
    return model
```

3.4 Data Generation
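`flow_from_directory` expects one subdirectory per class under `train_dir` and `valid_dir`. With `class_mode='categorical'`, each label becomes a one-hot vector, so `num_classes` must match the number of class folders. The pure-Python sketch below only mimics that labeling convention and does not call Keras. When `classes` is not given, Keras assigns label indices to the subdirectory names in sorted (alphanumeric) order. The folder names and helper functions are hypothetical. The real mapping can be read from `train_generator.class_indices`.

```python
import os
import tempfile


def infer_class_indices(directory):
    """Map each class subdirectory name to an integer label, in sorted order."""
    names = sorted(
        entry for entry in os.listdir(directory)
        if os.path.isdir(os.path.join(directory, entry))
    )
    return {name: index for index, name in enumerate(names)}


def one_hot(index, num_classes):
    """A one-hot label vector of the kind class_mode='categorical' produces."""
    vector = [0.0] * num_classes
    vector[index] = 1.0
    return vector


with tempfile.TemporaryDirectory() as root:
    # Hypothetical class folders, created out of order on purpose
    for name in ["n2", "n0", "n1"]:
        os.makedirs(os.path.join(root, name))
    class_indices = infer_class_indices(root)

print(class_indices)                                     # {'n0': 0, 'n1': 1, 'n2': 2}
print(one_hot(class_indices["n1"], len(class_indices)))  # [0.0, 1.0, 0.0]
```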

```python
train_dir = './training/training/'
valid_dir = './validation/validation/'

# Image data generators: the training set is augmented, the validation set is only rescaled
train_datagen = keras.preprocessing.image.ImageDataGenerator(
    rescale=1. / 255,  # map pixel values from [0, 255] to [0, 1]
    rotation_range=40,
    width_shift_range=0.2,
    height_shift_range=0.2,
    shear_range=0.2,
    zoom_range=0.2,
    horizontal_flip=True,
    vertical_flip=True,
    fill_mode='nearest'
)

height = 224
width = 224
channels = 3
batch_size = 32
num_classes = 10

train_generator = train_datagen.flow_from_directory(train_dir,
                                                    target_size=(height, width),
                                                    batch_size=batch_size,
                                                    shuffle=True,
                                                    seed=7,
                                                    class_mode='categorical')

valid_datagen = keras.preprocessing.image.ImageDataGenerator(
    rescale=1. / 255
)

valid_generator = valid_datagen.flow_from_directory(valid_dir,
                                                    target_size=(height, width),
                                                    batch_size=batch_size,
                                                    shuffle=True,
                                                    seed=7,
                                                    class_mode='categorical')

print(train_generator.samples)
print(valid_generator.samples)
```

3.5 Training
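The training code calls GoogLeNet() with its default `aux_logits=False`, so only the main classifier is trained. For reference, the GoogLeNet paper does not use the 0.2/0.3/0.5 blend of probabilities found in the `aux_logits=True` branch. Instead, during training it adds each auxiliary classifier's loss to the main loss with a discount weight of 0.3, and it discards the auxiliary branches at inference. The NumPy sketch below computes that weighted loss using made-up predictions. The function names are hypothetical.

```python
import numpy as np


def categorical_crossentropy(y_true, y_pred, eps=1e-7):
    """Mean cross-entropy between one-hot labels and predicted probabilities."""
    y_pred = np.clip(y_pred, eps, 1.0 - eps)
    return float(np.mean(-np.sum(y_true * np.log(y_pred), axis=-1)))


def googlenet_loss(y_true, main_pred, aux1_pred, aux2_pred, aux_weight=0.3):
    """Main loss plus both auxiliary losses, each discounted by aux_weight."""
    main = categorical_crossentropy(y_true, main_pred)
    aux = categorical_crossentropy(y_true, aux1_pred) + categorical_crossentropy(y_true, aux2_pred)
    return main + aux_weight * aux


# Two samples, three classes; the probabilities are invented for illustration
y_true = np.array([[1.0, 0.0, 0.0], [0.0, 0.0, 1.0]])
main_pred = np.array([[0.7, 0.2, 0.1], [0.1, 0.1, 0.8]])
aux1_pred = np.array([[0.5, 0.3, 0.2], [0.2, 0.3, 0.5]])
aux2_pred = np.array([[0.6, 0.2, 0.2], [0.1, 0.2, 0.7]])

print(googlenet_loss(y_true, main_pred, aux1_pred, aux2_pred))
```

In Keras, a similar weighting would need a model that returns aux3, aux1 and aux2 as three separate outputs rather than the blended `aux` tensor, compiled with `loss_weights=[1.0, 0.3, 0.3]`. That variant is not part of the code in this post.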

```python
googlenet = GoogLeNet(class_num=num_classes)
googlenet.summary()

googlenet.compile(optimizer='adam',
                  loss='categorical_crossentropy',
                  metrics=['acc'])

history = googlenet.fit(train_generator,
                        steps_per_epoch=train_generator.samples // batch_size,
                        epochs=10,
                        validation_data=valid_generator,
                        validation_steps=valid_generator.samples // batch_size)
```