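Docker Compose: A Brief Deployment Guide

Preface

Consul is an open-source tool from HashiCorp for service discovery and configuration in distributed systems.

● Supports health checks and provides key-value storage;

● Written in Go (Golang), so it is highly portable;

● Supports ACL access control;

● Works seamlessly with lightweight containers such as Docker.

Docker Compose, formerly known as Fig, is a tool for defining and running multiple Docker containers.

With Docker Compose you no longer need shell scripts to start containers.

Docker Compose is well suited to development scenarios that combine several containers.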


Note: the main body of the article follows; the examples below are for reference only.

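I. Common Docker Compose fields and commands

1. Fields

build (dockerfile, context): the Dockerfile name and the build context path used to build the image

image: the image to use

command: the command to run, overriding the image's default command

container_name: the container name. Container names must be unique, so a service with a custom name cannot be scaled

deploy: deployment and runtime settings for the service; only takes effect in Swarm mode

environment: adds environment variables

networks: the networks to join

ports: publishes container ports, same as -p. Quote the mapping when the container port is below 60, because YAML can parse an unquoted xx:yy value as a base-60 number

volumes: mounts a host path or a named volume

restart: restart policy; one of no (default), always, on-failure, unless-stopped

hostname: the container's hostname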
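The note on ports is easier to see with numbers. The sketch below is only an illustration of the base-60 (sexagesimal) rule in YAML 1.1; the function name yaml11_base60 is made up for this example and is not part of Docker Compose.

```shell
#!/bin/sh
# yaml11_base60 HOST:CONTAINER
# Prints the integer that a YAML 1.1 parser can produce for an unquoted
# xx:yy scalar whose second part is below 60 (sexagesimal notation).
# Hypothetical helper for illustration only.
yaml11_base60() {
    host=${1%%:*}        # text before the colon
    container=${1##*:}   # text after the colon
    echo $(( host * 60 + container ))
}

# An unquoted mapping such as 8080:22 may be read as one number, not two ports.
yaml11_base60 "8080:22"   # prints 484822
```

Writing the mapping as a string, for example "8080:22", avoids the problem. Mappings such as 1216:80 are unaffected because 80 is not a valid base-60 digit.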

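2. Commands

build: rebuilds the services

ps: lists containers

up: creates and starts containers

exec: runs a command inside a container

scale: sets the number of containers to run for a service

top: shows the processes running in the containers

logs: shows container output

down: stops and removes the containers and networks (add -v to also remove the volumes)

stop/start/restart: stops, starts, or restarts services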

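II. Deploying with Compose

1. Environment setup: install Docker on every host (covered in the Docker basics material)

yum install docker-ce -y

// Download Compose (or upload the docker-compose binary by hand, as in the transcript below)

curl -L https://github.com/docker/compose/releases/download/1.21.1/docker-compose-$(uname -s)-$(uname -m) -o /usr/local/bin/docker-compose

// If the binary was uploaded rather than downloaded, copy it into place

cp -p docker-compose /usr/local/bin/

chmod +x /usr/local/bin/docker-compose

mkdir /root/compose_nginx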
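The download URL depends on the operating system and CPU architecture of the host, which is why the release file name ends in the output of uname -s and uname -m. As a minimal sketch, the helper below (compose_download_url is a name invented for this example) only builds and prints the URL, so it can be checked before anything is downloaded.

```shell
#!/bin/sh
# compose_download_url VERSION
# Prints the GitHub release URL of the docker-compose binary that matches
# this machine's kernel name (uname -s) and architecture (uname -m).
# Hypothetical helper; it does not download anything.
compose_download_url() {
    version=$1
    echo "https://github.com/docker/compose/releases/download/${version}/docker-compose-$(uname -s)-$(uname -m)"
}

# 1.21.1 is the version used in this article.
compose_download_url 1.21.1
```

On a 64-bit Linux host the file name typically ends in docker-compose-Linux-x86_64.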

[root@docker ~]# rz -E

rz waiting to receive.

[root@docker ~]# ls

anaconda-ks.cfg docker-compose initial-setup-ks.cfg

[root@docker ~]# chmod +x docker-compose

[root@docker ~]# ls

anaconda-ks.cfg docker-compose initial-setup-ks.cfg

[root@docker ~]# mv docker-compose /usr/local/bin

[root@docker ~]# mkdir /root/compose_nginx

[root@docker ~]# cd compose_nginx/

[root@docker compose_nginx]# ls

[root@docker compose_nginx]# pwd

/root/compose_nginx

vim /root/compose_nginx/docker-compose.yml

version: '3'
services:
  nginx:
    hostname: nginx
    build:
      context: ./nginx
      dockerfile: Dockerfile
    ports:
      - 1216:80
      - 1217:443
    networks:
      - cluster
    volumes:
      - ./wwwroot:/usr/local/nginx/html
networks:
  cluster:


vim dockerfile

# Stage 1: compile nginx from source
FROM centos:7 as build
ADD nginx-1.15.9.tar.gz /mnt
WORKDIR /mnt/nginx-1.15.9
RUN yum install -y gcc pcre pcre-devel devel zlib-devel make &> /dev/null && \
    yum clean all && \
    sed -i 's/CFLAGS="$CFLAGS -g"/#CFLAGS="$CFLAGS -g"/g' auto/cc/gcc && \
    ./configure --prefix=/usr/local/nginx &> /dev/null && \
    make &> /dev/null && \
    make install &> /dev/null && \
    rm -rf /mnt/nginx-1.15.9

# Stage 2: copy only the compiled nginx into a clean image
FROM centos:7
EXPOSE 80
VOLUME ["/usr/local/nginx/html"]
COPY --from=build /usr/local/nginx /usr/local/nginx
CMD ["/usr/local/nginx/sbin/nginx","-g","daemon off;"]

docker-compose -f docker-compose.yml up -d

[root@docker compose_nginx]# echo "this is a container nginx" >> wwwroot/index.html

[root@docker compose_nginx]# tree ./

./
├── docker-compose.yml
├── nginx
│   ├── dockerfile
│   └── nginx-1.15.9.tar.gz
└── wwwroot
    └── index.html

2 directories, 4 files

[root@docker compose_nginx]# docker-compose -f docker-compose.yml up -d

Creating network "compose_nginx_cluster" with the default driver

Building nginx

Step 1/9 : FROM centos:7 as build

---

Successfully built 921483a5da3b

Successfully tagged compose_nginx_nginx:latest

WARNING: Image for service nginx was built because it did not already exist. To rebuild this image you must use `docker-compose build` or `docker-compose up --build`.

Creating compose_nginx_nginx_1 ... done

[root@docker compose_nginx]# docker-compose ps

Name                    Command                          State   Ports
------------------------------------------------------------------------
compose_nginx_nginx_1   /usr/local/nginx/sbin/ngin ...   Up      0.0.0.0:1217->443/tcp,:::1217->443/tcp, 0.0.0.0:1216->80/tcp,:::1216->80/tc

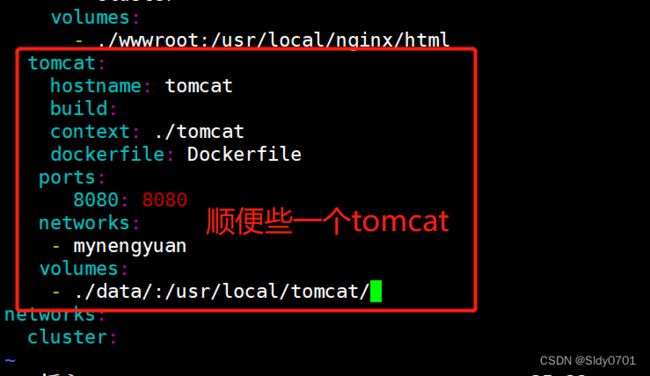

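  # A second service; it goes under the existing services: key.
  # The mynengyuan network must also be declared under the top-level networks: key.
  tomcat:
    hostname: tomcat
    build:
      context: ./tomcat
      dockerfile: Dockerfile
    ports:
      - 8080:8080
    networks:
      - mynengyuan
    volumes:
      - ./data/:/usr/local/tomcat/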

[root@docker compose_nginx]# docker-compose -f docker-compose.yml up -d

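Docker basics and routine operations

1. Commands for managing images and containers

2. Dockerfile

3. Docker networking

4. Private Docker registries

registry

harbor

docker-compose: a tool for orchestrating and managing resources (compare Docker Swarm and K8s)

III. Deploying Consul

Consul serves as the service registry.

Server consul (also runs nginx): 192.168.10.30, with docker-ce, consul, consul-template

Server docker: 192.168.10.41, with docker-ce, registrator (the registration component)

consul-template: templates (configuration updates)

registrator: automatic discovery

Each time a backend container is created, registrator picks it up and registers it with Consul. The change in Consul then triggers consul-template, which re-renders the template and hot-reloads the configuration.

Core mechanism: Consul provides automatic discovery and automatic updates for container services (additions, removals, lifecycle).

1. Consul server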

mkdir /root/consul

cp consul_0.9.2_linux_amd64.zip /root/consul

cd /root/consul

unzip consul_0.9.2_linux_amd64.zip

mv consul /usr/bin

[root@consul ~]# rz -E

rz waiting to receive.

[root@consul ~]# ls

anaconda-ks.cfg consul_0.9.2_linux_amd64.zip initial-setup-ks.cfg

[root@consul ~]# mkdir consul


[root@consul ~]# mv consul_0.9.2_linux_amd64.zip consul

[root@consul ~]# ls

anaconda-ks.cfg consul initial-setup-ks.cfg

[root@consul ~]# cd consul/

[root@consul consul]# ls

consul_0.9.2_linux_amd64.zip

[root@consul consul]# unzip consul_0.9.2_linux_amd64.zip

Archive: consul_0.9.2_linux_amd64.zip

inflating: consul

[root@consul consul]# mv consul /usr/bin/

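Options used to start the agent:

consul agent: runs the Consul agent

-server: runs the agent in server mode

-bootstrap: lets this single server elect itself leader, which suits a one-node cluster

-ui: enables the built-in web UI

-data-dir=/var/lib/consul-data: directory where the agent stores its state

-bind=192.168.10.30: address used for communication inside the cluster

-client=0.0.0.0: address on which the client interfaces (HTTP, DNS) listen

-node=consul-server01: name of this node

The trailing &> /var/log/consul.log & writes the output to a log file and runs the agent in the background.

consul agent \
  -server \
  -bootstrap \
  -ui \
  -data-dir=/var/lib/consul-data \
  -bind=192.168.10.30 \
  -client=0.0.0.0 \
  -node=consul-server01 &> /var/log/consul.log &

## View cluster information

[root@consul consul]# jobs

[1]+ Running consul agent -server -bootstrap -ui -data-dir=/var/lib/consul-data -bind=192.168.10.30 -client=0.0.0.0 -node=consul-server01 &>/var/log/consul.log &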

[root@consul consul]# consul members

Node Address Status Type Build Protocol DC

consul-server01 192.168.10.30:8301 alive server 0.9.2 2 dc1

[root@consul consul]# consul info

[root@consul consul]# consul info | grep leader

leader = true

leader_addr = 192.168.10.30:8300

##通过httpd api 获取集群信息

curl 127.0.0.1:8500/v1/status/peers //查看集群server成员

curl 127.0.0.1:8500/v1/status/leader //集群 Raf leader

curl 127.0.0.1:8500/v1/catalog/services //注册的所有服务

curl 127.0.0.1:8500/v1/catalog/nginx //查看 nginx 服务信息

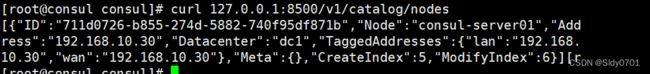

[root@consul consul]# curl 127.0.0.1:8500/v1/catalog/nodes //集群节点详细信息

[root@consul consul]# curl 127.0.0.1:8500/v1/catalog/nodes

[{"ID":"711d0726-b855-274d-5882-740f95df871b","Node":"consul-server01","Address":"192.168.10.30","Datacenter":"dc1","TaggedAddresses":{"lan":"192.168.10.30","wan":"192.168.10.30"},"Meta":{},"CreateIndex":5,"ModifyIndex":6}][r
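The JSON returned by the catalog endpoints is compact and hard to read in a terminal. As a rough sketch, the node names can be pulled out with grep and cut. The function name node_names is invented here, and the approach assumes compact JSON without spaces around the colons, as in the response above, plus a grep that supports -o (GNU, BSD and BusyBox grep do). A real JSON tool is more robust if one is installed.

```shell
#!/bin/sh
# node_names
# Reads a /v1/catalog/nodes response on stdin and prints each "Node" value
# on its own line. Hypothetical helper; assumes compact JSON.
node_names() {
    grep -o '"Node":"[^"]*"' | cut -d '"' -f 4
}

# Sample response copied from the transcript above.
sample='[{"ID":"711d0726-b855-274d-5882-740f95df871b","Node":"consul-server01","Address":"192.168.10.30","Datacenter":"dc1","TaggedAddresses":{"lan":"192.168.10.30","wan":"192.168.10.30"},"Meta":{},"CreateIndex":5,"ModifyIndex":6}]'

printf '%s\n' "$sample" | node_names   # prints consul-server01
```

Against a live agent the same function can be fed from curl, for example curl -s 127.0.0.1:8500/v1/catalog/nodes | node_names.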

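2. Automatically registering container services with the Consul cluster

1). Install Gliderlabs/Registrator

Registrator watches the state of running containers and automatically registers, and deregisters, the services of Docker containers with the service registry.

It currently supports Consul, etcd and SkyDNS2.

On node 192.168.10.41, run:

docker run -d \
  --name=registrator \
  --net=host \
  -v /var/run/docker.sock:/tmp/docker.sock \
  --restart=always \
  gliderlabs/registrator:latest \
  -ip=192.168.10.41 \
  consul://192.168.10.30:8500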

[root@docker ~]# docker run -d \

> --name=registrator \

> --net=host \

> -v /var/run/docker.sock:/tmp/docker.sock \

> --restart=always \

> gliderlabs/registrator:latest \

> -ip=192.168.10.41 \

> consul://192.168.10.30:8500

Unable to find image 'gliderlabs/registrator:latest' locally

latest: Pulling from gliderlabs/registrator

Image docker.io/gliderlabs/registrator:latest uses outdated schema1 manifest format. Please upgrade to a schema2 image for better future compatibility. More information at https://docs.docker.com/registry/spec/deprecated-schema-v1/

c87f684ee1c2: Pull complete

a0559c0b3676: Pull complete

a28552c49839: Pull complete

Digest: sha256:6e708681dd52e28f4f39d048ac75376c9a762c44b3d75b2824173f8364e52c10

Status: Downloaded newer image for gliderlabs/registrator:latest

851a2220f6fd9bcb80fef6e3b7d9858ae1935d66431a7eb1e0dd4a2612e60edc

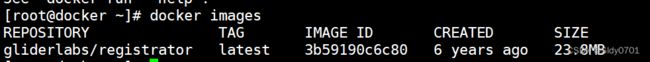

[root@docker ~]# docker images

2). 测试服务发现功能是否正常

docker run -itd -p:83:80 --name test-01 -h test01 nginx

docker run -itd -p:84:80 --name test-02 -h test02 nginx

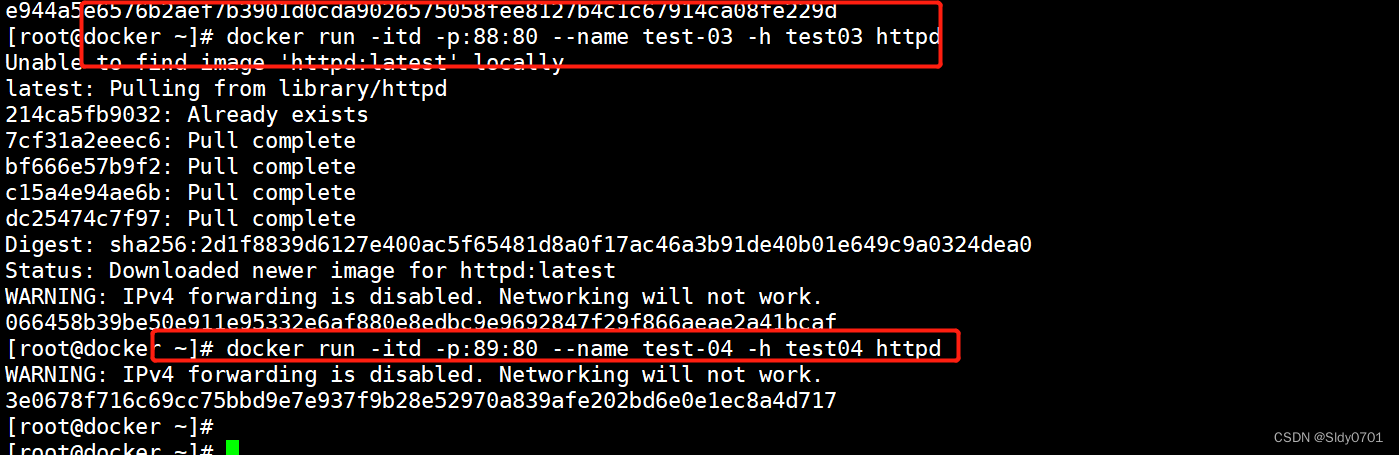

docker run -itd -p:88:80 --name test-03 -h test03 httpd

docker run -itd -p:89:80 --name test-04 -h test04 httpd

[root@docker ~]# docker run -itd -p:88:80 --name test-03 -h test03 httpd

Unable to find image 'httpd:latest' locally

latest: Pulling from library/httpd

214ca5fb9032: Already exists

7cf31a2eeec6: Pull complete

bf666e57b9f2: Pull complete

c15a4e94ae6b: Pull complete

dc25474c7f97: Pull complete

Digest: sha256:2d1f8839d6127e400ac5f65481d8a0f17ac46a3b91de40b01e649c9a0324dea0

Status: Downloaded newer image for httpd:latest

WARNING: IPv4 forwarding is disabled. Networking will not work.

066458b39be50e911e95332e6af880e8edbc9e9692847f29f866aeae2a41bcaf

[root@docker ~]# docker run -itd -p:89:80 --name test-04 -h test04 httpd

WARNING: IPv4 forwarding is disabled. Networking will not work.

3e0678f716c69cc75bbd9e7e937f9b28e52970a839afe202bd6e0e1ec8a4d717

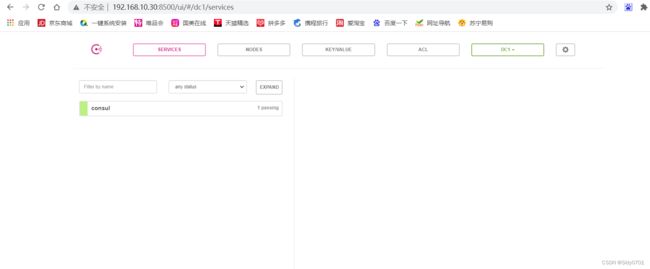

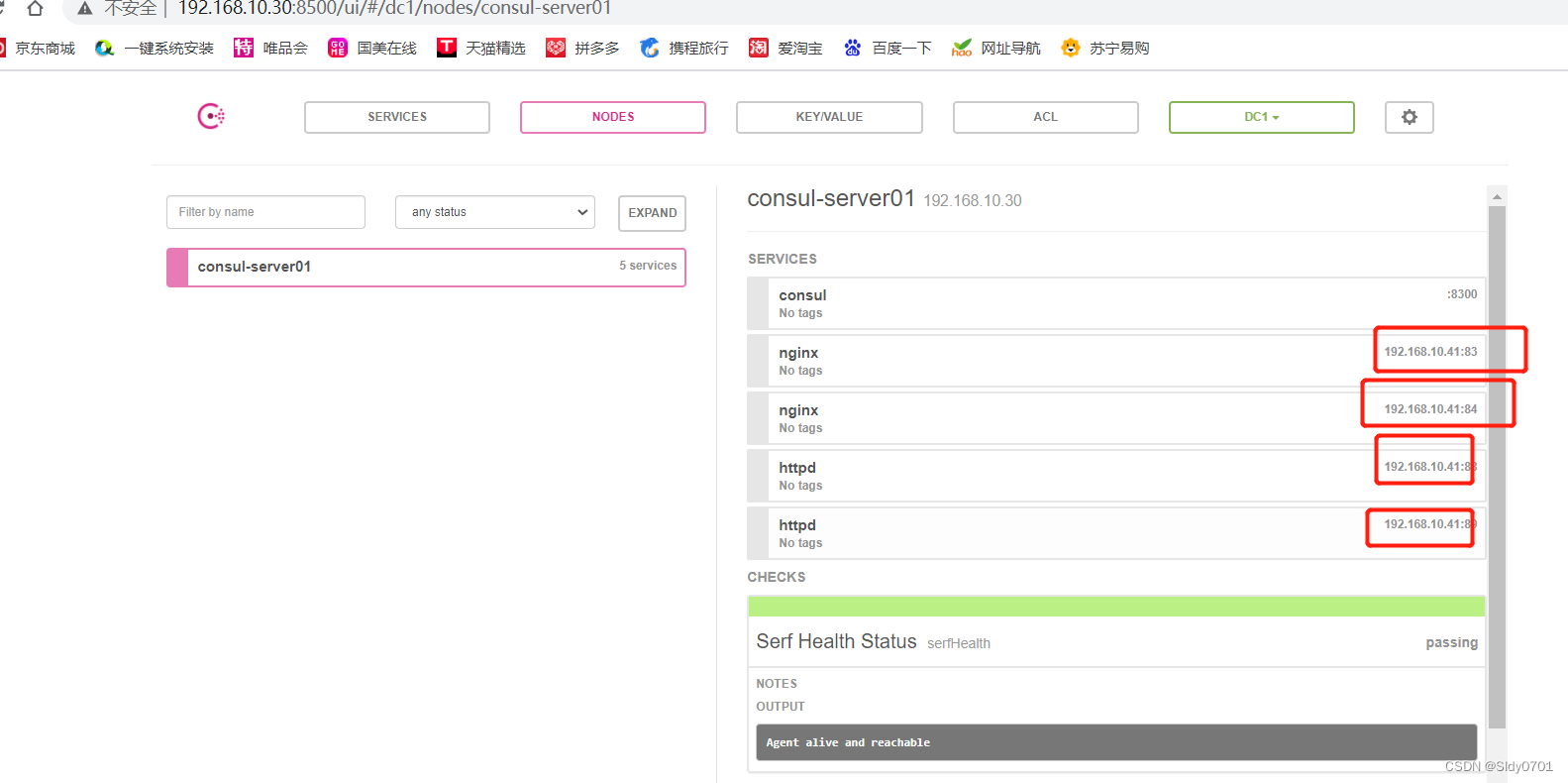

### 3). 验证 http 和 nginx 服务是否注册到 consul

浏览器输入 http://192.168.226.128:8500,“单击 NODES”,然后单击 “consurl-server01”,会出现 5 个服务.

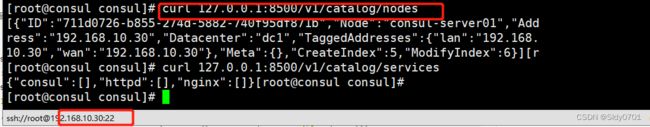

##在consul服务器上查看服务

```c

[root@consul consul]# curl 127.0.0.1:8500/v1/catalog/services

{"consul":[],"httpd":[],"nginx":[]}

[root@consul consul]# curl 127.0.0.1:8500/v1/catalog/nodes

[{"ID":"711d0726-b855-274d-5882-740f95df871b","Node":"consul-server01","Address":"192.168.10.30","Datacenter":"dc1","TaggedAddresses":{"lan":"192.168.10.30","wan":"192.168.10.30"},"Meta":{},"CreateIndex":5,"ModifyIndex":6}][r

[root@consul consul]# curl 127.0.0.1:8500/v1/catalog/services

{"consul":[],"httpd":[],"nginx":[]}[root@consul consul]#

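4). Install consul-template

Consul-Template is a daemon that queries the Consul cluster in real time, updates any number of specified templates on the file system, and generates configuration files from them. After an update it can optionally run a shell command, for example to reload Nginx.

Consul-Template can query the service catalog, keys, and key-values in Consul. This abstraction, together with its template query language, makes Consul-Template well suited to generating configuration files dynamically, for example Apache/Nginx proxy balancers or HAProxy backends.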

5). Prepare the nginx template file

## Run on the Consul server

vim /root/consul/nginx.ctmpl

upstream http_backend {
    {{range service "nginx"}}
    server {{.Address}}:{{.Port}};    # filled in with each backend's address and port (changes dynamically)
    {{end}}
}

server {
    listen 83;
    server_name localhost 192.168.10.30;    # IP of the reverse proxy (the nginx front end)
    access_log /var/log/nginx/kgc.cn-access.log;
    index index.html index.php;
    location / {
        proxy_set_header HOST $host;
        proxy_set_header X-Real-IP $remote_addr;    # real client IP passed to the backend
        proxy_set_header Client-IP $remote_addr;
        proxy_set_header X-Forwarded-For $proxy_add_x_forwarded_for;    # forwarded-for address chain
        proxy_pass http://http_backend;
    }
}
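To make the {{range service "nginx"}} loop in the template concrete, the sketch below imitates its effect in plain shell: one server line is printed per backend. This is not how consul-template works internally. The function name render_upstream is invented, and the backend addresses are passed by hand instead of being read from Consul.

```shell
#!/bin/sh
# render_upstream NAME ADDR:PORT...
# Prints an nginx upstream block with one "server" line per backend,
# imitating the output of the range loop in nginx.ctmpl.
# Hypothetical helper for illustration only.
render_upstream() {
    name=$1
    shift
    printf 'upstream %s {\n' "$name"
    for backend in "$@"; do
        printf '    server %s;\n' "$backend"
    done
    printf '}\n'
}

# Two backends as registrator would report them for test-01 and test-02.
render_upstream http_backend 192.168.10.41:83 192.168.10.41:84
```

Each time a container is added or removed, consul-template repeats this rendering with the current list of services and then runs the reload command.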


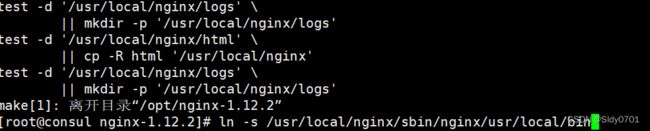

6).编译安装nginx

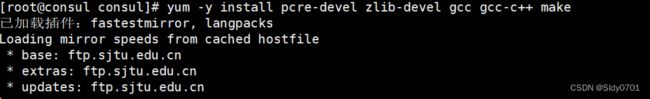

yum install gcc pcre-devel zlib-devel -y

tar zxvf nginx-1.12.0.tar.gz -C /opt

./configure --prefix=/usr/local/nginx

make && make install

ln -s /usr/local/nginx/sbin/nginx /usr/local/sbin

7). 配置 nginx

vim /usr/local/nginx/conf/nginx.conf

http {

include mime.types; #默认存在的

include vhost/*.conf; ####添加虚拟主机目录(consul动态生成的配置文件就会放在这里)

default_type application/octet-stream;

##创建虚拟主机目录

mkdir /usr/local/nginx/conf/vhost

##创建日志文件目录

mkdir /var/log/nginx

##启动nginx

#usr/local/nginx/sbin/nginx

[root@consul nginx]# cd conf

[root@consul conf]# nginx

[root@consul conf]# netstat -natp | grep nginx

tcp 0 0 0.0.0.0:80 0.0.0.0:* LISTEN 86751/nginx: master

8). Configure and start consul-template

Upload the consul-template_0.19.3_linux_amd64.zip package to /root

cp consul-template_0.19.3_linux_amd64.zip /root/

unzip consul-template_0.19.3_linux_amd64.zip

mv consul-template /usr/bin/

## Bind the template to the sub-configuration file in the nginx vhost directory

consul-template -consul-addr 192.168.10.30:8500 \
  -template "/root/consul/nginx.ctmpl:/usr/local/nginx/conf/vhost/pxs.conf:/usr/local/nginx/sbin/nginx -s reload" \
  --log-level=info

[root@consul consul]# ls

consul_0.9.2_linux_amd64.zip consul-template_0.19.3_linux_amd64.zip nginx.ctmpl

[root@consul consul]# unzip consul-template_0.19.3_linux_amd64.zip

Archive: consul-template_0.19.3_linux_amd64.zip

inflating: consul-template

[root@consul consul]# ls

consul_0.9.2_linux_amd64.zip consul-template consul-template_0.19.3_linux_amd64.zip nginx.ctmpl

[root@consul consul]# mv consul-template /usr/bi

[root@consul consul]# consul-template -consul-addr 192.168.10.30:8500 \

> -template "/root/consul/nginx.ctmpl:/usr/local/nginx/conf/vhost/pxs.conf:/usrlocal/nginx/sbin/nginx -s reload" \

> --log-level=info

2022/05/15 11:53:19.169612 [INFO] consul-template v0.19.3 (ebf2d3d)

2022/05/15 11:53:19.169631 [INFO] (runner) creating new runner (dry: false, once: false)

2022/05/15 11:53:19.169911 [INFO] (runner) creating watcher

2022/05/15 11:53:19.170169 [INFO] (runner) starting

2022/05/15 11:53:19.170190 [INFO] (runner) initiating run

2022/05/15 11:53:19.172738 [INFO] (runner) initiating run

2022/05/15 11:53:19.181218 [INFO] (runner) rendered "/root/consul/nginx.ctmpl" => "/usr/local/nginx/conf/vhost/pxs.conf"

2022/05/15 11:53:19.181261 [INFO] (runner) executing command "/usrlocal/nginx/sbin/nginx -s reload" from "/root/consul/nginx.ctmpl" => "/usr/local/nginx/conf/vhost/pxs.conf"

2022/05/15 11:53:19.181331 [INFO] (child) spawning: /usrlocal/nginx/sbin/nginx -s reload

2022/05/15 11:53:19.182147 [ERR] (cli) 1 error occurred:

* failed to execute command "/usrlocal/nginx/sbin/nginx -s reload" from "/root/consul/nginx.ctmpl" => "/usr/local/nginx/conf/vhost/pxs.conf": child: fork/exec /usrlocal/nginx/sbin/nginx: no such file or directory

Note: this run fails because the reload command was typed as /usrlocal/nginx/sbin/nginx. The path must be /usr/local/nginx/sbin/nginx, as in the command listed earlier in this step. The template itself was rendered successfully, so the generated file can still be inspected.

## Open another terminal and inspect the generated configuration file

[root@consul conf]# cat /usr/local/nginx/conf/vhost/pxs.conf

upstream http_backend {
    server 192.168.10.41:83;
    server 192.168.10.41:84;
}

server {
    listen 86;
    server_name localhost 192.168.10.30;
    access_log /var/log/nginx/kgc.cn-access.log;
    index index.html index.php;
    location / {
        proxy_set_header HOST $host;
        proxy_set_header X-Real-IP $remote_addr;
        proxy_set_header Client-IP $remote_addr;
        proxy_set_header X-Forwarded-For $proxy_add_x_forwarded_for;    # forwarded-for address chain
        proxy_pass http://http_backend;
    }
}

IV. Adding an nginx container node

1) Add an nginx container node to test service discovery and configuration updates

// Run on the host where registrator is running

[root@docker ~]# docker run -itd -p 85:80 --name test-05 -h test05 nginx

WARNING: IPv4 forwarding is disabled. Networking will not work.

c4236bec0b85647264fe21475aac91daf2a544e5edd88838767d05e8b4d416c8

[root@docker ~]# docker run -itd -p 86:80 --name test-06 -h test06 nginx

WARNING: IPv4 forwarding is disabled. Networking will not work.

27bfd684bc19fadd4bc730a83cda1bb98c689d5dbe3d50a655a396fba035cb63

[root@docker ~]# echo "net.ipv4.ip_forward=1" >>/usr/lib/sysctl.d/00-system.conf

[root@docker ~]# systemctl restart network && systemctl restart docker

[root@docker ~]# docker run -itd -p 87:80 --name test-07 -h test07 nginx

6eabf25d1705bfe8b6177e9cead71ceb4f2e967a5b106b7f813d09c11c365743

// The consul-template process running on the Consul server logs the automatic update

2019/12/30 14:59:21.750556 [INFO] (runner) initiating run

2019/12/30 14:59:21.751767 [INFO] (runner) rendered "/root/consul/nginx.ctmpl" => "/usr/local/nginx/conf/vhost/kgc.conf"

2019/12/30 14:59:21.751787 [INFO] (runner) executing command "/usr/local/nginx/sbin/nginx -s reload" from "/root/consul/nginx.ctmpl" => "/usr/local/nginx/conf/vhost/kgc.conf"

2019/12/30 14:59:21.751823 [INFO] (child) spawning: /usr/local/nginx/sbin/nginx -s reload

2) Check the logs of the three nginx containers; requests should be distributed round-robin across the container nodes

docker logs -f test-01

docker logs -f test-02

docker logs -f test-05
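An nginx upstream block with no other balancing directive hands requests to its servers in rotation. The sketch below only simulates that rotation to show what to expect in the three logs. The function name round_robin is invented, and real traffic can deviate from a strict rotation (for example when a backend is down).

```shell
#!/bin/sh
# round_robin COUNT BACKEND...
# Prints which backend would serve each of COUNT requests when they are
# handed out in strict rotation. Hypothetical helper for illustration only.
round_robin() {
    count=$1
    shift
    [ "$#" -gt 0 ] || return 1   # at least one backend is required
    i=1
    while [ "$i" -le "$count" ]; do
        echo "request $i -> $1"
        # Rotate: move the backend just used to the end of the list.
        first=$1
        shift
        set -- "$@" "$first"
        i=$((i + 1))
    done
}

round_robin 4 test-01 test-02 test-05
```

With three backends, the fourth request goes back to test-01, so each container's log should show roughly every third request.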

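V. Summary

1. Docker is one of several container technologies and an offshoot of the evolution of virtualization. This raises the question of how containers differ from virtualization (for example, from KVM).

Containers versus KVM:

Key point: a KVM guest runs a complete operating system, whereas a container is a group of processes that share the host kernel.

Advantages of containers, in the context of currently popular architectures (microservices):

Containers decouple the application from the system, which makes applications easy to release, update, port, and replicate for redundancy (replica sets behind a load balancer).

The difference between a Pod in K8s and a container

What can Docker do?

Docker's three unifications:

A unified way to package applications: images

A unified application environment: Docker Engine

A unified way to run applications: containers, run by runc (the container runtime)

Docker's three elements:

Image

Container

Repository

2. What Docker can do

① Docker is built on three elements:

images

container

engine

Docker also uses a client/server architecture; the server side runs on the host as a daemon.

Extension: Podman is a container tool with no daemon (no server side). It interacts with the system directly, which makes it faster and lighter on resources.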

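3. Docker's underlying mechanisms

① cgroup

② namespace

Basic concepts:

namespace: 6 namespaces provide the isolation, and they are the basis for judging whether applications are fully isolated from one another (minimum kernel version 3.8+)

Operating systems and Linux distributions: CentOS, Red Hat, Ubuntu, macOS (used mostly by management), Huawei's Linux distributions

Common Docker commands

Image-based commands

Container-based commands

Docker networking

1. none

2. host

3. bridge

4. container

5. overlay

6. ipvlan/macvlan

Miscellaneous Docker features

① Mounts: data volumes; data volume containers

② Port exposure: -p maps a specific port; -P lets Docker assign a host port automatically (for example 49153)

③ Custom networks (which allow a fixed container IP)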

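Dockerfile

Building a Docker image: the image is built in layers, and cached layers are reused.

Based on image layering

Layer stacking: originally provided by aufs, now by overlay2 (the storage driver)

The 4 overlay2 directories: lower (read-only image layers), upper (writable container layer), work (internal working directory used by overlayfs), merged (the unified view presented to the container)

Common Dockerfile instructions

FROM: the base image (AS build names a build stage)

RUN: executes a command; every RUN instruction creates a new image layer

ENV: sets environment variables

ADD/COPY

EXPOSE

VOLUME

WORKDIR

HEALTHCHECK

CMD/ENTRYPOINT

With CMD alone, arguments given to docker run replace the command; with ENTRYPOINT (exec form), they are appended to it as arguments.

cgroup, one of Docker's underlying mechanisms

cgroups control container resources (upper limits on CPU and memory)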

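Image registries

registry is the core of a private registry and offers only a command-line interface.

What does a Harbor architecture look like?

HAProxy/LB + Harbor + shared storage

How to list the images in a registry from the command line:

curl -XGET http://$HARBOR_IP:

Harbor login: docker login; logout: docker logout

After the private registry is created, every node that needs to use it must be configured with the registry's address.

PS: there are two ways to point a node at a private registry: 1. /etc/docker/daemon.json 2. /usr/lib/systemd/system/docker.service

insecure-registries $HARBOR_IP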
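For the daemon.json route, the setting is a JSON array under the key "insecure-registries". The sketch below only prints such a file body so it can be reviewed first. The function name make_daemon_json is invented, and 192.168.10.50 is a placeholder standing in for $HARBOR_IP, not an address taken from this article.

```shell
#!/bin/sh
# make_daemon_json REGISTRY...
# Prints a daemon.json body that lists every given registry address under
# "insecure-registries". Hypothetical helper; it writes nothing to disk.
make_daemon_json() {
    printf '{\n  "insecure-registries": ['
    sep=''
    for registry in "$@"; do
        printf '%s"%s"' "$sep" "$registry"
        sep=', '
    done
    printf ']\n}\n'
}

# Placeholder address standing in for $HARBOR_IP.
make_daemon_json 192.168.10.50
```

After the reviewed content is saved as /etc/docker/daemon.json, Docker has to be restarted (systemctl restart docker) for the setting to take effect.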

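docker-compose: unified orchestration and management of multiple containers

docker-compose is an orchestration and management tool, so what does it orchestrate, and what does it manage?

Two aspects: (① the control parameters inside the yml file ② the options of the docker-compose command-line tool)

Control parameters inside the yml file

name

Custom volume mounts

Custom environment variables via env (before the container runs)

Custom networks

Custom ports

Custom arguments via args (after the container starts)

Several containers can be individually defined and orchestrated at the same time

docker-compose commands

Consul (service registry)

Dynamically discovers services and updates application configuration (configuration center, for example consul-template)

consul agent in server mode

Prometheus; consul agent in dev mode (developer mode, in which all features are available)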

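What to run in Docker

Most companies do not run their primary database in Docker.

Primary databases at small companies:

SQL (relational): MySQL, PostgreSQL (PG)

NoSQL (non-relational): Redis, MongoDB (sharded replica sets as a cluster), memcached (caching sessions, JWTs and tokens)

InfluxDB / OpenTSDB (TSDB, time-series databases)

Medium-sized companies (2 types)

Type 1: in-house development, with MySQL as the primary database (provided the project is small)

2. Other services:

nginx, tomcat, weblogic, redis (when the storage and cache load is light), mysql, nacos/consul and prometheus can run in containers. Besides the primary database, whose placement needs careful thought, one more class of service is kept out of containers: services that provide shared storage, such as NFS and NAS.

Key points:

For an application to run in a container, the following aspects need careful consideration; if all of them can be resolved, the application can be containerized.

The impact on network bandwidth and transfer performance once it runs in a container (network stability and reliability)

The nature of containers: short lifecycles (replica sets + LB)

Storage, including data loss caused by short lifecycles (both on disk and in memory)

Whether the application is extremely resource-hungry, for example Oracle (DB2)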