Docker: Setting Up a k8s Cluster

I. Background

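- Introduction

  - Official Chinese documentation: https://www.kubernetes.org.cn/docs

  - Kubernetes is an open-source system for managing containerized applications across multiple hosts in a cloud platform. Its goal is to make deploying containerized applications simple and powerful, and it provides mechanisms for deploying, planning, updating, and maintaining applications.

  - A core feature of Kubernetes is that it manages containers autonomously so that they keep running in the state the user wants. For example, if a user wants Apache to stay up, the user does not need to care how: Kubernetes monitors it and restarts or recreates it as needed so that Apache keeps serving. An administrator can load a microservice and let the scheduler find a suitable place to run it. Kubernetes also keeps improving its tooling and usability so that users can deploy their own applications easily.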
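The desired-state idea above can be illustrated with a toy reconcile step. This is a hypothetical shell sketch, not how Kubernetes controllers are actually implemented: `reconcile` (a name chosen here) compares the desired number of replicas with the observed number and prints the action a controller would take to close the gap.

```shell
#!/bin/sh
# Toy illustration of desired-state reconciliation (hypothetical, not Kubernetes code).
# Usage: reconcile DESIRED ACTUAL  -> prints the action that closes the gap.
reconcile() {
    desired="$1"
    actual="$2"
    if [ "$actual" -lt "$desired" ]; then
        echo "create $((desired - actual))"
    elif [ "$actual" -gt "$desired" ]; then
        echo "delete $((actual - desired))"
    else
        echo "nothing to do"
    fi
}

# Example: the user wants 3 Apache replicas but only 1 is running.
reconcile 3 1
```

A real controller runs this comparison in a loop against the state stored in etcd; the sketch only shows the single comparison step.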

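- Kubernetes components

  - Kubernetes nodes run the services needed to host application containers, and these services are controlled by the master. Every node must run Docker, which handles the actual image downloads and container execution.

  - Kubernetes consists mainly of the following core components:

    - etcd: stores the state of the whole cluster.

    - apiserver: the single entry point for resource operations; it also provides authentication, authorization, access control, and API registration and discovery.

    - controller manager: maintains the cluster state, e.g. failure detection, auto-scaling, and rolling updates.

    - scheduler: schedules resources, placing Pods onto suitable machines according to the configured scheduling policy.

    - kubelet: manages the container lifecycle, and also manages volumes (CSI) and networking (CNI).

    - Container runtime: manages images and actually runs Pods and containers (CRI).

    - kube-proxy: provides in-cluster service discovery and load balancing for Services.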

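Besides the core components, there are some recommended add-ons:

- kube-dns: provides DNS for the whole cluster (the kubeadm 1.12 used below installs CoreDNS in its place)

- Ingress Controller: provides external access to services

- Heapster: provides resource monitoring

- Dashboard: provides a GUI

- Federation: provides clusters that span availability zones

- Fluentd-elasticsearch: provides cluster log collection, storage, and querying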

II. Building the Kubernetes Cluster

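- Lab environment (Docker installed and running on both hosts):

  docker1: 172.25.19.1 (k8s-master)

  docker2: 172.25.19.2 (k8s-node1)

- Note: this lab requires Internet access!!!

- First clean up the previous environment (a swarm cluster was configured earlier; readers who have not set one up can skip this step):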

[root@docker2 ~]# docker swarm leave

Node left the swarm.

[root@docker3 ~]# docker swarm leave

Node left the swarm.

[root@docker1 ~]# docker swarm leave --force

[root@docker1 ~]# docker container prune

WARNING! This will remove all stopped containers.

Are you sure you want to continue? [y/N] y

[root@docker2 ~]# docker container prune

[root@docker3 ~]# docker container prune

- Install the required packages (on both nodes)

[root@docker1 mnt]# yum install -y kubeadm-1.12.2-0.x86_64.rpm kubelet-1.12.2-0.x86_64.rpm kubectl-1.12.2-0.x86_64.rpm kubernetes-cni-0.6.0-0.x86_64.rpm cri-tools-1.12.0-0.x86_64.rpm

[root@docker2 mnt]# yum install -y kubeadm-1.12.2-0.x86_64.rpm kubelet-1.12.2-0.x86_64.rpm kubectl-1.12.2-0.x86_64.rpm kubernetes-cni-0.6.0-0.x86_64.rpm cri-tools-1.12.0-0.x86_64.rpm

- Disable swap (by default kubelet refuses to start while swap is enabled)

[root@docker1 ~]# swapoff -a

[root@docker1 mnt]# vim /etc/fstab ##comment out the swap entry so swap stays off after a reboot

#/dev/mapper/rhel-swap swap swap defaults 0 0

[root@docker1 ~]# systemctl enable kubelet.service

##Repeat the same steps on docker2
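Editing `/etc/fstab` by hand works; the same change can also be scripted so it is identical on both nodes. A minimal sketch, assuming the standard fstab layout in which the third field is the filesystem type; `comment_out_swap` is a hypothetical helper name, and it keeps a `.bak` copy of the file.

```shell
#!/bin/sh
# Hypothetical helper: comment out every active swap entry in an fstab-style file.
# Assumes the standard fstab layout: device, mount point, type, options, dump, pass.
comment_out_swap() {
    fstab="${1:-/etc/fstab}"
    tmp="$(mktemp)" || return 1
    # Prefix "#" to uncommented lines whose type field is "swap"; print all other lines unchanged.
    if awk '$1 !~ /^#/ && $3 == "swap" { print "#" $0; next } { print }' "$fstab" > "$tmp"; then
        cp "$fstab" "$fstab.bak"     # keep a backup of the original file
        cat "$tmp" > "$fstab"        # overwrite in place, preserving owner and mode
        rm -f "$tmp"
    else
        rm -f "$tmp"
        return 1
    fi
}

# Usage on each node (as root): swapoff -a && comment_out_swap /etc/fstab
```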

[root@docker1 ~]# kubeadm config images list ##list the images kubeadm needs; the timeout warning only means it fell back to the local version

I0323 16:49:02.001547 11145 version.go:93] could not fetch a Kubernetes version from the internet: unable to get URL "https://dl.k8s.io/release/stable-1.txt": Get https://dl.k8s.io/release/stable-1.txt: net/http: request canceled while waiting for connection (Client.Timeout exceeded while awaiting headers)

I0323 16:49:02.001631 11145 version.go:94] falling back to the local client version: v1.12.2

k8s.gcr.io/kube-apiserver:v1.12.2

k8s.gcr.io/kube-controller-manager:v1.12.2

k8s.gcr.io/kube-scheduler:v1.12.2

k8s.gcr.io/kube-proxy:v1.12.2

k8s.gcr.io/pause:3.1

k8s.gcr.io/etcd:3.2.24

k8s.gcr.io/coredns:1.2.2

- Load the required images from local tar archives

[root@docker1 mnt]# docker load -i kube-apiserver.tar

[root@docker1 mnt]# docker load -i kube-controller-manager.tar

[root@docker1 mnt]# docker load -i kube-proxy.tar

[root@docker1 mnt]# docker load -i pause.tar

[root@docker1 mnt]# docker load -i etcd.tar

[root@docker1 mnt]# docker load -i coredns.tar

[root@docker1 mnt]# docker load -i kube-scheduler.tar

[root@docker1 mnt]# docker load -i flannel.tar
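To confirm that every image reported by `kubeadm config images list` is now available locally, the two lists can be compared. A sketch under stated assumptions: `missing_images` is a hypothetical helper, the required list is the saved output of `kubeadm config images list` (its log lines contain spaces and are skipped), and the local list comes from `docker images --format '{{.Repository}}:{{.Tag}}'`.

```shell
#!/bin/sh
# Hypothetical helper: print the images from REQUIRED that are not in LOCAL.
# REQUIRED: saved output of `kubeadm config images list` (lines with spaces are ignored).
# LOCAL:    saved output of `docker images --format '{{.Repository}}:{{.Tag}}'`.
missing_images() {
    required="$1"
    local_list="$2"
    # Keep only non-empty lines without whitespace (image references), then drop
    # every line that exactly matches a locally available image.
    grep -E '^[^[:space:]]+$' "$required" | grep -vxF -f "$local_list" || true
}

# Usage on the master:
#   kubeadm config images list > required.txt
#   docker images --format '{{.Repository}}:{{.Tag}}' > local.txt
#   missing_images required.txt local.txt    # no output means nothing is missing
```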

- Initialize the master

[root@docker1 mnt]# vim kube-flannel.yml ##line 76: this network must match --pod-network-cidr in kubeadm init

"Network": "10.244.0.0/16"

[root@docker1 mnt]# kubeadm init --pod-network-cidr=10.244.0.0/16 --apiserver-advertise-address=172.25.19.1

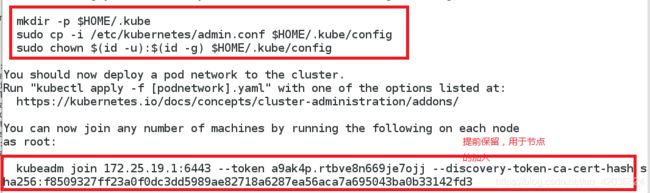
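Because flannel's `"Network"` value and the `--pod-network-cidr` passed to `kubeadm init` must agree, a quick check before applying the manifest can catch a mismatch. A minimal sketch; `flannel_network` and `check_pod_cidr` are hypothetical names, and the extraction assumes the `"Network": "<cidr>"` line format shown above.

```shell
#!/bin/sh
# Hypothetical helpers: confirm that flannel's "Network" setting matches the
# --pod-network-cidr passed to kubeadm init.
flannel_network() {
    # Print the first "Network": "<cidr>" value found in the manifest.
    sed -n 's/.*"Network":[[:space:]]*"\([^"]*\)".*/\1/p' "$1" | head -n 1
}

check_pod_cidr() {
    want="$1"                       # value given to kubeadm init --pod-network-cidr
    got="$(flannel_network "$2")"   # value found in kube-flannel.yml
    if [ "$got" = "$want" ]; then
        echo "OK: $got"
    else
        echo "MISMATCH: flannel=$got kubeadm=$want" >&2
        return 1
    fi
}

# Usage on the master: check_pod_cidr 10.244.0.0/16 kube-flannel.yml
```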

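##Configure kubectl with the following three commands, which must be run as the k8s user (the user is created in the next step, so create it first)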

[root@docker1 mnt]# su - k8s

[k8s@docker1 ~]$ mkdir -p $HOME/.kube

[k8s@docker1 ~]$ sudo cp -i /etc/kubernetes/admin.conf $HOME/.kube/config

[k8s@docker1 ~]$ sudo chown $(id -u):$(id -g) $HOME/.kube/config

- Create a k8s user, grant it sudo rights, and set up its environment (kubectl completion)

[root@docker1 mnt]# useradd k8s

[root@docker1 mnt]# vim /etc/sudoers ##add this line (line 92 here); visudo is the safer way to edit this file

k8s ALL=(ALL) NOPASSWD:ALL

[root@docker1 mnt]# vim /home/k8s/.bashrc

source <(kubectl completion bash)

[root@docker1 mnt]# yum install -y bash-completion ##kubectl completion relies on bash-completion

[root@docker1 mnt]# su - k8s

[k8s@docker1 ~]$ kubectl ##press Tab; if it completes, completion works
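Appending the completion line with an editor is fine once, but re-running the setup would add duplicates. A sketch of an idempotent alternative; `append_once` is a hypothetical helper name, not part of any tool.

```shell
#!/bin/sh
# Hypothetical helper: append LINE to FILE only if FILE does not already contain it,
# so re-running the setup does not duplicate the completion line in .bashrc.
append_once() {
    line="$1"
    file="$2"
    touch "$file" || return 1
    # Add a newline first if the file is non-empty and does not end with one.
    if [ -s "$file" ] && [ -n "$(tail -c 1 "$file")" ]; then
        printf '\n' >> "$file"
    fi
    grep -qxF -- "$line" "$file" || printf '%s\n' "$line" >> "$file"
}

# Usage (as root): append_once 'source <(kubectl completion bash)' /home/k8s/.bashrc
```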

- Deploy flannel on the master

[root@docker1 mnt]# cp kube-flannel.yml /home/k8s

[root@docker1 mnt]# su - k8s

[k8s@docker1 ~]$ kubectl apply -f kube-flannel.yml

clusterrole.rbac.authorization.k8s.io/flannel created

clusterrolebinding.rbac.authorization.k8s.io/flannel created

serviceaccount/flannel created

configmap/kube-flannel-cfg created

daemonset.extensions/kube-flannel-ds-amd64 created

daemonset.extensions/kube-flannel-ds-arm64 created

daemonset.extensions/kube-flannel-ds-arm created

daemonset.extensions/kube-flannel-ds-ppc64le created

daemonset.extensions/kube-flannel-ds-s390x created

[k8s@docker1 ~]$ sudo docker ps ##check that the coredns and flannel containers are up

CONTAINER ID IMAGE COMMAND CREATED STATUS PORTS NAMES

de6708eb4742 95b66263fd52 "/coredns -conf /etc…" 6 seconds ago Up 4 seconds k8s_coredns_coredns-576cbf47c7-bx8cl_kube-system_537756d8-4d4a-11e9-a4a7-5254004f4b70_0

50b03df7ee97 95b66263fd52 "/coredns -conf /etc…" 6 seconds ago Up 4 seconds k8s_coredns_coredns-576cbf47c7-r9628_kube-system_53b786e2-4d4a-11e9-a4a7-5254004f4b70_0

ed3556e9a5e5 k8s.gcr.io/pause:3.1 "/pause" 17 seconds ago Up 9 seconds k8s_POD_coredns-576cbf47c7-bx8cl_kube-system_537756d8-4d4a-11e9-a4a7-5254004f4b70_0

b5da0cf05f76 k8s.gcr.io/pause:3.1 "/pause" 17 seconds ago Up 9 seconds k8s_POD_coredns-576cbf47c7-r9628_kube-system_53b786e2-4d4a-11e9-a4a7-5254004f4b70_0

1a23638d94c4 f0fad859c909 "/opt/bin/flanneld -…" 37 seconds ago Up 36 seconds k8s_kube-flannel_kube-flannel-ds-amd64-z2x9x_kube-system_818ae80a-4d4e-11e9-a4a7-5254004f4b70_0

474053984c3e k8s.gcr.io/pause:3.1 "/pause" 45 seconds ago Up 41 seconds k8s_POD_kube-flannel-ds-amd64-z2x9x_kube-system_818ae80a-4d4e-11e9-a4a7-5254004f4b70_0

b0235d34e1f0 96eaf5076bfe "/usr/local/bin/kube…" 30 minutes ago Up 30 minutes k8s_kube-proxy_kube-proxy-4654x_kube-system_535e2a50-4d4a-11e9-a4a7-5254004f4b70_0

9720b0f0ff00 k8s.gcr.io/pause:3.1 "/pause" 30 minutes ago Up 30 minutes k8s_POD_kube-proxy-4654x_kube-system_535e2a50-4d4a-11e9-a4a7-5254004f4b70_0

19530f0e9612 a84dd4efbe5f "kube-scheduler --ad…" 31 minutes ago Up 31 minutes k8s_kube-scheduler_kube-scheduler-docker1_kube-system_ee7b1077c61516320f4273309e9b4690_0

65296718b6f3 k8s.gcr.io/pause:3.1 "/pause" 31 minutes ago Up 31 minutes k8s_POD_kube-scheduler-docker1_kube-system_ee7b1077c61516320f4273309e9b4690_0

dd1e68372bb4 b9a2d5b91fd6 "kube-controller-man…" 31 minutes ago Up 31 minutes k8s_kube-controller-manager_kube-controller-manager-docker1_kube-system_ce6614527f7b9b296834d491867f5fee_0

aa5a7e93f43b k8s.gcr.io/pause:3.1 "/pause" 31 minutes ago Up 31 minutes k8s_POD_kube-controller-manager-docker1_kube-system_ce6614527f7b9b296834d491867f5fee_0

8c1108cd24a7 6e3fa7b29763 "kube-apiserver --au…" 31 minutes ago Up 31 minutes k8s_kube-apiserver_kube-apiserver-docker1_kube-system_6d52485b839af4dc2fad49dc4a448eaa_0

c71383630c98 k8s.gcr.io/pause:3.1 "/pause" 31 minutes ago Up 31 minutes k8s_POD_kube-apiserver-docker1_kube-system_6d52485b839af4dc2fad49dc4a448eaa_0

d4944af4e5f7 b57e69295df1 "etcd --advertise-cl…" 31 minutes ago Up 31 minutes k8s_etcd_etcd-docker1_kube-system_81963084b5efb20842fffc6e9fd635c6_0

6e4093612e96 k8s.gcr.io/pause:3.1 "/pause" 31 minutes ago Up 31 minutes k8s_POD_etcd-docker1_kube-system_81963084b5efb20842fffc6e9fd635c6_0

1c19c834d83d haproxy "/docker-entrypoint.…" 6 hours ago Up 6 hours 0.0.0.0:80->80/tcp compose_haproxy_1

268d63c1cfb9 ubuntu "/bin/bash" 6 hours ago Up 6 hours vm1
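The container names in this listing follow the kubelet's Docker naming scheme, `k8s_<container>_<pod>_<namespace>_<pod-uid>_<attempt>`, where `POD` marks the pause (sandbox) container; non-k8s containers such as `compose_haproxy_1` and `vm1` are left over from earlier labs. A small sketch that uses this scheme to list only the workload containers; `k8s_workloads` is a hypothetical name, and its input is assumed to be `docker ps --format '{{.Names}}'`.

```shell
#!/bin/sh
# Hypothetical helper: read container names (one per line) on stdin and print
# "namespace/pod container" for Kubernetes-managed containers, skipping pause ("POD") ones.
k8s_workloads() {
    awk -F_ '$1 == "k8s" && $2 != "POD" && NF >= 6 { print $4 "/" $3, $2 }'
}

# Usage on the master: sudo docker ps --format '{{.Names}}' | k8s_workloads
```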

- Deploy the node

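[root@docker2 mnt]# swapon -s ##make sure swap is off (no swap devices should be listed)

[root@docker2 mnt]# modprobe ip_vs_wrr ##load the IPVS kernel modules; otherwise the kubeadm join preflight check warns that they are missing

[root@docker2 mnt]# modprobe ip_vs_sh

##The join command (token and CA hash) is printed at the end of kubeadm init on the master

[root@docker2 mnt]# kubeadm join 172.25.19.1:6443 --token a9ak4p.rtbve8n669je7ojj --discovery-token-ca-cert-hash sha256:f8509327ff23a0f0dc3dd5989ae82718a6287ea56aca7a695043ba0b33142fd3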
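If the join command printed by `kubeadm init` is lost, the `--discovery-token-ca-cert-hash` value can be recomputed on the master: it is the SHA-256 of the cluster CA's DER-encoded public key. The pipeline below follows the one given in the kubeadm documentation; `ca_cert_hash` is only a wrapper name chosen here, and it assumes an RSA CA key (the kubeadm default). A new token together with the full join command can also be printed with `kubeadm token create --print-join-command`.

```shell
#!/bin/sh
# Hypothetical wrapper: print the value for --discovery-token-ca-cert-hash
# (without the "sha256:" prefix) from a CA certificate. Assumes an RSA key.
ca_cert_hash() {
    cert="${1:-/etc/kubernetes/pki/ca.crt}"
    openssl x509 -pubkey -noout -in "$cert" \
        | openssl rsa -pubin -outform der 2>/dev/null \
        | openssl dgst -sha256 -hex \
        | sed 's/^.* //'
}

# Usage on the master: echo "sha256:$(ca_cert_hash)"
```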

- Check the nodes on the master; node1 (docker2) has joined the cluster

[k8s@docker1 ~]$ kubectl get nodes

NAME STATUS ROLES AGE VERSION

docker1 Ready master 34m v1.12.2

docker2 Ready <none> 92s v1.12.2
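With more nodes it is easier to print only the ones that are not Ready. A sketch, assuming the default column layout of `kubectl get nodes --no-headers` (NAME STATUS ROLES AGE VERSION); `not_ready_nodes` is a hypothetical name.

```shell
#!/bin/sh
# Hypothetical helper: read `kubectl get nodes --no-headers` output on stdin
# and print the names of nodes whose STATUS is not Ready.
not_ready_nodes() {
    # Column 2 is STATUS; "Ready,SchedulingDisabled" (a cordoned node) still counts as Ready.
    awk 'NF >= 2 && $2 != "Ready" && $2 !~ /^Ready,/ { print $1 }'
}

# Usage on the master: kubectl get nodes --no-headers | not_ready_nodes
```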

- Add a NAT rule on the physical host so the VMs on 172.25.19.0/24 can reach the Internet

[root@foundation19 k8s]# iptables -t nat -I POSTROUTING -s 172.25.19.0/24 -j MASQUERADE

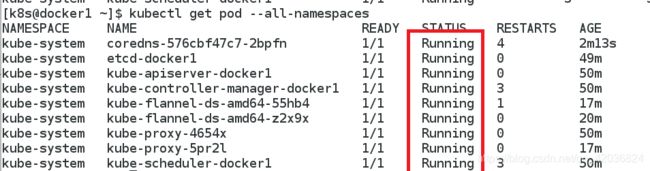

- List the pods in all namespaces

[k8s@docker1 ~]$ kubectl get pod --all-namespaces

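Then delete any pod that is in a bad state (its controller recreates it) and keep refreshing for a while until all of them are Running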

[k8s@docker1 ~]$ kubectl delete pod coredns-576cbf47c7-bx8cl -n kube-system

[k8s@docker1 ~]$ kubectl get pod --all-namespaces

- Once every pod shows Running, the setup is complete
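The "refresh until everything is Running" step can be automated. A sketch under stated assumptions: `not_running_pods` and `wait_for_pods` are hypothetical names, the parser expects the default columns of `kubectl get pod --all-namespaces --no-headers` (NAMESPACE NAME READY STATUS RESTARTS AGE), and the retry count and interval are arbitrary choices. Pods in `Completed` state (from Jobs) would also be reported, which does not matter for this lab.

```shell
#!/bin/sh
# Hypothetical helper: read `kubectl get pod --all-namespaces --no-headers` on stdin
# and print namespace/name of every pod whose STATUS (column 4) is not Running.
not_running_pods() {
    awk 'NF >= 4 && $4 != "Running" { print $1 "/" $2 }'
}

# Hypothetical polling loop: re-check every 10 s, up to 30 times (about 5 minutes).
wait_for_pods() {
    i=0
    pending=""
    while [ "$i" -lt 30 ]; do
        pods="$(kubectl get pod --all-namespaces --no-headers 2>/dev/null)"
        pending="$(printf '%s\n' "$pods" | not_running_pods)"
        # An empty pod list usually means kubectl failed, so do not treat it as success.
        if [ -n "$pods" ] && [ -z "$pending" ]; then
            echo "all pods Running"
            return 0
        fi
        i=$((i + 1))
        sleep 10
    done
    echo "still not Running:" >&2
    echo "$pending" >&2
    return 1
}
```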