Part 7: Updating HBase table data with Java

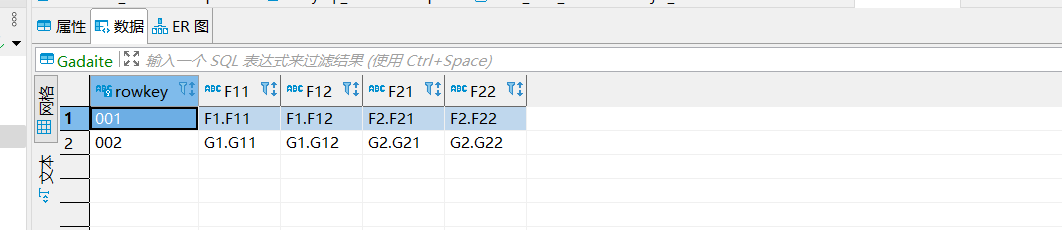

01. Original data

In the hbase shell:

hbase(main):011:0> scan "Gadaite"

ROW COLUMN+CELL

001 column=F1:F11, timestamp=1650130559218, value=F1.F11

001 column=F1:F12, timestamp=1650130559237, value=F1.F12

001 column=F1:_0, timestamp=1650130559292, value=

001 column=F2:F21, timestamp=1650130559268, value=F2.F21

001 column=F2:F22, timestamp=1650130559292, value=F2.F22

002 column=F1:F11, timestamp=1650130559315, value=G1.G11

002 column=F1:F12, timestamp=1650130559331, value=G1.G12

002 column=F1:_0, timestamp=1650130560291, value=

002 column=F2:F21, timestamp=1650130559351, value=G2.G21

002 column=F2:F22, timestamp=1650130560291, value=G2.G22

2 row(s)

In Phoenix / DBeaver:

02. Inserting data into HBase with code

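Put put1 = new Put(Bytes.toBytes("101"));

The constructor argument is the row key. It has to be given up front, when the Put is created.

put1.addColumn(Bytes.toBytes("F1"), Bytes.toBytes("F11"), Bytes.toBytes("T1:T11"));

The first argument is the column family, the second is the column (qualifier) within that family, and the third is the value.

This differs from inserting in the hbase shell: the column is no longer written in the family:qualifier format. The family and the qualifier are passed as separate arguments. If the combined form is passed instead, the data is not inserted where expected because no matching column is found under the family, yet the editor reports no error, since every argument is just a byte array.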
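To make the difference from the shell format concrete, here is a minimal sketch of turning a shell-style column string into the two separate byte arrays that addColumn expects. `ColumnSpec` and `parse` are hypothetical names introduced only for this illustration; they are not part of the HBase client. The sketch assumes UTF-8 encoding (which is what `Bytes.toBytes(String)` uses) and splits at the first colon, since a family name cannot contain a colon while a qualifier may.

```java
import java.nio.charset.StandardCharsets;

// Hypothetical helper for illustration only; not part of the HBase client API.
class ColumnSpec {
    final byte[] family;
    final byte[] qualifier;

    ColumnSpec(byte[] family, byte[] qualifier) {
        this.family = family;
        this.qualifier = qualifier;
    }

    // Split a shell-style "family:qualifier" string at the first colon.
    static ColumnSpec parse(String spec) {
        int idx = spec.indexOf(':');
        if (idx <= 0) {
            throw new IllegalArgumentException("expected family:qualifier, got: " + spec);
        }
        byte[] family = spec.substring(0, idx).getBytes(StandardCharsets.UTF_8);
        byte[] qualifier = spec.substring(idx + 1).getBytes(StandardCharsets.UTF_8);
        return new ColumnSpec(family, qualifier);
    }

    public static void main(String[] args) {
        ColumnSpec c = ColumnSpec.parse("F1:F11");
        System.out.println("family=" + new String(c.family, StandardCharsets.UTF_8)
                + ", qualifier=" + new String(c.qualifier, StandardCharsets.UTF_8));
        // With the HBase client on the classpath, the two parts would then be
        // passed separately, e.g.:
        // put1.addColumn(c.family, c.qualifier, Bytes.toBytes("T1:T11"));
    }
}
```

The point of the sketch is only that the two parts travel as separate arguments; in hand-written code it is simpler to pass "F1" and "F11" directly, as the article does.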

import org.apache.hadoop.conf.Configuration;

import org.apache.hadoop.hbase.TableName;

import org.apache.hadoop.hbase.client.Connection;

import org.apache.hadoop.hbase.client.ConnectionFactory;

import org.apache.hadoop.hbase.client.Put;

import org.apache.hadoop.hbase.client.Table;

import org.apache.hadoop.hbase.util.Bytes;

import java.util.ArrayList;

// Write data to HBase through the Put API

public class UpsertHbaseData {

public static void main(String[] args) throws Exception{

Configuration conf = new Configuration();

conf.set("hbase.zookeeper.quorum","192.168.1.10:2181");

Connection conn = ConnectionFactory.createConnection(conf);

Table gadaite = conn.getTable(TableName.valueOf("Gadaite"));

ArrayList<Put> puts = new ArrayList<>();

Put put1 = new Put(Bytes.toBytes("101")); // row key

put1.addColumn(Bytes.toBytes("F1"), Bytes.toBytes("F11"), Bytes.toBytes("T1:T11")); // column family, qualifier (without the family prefix), value

put1.addColumn(Bytes.toBytes("F1"), Bytes.toBytes("F12"), Bytes.toBytes("T1:T22"));

put1.addColumn(Bytes.toBytes("F2"), Bytes.toBytes("F21"), Bytes.toBytes("T2:T21"));

put1.addColumn(Bytes.toBytes("F2"), Bytes.toBytes("F22"), Bytes.toBytes("T2:T22"));

puts.add(put1);

gadaite.put(puts); // submit the collected Put list (here it holds only put1)

gadaite.close();

conn.close();

}

}

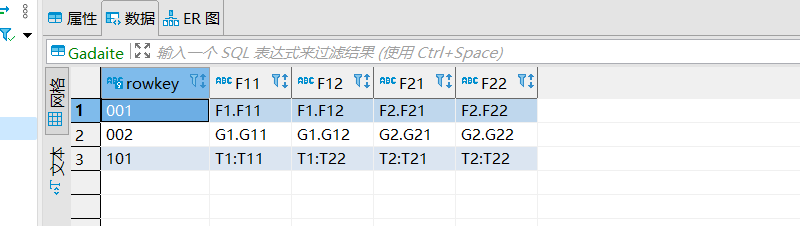

Check the result:

In DBeaver:

In the hbase shell:

hbase(main):012:0> scan "Gadaite"

ROW COLUMN+CELL

001 column=F1:F11, timestamp=1650130559218, value=F1.F11

001 column=F1:F12, timestamp=1650130559237, value=F1.F12

001 column=F1:_0, timestamp=1650130559292, value=

001 column=F2:F21, timestamp=1650130559268, value=F2.F21

001 column=F2:F22, timestamp=1650130559292, value=F2.F22

002 column=F1:F11, timestamp=1650130559315, value=G1.G11

002 column=F1:F12, timestamp=1650130559331, value=G1.G12

002 column=F1:_0, timestamp=1650130560291, value=

002 column=F2:F21, timestamp=1650130559351, value=G2.G21

002 column=F2:F22, timestamp=1650130560291, value=G2.G22

101 column=F1:F11, timestamp=1650132406751, value=T1:T11

101 column=F1:F12, timestamp=1650132406751, value=T1:T22

101 column=F2:F21, timestamp=1650132406751, value=T2:T21

101 column=F2:F22, timestamp=1650132406751, value=T2:T22

3 row(s)

Took 0.0199 seconds
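The timestamp column in the scan output is, by default, the write time in milliseconds since the Unix epoch. A small sketch of decoding it with the standard library follows; `CellTimestamp` and `toUtc` are illustrative names, not HBase API.

```java
import java.time.Instant;

// Illustrative helper: decode an HBase cell timestamp (epoch milliseconds).
class CellTimestamp {
    static String toUtc(long hbaseTimestampMillis) {
        return Instant.ofEpochMilli(hbaseTimestampMillis).toString();
    }

    public static void main(String[] args) {
        // Timestamp of the cells written for row 101 in the scan above.
        System.out.println(toUtc(1650132406751L)); // prints 2022-04-16T18:06:46.751Z
    }
}
```

All four cells of row 101 carry the same timestamp because they were written by a single Put.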

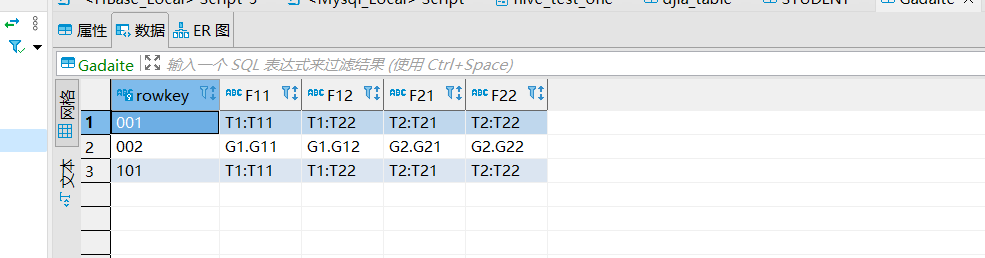

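03. Inserting with an existing row key

As mentioned earlier, Phoenix on HBase handles inserts and updates as an upsert: a row that does not exist is inserted, and a row that exists is updated. Now let's use the HBase Java API to check whether it behaves the same way in this case.

Set the row key in the code to an existing value, for example 001, and check the result. The behavior matches a put in the hbase shell: the existing cells are overwritten.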

hbase(main):013:0> scan "Gadaite"

ROW COLUMN+CELL

001 column=F1:F11, timestamp=1650132892478, value=T1:T11

001 column=F1:F12, timestamp=1650132892478, value=T1:T22

001 column=F1:_0, timestamp=1650130559292, value=

001 column=F2:F21, timestamp=1650132892478, value=T2:T21

001 column=F2:F22, timestamp=1650132892478, value=T2:T22

002 column=F1:F11, timestamp=1650130559315, value=G1.G11

002 column=F1:F12, timestamp=1650130559331, value=G1.G12

002 column=F1:_0, timestamp=1650130560291, value=

002 column=F2:F21, timestamp=1650130559351, value=G2.G21

002 column=F2:F22, timestamp=1650130560291, value=G2.G22

101 column=F1:F11, timestamp=1650132406751, value=T1:T11

101 column=F1:F12, timestamp=1650132406751, value=T1:T22

101 column=F2:F21, timestamp=1650132406751, value=T2:T21

101 column=F2:F22, timestamp=1650132406751, value=T2:T22

3 row(s)

Took 0.0103 seconds
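The scan above shows two things: the four columns named in the Put now hold the new values for row 001, and the F1:_0 column, which the Put did not mention, is untouched. A toy in-memory model of that behavior, using only the standard library, might look like the following. `UpsertModel` is a hypothetical class for illustration; it is not how HBase stores data, it only mimics the visible semantics.

```java
import java.util.Map;
import java.util.TreeMap;

// Toy model (not HBase code): row key -> ("family:qualifier" -> value).
class UpsertModel {
    private final Map<String, Map<String, String>> rows = new TreeMap<>();

    // Write one column of one row. Returns true if the row already existed,
    // i.e. the write acted as an update rather than an insert.
    boolean put(String rowKey, String column, String value) {
        boolean existed = rows.containsKey(rowKey);
        rows.computeIfAbsent(rowKey, k -> new TreeMap<>()).put(column, value);
        return existed;
    }

    // Read one column; null if the row or column is absent.
    String get(String rowKey, String column) {
        Map<String, String> row = rows.get(rowKey);
        return row == null ? null : row.get(column);
    }

    int rowCount() {
        return rows.size();
    }

    public static void main(String[] args) {
        UpsertModel table = new UpsertModel();
        table.put("001", "F1:F11", "F1.F11");
        table.put("001", "F1:_0", "");

        // Same row key, same column: the value is replaced, no new row appears.
        boolean updated = table.put("001", "F1:F11", "T1:T11");
        System.out.println("updated existing row: " + updated);
        System.out.println("F1:F11 = " + table.get("001", "F1:F11"));
        System.out.println("rows = " + table.rowCount());
    }
}
```

In this model, as in the scan, a write only affects the columns it names, which is why the row count stays at 3 in the real table.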

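Run the code once more:

The values are unchanged, but the timestamps have changed. Each Put writes a new version of the cell stamped with the current time, and scan shows the latest version.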

hbase(main):014:0> scan "Gadaite"

ROW COLUMN+CELL

001 column=F1:F11, timestamp=1650132994491, value=T1:T11

001 column=F1:F12, timestamp=1650132994491, value=T1:T22

001 column=F1:_0, timestamp=1650130559292, value=

001 column=F2:F21, timestamp=1650132994491, value=T2:T21

001 column=F2:F22, timestamp=1650132994491, value=T2:T22

002 column=F1:F11, timestamp=1650130559315, value=G1.G11

002 column=F1:F12, timestamp=1650130559331, value=G1.G12

002 column=F1:_0, timestamp=1650130560291, value=

002 column=F2:F21, timestamp=1650130559351, value=G2.G21

002 column=F2:F22, timestamp=1650130560291, value=G2.G22

101 column=F1:F11, timestamp=1650132406751, value=T1:T11

101 column=F1:F12, timestamp=1650132406751, value=T1:T22

101 column=F2:F21, timestamp=1650132406751, value=T2:T21

101 column=F2:F22, timestamp=1650132406751, value=T2:T22

3 row(s)

Took 0.0230 seconds
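One way to picture why an identical value still gets a new timestamp is to treat each cell as a map from timestamp to value, where a read returns the entry with the highest timestamp. The sketch below uses only the standard library; `VersionedCell` is a hypothetical name, and unlike HBase it never discards old versions (in HBase, how many versions are retained depends on the column family's VERSIONS setting).

```java
import java.util.NavigableMap;
import java.util.TreeMap;

// Toy model of a single cell: timestamp (epoch ms) -> value.
class VersionedCell {
    private final NavigableMap<Long, String> versions = new TreeMap<>();

    // Record a write at the given timestamp.
    void put(long timestampMillis, String value) {
        versions.put(timestampMillis, value);
    }

    // The value a plain read would show: the one with the newest timestamp.
    String latestValue() {
        return versions.lastEntry().getValue();
    }

    long latestTimestamp() {
        return versions.lastKey();
    }

    int versionCount() {
        return versions.size();
    }

    public static void main(String[] args) {
        VersionedCell cell = new VersionedCell();
        // The two runs against row 001, column F1:F11, from the scans above.
        cell.put(1650132892478L, "T1:T11");
        cell.put(1650132994491L, "T1:T11");

        System.out.println(cell.latestValue());     // prints T1:T11
        System.out.println(cell.latestTimestamp()); // prints 1650132994491
    }
}
```

The two timestamps differ by 102013 ms, which is simply the time that passed between the two runs of the program.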