python爬取某音直播间的实时评论(仅学习)

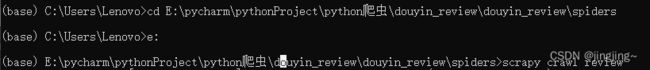

先看一下我的运行效果,通过控制台对项目进行运行(如下图所示)

然后会自动运行并且将抓取的内容存为json文件(以下为运行效果图)

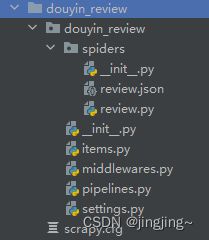

首先,我采用scrapy爬虫框架自动创建包结构(下图是我的包结构):(特别说明如何创建框架在最后说明)

下面是review.py其中start_urls需要更改为想要爬取的直播间的链接

import scrapy

from scrapy.http import HtmlResponse

from selenium import webdriver

from douyin_review.items import DouyinReviewItem

from selenium.webdriver.common.by import By

from selenium.webdriver.support.ui import WebDriverWait

from selenium.webdriver.support import expected_conditions as EC

import time

class ReviewSpider(scrapy.Spider):

name = "review"

allowed_domains = ["live.douyin.com"]

start_urls = ["https://live.douyin.com/6602922152"]

def __init__(self):

super(ReviewSpider, self).__init__()

self.driver = webdriver.Edge() # 使用 Edge WebDriver

def closed(self, reason):

self.driver.quit()

def start_requests(self):

# 获取settings中的COOKIES字典

cookies = self.settings.get('COOKIES', {})

for url in self.start_urls:

yield scrapy.Request(url, cookies=cookies, callback=self.parse)

def parse(self, response):

while True:

self.driver.get(response.url)

time.sleep(30)

# 使用 Selenium 等待评论加载

# WebDriverWait(self.driver, 10).until(

# EC.presence_of_element_located((By.CLASS_NAME, 'webcast-chatroom___items'))

# )

rendered_html = self.driver.page_source

response = HtmlResponse(url=response.url, body=rendered_html, encoding='utf-8')

ts=response.xpath("//div[@class='mUQC4JAd']/span//text()").getall()

# ts=[]

name_list=[]

comment=[]

# for text in texts:

# if(text!='大马猴11' and text!='\xa0×\xa0111'):

# ts.append(text)

# print(ts)

i=1

for t in ts:

if (":" in t) and (i==1):

name_list.append(t)

i=0

continue

elif i==0:

comment.append(t)

i=1

continue

else:

continue

for i in range(len(name_list)):

name=name_list[i]

pingLun=comment[i]

review=DouyinReviewItem(name=name,pingLun=pingLun)

yield review

下面是items.py创建对应字段的类

# Define here the models for your scraped items

#

# See documentation in:

# https://docs.scrapy.org/en/latest/topics/items.html

import scrapy

class DouyinReviewItem(scrapy.Item):

# define the fields for your item here like:

# name = scrapy.Field()

name=scrapy.Field()

pingLun=scrapy.Field()下面是pipelines.py用于下载对应json并保存

# Define your item pipelines here

#

# Don't forget to add your pipeline to the ITEM_PIPELINES setting

# See: https://docs.scrapy.org/en/latest/topics/item-pipeline.html

# useful for handling different item types with a single interface

from itemadapter import ItemAdapter

class DouyinReviewPipeline:

def open_spider(self, spider):

self.fp = open('review.json', 'a', encoding='utf-8')

def process_item(self, item, spider):

self.fp.write(str(item))

return item

def close_spider(self, spider):

self.fp.close()最后的settings.py 需要在其中增加游览器中的COOKIE值才能够在未登入的情况下展示出评论区

此处代码不完整,只展示需要修改的地方

# Disable cookies (enabled by default)

# COOKIES_ENABLED = False

COOKIES_ENABLED = True

COOKIES = {

'cookie': 'xgplayer_user_id=959220653001; ttwid=1%7COxB24VTg7_9c6RQirvBA2fH6augroyGe8w3ItG5Mufo%7C1692369185%7Cd3b97aabdde5736142051c531cf7ce3860bcbecb18a2abc45c10c09935519c9a; passport_csrf_token=d96375526151ab754dbf46727dfbfbeb; passport_csrf_token_default=d96375526151ab754dbf46727dfbfbeb; FORCE_LOGIN=%7B%22videoConsumedRemainSeconds%22%3A180%7D; volume_info=%7B%22isUserMute%22%3Afalse%2C%22isMute%22%3Afalse%2C%22volume%22%3A0.6%7D; n_mh=jDYj_Z3sDnUA97LaOtZtdBTpK1fhYEKFwpSke88SQvM; sso_uid_tt=c68756314f0e59755b9aa6aff2d4db2b; sso_uid_tt_ss=c68756314f0e59755b9aa6aff2d4db2b; toutiao_sso_user=34ab2676a869b18f4d5932138d666eaf; toutiao_sso_user_ss=34ab2676a869b18f4d5932138d666eaf; passport_assist_user=CjyqECbVe5KcuZUksVjmPRxTeiBGVNL2fwlVoIFNzDkqM9N6oOpyhj7Fek2cvvrce8O2Q7jjD_jy5VHUZH4aSAo8aif1UR0jyXJwFkBVns_BMFttnON_8BcoHWGWB0nsX1jN95nCCBQS6jSprVH45JuGum4qYatucDPEKlxFEOnFuQ0Yia_WVCIBAz7R8RA%3D; sid_ucp_sso_v1=1.0.0-KDNjMWVlZTdkYzdlOTcyMGIxMTUxNTUzNWM1ZTBhNTlhYTdiZWRiZjQKHQjToObekwIQwoL-pgYY7zEgDDDkkuLPBTgGQPQHGgJsZiIgMzRhYjI2NzZhODY5YjE4ZjRkNTkzMjEzOGQ2NjZlYWY; ssid_ucp_sso_v1=1.0.0-KDNjMWVlZTdkYzdlOTcyMGIxMTUxNTUzNWM1ZTBhNTlhYTdiZWRiZjQKHQjToObekwIQwoL-pgYY7zEgDDDkkuLPBTgGQPQHGgJsZiIgMzRhYjI2NzZhODY5YjE4ZjRkNTkzMjEzOGQ2NjZlYWY; odin_tt=2b87fbe8273217ea09cd345967e549dd747c2767374e75ce43d6ebc988709c2c9d106981c37137aa2398d86a0c935dac; passport_auth_status=3c4f1af283a22adab6ef4fae7378271a%2C; passport_auth_status_ss=3c4f1af283a22adab6ef4fae7378271a%2C; uid_tt=71a9277428c3f8a210489e7143508857; uid_tt_ss=71a9277428c3f8a210489e7143508857; sid_tt=ab1f8419458ab0ad1785a3ead35fb7ce; sessionid=ab1f8419458ab0ad1785a3ead35fb7ce; sessionid_ss=ab1f8419458ab0ad1785a3ead35fb7ce; LOGIN_STATUS=1; __security_server_data_status=1; store-region=cn-ha; store-region-src=uid; d_ticket=e8595569e1daeffd5ea56ba844ec2eb263a0c; sid_guard=ab1f8419458ab0ad1785a3ead35fb7ce%7C1692369257%7C5183965%7CTue%2C+17-Oct-2023+14%3A33%3A42+GMT; sid_ucp_v1=1.0.0-KDU4Mjg4YzliNjBkNjlhOTgzNmU4NzYxYTEzNTAxYTIyNWQ2NWI4YjAKGQjToObekwIQ6YL-pgYY7zEgDDgGQPQHSAQaAmxmIiBhYjFmODQxOTQ1OGFiMGFkMTc4NWEzZWFkMzVmYjdjZQ; ssid_ucp_v1=1.0.0-KDU4Mjg4YzliNjBkNjlhOTgzNmU4NzYxYTEzNTAxYTIyNWQ2NWI4YjAKGQjToObekwIQ6YL-pgYY7zEgDDgGQPQHSAQaAmxmIiBhYjFmODQxOTQ1OGFiMGFkMTc4NWEzZWFkMzVmYjdjZQ; bd_ticket_guard_client_data=eyJiZC10aWNrZXQtZ3VhcmQtdmVyc2lvbiI6MiwiYmQtdGlja2V0LWd1YXJkLWl0ZXJhdGlvbi12ZXJzaW9uIjoxLCJiZC10aWNrZXQtZ3VhcmQtY2xpZW50LWNzciI6Ii0tLS0tQkVHSU4gQ0VSVElGSUNBVEUgUkVRVUVTVC0tLS0tXHJcbk1JSUJEekNCdFFJQkFEQW5NUXN3Q1FZRFZRUUdFd0pEVGpFWU1CWUdBMVVFQXd3UFltUmZkR2xqYTJWMFgyZDFcclxuWVhKa01Ga3dFd1lIS29aSXpqMENBUVlJS29aSXpqMERBUWNEUWdBRVNHTCt1eGxqckRKL3BZcFd4V0dBb1Ntd1xyXG5WTFZQekY1NEI2RXNOaWdnUkxxNWQ3Mnl5M0lSNXhaNDVza2VlOUJmM2k5aFBwNWNENkg4Tzh6cGJYZUh3cUFzXHJcbk1Db0dDU3FHU0liM0RRRUpEakVkTUJzd0dRWURWUjBSQkJJd0VJSU9kM2QzTG1SdmRYbHBiaTVqYjIwd0NnWUlcclxuS29aSXpqMEVBd0lEU1FBd1JnSWhBS3hOL2xYalNsTFZ6dUMvak1WR0MzaEZla1BrUzdndzdicitXQzN3MEtJR1xyXG5BaUVBaVhyWUt0Nk9US0xQdUt4T2xLMHEwMWgvQ2FoeDRVWldEd3F1dXgzMmFRcz1cclxuLS0tLS1FTkQgQ0VSVElGSUNBVEUgUkVRVUVTVC0tLS0tXHJcbiJ9; _bd_ticket_crypt_cookie=5815417d7c8f3c1d2fcfdd994f20a6fe; download_guide=%223%2F20230818%2F0%22; publish_badge_show_info=%221%2C0%2C0%2C1692369887329%22; pwa2=%220%7C0%7C3%7C0%22; SEARCH_RESULT_LIST_TYPE=%22single%22; strategyABtestKey=%221692521719.565%22; stream_recommend_feed_params=%22%7B%5C%22cookie_enabled%5C%22%3Atrue%2C%5C%22screen_width%5C%22%3A1920%2C%5C%22screen_height%5C%22%3A1080%2C%5C%22browser_online%5C%22%3Atrue%2C%5C%22cpu_core_num%5C%22%3A8%2C%5C%22device_memory%5C%22%3A8%2C%5C%22downlink%5C%22%3A2.9%2C%5C%22effective_type%5C%22%3A%5C%224g%5C%22%2C%5C%22round_trip_time%5C%22%3A50%7D%22; FOLLOW_NUMBER_YELLOW_POINT_INFO=%22MS4wLjABAAAAT7e7EzZ8q9Hghdni7lyYQUmGe-zbxBHRfkdz-9hpvA8%2F1692547200000%2F0%2F1692536260267%2F0%22; VIDEO_FILTER_MEMO_SELECT=%7B%22expireTime%22%3A1693141060322%2C%22type%22%3Anull%7D; FOLLOW_LIVE_POINT_INFO=%22MS4wLjABAAAAT7e7EzZ8q9Hghdni7lyYQUmGe-zbxBHRfkdz-9hpvA8%2F1692547200000%2F0%2F1692536263200%2F0%22; device_web_cpu_core=8; device_web_memory_size=8; webcast_local_quality=origin; csrf_session_id=a33981e524a13303166e5b59406cadaa; passport_fe_beating_status=true; home_can_add_dy_2_desktop=%221%22; tt_scid=Y1zvGZuLQiavyxfxeyhsfSn0MCK7.oikyCwM9tHQ3U2oN8rE-yv2pdQELP4C9wkq207a; IsDouyinActive=false; __ac_nonce=064e20dd900cb394bc15,

}创建scrapy框架

1. 创建爬虫的项目 scrapy startproject 项目的名字

注意:项目的名字不允许使用数字开头 也不能包含中文

2.创建爬虫文件

要在spiders文件夹中去创建爬虫文件

cd 项目的名字\项目的名字\spiders

cd douyin_review\douyin_review\spiders

创建爬虫文件

scrapy genspider 爬虫文件的名字 要爬取网页

eg:scrapy genspider review https://live.douyin.com/

一般情况下 不需要添加http协议 因为start_urls的值是根据allowed_domains

修改的 所以添加了http的话 那么start_urls就需要手动去修改了

3.运行爬虫代码

scrapy crawl 爬虫的名字

eg:

scrapy crawl review