Deploying a RabbitMQ Cluster on K8s with Mirrored Mode for High Availability

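References:

Installation approach: Kubernetes Full-Stack Architect: a hands-on k8s course based on Fortune Global 500 practice

Mirrored mode: RabbitMQ High Availability: Deploying and Using Mirrored Mode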

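Contents

- 1. Install Helm
  - 1.1. Install Helm
  - 1.2. Basic command reference
- 2. Install RabbitMQ
  - 2.1. Download the chart
  - 2.2. Configure parameters
    - 2.2.1. Edit the configuration file
    - 2.2.2. Set the administrator password
    - 2.2.3. Force boot after an unexpected cluster outage
    - 2.2.4. Simulate a RabbitMQ cluster outage (optional)
    - 2.2.5. Set the time zone
    - 2.2.6. Set the replica count
    - 2.2.7. Configure persistent storage
    - 2.2.8. Configure the Service
  - 2.3. Deploy RabbitMQ
    - 2.3.1. Create the namespace
    - 2.3.2. Install
    - 2.3.3. Check the RabbitMQ installation status
- 3. Configure external access to the RabbitMQ cluster
  - 3.1. Recommended approach
  - 3.2. Option 1: Service-NodePort (5672, 15672)
  - 3.3. Option 2: Service-public LoadBalancer (5672, 15672)
  - 3.4. Option 3: Service-private LoadBalancer (5672) + Ingress-public ALB (15672)
    - 3.4.1. Create the Service-private LoadBalancer
    - 3.4.2. Create the Ingress-ALB
- 4. Configure mirrored mode for high availability
  - 4.1. About mirrored mode
  - 4.2. Set mirrored mode with rabbitmqctl
- 5. Clean up the RabbitMQ cluster
  - 5.1. Uninstall RabbitMQ
  - 5.2. Delete the PVCs
  - 5.3. Clean up the manually created Services and Ingress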

1. Install Helm

1.1. Install Helm

- Project page: https://github.com/helm/helm

- Installation:

# Download (pick the version you need)
wget https://get.helm.sh/helm-v3.6.1-linux-amd64.tar.gz

# Extract
tar zxvf helm-v3.6.1-linux-amd64.tar.gz

# Install
mv linux-amd64/helm /usr/local/bin/

# Verify
helm version
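When the same steps have to run on machines with a different Helm version or CPU architecture, the download URL can be assembled from its parts instead of being hard-coded. The sketch below assumes the `helm-<version>-<os>-<arch>.tar.gz` naming pattern seen in the wget command above; `helm_url` is a hypothetical helper name, not a Helm command.

```shell
# Build the Helm release tarball URL, following the naming pattern of the
# wget command above. helm_url is a hypothetical helper, not part of Helm.
helm_url() {
  local version="${1:-v3.6.1}"   # release tag, e.g. v3.6.1
  local os="${2:-linux}"         # operating system part of the file name
  local arch="${3:-amd64}"       # CPU architecture part of the file name
  printf 'https://get.helm.sh/helm-%s-%s-%s.tar.gz\n' "$version" "$os" "$arch"
}

# Print the URL used in this article
helm_url v3.6.1 linux amd64
```

It could then be used as `wget "$(helm_url v3.6.1 linux arm64)"` on an ARM host, for example.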

1.2. Basic command reference

# Add a repository
helm repo add

# Search for charts
helm search repo

# Update the repository index
helm repo update

# List installed charts in all namespaces
helm list -A

# Install
helm install

# Uninstall
helm uninstall

# Upgrade
helm upgrade

2. 安装RabbitMQ

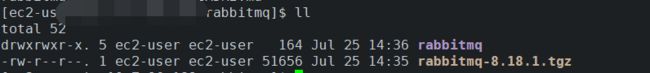

2.1. 下载chart包

# 添加bitnami仓库

helm repo add bitnami https://charts.bitnami.com/bitnami

# 查询chart

helm search repo bitnami

# 创建工作目录

mkdir -p ~/test/rabbitmq

cd ~/test/rabbitmq

# 拉取rabbitmq

helm pull bitnami/rabbitmq

# 解压

tar zxvf [rabbitmq]

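2.2. Configure parameters

2.2.1. Edit the configuration file

Official configuration reference: https://github.com/bitnami/charts/tree/master/bitnami/rabbitmq

- Enter the chart directory and configure persistent storage, the replica count, and so on.

- For the first deployment, edit values.yaml directly rather than passing --set flags, so that the settings do not have to be repeated on every later upgrade.

cd ~/test/rabbitmq/rabbitmq
vim values.yaml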

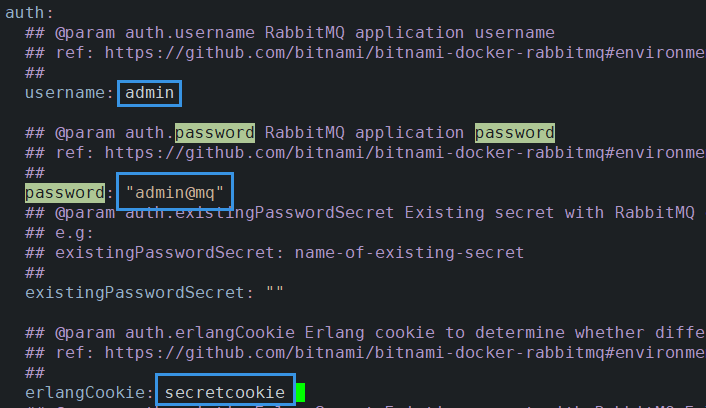

2.2.2. Set the administrator password

- Option 1: set it in values.yaml

auth:
  username: admin
  password: "admin@mq"
  existingPasswordSecret: ""
  erlangCookie: secretcookie

- Option 2: pass it with --set at install time (keeps the password out of the configuration file)

--set auth.username=admin,auth.password=admin@mq,auth.erlangCookie=secretcookie

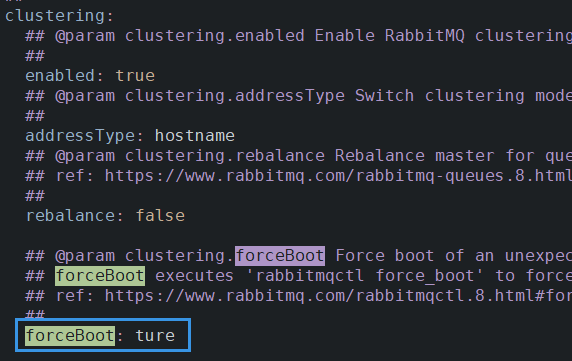
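Both options above use a fixed, easily guessed password. As a rough sketch of an alternative, a random password can be generated and stored in a Kubernetes Secret that the `existingPasswordSecret` field shown above points to. The helper names `gen_password` and `create_auth_secret` and the Secret name `rabbitmq-auth` are hypothetical, and the key `rabbitmq-password` is an assumption about what the chart looks up, so check the chart README for your chart version.

```shell
# Print a random hex string; BYTES random bytes give 2*BYTES hex characters.
gen_password() {
  local bytes="${1:-16}"
  # Read random bytes, dump them as hex, then strip spaces and newlines.
  head -c "$bytes" /dev/urandom | od -An -tx1 | tr -d ' \n'
}

# Store a generated password in a Secret (defined here, not run automatically).
# Assumptions: the Secret name "rabbitmq-auth" is hypothetical, and the key
# "rabbitmq-password" is what the chart is expected to read.
create_auth_secret() {
  kubectl create secret generic rabbitmq-auth -n test \
    --from-literal=rabbitmq-password="$(gen_password 16)"
}

# Show one generated value (different on every run)
echo "generated: $(gen_password 16)"
```

With the Secret in place, `existingPasswordSecret: "rabbitmq-auth"` in values.yaml would replace the plain-text `password` entry.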

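2.2.3. Force boot after an unexpected cluster outage

- With persistent storage enabled, if all RabbitMQ pods go down at the same time the cluster cannot start again on its own, so clustering.forceBoot should be enabled in advance.

clustering:
  enabled: true
  addressType: hostname
  rebalance: false
  forceBoot: true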

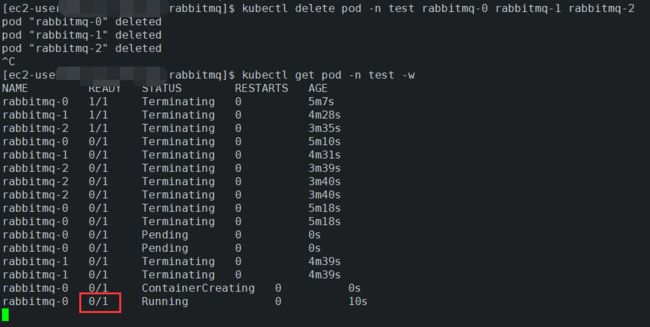

2.2.4. 模拟rabbitmq集群宕机(可跳过)

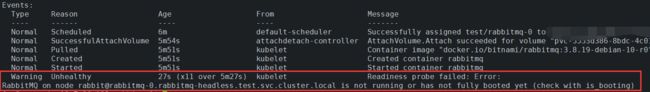

- 未设置

clustering.forceBoot时,如下图,通过删除rabbitmq集群所有pod模拟宕机,可见集群重新启动时第一个节点迟迟未就绪

- 报错信息如下:

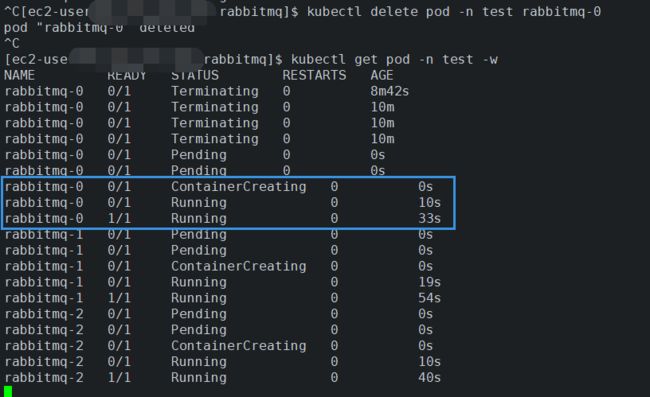

- 启用

clustering.forceBoot,并更新rabbitmq,可见集群重启正常

helm upgrade rabbitmq -n test .

kubectl delete pod -n test rabbitmq-0

get pod -n test -w

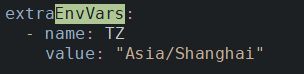

2.2.5. Set the time zone

extraEnvVars:
  - name: TZ
    value: "Asia/Shanghai"

2.2.6. Set the replica count

replicaCount: 3

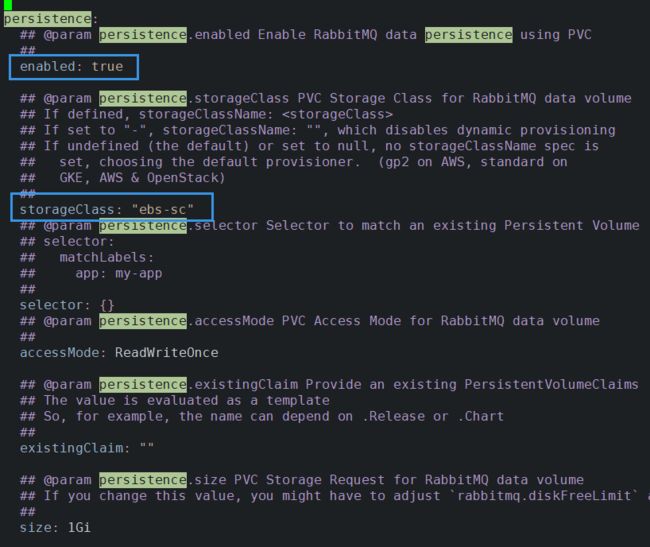

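2.2.7. Configure persistent storage

- If persistence is not needed, set enabled to false.

- Persistence requires block storage. This article uses a storageClass created with the AWS ebs-csi driver; a self-managed block-storage storageClass works as well.

Note: the storageClass should preferably support volume expansion.

persistence:
  enabled: true
  storageClass: "ebs-sc"
  selector: {}
  accessMode: ReadWriteOnce
  existingClaim: ""
  size: 8Gi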

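2.2.8. Configure the Service

- By default, ports such as 5672 (AMQP) and 15672 (web management UI) are exposed through a ClusterIP Service for use inside the cluster. External access is covered in detail in Chapter 3.

- Configuring a NodePort directly in values.yaml is not recommended, because it makes later changes less flexible.

2.3. Deploy RabbitMQ

2.3.1. Create the namespace

cd ~/test/rabbitmq/rabbitmq
kubectl create ns test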

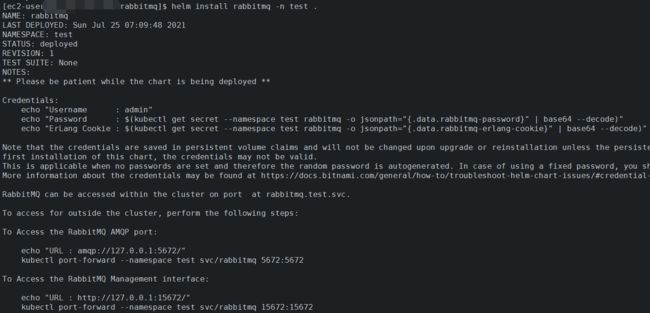

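2.3.2. Install

- Option 1: administrator account and password set in the configuration file

helm install rabbitmq -n test .

- Option 2: password passed with --set

helm install rabbitmq -n test . \
  --set auth.username=admin,auth.password=admin@mq,auth.erlangCookie=secretcookie

The same parameters must also be passed on every later upgrade.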

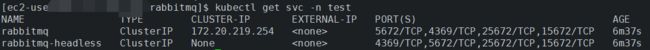

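2.3.3. Check the RabbitMQ installation status

- Watch the installation progress

kubectl get pod -n test -w

- Once all nodes have started normally, check the Services

kubectl get svc -n test

RabbitMQ is currently exposed through a ClusterIP Service for access inside the cluster; external access is covered in the next chapter.

- Check the cluster status

# Enter the pod
kubectl exec -it -n test rabbitmq-0 -- bash

# Show the cluster status
rabbitmqctl cluster_status

# List policies (mirrored mode has not been set yet)
rabbitmqctl list_policies

# Set the cluster name
rabbitmqctl set_cluster_name [cluster_name]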

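3. Configure external access to the RabbitMQ cluster

3.1. Recommended approach

- Rather than specifying a NodePort in the default installation, create separate Services for external access.

- 5672: expose it through a Service backed by a private load balancer, for other applications on the private network.

- 15672: expose it through an Ingress or a Service backed by a public load balancer, for access from outside.

| Port | Exposure method (see Option 3 below) | How to access |
|---|---|---|
| 5672 | Service-LoadBalancer (configured as a private load balancer) | Inside the k8s cluster: rabbitmq.test:5672; private network: private load balancer IP:5672 |
| 15672 | Ingress-ALB (configured as a public load balancer) | Public load balancer URL |

Note: this article uses an Amazon-managed k8s cluster with aws-load-balancer-controller already configured.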

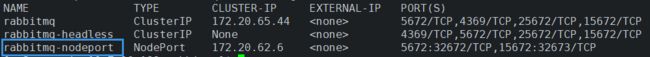
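Clients usually reach port 5672 through an AMQP connection URI. One detail is easy to miss with the example password from 2.2.2: the `@` in `admin@mq` has to be percent-encoded inside a URI, and the default virtual host `/` is written as `%2F`. The sketch below builds such a URI for the in-cluster address from the table; `urlencode` and `amqp_uri` are hypothetical helper names, and only ASCII input is assumed.

```shell
# Percent-encode a string for use inside a URI (ASCII input assumed).
urlencode() {
  local s="$1" out="" rest c
  while [ -n "$s" ]; do
    rest="${s#?}"       # everything after the first character
    c="${s%"$rest"}"    # the first character itself
    case "$c" in
      [A-Za-z0-9._~-]) out="$out$c" ;;           # unreserved characters stay as is
      *) out="$out$(printf '%%%02X' "'$c")" ;;   # everything else becomes %XX
    esac
    s="$rest"
  done
  printf '%s\n' "$out"
}

# Build an AMQP URI: amqp_uri USER PASSWORD HOST [PORT] [VHOST]
amqp_uri() {
  local user="$1" pass="$2" host="$3" port="${4:-5672}" vhost="${5:-/}"
  printf 'amqp://%s:%s@%s:%s/%s\n' \
    "$(urlencode "$user")" "$(urlencode "$pass")" "$host" "$port" "$(urlencode "$vhost")"
}

amqp_uri admin 'admin@mq' rabbitmq.test 5672
# prints: amqp://admin:admin%40mq@rabbitmq.test:5672/%2F
```

For access from the private network, the host argument would be the private load balancer address instead of `rabbitmq.test`.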

3.2. Option 1: Service-NodePort (5672, 15672)

- Export the YAML of the original ClusterIP Service:

cd ~/test/rabbitmq
kubectl get svc -n test rabbitmq -o yaml > service-clusterip.yaml

- Create service-nodeport.yaml based on service-clusterip.yaml:

cp service-clusterip.yaml service-nodeport.yaml

- Edit service-nodeport.yaml (remove the superfluous fields):

apiVersion: v1
kind: Service
metadata:
  name: rabbitmq-nodeport
  namespace: test
spec:
  ports:
  - name: amqp
    port: 5672
    protocol: TCP
    targetPort: amqp
    nodePort: 32672
  - name: http-stats
    port: 15672
    protocol: TCP
    targetPort: stats
    nodePort: 32673
  selector:
    app.kubernetes.io/instance: rabbitmq
    app.kubernetes.io/name: rabbitmq
  type: NodePort

- Create the Service:

kubectl apply -f service-nodeport.yaml
kubectl get svc -n test

- The services can now be reached at NodeIP:Port.
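The nodePort values chosen above have to fall inside the cluster's NodePort range, which is 30000-32767 unless the API server was started with a different `--service-node-port-range`. A minimal sketch of a pre-flight check follows; `valid_nodeport` is a hypothetical helper, and the default range is assumed unless other bounds are passed.

```shell
# Return success if PORT is a number inside [MIN, MAX] (default 30000-32767).
valid_nodeport() {
  local port="$1" min="${2:-30000}" max="${3:-32767}"
  case "$port" in
    ''|*[!0-9]*) return 1 ;;   # reject empty or non-numeric input
  esac
  [ "$port" -ge "$min" ] && [ "$port" -le "$max" ]
}

# Check the two nodePort values used in service-nodeport.yaml
for p in 32672 32673; do
  if valid_nodeport "$p"; then
    echo "nodePort $p is within the range"
  else
    echo "nodePort $p is outside the range"
  fi
done
```

A value such as 15672 would be rejected by this check, which is why the manifest maps the management port to 32673 rather than reusing the container port number.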

3.3. Option 2: Service-public LoadBalancer (5672, 15672)

- Create service-loadbalancer.yaml:

vim service-loadbalancer.yaml

apiVersion: v1
kind: Service
metadata:
  name: rabbitmq-loadbalance
  namespace: test
  annotations:
    service.beta.kubernetes.io/aws-load-balancer-type: external
    service.beta.kubernetes.io/aws-load-balancer-nlb-target-type: ip
    service.beta.kubernetes.io/aws-load-balancer-scheme: internet-facing
spec:
  ports:
  - name: amqp
    port: 5672
    protocol: TCP
    targetPort: amqp
  - name: http-stats
    port: 15672
    protocol: TCP
    targetPort: stats
  selector:
    app.kubernetes.io/instance: rabbitmq
    app.kubernetes.io/name: rabbitmq
  type: LoadBalancer

- Create the Service:

kubectl apply -f service-loadbalancer.yaml
kubectl get svc -n test

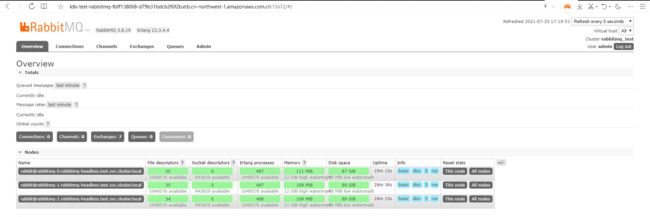

Log in to the management console in a browser: http://k8s-test-rabbitmq-fbff138068-d79b31bdcb2f6f2b.elb.cn-northwest-1.amazonaws.com.cn:15672

3.4. Option 3: Service-private LoadBalancer (5672) + Ingress-public ALB (15672)

3.4.1. Create the Service-private LoadBalancer

vim service-lb-internal.yaml

apiVersion: v1
kind: Service
metadata:
  name: rabbitmq-lb-internal
  namespace: test
  annotations:
    service.beta.kubernetes.io/aws-load-balancer-type: external
    service.beta.kubernetes.io/aws-load-balancer-nlb-target-type: ip
    # service.beta.kubernetes.io/aws-load-balancer-scheme: internet-facing  # with this line commented out, the load balancer is private
spec:
  ports:
  - name: amqp
    port: 5672
    protocol: TCP
    targetPort: amqp
  selector:
    app.kubernetes.io/instance: rabbitmq
    app.kubernetes.io/name: rabbitmq
  type: LoadBalancer

kubectl apply -f service-lb-internal.yaml

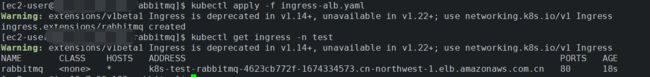

3.4.2. Create the Ingress-ALB

vim ingress-alb.yaml

apiVersion: extensions/v1beta1
kind: Ingress
metadata:
  name: rabbitmq
  namespace: test
  annotations:
    kubernetes.io/ingress.class: alb
    alb.ingress.kubernetes.io/scheme: internet-facing
    alb.ingress.kubernetes.io/target-type: ip
  labels:
    app: rabbitmq
spec:
  rules:
  - http:
      paths:
      - path: /*
        backend:
          serviceName: "rabbitmq"
          servicePort: 15672

kubectl apply -f ingress-alb.yaml

Log in to the management console in a browser: k8s-test-rabbitmq-4623cb772f-1674334573.cn-northwest-1.elb.amazonaws.com.cn
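The `extensions/v1beta1` Ingress API used above is no longer served from Kubernetes 1.22 onward, so on newer clusters the same Ingress has to be written against `networking.k8s.io/v1`. The sketch below writes an equivalent manifest with the same name, annotations and backend. The helper `write_ingress_v1` and the file name `ingress-alb-v1.yaml` are hypothetical, and the use of `pathType: ImplementationSpecific` for the `/*` path is an assumption to verify against the aws-load-balancer-controller documentation.

```shell
# Write a networking.k8s.io/v1 version of the Ingress to FILE.
# write_ingress_v1 is a hypothetical helper; it only creates the file.
write_ingress_v1() {
  local file="${1:-ingress-alb-v1.yaml}"
  cat > "$file" <<'EOF'
apiVersion: networking.k8s.io/v1
kind: Ingress
metadata:
  name: rabbitmq
  namespace: test
  annotations:
    kubernetes.io/ingress.class: alb
    alb.ingress.kubernetes.io/scheme: internet-facing
    alb.ingress.kubernetes.io/target-type: ip
  labels:
    app: rabbitmq
spec:
  rules:
  - http:
      paths:
      - path: /*
        pathType: ImplementationSpecific
        backend:
          service:
            name: rabbitmq
            port:
              number: 15672
EOF
}

write_ingress_v1 ingress-alb-v1.yaml
# Then apply it the same way as before:
#   kubectl apply -f ingress-alb-v1.yaml
```

The main differences from the older form are the required `pathType` field and the nested `service.name` / `service.port.number` backend.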

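4. Configure mirrored mode for high availability

4.1. About mirrored mode

Mirrored mode turns the queues that need to be consumed into mirrored queues that exist on multiple nodes, which gives RabbitMQ high availability (HA). Message bodies are actively synchronized between the mirror nodes, instead of being fetched on demand when a consumer reads them, as happens in normal (non-mirrored) cluster mode. The drawback is that the synchronization traffic inside the cluster consumes a large amount of network bandwidth.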

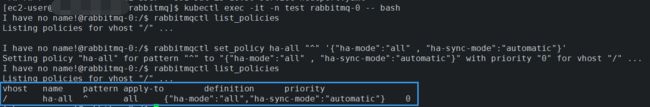

4.2. rabbitmqctl设置镜像模式

# 进入pod

kubectl exec -it -n test rabbitmq-0 -- bash

# 列出策略(尚未设置镜像模式)

rabbitmqctl list_policies

# 设置镜像模式

rabbitmqctl set_policy ha-all "^" '{"ha-mode":"all" , "ha-sync-mode":"automatic"}'

# 再次列出策略

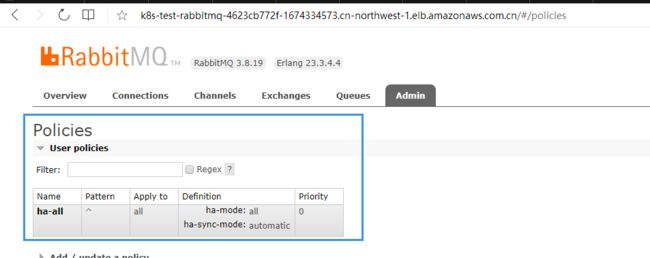

rabbitmqctl list_policies

控制台查看
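The `ha-mode: all` policy mirrors every queue to every node, which is where the bandwidth cost described in 4.1 comes from. Classic mirrored queues also accept `"ha-mode":"exactly"` together with an `ha-params` count, and a majority of the nodes is a common choice. The sketch below only builds the policy definition; `ha_policy_json` and the policy name `ha-majority` are hypothetical, and it applies only to RabbitMQ versions that still support classic mirrored queues (the feature was removed in RabbitMQ 4.0).

```shell
# Print a mirroring policy that keeps a majority of NODES as replicas.
ha_policy_json() {
  local nodes="${1:-3}"
  local replicas=$(( nodes / 2 + 1 ))   # majority: 2 of 3, 3 of 5, ...
  printf '{"ha-mode":"exactly","ha-params":%d,"ha-sync-mode":"automatic"}\n' "$replicas"
}

ha_policy_json 3
# prints: {"ha-mode":"exactly","ha-params":2,"ha-sync-mode":"automatic"}

# To apply it inside the pod (not run here):
#   rabbitmqctl set_policy ha-majority "^" "$(ha_policy_json 3)"
```

With the three replicas configured in 2.2.6, each queue would then live on two nodes instead of three.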

5. Clean up the RabbitMQ cluster

5.1. Uninstall RabbitMQ

helm uninstall rabbitmq -n test

5.2. Delete the PVCs

kubectl delete pvc -n test data-rabbitmq-0 data-rabbitmq-1 data-rabbitmq-2
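The PVCs have to be deleted by hand because `helm uninstall` leaves claims created from a StatefulSet's volume claim templates in place. If the replica count differs from 3, the list of names changes accordingly. The sketch below generates the names, assuming the `data-<release>-<ordinal>` pattern seen in the command above; `pvc_names` is a hypothetical helper.

```shell
# Print the PVC names for RELEASE with REPLICAS pods, separated by spaces.
pvc_names() {
  local release="${1:-rabbitmq}" replicas="${2:-3}" i=0 names=""
  while [ "$i" -lt "$replicas" ]; do
    # Append with a separating space except before the first name.
    names="$names${names:+ }data-$release-$i"
    i=$(( i + 1 ))
  done
  printf '%s\n' "$names"
}

pvc_names rabbitmq 3
# prints: data-rabbitmq-0 data-rabbitmq-1 data-rabbitmq-2
```

The output can be passed straight to the delete command, for example `kubectl delete pvc -n test $(pvc_names rabbitmq 3)`, left unquoted on purpose so that each name becomes a separate argument.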

5.3. Clean up the manually created Services and Ingress

kubectl delete -f service-nodeport.yaml
kubectl delete -f service-loadbalancer.yaml
kubectl delete -f service-lb-internal.yaml
kubectl delete -f ingress-alb.yaml
