Hadoop cluster setup

vim /etc/hosts

192.168.1.2 Master.Hadoop

192.168.1.3 Slave1.Hadoop

192.168.1.4 Slave2.Hadoop

192.168.1.5 Slave3.Hadoop

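If each machine can be pinged by hostname, the entries just added are being resolved correctly on the LAN. These names come from the local /etc/hosts file rather than from DNS, so add the same entries on every node. The hostnames used in the configuration files below (such as node12 and hadoop-node12) must resolve in the same way.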
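A minimal sketch of this step, assuming bash and root access on each node. The helper names `ensure_host_entry` and `check_resolves` are hypothetical. The first appends an `IP hostname` line only if that hostname is not listed yet, so it is safe to re-run. The second asks the system resolver (via `getent hosts`, which consults /etc/hosts) whether each name resolves.

```shell
#!/usr/bin/env bash
# ensure_host_entry FILE IP NAME  (hypothetical helper, not part of Hadoop)
# Appends "IP NAME" to FILE unless NAME already appears as a hostname or
# alias on a non-comment line.
ensure_host_entry() {
  local file="$1" ip="$2" name="$3"
  # Fields 2..NF of each non-comment line are hostnames/aliases.
  if awk -v n="$name" '$1 !~ /^#/ { for (i = 2; i <= NF; i++) if ($i == n) found = 1 }
                       END { exit !found }' "$file" 2>/dev/null; then
    return 0
  fi
  printf '%s %s\n' "$ip" "$name" >> "$file"
}

# check_resolves NAME...  (hypothetical helper)
# Reports every name the resolver cannot resolve; non-zero if any failed.
check_resolves() {
  local rc=0 name
  for name in "$@"; do
    if ! getent hosts "$name" > /dev/null; then
      echo "cannot resolve: $name" >&2
      rc=1
    fi
  done
  return "$rc"
}

# Example (as root on every node):
#   ensure_host_entry /etc/hosts 192.168.1.2 Master.Hadoop
#   ensure_host_entry /etc/hosts 192.168.1.3 Slave1.Hadoop
#   check_resolves Master.Hadoop Slave1.Hadoop && ping -c 1 Slave1.Hadoop
```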

Hadoop 3.3.6:

https://dlcdn.apache.org/hadoop/common/hadoop-3.3.6/hadoop-3.3.6.tar.gz
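Downloading and unpacking the release into /usr/local, where HADOOP_HOME points later in these notes, can be sketched as follows. The helper name `unpack_hadoop` is hypothetical; it assumes a tar that supports gzip (`-z`) and `-C`.

```shell
#!/usr/bin/env bash
# unpack_hadoop TARBALL DEST_DIR  (hypothetical helper)
# Extracts a Hadoop release tarball into DEST_DIR and prints the
# resulting top-level directory, e.g. /usr/local/hadoop-3.3.6.
unpack_hadoop() {
  local tarball="$1" dest="$2" top
  mkdir -p "$dest"
  # -C selects the directory to extract into.
  tar -xzf "$tarball" -C "$dest" || return 1
  # The first archive entry names the top-level directory (hadoop-3.3.6/...).
  top=$(tar -tzf "$tarball" | head -n 1)
  top=${top%%/*}
  echo "$dest/$top"
}

# Example:
#   wget https://dlcdn.apache.org/hadoop/common/hadoop-3.3.6/hadoop-3.3.6.tar.gz
#   unpack_hadoop hadoop-3.3.6.tar.gz /usr/local
```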

JDK (OpenJDK 8):

yum install java-1.8.0-openjdk java-1.8.0-openjdk-devel

vim ~/.bashrc # open ~/.bashrc in vim and append the line below

export JAVA_HOME=/usr/lib/jvm/java-1.8.0

source ~/.bashrc # apply the variable in the current shell

echo $JAVA_HOME # check the value

java -version
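Instead of hard-coding the JDK path, JAVA_HOME can be derived from the `javac` on the PATH by resolving its symlinks (on CentOS, /usr/bin/javac is an alternatives link into the JDK). A sketch, assuming GNU `readlink -f`; the helper name `java_home_from` is hypothetical.

```shell
#!/usr/bin/env bash
# java_home_from PATH_TO_JAVAC  (hypothetical helper)
# Resolves every symlink of the given javac binary and strips the trailing
# bin/javac, leaving the JDK root directory.
java_home_from() {
  local real
  real=$(readlink -f "$1") || return 1
  dirname "$(dirname "$real")"
}

# Example:
#   echo "export JAVA_HOME=$(java_home_from "$(command -v javac)")" >> ~/.bashrc
#   source ~/.bashrc && echo "$JAVA_HOME"
```

`javac` is used rather than `java` because, for JDK 8, `java` resolves into the jre/ subdirectory. The resolved path is the versioned JDK directory, so it changes when the JDK package is updated; the unversioned /usr/lib/jvm/java-1.8.0 link used above avoids that.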

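Make sure SSH is installed on all servers and that the machines can log into one another. The Hadoop start scripts (start-all.sh) log into every node over SSH, so the master needs passwordless, key-based login to each of them.

yum install openssh-server openssh-clients # install SSH

yum install rsync # rsync is a remote data-synchronization tool that quickly syncs files between hosts over a LAN/WAN

service sshd restart # (re)start the SSH service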
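Passwordless login from the master to each node can be set up with a key pair and `ssh-copy-id`. A minimal sketch, assuming an RSA key at ~/.ssh/id_rsa and the host names from /etc/hosts above; the helper name `setup_passwordless_ssh` is hypothetical.

```shell
#!/usr/bin/env bash
# setup_passwordless_ssh HOST...  (hypothetical helper)
# Creates ~/.ssh/id_rsa without a passphrase if it does not exist yet, then
# copies the public key to every host (each host asks for its password once).
setup_passwordless_ssh() {
  local key="$HOME/.ssh/id_rsa" host
  if [ ! -f "$key" ]; then
    mkdir -p "$HOME/.ssh" && chmod 700 "$HOME/.ssh"
    ssh-keygen -t rsa -N '' -f "$key" -q || return 1
  fi
  for host in "$@"; do
    ssh-copy-id -i "$key.pub" "$host" || return 1
  done
}

# Example (on the master, as the user that runs start-all.sh):
#   setup_passwordless_ssh Master.Hadoop Slave1.Hadoop Slave2.Hadoop Slave3.Hadoop
#   ssh Slave1.Hadoop hostname   # should no longer prompt for a password
```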

# Append the following to the end of the file:

# Java installation path

export JAVA_HOME=/usr/lib/jvm/java-1.8.0

# Hadoop installation path

export HADOOP_HOME=/usr/local/hadoop-3.3.6

# Hadoop (HDFS) configuration directory

export HADOOP_CONF_DIR=$HADOOP_HOME/etc/hadoop

# Hadoop YARN configuration directory

export YARN_CONF_DIR=$HADOOP_HOME/etc/hadoop

# Hadoop YARN log directory

export YARN_LOG_DIR=$HADOOP_HOME/logs/yarn

# Hadoop HDFS log directory

export HADOOP_LOG_DIR=$HADOOP_HOME/logs/hdfs

# Users that the Hadoop daemons are started as

export HDFS_NAMENODE_USER=root

export HDFS_DATANODE_USER=root

export HDFS_SECONDARYNAMENODE_USER=root

export YARN_RESOURCEMANAGER_USER=root

export YARN_NODEMANAGER_USER=root

export YARN_PROXYSERVER_USER=root

cd /usr/local/hadoop-3.3.6/etc/hadoop/

vi core-site.xml

Add the following between <configuration> and </configuration>:

<property>
  <name>fs.defaultFS</name>
  <value>hdfs://node12:8020</value>
</property>

<property>
  <name>io.file.buffer.size</name>
  <value>131072</value>
</property>

<property>
  <name>hadoop.tmp.dir</name>
  <value>/data/hadoop/tmp</value>
</property>
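Every `<property>` element needs its matching closing tags (`</name>`, `</value>`, `</property>`); a single missing `</` makes the whole file unparseable. A small helper that prints well-formed property blocks can avoid that when writing these files. The helper name `hadoop_property` is hypothetical, and it does not escape XML special characters, so values containing `<` or `&` would need escaping by hand.

```shell
#!/usr/bin/env bash
# hadoop_property NAME VALUE [DESCRIPTION]  (hypothetical helper)
# Prints one well-formed <property> element for a Hadoop *-site.xml file.
# Values are written verbatim: < and & are NOT escaped.
hadoop_property() {
  printf '<property>\n  <name>%s</name>\n  <value>%s</value>\n' "$1" "$2"
  if [ -n "${3:-}" ]; then
    printf '  <description>%s</description>\n' "$3"
  fi
  printf '</property>\n'
}

# Example: write the core-site.xml settings above
#   {
#     echo '<?xml version="1.0"?>'
#     echo '<configuration>'
#     hadoop_property fs.defaultFS hdfs://node12:8020
#     hadoop_property io.file.buffer.size 131072
#     hadoop_property hadoop.tmp.dir /data/hadoop/tmp
#     echo '</configuration>'
#   } > core-site.xml
```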

vi hdfs-site.xml

<property>
  <name>dfs.datanode.data.dir.perm</name>
  <value>700</value>
</property>

<property>
  <name>dfs.namenode.name.dir</name>
  <value>/data/hadoop/nn</value>
  <description>Path on the local filesystem where the NameNode stores the namespace and transaction logs persistently.</description>
</property>

<property>
  <name>dfs.namenode.hosts</name>
  <value>node12,node13,node15</value>
  <description>List of permitted DataNodes.</description>
</property>

<property>
  <name>dfs.blocksize</name>
  <value>268435456</value>
</property>

<property>
  <name>dfs.namenode.handler.count</name>
  <value>100</value>
</property>

<property>
  <name>dfs.datanode.data.dir</name>
  <value>/data/hadoop/dn</value>
</property>
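dfs.blocksize is given in bytes: 268435456 = 256 × 1024 × 1024, i.e. 256 MB. A file is split into ceil(size / blocksize) blocks, and each block is then replicated dfs.replication times (3 by default). The hypothetical helper below does that arithmetic in shell.

```shell
#!/usr/bin/env bash
# hdfs_block_count FILE_SIZE_BYTES [BLOCK_SIZE_BYTES]  (hypothetical helper)
# Number of HDFS blocks a file of the given size occupies, using integer
# ceiling division. The default block size matches dfs.blocksize above.
hdfs_block_count() {
  local size="$1" block="${2:-268435456}"
  echo $(( (size + block - 1) / block ))
}

# Examples:
#   echo $((256 * 1024 * 1024))       # the dfs.blocksize value
#   hdfs_block_count $((1024 ** 3))   # a 1 GB file
```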

vi mapred-env.sh

export JAVA_HOME=/usr/lib/jvm/java-1.8.0

export HADOOP_JOB_HISTORYSERVER_HEAPSIZE=1000

export HADOOP_MAPRED_ROOT_LOGGER=INFO,RFA

vi mapred-site.xml

<property>
  <name>mapreduce.framework.name</name>
  <value>yarn</value>
</property>

<property>
  <name>mapreduce.jobhistory.address</name>
  <value>node12:10020</value>
</property>

<property>
  <name>mapreduce.jobhistory.webapp.address</name>
  <value>node12:19888</value>
</property>

<property>
  <name>mapreduce.jobhistory.intermediate-done-dir</name>
  <value>/data/hadoop/mr-history/tmp</value>
</property>

<property>
  <name>mapreduce.jobhistory.done-dir</name>
  <value>/data/hadoop/mr-history/done</value>
</property>

<property>
  <name>yarn.app.mapreduce.am.env</name>
  <value>HADOOP_MAPRED_HOME=$HADOOP_HOME</value>
</property>

<property>
  <name>mapreduce.map.env</name>
  <value>HADOOP_MAPRED_HOME=$HADOOP_HOME</value>
</property>

<property>
  <name>mapreduce.reduce.env</name>
  <value>HADOOP_MAPRED_HOME=$HADOOP_HOME</value>
</property>

vi yarn-env.sh

export JAVA_HOME=/usr/lib/jvm/java-1.8.0

export HADOOP_HOME=/usr/local/hadoop-3.3.6

export HADOOP_CONF_DIR=$HADOOP_HOME/etc/hadoop

export YARN_CONF_DIR=$HADOOP_HOME/etc/hadoop

export YARN_LOG_DIR=$HADOOP_HOME/logs/yarn

export HADOOP_LOG_DIR=$HADOOP_HOME/logs/hdfs

vi yarn-site.xml

<property>
  <name>yarn.log.server.url</name>
  <value>http://node12:19888/jobhistory/logs</value>
</property>

<property>
  <name>yarn.web-proxy.address</name>
  <value>node12:8089</value>
  <description>proxy server hostname and port</description>
</property>

<property>
  <name>yarn.log-aggregation-enable</name>
  <value>true</value>
  <description>Configuration to enable or disable log aggregation</description>
</property>

<property>
  <name>yarn.nodemanager.remote-app-log-dir</name>
  <value>/tmp/logs</value>
  <description>HDFS directory where the application logs are moved on application completion.</description>
</property>

<property>
  <name>yarn.resourcemanager.hostname</name>
  <value>node12</value>
</property>

<property>
  <name>yarn.resourcemanager.scheduler.class</name>
  <value>org.apache.hadoop.yarn.server.resourcemanager.scheduler.fair.FairScheduler</value>
</property>

<property>
  <name>yarn.nodemanager.local-dirs</name>
  <value>/data/hadoop/nm-local</value>
  <description>Comma-separated list of paths on the local filesystem where intermediate data is written.</description>
</property>

<property>
  <name>yarn.nodemanager.log-dirs</name>
  <value>/data/hadoop/nm-log</value>
  <description>Comma-separated list of paths on the local filesystem where logs are written.</description>
</property>

<property>
  <name>yarn.nodemanager.log.retain-seconds</name>
  <value>10800</value>
  <description>Default time (in seconds) to retain log files on the NodeManager. Only applicable if log-aggregation is disabled.</description>
</property>

<property>
  <name>yarn.nodemanager.aux-services</name>
  <value>mapreduce_shuffle</value>
  <description>Shuffle service that needs to be set for Map Reduce applications.</description>
</property>
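Because one unclosed tag is enough to stop the daemons from starting, it can be worth checking every edited file for well-formedness before distributing it. A sketch, assuming `xmllint` (from libxml2) is installed; the helper name `check_site_xml` is hypothetical.

```shell
#!/usr/bin/env bash
# check_site_xml DIR  (hypothetical helper)
# Runs "xmllint --noout" on every *-site.xml file in DIR and reports the
# ones that are not well-formed XML. Returns non-zero if any file fails.
check_site_xml() {
  local dir="$1" f rc=0
  for f in "$dir"/*-site.xml; do
    [ -e "$f" ] || continue   # no matching files: the glob stays literal
    if ! xmllint --noout "$f" 2>/dev/null; then
      echo "malformed: $f" >&2
      rc=1
    fi
  done
  return "$rc"
}

# Example:
#   check_site_xml "$HADOOP_HOME/etc/hadoop" && echo "all site files parse"
```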

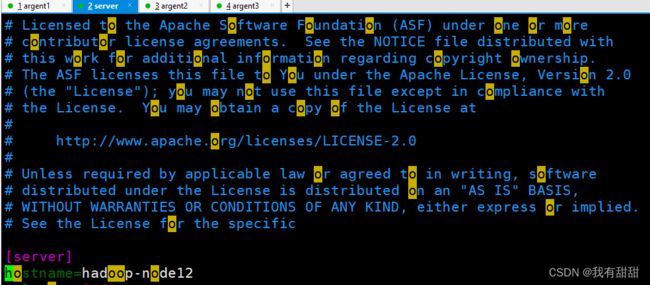

Edit the workers file (one worker hostname per line): vi workers

hadoop-node12

hadoop-node13

hadoop-node15

Distribute Hadoop to the other machines:

scp -r /usr/local/hadoop-3.3.6 hadoop-node13:/usr/local

scp -r /usr/local/hadoop-3.3.6 hadoop-node15:/usr/local
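With more nodes, these scp commands can be driven from the workers file. A sketch, assuming passwordless SSH is already in place and the workers file lists one hostname per line; the helper name `distribute_dir` and its skip-the-local-host logic are hypothetical.

```shell
#!/usr/bin/env bash
# distribute_dir SRC_DIR WORKERS_FILE  (hypothetical helper)
# Copies SRC_DIR into the same parent directory on every host in
# WORKERS_FILE, skipping blank lines, comments and the local host.
distribute_dir() {
  local src="$1" workers="$2" host self
  self=${HOSTNAME:-$(uname -n)}
  # "|| [ -n ... ]" also processes a last line without a trailing newline.
  while read -r host || [ -n "$host" ]; do
    host=${host%%#*}             # drop comments
    host=${host//[[:space:]]/}   # trim whitespace
    [ -z "$host" ] && continue
    [ "$host" = "$self" ] && continue
    # </dev/null keeps scp/ssh from consuming the loop's input.
    scp -r "$src" "$host:$(dirname "$src")" < /dev/null || return 1
  done < "$workers"
}

# Example (on the master):
#   distribute_dir /usr/local/hadoop-3.3.6 /usr/local/hadoop-3.3.6/etc/hadoop/workers
```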

Create the directories on every machine:

mkdir -p /data/hadoop/nn

mkdir -p /data/hadoop/dn

mkdir -p /data/hadoop/nm-log

mkdir -p /data/hadoop/nm-local
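The four mkdir commands above can be wrapped in one function so they are easy to repeat on every node. A sketch; the helper name `make_data_dirs` is hypothetical, and the SSH example assumes passwordless login and bash as the remote shell (brace expansion is bash syntax).

```shell
#!/usr/bin/env bash
# make_data_dirs [BASE]  (hypothetical helper)
# Creates the NameNode, DataNode and NodeManager directories referenced in
# hdfs-site.xml and yarn-site.xml under BASE (default /data/hadoop).
make_data_dirs() {
  local base="${1:-/data/hadoop}"
  mkdir -p "$base"/{nn,dn,nm-log,nm-local}
}

# Example: send the function definition along and run it on every node
#   for h in hadoop-node12 hadoop-node13 hadoop-node15; do
#     ssh "$h" "$(declare -f make_data_dirs); make_data_dirs /data/hadoop"
#   done
```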

Edit the environment variables on every machine: vi /etc/profile

export HADOOP_HOME=/usr/local/hadoop-3.3.6

export PATH=$PATH:$HADOOP_HOME/bin:$HADOOP_HOME/sbin

source /etc/profile # apply the changes

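Start the cluster

Format the NameNode by running the format command on the master node, node12.

Run the format command only once: re-formatting creates a new clusterID, after which DataNodes that still hold the old one can no longer register with the NameNode.

hdfs namenode -format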
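A guard that refuses to format when the name directory already holds metadata can prevent an accidental second format. A sketch, assuming the dfs.namenode.name.dir value from hdfs-site.xml above (/data/hadoop/nn) and that a formatted name directory contains current/VERSION; the helper name `format_namenode_once` is hypothetical.

```shell
#!/usr/bin/env bash
# format_namenode_once [NAME_DIR]  (hypothetical helper)
# Formats HDFS only if NAME_DIR (dfs.namenode.name.dir) has no metadata yet.
format_namenode_once() {
  local name_dir="${1:-/data/hadoop/nn}"
  if [ -f "$name_dir/current/VERSION" ]; then
    echo "already formatted: $name_dir (skipping)" >&2
    return 0
  fi
  hdfs namenode -format
}

# Example (on node12 only):
#   format_namenode_once /data/hadoop/nn
```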

start-all.sh # start HDFS and YARN on all nodes

stop-all.sh # stop them again

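On hadoop-node16, hadoop-node18 and hadoop-node12, run jps to check that all processes started successfully:

hadoop-node16: should show the DataNode, NameNode and ResourceManager processes

hadoop-node18 and hadoop-node12: should show the DataNode and NodeManager processes

Verify HDFS: open http://192.168.5.16:9870 in a browser; the page should load normally.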
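The jps check can be scripted per node. A sketch; the expected daemon names are passed as arguments because, as the list above shows, they differ between nodes. The helper name `check_daemons` is hypothetical.

```shell
#!/usr/bin/env bash
# check_daemons NAME...  (hypothetical helper)
# Reads `jps` output (lines of "PID ClassName") and reports every expected
# daemon that is not running. Returns non-zero if any is missing.
check_daemons() {
  local running name rc=0
  running=$(jps | awk '{print $2}')
  for name in "$@"; do
    if ! grep -qx "$name" <<< "$running"; then
      echo "missing: $name" >&2
      rc=1
    fi
  done
  return "$rc"
}

# Examples:
#   check_daemons DataNode NameNode ResourceManager   # hadoop-node16
#   check_daemons DataNode NodeManager                # the other nodes
```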

Port 19888 (the JobHistory web UI, mapreduce.jobhistory.webapp.address) is served by the history server, so start it:

mapred --daemon start historyserver

# To stop it, replace start with stop

mapred --daemon stop historyserver
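With the history server running, submitting a sample MapReduce job is a quick way to check YARN and to see a job appear on port 19888. The examples jar ships with the release under share/hadoop/mapreduce/; the exact jar name below assumes version 3.3.6, and the helper name `run_pi_example` is hypothetical.

```shell
#!/usr/bin/env bash
# run_pi_example [MAPS] [SAMPLES]  (hypothetical helper)
# Submits the bundled "pi" MapReduce example to YARN; when it finishes, the
# job should be listed in the JobHistory web UI (node12:19888).
run_pi_example() {
  local maps="${1:-2}" samples="${2:-10}"
  local jar="$HADOOP_HOME/share/hadoop/mapreduce/hadoop-mapreduce-examples-3.3.6.jar"
  if [ ! -f "$jar" ]; then
    echo "examples jar not found: $jar" >&2
    return 1
  fi
  hadoop jar "$jar" pi "$maps" "$samples"
}

# Example:
#   run_pi_example 2 10
```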

Start the web proxy server:

yarn --daemon start proxyserver

# To stop it, replace start with stop

yarn --daemon stop proxyserver

netstat -tpnl | grep java # list the ports the Java (Hadoop) processes are listening on

Open port 8088 (the YARN ResourceManager web UI).
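If "opening" a port means allowing it through the firewall, on CentOS 7 with firewalld this can be done with `firewall-cmd`. A sketch, assuming firewalld is the active firewall; the port list (9870 HDFS, 8088 YARN, 19888 JobHistory, 8089 web proxy) is taken from this document, and the helper name `open_ports` is hypothetical.

```shell
#!/usr/bin/env bash
# open_ports PORT...  (hypothetical helper)
# Permanently allows each TCP port through firewalld, then reloads the rules
# so the permanent configuration takes effect.
open_ports() {
  local port
  for port in "$@"; do
    firewall-cmd --permanent --add-port="${port}/tcp" || return 1
  done
  firewall-cmd --reload
}

# Example (as root on node12):
#   open_ports 9870 8088 19888 8089
#   firewall-cmd --list-ports
```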

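Fix for "wget: unable to resolve host address"

sudo vim /etc/resolv.conf

Change the nameservers (as its header comment notes, this file is generated by dhclient-script, so manual edits may be overwritten when the network is restarted):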

options timeout:2 attempts:3 rotate single-request-reopen

; generated by /usr/sbin/dhclient-script

#nameserver 100.100.2.138

#nameserver 100.100.2.136

nameserver 8.8.8.8

nameserver 8.8.4.4

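Problem:

[root@hadoop-node12 html]# tar -zxvf HDP-3.1.5.0-centos7-rpm.tar.gz /var/www/html/

tar (child): HDP-3.1.5.0-centos7-rpm.tar.gz: Cannot open: No such file or directory

tar (child): Error is not recoverable: exiting now

tar: Child returned status 2

tar: Error is not recoverable: exiting now

Solution:

tar cannot find the archive in the current directory. Run the command from the directory that contains HDP-3.1.5.0-centos7-rpm.tar.gz (or give its full path), and pass the target directory with -C; without -C, tar treats /var/www/html/ as the name of a member to extract.

tar -zxvf HDP-3.1.5.0-centos7-rpm.tar.gz -C /var/www/html/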

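Problem: Ambari "Confirm Hosts" fails

Testing showed that `ssh localhost` on the host still asked for a password, so the contents of id_rsa.pub must be appended to authorized_keys to let the host log into itself without a password.

Run the following commands in order: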

cd ~/.ssh

cat id_rsa.pub >> authorized_keys
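The same fix, made safe to re-run: the key is appended only if it is not already present, and the file is restricted to its owner, since sshd (with its default StrictModes setting) ignores an authorized_keys file that others can write to. A sketch; the helper name `authorize_key` is hypothetical.

```shell
#!/usr/bin/env bash
# authorize_key PUBKEY_FILE AUTHORIZED_KEYS_FILE  (hypothetical helper)
# Appends the public key unless that exact line is already present, then
# makes authorized_keys readable and writable by its owner only.
authorize_key() {
  local pub="$1" auth="$2"
  touch "$auth"
  # -x: whole-line match, -F: fixed string, -f: patterns read from $pub
  if ! grep -qxF -f "$pub" "$auth"; then
    cat "$pub" >> "$auth"
  fi
  chmod 600 "$auth"
}

# Example (allow "ssh localhost" without a password):
#   authorize_key ~/.ssh/id_rsa.pub ~/.ssh/authorized_keys
#   ssh localhost true && echo ok
```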

Problem: Server at https://hadoop-node20:8440 is not reachable

Make sure that hostname in the [server] section of /etc/ambari-agent/conf/ambari-agent.ini (server.hostname) is set to the hostname or IP address of the ambari-server node.
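The setting can also be changed non-interactively. A sketch, assuming GNU sed (for `sed -i`) and that a `hostname=` line exists in the [server] section, as in the default ambari-agent.ini; the helper name `set_ambari_server_host` is hypothetical. The agent has to be restarted afterwards (ambari-agent restart).

```shell
#!/usr/bin/env bash
# set_ambari_server_host INI_FILE HOST  (hypothetical helper)
# Rewrites the hostname= line inside the [server] section only; the range
# ends at the next "[section]" header, so other sections are untouched.
# HOST must not contain "/" or "&" (plain hostnames and IPs are fine).
set_ambari_server_host() {
  local ini="$1" host="$2"
  sed -i "/^\[server\]/,/^\[/ s/^hostname=.*/hostname=${host}/" "$ini"
}

# Example (on each agent host):
#   set_ambari_server_host /etc/ambari-agent/conf/ambari-agent.ini hadoop-node20
#   ambari-agent restart
```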