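Big Data Operations: Configuring the Base Environment for a Hadoop Cluster

Prepare three CentOS virtual machines, one for each of the three nodes.

1. Configure a static IP (on all three machines):

Open the configuration file for the ens33 network interface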

[root@localhost ~]# vi /etc/sysconfig/network-scripts/ifcfg-ens33

TYPE=Ethernet

PROXY_METHOD=none

BROWSER_ONLY=no

BOOTPROTO=static //change: replace the original dhcp with static

DEFROUTE=yes

IPV4_FAILURE_FATAL=no

IPV6INIT=yes

IPV6_AUTOCONF=yes

IPV6_DEFROUTE=yes

IPV6_FAILURE_FATAL=no

IPV6_ADDR_GEN_MODE=stable-privacy

NAME=ens33

UUID=b5619fd4-7e17-42b6-bf6b-5c6c4a6d5fae

DEVICE=ens33

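ONBOOT=yes //set to yes so the interface comes up at boot

IPADDR=192.168.17.60 //add: the static IP address (use 192.168.17.61 and 192.168.17.62 on slave1 and slave2)

NETMASK=255.255.255.0 //add: the subnet mask

GATEWAY=192.168.17.2 //add: the gateway

DNS1=192.168.17.2 //add: the DNS server

Type :wq to save and exit. The // notes above are annotations for the reader and must not be typed into the file.

service network restart //restart the network service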
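
Only IPADDR differs between the three nodes, so the edited lines can be generated instead of retyped. The sketch below is an illustration only: the helper name static_ifcfg_lines is hypothetical, and the netmask, gateway, and DNS values are simply the ones used in this article.

```shell
# Hypothetical helper: print the static-addressing lines for one node.
# NETMASK, GATEWAY and DNS1 are the values from this article; adjust them for your network.
static_ifcfg_lines() {
    printf 'BOOTPROTO=static\n'
    printf 'ONBOOT=yes\n'
    printf 'IPADDR=%s\n' "$1"
    printf 'NETMASK=255.255.255.0\n'
    printf 'GATEWAY=192.168.17.2\n'
    printf 'DNS1=192.168.17.2\n'
}

# Show the lines for slave1.
static_ifcfg_lines 192.168.17.61
```

The output still has to be merged into /etc/sysconfig/network-scripts/ifcfg-ens33 by hand, replacing the existing BOOTPROTO and ONBOOT lines.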

2. Change the hostname

Open the hostname file and delete the existing hostname

[root@localhost ~]# vi /etc/hostname

Set the name to master on the master node, and to slave1 and slave2 on the other two.
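
On systemd-based CentOS releases such as the one shown here, hostnamectl set-hostname is an alternative to editing /etc/hostname by hand. The wrapper below is a sketch: set_node_hostname is a hypothetical name, and limiting it to the three names used in this article is an assumption made to catch typos.

```shell
# Hypothetical wrapper: accept only the node names used in this article.
set_node_hostname() {
    case "$1" in
        master|slave1|slave2)
            hostnamectl set-hostname "$1"
            ;;
        *)
            echo "unexpected node name: $1" >&2
            return 1
            ;;
    esac
}
```

Run it as root on each machine, for example set_node_hostname slave1 on the second node; a new login shell then shows the new name in the prompt.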


3. Map the cluster IP addresses to hostnames (on all three machines):

[root@master ~]# vi /etc/hosts

127.0.0.1 localhost localhost.localdomain localhost4 localhost4.localdomain4

::1 localhost localhost.localdomain localhost6 localhost6.localdomain6

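192.168.17.60 master #maps the master host to its IP

192.168.17.61 slave1 #maps the slave1 host to its IP

192.168.17.62 slave2 #maps the slave2 host to its IP

Once all three machines have been updated, every node can ping any other node by hostname.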
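
A quick way to confirm the mappings on a node is to scan /etc/hosts for each IP and name pair. This is a minimal sketch using awk; hosts_has_entry is a hypothetical helper, and the three pairs are the ones from this article.

```shell
# Hypothetical helper: succeed when FILE maps IP to NAME.
# Usage: hosts_has_entry FILE IP NAME
hosts_has_entry() {
    awk -v ip="$2" -v name="$3" '
        $1 == ip {
            for (i = 2; i <= NF; i++) {
                if ($i ~ /^#/) break      # stop at a trailing comment
                if ($i == name) found = 1
            }
        }
        END { exit(found ? 0 : 1) }
    ' "$1"
}

# Report on the three mappings from this article.
for pair in 192.168.17.60:master 192.168.17.61:slave1 192.168.17.62:slave2; do
    if hosts_has_entry /etc/hosts "${pair%%:*}" "${pair##*:}"; then
        echo "ok      $pair"
    else
        echo "missing $pair"
    fi
done
```

A follow-up ping -c 1 to each hostname then checks that the addresses are reachable as well as resolvable.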

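4. Create a regular user named hadoop on every node for running Hadoop.

[root@master ~]# useradd hadoop //create the hadoop user

[root@master ~]# passwd hadoop //set a password for the hadoop user

Changing password for user hadoop.

New password: //enter the password

passwd: all authentication tokens updated successfully.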

[root@master ~]#

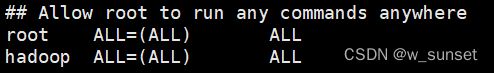

Grant root privileges to the hadoop user on every node

[root@master ~]# vi /etc/sudoers

Find the line root ALL=(ALL) ALL and add a line for the hadoop user directly below it
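
The exact line to add is not shown in the text above. One common choice, and purely an assumption here, is to give hadoop the same rule as root. The helper name sudoers_rule_for is hypothetical.

```shell
# Assumption: the hadoop user gets the same rule as root.
# The exact line is NOT given in the article; this is one common choice.
sudoers_rule_for() {
    printf '%s\tALL=(ALL)\tALL\n' "$1"
}

# Print the candidate line for the hadoop user.
sudoers_rule_for hadoop
```

Opening the file with visudo instead of vi checks its syntax before saving, which guards against a typo that would break sudo.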

5. Disable the firewall

[root@master ~]# systemctl stop firewalld //stop the firewall

[root@master ~]# systemctl disable firewalld //keep the firewall from starting at boot

Removed symlink /etc/systemd/system/multi-user.target.wants/firewalld.service.

Removed symlink /etc/systemd/system/dbus-org.fedoraproject.FirewallD1.service.

[root@master ~]# systemctl status firewalld //check the firewall status

● firewalld.service - firewalld - dynamic firewall daemon

Loaded: loaded (/usr/lib/systemd/system/firewalld.service; disabled; vendor preset: enabled)

Active: inactive (dead)

Docs: man:firewalld(1)

Dec 05 16:47:15 master systemd[1]: Starting firewalld - dynamic firewall daemon...

Dec 05 16:47:15 master systemd[1]: Started firewalld - dynamic firewall daemon.

Dec 05 16:47:15 master firewalld[675]: WARNING: ICMP type 'beyond-scope' is not supported by the kernel for ipv6.

Dec 05 16:47:15 master firewalld[675]: WARNING: beyond-scope: INVALID_ICMPTYPE: No supported ICMP type., ignoring for run-time.

Dec 05 16:47:15 master firewalld[675]: WARNING: ICMP type 'failed-policy' is not supported by the kernel for ipv6.

Dec 05 16:47:15 master firewalld[675]: WARNING: failed-policy: INVALID_ICMPTYPE: No supported ICMP type., ignoring for run-time.

Dec 05 16:47:15 master firewalld[675]: WARNING: ICMP type 'reject-route' is not supported by the kernel for ipv6.

Dec 05 16:47:15 master firewalld[675]: WARNING: reject-route: INVALID_ICMPTYPE: No supported ICMP type., ignoring for run-time.

Dec 05 17:18:38 master systemd[1]: Stopping firewalld - dynamic firewall daemon...

Dec 05 17:18:39 master systemd[1]: Stopped firewalld - dynamic firewall daemon.
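
Stopping and disabling are separate operations, so it helps to check both on every node. The sketch below relies on systemctl is-active and systemctl is-enabled; firewall_fully_off is a hypothetical helper name.

```shell
# Hypothetical helper: report whether firewalld is both stopped and disabled.
firewall_fully_off() {
    if systemctl is-active --quiet firewalld; then
        echo "firewalld is still running"
        return 1
    fi
    if systemctl is-enabled --quiet firewalld; then
        echo "firewalld is enabled at boot"
        return 1
    fi
    echo "firewalld is stopped and disabled"
}
```

Call firewall_fully_off as root on each node; a non-zero exit status means one of the two systemctl commands above still needs to be run there.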

6. Configure clock synchronization across the cluster

Run the following command to install the ntpd service

[root@master ~]# yum -y install ntp

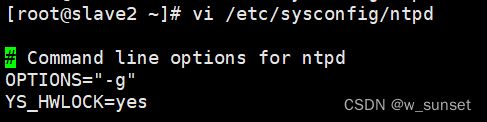

Edit the configuration file on every node, adding one line to the ntpd file: SYNC_HWCLOCK=yes

[root@master ~]# vi /etc/sysconfig/ntpd

# Command line options for ntpd

OPTIONS="-g"

SYNC_HWCLOCK=yes

[root@master ~]# service ntpd start //start the ntpd service

Redirecting to /bin/systemctl start ntpd.service

[root@master ~]# chkconfig ntpd on //enable at boot

Note: Forwarding request to 'systemctl enable ntpd.service'.

Created symlink from /etc/systemd/system/multi-user.target.wants/ntpd.service to /usr/lib/systemd/system/ntpd.service.

[root@master ~]# vi /etc/ntp.conf

# For more information about this file, see the man pages

# ntp.conf(5), ntp_acc(5), ntp_auth(5), ntp_clock(5), ntp_misc(5), ntp_mon(5).

driftfile /var/lib/ntp/drift

# Permit time synchronization with our time source, but do not

# permit the source to query or modify the service on this system.

restrict default nomodify notrap nopeer noquery

# Permit all access over the loopback interface. This could

# be tightened as well, but to do so would effect some of

# the administrative functions.

restrict 127.0.0.1

restrict ::1

# Hosts on local network are less restricted.

restrict 192.168.17.0 mask 255.255.255.0 nomodify notrap //uncomment this line and change the stock 192.168.1.0 to the cluster subnet

# Use public servers from the pool.ntp.org project.

# Please consider joining the pool (http://www.pool.ntp.org/join.html).

#server 0.centos.pool.ntp.org iburst //comment out these four lines

#server 1.centos.pool.ntp.org iburst

#server 2.centos.pool.ntp.org iburst

#server 3.centos.pool.ntp.org iburst

server 127.127.1.0 //add this line

fudge 127.127.1.0 stratum 10 //add this line

#broadcast 192.168.1.255 autokey # broadcast server

#broadcastclient # broadcast client

#broadcast 224.0.1.1 autokey # multicast server

#multicastclient 224.0.1.1 # multicast client
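
The restrict line has to name the network that the cluster nodes actually sit on. That network address is the bitwise AND of a node's IP address and the netmask. The sketch below computes it for the master's address from step 1; network_of is a hypothetical helper.

```shell
# Hypothetical helper: print the network address for IP and MASK.
# Usage: network_of IP MASK
# The body runs in a subshell so the IFS change does not leak out.
network_of() (
    IFS=.
    # Split both dotted quads into eight positional parameters.
    set -- $1 $2
    echo "$(( $1 & $5 )).$(( $2 & $6 )).$(( $3 & $7 )).$(( $4 & $8 ))"
)

network_of 192.168.17.60 255.255.255.0   # prints 192.168.17.0
```

The printed value is what belongs after the restrict keyword, with the same netmask after mask.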

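After saving /etc/ntp.conf, restart ntpd on the master (service ntpd restart) so the changes take effect. Then synchronize the slave nodes with the master node: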

[root@slave1 ~]# crontab -e

*/1 * * * * /usr/sbin/ntpdate 192.168.17.60 //add this line; the IP is the master's IP

[root@slave2 ~]# crontab -e

*/1 * * * * /usr/sbin/ntpdate 192.168.17.60
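
crontab -e opens an interactive editor, which is awkward to repeat on several nodes. A non-interactive variant pipes the existing table through a filter that appends the entry only when it is missing. This is a sketch; add_cron_line is a hypothetical helper, not part of cron.

```shell
# Hypothetical helper: copy a crontab from stdin to stdout,
# appending LINE only when an identical line is not already present.
add_cron_line() {
    awk -v line="$1" '
        { print; if ($0 == line) seen = 1 }
        END { if (!seen) print line }
    '
}

# Demonstrate on an empty table; nothing is installed here.
printf '' | add_cron_line '*/1 * * * * /usr/sbin/ntpdate 192.168.17.60'
```

To install the result on a slave node, the pipeline would be: crontab -l 2>/dev/null | add_cron_line '*/1 * * * * /usr/sbin/ntpdate 192.168.17.60' | crontab -. Because duplicates are skipped, rerunning it leaves a single entry.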

Run the following command to check the time:

[root@master ~]# date

Sun Dec  5 18:12:41 CST 2021

[root@master ~]#

[root@slave1 ~]# date

Sun Dec  5 18:12:47 CST 2021

You have new mail in /var/spool/mail/root

[root@slave1 ~]#

7. Passwordless SSH login:

Switch to the hadoop user:

su hadoop

Generate a key pair on the master node:

[hadoop@master ~]$ ssh-keygen -t rsa -P '' //generate a key pair with ssh-keygen

Generating public/private rsa key pair.

Enter file in which to save the key (/home/hadoop/.ssh/id_rsa): //just press Enter

Created directory '/home/hadoop/.ssh'.

Your identification has been saved in /home/hadoop/.ssh/id_rsa.

Your public key has been saved in /home/hadoop/.ssh/id_rsa.pub.

The key fingerprint is:

SHA256:mvC9TYkPGLVx81TbmucScpIJ/iLzaJx6oWuPKgFtAxQ hadoop@master

The key's randomart image is:

+---[RSA 2048]----+

|.E. . |

|. . o |

| o o + . . .|

|. + . = = o o |

| o .. . S . * = .|

| . o *.. o + + |

| . =o*o+ . . .|

| . o.=@ . . |

| ..o+*+ + |

+----[SHA256]-----+

[hadoop@master ~]$ cd ~/.ssh/

[hadoop@master .ssh]$ ls

id_rsa id_rsa.pub //the first is the private key, the second is the public key

[hadoop@master .ssh]$ cat id_rsa.pub > authorized_keys //write the public key into authorized_keys

[hadoop@master .ssh]$ chmod 600 authorized_keys //restrict authorized_keys to owner read/write; the file needs no execute bit

[hadoop@master .ssh]$ ls

authorized_keys id_rsa id_rsa.pub

[hadoop@master .ssh]$

Generate a key pair on the slave1 node:

[root@slave1 ~]# su hadoop

[hadoop@slave1 root]$ cd

[hadoop@slave1 ~]$ ssh-keygen -t rsa -P ""

Generating public/private rsa key pair.

Enter file in which to save the key (/home/hadoop/.ssh/id_rsa):

Created directory '/home/hadoop/.ssh'.

Your identification has been saved in /home/hadoop/.ssh/id_rsa.

Your public key has been saved in /home/hadoop/.ssh/id_rsa.pub.

The key fingerprint is:

SHA256:Um5dcRMg44ZA6GBO/ZOq6zVqdS/9t2T9xvoxcUWtWnQ hadoop@slave1

The key's randomart image is:

+---[RSA 2048]----+

| . oo o o.+.o|

| + o . o o o.oE|

| + o . .o o .. o.|

| . . +o o . o .|

| ...S . o ..|

| o .o .. o|

| oo. o o .+ |

| oo .. o o. .=|

| o+. . ......+o|

+----[SHA256]-----+

[hadoop@slave1 ~]$ cd ~/.ssh/

[hadoop@slave1 .ssh]$ ls

id_rsa id_rsa.pub

[hadoop@slave1 .ssh]$ cat id_rsa.pub > slave1 //copy the public key into a file named slave1

[hadoop@slave1 .ssh]$ scp slave1 hadoop@master:~/.ssh/ //send the public key to master

The authenticity of host 'master (192.168.17.60)' can't be established.

ECDSA key fingerprint is SHA256:Pq4RVrEv9yF42WEkeHf3otw6PQOwZm7BXyfskXOmddM.

ECDSA key fingerprint is MD5:35:88:cf:94:4e:e7:06:7d:31:c2:bf:b4:30:ba:4c:db.

Are you sure you want to continue connecting (yes/no)? yes

Warning: Permanently added 'master,192.168.17.60' (ECDSA) to the list of known hosts.

hadoop@master's password:

Permission denied, please try again.

hadoop@master's password: //enter the password of the hadoop user on master

slave1 100% 395 472.0KB/s 00:00

You have new mail in /var/spool/mail/root

[hadoop@slave1 .ssh]$

Generate a key pair on the slave2 node:

[root@slave2 ~]# su hadoop

[hadoop@slave2 root]$ cd

[hadoop@slave2 ~]$ ssh-keygen -t rsa -P ""

Generating public/private rsa key pair.

Enter file in which to save the key (/home/hadoop/.ssh/id_rsa):

Created directory '/home/hadoop/.ssh'.

Your identification has been saved in /home/hadoop/.ssh/id_rsa.

Your public key has been saved in /home/hadoop/.ssh/id_rsa.pub.

The key fingerprint is:

SHA256:wEMEDvB/2U+wmSHQVw/bEe/GrjvipWLEnmFM5P8+FWU hadoop@slave2

The key's randomart image is:

+---[RSA 2048]----+

|... o+o .o o. |

| . o +. o = o E|

| . . =+o . o .o |

| . *o* o. |

| . o+S.. +. |

| . *o. o. |

| + o..... |

| = .o+. |

| . ooo++ |

+----[SHA256]-----+

[hadoop@slave2 ~]$ cd ~/.ssh/

[hadoop@slave2 .ssh]$ ls

id_rsa id_rsa.pub

[hadoop@slave2 .ssh]$ cat id_rsa.pub > slave2

[hadoop@slave2 .ssh]$ scp slave2 hadoop@master:~/.ssh/

The authenticity of host 'master (192.168.17.60)' can't be established.

ECDSA key fingerprint is SHA256:Pq4RVrEv9yF42WEkeHf3otw6PQOwZm7BXyfskXOmddM.

ECDSA key fingerprint is MD5:35:88:cf:94:4e:e7:06:7d:31:c2:bf:b4:30:ba:4c:db.

Are you sure you want to continue connecting (yes/no)? yes

Warning: Permanently added 'master,192.168.17.60' (ECDSA) to the list of known hosts.

hadoop@master's password:

slave2 100% 395 424.4KB/s 00:00

[hadoop@slave2 .ssh]$

Back on the master node:

[hadoop@master .ssh]$ ls

authorized_keys id_rsa id_rsa.pub slave1 slave2 //the slave1 and slave2 files were just sent over from the slave nodes

[hadoop@master .ssh]$ cat slave1 >> authorized_keys //append slave1's public key to the authorization file

[hadoop@master .ssh]$ cat slave2 >> authorized_keys //append slave2's public key to the authorization file

[hadoop@master .ssh]$ scp authorized_keys hadoop@slave1:~/.ssh/ //send the authorization file to slave1

The authenticity of host 'slave1 (192.168.17.61)' can't be established.

ECDSA key fingerprint is SHA256:Pq4RVrEv9yF42WEkeHf3otw6PQOwZm7BXyfskXOmddM.

ECDSA key fingerprint is MD5:35:88:cf:94:4e:e7:06:7d:31:c2:bf:b4:30:ba:4c:db.

Are you sure you want to continue connecting (yes/no)? yes

Warning: Permanently added 'slave1,192.168.17.61' (ECDSA) to the list of known hosts.

hadoop@slave1's password: //enter the hadoop user's password on slave1

authorized_keys 100% 1185 1.1MB/s 00:00

[hadoop@master .ssh]$ scp authorized_keys hadoop@slave2:~/.ssh/ //send the authorization file to slave2

The authenticity of host 'slave2 (192.168.17.62)' can't be established.

ECDSA key fingerprint is SHA256:Pq4RVrEv9yF42WEkeHf3otw6PQOwZm7BXyfskXOmddM.

ECDSA key fingerprint is MD5:35:88:cf:94:4e:e7:06:7d:31:c2:bf:b4:30:ba:4c:db.

Are you sure you want to continue connecting (yes/no)? yes

Warning: Permanently added 'slave2,192.168.17.62' (ECDSA) to the list of known hosts.

hadoop@slave2's password: //enter the hadoop user's password on slave2

authorized_keys 100% 1185 919.8KB/s 00:00

[hadoop@master .ssh]$
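
The collect-and-redistribute steps above amount to merging several public key files into one authorized_keys file. Here is a sketch of that merge using only standard shell tools; merge_keys is a hypothetical helper. Unlike cat >>, it skips keys that are already present, so it is safe to rerun.

```shell
# Hypothetical helper: merge public key files into an authorized_keys file.
# Usage: merge_keys TARGET PUBKEY_FILE...
merge_keys() {
    target="$1"
    shift
    touch "$target"
    for pub in "$@"; do
        # Read each key line, including a last line without a trailing newline.
        while IFS= read -r key || [ -n "$key" ]; do
            [ -n "$key" ] || continue
            # Append only keys that are not already listed.
            grep -qxF -e "$key" "$target" || printf '%s\n' "$key" >> "$target"
        done < "$pub"
    done
    # Owner read/write only; sshd may reject a file that others can write to.
    chmod 600 "$target"
}
```

On the master, the equivalent of the two cat commands would be merge_keys ~/.ssh/authorized_keys ~/.ssh/slave1 ~/.ssh/slave2, followed by the same scp commands to push the merged file out.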

Next, verify passwordless SSH login

Logging in to any node should no longer require a password

[hadoop@master ~]$ ssh slave1

Last login: Sun Dec 5 18:43:15 2021

[hadoop@slave1 ~]$ exit

logout

Connection to slave1 closed.

[hadoop@master ~]$ ssh slave2

Last login: Sun Dec 5 18:47:01 2021

[hadoop@slave2 ~]$ exit

logout

Connection to slave2 closed.

[hadoop@master ~]$
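
The manual logins above can also be checked in one pass. The sketch below uses the ssh options BatchMode=yes, which makes ssh fail instead of prompting for a password, and ConnectTimeout; check_passwordless is a hypothetical helper name.

```shell
# Hypothetical helper: confirm that each host accepts key-based login.
# Usage: check_passwordless HOST...
check_passwordless() {
    failed=0
    for host in "$@"; do
        # BatchMode=yes turns a password prompt into an immediate failure.
        if ssh -o BatchMode=yes -o ConnectTimeout=5 "$host" true; then
            echo "$host: ok"
        else
            echo "$host: FAILED"
            failed=1
        fi
    done
    return "$failed"
}
```

Run as the hadoop user, check_passwordless master slave1 slave2 prints one line per node and exits non-zero if any node still asks for a password. A host that has never been contacted from the current node will also fail until its host key has been accepted once.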